Recursive least squares filter

Recursive least squares (RLS) is an adaptive filter algorithm that recursively finds the coefficients that minimize a weighted linear least squares cost function relating to the input signals. This approach is in contrast to other algorithms such as the least mean squares (LMS) that aim to reduce the mean square error. In the derivation of the RLS, the input signals are considered deterministic, while for the LMS and similar algorithms they are considered stochastic. Compared to most of its competitors, the RLS exhibits extremely fast convergence. However, this benefit comes at the cost of high computational complexity.

Motivation

RLS was discovered by Gauss but lay unused or ignored until 1950 when Plackett rediscovered the original work of Gauss from 1821. In general, the RLS can be used to solve any problem that can be solved by adaptive filters. For example, suppose that a signal is transmitted over an echoey, noisy channel that causes it to be received as

where represents additive noise. The intent of the RLS filter is to recover the desired signal by use of a -tap FIR filter, :

where is the column vector containing the most recent samples of . The estimate of the recovered desired signal is

The goal is to estimate the parameters of the filter , and at each time we refer to the current estimate as and the adapted least-squares estimate by . is also a column vector, as shown below, and the transpose, , is a row vector. The matrix product (which is the dot product of and ) is , a scalar. The estimate is "good" if is small in magnitude in some least squares sense.

As time evolves, it is desired to avoid completely redoing the least squares algorithm to find the new estimate for , in terms of .

The benefit of the RLS algorithm is that there is no need to invert matrices, thereby saving computational cost. Another advantage is that it provides intuition behind such results as the Kalman filter.

Discussion

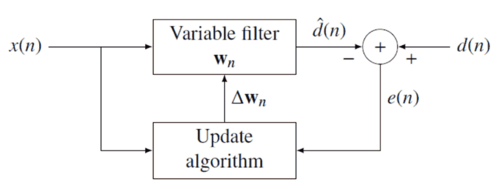

The idea behind RLS filters is to minimize a cost function by appropriately selecting the filter coefficients , updating the filter as new data arrives. The error signal and desired signal are defined in the negative feedback diagram below:

The error implicitly depends on the filter coefficients through the estimate :

The weighted least squares error function —the cost function we desire to minimize—being a function of is therefore also dependent on the filter coefficients:

where is the "forgetting factor" which gives exponentially less weight to older error samples.

The cost function is minimized by taking the partial derivatives for all entries of the coefficient vector and setting the results to zero

Next, replace with the definition of the error signal

Rearranging the equation yields

This form can be expressed in terms of matrices

where is the weighted sample covariance matrix for , and is the equivalent estimate for the cross-covariance between and . Based on this expression we find the coefficients which minimize the cost function as

This is the main result of the discussion.

Choosing λ

The smaller is, the smaller is the contribution of previous samples to the covariance matrix. This makes the filter more sensitive to recent samples, which means more fluctuations in the filter co-efficients. The case is referred to as the growing window RLS algorithm. In practice, is usually chosen between 0.98 and 1.[1] By using type-II maximum likelihood estimation the optimal can be estimated from a set of data.[2]

Recursive algorithm

The discussion resulted in a single equation to determine a coefficient vector which minimizes the cost function. In this section we want to derive a recursive solution of the form

where is a correction factor at time . We start the derivation of the recursive algorithm by expressing the cross covariance in terms of

where is the dimensional data vector

Similarly we express in terms of by

In order to generate the coefficient vector we are interested in the inverse of the deterministic auto-covariance matrix. For that task the Woodbury matrix identity comes in handy. With

is -by- is -by-1 (column vector) is 1-by- (row vector) is the 1-by-1 identity matrix

The Woodbury matrix identity follows

To come in line with the standard literature, we define

where the gain vector is

Before we move on, it is necessary to bring into another form

Subtracting the second term on the left side yields

With the recursive definition of the desired form follows

Now we are ready to complete the recursion. As discussed

The second step follows from the recursive definition of . Next we incorporate the recursive definition of together with the alternate form of and get

With we arrive at the update equation

where is the a priori error. Compare this with the a posteriori error; the error calculated after the filter is updated:

That means we found the correction factor

This intuitively satisfying result indicates that the correction factor is directly proportional to both the error and the gain vector, which controls how much sensitivity is desired, through the weighting factor, .

RLS algorithm summary

The RLS algorithm for a p-th order RLS filter can be summarized as

| Parameters: | filter order |

| forgetting factor | |

| value to initialize | |

| Initialization: | , |

| , | |

| where is the identity matrix of rank | |

| Computation: | For |

|

| |

| . |

The recursion for follows an algebraic Riccati equation and thus draws parallels to the Kalman filter.[3]

Lattice recursive least squares filter (LRLS)

The lattice recursive least squares adaptive filter is related to the standard RLS except that it requires fewer arithmetic operations (order N).[4] It offers additional advantages over conventional LMS algorithms such as faster convergence rates, modular structure, and insensitivity to variations in eigenvalue spread of the input correlation matrix. The LRLS algorithm described is based on a posteriori errors and includes the normalized form. The derivation is similar to the standard RLS algorithm and is based on the definition of . In the forward prediction case, we have with the input signal as the most up to date sample. The backward prediction case is , where i is the index of the sample in the past we want to predict, and the input signal is the most recent sample.[5]

Parameter summary

- is the forward reflection coefficient

- is the backward reflection coefficient

- represents the instantaneous a posteriori forward prediction error

- represents the instantaneous a posteriori backward prediction error

- is the minimum least-squares backward prediction error

- is the minimum least-squares forward prediction error

- is a conversion factor between a priori and a posteriori errors

- are the feedforward multiplier coefficients.

- is a small positive constant that can be 0.01

LRLS algorithm summary

The algorithm for a LRLS filter can be summarized as

| Initialization: | |

| For | |

| (if for ) | |

| End | |

| Computation: | |

| For | |

| For | |

| Feedforward filtering | |

| End | |

| End | |

Normalized lattice recursive least squares filter (NLRLS)

The normalized form of the LRLS has fewer recursions and variables. It can be calculated by applying a normalization to the internal variables of the algorithm which will keep their magnitude bounded by one. This is generally not used in real-time applications because of the number of division and square-root operations which comes with a high computational load.

NLRLS algorithm summary

The algorithm for a NLRLS filter can be summarized as

| Initialization: | |

| For | |

| (if for ) | |

| End | |

| Computation: | |

| For | |

| (Input signal energy) | |

| (Reference signal energy) | |

| For | |

| Feedforward filter | |

| End | |

| End | |

See also

References

- Hayes, Monson H. (1996). "9.4: Recursive Least Squares". Statistical Digital Signal Processing and Modeling. Wiley. p. 541. ISBN 0-471-59431-8.

- Simon Haykin, Adaptive Filter Theory, Prentice Hall, 2002, ISBN 0-13-048434-2

- M.H.A Davis, R.B. Vinter, Stochastic Modelling and Control, Springer, 1985, ISBN 0-412-16200-8

- Weifeng Liu, Jose Principe and Simon Haykin, Kernel Adaptive Filtering: A Comprehensive Introduction, John Wiley, 2010, ISBN 0-470-44753-2

- R.L.Plackett, Some Theorems in Least Squares, Biometrika, 1950, 37, 149–157, ISSN 0006-3444

- C.F.Gauss, Theoria combinationis observationum erroribus minimis obnoxiae, 1821, Werke, 4. Gottinge

Notes

- ↑ Emannual C. Ifeacor, Barrie W. Jervis. Digital signal processing: a practical approach, second edition. Indianapolis: Pearson Education Limited, 2002, p. 718

- ↑ Steven Van Vaerenbergh, Ignacio Santamaría, Miguel Lázaro-Gredilla "Estimation of the forgetting factor in kernel recursive least squares", 2012 IEEE International Workshop on Machine Learning for Signal Processing, 2012, accessed June 23, 2016.

- ↑ Welch, Greg and Bishop, Gary "An Introduction to the Kalman Filter", Department of Computer Science, University of North Carolina at Chapel Hill, September 17, 1997, accessed July 19, 2011.

- ↑ Diniz, Paulo S.R., "Adaptive Filtering: Algorithms and Practical Implementation", Springer Nature Switzerland AG 2020, Chapter 7: Adaptive Lattice-Based RLS Algorithms. https://doi.org/10.1007/978-3-030-29057-3_7

- ↑ Albu, Kadlec, Softley, Matousek, Hermanek, Coleman, Fagan "Implementation of (Normalised) RLS Lattice on Virtex", Digital Signal Processing, 2001, accessed December 24, 2011.

|