Secretary problem

The secretary problem demonstrates a scenario involving optimal stopping theory[1][2] that is studied extensively in the fields of applied probability, statistics, and decision theory. It is also known as the marriage problem, the sultan's dowry problem, the fussy suitor problem, the googol game, and the best choice problem. Its solution is also known as the 37% rule.[3][4]

The basic form of the problem is the following: imagine an administrator who wants to hire the best secretary out of [math]\displaystyle{ n }[/math] rankable applicants for a position. The applicants are interviewed one by one in random order. A decision about each particular applicant is to be made immediately after the interview. Once rejected, an applicant cannot be recalled. During the interview, the administrator gains information sufficient to rank the applicant among all applicants interviewed so far, but is unaware of the quality of yet unseen applicants. The question is about the optimal strategy (stopping rule) to maximize the probability of selecting the best applicant. If the decision can be deferred to the end, this can be solved by the simple maximum selection algorithm of tracking the running maximum (and who achieved it), and selecting the overall maximum at the end. The difficulty is that the decision must be made immediately.

The shortest rigorous proof known so far is provided by the odds algorithm. It implies that the optimal win probability is always at least [math]\displaystyle{ 1/e }[/math] (where e is the base of the natural logarithm), and that the latter holds even in a much greater generality. The optimal stopping rule prescribes always rejecting the first [math]\displaystyle{ \sim n/e }[/math] applicants that are interviewed and then stopping at the first applicant who is better than every applicant interviewed so far (or continuing to the last applicant if this never occurs). Sometimes this strategy is called the [math]\displaystyle{ 1/e }[/math] stopping rule, because the probability of stopping at the best applicant with this strategy is already about [math]\displaystyle{ 1/e }[/math] for moderate values of [math]\displaystyle{ n }[/math]. One reason why the secretary problem has received so much attention is that the optimal policy for the problem (the stopping rule) is simple and selects the single best candidate about 37% of the time, irrespective of whether there are 100 or 100 million applicants.

Formulation

Although there are many variations, the basic problem can be stated as follows:

- There is a single position to fill.

- There are n applicants for the position, and the value of n is known.

- The applicants, if all seen together, can be ranked from best to worst unambiguously.

- The applicants are interviewed sequentially in random order, with each order being equally likely.

- Immediately after an interview, the interviewed applicant is either accepted or rejected, and the decision is irrevocable.

- The decision to accept or reject an applicant can be based only on the relative ranks of the applicants interviewed so far.

- The objective of the general solution is to have the highest probability of selecting the best applicant of the whole group. This is the same as maximizing the expected payoff, with payoff defined to be one for the best applicant and zero otherwise.

A candidate is defined as an applicant who, when interviewed, is better than all the applicants interviewed previously. Skip is used to mean "reject immediately after the interview". Since the objective in the problem is to select the single best applicant, only candidates will be considered for acceptance. The "candidate" in this context corresponds to the concept of record in permutation.

Deriving the optimal policy

The optimal policy for the problem is a stopping rule. Under it, the interviewer rejects the first r − 1 applicants (let applicant M be the best applicant among these r − 1 applicants), and then selects the first subsequent applicant that is better than applicant M. It can be shown that the optimal strategy lies in this class of strategies.[citation needed] (Note that we should never choose an applicant who is not the best we have seen so far, since they cannot be the best overall applicant.) For an arbitrary cutoff r, the probability that the best applicant is selected is

- [math]\displaystyle{ \begin{align} P(r) &= \sum_{i=1}^{n} P\left(\text{applicant } i \text{ is selected} \cap \text{applicant } i \text{ is the best}\right) \\ &= \sum_{i=1}^{n} P\left(\text{applicant } i \text{ is selected} | \text{applicant } i \text{ is the best}\right) \cdot P\left(\text{applicant } i \text{ is the best}\right) \\ &= \left[ \sum_{i=1}^{r-1} 0 + \sum_{i=r}^{n} P\left( \left. \begin{array}{l} \text{the best of the first } i - 1 \text{ applicants} \\ \text{is in the first } r-1 \text{ applicants} \end{array} \right| \text{applicant } i \text{ is the best} \right) \right] \cdot \frac{1}{n} \\ &= \left[\sum_{i=r}^{n} \frac{r-1}{i-1}\right] \cdot \frac{1}{n} \\ &=\frac{r-1}{n} \sum_{i=r}^{n} \frac{1}{i-1}. \end{align} }[/math]

The sum is not defined for r = 1, but in this case the only feasible policy is to select the first applicant, and hence P(1) = 1/n. This sum is obtained by noting that if applicant i is the best applicant, then it is selected if and only if the best applicant among the first i − 1 applicants is among the first r − 1 applicants that were rejected. Letting n tend to infinity, writing [math]\displaystyle{ x }[/math] as the limit of (r−1)/n, using t for (i−1)/n and dt for 1/n, the sum can be approximated by the integral

- [math]\displaystyle{ P(x)=x \int_{x}^{1}\frac{1}{t}\,dt = -x \ln(x)\;. }[/math]

Taking the derivative of P(x) with respect to [math]\displaystyle{ x }[/math], setting it to 0, and solving for x, we find that the optimal x is equal to 1/e. Thus, the optimal cutoff tends to n/e as n increases, and the best applicant is selected with probability 1/e.

For small values of n, the optimal r can also be obtained by standard dynamic programming methods. The optimal thresholds r and probability of selecting the best alternative P for several values of n are shown in the following table.[note 1]

| [math]\displaystyle{ n }[/math] | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| [math]\displaystyle{ r }[/math] | 1 | 1 | 2 | 2 | 3 | 3 | 3 | 4 | 4 | 4 |

| [math]\displaystyle{ P }[/math] | 1.000 | 0.500 | 0.500 | 0.458 | 0.433 | 0.428 | 0.414 | 0.410 | 0.406 | 0.399 |

The probability of selecting the best applicant in the classical secretary problem converges toward [math]\displaystyle{ 1/e\approx 0.368 }[/math].

Alternative solution

This problem and several modifications can be solved (including the proof of optimality) in a straightforward manner by the odds algorithm, which also has other applications. Modifications for the secretary problem that can be solved by this algorithm include random availabilities of applicants, more general hypotheses for applicants to be of interest to the decision maker, group interviews for applicants, as well as certain models for a random number of applicants.[citation needed]

Limitations

The solution of the secretary problem is only meaningful if it is justified to assume that the applicants have no knowledge of the decision strategy employed, because early applicants have no chance at all and may not show up otherwise.

One important drawback for applications of the solution of the classical secretary problem is that the number of applicants [math]\displaystyle{ n }[/math] must be known in advance, which is rarely the case. One way to overcome this problem is to suppose that the number of applicants is a random variable [math]\displaystyle{ N }[/math] with a known distribution of [math]\displaystyle{ P(N=k)_{k=1,2,\cdots} }[/math] (Presman and Sonin, 1972). For this model, the optimal solution is in general much harder, however. Moreover, the optimal success probability is now no longer around 1/e but typically lower. This can be understood in the context of having a "price" to pay for not knowing the number of applicants. However, in this model the price is high. Depending on the choice of the distribution of [math]\displaystyle{ N }[/math], the optimal win probability can approach zero. Looking for ways to cope with this new problem led to a new model yielding the so-called 1/e-law of best choice.

1/e-law of best choice

The essence of the model is based on the idea that life is sequential and that real-world problems pose themselves in real time. Also, it is easier to estimate times in which specific events (arrivals of applicants) should occur more frequently (if they do) than to estimate the distribution of the number of specific events which will occur. This idea led to the following approach, the so-called unified approach (1984):

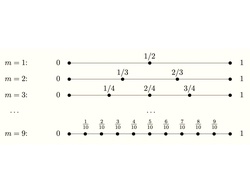

The model is defined as follows: An applicant must be selected on some time interval [math]\displaystyle{ [0,T] }[/math] from an unknown number [math]\displaystyle{ N }[/math] of rankable applicants. The goal is to maximize the probability of selecting only the best under the hypothesis that all arrival orders of different ranks are equally likely. Suppose that all applicants have the same, but independent to each other, arrival time density [math]\displaystyle{ f }[/math] on [math]\displaystyle{ [0,T] }[/math] and let [math]\displaystyle{ F }[/math] denote the corresponding arrival time distribution function, that is

- [math]\displaystyle{ F(t) = \int_{0}^{t} f(s)ds }[/math], [math]\displaystyle{ \, 0\le t\le T }[/math].

Let [math]\displaystyle{ \tau }[/math] be such that [math]\displaystyle{ F(\tau)=1/e. }[/math] Consider the strategy to wait and observe all applicants up to time [math]\displaystyle{ \tau }[/math] and then to select, if possible, the first candidate after time [math]\displaystyle{ \tau }[/math] which is better than all preceding ones. Then this strategy, called 1/e-strategy, has the following properties:

The 1/e-strategy

- (i) yields for all [math]\displaystyle{ N }[/math] a success probability of at least 1/e,

- (ii) is a minimax-optimal strategy for the selector who does not know [math]\displaystyle{ N }[/math],

- (iii) selects, if there is at least one applicant, none at all with probability exactly 1/e.

The 1/e-law, proved in 1984 by F. Thomas Bruss, came as a surprise. The reason was that a value of about 1/e had been considered before as being out of reach in a model for unknown [math]\displaystyle{ N }[/math], whereas this value 1/e was now achieved as a lower bound for the success probability, and this in a model with arguably much weaker hypotheses (see e.g. Math. Reviews 85:m).

However, there are many other strategies that achieve (i) and (ii) and, moreover, perform strictly better than the 1/e-strategy simultaneously for all [math]\displaystyle{ N }[/math]>2. A simple example is the strategy which selects (if possible) the first relatively best candidate after time [math]\displaystyle{ \tau }[/math] provided that at least one applicant arrived before this time, and otherwise selects (if possible) the second relatively best candidate after time [math]\displaystyle{ \tau }[/math].[5]

The 1/e-law is sometimes confused with the solution for the classical secretary problem described above because of the similar role of the number 1/e. However, in the 1/e-law, this role is more general. The result is also stronger, since it holds for an unknown number of applicants and since the model based on an arrival time distribution F is more tractable for applications.

The game of googol

In the article "Who solved the Secretary problem?" (Ferguson, 1989)[1], it's claimed the secretary problem first appeared in print in Martin Gardner's February 1960 Mathematical Games column in Scientific American:

Ask someone to take as many slips of paper as he pleases, and on each slip write a different positive number. The numbers may range from small fractions of 1 to a number the size of a googol (1 followed by a hundred zeroes) or even larger. These slips are turned face down and shuffled over the top of a table. One at a time you turn the slips face up. The aim is to stop turning when you come to the number that you guess to be the largest of the series. You cannot go back and pick a previously turned slip. If you turn over all the slips, then of course you must pick the last one turned.[6]

Ferguson pointed out that the secretary game remained unsolved, as a zero-sum game with two antagonistic players.[1] In this game:

- Alice, the informed player, writes secretly distinct numbers on [math]\displaystyle{ n }[/math] cards.

- Bob, the stopping player, observes the actual values and can stop turning cards whenever he wants, winning if the last card turned has the overall maximal number.

- Bob wants to guess the maximal number with the highest possible probability, while Alice's goal is to keep this probability as low as possible.

The difference with the basic secretary problem are two:

- Alice does not have to write numbers uniformly at random. She may write them according to any joint probability distribution to trick Bob.

- Bob observes the actual values written on the cards, which he can use in his decision procedures.

Strategic analysis

Alice first writes down n numbers, which are then shuffled. So, their ordering does not matter, meaning that Alice's numbers must be an exchangeable random variable sequence [math]\displaystyle{ X_1, X_2, ..., X_n }[/math]. Alice's strategy is then just picking the trickiest exchangeable random variable sequence.

Bob's strategy is formalizable as a stopping rule [math]\displaystyle{ \tau }[/math] for the sequence [math]\displaystyle{ X_1, X_2, ..., X_n }[/math].

We say that a stopping rule [math]\displaystyle{ \tau }[/math] for Bob is a relative rank stopping strategy iff it depends on only the relative ranks of [math]\displaystyle{ X_1, X_2, ..., X_n }[/math], and not on their numerical values. In other words, it is as if someone secretly intervened after Alice picked her numbers, and changed each number in [math]\displaystyle{ X_1, X_2, ..., X_n }[/math] into its relative rank (breaking ties randomly). For example, [math]\displaystyle{ 0.2, 0.3, 0.3, 0.1 }[/math] is changed to [math]\displaystyle{ 2, 3, 4, 1 }[/math] or [math]\displaystyle{ 2, 4, 3, 1 }[/math] with equal probability. This makes it as if Alice played an exchangeable random permutation on [math]\displaystyle{ \{1, 2, ..., n\} }[/math]. Now, since the only exchangeable random permutation on [math]\displaystyle{ \{1, 2, ..., n\} }[/math] is just the uniform distribution over all permutations on [math]\displaystyle{ \{1, 2, ..., n\} }[/math], the optimal relative rank stopping strategy is the optimal stopping rule for the secretary problem, given above, with a winning probability[math]\displaystyle{ Pr(X_\tau = \max_{i\in 1:n} X_i) = \max_{r\in 1:n}\frac{r-1}{n} \sum_{i=r}^{n} \frac{1}{i-1} }[/math]Alice's goal then is to make sure Bob cannot do better than the relative-rank stopping strategy.

By the rules of the game, Alice's sequence must be exchangeable, but to do well in the game, Alice should not pick it to be independent. If Alice samples the numbers independently from some fixed distribution, it would allow Bob to do better. To see this intuitively, imagine if [math]\displaystyle{ n=2 }[/math], and Alice is to pick both numbers from the normal distribution [math]\displaystyle{ N(0, 1) }[/math], independently. Then if Bob turns over one number and sees [math]\displaystyle{ -3 }[/math], then he can quite confidently turn over the second number, and if Bob turns over one number and sees [math]\displaystyle{ +3 }[/math], then he can quite confidently pick the first number. Alice can do better by picking [math]\displaystyle{ X_1, X_2 }[/math] that are positively correlated.

So the fully formal statement is as below:

Does there exist an exchangeable sequence of random variables [math]\displaystyle{ X_1, ..., X_n }[/math], such that for any stopping rule [math]\displaystyle{ \tau }[/math], [math]\displaystyle{ Pr(X_\tau = \max_{i\in 1:n} X_i) \leq \max_{r\in 1:n}\frac{r-1}{n} \sum_{i=r}^{n} \frac{1}{i-1} }[/math]?

Solution

For [math]\displaystyle{ n=2 }[/math], if Bob plays the optimal relative-rank stoppings strategy, then Bob has winning probability 1/2. Surprisingly, Alice has no minimax strategy, which is closely related to a paradox of T. Cover[7] and the two envelopes paradox. Concretely, Bob can play this strategy: sample a random number [math]\displaystyle{ Y }[/math]. If [math]\displaystyle{ X_1 \gt Y }[/math], then pick [math]\displaystyle{ X_1 }[/math], else pick [math]\displaystyle{ X_2 }[/math]. Now, Bob can win with probability strictly greater than 1/2. Suppose Alice's numbers are different, then conditional on [math]\displaystyle{ Y \not\in [\min(X_1, X_2), \max(X_1, X_2)] }[/math], Bob wins with probability 1/2, but conditional on [math]\displaystyle{ Y \in [\min(X_1, X_2), \max(X_1, X_2)] }[/math], Bob wins with probability 1.

Note that the random number [math]\displaystyle{ Y }[/math] can be sampled from any random distribution, as long as [math]\displaystyle{ Y \in [\min(X_1, X_2), \max(X_1, X_2)] }[/math] has nonzero probability.

However, for any [math]\displaystyle{ \epsilon \gt 0 }[/math], Alice can construct an exchangeable sequence [math]\displaystyle{ X_1, X_2 }[/math] such that Bob's winning probability is at most [math]\displaystyle{ 1/2 + \epsilon }[/math].[1]

But for [math]\displaystyle{ n\gt 2 }[/math], the answer is yes: Alice can choose random numbers (which are dependent random variables) in such a way that Bob cannot play better than using the classical stopping strategy based on the relative ranks.[8]

Heuristic performance

The remainder of the article deals again with the secretary problem for a known number of applicants.

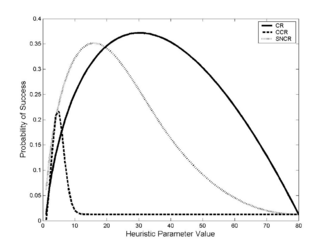

Stein, Seale & Rapoport 2003 derived the expected success probabilities for several psychologically plausible heuristics that might be employed in the secretary problem. The heuristics they examined were:

- The cutoff rule (CR): Do not accept any of the first y applicants; thereafter, select the first encountered candidate (i.e., an applicant with relative rank 1). This rule has as a special case the optimal policy for the classical secretary problem for which y = r.

- Candidate count rule (CCR): Select the y-th encountered candidate. Note, that this rule does not necessarily skip any applicants; it only considers how many candidates have been observed, not how deep the decision maker is in the applicant sequence.

- Successive non-candidate rule (SNCR): Select the first encountered candidate after observing y non-candidates (i.e., applicants with relative rank > 1).

Each heuristic has a single parameter y. The figure (shown on right) displays the expected success probabilities for each heuristic as a function of y for problems with n = 80.

Cardinal payoff variant

Finding the single best applicant might seem like a rather strict objective. One can imagine that the interviewer would rather hire a higher-valued applicant than a lower-valued one, and not only be concerned with getting the best. That is, the interviewer will derive some value from selecting an applicant that is not necessarily the best, and the derived value increases with the value of the one selected.

To model this problem, suppose that the [math]\displaystyle{ n }[/math] applicants have "true" values that are random variables X drawn i.i.d. from a uniform distribution on [0, 1]. Similar to the classical problem described above, the interviewer only observes whether each applicant is the best so far (a candidate), must accept or reject each on the spot, and must accept the last one if he/she is reached. (To be clear, the interviewer does not learn the actual relative rank of each applicant. He/she learns only whether the applicant has relative rank 1.) However, in this version the payoff is given by the true value of the selected applicant. For example, if he/she selects an applicant whose true value is 0.8, then he/she will earn 0.8. The interviewer's objective is to maximize the expected value of the selected applicant.

Since the applicant's values are i.i.d. draws from a uniform distribution on [0, 1], the expected value of the tth applicant given that [math]\displaystyle{ x_{t}=\max\left\{x_1, x_2, \ldots, x_t\right\} }[/math] is given by

- [math]\displaystyle{ E_{t}=E\left(X_{t}|I_{t}=1\right)=\frac{t}{t+1}. }[/math]

As in the classical problem, the optimal policy is given by a threshold, which for this problem we will denote by [math]\displaystyle{ c }[/math], at which the interviewer should begin accepting candidates. Bearden showed that c is either [math]\displaystyle{ \lfloor \sqrt n \rfloor }[/math] or [math]\displaystyle{ \lceil \sqrt n \rceil }[/math].[9] (In fact, whichever is closest to [math]\displaystyle{ \sqrt n }[/math].) This follows from the fact that given a problem with [math]\displaystyle{ n }[/math] applicants, the expected payoff for some arbitrary threshold [math]\displaystyle{ 1 \le c \le n }[/math] is

- [math]\displaystyle{ V_{n}(c)=\sum_{t=c}^{n-1}\left[\prod_{s=c}^{t-1}\left(\frac{s-1}{s}\right)\right]\left(\frac{1}{t+1}\right) +\left[\prod_{s=c}^{n-1}\left(\frac{s-1}{s}\right)\right]\frac{1}{2}={\frac {2cn-{c}^{2}+c-n}{2cn}}. }[/math]

Differentiating [math]\displaystyle{ V_{n}(c) }[/math] with respect to c, one gets

- [math]\displaystyle{ \frac{\partial V}{\partial c} = \frac{ -{c}^{\,2}+n }{ 2{c}^{\,2}n }. }[/math]

Since [math]\displaystyle{ \partial^{\,2}V / \partial c^{\,2}\lt 0 }[/math] for all permissible values of [math]\displaystyle{ c }[/math], we find that [math]\displaystyle{ V }[/math] is maximized at [math]\displaystyle{ c=\sqrt n }[/math]. Since V is convex in [math]\displaystyle{ c }[/math], the optimal integer-valued threshold must be either [math]\displaystyle{ \lfloor \sqrt n \rfloor }[/math] or [math]\displaystyle{ \lceil \sqrt n \rceil }[/math]. Thus, for most values of [math]\displaystyle{ n }[/math] the interviewer will begin accepting applicants sooner in the cardinal payoff version than in the classical version where the objective is to select the single best applicant. Note that this is not an asymptotic result: It holds for all [math]\displaystyle{ n }[/math]. However, this is not the optimal policy to maximize expected value from a known distribution. In the case of a known distribution, optimal play can be calculated via dynamic programming.

A more general form of this problem introduced by Palley and Kremer (2014)[10] assumes that as each new applicant arrives, the interviewer observes their rank relative to all of the applicants that have been observed previously. This model is consistent with the notion of an interviewer learning as they continue the search process by accumulating a set of past data points that they can use to evaluate new candidates as they arrive. A benefit of this so-called partial-information model is that decisions and outcomes achieved given the relative rank information can be directly compared to the corresponding optimal decisions and outcomes if the interviewer had been given full information about the value of each applicant. This full-information problem, in which applicants are drawn independently from a known distribution and the interviewer seeks to maximize the expected value of the applicant selected, was originally solved by Moser (1956),[11] Sakaguchi (1961),[12] and Karlin (1962).

Other modifications

There are several variants of the secretary problem that also have simple and elegant solutions.

Pick the second-best, using one try

One variant replaces the desire to pick the best with the desire to pick the second-best.[13][14][15] Robert J. Vanderbei calls this the "postdoc" problem arguing that the "best" will go to Harvard. For this problem, the probability of success for an even number of applicants is exactly [math]\displaystyle{ \frac{0.25n^2}{n(n-1)} }[/math]. This probability tends to 1/4 as n tends to infinity illustrating the fact that it is easier to pick the best than the second-best.

Pick the top-k ones, using k tries

Consider the problem of picking the k best secretaries out of n candidates, using k tries.

In general, the optimal decision method starts by observing [math]\displaystyle{ r=\left\lfloor\frac{n}{k e^{1 / k}}\right\rfloor }[/math] candidates without picking any one of them, then pick every candidate that is better than those first [math]\displaystyle{ r }[/math] candidates until we run out of candidates or picks. If [math]\displaystyle{ k }[/math] is held constant while [math]\displaystyle{ n\to\infty }[/math], then the probability of success converges to [math]\displaystyle{ \frac{1}{ek} }[/math].[16] By Vanderbei 1980, if [math]\displaystyle{ k = n/2 }[/math], then the probability of success is [math]\displaystyle{ \frac{1}{n/2+1} }[/math].

Pick the best, using multiple tries

In this variant, a player is allowed [math]\displaystyle{ r }[/math] choices and wins if any choice is the best. An optimal strategy for this problem belongs to the class of strategies defined by a set of threshold numbers [math]\displaystyle{ (a_1, a_2, ..., a_r) }[/math], where [math]\displaystyle{ a_1 \gt a_2 \gt \cdots \gt a_r }[/math].

Specifically, imagine that you have [math]\displaystyle{ r }[/math] letters of acceptance labelled from [math]\displaystyle{ 1 }[/math] to [math]\displaystyle{ r }[/math]. You would have [math]\displaystyle{ r }[/math] application officers, each holding one letter. You keep interviewing the candidates and rank them on a chart that every application officer can see. Now officer [math]\displaystyle{ i }[/math] would send their letter of acceptance to the first candidate that is better than all candidates [math]\displaystyle{ 1 }[/math] to [math]\displaystyle{ a_i }[/math]. (Unsent letters of acceptance are by default given to the last applicants, the same as in the standard secretary problem.)[17]

At [math]\displaystyle{ n \rightarrow \infty }[/math] limit, each [math]\displaystyle{ a_i \sim ne^{-k_i} }[/math], for some rational number [math]\displaystyle{ k_i }[/math].[18]

Probability of winning

When [math]\displaystyle{ r=2 }[/math], the probability of winning converges to [math]\displaystyle{ e^{-1} + e^{-\frac{3}{2}} , (n \rightarrow \infty) }[/math]. More generally, for positive integers [math]\displaystyle{ r }[/math], the probability of winning converges to [math]\displaystyle{ p_1 + p_2 + \cdots + p_r }[/math], where [math]\displaystyle{ p_i = \lim_{n \rightarrow \infty} \frac{a_i}{n} }[/math].[18]

[17] computed up to [math]\displaystyle{ r=4 }[/math], with [math]\displaystyle{ e^{-1} + e^{-\frac{3}{2}} + e^{-\frac{47}{24}} + e^{-\frac{2761}{1152}} }[/math].

Matsui & Ano 2016 gave a general algorithm. For example, [math]\displaystyle{ p_5 = e^{-\frac{4162637}{1474560}} }[/math].

Experimental studies

Experimental psychologists and economists have studied the decision behavior of actual people in secretary problem situations.[19] In large part, this work has shown that people tend to stop searching too soon. This may be explained, at least in part, by the cost of evaluating candidates. In real world settings, this might suggest that people do not search enough whenever they are faced with problems where the decision alternatives are encountered sequentially. For example, when trying to decide at which gas station along a highway to stop for gas, people might not search enough before stopping. If true, then they would tend to pay more for gas than if they had searched longer. The same may be true when people search online for airline tickets. Experimental research on problems such as the secretary problem is sometimes referred to as behavioral operations research.

Neural correlates

While there is a substantial body of neuroscience research on information integration, or the representation of belief, in perceptual decision-making tasks using both animal[20][21] and human subjects,[22] there is relatively little known about how the decision to stop gathering information is arrived at.

Researchers have studied the neural bases of solving the secretary problem in healthy volunteers using functional MRI.[23] A Markov decision process (MDP) was used to quantify the value of continuing to search versus committing to the current option. Decisions to take versus decline an option engaged parietal and dorsolateral prefrontal cortices, as well ventral striatum, anterior insula, and anterior cingulate. Therefore, brain regions previously implicated in evidence integration and reward representation encode threshold crossings that trigger decisions to commit to a choice.

History

The secretary problem was apparently introduced in 1949 by Merrill M. Flood, who called it the fiancée problem in a lecture he gave that year. He referred to it several times during the 1950s, for example, in a conference talk at Purdue on 9 May 1958, and it eventually became widely known in the folklore although nothing was published at the time. In 1958 he sent a letter to Leonard Gillman, with copies to a dozen friends including Samuel Karlin and J. Robbins, outlining a proof of the optimum strategy, with an appendix by R. Palermo who proved that all strategies are dominated by a strategy of the form "reject the first p unconditionally, then accept the next candidate who is better".[24]

The first publication was apparently by Martin Gardner in Scientific American, February 1960. He had heard about it from John H. Fox Jr., and L. Gerald Marnie, who had independently come up with an equivalent problem in 1958; they called it the "game of googol". Fox and Marnie did not know the optimum solution; Gardner asked for advice from Leo Moser, who (together with J. R. Pounder) provided a correct analysis for publication in the magazine. Soon afterwards, several mathematicians wrote to Gardner to tell him about the equivalent problem they had heard via the grapevine, all of which can most likely be traced to Flood's original work.[25]

The 1/e-law of best choice is due to F. Thomas Bruss.[26]

Ferguson has an extensive bibliography and points out that a similar (but different) problem had been considered by Arthur Cayley in 1875 and even by Johannes Kepler long before that, who spent 2 years investigating 11 candidates for marriage during 1611 -- 1613 after the death of his first wife.[27]

Combinatorial generalization

The secretary problem can be generalized to the case where there are multiple different jobs. Again, there are [math]\displaystyle{ n }[/math] applicants coming in random order. When a candidate arrives, she reveals a set of nonnegative numbers. Each value specifies her qualification for one of the jobs. The administrator not only has to decide whether or not to take the applicant but, if so, also has to assign her permanently to one of the jobs. The objective is to find an assignment where the sum of qualifications is as big as possible. This problem is identical to finding a maximum-weight matching in an edge-weighted bipartite graph where the [math]\displaystyle{ n }[/math] nodes of one side arrive online in random order. Thus, it is a special case of the online bipartite matching problem.

By a generalization of the classic algorithm for the secretary problem, it is possible to obtain an assignment where the expected sum of qualifications is only a factor of [math]\displaystyle{ e }[/math] less than an optimal (offline) assignment.[28]

See also

- Assignment problem

- Odds algorithm

- Optimal stopping

- Robbins' problem

- Search theory

- Stable marriage problem

Notes

- ↑ Jump up to: 1.0 1.1 1.2 1.3 Ferguson, Thomas S. (August 1989). "Who Solved the Secretary Problem?". Statistical Science 4 (3): 282–289. doi:10.1214/ss/1177012493.

- ↑ Hill, Theodore P. (2009). "Knowing When to Stop". American Scientist 97 (2): 126–133. doi:10.1511/2009.77.126. ISSN 1545-2786. For French translation, see cover story in the July issue of Pour la Science (2009).

- ↑ http://quantumthinker.medium.com/37-percent-rule-rule-which-gives-you-exclusive-power-in-decision-making-f9c58982d3f6

- ↑ http://bigthink.com/neuropsych/the-37-percent-rule/

- ↑ Gnedin 2021.

- ↑ Gardner 1966.

- ↑ Cover, Thomas M. (1987), Cover, Thomas M.; Gopinath, B., eds., "Pick the Largest Number" (in en), Open Problems in Communication and Computation (New York, NY: Springer): pp. 152–152, doi:10.1007/978-1-4612-4808-8_43, ISBN 978-1-4612-4808-8, https://doi.org/10.1007/978-1-4612-4808-8_43, retrieved 2023-06-25

- ↑ Gnedin 1994.

- ↑ Bearden 2006.

- ↑ Palley, Asa B.; Kremer, Mirko (2014-07-08). "Sequential Search and Learning from Rank Feedback: Theory and Experimental Evidence". Management Science 60 (10): 2525–2542. doi:10.1287/mnsc.2014.1902. ISSN 0025-1909. https://pubsonline.informs.org/doi/abs/10.1287/mnsc.2014.1902.

- ↑ Moser, Leo (1956). "On a problem of Cayley". Scripta Math 22: 289–292.

- ↑ Sakaguchi, Minoru (1961-06-01). "Dynamic programming of some sequential sampling design" (in en). Journal of Mathematical Analysis and Applications 2 (3): 446–466. doi:10.1016/0022-247X(61)90023-3. ISSN 0022-247X.

- ↑ Rose, John S. (1982). "Selection of nonextremal candidates from a random sequence". J. Optim. Theory Appl. 38 (2): 207–219. doi:10.1007/BF00934083. ISSN 0022-3239.

- ↑ Szajowski, Krzysztof (1982). "Optimal choice of an object with ath rank". Matematyka Stosowana. Annales Societatis Mathematicae Polonae, Series III 10 (19): 51–65. doi:10.14708/ma.v10i19.1533. ISSN 0137-2890.

- ↑ Vanderbei, Robert J. (2021-06-21). "The postdoc variant of the secretary problem". Mathematica Applicanda. Annales Societatis Mathematicae Polonae, Series III 49 (1): 3–13. doi:10.14708/ma.v49i1.7076. ISSN 2299-4009. https://wydawnictwa.ptm.org.pl/index.php/matematyka-stosowana/article/view/7076.

- ↑ Girdhar & Dudek 2009.

- ↑ Jump up to: 17.0 17.1 Gilbert & Mosteller 1966.

- ↑ Jump up to: 18.0 18.1 Matsui & Ano 2016.

- ↑ Bearden, Murphy, and Rapoport, 2006; Bearden, Rapoport, and Murphy, 2006; Seale and Rapoport, 1997; Palley and Kremer, 2014

- ↑ Shadlen, M. N.; Newsome, W. T. (23 January 1996). "Motion perception: seeing and deciding.". Proceedings of the National Academy of Sciences 93 (2): 628–633. doi:10.1073/pnas.93.2.628. PMID 8570606. Bibcode: 1996PNAS...93..628S.

- ↑ Roitman, Jamie D.; Shadlen, Michael N. (1 November 2002). "Response of Neurons in the Lateral Intraparietal Area during a Combined Visual Discrimination Reaction Time Task". The Journal of Neuroscience 22 (21): 9475–9489. doi:10.1523/JNEUROSCI.22-21-09475.2002. PMID 12417672.

- ↑ Heekeren, Hauke R.; Marrett, Sean; Ungerleider, Leslie G. (9 May 2008). "The neural systems that mediate human perceptual decision making". Nature Reviews Neuroscience 9 (6): 467–479. doi:10.1038/nrn2374. PMID 18464792.

- ↑ Costa, V. D.; Averbeck, B. B. (18 October 2013). "Frontal-Parietal and Limbic-Striatal Activity Underlies Information Sampling in the Best Choice Problem". Cerebral Cortex 25 (4): 972–982. doi:10.1093/cercor/bht286. PMID 24142842.

- ↑ Flood 1958.

- ↑ Gardner 1966, Problem 3.

- ↑ Bruss 1984.

- ↑ Ferguson 1989.

- ↑ Kesselheim, Thomas; Radke, Klaus; Tönnis, Andreas; Vöcking, Berthold (2013). "An Optimal Online Algorithm for Weighted Bipartite Matching and Extensions to Combinatorial Auctions". Algorithms – ESA 2013. Lecture Notes in Computer Science. 8125. pp. 589–600. doi:10.1007/978-3-642-40450-4_50. ISBN 978-3-642-40449-8.

References

- Bearden, J.N. (2006). "A new secretary problem with rank-based selection and cardinal payoffs". Journal of Mathematical Psychology 50: 58–9. doi:10.1016/j.jmp.2005.11.003.

- Bearden, J.N.; Murphy, R.O.; Rapoport, A. (2005). "A multi-attribute extension of the secretary problem: Theory and experiments". Journal of Mathematical Psychology 49 (5): 410–425. doi:10.1016/j.jmp.2005.08.002.

- Bearden, J. Neil; Rapoport, Amnon; Murphy, Ryan O. (September 2006). "Sequential Observation and Selection with Rank-Dependent Payoffs: An Experimental Study". Management Science 52 (9): 1437–1449. doi:10.1287/mnsc.1060.0535.

- Bruss, F. Thomas (June 2000). "Sum the odds to one and stop". The Annals of Probability 28 (3): 1384–1391. doi:10.1214/aop/1019160340.

- Bruss, F. Thomas (October 2003). "A note on bounds for the odds theorem of optimal stopping". The Annals of Probability 31 (4): 1859–1961. doi:10.1214/aop/1068646368.

- Bruss, F. Thomas (August 1984). "A Unified Approach to a Class of Best Choice Problems with an Unknown Number of Options". The Annals of Probability 12 (3): 882–889. doi:10.1214/aop/1176993237.

- Flood, Merrill R. (1958). "Proof of the optimum strategy". Letter to Martin Gardner. Martin Gardner papers series 1, box 5, folder 19: Stanford University Archives.CS1 maint: location (link)

- Freeman, P.R. (1983). "The secretary problem and its extensions: A review". International Statistical Review / Revue Internationale de Statistique 51 (2): 189–206. doi:10.2307/1402748.

- Gardner, Martin (1966). "3". New Mathematical Diversions from Scientific American. Simon and Schuster. [reprints his original column published in February 1960 with additional comments]

- Girdhar, Yogesh; Dudek, Gregory (2009). "Optimal Online Data Sampling or How to Hire the Best Secretaries". 2009 Canadian Conference on Computer and Robot Vision. pp. 292–298. doi:10.1109/CRV.2009.30. ISBN 978-1-4244-4211-9.

- Gilbert, J; Mosteller, F (1966). "Recognizing the Maximum of a Sequence". Journal of the American Statistical Association 61 (313): 35–73. doi:10.2307/2283044.

- Gnedin, A. (1994). "A solution to the game of Googol". Annals of Probability 22 (3): 1588–1595. doi:10.1214/aop/1176988613.

- Gnedin, A. (2021). "The best choice problem with random arrivals: How to beat the 1/e-strategy". Stochastic Processes and Their Applications 145: 226–240. doi:10.1016/j.spa.2021.12.008.

- Hill, T.P. "Knowing When to Stop". American Scientist, Vol. 97, 126-133 (2009). (For French translation, see cover story in the July issue of Pour la Science (2009))

- Ketelaar, Timothy; Todd, Peter M. (2001). "Framing Our Thoughts: Ecological Rationality as Evolutionary Psychology's Answer to the Frame Problem". Conceptual Challenges in Evolutionary Psychology. Studies in Cognitive Systems. 27. pp. 179–211. doi:10.1007/978-94-010-0618-7_7. ISBN 978-94-010-3890-4.

- Matsui, T; Ano, K (2016). "Lower bounds for Bruss' odds problem with multiple stoppings". Mathematics of Operations Research 41 (2): 700–714. doi:10.1287/moor.2015.0748.

- Miller, Geoffrey F. (2001). The mating mind: how sexual choice shaped the evolution of human nature. Anchor Books. ISBN 978-0-385-49517-2.

- Sardelis, Dimitris A.; Valahas, Theodoros M. (March 1999). "Decision Making: A Golden Rule". The American Mathematical Monthly 106 (3): 215. doi:10.2307/2589677.

- Seale, D.A.; Rapoport, A. (1997). "Sequential decision making with relative ranks: An experimental investigation of the 'secretary problem'". Organizational Behavior and Human Decision Processes 69 (3): 221–236. doi:10.1006/obhd.1997.2683.

- Stein, W.E.; Seale, D.A.; Rapoport, A. (2003). "Analysis of heuristic solutions to the best choice problem". European Journal of Operational Research 151: 140–152. doi:10.1016/S0377-2217(02)00601-X.

- Vanderbei, R. J. (November 1980). "The Optimal Choice of a Subset of a Population". Mathematics of Operations Research 5 (4): 481–486. doi:10.1287/moor.5.4.481.

- Vanderbei, Robert J. (2012). The postdoc variant of the secretary problem (Report). https://vanderbei.princeton.edu/tex/PostdocProblem/PostdocProb.pdf.

External links

- OEIS sequence A054404 (Number of daughters to wait before picking in sultan's dowry problem with n daughters)

- Weisstein, Eric W.. "Sultan's Dowry Problem". http://mathworld.wolfram.com/SultansDowryProblem.html.

- Neil Bearden. "Optimal Search (Secretary Problems)". http://www.spotlightmind.com/optimal-search.

- Optimal Stopping and Applications book by Thomas S. Ferguson

Notes

- ↑

import numpy as np import pandas as pd # Define the function for which you want to find the maximum def func(r, n): if r == 1: return 0 else: return (r - 1) / n * np.sum([1 / (i - 1) for i in range(r, n+1)]) # Define a function to solve the problem for a specific n def solve(n): values = [func(r, n) for r in range(1, n+1)] r_max = np.argmax(values) + 1 return r_max, values[r_max - 1] # Define a function to print the results as a Markdown table def print_table(n_max): # Prepare data for the table data = [solve(n) for n in range(1, n_max+1)] df = pd.DataFrame(data, columns=['r', 'Max Value'], index=range(1, n_max+1)) df.index.name = 'n' # Convert the DataFrame to Markdown and print print(df.transpose().to_markdown()) # Print the table for n from 1 to 10 print_table(10)

|