Semi-global matching

Semi-global matching (SGM) is a computer vision algorithm for estimating a dense disparity map from a rectified stereo image pair, introduced in 2005 by Heiko Hirschmüller while working at the German Aerospace Center.[1] Given its predictable run time, its favourable trade-off between result quality and computing time, and its suitability for fast parallel implementation in ASIC or FPGA, it has been widely adopted in real-time stereo vision applications such as robotics and advanced driver assistance systems.[2][3]

Problem

Pixelwise stereo matching allows real-time calculation of disparity maps by measuring the similarity of each pixel in one stereo image to each pixel within a subset of the other stereo image. Given a rectified stereo image pair, for a pixel with coordinates $(x, y)$ the set of candidate pixels in the other image is usually selected as $\{(\hat{x}, y) \mid x \le \hat{x} \le x + D\}$, where $D$ is a maximum allowed disparity shift.[1]
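The candidate search can be illustrated with a minimal sketch on a single scanline. The function names, the absolute-difference cost and the convention that candidates lie at columns $x$ to $x + D$ are assumptions made for illustration, not part of the cited description.

```python
def candidate_columns(x, max_disp, width):
    """Columns of the other image searched for the pixel at column x (same row)."""
    return [xh for xh in range(x, x + max_disp + 1) if xh < width]


def best_match(ref_row, other_row, x, max_disp):
    """Winner-take-all: candidate column with the smallest absolute intensity difference."""
    cols = candidate_columns(x, max_disp, len(other_row))
    return min(cols, key=lambda xh: abs(ref_row[x] - other_row[xh]))


ref_row = [10, 50, 90, 20, 20, 20]
other_row = [0, 0, 10, 50, 90, 20]     # same pattern shifted by two columns
match = best_match(ref_row, other_row, 1, 3)
print(match - 1)                        # disparity of the pixel at column 1: 2
```

Because each pixel is decided independently, nothing in this sketch prevents neighbouring pixels from choosing very different disparities.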

A simple search for the best matching pixel produces many spurious matches, and this problem can be mitigated with the addition of a regularisation term that penalises jumps in disparity between adjacent pixels, with a cost function in the form

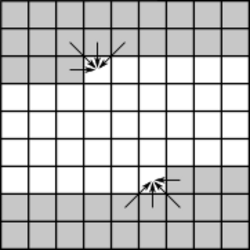

where is the pixel-wise dissimilarity cost at pixel with disparity , and is the regularisation cost between pixels and with disparities and respectively, for all pairs of neighbouring pixels . Such constraint can be efficiently enforced on a per-scanline basis by using dynamic programming (e.g. the Viterbi algorithm), but such limitation can still introduce streaking artefacts in the depth map, because little or no regularisation is performed across scanlines.[4]
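A minimal sketch of per-scanline optimisation by dynamic programming follows. It assumes the regularisation cost depends only on the two disparities, and the names `scanline_energy`, `viterbi_scanline` and the linear penalty in the example are hypothetical choices for illustration.

```python
def scanline_energy(cost, disp, penalty):
    """Energy of one scanline: data costs plus penalties between adjacent pixels."""
    data = sum(cost[p][d] for p, d in enumerate(disp))
    smooth = sum(penalty(a, b) for a, b in zip(disp, disp[1:]))
    return data + smooth


def viterbi_scanline(cost, penalty):
    """Disparities minimising scanline_energy, found by dynamic programming."""
    n_disp = len(cost[0])
    best = list(cost[0])       # best[d]: minimal energy of a path ending at disparity d
    back = []                  # back[p - 1][d]: best predecessor disparity for pixel p
    for p in range(1, len(cost)):
        prev, best, choice = best, [], []
        for d in range(n_disp):
            k = min(range(n_disp), key=lambda j: prev[j] + penalty(j, d))
            choice.append(k)
            best.append(cost[p][d] + prev[k] + penalty(k, d))
        back.append(choice)
    d = min(range(n_disp), key=lambda j: best[j])
    path = [d]
    for choice in reversed(back):   # follow the stored predecessors backwards
        d = choice[d]
        path.append(d)
    return path[::-1]


cost = [[0, 5], [4, 3], [0, 5]]             # the middle pixel is slightly ambiguous
penalty = lambda a, b: 2 * abs(a - b)
print(viterbi_scanline(cost, penalty))      # [0, 0, 0]
```

In this toy input a purely pixelwise search would pick disparity 1 for the middle pixel (energy 7 for the whole line), whereas the regularised optimum keeps the line constant (energy 4).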

A possible solution is to perform global optimisation in 2D, which is, however, an NP-complete problem in the general case. For some families of cost functions (e.g. submodular functions) a solution with strong optimality properties can be found in polynomial time using graph cut optimization, but such global methods are generally too expensive for real-time processing.[5]

Algorithm

The idea behind SGM is to perform line optimisation along multiple directions and to compute an aggregated cost $S(p, d)$ by summing the costs $L_r(p, d)$ to reach pixel $p$ with disparity $d$ from each direction $r$. The number of directions affects the run time of the algorithm, and while 16 directions usually ensure good quality, a lower number can be used to achieve faster execution.[6] A typical 8-direction implementation of the algorithm can compute the cost in two passes: a forward pass accumulating the cost from the left, top-left, top, and top-right, and a backward pass accumulating the cost from the right, bottom-right, bottom, and bottom-left.[7] A single-pass algorithm can be implemented with only five directions.[8]
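The split of the eight directions into two passes can be sketched as follows. The encoding of each direction as a `(dx, dy)` step with `y` growing downwards, and the helper names, are assumptions for illustration. A direction can be handled in the forward (raster-order) pass exactly when the predecessor pixel `(x - dx, y - dy)` has already been visited.

```python
# Step from the previous pixel to the current one for each path direction.
FORWARD = [(1, 0), (1, 1), (0, 1), (-1, 1)]      # from left, top-left, top, top-right
BACKWARD = [(-dx, -dy) for dx, dy in FORWARD]    # from right, bottom-right, bottom, bottom-left


def available_in_forward_pass(step):
    """True if the predecessor pixel precedes the current one in raster order."""
    dx, dy = step
    return dy > 0 or (dy == 0 and dx > 0)


def aggregate(path_costs):
    """Sum the per-direction cost vectors of one pixel into one cost per disparity."""
    return [sum(column) for column in zip(*path_costs)]


print(all(available_in_forward_pass(s) for s in FORWARD))     # True
print(any(available_in_forward_pass(s) for s in BACKWARD))    # False
print(aggregate([[1, 2], [3, 4]]))                            # [4, 6]
```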

The cost is composed of a matching term $D(p, d)$ and a binary regularisation term $R(d_p, d_q)$. The former can in principle be any local image dissimilarity measure; commonly used functions are absolute or squared intensity difference (usually summed over a window around the pixel, and after applying a high-pass filter to the images to gain some illumination invariance), Birchfield–Tomasi dissimilarity, Hamming distance of the census transform, and Pearson correlation (normalized cross-correlation). Even mutual information can be approximated as a sum over the pixels, and thus used as a local similarity metric.[9] The regularisation term has the form

$$R(d_p, d_q) = \begin{cases} 0 & \text{if } d_p = d_q \\ P_1 & \text{if } |d_p - d_q| = 1 \\ P_2 & \text{if } |d_p - d_q| > 1 \end{cases}$$

where $P_1$ and $P_2$ are two constant parameters, with $P_1 < P_2$. The three-way comparison assigns a smaller penalty to unit changes in disparity, thus allowing smooth transitions corresponding e.g. to slanted surfaces, and penalises larger jumps while preserving discontinuities, because the penalty $P_2$ is constant regardless of the size of the jump. To further preserve discontinuities, the gradient of the intensity can be used to adapt the penalty term, because discontinuities in depth usually correspond to a discontinuity in image intensity $I$, by setting

$$P_2 = \max\left\{P_1, \frac{\hat{P}_2}{|I(p) - I(q)|}\right\}$$

for each pair of pixels $p$ and $q$, where $\hat{P}_2$ is a fixed base penalty.[10]
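The two penalty rules can be sketched in a few lines, assuming the usual form with a small penalty `p1` for unit disparity changes and a larger penalty `p2` for all bigger jumps. The function names are hypothetical, and clamping the intensity difference to at least 1 (to avoid a division by zero in flat regions) is an assumption of this sketch rather than part of the cited formulation.

```python
def regularisation(d_p, d_q, p1, p2):
    """Penalty between neighbouring disparities: 0, p1 for a unit step, p2 otherwise."""
    diff = abs(d_p - d_q)
    if diff == 0:
        return 0
    if diff == 1:
        return p1
    return p2


def adaptive_p2(p1, p2_hat, i_p, i_q):
    """Lower the large-jump penalty across strong intensity edges, never below p1."""
    gradient = max(abs(i_p - i_q), 1)   # assumption: clamp to avoid dividing by zero
    return max(p1, p2_hat / gradient)


print(regularisation(3, 4, 10, 120))    # 10
print(regularisation(3, 7, 10, 120))    # 120
print(adaptive_p2(10, 120, 100, 104))   # 30.0
print(adaptive_p2(10, 120, 0, 200))     # 10
```

The last call shows the lower bound at work: across a very strong edge the adapted penalty would fall to 0.6, so it is held at `p1`.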

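The accumulated cost $S(p, d) = \sum_r L_r(p, d)$ is the sum of all costs $L_r(p, d)$ to reach pixel $p$ with disparity $d$ along direction $r$. Each term can be expressed recursively as

$$L_r(p, d) = D(p, d) + \min\left\{ L_r(p - r, d),\; L_r(p - r, d - 1) + P_1,\; L_r(p - r, d + 1) + P_1,\; \min_i L_r(p - r, i) + P_2 \right\} - \min_k L_r(p - r, k)$$

where the minimum cost at the previous pixel, $\min_k L_r(p - r, k)$, is subtracted for numerical stability, since it is constant for all values of disparity at the current pixel and therefore does not affect the optimisation.[6]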
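The recursion can be sketched for a single path, here a one-dimensional sequence of pixels traversed in order. The function name and the representation of the matching costs as a list of per-pixel lists are assumptions for illustration; the first pixel has no predecessor, so its path cost is taken to be its matching cost.

```python
def path_costs(cost, p1, p2):
    """Path costs along one direction; cost[p][d] is the matching cost of pixel p at disparity d."""
    n_disp = len(cost[0])
    prev = list(cost[0])                 # first pixel on the path: no predecessor
    out = [prev]
    for row in cost[1:]:
        prev_min = min(prev)             # minimum path cost at the previous pixel
        cur = []
        for d in range(n_disp):
            candidates = [prev[d], prev_min + p2]     # same disparity, or any larger jump
            if d > 0:
                candidates.append(prev[d - 1] + p1)   # unit step from d - 1
            if d + 1 < n_disp:
                candidates.append(prev[d + 1] + p1)   # unit step from d + 1
            cur.append(row[d] + min(candidates) - prev_min)
        out.append(cur)
        prev = cur
    return out


cost = [[0, 5, 5], [4, 3, 5], [0, 5, 5]]
print(path_costs(cost, p1=1, p2=4))      # [[0, 5, 5], [4, 4, 9], [0, 5, 6]]
```

Subtracting `prev_min` keeps every path cost bounded by the largest matching cost plus `p2` (9 in this example), so the values do not grow along the path.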

The value of disparity at each pixel is given by $d^*(p) = \operatorname{argmin}_d S(p, d)$, and sub-pixel accuracy can be achieved by fitting a curve through $S(p, d^*(p))$ and its neighbouring costs and taking the minimum along the curve. Since the two images in the stereo pair are not treated symmetrically in the calculations, a consistency check can be performed by computing the disparity a second time in the opposite direction, swapping the roles of the left and right images, and invalidating the result for the pixels where the two calculations disagree. Further post-processing techniques for the refinement of the disparity image include morphological filtering to remove outliers, intensity consistency checks to refine textureless regions, and interpolation to fill in pixels invalidated by consistency checks.[11]
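A minimal sketch of disparity selection, sub-pixel refinement and the consistency check on one scanline follows. Several choices here are assumptions for illustration: the fitted curve is a parabola through three cost values, a reference pixel at column `x` with disparity `d` is taken to match column `x + d` in the other image, and the tolerance parameter `tol` is hypothetical.

```python
def winner_take_all(s):
    """Index of the minimum aggregated cost."""
    return min(range(len(s)), key=lambda d: s[d])


def subpixel_disparity(s, d):
    """Vertex of the parabola through the costs at d - 1, d and d + 1."""
    if d == 0 or d == len(s) - 1:
        return float(d)                        # no neighbour on one side
    denom = s[d - 1] - 2 * s[d] + s[d + 1]
    if denom == 0:
        return float(d)                        # flat costs: keep the integer value
    return d + (s[d - 1] - s[d + 1]) / (2 * denom)


def consistency_check(disp_ref, disp_other, tol=0):
    """Replace by None the disparities not confirmed by the swapped-role result."""
    out = []
    for x, d in enumerate(disp_ref):
        xo = x + d                             # assumed position of the match
        ok = xo < len(disp_other) and abs(disp_other[xo] - d) <= tol
        out.append(d if ok else None)
    return out


s = [10, 4, 6]
d = winner_take_all(s)
print(d, subpixel_disparity(s, d))                          # 1 1.25
print(consistency_check([1, 1, 2, 0], [0, 1, 1, 1]))        # [1, 1, None, None]
```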

The matching costs $D(p, d)$ for all pixels $p = (x, y)$ and disparities $d$ can be precomputed as a cost volume. In an implementation of the full algorithm, using $D$ possible disparity shifts and $R$ directions, each pixel is subsequently visited $R$ times; the computational complexity of the algorithm for an image of size $W \times H$ is therefore $O(WHD)$.[7]
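As a rough worked example, assume a hypothetical 640 × 480 image with 64 disparity shifts and 8 directions (figures chosen only for illustration). Each pixel is visited once per direction and each visit updates one path cost per disparity, so the number of path-cost updates is

```latex
W \times H \times D \times R = 640 \times 480 \times 64 \times 8 = 157\,286\,400
```

The count grows linearly with the number of pixels and with the disparity range, while the number of directions contributes only a constant factor.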

Memory efficient variant

The main drawback of SGM is its memory consumption. An implementation of the two-pass 8-direction version of the algorithm requires storing $W \times H \times D + 3 \times W \times D + D$ elements, since the accumulated cost volume has a size of $W \times H \times D$, and to compute the cost for a pixel during each pass it is necessary to keep track of the $D$ path costs of its left or right neighbour along one direction and of the $W \times D$ path costs of the pixels in the row above or below along 3 directions.[7] One solution to reduce memory consumption is to compute SGM on partially overlapping image tiles, interpolating the values over the overlapping regions. This method also allows SGM to be applied to very large images that would not otherwise fit in memory.[12]
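The element count can be made concrete with a short calculation. The function name and the 640 × 480 image with 64 disparities are hypothetical values chosen for illustration; the result counts stored elements, not bytes.

```python
def sgm_memory_elements(width, height, n_disp):
    """Stored elements for two-pass, 8-direction SGM."""
    volume = width * height * n_disp    # accumulated cost volume
    rows = 3 * width * n_disp           # path costs of the adjacent row, 3 directions
    neighbour = n_disp                  # path costs of the left or right neighbour
    return volume + rows + neighbour


print(sgm_memory_elements(640, 480, 64))    # 19783744
```

The accumulated cost volume accounts for almost all of the total, which is why the variants described in this section target it.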

A memory-efficient approximation of SGM stores for each pixel only the costs for the disparity values that represent a minimum along some direction, instead of all possible disparity values. The true minimum is highly likely to be predicted by the minima along the eight directions, thus yielding results of similar quality. The algorithm uses eight directions and three passes. During the first pass it stores for each pixel the cost for the optimal disparity along each of the four top-down directions, plus the two closest lower and higher values (for sub-pixel interpolation). Since the cost volume is stored in a sparse fashion, the four values of optimal disparity also need to be stored. In the second pass, the other four bottom-up directions are computed, completing the calculations for the four disparity values selected in the first pass, which have now been evaluated along all eight directions. An intermediate value of cost and disparity is computed from the output of the first pass and stored, and the memory of the four outputs from the first pass is replaced with the four optimal disparity values and their costs from the directions in the second pass. A third pass goes again along the same directions used in the first pass, completing the calculations for the disparity values from the second pass. The final result is then selected among the four minima from the third pass and the intermediate result computed during the second pass.[13]

In each pass four disparity values are stored, together with three cost values each (the minimum and its two closest neighbouring costs), plus the disparity and cost values of the intermediate result, for a total of eighteen values for each pixel, making the total memory consumption equal to $18 \times W \times H + 3 \times W \times D + D$, at the cost in time of an additional pass over the image.[13]
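The per-pixel count and the resulting total can be checked with a short worked example, again assuming a hypothetical 640 × 480 image with 64 disparities (values chosen only for illustration):

```latex
\underbrace{4}_{\text{disparities}} + \underbrace{4 \times 3}_{\text{costs}} + \underbrace{2}_{\text{intermediate result}} = 18
\qquad
18 \times 640 \times 480 + 3 \times 640 \times 64 + 64 = 5\,652\,544
```

For the same image the full accumulated cost volume of the standard two-pass algorithm alone holds $640 \times 480 \times 64 = 19\,660\,800$ elements, so under these assumptions the sparse variant needs roughly 3.5 times less memory. The saving grows with the disparity range, because the per-pixel storage no longer depends on it.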

References

- [1] Hirschmüller (2005), pp. 807–814

- [2] Hirschmüller (2011), pp. 178–184

- [3] Spangenberg et al. (2013), pp. 34–41

- [4] Hirschmüller (2005), p. 809

- [5] Hirschmüller (2005), p. 807

- [6] Hirschmüller (2007), p. 331

- [7] Hirschmüller et al. (2012), p. 372

- [8] "OpenCV cv::StereoSGBM Class Reference". https://docs.opencv.org/4.1.1/d2/d85/classcv_1_1StereoSGBM.html#details.

- [9] Kim et al. (2003), pp. 1033–1040

- [10] Hirschmüller (2007), p. 330

- [11] Hirschmüller (2007), pp. 332–334

- [12] Hirschmüller (2007), pp. 334–335

- [13] Hirschmüller et al. (2012), p. 373

- Hirschmüller, Heiko (2005). "Accurate and efficient stereo processing by semi-global matching and mutual information". pp. 807–814.

- Hirschmüller, Heiko (2007). "Stereo processing by semiglobal matching and mutual information". IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE) 30 (2): 328–341. doi:10.1109/TPAMI.2007.1166. PMID 18084062.

- Hirschmüller, Heiko (2011). "Semi-global matching - motivation, developments and applications". 11. pp. 173–184.

- Hirschmüller, Heiko; Buder, Maximilian; Ernst, Ines (2012). "Memory efficient semi-global matching". ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences 3: 371–376. doi:10.5194/isprsannals-I-3-371-2012. Bibcode: 2012ISPAn..I3..371H.

- Kim, Junhwan; Kolmogorov, Vladimir; Zabih, Ramin (2003). "Visual correspondence using energy minimization and mutual information". pp. 1033–1040.

- Spangenberg, Robert; Langner, Tobias; Rojas, Raúl (2013). "Weighted semi-global matching and center-symmetric census transform for robust driver assistance". pp. 34–41.
