Surprisal analysis

Surprisal analysis is an information-theoretic analysis technique that integrates and applies principles of thermodynamics and maximal entropy. It can relate the underlying microscopic properties of a system to its macroscopic bulk properties. It has been applied in a range of disciplines, including engineering, physics, chemistry and biomedical engineering. More recently, it has been extended to characterize the state of living cells, in particular to monitor and characterize biological processes in real time using transcriptional data.

History

In 1972, surprisal analysis was formulated at the Hebrew University of Jerusalem as a joint effort by Raphael David Levine, Richard Barry Bernstein and Avinoam Ben-Shaul. Levine and colleagues had recognized a need to better understand the dynamics of non-equilibrium systems, particularly small systems, to which thermodynamic reasoning did not seem applicable.[1] Alhassid and Levine first applied surprisal analysis in nuclear physics, to characterize the distribution of products in heavy ion reactions. Since its formulation, surprisal analysis has become a critical tool for the analysis of reaction dynamics and is an official IUPAC term.[2]

Application

Maximum entropy methods are at the core of a new view of scientific inference that allows the analysis and interpretation of large and sometimes noisy data sets. Surprisal analysis extends the principles of maximal entropy and of thermodynamics, treating both equilibrium thermodynamics and statistical mechanics as inferential processes. This makes surprisal analysis an effective method for quantifying and compacting information and for providing an unbiased characterization of systems. It is particularly useful for characterizing and understanding the dynamics of small systems, where energy fluxes that are negligible in large systems can strongly influence system behavior.

First, surprisal analysis identifies the state of a system when it reaches its maximal entropy, or thermodynamic equilibrium. This is known as the balance state of the system, because once a system reaches its maximal entropy it can no longer initiate or participate in spontaneous processes. Having determined the balance state, surprisal analysis then characterizes all the states in which the system deviates from it. These deviations are caused by constraints that prevent the system from reaching its maximal entropy. Surprisal analysis is used both to identify and to characterize these constraints. In terms of the constraints, the probability $P(n)$ of an event $n$ is given by

$$P(n) = P^0(n)\exp\left[-\sum_{\alpha}\lambda_\alpha G_\alpha(n)\right].$$

Here $P^0(n)$ is the probability of the event $n$ in the balance state. It is usually called the "prior probability" because it is the probability of an event prior to any constraints. The surprisal itself is defined as

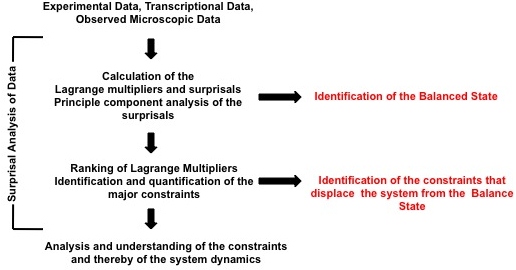

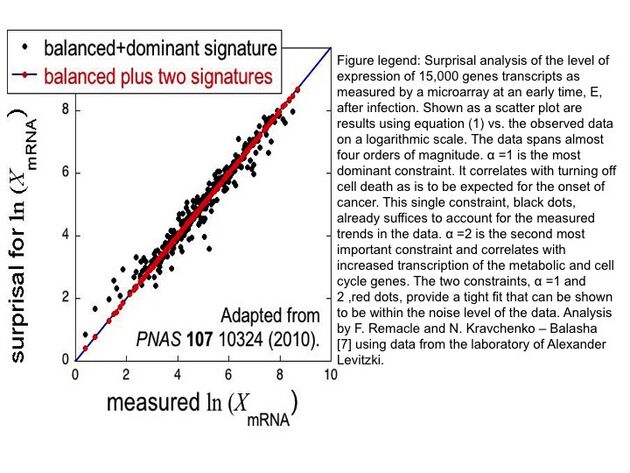
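The maximum-entropy form of $P(n)$ and its surprisal $I(n) = -\ln[P(n)/P^0(n)]$, which should be linear in the constraint values $G_\alpha(n)$, can be checked numerically. The sketch below assumes NumPy and a small hypothetical system of five events with a uniform prior and a single constraint $G_1(n) = n$. It also assumes, as is common in maximum-entropy treatments, that normalization is carried by an extra term with $G_0(n) = 1$ whose multiplier $\lambda_0$ is fitted alongside the others. It builds $P(n)$ from the prior and a chosen multiplier, computes the surprisal, and recovers the multipliers by a linear least-squares fit of $I(n)$ against the constraint values. The function names are illustrative, not an established API.

```python
import numpy as np

def maxent_distribution(prior, G, lam):
    """P(n) proportional to P0(n) * exp(-sum_a lam[a] * G[a, n]), normalized to 1.

    prior: shape (N,) prior probabilities P0(n) of the balance state
    G:     shape (K, N), G[a, n] is the value of constraint a for event n
    lam:   shape (K,) Lagrange multipliers
    """
    lam = np.asarray(lam, dtype=float)
    G = np.asarray(G, dtype=float)
    weights = np.asarray(prior, dtype=float) * np.exp(-lam @ G)
    return weights / weights.sum()  # the normalization plays the role of lambda_0

def surprisal(P, prior):
    """I(n) = -ln[P(n) / P0(n)] for every event n."""
    return -np.log(np.asarray(P, dtype=float) / np.asarray(prior, dtype=float))

def fit_multipliers(P, prior, G):
    """Least-squares fit of I(n) = lambda_0 + sum_a lambda_a G_a(n).

    Returns (lambda_0, array of lambda_a); lambda_0 is the normalization
    term, i.e. the multiplier of the assumed constraint G_0(n) = 1.
    """
    G = np.asarray(G, dtype=float)
    I = surprisal(P, prior)
    # Design matrix: a column of ones for lambda_0, then one column per constraint
    A = np.column_stack([np.ones(G.shape[1]), G.T])
    coef, *_ = np.linalg.lstsq(A, I, rcond=None)
    return coef[0], coef[1:]

# Hypothetical example: five events, uniform prior, one constraint G_1(n) = n
n = np.arange(5.0)
prior = np.full(5, 0.2)
G = n.reshape(1, -1)
P = maxent_distribution(prior, G, [0.5])
lam0, lam = fit_multipliers(P, prior, G)
print(lam0, lam)  # lam recovers the multiplier 0.5 used to build P
```

Because this synthetic $P(n)$ was generated from one constraint, the fit is exact. With measured distributions the fit is not exact, and the residuals can indicate whether the chosen constraints suffice.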

The surprisal equals the sum over the constraints and is a measure of the deviation from the balance state. The constraints are ranked by how strongly they cause the system to deviate from the balance state, from most to least influential, and this ranking is provided by the Lagrange multipliers. The most important constraint, which is often sufficient on its own to characterize a system, has the largest Lagrange multiplier. The multiplier for constraint $\alpha$ is denoted above as $\lambda_\alpha$; larger multipliers identify more influential constraints. The event variable $G_\alpha(n)$ is the value of constraint $\alpha$ for the event $n$. Using the method of Lagrange multipliers[3] requires that the prior probability and the nature of the constraints be identified experimentally. A numerical algorithm for determining the Lagrange multipliers was introduced by Agmon et al.[4] More recently, singular value decomposition and principal component analysis of the surprisal have been used to identify constraints on biological systems, extending surprisal analysis to the study of biological dynamics, as shown in the figure.
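Finding multipliers that reproduce given average values $\langle G_\alpha \rangle$ of the constraints can be posed as minimizing a convex function of the multipliers. The sketch below is not the published algorithm of Agmon et al.; it is a plain Newton iteration, assuming NumPy, on the dual function $F(\lambda) = \ln Z(\lambda) + \sum_\alpha \lambda_\alpha \langle G_\alpha \rangle$ with $Z(\lambda) = \sum_n P^0(n)\exp[-\sum_\alpha \lambda_\alpha G_\alpha(n)]$. Its gradient is the mismatch between the target averages and the current averages under $P(n)$, and its Hessian is the covariance matrix of the constraints. The name `solve_multipliers` and the example target are hypothetical.

```python
import numpy as np

def _distribution(prior, G, lam):
    """P(n) proportional to P0(n) * exp(-sum_a lam_a G_a(n)), normalized."""
    w = prior * np.exp(-lam @ G)
    return w / w.sum()

def solve_multipliers(prior, G, targets, tol=1e-10, max_iter=50):
    """Newton's method for multipliers lam such that <G_a> under P equals targets[a].

    prior: shape (N,); G: shape (K, N); targets: shape (K,).
    No line search is used, so targets near the edge of the attainable
    range may fail to converge within max_iter.
    """
    prior = np.asarray(prior, dtype=float)
    G = np.asarray(G, dtype=float)
    targets = np.asarray(targets, dtype=float)
    lam = np.zeros(G.shape[0])  # start from the prior (all multipliers zero)
    for _ in range(max_iter):
        P = _distribution(prior, G, lam)
        mean = G @ P                  # current averages <G_a>
        grad = targets - mean         # gradient of the dual function F
        if np.max(np.abs(grad)) < tol:
            break
        centered = G - mean[:, None]
        hess = (centered * P) @ centered.T  # covariance of the constraints under P
        lam = lam - np.linalg.solve(hess, grad)
    return lam, _distribution(prior, G, lam)

# Hypothetical example: uniform prior over n = 0..4, require the average <n> = 1.0
n = np.arange(5.0)
lam, P = solve_multipliers(np.full(5, 0.2), n.reshape(1, -1), [1.0])
print(lam, P @ n)
```

A positive multiplier here means that large values of $n$ are suppressed relative to the uniform prior, which pulls the average below the prior's value of 2.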

In physics

Surprisal (a term coined[5] in this context by Myron Tribus[6]) was first introduced to better understand the specificity of energy release and the selectivity of energy requirements in elementary chemical reactions.[1] This gave rise to a series of new experiments which demonstrated that in elementary reactions the nascent products could be probed and that energy is released preferentially rather than distributed statistically.[1] Surprisal analysis was initially applied to a small three-molecule system that did not seem to conform to the principles of thermodynamics, and a single dominant constraint was identified that was sufficient to describe the dynamic behavior of that system. Similar results were then observed in nuclear reactions, where differential states with varying energy partitioning are possible. Chemical reactions often require energy to overcome an activation barrier, and surprisal analysis is applicable to such reactions as well.[7] Later, surprisal analysis was extended to mesoscopic systems, bulk systems[3] and dynamical processes.[8]

In biology and biomedical sciences

Surprisal analysis has been extended to better characterize and understand cellular processes[9] (see figure), biological phenomena and human disease, with a view to personalized diagnostics. It was first used to identify genes implicated in the balance state of cells in vitro; the genes most prominent in the balance state were those directly responsible for maintaining cellular homeostasis.[10] Similarly, it has been used to discern two distinct phenotypes during the epithelial-to-mesenchymal transition (EMT) of cancer cells.[11]
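In the biological applications the measured quantity is an expression level $X_i(t)$ for each gene $i$ at each time point $t$. The sketch below assumes a formulation in which the logarithm of the expression matrix is written as $\ln X_i(t) = -\sum_{\alpha} G_{i\alpha}\lambda_\alpha(t)$, obtains $G_{i\alpha}$ and $\lambda_\alpha(t)$ from a singular value decomposition with NumPy, and reads the leading term ($\alpha = 0$) as the balance state and later terms as constraints. The data are synthetic, the function name `surprisal_svd` is hypothetical, and this is a simplified illustration rather than the exact pipeline of the cited studies.

```python
import numpy as np

def surprisal_svd(expression, n_terms=2):
    """Decompose ln X[i, t] = -sum_a G[i, a] * lam[a, t] with an SVD.

    expression: shape (genes, timepoints), strictly positive levels.
    Returns G (genes, k), lam (k, timepoints) and the k singular values;
    term 0 is read as the balance state, later terms as constraints.
    SVD signs are arbitrary: flipping G[:, a] and lam[a] together changes nothing.
    """
    log_x = np.log(np.asarray(expression, dtype=float))
    U, s, Vt = np.linalg.svd(log_x, full_matrices=False)
    k = min(n_terms, s.size)
    G = U[:, :k]
    lam = -s[:k, None] * Vt[:k]  # minus sign so that -G @ lam rebuilds log_x
    return G, lam, s[:k]

# Synthetic data: a fixed balance state plus one constraint growing over time
rng = np.random.default_rng(0)
genes, times = 50, 6
baseline = rng.uniform(2.0, 8.0, size=genes)  # hypothetical balance-state log levels
pattern = rng.normal(size=genes)              # hypothetical constraint pattern
amplitude = np.linspace(0.0, 1.0, times)      # constraint switched on gradually
log_x = baseline[:, None] + 0.3 * pattern[:, None] * amplitude[None, :]
G, lam, s = surprisal_svd(np.exp(log_x), n_terms=2)

# Genes weighing most in the balance state (largest |G_i0|)
top_genes = np.argsort(-np.abs(G[:, 0]))[:5]
print(s, top_genes)
```

Ranking genes by $|G_{i0}|$ is sign-invariant, which matters because an SVD fixes each term only up to an overall sign.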

See also

- Information content

- Information theory

- Singular value decomposition

- Principal component analysis

- Entropy

- Decision tree learning

- Information gain in decision trees

References

- Levine, Raphael D. (2005). Molecular Reaction Dynamics. Cambridge University Press. ISBN 9780521842761. https://books.google.com/books?id=FVqyS31OM7sC.

- Agmon, N; Alhassid, Y; Levine, RD (1979). "An algorithm for finding the distribution of maximal entropy". Journal of Computational Physics 30 (2): 250–258. doi:10.1016/0021-9991(79)90102-5. Bibcode: 1979JCoPh..30..250A.

- Levine, RD (1980). "An information theoretical approach to inversion problems". J. Phys. A 13 (1): 91. doi:10.1088/0305-4470/13/1/011. Bibcode: 1980JPhA...13...91L.

- Levine, RD; Bernstein, RB (1974). "Energy disposal and energy consumption in elementary chemical reactions: The information theoretic approach". Acc. Chem. Res. 7 (12): 393–400. doi:10.1021/ar50084a001.

- Bernstein, R. B.; Levine, R. D. (1972). "Entropy and Chemical Change. I. Characterization of Product (and Reactant) Energy Distributions in Reactive Molecular Collisions: Information and Entropy Deficiency". The Journal of Chemical Physics 57 (1): 434–449. doi:10.1063/1.1677983. Bibcode: 1972JChPh..57..434B.

- Tribus, Myron (1961). Thermodynamics and Thermostatics: An Introduction to Energy, Information and States of Matter, with Engineering Applications. New York: D. Van Nostrand. pp. 64–66.

- Levine, RD (1978). "Information theory approach to molecular reaction dynamics". Annu. Rev. Phys. Chem. 29: 59–92. doi:10.1146/annurev.pc.29.100178.000423. Bibcode: 1978ARPC...29...59L.

- Remacle, F; Levine, RD (1993). "Maximal entropy spectral fluctuations and the sampling of phase space". J. Chem. Phys. 99 (4): 2383–2395. doi:10.1063/1.465253. Bibcode: 1993JChPh..99.2383R.

- Remacle, F; Kravchenko-Balasha, N; Levitzki, A; Levine, RD (June 1, 2010). "Information-theoretic analysis of phenotype changes in early stages of carcinogenesis". PNAS 107 (22): 10324–29. doi:10.1073/pnas.1005283107. PMID 20479229. Bibcode: 2010PNAS..10710324R.

- Kravchenko-Balasha, Nataly; Levitzki, Alexander; Goldstein, Andrew; Rotter, Varda; Gross, A.; Remacle, F.; Levine, R. D. (March 20, 2012). "On a fundamental structure of gene networks in living cells". PNAS 109 (12): 4702–4707. doi:10.1073/pnas.1200790109. PMID 22392990. Bibcode: 2012PNAS..109.4702K.

- Zadran, Sohila; Arumugam, Rameshkumar; Herschman, Harvey; Phelps, Michael; Levine, R. D. (August 3, 2014). "Surprisal analysis characterizes the free energy time course of cancer cells undergoing epithelial-to-mesenchymal transition". PNAS 111 (36): 13235–13240. doi:10.1073/pnas.1414714111. PMID 25157127. Bibcode: 2014PNAS..11113235Z.
