Truncated distribution

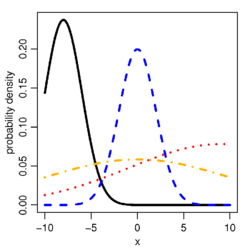

Probability density function for the truncated normal distribution for different sets of parameters. In all cases, a = −10 and b = 10. Black: μ = −8, σ = 2; blue: μ = 0, σ = 2; red: μ = 9, σ = 10; orange: μ = 0, σ = 10.

| Property | Expression |
|---|---|
| Support | [math]\displaystyle{ x \in (a,b] }[/math] |
| PDF | [math]\displaystyle{ \frac{g(x)}{F(b)-F(a)} }[/math] |
| CDF | [math]\displaystyle{ \frac{\int_a^xdF(t)}{F(b)-F(a)}=\frac{F(x)-F(a)}{F(b)-F(a)} }[/math] |
| Mean | [math]\displaystyle{ \frac{\int_a^b x dF(x)}{F(b)-F(a)} }[/math] |
| Median | [math]\displaystyle{ F^{-1}\left(\frac{F(a)+F(b)}{2}\right) }[/math] |

In statistics, a truncated distribution is a conditional distribution that results from restricting the domain of some other probability distribution. Truncated distributions arise in practical statistics in cases where the ability to record, or even to know about, occurrences is limited to values which lie above or below a given threshold or within a specified range. For example, if the dates of birth of children in a school are examined, these would typically be subject to truncation relative to those of all children in the area given that the school accepts only children in a given age range on a specific date. There would be no information about how many children in the locality had dates of birth before or after the school's cutoff dates if only a direct approach to the school were used to obtain information.

Where sampling is such as to retain knowledge of items that fall outside the required range, without recording the actual values, this is known as censoring, as opposed to the truncation here.[1]

Definition

The following discussion is in terms of a random variable having a continuous distribution although the same ideas apply to discrete distributions. Similarly, the discussion assumes that truncation is to a semi-open interval y ∈ (a,b] but other possibilities can be handled straightforwardly.

Suppose we have a random variable, [math]\displaystyle{ X }[/math], that is distributed according to some probability density function, [math]\displaystyle{ f(x) }[/math], with cumulative distribution function [math]\displaystyle{ F(x) }[/math], both of which have infinite support. Suppose we wish to know the probability density of the random variable after restricting the support to lie between two constants, so that the support is the interval [math]\displaystyle{ (a,b] }[/math]. That is to say, suppose we wish to know how [math]\displaystyle{ X }[/math] is distributed given [math]\displaystyle{ a \lt X \leq b }[/math].

- [math]\displaystyle{ f(x|a \lt X \leq b) = \frac{g(x)}{F(b)-F(a)} = \frac{f(x) \cdot I(\{a \lt x \leq b\})}{F(b)-F(a)} \propto_x f(x) \cdot I(\{a \lt x \leq b\}) }[/math]

where [math]\displaystyle{ g(x) = f(x) }[/math] for all [math]\displaystyle{ a \lt x \leq b }[/math] and [math]\displaystyle{ g(x) = 0 }[/math] everywhere else. That is, [math]\displaystyle{ g(x) = f(x)\cdot I(\{a \lt x \leq b\}) }[/math] where [math]\displaystyle{ I }[/math] is the indicator function. Note that the denominator in the truncated distribution is constant with respect to [math]\displaystyle{ x }[/math].

Notice that in fact [math]\displaystyle{ f(x|a \lt X \leq b) }[/math] is a density:

- [math]\displaystyle{ \int_{a}^{b} f(x|a \lt X \leq b)dx = \frac{1}{F(b)-F(a)} \int_{a}^{b} g(x) dx = 1 }[/math].
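The construction above can be checked numerically. The following is a minimal sketch, assuming a normal base distribution from Python's standard library; the helper names `truncated_pdf` and `integrate` are illustrative and do not come from any particular package. It builds the truncated density for the black curve in the figure and approximates its integral over the support.

```python
from statistics import NormalDist


def truncated_pdf(base, a, b):
    """Return the density of `base` conditioned on a < X <= b."""
    mass = base.cdf(b) - base.cdf(a)  # F(b) - F(a), constant in x
    if mass <= 0:
        raise ValueError("the interval (a, b] must carry positive probability")

    def pdf(x):
        # g(x) = f(x) * I(a < x <= b), rescaled by the retained mass
        return base.pdf(x) / mass if a < x <= b else 0.0

    return pdf


def integrate(func, lo, hi, steps=20_000):
    """Midpoint-rule approximation of the integral of func over [lo, hi]."""
    width = (hi - lo) / steps
    return width * sum(func(lo + (i + 0.5) * width) for i in range(steps))


# Black curve of the figure: mu = -8, sigma = 2, truncated to (-10, 10].
pdf = truncated_pdf(NormalDist(mu=-8.0, sigma=2.0), a=-10.0, b=10.0)
total = integrate(pdf, -10.0, 10.0)  # should be close to 1
```

Because the normalising constant is computed once, evaluating the truncated density costs no more than evaluating the original one.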

Truncated distributions need not have parts removed from the top and bottom. A truncated distribution where just the bottom of the distribution has been removed is as follows:

- [math]\displaystyle{ f(x|X\gt y) = \frac{g(x)}{1-F(y)} }[/math]

where [math]\displaystyle{ g(x) = f(x) }[/math] for all [math]\displaystyle{ y \lt x }[/math] and [math]\displaystyle{ g(x) = 0 }[/math] everywhere else, and [math]\displaystyle{ F(x) }[/math] is the cumulative distribution function.

A truncated distribution where the top of the distribution has been removed is as follows:

- [math]\displaystyle{ f(x|X \leq y) = \frac{g(x)}{F(y)} }[/math]

where [math]\displaystyle{ g(x) = f(x) }[/math] for all [math]\displaystyle{ x \leq y }[/math] and [math]\displaystyle{ g(x) = 0 }[/math] everywhere else, and [math]\displaystyle{ F(x) }[/math] is the cumulative distribution function.
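The two-sided and one-sided cases share the same cumulative distribution function, [math]\displaystyle{ (F(x)-F(a))/(F(b)-F(a)) }[/math], with [math]\displaystyle{ F(a) = 0 }[/math] when the bottom is left open and [math]\displaystyle{ F(b) = 1 }[/math] when the top is left open. Inverting it gives one possible way to draw samples and to compute the median. The sketch below assumes a normal base distribution; `sample_truncated` and `truncated_median` are hypothetical helper names.

```python
import random
from math import inf
from statistics import NormalDist


def sample_truncated(base, a, b, rng):
    """Draw one value from `base` conditioned on a < X <= b by inverting the CDF."""
    fa, fb = base.cdf(a), base.cdf(b)
    while True:
        # Map a uniform draw into the retained probability range [F(a), F(b)).
        p = fa + rng.random() * (fb - fa)
        if 0.0 < p < 1.0:  # inv_cdf is only defined strictly inside (0, 1)
            return base.inv_cdf(p)


def truncated_median(base, a, b):
    """Median of the truncated distribution: F^{-1}((F(a) + F(b)) / 2)."""
    return base.inv_cdf((base.cdf(a) + base.cdf(b)) / 2.0)


rng = random.Random(0)  # fixed seed so the draws are repeatable
# Bottom removed at y = 1: use a = 1 and b = +infinity.
draws = [sample_truncated(NormalDist(), 1.0, inf, rng) for _ in range(5)]
```

This approach needs an invertible CDF. When the retained mass [math]\displaystyle{ F(b)-F(a) }[/math] is very small, floating-point resolution limits its accuracy and other sampling methods may be preferable.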

Expectation of truncated random variable

Suppose we wish to find the expected value of a random variable [math]\displaystyle{ X }[/math] with density [math]\displaystyle{ f(x) }[/math] and cumulative distribution function [math]\displaystyle{ F(x) }[/math], given that [math]\displaystyle{ X }[/math] is greater than some known value [math]\displaystyle{ y }[/math]. The expectation of the truncated random variable is:

- [math]\displaystyle{ E(X|X\gt y) = \frac{\int_y^\infty x g(x) dx}{1 - F(y)} }[/math]

where again [math]\displaystyle{ g(x) = f(x) }[/math] for all [math]\displaystyle{ x \gt y }[/math] and [math]\displaystyle{ g(x) = 0 }[/math] everywhere else.
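As an illustration, the integral can be approximated numerically and compared with a case where a closed form is known. The sketch below assumes a standard normal [math]\displaystyle{ X }[/math], for which [math]\displaystyle{ E(X|X\gt y) }[/math] equals the density at [math]\displaystyle{ y }[/math] divided by the upper-tail probability. The function name `mean_above` and the finite cutoff `upper` are assumptions made for the example.

```python
from statistics import NormalDist


def mean_above(base, y, upper, steps=100_000):
    """Midpoint-rule approximation of E(X | X > y).

    `upper` is a finite stand-in for infinity; the tail beyond it is
    assumed to contribute a negligible amount.
    """
    width = (upper - y) / steps
    numerator = 0.0
    for i in range(steps):
        x = y + (i + 0.5) * width
        numerator += x * base.pdf(x) * width  # integral of x * g(x) over (y, upper]
    return numerator / (1.0 - base.cdf(y))


std = NormalDist()
y = 1.0
numeric = mean_above(std, y, upper=12.0)
closed_form = std.pdf(y) / (1.0 - std.cdf(y))  # valid for the standard normal only
```

For [math]\displaystyle{ y = 1 }[/math] both values are approximately 1.525, which is larger than [math]\displaystyle{ y }[/math], as it must be since all retained values exceed [math]\displaystyle{ y }[/math].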

Letting [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] be the lower and upper limits respectively of support for the original density function [math]\displaystyle{ f }[/math] (which we assume is continuous), properties of [math]\displaystyle{ E(u(X)|X\gt y) }[/math], where [math]\displaystyle{ u }[/math] is some continuous function with a continuous derivative, include:

- [math]\displaystyle{ \lim_{y \to a} E(u(X)|X\gt y) = E(u(X)) }[/math]

- [math]\displaystyle{ \lim_{y \to b} E(u(X)|X\gt y) = u(b) }[/math]

- [math]\displaystyle{ \frac{\partial}{\partial y}[E(u(X)|X\gt y)] = \frac{f(y)}{1-F(y)}[E(u(X)|X\gt y) - u(y)] }[/math]

- and [math]\displaystyle{ \frac{\partial}{\partial y}[E(u(X)|X\lt y)] = \frac{f(y)}{F(y)}[-E(u(X)|X\lt y) + u(y)] }[/math]

- [math]\displaystyle{ \lim_{y \to a}\frac{\partial}{\partial y}[E(u(X)|X\gt y)] = f(a)[E(u(X)) - u(a)] }[/math]

- [math]\displaystyle{ \lim_{y \to b}\frac{\partial}{\partial y}[E(u(X)|X\gt y)] = \frac{1}{2}u'(b) }[/math]

These properties hold provided that the limits exist, that is, [math]\displaystyle{ \lim_{y \to c} u'(y) = u'(c) }[/math], [math]\displaystyle{ \lim_{y \to c} u(y) = u(c) }[/math] and [math]\displaystyle{ \lim_{y \to c} f(y) = f(c) }[/math], where [math]\displaystyle{ c }[/math] represents either [math]\displaystyle{ a }[/math] or [math]\displaystyle{ b }[/math].
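The derivative identity for [math]\displaystyle{ E(u(X)|X\gt y) }[/math] can be checked against a finite difference. The following sketch assumes a standard normal [math]\displaystyle{ X }[/math] and [math]\displaystyle{ u(x) = x }[/math], so that the conditional mean has the closed form used below; all function names are illustrative.

```python
from statistics import NormalDist

STD = NormalDist()


def cond_mean(y):
    """E(X | X > y) for a standard normal X: density over upper-tail mass."""
    return STD.pdf(y) / (1.0 - STD.cdf(y))


def derivative_by_formula(y):
    """Right-hand side of the derivative identity with u(x) = x."""
    hazard = STD.pdf(y) / (1.0 - STD.cdf(y))  # f(y) / (1 - F(y))
    return hazard * (cond_mean(y) - y)


def derivative_by_difference(y, h=1e-5):
    """Central finite difference of y -> E(X | X > y)."""
    return (cond_mean(y + h) - cond_mean(y - h)) / (2.0 * h)


# The two derivatives should agree up to discretisation error.
gap = abs(derivative_by_formula(0.5) - derivative_by_difference(0.5))

# First limit property: as y approaches the lower end of the support
# (here minus infinity), the conditional mean approaches E(X) = 0.
far_left = cond_mean(-10.0)
```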

Examples

The truncated normal distribution is an important example.[2]

The Tobit model employs truncated distributions. Other examples include the binomial distribution truncated at x = 0 and the Poisson distribution truncated at x = 0.
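The discrete case follows the same recipe as the continuous one. The sketch below assumes that "truncated at x = 0" means the value 0 is removed from a Poisson distribution with rate `lam`, so each remaining probability is divided by [math]\displaystyle{ 1 - e^{-\lambda} }[/math]. The function name is hypothetical.

```python
from math import exp, factorial


def zero_truncated_poisson_pmf(k, lam):
    """P(X = k | X > 0) for X ~ Poisson(lam)."""
    if k < 1:
        return 0.0  # the value 0 has been removed from the support
    retained = 1.0 - exp(-lam)  # P(X > 0) under the original distribution
    return exp(-lam) * lam**k / (factorial(k) * retained)


lam = 2.0
ks = range(1, 61)  # terms beyond k = 60 are negligible for lam = 2
total = sum(zero_truncated_poisson_pmf(k, lam) for k in ks)
mean = sum(k * zero_truncated_poisson_pmf(k, lam) for k in ks)
expected_mean = lam / (1.0 - exp(-lam))  # mean of the zero-truncated Poisson
```

Removing the zero outcome raises the mean from `lam` to `lam / (1 - exp(-lam))`, since the same total expectation is spread over less probability mass.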

Random truncation

Suppose we have the following set up: a truncation value, [math]\displaystyle{ t }[/math], is selected at random from a density, [math]\displaystyle{ g(t) }[/math], but this value is not observed. Then a value, [math]\displaystyle{ x }[/math], is selected at random from the truncated distribution, [math]\displaystyle{ f(x|t)=Tr(x) }[/math]. Suppose we observe [math]\displaystyle{ x }[/math] and wish to update our belief about the density of [math]\displaystyle{ t }[/math] given the observation.

First, by definition:

- [math]\displaystyle{ f(x)=\int_{x}^{\infty} f(x|t)g(t)dt }[/math], and

- [math]\displaystyle{ F(a)=\int_{-\infty}^a \left[\int_{x}^{\infty} f(x|t)g(t)dt \right]dx . }[/math]

Notice that [math]\displaystyle{ t }[/math] must be greater than [math]\displaystyle{ x }[/math], hence when we integrate over [math]\displaystyle{ t }[/math], we set a lower bound of [math]\displaystyle{ x }[/math]. The functions [math]\displaystyle{ f(x) }[/math] and [math]\displaystyle{ F(x) }[/math] are the unconditional density and unconditional cumulative distribution function, respectively.

By Bayes' rule,

- [math]\displaystyle{ g(t|x)= \frac{f(x|t)g(t)}{f(x)} , }[/math]

which expands to

- [math]\displaystyle{ g(t|x) = \frac{f(x|t)g(t)}{\int_{x}^{\infty} f(x|t)g(t)dt} . }[/math]

Two uniform distributions (example)

Suppose we know that t is uniformly distributed on [0,T] and x|t is uniformly distributed on [0,t]. Let g(t) and f(x|t) be the densities that describe t and x respectively. Suppose we observe a value of x and wish to know the distribution of t given that value of x.

- [math]\displaystyle{ g(t|x) =\frac{f(x|t)g(t)}{f(x)} = \frac{1}{t(\ln(T) - \ln(x))} \quad \text{for all } x \lt t \leq T . }[/math]
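The result follows from [math]\displaystyle{ f(x|t) = 1/t }[/math], [math]\displaystyle{ g(t) = 1/T }[/math] and [math]\displaystyle{ f(x) = \int_x^T \frac{1}{tT}dt = \frac{\ln(T) - \ln(x)}{T} }[/math]. A short numerical check that the posterior is a proper density is sketched below; the values of `x` and `T` are arbitrary choices for illustration and the helper names are hypothetical.

```python
from math import log


def posterior_density(t, x, T):
    """g(t | x) when t ~ Uniform[0, T] and x | t ~ Uniform[0, t]."""
    if not (x < t <= T):
        return 0.0  # t must exceed the observed x and cannot exceed T
    return 1.0 / (t * (log(T) - log(x)))


def integrate(func, lo, hi, steps=20_000):
    """Midpoint-rule approximation of the integral of func over [lo, hi]."""
    width = (hi - lo) / steps
    return width * sum(func(lo + (i + 0.5) * width) for i in range(steps))


x, T = 0.5, 2.0  # arbitrary illustrative values with 0 < x < T
total = integrate(lambda t: posterior_density(t, x, T), x, T)  # should be close to 1
```

The posterior puts more weight on values of t close to x, because a small truncation value makes the observed x more likely.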

References
