Truncated normal distribution


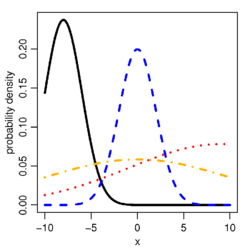

Probability density function of the truncated normal distribution for different sets of parameters. In all cases, a = −10 and b = 10. Black: μ = −8, σ = 2; blue: μ = 0, σ = 2; red: μ = 9, σ = 10; orange: μ = 0, σ = 10.


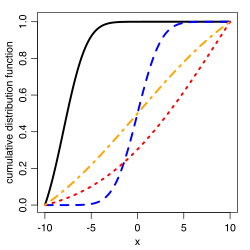

Cumulative distribution function of the truncated normal distribution for different sets of parameters. In all cases, a = −10 and b = 10. Black: μ = −8, σ = 2; blue: μ = 0, σ = 2; red: μ = 9, σ = 10; orange: μ = 0, σ = 10.

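| Quantity | Expression |
|---|---|
| Notation | $\xi = \frac{x-\mu}{\sigma},\ \alpha = \frac{a-\mu}{\sigma},\ \beta = \frac{b-\mu}{\sigma},\ Z = \Phi(\beta) - \Phi(\alpha)$ |
| Parameters | $\mu \in \mathbb{R}$; $\sigma^2 \ge 0$ (but see definition); $a \in \mathbb{R}$: minimum value of $x$; $b \in \mathbb{R}$: maximum value of $x$ ($b > a$) |
| Support | $x \in [a, b]$ |
| PDF | $f(x;\mu,\sigma,a,b) = \frac{\varphi(\xi)}{\sigma Z}$ [1] |
| CDF | $F(x;\mu,\sigma,a,b) = \frac{\Phi(\xi) - \Phi(\alpha)}{Z}$ |
| Mean | $\mu + \frac{\varphi(\alpha) - \varphi(\beta)}{Z}\,\sigma$ |
| Median | $\mu + \Phi^{-1}\left(\frac{\Phi(\alpha) + \Phi(\beta)}{2}\right)\sigma$ |
| Mode | $a$ if $\mu < a$; $\mu$ if $a \le \mu \le b$; $b$ if $\mu > b$ |
| Variance | $\sigma^2\left[1 - \frac{\beta\varphi(\beta) - \alpha\varphi(\alpha)}{Z} - \left(\frac{\varphi(\alpha) - \varphi(\beta)}{Z}\right)^2\right]$ |
| Entropy | $\ln\left(\sqrt{2\pi e}\,\sigma Z\right) + \frac{\alpha\varphi(\alpha) - \beta\varphi(\beta)}{2Z}$ |
| MGF | $e^{\mu t + \sigma^2 t^2/2}\left[\frac{\Phi(\beta - \sigma t) - \Phi(\alpha - \sigma t)}{\Phi(\beta) - \Phi(\alpha)}\right]$ |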

In probability and statistics, the truncated normal distribution is the probability distribution derived from that of a normally distributed random variable by bounding the random variable from either below or above (or both). The truncated normal distribution has wide applications in statistics and econometrics.

Definitions

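Suppose $X$ has a normal distribution with mean $\mu$ and variance $\sigma^2$ and lies within the interval $(a, b)$, with $-\infty \le a < b \le \infty$. Then $X$ conditional on $a < X < b$ has a truncated normal distribution.

Its probability density function, $f$, for $a \le x \le b$, is given by

$$f(x;\mu,\sigma,a,b) = \frac{1}{\sigma}\,\frac{\varphi\left(\frac{x-\mu}{\sigma}\right)}{\Phi\left(\frac{b-\mu}{\sigma}\right) - \Phi\left(\frac{a-\mu}{\sigma}\right)}$$

and by $f = 0$ otherwise.

Here, $\varphi(\xi) = \frac{1}{\sqrt{2\pi}}\exp\left(-\frac{1}{2}\xi^2\right)$ is the probability density function of the standard normal distribution and $\Phi(\cdot)$ is its cumulative distribution function, $\Phi(x) = \frac{1}{2}\left(1 + \operatorname{erf}(x/\sqrt{2})\right)$. By definition, if $b = \infty$, then $\Phi\left(\frac{b-\mu}{\sigma}\right) = 1$, and similarly, if $a = -\infty$, then $\Phi\left(\frac{a-\mu}{\sigma}\right) = 0$.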
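The density and the corresponding distribution function can be evaluated directly from the standard normal $\varphi$ and $\Phi$. The following is a minimal sketch using only the Python standard library; the function names `truncnorm_pdf` and `truncnorm_cdf` are illustrative and not taken from any package, and $\sigma > 0$ is assumed.

```python
import math
from statistics import NormalDist

_STD = NormalDist()  # standard normal; .pdf is phi and .cdf is Phi


def truncnorm_pdf(x, mu, sigma, a, b):
    """Density of N(mu, sigma^2) conditioned on a <= X <= b (sigma > 0)."""
    if x < a or x > b:
        return 0.0
    alpha, beta = (a - mu) / sigma, (b - mu) / sigma
    z = _STD.cdf(beta) - _STD.cdf(alpha)  # mass of the untruncated normal on [a, b]
    return _STD.pdf((x - mu) / sigma) / (sigma * z)


def truncnorm_cdf(x, mu, sigma, a, b):
    """Distribution function matching truncnorm_pdf."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    alpha, beta = (a - mu) / sigma, (b - mu) / sigma
    z = _STD.cdf(beta) - _STD.cdf(alpha)
    return (_STD.cdf((x - mu) / sigma) - _STD.cdf(alpha)) / z


# One-sided case: b = +inf makes Phi(beta) equal to 1, as noted in the text.
half_normal_at_1 = truncnorm_cdf(1.0, 0.0, 1.0, 0.0, math.inf)
```

This direct form is adequate when the interval carries appreciable probability mass; far in the tails the difference of the two $\Phi$ values loses precision.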

The above formulae show that when $-\infty < a < b < +\infty$ the scale parameter $\sigma^2$ of the truncated normal distribution is allowed to assume negative values. The parameter $\sigma$ is in this case imaginary, but the function $f$ is nevertheless real, positive, and normalizable. The scale parameter $\sigma^2$ of the untruncated normal distribution must be positive because the distribution would not be normalizable otherwise. The doubly truncated normal distribution, on the other hand, can in principle have a negative scale parameter (which is different from the variance, see summary formulae), because no such integrability problems arise on a bounded domain. In this case the distribution cannot be interpreted as an untruncated normal conditional on $a < X < b$, but it can still be interpreted as a maximum-entropy distribution with first and second moments as constraints. It also has a peculiar feature: it presents two local maxima instead of one, located at $x = a$ and $x = b$.
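A short sketch of why a negative scale parameter still gives a valid density on a bounded interval: write $\sigma^2 = -s^2$ with $s > 0$ (the symbol $s$ is introduced here only for illustration). The exponent of the Gaussian kernel then changes sign, and the normalizing constant reduces to a finite real integral.

```latex
f(x) \;=\; \frac{\exp\!\left(\dfrac{(x-\mu)^2}{2s^2}\right)}
               {\displaystyle\int_a^b \exp\!\left(\dfrac{(t-\mu)^2}{2s^2}\right)dt},
\qquad a \le x \le b .
```

The numerator is convex in $x$ with its minimum at $x = \mu$, so for $a < \mu < b$ the density rises toward both endpoints, which is where the two local maxima come from.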

Properties

The truncated normal is one of two possible maximum entropy probability distributions for a fixed mean and variance constrained to the interval [a,b], the other being the truncated U.[2] Truncated normals with fixed support form an exponential family. Nielsen[3] reported closed-form formulas for the Kullback–Leibler divergence and the Bhattacharyya distance between two truncated normal distributions when the support of the first distribution is nested in the support of the second.

Moments

If the random variable has been truncated only from below, some probability mass has been shifted to higher values, giving a first-order stochastically dominating distribution and hence increasing the mean to a value higher than the mean $\mu$ of the original normal distribution. Likewise, if the random variable has been truncated only from above, the truncated distribution has a mean less than $\mu$.

Regardless of whether the random variable is bounded above, below, or both, the truncation is a mean-preserving contraction combined with a mean-changing rigid shift, and hence the variance of the truncated distribution is less than the variance of the original normal distribution.

Two sided truncation[4]

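Let $\alpha = (a-\mu)/\sigma$ and $\beta = (b-\mu)/\sigma$. Then:

$$\operatorname{E}(X \mid a < X < b) = \mu - \sigma\,\frac{\varphi(\beta) - \varphi(\alpha)}{\Phi(\beta) - \Phi(\alpha)}$$

and

$$\operatorname{Var}(X \mid a < X < b) = \sigma^2\left[1 - \frac{\beta\varphi(\beta) - \alpha\varphi(\alpha)}{\Phi(\beta) - \Phi(\alpha)} - \left(\frac{\varphi(\beta) - \varphi(\alpha)}{\Phi(\beta) - \Phi(\alpha)}\right)^2\right]$$

Care must be taken in the numerical evaluation of these formulas, which can result in catastrophic cancellation when the interval $[a, b]$ does not include $\mu$. There are better ways to rewrite them that avoid this issue.[5]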

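One sided truncation (of lower tail)

In this case $b = \infty$, so $\varphi(\beta) = 0$ and $\Phi(\beta) = 1$; then

$$\operatorname{E}(X \mid X > a) = \mu + \sigma\,\frac{\varphi(\alpha)}{Z},$$

and

$$\operatorname{Var}(X \mid X > a) = \sigma^2\left[1 + \alpha\,\frac{\varphi(\alpha)}{Z} - \left(\frac{\varphi(\alpha)}{Z}\right)^2\right],$$

where $Z = 1 - \Phi(\alpha)$.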
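A quick worked check of the lower-tail case, taking a standard normal ($\mu = 0$, $\sigma = 1$) truncated below at $a = 0$, so that $\alpha = (a-\mu)/\sigma = 0$. The result is the half-normal distribution, whose mean and variance are known in closed form.

```latex
Z = 1 - \Phi(0) = \tfrac{1}{2}, \qquad
\varphi(0) = \frac{1}{\sqrt{2\pi}}, \\[4pt]
\operatorname{E}(X \mid X > 0) = \frac{\varphi(0)}{Z} = \sqrt{\frac{2}{\pi}} \approx 0.798, \\[4pt]
\operatorname{Var}(X \mid X > 0) = 1 + 0 - \left(\frac{\varphi(0)}{Z}\right)^{2} = 1 - \frac{2}{\pi} \approx 0.363 .
```

The mean exceeds $\mu = 0$ and the variance is below $\sigma^2 = 1$, as expected for truncation from below.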

One sided truncation (of upper tail)

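In this case $a = -\infty$, so $\varphi(\alpha) = 0$ and $\Phi(\alpha) = 0$; then

$$\operatorname{E}(X \mid X < b) = \mu - \sigma\,\frac{\varphi(\beta)}{\Phi(\beta)}$$

and

$$\operatorname{Var}(X \mid X < b) = \sigma^2\left[1 - \beta\,\frac{\varphi(\beta)}{\Phi(\beta)} - \left(\frac{\varphi(\beta)}{\Phi(\beta)}\right)^2\right].$$

Barr & Sherrill (1999) give a simpler expression for the variance of one-sided truncations. Their formula is in terms of the chi-square CDF, which is implemented in standard software libraries. Bebu & Mathew (2009) provide formulas for (generalized) confidence intervals around the truncated moments.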

A recursive formula

As for the non-truncated case, there is a recursive formula for the truncated moments.[8]

In particular, for , we have

Proof

By the change of variables , one obtains Using integration by parts yields which gives the equation to be proven.

Multivariate

Computing the moments of a multivariate truncated normal is harder.

Generating values from the truncated normal distribution


A random variate $x$ defined as $x = F^{-1}\bigl(F(a) + U\cdot(F(b) - F(a))\bigr)$, with $F$ the cumulative distribution function of the normal distribution to be sampled from (i.e. with the correct mean and variance), $F^{-1}$ its inverse, and $U$ a uniform random number on $(0, 1)$, follows the distribution truncated to the range $(a, b)$. This is simply the inverse transform method for simulating random variables. Although it is one of the simplest methods, it can either fail when sampling in the tail of the normal distribution,[9] or be much too slow.[10] Thus, in practice, one has to find alternative methods of simulation.
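The inverse transform method can be sketched with the Python standard library, where `statistics.NormalDist` provides both the CDF and its inverse. The function name `sample_truncnorm_inverse` and the `rng` parameter are illustrative choices. The explicit error branch shows the tail failure mentioned above: when both CDF values round to the same floating-point number, no usable probability mass is left.

```python
import random
from statistics import NormalDist


def sample_truncnorm_inverse(mu, sigma, a, b, rng=random):
    """One draw from N(mu, sigma^2) truncated to (a, b) by inverse transform."""
    dist = NormalDist(mu, sigma)
    fa, fb = dist.cdf(a), dist.cdf(b)
    mass = fb - fa
    if mass <= 0.0:
        # Typical far in the tail: F(a) and F(b) are indistinguishable in floats.
        raise ValueError("no representable probability mass on (a, b)")
    while True:
        p = fa + rng.random() * mass
        if 0.0 < p < 1.0:  # inv_cdf is only defined on the open interval
            # Clamp to guard against rounding just outside [a, b].
            return min(max(dist.inv_cdf(p), a), b)


rng = random.Random(0)  # seeded only to make the example reproducible
draws = [sample_truncnorm_inverse(0.0, 1.0, -1.0, 2.0, rng) for _ in range(20000)]
```

Calling the function with, for example, `a = 40` and `b = 41` for a standard normal raises the error, which is the situation where tail-specific samplers are needed.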

One such truncated normal generator (implemented in Matlab and in R as trandn.R) is based on an acceptance-rejection idea due to Marsaglia.[11] Despite the slightly suboptimal acceptance rate of (Marsaglia 1964) in comparison with (Robert 1995), Marsaglia's method is typically faster,[10] because it does not require the costly numerical evaluation of the exponential function.
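A minimal sketch of a Marsaglia-style tail sampler for the standard normal conditioned on $X > a$ with $a > 0$: propose $x = \sqrt{a^2 - 2\ln U_1}$ (a shifted Rayleigh draw) and accept with probability $a/x$. This illustrates the acceptance-rejection idea only and is not the trandn implementation; the function name is hypothetical. It uses a logarithm and a square root but no call to the exponential function.

```python
import math
import random


def sample_normal_tail(a, rng=random):
    """One draw from N(0, 1) conditioned on X > a, for a > 0."""
    if a <= 0.0:
        raise ValueError("this sketch only covers the upper tail, a > 0")
    while True:
        u1 = 1.0 - rng.random()  # in (0, 1], so the logarithm stays finite
        u2 = rng.random()
        x = math.sqrt(a * a - 2.0 * math.log(u1))  # proposal, always >= a
        if u2 * x <= a:  # accept with probability a / x
            return x


rng = random.Random(0)  # seeded only to make the example reproducible
tail_draws = [sample_normal_tail(3.0, rng) for _ in range(20000)]
```

A draw from $N(\mu, \sigma^2)$ conditioned on $X > a$ follows by sampling with threshold $(a-\mu)/\sigma$ and mapping the result through $\mu + \sigma x$.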

For more on simulating a draw from the truncated normal distribution, see (Robert 1995), (Lynch 2007), (Devroye 1986). The msm package in R has a function, rtnorm, that generates draws from a truncated normal. The truncnorm package in R also has functions to draw from a truncated normal.

Chopin (2011) proposed an algorithm inspired by the Ziggurat algorithm of Marsaglia and Tsang (1984, 2000), which is usually considered the fastest Gaussian sampler; it is also very close to Ahrens's algorithm (1995). Implementations can be found in C, C++, Matlab and Python.

Sampling from the multivariate truncated normal distribution is considerably more difficult.[12] Exact or perfect simulation is only feasible in the case of truncation of the normal distribution to a polytope region.[12][13] In more general cases, Damien & Walker (2001) introduce a general methodology for sampling truncated densities within a Gibbs sampling framework. Their algorithm introduces one latent variable and, within a Gibbs sampling framework, it is more computationally efficient than the algorithm of (Robert 1995).
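The latent-variable idea can be illustrated in the univariate case. The sketch below is written in the spirit of that approach and is not a transcription of the published algorithm: it augments the standardized variable $z$ with one auxiliary variable $y$, uniform on $(0, e^{-z^2/2})$, and alternates the two conditional draws. The function name and the `burn_in` default are assumptions made for illustration.

```python
import math
import random


def gibbs_truncnorm(mu, sigma, a, b, n, rng=random, burn_in=100):
    """Return n correlated draws from N(mu, sigma^2) truncated to [a, b]."""
    alpha, beta = (a - mu) / sigma, (b - mu) / sigma
    z = min(max(0.0, alpha), beta)  # start at the point of [alpha, beta] nearest 0
    out = []
    for i in range(burn_in + n):
        # Latent step: y = u * exp(-z^2 / 2) with u uniform on (0, 1].
        u = 1.0 - rng.random()
        # Given y, z is uniform on {|z| <= r} intersected with [alpha, beta],
        # where r^2 = -2 ln y = z^2 - 2 ln u.
        r = math.sqrt(z * z - 2.0 * math.log(u))
        lo, hi = max(alpha, -r), min(beta, r)
        z = lo + (hi - lo) * rng.random()
        if i >= burn_in:
            out.append(mu + sigma * z)
    return out


rng = random.Random(0)  # seeded only to make the example reproducible
chain = gibbs_truncnorm(0.0, 1.0, -1.0, 2.0, 50000, rng)
```

Successive draws are correlated, so summaries computed from the chain converge more slowly than with independent samples of the same size.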

See also

- Folded normal distribution

- Half-normal distribution

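- Modified half-normal distribution,[14] whose pdf on $(0, \infty)$ is given as $f(x) = \frac{2\beta^{\alpha/2} x^{\alpha-1} \exp(-\beta x^2 + \gamma x)}{\Psi\left(\frac{\alpha}{2}, \frac{\gamma}{\sqrt{\beta}}\right)}$, where $\Psi(\alpha, z) = {}_1\Psi_1\left(\begin{matrix}\left(\alpha, \frac{1}{2}\right)\\(1, 0)\end{matrix}; z\right)$ denotes the Fox–Wright Psi function.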

- Normal distribution

- Rectified Gaussian distribution

- Truncated distribution

- PERT distribution

Notes

1. "Lecture 4: Selection". Instituto Superior Técnico. November 11, 2002. p. 1. http://web.ist.utl.pt/~ist11038/compute/qc/,truncG/lecture4k.pdf.

2. Dowson, D.; Wragg, A. (September 1973). "Maximum-entropy distributions having prescribed first and second moments (Corresp.)". IEEE Transactions on Information Theory 19 (5): 689–693. doi:10.1109/TIT.1973.1055060. ISSN 1557-9654.

3. Nielsen, Frank (2022). "Statistical Divergences between Densities of Truncated Exponential Families with Nested Supports: Duo Bregman and Duo Jensen Divergences". Entropy (MDPI) 24 (3): 421. doi:10.3390/e24030421. PMID 35327931. Bibcode: 2022Entrp..24..421N.

4. Johnson, Norman Lloyd; Kotz, Samuel; Balakrishnan, N. (1994). Continuous Univariate Distributions. 1 (2nd ed.). New York: Wiley. Section 10.1. ISBN 0-471-58495-9. OCLC 29428092.

5. Fernandez-de-Cossio-Diaz, Jorge (2017-12-06), TruncatedNormal.jl: Compute mean and variance of the univariate truncated normal distribution (works far from the peak), https://github.com/cossio/TruncatedNormal.jl, retrieved 2017-12-06

6. Greene, William H. (2003). Econometric Analysis (5th ed.). Prentice Hall. ISBN 978-0-13-066189-0.

7. del Castillo, Joan (March 1994). "The singly truncated normal distribution: A non-steep exponential family". Annals of the Institute of Statistical Mathematics 46 (1): 57–66. doi:10.1007/BF00773592. https://www.ism.ac.jp/editsec/aism/pdf/046_1_0057.pdf.

8. Document by Eric Orjebin, "https://people.smp.uq.edu.au/YoniNazarathy/teaching_projects/studentWork/EricOrjebin_TruncatedNormalMoments.pdf"

9. Kroese, D. P.; Taimre, T.; Botev, Z. I. (2011). Handbook of Monte Carlo methods. John Wiley & Sons.

10. Botev, Z. I.; L'Ecuyer, P. (2017). "Simulation from the Normal Distribution Truncated to an Interval in the Tail". 25th–28th Oct 2016 Taormina, Italy: ACM. pp. 23–29. doi:10.4108/eai.25-10-2016.2266879. ISBN 978-1-63190-141-6.

11. Marsaglia, George (1964). "Generating a variable from the tail of the normal distribution". Technometrics 6 (1): 101–102. doi:10.2307/1266749.

12. Botev, Z. I. (2016). "The normal law under linear restrictions: simulation and estimation via minimax tilting". Journal of the Royal Statistical Society, Series B 79: 125–148. doi:10.1111/rssb.12162.

13. Botev, Zdravko; L'Ecuyer, Pierre (2018). "Chapter 8: Simulation from the Tail of the Univariate and Multivariate Normal Distribution". in Puliafito, Antonio. Systems Modeling: Methodologies and Tools. EAI/Springer Innovations in Communication and Computing. Springer, Cham. pp. 115–132. doi:10.1007/978-3-319-92378-9_8. ISBN 978-3-319-92377-2.

14. Sun, Jingchao; Kong, Maiying; Pal, Subhadip (22 June 2021). "The Modified-Half-Normal distribution: Properties and an efficient sampling scheme". Communications in Statistics - Theory and Methods 52 (5): 1591–1613. doi:10.1080/03610926.2021.1934700. ISSN 0361-0926. https://www.tandfonline.com/doi/abs/10.1080/03610926.2021.1934700?journalCode=lsta20.

References

- Botev, Zdravko; L'Ecuyer, Pierre (2018). "Chapter 8: Simulation from the Tail of the Univariate and Multivariate Normal Distribution". in Puliafito, Antonio. Systems Modeling: Methodologies and Tools. EAI/Springer Innovations in Communication and Computing. Springer, Cham. pp. 115–132. doi:10.1007/978-3-319-92378-9_8. ISBN 978-3-319-92377-2.

- Devroye, Luc (1986). Non-Uniform Random Variate Generation. New York: Springer-Verlag. http://www.eirene.de/Devroye.pdf. Retrieved 2012-04-12.

- Greene, William H. (2003). Econometric Analysis (5th ed.). Prentice Hall. ISBN 978-0-13-066189-0.

- Johnson, Norman L.; Kotz, Samuel (1970). Continuous Univariate Distributions. 1, chapter 13. John Wiley & Sons.

- Lynch, Scott (2007). Introduction to Applied Bayesian Statistics and Estimation for Social Scientists. New York: Springer. ISBN 978-1-4419-2434-6. https://www.springer.com/social+sciences/book/978-0-387-71264-2.

- Robert, Christian P. (1995). "Simulation of truncated normal variables". Statistics and Computing 5 (2): 121–125. doi:10.1007/BF00143942.

- Barr, Donald R.; Sherrill, E. Todd (1999). "Mean and variance of truncated normal distributions". The American Statistician 53 (4): 357–361. doi:10.1080/00031305.1999.10474490.

- Bebu, Ionut; Mathew, Thomas (2009). "Confidence intervals for limited moments and truncated moments in normal and lognormal models". Statistics and Probability Letters 79 (3): 375–380. doi:10.1016/j.spl.2008.09.006.

- Damien, Paul; Walker, Stephen G. (2001). "Sampling truncated normal, beta, and gamma densities". Journal of Computational and Graphical Statistics 10 (2): 206–215. doi:10.1198/10618600152627906.

- Chopin, Nicolas (2011-04-01). "Fast simulation of truncated Gaussian distributions". Statistics and Computing 21 (2): 275–288. doi:10.1007/s11222-009-9168-1. ISSN 1573-1375. https://link.springer.com/article/10.1007/s11222-009-9168-1.

- Burkardt, John. "The Truncated Normal Distribution". Florida State University. https://people.sc.fsu.edu/~jburkardt/presentations/truncated_normal.pdf.
