Gradient-enhanced kriging

Gradient-enhanced kriging (GEK) is a surrogate modeling technique used in engineering. A surrogate model (alternatively known as a metamodel, response surface or emulator) is a prediction of the output of an expensive computer code.[1] This prediction is based on a small number of evaluations of the expensive computer code.

Introduction

Adjoint solvers are now available in a range of computational fluid dynamics (CFD) codes, such as Fluent, OpenFOAM, SU2 and US3D. Originally developed for optimization, adjoint solvers are increasingly used in uncertainty quantification.

Linear speedup

An adjoint solver allows one to compute the gradient of the quantity of interest with respect to all design parameters at the cost of one additional solve. This can lead to a linear speedup: the computational cost of constructing an accurate surrogate decreases, and the resulting computational speedup [math]\displaystyle{ s }[/math] scales linearly with the number [math]\displaystyle{ d }[/math] of design parameters.

The reasoning behind this linear speedup is straightforward. Assume we run [math]\displaystyle{ N }[/math] primal solves and [math]\displaystyle{ N }[/math] adjoint solves, at a total cost of [math]\displaystyle{ 2N }[/math] (taking one adjoint solve to cost about as much as one primal solve). This results in [math]\displaystyle{ N+dN }[/math] data: [math]\displaystyle{ N }[/math] values of the quantity of interest and [math]\displaystyle{ d }[/math] partial derivatives in each of the [math]\displaystyle{ N }[/math] gradients. Now assume that each partial derivative provides as much information for our surrogate as a single primal solve. Then the total cost of obtaining the same amount of information from primal solves alone is [math]\displaystyle{ N+dN }[/math]. The speedup is the ratio of these costs: [2] [3]

- [math]\displaystyle{ s = \frac{N+dN}{2N} = \frac{1}{2} + \frac{1}{2}d. }[/math]
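As a quick check of the arithmetic, the speedup formula can be evaluated directly. The function name is hypothetical, and the cost model is exactly the idealized one described above (one adjoint solve costs as much as one primal solve, and each partial derivative is worth one primal solve).

```python
def adjoint_speedup(d, N=1):
    """Idealized speedup s = (N + d*N) / (2*N) of N primal + N adjoint solves.

    Assumes an adjoint solve costs as much as a primal solve and that each
    partial derivative is worth one primal solve. N cancels, so s = 1/2 + d/2.
    """
    cost_with_adjoint = 2 * N        # N primal solves + N adjoint solves
    cost_primal_only = N + d * N     # primal solves needed for the same N + dN data
    return cost_primal_only / cost_with_adjoint


for d in (1, 10, 100):
    print(d, adjoint_speedup(d))     # prints "1 1.0", then "10 5.5", then "100 50.5"
```

For [math]\displaystyle{ d = 10 }[/math] design parameters, for instance, the idealized speedup is 5.5, whatever the value of [math]\displaystyle{ N }[/math].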

A linear speedup has been demonstrated for a fluid-structure interaction problem [2] and for a transonic airfoil.[3]

Noise

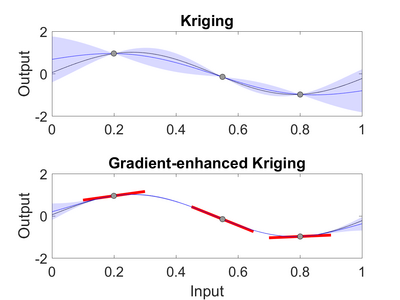

One issue with adjoint-based gradients in CFD is that they can be particularly noisy. [4] [5] When derived in a Bayesian framework, GEK allows one to incorporate not only the gradient information, but also the uncertainty in that gradient information.[6]

Approach

When using GEK one takes the following steps:

- Create a design of experiments (DoE): The DoE or 'sampling plan' is a list of different locations in the design space. The DoE indicates which combinations of parameters one will use to sample the computer simulation. With Kriging and GEK, a common choice is a Latin hypercube sampling (LHS) design with a 'maximin' criterion. LHS designs are available in scripting environments such as MATLAB and Python.

- Make observations: For each sample in the DoE, one runs the computer simulation to obtain the quantity of interest (QoI) and, for GEK, its gradient (for example from an adjoint solve).

- Construct the surrogate: One uses the GEK predictor equations to construct the surrogate conditional on the obtained observations.
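The first step, a maximin Latin hypercube design, can be sketched with NumPy as follows. This is a minimal sketch under assumptions: the maximin criterion is approximated by a simple random search that keeps, among many random LHS designs, the one whose closest pair of points is farthest apart. The function names and the design bounds in the usage lines are hypothetical. SciPy users could instead start from `scipy.stats.qmc.LatinHypercube`.

```python
import numpy as np


def latin_hypercube(n, d, rng):
    """One random Latin hypercube design: n points in [0, 1)^d.

    Each dimension is split into n equal strata, and each stratum is
    sampled exactly once, at a random position inside it.
    """
    strata = np.argsort(rng.random((n, d)), axis=0)  # random permutation of 0..n-1 per column
    return (strata + rng.random((n, d))) / n


def min_distance(points):
    """Smallest Euclidean distance between any two distinct points."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)                   # ignore each point's distance to itself
    return dist.min()


def maximin_lhs(n, d, n_candidates=200, seed=0):
    """Among n_candidates random LHS designs, keep the one whose closest pair is farthest apart."""
    rng = np.random.default_rng(seed)
    best, best_score = None, -np.inf
    for _ in range(n_candidates):
        candidate = latin_hypercube(n, d, rng)
        score = min_distance(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best


# Usage: 10 samples for 2 design parameters, scaled from [0, 1)^2 to hypothetical bounds.
doe = maximin_lhs(10, 2)
lower, upper = np.array([-1.0, -1.0]), np.array([1.0, 1.0])
samples = lower + doe * (upper - lower)
```

Random search is the simplest way to apply the maximin criterion. Dedicated optimizers (for example, swapping coordinates between points) usually find better designs for the same effort.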

Once the surrogate has been constructed it can be used in different ways, for example for surrogate-based uncertainty quantification (UQ) or optimization.

Predictor equations

In a Bayesian framework, we use Bayes' Theorem to predict the Kriging mean and covariance conditional on the observations. When using GEK, the observations are usually the results of a number of computer simulations. GEK can be interpreted as a form of Gaussian process regression.

Kriging

Following [7], we are interested in the output [math]\displaystyle{ X }[/math] of our computer simulation, for which we assume the normal prior probability distribution:

- [math]\displaystyle{ X\sim\mathcal{N}(\mu,P), }[/math]

with prior mean [math]\displaystyle{ \mu }[/math] and prior covariance matrix [math]\displaystyle{ P }[/math]. The observations [math]\displaystyle{ y }[/math] have the normal likelihood:

- [math]\displaystyle{ Y\mid x\sim\mathcal{N}(Hx,R), }[/math]

with [math]\displaystyle{ H }[/math] the observation matrix and [math]\displaystyle{ R }[/math] the observation error covariance matrix, which contains the observation uncertainties. After applying Bayes' Theorem we obtain a normally distributed posterior probability distribution, with Kriging mean:

- [math]\displaystyle{ \operatorname E(X\mid y) = \mu + K(y-H\mu), }[/math]

and Kriging covariance:

- [math]\displaystyle{ \operatorname{cov}(X\mid y) = (I-KH)P, }[/math]

where we have the gain matrix:

- [math]\displaystyle{ K = PH^T(R+HPH^T)^{-1}. }[/math]
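The predictor equations translate almost line by line into NumPy. The sketch below is a minimal illustration, not a reference implementation. The function name is hypothetical, and the squared-exponential prior covariance in the usage lines is an arbitrary choice made only to have a concrete [math]\displaystyle{ P }[/math].

```python
import numpy as np


def kriging_posterior(mu, P, H, R, y):
    """Posterior mean and covariance of X ~ N(mu, P) given y, with Y | x ~ N(Hx, R).

    Implements K = P H^T (R + H P H^T)^{-1}, E(X|y) = mu + K (y - H mu),
    cov(X|y) = (I - K H) P.
    """
    S = R + H @ P @ H.T                       # covariance of the observations
    K = np.linalg.solve(S, H @ P).T           # equals P H^T S^{-1} because P and S are symmetric
    mean = mu + K @ (y - H @ mu)
    cov = (np.eye(len(mu)) - K @ H) @ P
    return mean, cov


# Usage: X holds the simulation output at 5 locations; we observe it at the first and last.
xi = np.linspace(0.0, 1.0, 5)
P = np.exp(-(xi[:, None] - xi[None, :]) ** 2 / (2 * 0.3 ** 2))   # prior covariance
mu = np.zeros(5)                                                 # prior mean
H = np.zeros((2, 5))
H[0, 0] = 1.0                                                    # observe X_0
H[1, 4] = 1.0                                                    # observe X_4
R = 1e-6 * np.eye(2)                                             # observation error covariance
y = np.array([1.0, -1.0])
mean, cov = kriging_posterior(mu, P, H, R, y)
```

Solving with [math]\displaystyle{ R+HPH^T }[/math] instead of forming its inverse is the usual choice for numerical stability. Because the observation error is small, the posterior mean nearly reproduces the observations at the observed locations, and the posterior variance there is close to zero.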

In Kriging, the prior covariance matrix [math]\displaystyle{ P }[/math] is generated from a covariance function. One example of a covariance function is the Gaussian covariance:

- [math]\displaystyle{ P_{ij} = \sigma^2 \exp\left(-\sum_k\frac{|\xi_{jk}-\xi_{ik}|^2}{2 \theta_k^2}\right), }[/math]

where we sum over the dimensions [math]\displaystyle{ k }[/math] of the input space, and [math]\displaystyle{ \xi_{ik} }[/math] denotes the [math]\displaystyle{ k }[/math]-th input parameter of the [math]\displaystyle{ i }[/math]-th sample point. The hyperparameters [math]\displaystyle{ \mu }[/math], [math]\displaystyle{ \sigma }[/math] and [math]\displaystyle{ \theta }[/math] can be estimated by maximum likelihood estimation (MLE).[6] [8] [9]
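The following sketch shows the Gaussian covariance function and a simple maximum likelihood estimate. It rests on several assumptions:

- the prior mean is a constant;
- a small 'nugget' is added to the diagonal for numerical stability, which is not part of the text above;
- the function names are hypothetical.

For fixed [math]\displaystyle{ \theta }[/math], the maximum-likelihood estimates of [math]\displaystyle{ \mu }[/math] and [math]\displaystyle{ \sigma^2 }[/math] have closed forms, so they are profiled out. Only [math]\displaystyle{ \theta }[/math] is then searched, here on a coarse grid. A practical implementation would use a numerical optimizer instead.

```python
import numpy as np


def gaussian_covariance(xa, xb, sigma, theta):
    """Gaussian covariance sigma^2 exp(-sum_k (xa_ik - xb_jk)^2 / (2 theta_k^2)).

    xa: (n, d) and xb: (m, d) arrays of input points; theta: (d,) length scales.
    Returns the (n, m) covariance matrix.
    """
    diff = (xa[:, None, :] - xb[None, :, :]) / theta
    return sigma ** 2 * np.exp(-0.5 * (diff ** 2).sum(axis=-1))


def concentrated_nll(theta, x, y, nugget=1e-8):
    """Negative log-likelihood of theta (up to a constant) with mu and sigma^2 profiled out.

    For fixed theta the maximum-likelihood constant mean mu and variance sigma^2
    have closed forms, so only theta needs a numerical search.
    """
    n = len(y)
    C = gaussian_covariance(x, x, 1.0, theta) + nugget * np.eye(n)  # correlation matrix
    ones = np.ones(n)
    mu_hat = ones @ np.linalg.solve(C, y) / (ones @ np.linalg.solve(C, ones))
    resid = y - mu_hat
    sigma2_hat = resid @ np.linalg.solve(C, resid) / n
    _, logdet = np.linalg.slogdet(C)
    return 0.5 * (n * np.log(sigma2_hat) + logdet), mu_hat, sigma2_hat


# Example: estimate a single length scale by a coarse grid search.
x = np.linspace(0.0, 1.0, 8)[:, None]
y = np.sin(2 * np.pi * x[:, 0])
thetas = np.linspace(0.05, 0.5, 46)
nll = [concentrated_nll(np.array([t]), x, y)[0] for t in thetas]
theta_mle = thetas[int(np.argmin(nll))]
_, mu_mle, sigma2_mle = concentrated_nll(np.array([theta_mle]), x, y)
```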

Indirect GEK

There are several ways of implementing GEK. The first method, indirect GEK, defines a small but finite step size [math]\displaystyle{ h }[/math] and uses the gradient information to append synthetic data to the observations [math]\displaystyle{ y }[/math]; see for example [8]. Indirect GEK is sensitive to the choice of the step size [math]\displaystyle{ h }[/math] and cannot include observation uncertainties.
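One way to generate the synthetic data is a first-order Taylor step along each coordinate direction. The sketch below makes that assumption; the cited work may construct the synthetic points differently, and the function name is hypothetical. The augmented data set would then be passed to an ordinary Kriging predictor.

```python
import numpy as np


def indirect_gek_data(x, y, grad, h):
    """Append synthetic first-order Taylor data to the observations.

    For every sample x_i (value y_i, gradient g_i) and every dimension k, add the
    point x_i + h e_k with value y_i + h g_ik. x: (n, d), y: (n,), grad: (n, d).
    """
    n, d = x.shape
    steps = h * np.eye(d)                                        # rows are h e_k
    x_syn = (x[:, None, :] + steps[None, :, :]).reshape(n * d, d)
    y_syn = (y[:, None] + h * grad).reshape(n * d)
    return np.vstack([x, x_syn]), np.concatenate([y, y_syn])


# Usage: two samples in 2D; the augmented set then goes into an ordinary Kriging predictor.
x = np.array([[0.0, 0.0], [1.0, 2.0]])
y = np.array([1.0, 3.0])
grad = np.array([[1.0, -1.0], [0.5, 2.0]])
x_aug, y_aug = indirect_gek_data(x, y, grad, h=0.1)
```

The sketch also illustrates why the method is sensitive to [math]\displaystyle{ h }[/math]. A very small step places synthetic points almost on top of the originals, which makes the Kriging correlation matrix nearly singular. A large step makes the first-order extrapolation inaccurate.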

Direct GEK (through prior covariance matrix)

Direct GEK is a form of co-Kriging in which the gradient information is added as co-variables. This can be done by modifying the prior covariance [math]\displaystyle{ P }[/math] or by modifying the observation matrix [math]\displaystyle{ H }[/math]; both approaches lead to the same GEK predictor. When we construct direct GEK through the prior covariance matrix, we append the partial derivatives to [math]\displaystyle{ y }[/math] and modify the prior covariance matrix [math]\displaystyle{ P }[/math] so that it also contains the first and second derivatives of the covariance function; see for example [10] [6]. The main advantages of direct GEK over indirect GEK are: 1) we do not have to choose a step size, 2) we can include observation uncertainties for the gradients in [math]\displaystyle{ R }[/math], and 3) it is less susceptible to poor conditioning of the gain matrix [math]\displaystyle{ K }[/math].[6] [8]
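For the Gaussian covariance function, direct GEK through the prior covariance can be sketched in Python. This is an illustration under stated assumptions, not the implementation of [10] or [6]:

- the hyperparameters are fixed rather than estimated;
- the prior mean is a constant, so the derivatives have prior mean zero;
- gradient errors are independent, with a user-chosen standard deviation `grad_noise` that fills the gradient part of [math]\displaystyle{ R }[/math];
- a tiny `nugget` is added for numerical stability;
- the function names are hypothetical.

```python
import numpy as np


def gek_covariance(xa, xb, sigma, theta):
    """Joint prior covariance of [f, df/dx_1, ..., df/dx_d] between xa (n, d) and xb (m, d).

    Gaussian covariance k(x, x') = sigma^2 exp(-sum_l r_l^2 / (2 theta_l^2)), r = x - x':
      cov(f(x), df(x')/dx'_q)       =  k r_q / theta_q^2
      cov(df(x)/dx_l, f(x'))        = -k r_l / theta_l^2
      cov(df(x)/dx_l, df(x')/dx'_q) =  k (delta_lq / theta_l^2 - r_l r_q / (theta_l^2 theta_q^2))
    Rows and columns are ordered as [values; d/dx_1 block; ...; d/dx_d block].
    """
    n, d = xa.shape
    m = xb.shape[0]
    r = xa[:, None, :] - xb[None, :, :]                       # (n, m, d)
    t2 = np.asarray(theta, dtype=float) ** 2
    k = sigma ** 2 * np.exp(-0.5 * (r ** 2 / t2).sum(axis=-1))
    C = np.zeros(((d + 1) * n, (d + 1) * m))
    C[:n, :m] = k
    for l in range(d):
        rl = slice((l + 1) * n, (l + 2) * n)                  # rows: d/dx_l at xa
        cl = slice((l + 1) * m, (l + 2) * m)                  # cols: d/dx'_l at xb
        C[:n, cl] = k * r[:, :, l] / t2[l]
        C[rl, :m] = -k * r[:, :, l] / t2[l]
        for q in range(d):
            cq = slice((q + 1) * m, (q + 2) * m)
            delta = 1.0 if l == q else 0.0
            C[rl, cq] = k * (delta / t2[l] - r[:, :, l] * r[:, :, q] / (t2[l] * t2[q]))
    return C


def gek_predict(x, y, grad, x_new, mu, sigma, theta, grad_noise=0.0, nugget=1e-10):
    """Direct GEK mean at x_new (p, d) from values y (n,) and gradients grad (n, d)."""
    n, d = x.shape
    obs = np.concatenate([y, grad.T.ravel()])                  # [values; dy/dx_1; ...; dy/dx_d]
    prior_mean = np.concatenate([np.full(n, mu), np.zeros(n * d)])
    R = np.diag(np.concatenate([np.full(n, nugget), np.full(n * d, grad_noise ** 2 + nugget)]))
    C = gek_covariance(x, x, sigma, theta) + R
    c_new = gek_covariance(x_new, x, sigma, theta)[: len(x_new)]  # cov(f(x_new), observations)
    return mu + c_new @ np.linalg.solve(C, obs - prior_mean)


# Usage: 1D test function sin(x) with exact gradients cos(x).
x_train = np.linspace(0.0, 2 * np.pi, 7)[:, None]
y_train = np.sin(x_train[:, 0])
g_train = np.cos(x_train)                                    # (n, 1) gradients
x_test = np.array([[0.5236], [3.0]])
pred = gek_predict(x_train, y_train, g_train, x_test, mu=0.0, sigma=1.0, theta=[1.0])
```

Setting `grad_noise` above zero lets noisy adjoint gradients influence the surrogate less than the function values do. This is how the gradient uncertainty enters through [math]\displaystyle{ R }[/math].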

Direct GEK (through observation matrix)

Another way of arriving at the same direct GEK predictor is to append the partial derivatives to the observations [math]\displaystyle{ y }[/math] and include partial derivative operators in the observation matrix [math]\displaystyle{ H }[/math]; see for example [11].
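The observation-matrix route can be sketched under the assumption that a 1D design space is discretized on a fine uniform grid. [math]\displaystyle{ X }[/math] is then the vector of outputs at the grid nodes. For each sample, [math]\displaystyle{ H }[/math] gets one row that picks the value at a node and one central-difference row that approximates the partial derivative there. The following choices are all illustrative assumptions: the grid, the finite-difference operator, the fixed hyperparameters, and the use of `np.sin`/`np.cos` as stand-ins for the simulation and its adjoint gradient. With a finite-difference [math]\displaystyle{ H }[/math], the result matches the prior-covariance form of direct GEK only up to the discretization error of the central differences.

```python
import numpy as np

# Hypothetical 1D setup: X is the unknown output at the nodes of a fine uniform grid.
grid = np.linspace(0.0, 2 * np.pi, 201)
dx = grid[1] - grid[0]
theta, sigma = 1.0, 1.0                                 # fixed hyperparameters (assumed)
P = sigma ** 2 * np.exp(-(grid[:, None] - grid[None, :]) ** 2 / (2 * theta ** 2))
mu = np.zeros(grid.size)                                # prior mean

sample_nodes = [20, 100, 180]                           # grid nodes where the simulation was "run"
simulate, adjoint_gradient = np.sin, np.cos             # stand-ins for the solver and its adjoint

rows, y = [], []
for i in sample_nodes:
    value_row = np.zeros(grid.size)
    value_row[i] = 1.0                                  # picks X at node i
    deriv_row = np.zeros(grid.size)
    deriv_row[i + 1], deriv_row[i - 1] = 1 / (2 * dx), -1 / (2 * dx)  # central-difference dX/dx at node i
    rows += [value_row, deriv_row]
    y += [simulate(grid[i]), adjoint_gradient(grid[i])]
H = np.array(rows)
y = np.array(y)
R = np.diag(np.tile([1e-8, 1e-6], len(sample_nodes)))  # error variances: value, then gradient

# Same Bayesian update as for ordinary Kriging; only H has changed.
S = R + H @ P @ H.T
K = np.linalg.solve(S, H @ P).T                         # K = P H^T S^{-1}
mean = mu + K @ (y - H @ mu)
```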

Gradient-enhanced kriging for high-dimensional problems (Indirect method)

Standard gradient-enhanced kriging methods do not scale well with the number of sampling points, because the correlation matrix grows rapidly: new information is added for each sampling point in each direction of the design space. Nor do they scale well with the number of independent variables, because the number of hyperparameters that must be estimated grows with it. To address these issues, Bouhlel and Martins developed a gradient-enhanced surrogate model that uses the partial least squares (PLS) method to drastically reduce the number of hyperparameters while maintaining accuracy. In addition, the method controls the size of the correlation matrix by adding only relevant points, selected using the information provided by the PLS method. For more details, see [12]. This approach is implemented in the Surrogate Modeling Toolbox (SMT) in Python (https://github.com/SMTorg/SMT), which runs on Linux, macOS and Windows and is distributed under the New BSD license.

Augmented gradient-enhanced kriging (direct method)

A universal augmented framework is proposed in [9] to append derivatives of any order to the observations. This method can be viewed as a generalization of direct GEK that takes higher-order derivatives into account. Moreover, under this framework the observations and derivatives do not need to be measured at the same locations.
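The idea can be illustrated in one dimension. The sketch is not the construction of [9], which treats Gaussian random fields in general. It assumes a Gaussian covariance with fixed hyperparameters and a constant prior mean, and its function names are hypothetical. For this covariance, the covariance between an [math]\displaystyle{ a }[/math]-th derivative at one point and a [math]\displaystyle{ b }[/math]-th derivative at another has a closed form in terms of Hermite polynomials. In the usage lines, values, first derivatives and a second derivative are observed at different locations.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermeval


def deriv_cov(xa, a, xb, b, sigma, theta):
    """cov(f^(a)(xa), f^(b)(xb)) for the 1D Gaussian covariance sigma^2 exp(-(x - x')^2 / (2 theta^2)).

    With r = x - x' we have d/dx = d/dr and d/dx' = -d/dr, and
    d^n/dr^n exp(-r^2 / (2 theta^2)) = (-1/theta)^n He_n(r/theta) exp(-r^2 / (2 theta^2)),
    where He_n is the probabilists' Hermite polynomial.
    """
    n = a + b
    u = (xa - xb) / theta
    he_n = hermeval(u, [0.0] * n + [1.0])               # He_n(u)
    return sigma ** 2 * (-1.0) ** b * (-1.0 / theta) ** n * he_n * np.exp(-0.5 * u ** 2)


def augmented_predict(obs, x_new, mu, sigma, theta, nugget=1e-10):
    """Posterior mean of f at x_new from observations of derivatives of any order.

    obs: list of (location, derivative order, observed value). Different orders
    may be observed at different locations. A constant prior mean mu is assumed.
    """
    loc = [o[0] for o in obs]
    order = [o[1] for o in obs]
    values = np.array([o[2] for o in obs], dtype=float)
    m = len(obs)
    C = np.array([[deriv_cov(loc[i], order[i], loc[j], order[j], sigma, theta)
                   for j in range(m)] for i in range(m)]) + nugget * np.eye(m)
    prior = np.array([mu if k == 0 else 0.0 for k in order])   # derivatives of a constant are 0
    c_new = np.array([[deriv_cov(x, 0, loc[j], order[j], sigma, theta) for j in range(m)]
                      for x in np.atleast_1d(x_new)])
    return mu + c_new @ np.linalg.solve(C, values - prior)


# Usage with f = sin: values, first and second derivatives at different points.
obs = [(0.0, 0, np.sin(0.0)), (2.0, 0, np.sin(2.0)), (4.0, 0, np.sin(4.0)),
       (1.0, 1, np.cos(1.0)), (5.0, 1, np.cos(5.0)),
       (3.0, 2, -np.sin(3.0))]
pred = augmented_predict(obs, [0.0, 2.0, 4.0, 3.0], mu=0.0, sigma=1.0, theta=1.0)
```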

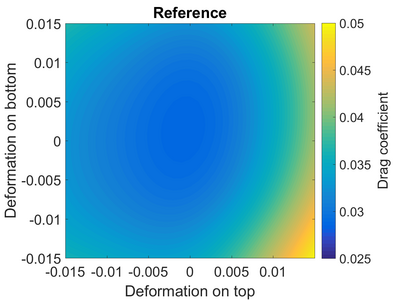

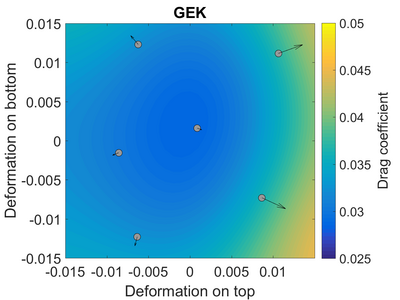

Example: Drag coefficient of a transonic airfoil

As an example, consider the flow over a transonic airfoil.[3] The airfoil is operating at a Mach number of 0.8 and an angle of attack of 1.25 degrees. We assume that the shape of the airfoil is uncertain; the top and the bottom of the airfoil might have shifted up or down due to manufacturing tolerances. In other words, the shape of the airfoil that we are using might be slightly different from the airfoil that we designed.

On the right we see the reference results for the drag coefficient of the airfoil, based on a large number of CFD simulations. Note that the lowest drag, which corresponds to 'optimal' performance, is close to the undeformed 'baseline' design of the airfoil at (0,0).

After designing a sampling plan (indicated by the gray dots) and running the CFD solver at those sample locations, we obtain the Kriging surrogate model. The Kriging surrogate is close to the reference, but perhaps not as close as we would desire.

In the last figure, we have improved the accuracy of this surrogate model by including the adjoint-based gradient information, indicated by the arrows, and applying GEK.

Applications

GEK has found the following applications:

- 1993: Design problem for a borehole model test function.[13]

- 2002: Aerodynamic design of a supersonic business jet.[14]

- 2008: Uncertainty quantification for a transonic airfoil with uncertain shape parameters.[10]

- 2009: Uncertainty quantification for a transonic airfoil with uncertain shape parameters.[8]

- 2012: Surrogate model construction for a panel divergence problem, a fluid-structure interaction problem. Demonstration of a linear speedup.[2]

- 2013: Uncertainty quantification for a transonic airfoil with uncertain angle of attack and Mach number.[15]

- 2014: Uncertainty quantification for the RANS simulation of an airfoil, with the model parameters of the k-epsilon turbulence model as uncertain inputs.[6]

- 2015: Uncertainty quantification for the Euler simulation of a transonic airfoil with uncertain shape parameters. Demonstration of a linear speedup.[3]

- 2016: Surrogate model construction for two fluid-structure interaction problems.[16]

- 2017: Extensive review of gradient-enhanced surrogate models, including many details on gradient-enhanced kriging.[17]

- 2017: Uncertainty propagation for a nuclear energy system.[18]

- 2020: Molecular geometry optimization.[19]

References

- [1] Mitchell, M.; Morris, M. (1992). "Bayesian design and analysis of computer experiments: two examples". Statistica Sinica 2 (2): 359–379. http://www3.stat.sinica.edu.tw/statistica/oldpdf/A2n23.pdf.

- [2] de Baar, J.H.S.; Scholcz, T.P.; Verhoosel, C.V.; Dwight, R.P.; van Zuijlen, A.H.; Bijl, H. (2012). "Efficient uncertainty quantification with gradient-enhanced Kriging: Applications in FSI". ECCOMAS, Vienna, Austria, September 10–14. https://aerodynamics.lr.tudelft.nl/~bayesiancomputing/files/GEK_UQ_ECCOMAS_submitted.pdf.

- [3] de Baar, J.H.S.; Scholcz, T.P.; Dwight, R.P. (2015). "Exploiting Adjoint Derivatives in High-Dimensional Metamodels". AIAA Journal 53 (5): 1391–1395. doi:10.2514/1.J053678. Bibcode: 2015AIAAJ..53.1391D.

- [4] Dwight, R.; Brezillon, J. (2006). "Effect of Approximations of the Discrete Adjoint on Gradient-Based Optimization". AIAA Journal 44 (12): 3022–3031. doi:10.2514/1.21744. Bibcode: 2006AIAAJ..44.3022D.

- [5] Giles, M.; Duta, M.; Muller, J.; Pierce, N. (2003). "Algorithm Developments for Discrete Adjoint Methods". AIAA Journal 41 (2): 198–205. doi:10.2514/2.1961. Bibcode: 2003AIAAJ..41..198G. https://ora.ox.ac.uk/objects/uuid:47cb04d7-d913-4d0b-a7c4-03b6137418e2.

- [6] de Baar, J.H.S.; Dwight, R.P.; Bijl, H. (2014). "Improvements to gradient-enhanced Kriging using a Bayesian interpretation". International Journal for Uncertainty Quantification 4 (3): 205–223. doi:10.1615/Int.J.UncertaintyQuantification.2013006809.

- [7] Wikle, C.K.; Berliner, L.M. (2007). "A Bayesian tutorial for data assimilation". Physica D 230 (1–2): 1–16. doi:10.1016/j.physd.2006.09.017. Bibcode: 2007PhyD..230....1W.

- [8] Dwight, R.P.; Han, Z.-H. (2009). Efficient uncertainty quantification using gradient-enhanced Kriging. doi:10.2514/6.2009-2276. ISBN 978-1-60086-975-4. https://elib.dlr.de/63754/1/PV2009_2276.pdf.

- [9] Zhang, Sheng; Yang, Xiu; Tindel, Samy; Lin, Guang (2021). "Augmented Gaussian random field: Theory and computation". Discrete & Continuous Dynamical Systems - S 15 (4): 931. doi:10.3934/dcdss.2021098.

- [10] Laurenceau, J.; Sagaut, P. (2008). "Building efficient response surfaces of aerodynamic functions with Kriging and coKriging". AIAA Journal 46 (2): 498–507. doi:10.2514/1.32308. Bibcode: 2008AIAAJ..46..498L.

- [11] de Baar, J.H.S. (2014). "Stochastic Surrogates for Measurements and Computer Models of Fluids". PhD Thesis, Delft University of Technology: 99–101. http://repository.tudelft.nl/islandora/object/uuid%3A79d2dc5a-9a7c-4179-a417-a50cbba2bc5f?collection=research.

- [12] Bouhlel, M.A.; Martins, J.R.R.A. (2018). "Gradient-enhanced kriging for high-dimensional problems". Engineering with Computers 35: 157–173. doi:10.1007/s00366-018-0590-x.

- [13] Morris, M.D.; Mitchell, T.J.; Ylvisaker, D. (1993). "Bayesian Design and Analysis of Computer Experiments: Use of Derivatives in Surface Prediction". Technometrics 35 (3): 243–255. doi:10.1080/00401706.1993.10485320. https://digital.library.unt.edu/ark:/67531/metadc1100641/.

- [14] Chung, H.-S.; Alonso, J.J. (2002). "Using Gradients to Construct Cokriging Approximation Models for High-Dimensional Design Optimization Problems". AIAA 40th Aerospace Sciences Meeting and Exhibit: 2002–0317. doi:10.2514/6.2002-317.

- [15] Han, Z.-H.; Gortz, S.; Zimmermann, R. (2013). "Improving variable-fidelity surrogate modeling via gradient-enhanced kriging and a generalized hybrid bridge function". Aerospace Science and Technology 25 (1): 177–189. doi:10.1016/j.ast.2012.01.006. http://www.sciencedirect.com/science/article/pii/S127096381200017X.

- [16] Ulaganathan, S.; Couckuyt, I.; Dhaene, T.; Degroote, J.; Laermans, E. (2016). "Performance study of gradient-enhanced Kriging". Engineering with Computers 32 (1): 15–34.

- [17] Laurent, L.; Le Riche, R.; Soulier, B.; Boucard, P.-A. (2017). "An overview of gradient-enhanced metamodels with applications". Archives of Computational Methods in Engineering 26: 1–46. doi:10.1007/s11831-017-9226-3. https://hal-emse.ccsd.cnrs.fr/emse-01525674/file/paperHAL.pdf.

- [18] Lockwood, B.A.; Anitescu, M. (2012). "Gradient-Enhanced Universal Kriging for Uncertainty Propagation". Nuclear Science and Engineering 170 (2): 168–195. doi:10.13182/NSE10-86. http://www.mcs.anl.gov/papers/1808-1110.pdf.

- [19] Raggi, G.; Fdez. Galván, I.; Ritterhoff, C. L.; Vacher, M.; Lindh, R. (2020). "Restricted-Variance Molecular Geometry Optimization Based on Gradient-Enhanced Kriging". Journal of Chemical Theory and Computation 16 (6): 3989–4001. doi:10.1021/acs.jctc.0c00257. PMID 32374164.
