Engineering:Synthetic-aperture radar

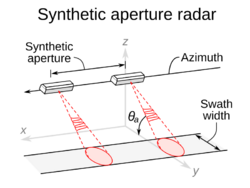

Synthetic-aperture radar (SAR) is a form of radar that is used to create two-dimensional images or three-dimensional reconstructions of objects, such as landscapes.[1] SAR uses the motion of the radar antenna over a target region to provide finer spatial resolution than conventional stationary beam-scanning radars. SAR is typically mounted on a moving platform, such as an aircraft or spacecraft, and has its origins in an advanced form of side-looking airborne radar (SLAR). The distance the SAR device travels over a target during the period when the target scene is illuminated creates the large synthetic antenna aperture (the size of the antenna). Typically, the larger the aperture, the higher the image resolution will be, regardless of whether the aperture is physical (a large antenna) or synthetic (a moving antenna) – this allows SAR to create high-resolution images with comparatively small physical antennas. For a fixed antenna size and orientation, objects which are farther away remain illuminated longer – therefore SAR has the property of creating larger synthetic apertures for more distant objects, which results in a consistent spatial resolution over a range of viewing distances.

To create a SAR image, successive pulses of radio waves are transmitted to "illuminate" a target scene, and the echo of each pulse is received and recorded. The pulses are transmitted and the echoes received using a single beam-forming antenna, with wavelengths of a meter down to several millimeters. As the SAR device on board the aircraft or spacecraft moves, the antenna location relative to the target changes with time. Signal processing of the successive recorded radar echoes allows the combining of the recordings from these multiple antenna positions. This process forms the synthetic antenna aperture and allows the creation of higher-resolution images than would otherwise be possible with a given physical antenna.[2]
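The claim that resolution stays consistent with distance can be checked with a short calculation. The sketch below is illustrative only: it assumes the common small-angle approximations for a stripmap geometry (beamwidth of roughly wavelength divided by antenna length, and azimuth resolution of wavelength times range divided by twice the synthetic aperture length), and the function name and numeric values are hypothetical.

```python
def stripmap_azimuth_geometry(wavelength_m, antenna_length_m, slant_range_m):
    """Return (synthetic_aperture_length_m, azimuth_resolution_m).

    Assumes a small-angle beamwidth of wavelength / antenna_length.
    """
    beamwidth_rad = wavelength_m / antenna_length_m
    # A more distant target stays in the beam over a longer stretch of track.
    synthetic_aperture_m = beamwidth_rad * slant_range_m
    # The factor 2 comes from the two-way (transmit and receive) path.
    azimuth_resolution_m = wavelength_m * slant_range_m / (2.0 * synthetic_aperture_m)
    return synthetic_aperture_m, azimuth_resolution_m


# Assumed example: 5.6 cm wavelength, 10 m antenna, airborne vs. spaceborne range.
for slant_range in (10e3, 800e3):
    aperture, resolution = stripmap_azimuth_geometry(0.056, 10.0, slant_range)
    print(f"range {slant_range / 1e3:6.0f} km: aperture {aperture:7.1f} m, "
          f"azimuth resolution {resolution:.2f} m")
```

Under these assumptions the synthetic aperture grows in proportion to range, so the azimuth resolution comes out as half the antenna length at both distances.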

Motivation and applications

SAR is capable of high-resolution remote sensing, independent of flight altitude, and independent of weather, as SAR can select frequencies to avoid weather-caused signal attenuation. SAR has day and night imaging capability as illumination is provided by the SAR.[3][4][5]

SAR images have wide applications in remote sensing and mapping of the surfaces of the Earth and other planets. Examples include topography, oceanography, glaciology, and geology (for example, terrain discrimination and subsurface imaging). SAR can also be used in forestry to determine forest height, biomass, and deforestation. Volcano and earthquake monitoring use differential interferometry. SAR can also be applied to monitoring the stability of civil infrastructure such as bridges.[6] SAR is useful in environmental monitoring, for example of oil spills, flooding,[7][8] and urban growth,[9] and in military surveillance, including strategic policy and tactical assessment.[5] SAR can be implemented as inverse SAR by observing a moving target over a substantial time with a stationary antenna.

Basic principle

A synthetic-aperture radar is an imaging radar mounted on a moving platform.[10] Electromagnetic waves are transmitted sequentially, the echoes are collected, and the system electronics digitizes and stores the data for subsequent processing. As transmission and reception occur at different times, they map to slightly different positions. The well-ordered combination of the received signals builds a virtual aperture that is much longer than the physical antenna width. That is the source of the term "synthetic aperture," giving it the property of an imaging radar.[5] The range direction is perpendicular to the flight track and to the azimuth direction; the azimuth direction is also known as the along-track direction because it is in line with the position of the object within the antenna's field of view.

The 3D processing is done in two stages. The azimuth and range directions are focused to generate 2D (azimuth-range) high-resolution images, after which a digital elevation model (DEM)[11][12] is used to measure the phase differences between complex images, which are determined from different look angles, to recover the height information. This height information, along with the azimuth-range coordinates provided by 2D SAR focusing, gives the third dimension, which is the elevation.[3] The first step requires only standard processing algorithms;[12] for the second step, additional pre-processing such as image co-registration and phase calibration is used.[3][13]

In addition, multiple baselines can be used to extend 3D imaging to the time dimension. 4D and multi-D SAR imaging allows imaging of complex scenarios, such as urban areas, and has improved performance with respect to classical interferometric techniques such as persistent scatterer interferometry (PSI).[14]

Algorithm

SAR algorithms model the scene as a set of point targets that do not interact with each other (the Born approximation).

While the details of various SAR algorithms differ, SAR processing in each case is the application of a matched filter to the raw data, for each pixel in the output image, where the matched filter coefficients are the response from a single isolated point target.[15] In the early days of SAR processing, the raw data was recorded on film and the postprocessing by matched filter was implemented optically using lenses of conical, cylindrical and spherical shape. The Range-Doppler algorithm is an example of a more recent approach.
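As a minimal sketch of the matched-filter idea in one dimension (range only), the example below correlates a simulated echo with the transmitted pulse. The linear FM chirp, sample rate, bandwidth, and target delay are all assumed values chosen for illustration; they are not taken from any particular SAR system.

```python
import numpy as np

fs = 100e6                 # sample rate [Hz] (assumed)
n_pulse = 1000             # samples in the transmitted pulse (assumed)
T = n_pulse / fs           # pulse duration [s]
B = 30e6                   # chirp bandwidth [Hz] (assumed)

# Transmitted pulse: a linear FM chirp centered on the pulse.
t = np.arange(n_pulse) / fs
chirp = np.exp(1j * np.pi * (B / T) * (t - T / 2) ** 2)

# Simulated echo from a single isolated point target.
n_rx = 4096
delay_samples = 1500
echo = np.zeros(n_rx, dtype=complex)
echo[delay_samples:delay_samples + n_pulse] = 0.5 * chirp

# Matched filtering: correlate the echo with the point-target response.
# np.correlate conjugates its second argument, as a matched filter requires.
compressed = np.correlate(echo, chirp, mode="valid")
peak_index = int(np.argmax(np.abs(compressed)))

print("target delay (samples):", delay_samples)
print("matched-filter peak   :", peak_index)
```

The long chirp collapses to a narrow peak at the target delay, which is the same principle a full SAR processor applies in both range and azimuth.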

Existing spectral estimation approaches

Synthetic-aperture radar determines the 3D reflectivity from measured SAR data. This is essentially a spectrum estimation problem, because for a specific cell of an image, the complex-valued SAR measurements of the SAR image stack are a sampled version of the Fourier transform of the reflectivity in the elevation direction, but the sampling is irregular.[16] Spectral estimation techniques are therefore used to improve the resolution and reduce speckle compared to the results of conventional Fourier transform SAR imaging techniques.[17]

Non-parametric methods

FFT

The FFT (fast Fourier transform, i.e., the periodogram or matched filter) is one such method. It is used in the majority of spectral estimation algorithms, and there are many fast algorithms for computing the multidimensional discrete Fourier transform. Computational Kronecker-core array (CKA) algebra[18] is a popular framework used to derive new variants of FFT algorithms for processing in multidimensional synthetic-aperture radar (SAR) systems. It relies on a study of the theoretical properties of input/output data indexing sets and groups of permutations.

A branch of finite multi-dimensional linear algebra is used to identify similarities and differences among various FFT algorithm variants and to create new variants. Each multidimensional DFT computation is expressed in matrix form. The multidimensional DFT matrix, in turn, is decomposed into a set of factors, called functional primitives, which are individually identified with an underlying software/hardware computational design.[5]

The FFT implementation is essentially a realization of the mapping of the mathematical framework through generation of the variants and executing matrix operations. The performance of this implementation may vary from machine to machine, and the objective is to identify on which machine it performs best.[19]

Advantages

- Additive group-theoretic properties of multidimensional input/output indexing sets are used for the mathematical formulations, so it is easier to identify the mapping between computing structures and mathematical expressions than with conventional methods.[20]

- The language of CKA algebra helps the application developer understand which FFT variants are more computationally efficient, thus reducing the computational effort and improving implementation time.[20][21]

Disadvantages

- FFT cannot separate sinusoids close in frequency. If the periodicity of the data does not match FFT, edge effects are seen.[19]

Capon method

The Capon spectral method, also called the minimum-variance method, is a multidimensional array-processing technique.[22] It is a nonparametric covariance-based method, which uses an adaptive matched-filterbank approach and follows two main steps:

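- Passing the data through a 2D bandpass filter with varying center frequencies ($\omega_1, \omega_2$).

- Estimating the power at ($\omega_1, \omega_2$) for all $\omega_1 \in [0, 2\pi)$, $\omega_2 \in [0, 2\pi)$ of interest from the filtered data.

The adaptive Capon bandpass filter is designed to minimize the power of the filter output, as well as pass the frequencies ($\omega_1, \omega_2$) without any attenuation, i.e., to satisfy, for each ($\omega_1, \omega_2$),

$$\min_{h_{\omega_1,\omega_2}} h_{\omega_1,\omega_2}^{*}\, R\, h_{\omega_1,\omega_2} \quad \text{subject to} \quad h_{\omega_1,\omega_2}^{*}\, a_{\omega_1,\omega_2} = 1,$$

where $R$ is the covariance matrix, $h_{\omega_1,\omega_2}^{*}$ is the complex conjugate transpose of the impulse response of the FIR filter, $a_{\omega_1,\omega_2}$ is the 2D Fourier vector, defined as $a_{\omega_1,\omega_2} \triangleq a_{\omega_1} \otimes a_{\omega_2}$, and $\otimes$ denotes the Kronecker product.[22]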

Therefore, it passes a 2D sinusoid at a given frequency without distortion while minimizing the variance of the noise of the resulting image. The purpose is to compute the spectral estimate efficiently.[22]

The spectral estimate is given as

$$S_{\omega_1,\omega_2} = \frac{1}{a_{\omega_1,\omega_2}^{*}\, R^{-1}\, a_{\omega_1,\omega_2}},$$

where $R$ is the covariance matrix and $a_{\omega_1,\omega_2}^{*}$ is the complex-conjugate transpose of the 2D Fourier vector. The computation of this equation over all frequencies is time-consuming. The forward–backward Capon estimator yields better estimates than the classical forward-only Capon approach. The main reason is that the forward–backward Capon uses both the forward and backward data vectors to estimate the covariance matrix, whereas the forward-only Capon uses only the forward data vectors.[22]
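To make the estimator concrete, here is a minimal one-dimensional, forward-only sketch of the Capon estimate, the reciprocal of a^H R^-1 a, compared against a plain periodogram. It is a simplification of the 2D case described above: the snapshot length `m`, the test signal, and the noise level are assumptions, and the covariance matrix is estimated from overlapping snapshots of a single data record.

```python
import numpy as np

def capon_spectrum(y, m, omegas):
    """Forward-only 1D Capon power estimate at each frequency in `omegas`."""
    n = y.size
    # Overlapping length-m snapshots, one per column.
    snaps = np.stack([y[l:l + m] for l in range(n - m + 1)], axis=1)
    R = snaps @ snaps.conj().T / snaps.shape[1]      # sample covariance matrix
    R_inv = np.linalg.inv(R)
    k = np.arange(m)
    power = np.empty(omegas.size)
    for i, w in enumerate(omegas):
        a = np.exp(1j * w * k)                       # 1D Fourier (steering) vector
        power[i] = 1.0 / np.real(a.conj() @ R_inv @ a)
    return power

# Assumed test signal: two complex sinusoids in white noise.
rng = np.random.default_rng(0)
n = 128
t = np.arange(n)
noise = 0.1 * (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
y = 1.0 * np.exp(1j * 1.0 * t) + 0.5 * np.exp(1j * 1.3 * t) + noise

omegas = np.linspace(0.0, np.pi, 1024)
capon = capon_spectrum(y, m=32, omegas=omegas)
periodogram = np.abs(np.array([np.vdot(np.exp(1j * w * t), y) for w in omegas])) ** 2 / n ** 2

print("Capon peak at omega       =", omegas[np.argmax(capon)])
print("periodogram peak at omega =", omegas[np.argmax(periodogram)])
```

The loop over `omegas` shows where the cost comes from: one matrix inversion plus one quadratic form per frequency point, which in 2D becomes one per ($\omega_1, \omega_2$) pair.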

Advantages

- Capon can yield more accurate spectral estimates with much lower sidelobes and narrower spectral peaks than the fast Fourier transform (FFT) method.[23]

- Capon method can provide much better resolution.

Disadvantages

- Implementation requires two computationally intensive tasks: inversion of the covariance matrix $R$ and multiplication by the $a_{\omega_1,\omega_2}$ matrix, which has to be done for each point ($\omega_1, \omega_2$).[3]

APES method

The APES (amplitude and phase estimation) method is also a matched-filter-bank method, which assumes that the phase history data is a sum of 2D sinusoids in noise.

The APES spectral estimator has a two-step filtering interpretation:

- Passing data through a bank of FIR bandpass filters with varying center frequency $\omega$.

- Obtaining the spectrum estimate for $\omega \in [0, 2\pi)$ from the filtered data.[24]

Empirically, the APES method results in wider spectral peaks than the Capon method, but more accurate spectral estimates for amplitude in SAR.[25] In the Capon method, although the spectral peaks are narrower than the APES, the sidelobes are higher than that for the APES. As a result, the estimate for the amplitude is expected to be less accurate for the Capon method than for the APES method. The APES method requires about 1.5 times more computation than the Capon method.[26]
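The following is a minimal one-dimensional, forward-only sketch of an APES-style amplitude estimate, written from the commonly published form of the estimator rather than from this article. The snapshot length `m`, the test amplitude, and the noise level are assumptions, and the 2D SAR case would replace the 1D Fourier vector with a Kronecker product of two such vectors.

```python
import numpy as np

def apes_amplitude(y, m, omega):
    """Estimate the complex amplitude of a sinusoid at frequency `omega`."""
    n = y.size
    L = n - m + 1
    # Overlapping length-m snapshots, one per column: shape (m, L).
    snaps = np.stack([y[l:l + m] for l in range(L)], axis=1)
    R = snaps @ snaps.conj().T / L                       # sample covariance
    # Normalized Fourier transform of the snapshot sequence at `omega`.
    g = snaps @ np.exp(-1j * omega * np.arange(L)) / L
    # Covariance of what remains after removing the sinusoid at `omega`.
    Q = R - np.outer(g, g.conj())
    a = np.exp(1j * omega * np.arange(m))                # Fourier vector
    Q_inv_a = np.linalg.solve(Q, a)
    h = Q_inv_a / (a.conj() @ Q_inv_a)                   # filter with h^H a = 1
    return h.conj() @ g                                  # filtered amplitude estimate

# Assumed test signal: one complex sinusoid in white noise.
rng = np.random.default_rng(1)
n, omega0 = 64, 0.9
alpha = 0.8 * np.exp(1j * 0.4)
noise = 0.05 * (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
y = alpha * np.exp(1j * omega0 * np.arange(n)) + noise

estimate = apes_amplitude(y, m=8, omega=omega0)
print("true amplitude     :", alpha)
print("estimated amplitude:", estimate)
```

Compared with the Capon sketch, the extra work per frequency is forming `g` and `Q` before solving the linear system, which is consistent with APES being the more expensive of the two.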

Advantages

- Filtering reduces the number of available samples, but when the filter is designed carefully, the increase in signal-to-noise ratio (SNR) in the filtered data compensates for this reduction, and the amplitude of a sinusoidal component with frequency $\omega$ can be estimated more accurately from the filtered data than from the original signal.[27]

Disadvantages

- The autocovariance matrix is much larger in 2D than in 1D, therefore it is limited by memory available.[5]

SAMV method

The SAMV method is a parameter-free algorithm based on sparse signal reconstruction. It achieves super-resolution and is robust to highly correlated signals. The name emphasizes its basis on the asymptotically minimum variance (AMV) criterion. It is a powerful tool for recovering both the amplitude and frequency characteristics of multiple highly correlated sources in challenging environments (e.g., a limited number of snapshots or a low signal-to-noise ratio). Applications include synthetic-aperture radar imaging and various kinds of source localization.

Advantages

- The SAMV method is capable of achieving resolution higher than some established parametric methods, e.g., MUSIC, especially with highly correlated signals.

Disadvantages

- The computational complexity of the SAMV method is higher due to its iterative procedure.

Parametric subspace decomposition methods

Eigenvector method

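This subspace decomposition method separates the eigenvectors of the autocovariance matrix into those corresponding to signals and to clutter.[5] The amplitude of the image at a point ($\omega_x, \omega_y$) is given by:

$$\hat{\phi}_{EV}(\omega_x,\omega_y) = \frac{1}{W^{H}(\omega_x,\omega_y)\left(\sum_{\text{clutter}} \frac{1}{\lambda_i}\, \underline{v_i}\, \underline{v_i}^{H}\right) W(\omega_x,\omega_y)}$$

where $\hat{\phi}_{EV}$ is the amplitude of the image at a point ($\omega_x, \omega_y$), $\underline{v_i}$ is the $i$-th clutter-subspace eigenvector of the coherency matrix and $\underline{v_i}^{H}$ is its Hermitian transpose, $\frac{1}{\lambda_i}$ is the inverse of the corresponding eigenvalue of the clutter subspace, and $W(\omega_x,\omega_y)$ are vectors defined as[5]

$$W(\omega_x,\omega_y) = \left[1 \;\; e^{-j\omega_x} \;\; \ldots \;\; e^{-j(M-1)\omega_x}\right] \otimes \left[1 \;\; e^{-j\omega_y} \;\; \ldots \;\; e^{-j(M-1)\omega_y}\right]$$

where ⊗ denotes the Kronecker product of the two vectors.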
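A minimal one-dimensional sketch of the eigenvector (EV) estimator follows, with a flag that replaces the inverse clutter eigenvalues by a constant to give a MUSIC-style estimate. Several things here are assumptions made for illustration: the covariance matrix is built from an idealized model (two sinusoids plus white noise) rather than from data, the number of signals is taken as known, and the steering vector uses a positive exponent to match that model. For the 2D case, `np.kron` of two such vectors would play the role of $W$.

```python
import numpy as np

def steering(omega, m):
    # Sign convention chosen to match the signal model used below (assumed).
    return np.exp(1j * omega * np.arange(m))

def ev_spectrum(R, n_signals, omegas, music=False):
    """EV estimate; with music=True all clutter weights are set to 1."""
    eigvals, eigvecs = np.linalg.eigh(R)          # eigenvalues in ascending order
    n_clutter = R.shape[0] - n_signals
    lam = eigvals[:n_clutter]                     # smallest eigenvalues: clutter
    V = eigvecs[:, :n_clutter]                    # clutter-subspace eigenvectors
    weights = np.ones(n_clutter) if music else 1.0 / lam
    spectrum = np.empty(omegas.size)
    for i, w in enumerate(omegas):
        proj = V.conj().T @ steering(w, R.shape[0])
        spectrum[i] = 1.0 / np.sum(weights * np.abs(proj) ** 2)
    return spectrum

# Idealized covariance: two sinusoids (powers 1.0 and 0.25) plus white noise.
m = 8
true_omegas = (1.0, 2.0)
R = 0.01 * np.eye(m, dtype=complex)
for w0, p in zip(true_omegas, (1.0, 0.25)):
    a = steering(w0, m)
    R += p * np.outer(a, a.conj())

omegas = np.linspace(0.0, np.pi, 2001)
ev = ev_spectrum(R, n_signals=2, omegas=omegas)
music = ev_spectrum(R, n_signals=2, omegas=omegas, music=True)
for w0 in true_omegas:
    i = int(np.argmin(np.abs(omegas - w0)))
    print(f"omega={w0}: EV={ev[i]:.3g}, MUSIC={music[i]:.3g}")
```

Setting `n_signals=0` puts every eigenvector in the clutter subspace, in which case the weighted sum is the full inverse covariance and the expression reduces to the Capon form.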

Advantages

- Shows features of image more accurately.[5]

Disadvantages

- High computational complexity.[13]

MUSIC method

MUSIC detects frequencies in a signal by performing an eigendecomposition of the covariance matrix of data vectors formed from samples of the received signal. When all of the eigenvectors are included in the clutter subspace (model order = 0), the EV method becomes identical to the Capon method. Thus the determination of model order is critical to the operation of the EV method. The eigenvalue of the R matrix decides whether its corresponding eigenvector belongs to the clutter or to the signal subspace.[5]

The MUSIC method is considered to be a poor performer in SAR applications. This method uses a constant in place of the clutter-subspace eigenvalues.[5]

In this method, the denominator goes to zero when a sinusoidal signal corresponding to a point in the SAR image aligns with one of the signal-subspace eigenvectors, which produces a peak in the image estimate. Thus this method does not accurately represent the scattering intensity at each point, but shows the particular points of the image.[5][28]

Advantages

Disadvantages

- Resolution loss due to the averaging operation.[10]

Backprojection algorithm

The backprojection algorithm has two variants: time-domain backprojection and frequency-domain backprojection. Time-domain backprojection has more advantages than the frequency-domain variant and is therefore preferred. It forms images or spectra by matching the data acquired from the radar against what it expects to receive, and can be considered an ideal matched filter for synthetic-aperture radar. Because it handles non-ideal motion and sampling inherently, no separate motion compensation step is needed. It can also be used for various imaging geometries.[29]
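A minimal time-domain backprojection sketch is given below for a flat two-dimensional geometry with a single simulated point target. Everything numeric is an assumption for illustration (wavelength, range resolution, track, grid), the range-compressed data are synthesized rather than measured, and linear interpolation stands in for the more careful interpolators used in practice.

```python
import numpy as np

wavelength = 0.03                                   # [m] (assumed)
range_res = 0.5                                     # range resolution [m] (assumed)
range_axis = np.arange(950.0, 1050.0, 0.25)         # slant-range bins [m]

# Antenna positions along a straight track at y = 0 (assumed geometry).
xs = np.linspace(-50.0, 50.0, 201)
platform = np.stack([xs, np.zeros_like(xs)], axis=1)
target = np.array([3.0, 1000.0])                    # point target (x, y) [m]

def simulate_range_compressed(platform, target):
    """One range-compressed pulse per antenna position for a point target."""
    R = np.linalg.norm(platform - target, axis=1)
    envelope = np.sinc((range_axis[None, :] - R[:, None]) / range_res)
    return envelope * np.exp(-1j * 4 * np.pi * R[:, None] / wavelength)

def backproject(data, platform, grid_x, grid_y):
    """Sum every pulse into every pixel after removing the expected phase."""
    image = np.zeros((grid_y.size, grid_x.size), dtype=complex)
    for pulse, pos in zip(data, platform):
        # Slant range from this antenna position to every pixel.
        R = np.hypot(grid_x[None, :] - pos[0], grid_y[:, None] - pos[1])
        flat = R.ravel()
        sample = (np.interp(flat, range_axis, pulse.real)
                  + 1j * np.interp(flat, range_axis, pulse.imag)).reshape(R.shape)
        # Per-pixel matched filter: conjugate of the expected two-way phase.
        image += sample * np.exp(1j * 4 * np.pi * R / wavelength)
    return image

data = simulate_range_compressed(platform, target)
grid_x = np.linspace(0.0, 6.0, 61)
grid_y = np.linspace(997.0, 1003.0, 25)
image = backproject(data, platform, grid_x, grid_y)

iy, ix = np.unravel_index(np.argmax(np.abs(image)), image.shape)
print("brightest pixel at x =", grid_x[ix], "y =", grid_y[iy])
```

Because the slant range is computed from the actual antenna position for each pulse, an irregular or perturbed track would only change the `platform` array; the algorithm itself stays the same. The nested work (pulses times pixels) also makes the computational cost visible.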

Advantages

- It is invariant to the imaging mode: it uses the same algorithm irrespective of the imaging mode, whereas frequency-domain methods require changes depending on the mode and geometry.[29]

- Ambiguous azimuth aliasing usually occurs when the signal contains frequencies that exceed the Nyquist spatial sampling limit. Unambiguous aliasing occurs in squinted geometries where the signal bandwidth does not exceed the sampling limits, but has undergone "spectral wrapping." The backprojection algorithm is not affected by either kind of aliasing.[29]

- It matches the space/time filter: it uses information about the imaging geometry to produce a pixel-by-pixel varying matched filter that approximates the expected return signal. This usually yields antenna gain compensation.[29]

- Following from the previous advantage, the backprojection algorithm compensates for platform motion. This is particularly advantageous at low altitudes.[29]

Disadvantages

- The computational expense of the backprojection algorithm is higher than that of frequency-domain methods.

- It requires very precise knowledge of imaging geometry.[29]

Application: geosynchronous orbit synthetic-aperture radar (GEO-SAR)

In GEO-SAR, the backprojection algorithm works very well for focusing along the relative motion track. It performs azimuth processing in the time domain. For the satellite-ground geometry, GEO-SAR plays a significant role.[30]

The procedure is as follows.[30]

1. The acquired raw data is segmented into sub-apertures to simplify and speed up the procedure.

2. The data is then range-compressed using matched filtering for every sub-aperture created. The range-compressed signal is given by

$$s(t,\tau) = \exp\left(-j\,\frac{4\pi}{\lambda}\,R(t)\right)\operatorname{sinc}\left(\tau - \frac{2}{c}\,R(t)\right),$$

where $\tau$ is the range time, $t$ is the azimuthal time, $\lambda$ is the wavelength, $c$ is the speed of light, and $R(t)$ is the slant range at azimuth time $t$.

3. Accuracy in the "range migration curve" is achieved by range interpolation.

4. The pixel locations of the ground in the image depend on the satellite–ground geometry model. Grid division is now done according to the azimuth time.

5. The "slant range" (the range between the antenna's phase center and the point on the ground) is calculated for every azimuth time using coordinate transformations.

6. Azimuth compression is done after the previous step.

7. Steps 5 and 6 are repeated for every pixel and for every sub-aperture.

8. Lastly, all the sub-aperture images are superimposed onto each other to generate the final high-resolution image.

Comparison between the algorithms

Capon and APES can yield more accurate spectral estimates with much lower sidelobes and narrower spectral peaks than the fast Fourier transform (FFT) method, which is also a special case of the FIR filtering approaches. Although the APES algorithm gives slightly wider spectral peaks than the Capon method, it yields more accurate overall spectral estimates than both the Capon and FFT methods.[25]

The FFT method is fast and simple but has larger sidelobes. Capon has high resolution but high computational complexity. EV also has high resolution and high computational complexity. APES has higher resolution and is faster than Capon and EV, but still has high computational complexity.[10]

MUSIC method is not generally suitable for SAR imaging, as whitening the clutter eigenvalues destroys the spatial inhomogeneities associated with terrain clutter or other diffuse scattering in SAR imagery. But it offers higher frequency resolution in the resulting power spectral density (PSD) than the fast Fourier transform (FFT)-based methods.[31]

The backprojection algorithm is computationally expensive. It is specifically attractive for sensors that are wideband, wide-angle, and/or have long coherent apertures with substantial off-track motion.[32]

Multistatic operation

SAR requires that echo captures be taken at multiple antenna positions. The more captures taken (at different antenna locations) the more reliable the target characterization.

Multiple captures can be obtained by moving a single antenna to different locations, by placing multiple stationary antennas at different locations, or combinations thereof.

The advantage of a single moving antenna is that it can be easily placed in any number of positions to provide any number of monostatic waveforms. For example, an antenna mounted on an airplane takes many captures per second as the plane travels.

The principal advantages of multiple static antennas are that a moving target can be characterized (assuming the capture electronics are fast enough), that no vehicle or motion machinery is necessary, and that antenna positions need not be derived from other, sometimes unreliable, information. (One problem with SAR aboard an airplane is knowing precise antenna positions as the plane travels).

For multiple static antennas, all combinations of monostatic and multistatic radar waveform captures are possible. Note, however, that it is not advantageous to capture a waveform for each of both transmission directions for a given pair of antennas, because those waveforms will be identical. When multiple static antennas are used, the total number of unique echo waveforms that can be captured is

$$\frac{N^2 + N}{2},$$

where $N$ is the number of unique antenna positions.
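The count can be checked by enumerating transmit/receive pairs directly. In the short sketch below (function names are hypothetical), each unordered pair of antenna indices is one waveform: a pair with equal indices is a monostatic capture, and the two transmission directions of a bistatic pair are counted once because they yield identical waveforms.

```python
from itertools import combinations_with_replacement

def unique_waveform_count(n_positions):
    """Closed form: N monostatic captures plus N(N-1)/2 bistatic pairs."""
    return n_positions * (n_positions + 1) // 2

def enumerate_captures(n_positions):
    """All unordered (transmit, receive) antenna index pairs."""
    return list(combinations_with_replacement(range(n_positions), 2))

for n in (1, 2, 4, 8):
    pairs = enumerate_captures(n)
    print(n, "antennas ->", len(pairs), "unique waveforms")
```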

Scanning modes

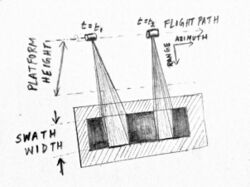

Stripmap mode airborne SAR

The antenna stays in a fixed position. It may be orthogonal to the flight path, or it may be squinted slightly forward or backward.[5]

As the antenna aperture travels along the flight path, a signal is transmitted at a rate equal to the pulse repetition frequency (PRF). The lower bound of the PRF is determined by the Doppler bandwidth of the radar. The backscatter of each of these signals is coherently added on a pixel-by-pixel basis to attain the fine azimuth resolution desired in radar imagery.[33]
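As a rough illustration of the PRF lower bound, the sketch below uses the common approximation that a broadside stripmap beam of width wavelength / antenna length produces a Doppler bandwidth of about 2v/D, where v is the platform speed and D the antenna length. Both the approximation and the example values are assumptions, not figures from this article.

```python
def doppler_bandwidth_hz(platform_speed_m_s, antenna_length_m):
    """Approximate azimuth Doppler bandwidth of a broadside stripmap beam."""
    return 2.0 * platform_speed_m_s / antenna_length_m

def min_prf_hz(platform_speed_m_s, antenna_length_m):
    """The PRF must at least equal the Doppler bandwidth to avoid azimuth aliasing."""
    return doppler_bandwidth_hz(platform_speed_m_s, antenna_length_m)

# Assumed examples: an aircraft and a low-Earth-orbit satellite.
print("airborne   (100 m/s, 1 m antenna)  :", min_prf_hz(100.0, 1.0), "Hz")
print("spaceborne (7500 m/s, 10 m antenna):", min_prf_hz(7500.0, 10.0), "Hz")
```

Equivalently, under this approximation the platform may travel at most half an antenna length between pulses.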

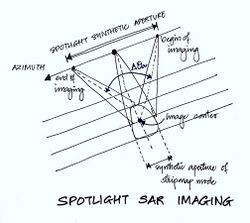

Spotlight mode SAR

The spotlight synthetic aperture is given by

$$L_{sa} = r_0\,\Delta\theta_a,$$

where $\Delta\theta_a$ is the angle formed between the beginning and end of the imaging, as shown in the diagram of spotlight imaging, and $r_0$ is the range distance.

The spotlight mode gives better resolution, albeit for a smaller ground patch. In this mode, the illuminating radar beam is steered continually as the aircraft moves, so that it illuminates the same patch over a longer period of time. This mode is not a traditional continuous-strip imaging mode; however, it has high azimuth resolution.[28] A technical explanation of spotlight SAR from first principles is offered in [34].
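The relation between steering angle, synthetic aperture, and resolution can be illustrated numerically. The sketch below takes the synthetic aperture as range times integration angle and uses the small-angle approximation that azimuth resolution is about the wavelength divided by twice the integration angle; the approximation and the example values are assumptions.

```python
def spotlight_aperture_m(range_m, integration_angle_rad):
    """Synthetic aperture length: arc subtended at the scene center."""
    return range_m * integration_angle_rad

def spotlight_azimuth_resolution_m(wavelength_m, integration_angle_rad):
    """Small-angle approximation: resolution ~ wavelength / (2 * angle)."""
    return wavelength_m / (2.0 * integration_angle_rad)

# Assumed example: 3 cm wavelength, 20 km range, two integration angles.
for angle in (0.01, 0.05):
    aperture = spotlight_aperture_m(20e3, angle)
    resolution = spotlight_azimuth_resolution_m(0.03, angle)
    print(f"angle {angle:.2f} rad: aperture {aperture:6.0f} m, "
          f"azimuth resolution {resolution:.2f} m")
```

Steering the beam to hold the patch in view for a larger angle lengthens the aperture and sharpens the resolution, at the price of imaging only that patch.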

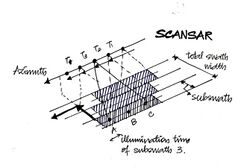

Scan mode SAR

When operating in scan mode, the antenna beam sweeps periodically and thus covers a much larger area than in the spotlight and stripmap modes. However, the azimuth resolution becomes much lower than in stripmap mode because of the decreased azimuth bandwidth. There is thus a trade-off between the azimuth resolution and the scan area of the SAR.[35] Here, the synthetic aperture is shared between the sub-swaths, and it is not contiguous within one sub-swath. A mosaic operation is required in the azimuth and range directions to join the azimuth bursts and the range sub-swaths.[28]

- ScanSAR makes the swath very wide.

- The azimuth signal consists of many bursts.

- The azimuth resolution is limited by the burst duration.

- Each target's frequency content depends on its azimuth position.[28]

Special techniques

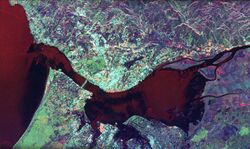

Polarimetry

Radar waves have a polarization. Different materials reflect radar waves with different intensities, but anisotropic materials such as grass often reflect different polarizations with different intensities. Some materials will also convert one polarization into another. By emitting a mixture of polarizations and using receiving antennas with a specific polarization, several images can be collected from the same series of pulses. Frequently three such RX-TX polarizations (HH-pol, VV-pol, VH-pol) are used as the three color channels in a synthesized image. This is what has been done in the picture at right. Interpretation of the resulting colors requires significant testing of known materials.

New developments in polarimetry include using the changes in the random polarization returns of some surfaces (such as grass or sand) and between two images of the same location at different times to determine where changes not visible to optical systems occurred. Examples include subterranean tunneling or paths of vehicles driving through the area being imaged. Enhanced SAR sea oil slick observation has been developed by appropriate physical modelling and use of fully polarimetric and dual-polarimetric measurements.

SAR polarimetry

SAR polarimetry is a technique used for deriving qualitative and quantitative physical information for land, snow and ice, ocean and urban applications based on the measurement and exploration of the polarimetric properties of man-made and natural scatterers. Terrain and land use classification is one of the most important applications of polarimetric synthetic-aperture radar (PolSAR).[36]

SAR polarimetry uses a scattering matrix (S) to identify the scattering behavior of objects after an interaction with an electromagnetic wave. The matrix is represented by a combination of horizontal and vertical polarization states of transmitted and received signals:

$$S = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix},$$

where HH is for horizontal transmit and horizontal receive, VV is for vertical transmit and vertical receive, HV is for horizontal transmit and vertical receive, and VH is for vertical transmit and horizontal receive.

The first two of these polarization combinations are referred to as like-polarized (or co-polarized), because the transmit and receive polarizations are the same. The last two combinations are referred to as cross-polarized because the transmit and receive polarizations are orthogonal to one another.[37]

Three-component scattering power model

The three-component scattering power model by Freeman and Durden[38] has been used successfully for the decomposition of PolSAR images, applying the reflection symmetry condition to the covariance matrix. The method is based on simple physical scattering mechanisms (surface scattering, double-bounce scattering, and volume scattering). The advantage of this scattering model is that it is simple and easy to implement for image processing. There are two major approaches to decomposing a 3×3 polarimetric matrix. One is the lexicographic covariance matrix approach based on physically measurable parameters,[38] and the other is the Pauli decomposition, which is a coherent decomposition. The Pauli decomposition represents all the polarimetric information in a single SAR image: the polarimetric information of [S] can be represented by combining the intensities in a single RGB image, with each intensity coded as a color channel.[39]
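A minimal sketch of a Pauli-basis color composite follows. It assumes reciprocity (the HV and VH channels are equal) and uses one widely used channel assignment: red for HH−VV, green for the cross-polarized term, and blue for HH+VV. The function name and the normalization are illustrative choices, not a prescribed procedure.

```python
import numpy as np

def pauli_rgb(s_hh, s_hv, s_vv):
    """Map scattering-matrix channels to an RGB image in [0, 1].

    Assumes reciprocity (S_HV == S_VH). Inputs are complex arrays of equal shape.
    """
    k_surface = (s_hh + s_vv) / np.sqrt(2)     # odd-bounce (surface-like) term
    k_double = (s_hh - s_vv) / np.sqrt(2)      # even-bounce (double-bounce-like) term
    k_volume = np.sqrt(2) * s_hv               # cross-polarized (volume-like) term
    rgb = np.stack([np.abs(k_double), np.abs(k_volume), np.abs(k_surface)], axis=-1)
    peak = rgb.max()
    return rgb / peak if peak > 0 else rgb

# Two assumed pixels: an ideal surface scatterer and an ideal double-bounce scatterer.
s_hh = np.array([[1.0, 1.0]], dtype=complex)
s_vv = np.array([[1.0, -1.0]], dtype=complex)
s_hv = np.zeros((1, 2), dtype=complex)
rgb = pauli_rgb(s_hh, s_hv, s_vv)
print(rgb[0, 0], rgb[0, 1])
```

In this composite the surface-like pixel comes out blue and the double-bounce-like pixel comes out red, which is why interpreting such images depends on knowing the channel assignment.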

Four-component scattering power model

For PolSAR image analysis, there can be cases where the reflection symmetry condition does not hold. In those cases a four-component scattering model[36][40] can be used to decompose polarimetric synthetic-aperture radar (SAR) images. This approach deals with the non-reflection-symmetric scattering case. It includes and extends the three-component decomposition method introduced by Freeman and Durden[38] to a fourth component by adding the helix scattering power. This helix power term generally appears in complex urban areas but disappears for natural distributed scatterers.[36]

There is also an improved method using the four-component decomposition algorithm, which was introduced for general PolSAR image analysis. The SAR data is first filtered to reduce speckle, then each pixel is decomposed by the four-component model to determine the surface scattering power ($P_s$), double-bounce scattering power ($P_d$), volume scattering power ($P_v$), and helix scattering power ($P_c$).[36] The pixels are then divided into five classes (surface, double-bounce, volume, helix, and mixed pixels) according to their maximum powers. The mixed category is for pixels having two or three equal dominant scattering powers after computation. The pixels in each of these categories are then divided into 20 small clusters of approximately the same number of pixels and merged as desired; this is called cluster merging. They are iteratively classified, and a color is then automatically assigned to each class. In the resulting image, brown colors denote the surface scattering classes, red colors the double-bounce scattering classes, green colors the volume scattering classes, and blue colors the helix scattering classes.[41]

Although this method is aimed at the non-reflection-symmetric case, it automatically includes the reflection symmetry condition, so it can be used as a general case. It also preserves the scattering characteristics by taking the mixed scattering category into account, and therefore proves to be a better algorithm.

Interferometry

Rather than discarding the phase data, information can be extracted from it. If two observations of the same terrain from very similar positions are available, aperture synthesis can be performed to provide the resolution performance which would be given by a radar system with dimensions equal to the separation of the two measurements. This technique is called interferometric SAR or InSAR.

If the two samples are obtained simultaneously (perhaps by placing two antennas on the same aircraft, some distance apart), then any phase difference will contain information about the angle from which the radar echo returned. Combining this with the distance information, one can determine the position in three dimensions of the image pixel. In other words, one can extract terrain altitude as well as radar reflectivity, producing a digital elevation model (DEM) with a single airplane pass. One aircraft application at the Canada Centre for Remote Sensing produced digital elevation maps with a resolution of 5 m and altitude errors also about 5 m. Interferometry was used to map many regions of the Earth's surface with unprecedented accuracy using data from the Shuttle Radar Topography Mission.

If the two samples are separated in time, perhaps from two flights over the same terrain, then there are two possible sources of phase shift. The first is terrain altitude, as discussed above. The second is terrain motion: if the terrain has shifted between observations, it will return a different phase. The amount of shift required to cause a significant phase difference is on the order of the wavelength used. This means that if the terrain shifts by centimeters, it can be seen in the resulting image (a digital elevation map must be available to separate the two kinds of phase difference; a third pass may be necessary to produce one).

This second method offers a powerful tool in geology and geography. Glacier flow can be mapped with two passes. Maps showing the land deformation after a minor earthquake or after a volcanic eruption (showing the shrinkage of the whole volcano by several centimeters) have been published.[42][43][44]

Differential interferometry

Differential interferometry (D-InSAR) requires at least two images plus a DEM. The DEM can be produced either by GPS measurements or by interferometry, as long as the time between acquisition of the image pairs is short, which guarantees minimal distortion of the image of the target surface. In principle, three images of the ground area with similar acquisition geometry are often adequate for D-InSAR. The principle for detecting ground movement is quite simple. One interferogram is created from the first two images; this is also called the reference interferogram or topographical interferogram. A second interferogram is created that captures topography plus distortion. Subtracting the latter from the reference interferogram can reveal differential fringes, indicating movement. This three-image technique is called the 3-pass or double-difference method.

Differential fringes that remain in the differential interferogram are a result of SAR range changes of any displaced point on the ground from one interferogram to the next. In the differential interferogram, each fringe corresponds to a single phase cycle and scales directly with the SAR wavelength, which is about 5.6 cm for ERS and RADARSAT. Surface displacement away from the satellite look direction causes an increase in path (translating to phase) difference. Since the signal travels from the SAR antenna to the target and back again, the change in path length is twice the displacement. This means that in differential interferometry one fringe cycle, from −π to +π or one wavelength of path change, corresponds to a displacement relative to the SAR antenna of only half a wavelength (2.8 cm). There are various publications on measuring subsidence, slope stability, landslides, glacier movement, etc. using D-InSAR. In a further advancement of this technique, differential interferometry from satellite SAR ascending and descending passes can be used to estimate 3D ground movement. Research in this area has shown that measurements of 3D ground movement with accuracies comparable to GPS-based measurements can be achieved.
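The half-wavelength-per-fringe relation can be written as a one-line conversion from differential phase to line-of-sight displacement. The sketch below covers magnitudes only; the sign convention (toward or away from the sensor) varies between processors and is left out, and the function name is hypothetical.

```python
import math

def los_displacement_m(phase_rad, wavelength_m):
    """Line-of-sight displacement for a differential phase, two-way path."""
    # The signal travels out and back, so a displacement d changes the path
    # by 2d and the phase by 4*pi*d / wavelength.
    return wavelength_m * phase_rad / (4.0 * math.pi)

wavelength = 0.056   # about 5.6 cm, as quoted for ERS and RADARSAT
for fringes in (0.25, 1.0, 3.0):
    d = los_displacement_m(2.0 * math.pi * fringes, wavelength)
    print(f"{fringes:4.2f} fringe(s) -> {100 * d:.2f} cm")
```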

Tomo-SAR

SAR tomography (Tomo-SAR) is a subfield of multi-baseline interferometry. It was developed to add a third dimension to the imaging, and it uses the beamforming concept. It can be used when the application requires attention to both the magnitude and the phase components of the SAR data during information retrieval. One of the major advantages of Tomo-SAR is that it can separate the contributions of different scatterers, irrespective of how different their motions are.[45] Combining Tomo-SAR with differential interferometry yields a technique named "differential tomography" (Diff-Tomo).[45]

One radar-imaging application of Tomo-SAR is the depiction of ice volume and forest temporal coherence (temporal coherence describes the correlation between waves observed at different moments in time).[45]

Ultra-wideband SAR

Conventional radar systems emit bursts of radio energy with a fairly narrow range of frequencies. A narrow-band channel, by definition, does not allow rapid changes in modulation. Since it is the change in a received signal that reveals the time of arrival of the signal (obviously an unchanging signal would reveal nothing about "when" it reflected from the target), a signal with only a slow change in modulation cannot reveal the distance to the target as well as a signal with a quick change in modulation.

Ultra-wideband (UWB) refers to any radio transmission that uses a very large bandwidth – which is the same as saying it uses very rapid changes in modulation. Although there is no set bandwidth value that qualifies a signal as "UWB", systems using bandwidths greater than a sizable portion of the center frequency (typically about ten percent, or so) are most often called "UWB" systems. A typical UWB system might use a bandwidth of one-third to one-half of its center frequency. For example, some systems use a bandwidth of about 1 GHz centered around 3 GHz.

The two most common methods to increase signal bandwidth used in UWB radar, including SAR, are very short pulses and high-bandwidth chirping. A general description of chirping appears elsewhere in this article. The bandwidth of a chirped system can be as narrow or as wide as the designers desire. Pulse-based UWB systems, being the more common method associated with the term "UWB radar", are described here.

A pulse-based radar system transmits very short pulses of electromagnetic energy, typically only a few waves or less. A very short pulse is, of course, a very rapidly changing signal, and thus occupies a very wide bandwidth. This allows far more accurate measurement of distance, and thus resolution.
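The link between bandwidth and resolution can be made concrete with the commonly used approximation that range resolution is c/(2B). The sketch below applies it to the example of about 1 GHz centered around 3 GHz; the function names are illustrative, and the formula ignores windowing and other processing losses.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def fractional_bandwidth(bandwidth_hz, center_hz):
    """Bandwidth as a fraction of the center frequency."""
    return bandwidth_hz / center_hz

def range_resolution_m(bandwidth_hz):
    """Approximate range resolution; the factor 2 is the two-way path."""
    return C / (2.0 * bandwidth_hz)

frac = fractional_bandwidth(1e9, 3e9)   # 1 GHz centered around 3 GHz
res = range_resolution_m(1e9)
print(f"fractional bandwidth = {frac:.2f}, range resolution = {res:.3f} m")
```

Under this approximation, resolution depends only on the bandwidth, not on whether that bandwidth comes from a very short pulse or from a chirp.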

The main disadvantage of pulse-based UWB SAR is that the transmitting and receiving front-end electronics are difficult to design for high-power applications. Specifically, the transmit duty cycle is so exceptionally low and pulse time so exceptionally short, that the electronics must be capable of extremely high instantaneous power to rival the average power of conventional radars. (Although it is true that UWB provides a notable gain in channel capacity over a narrow band signal because of the relationship of bandwidth in the Shannon–Hartley theorem and because the low receive duty cycle receives less noise, increasing the signal-to-noise ratio, there is still a notable disparity in link budget because conventional radar might be several orders of magnitude more powerful than a typical pulse-based radar.) So pulse-based UWB SAR is typically used in applications requiring average power levels in the microwatt or milliwatt range, and thus is used for scanning smaller, nearer target areas (several tens of meters), or in cases where lengthy integration (over a span of minutes) of the received signal is possible. Note, however, that this limitation is solved in chirped UWB radar systems.

The principal advantages of UWB radar are better resolution (a few millimeters using commercial off-the-shelf electronics) and more spectral information of target reflectivity.

Doppler-beam sharpening

Doppler-beam sharpening commonly refers to the method of processing unfocused real-beam phase history to achieve better resolution than could be achieved by processing the real beam without it. Because the real aperture of the radar antenna is so small (compared to the wavelength in use), the radar energy spreads over a wide area, usually many degrees wide in a direction orthogonal (at right angles) to the direction of travel of the platform (aircraft). Doppler-beam sharpening takes advantage of the motion of the platform in that targets ahead of the platform return a Doppler upshifted signal (slightly higher in frequency) and targets behind the platform return a Doppler downshifted signal (slightly lower in frequency).

The amount of shift varies with the angle forward or backward from the ortho-normal direction. By knowing the speed of the platform, target signal return is placed in a specific angle "bin" that changes over time. Signals are integrated over time and thus the radar "beam" is synthetically reduced to a much smaller aperture – or more accurately (and based on the ability to distinguish smaller Doppler shifts) the system can have hundreds of very "tight" beams concurrently. This technique dramatically improves angular resolution; however, it is far more difficult to take advantage of this technique for range resolution. (See pulse-Doppler radar.)
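The mapping between Doppler shift and angle bin can be sketched with the standard two-way Doppler relation f_d = 2 v sin(θ) / λ, where θ is measured from broadside and is positive toward the direction of travel. The function names and the example numbers (a 200 m/s platform, 3 cm wavelength, 0.5 s integration) are assumptions for illustration only.

```python
import math

def doppler_shift_hz(speed_mps, wavelength_m, angle_rad):
    """Two-way Doppler shift of a stationary target at angle_rad from broadside."""
    return 2.0 * speed_mps * math.sin(angle_rad) / wavelength_m

def angle_from_doppler_rad(doppler_hz, speed_mps, wavelength_m):
    """Invert the relation to place a return in an angle bin."""
    return math.asin(doppler_hz * wavelength_m / (2.0 * speed_mps))

def sharpened_beamwidth_rad(speed_mps, wavelength_m, integration_s, angle_rad=0.0):
    """Angular width of one Doppler bin.

    Integrating for T seconds resolves Doppler to about 1/T, and
    d(f_d)/d(theta) = 2 v cos(theta) / lambda.
    """
    return wavelength_m / (2.0 * speed_mps * integration_s * math.cos(angle_rad))

v, lam = 200.0, 0.03
fd_fwd = doppler_shift_hz(v, lam, math.radians(2.0))    # target ahead: upshift
fd_aft = doppler_shift_hz(v, lam, math.radians(-2.0))   # target behind: downshift
recovered_deg = math.degrees(angle_from_doppler_rad(fd_fwd, v, lam))
beam = sharpened_beamwidth_rad(v, lam, 0.5)
print(fd_fwd, fd_aft, recovered_deg, beam)
```

Longer integration gives narrower bins, which is the sense in which the beam is "sharpened".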

Chirped (pulse-compressed) radars

A common technique for many radar systems (usually also found in SAR systems) is to "chirp" the signal. In a "chirped" radar, the pulse is allowed to be much longer. A longer pulse allows more energy to be emitted, and hence received, but usually hinders range resolution. But in a chirped radar, this longer pulse also has a frequency shift during the pulse (hence the chirp or frequency shift). When the "chirped" signal is returned, it must be correlated with the sent pulse. Classically, in analog systems, it is passed to a dispersive delay line (often a surface acoustic wave device) that has the property of varying velocity of propagation based on frequency. This technique "compresses" the pulse in time – thus having the effect of a much shorter pulse (improved range resolution) while having the benefit of longer pulse length (much more signal returned). Newer systems use digital pulse correlation to find the pulse return in the signal.
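Digital pulse correlation can be illustrated with a small NumPy sketch: a linear FM chirp is placed in a longer record at a known delay, and correlating the record with the transmitted pulse collapses the long pulse into a narrow peak at that delay. All parameters (sample rate, bandwidth, pulse length, delay) are arbitrary illustrative choices, and the echo is noise-free.

```python
import numpy as np

fs = 100e6                 # sample rate, Hz (assumed)
n_pulse = 1000             # pulse length in samples (10 microseconds)
bandwidth = 20e6           # swept bandwidth, Hz (assumed)
pulse_s = n_pulse / fs
t = np.arange(n_pulse) / fs

# Complex baseband linear FM chirp, frequency sweeping over `bandwidth`.
rate = bandwidth / pulse_s
chirp = np.exp(1j * np.pi * rate * (t - pulse_s / 2.0) ** 2)

# Received record: one point-target echo starting at sample `delay`.
n_total, delay = 4000, 1500
echo = np.zeros(n_total, dtype=complex)
echo[delay:delay + n_pulse] = chirp

# Matched filter: np.correlate conjugates its second argument.
compressed = np.correlate(echo, chirp, mode="valid")
magnitude = np.abs(compressed)
peak = int(np.argmax(magnitude))

# Samples above half the peak: a rough width of the compressed pulse.
width_samples = int(np.count_nonzero(magnitude > 0.5 * magnitude[peak]))
print(peak, width_samples, n_pulse)
```

The compressed width is on the order of fs / bandwidth samples, far shorter than the transmitted pulse, while the peak height reflects the energy of the whole pulse.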

Typical operation

Data collection

In a typical SAR application, a single radar antenna is attached to an aircraft or spacecraft such that a substantial component of the antenna's radiated beam has a wave-propagation direction perpendicular to the flight-path direction. The beam is allowed to be broad in the vertical direction so it will illuminate the terrain from nearly beneath the aircraft out toward the horizon.

Image resolution and bandwidth

Resolution in the range dimension of the image is accomplished by creating pulses which define very short time intervals, either by emitting short pulses consisting of a carrier frequency and the necessary sidebands, all within a certain bandwidth, or by using longer "chirp pulses" in which frequency varies (often linearly) with time within that bandwidth. The differing times at which echoes return allow points at different distances to be distinguished.

Image resolution of SAR in its range coordinate (expressed in image pixels per distance unit) is mainly proportional to the radio bandwidth of whatever type of pulse is used. In the cross-range coordinate, the similar resolution is mainly proportional to the bandwidth of the Doppler shift of the signal returns within the beamwidth. Since Doppler frequency depends on the angle of the scattering point's direction from the broadside direction, the Doppler bandwidth available within the beamwidth is the same at all ranges. Hence the theoretical spatial resolution limits in both image dimensions remain constant with variation of range. However, in practice, both the errors that accumulate with data-collection time and the particular techniques used in post-processing further limit cross-range resolution at long ranges.
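The range independence of cross-range resolution can be checked with the usual stripmap approximations: the real beam has angular width of about λ/D for an antenna of length D, so the synthetic aperture grows in proportion to range, and the resulting resolution is about D/2. The sketch below is illustrative; the function names and the 10 m antenna and 5.6 cm wavelength are assumptions.

```python
def synthetic_aperture_length_m(range_m, wavelength_m, antenna_len_m):
    """Distance flown while a point stays inside the real beam (~ R * lambda / D)."""
    return range_m * wavelength_m / antenna_len_m

def cross_range_resolution_m(range_m, wavelength_m, antenna_len_m):
    """Resolution of a focused aperture of length L_sa: lambda * R / (2 * L_sa)."""
    l_sa = synthetic_aperture_length_m(range_m, wavelength_m, antenna_len_m)
    return wavelength_m * range_m / (2.0 * l_sa)

def doppler_bandwidth_hz(speed_mps, antenna_len_m):
    """Doppler spread across the beam (~ 2 v / D); note that range does not appear."""
    return 2.0 * speed_mps / antenna_len_m

near = cross_range_resolution_m(10e3, 0.056, 10.0)
far = cross_range_resolution_m(800e3, 0.056, 10.0)
via_doppler = 7500.0 / doppler_bandwidth_hz(7500.0, 10.0)  # speed / bandwidth
print(near, far, via_doppler)
```

Both views give the same limit of roughly half the antenna length, which is why the theoretical resolution does not degrade with distance.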

Image resolution and beamwidth

The total signal is that from a beamwidth-sized patch of the ground. To produce a beam that is narrow in the cross-range direction, diffraction effects require that the antenna be wide in that dimension. Therefore, distinguishing co-range points from each other simply by the strengths of returns that persist for as long as they are within the beamwidth is difficult with aircraft-carryable antennas, because their beams can have linear widths only about two orders of magnitude (hundreds of times) smaller than the range. (Spacecraft-carryable ones can do 10 or more times better.) However, if both the amplitude and the phase of returns are recorded, then the portion of that multi-target return that was scattered radially from any smaller scene element can be extracted by phase-vector correlation of the total return with the form of the return expected from each such element.

The process can be thought of as combining the series of spatially distributed observations as if all had been made simultaneously with an antenna as long as the beamwidth and focused on that particular point. The "synthetic aperture" simulated at maximum system range by this process not only is longer than the real antenna, but, in practical applications, it is much longer than the radar aircraft, and tremendously longer than the radar spacecraft.

Although some references to SARs have characterized them as "radar telescopes", their actual optical analogy is the microscope, the detail in their images being smaller than the length of the synthetic aperture. In radar-engineering terms, while the target area is in the "far field" of the illuminating antenna, it is in the "near field" of the simulated one. Careful design and operation can accomplish resolution of items smaller than a millionth of the range, for example, 30 cm at 300 km, or about one foot at nearly 200 miles (320 km).

Pulse transmission and reception

The conversion of return delay time to geometric range can be very accurate because of the natural constancy of the speed and direction of propagation of electromagnetic waves. However, for an aircraft flying through the never-uniform and never-quiescent atmosphere, the relating of pulse transmission and reception times to successive geometric positions of the antenna must be accompanied by constant adjusting of the return phases to account for sensed irregularities in the flight path. SARs in spacecraft avoid that atmosphere problem, but still must make corrections for known antenna movements due to rotations of the spacecraft, even those that are reactions to movements of onboard machinery. Locating a SAR in a crewed space vehicle may require that the humans carefully remain motionless relative to the vehicle during data collection periods.

Returns from scatterers within the range extent of any image are spread over a matching time interval. The inter-pulse period must be long enough to allow farthest-range returns from any pulse to finish arriving before the nearest-range ones from the next pulse begin to appear, so that those do not overlap each other in time. On the other hand, the interpulse rate must be fast enough to provide sufficient samples for the desired across-range (or across-beam) resolution. When the radar is to be carried by a high-speed vehicle and is to image a large area at fine resolution, those conditions may clash, leading to what has been called SAR's ambiguity problem. The same considerations apply to "conventional" radars also, but this problem occurs significantly only when resolution is so fine as to be available only through SAR processes. Since the basis of the problem is the information-carrying capacity of the single signal-input channel provided by one antenna, the only solution is to use additional channels fed by additional antennas. The system then becomes a hybrid of a SAR and a phased array, sometimes being called a Vernier array.
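The two competing timing conditions can be expressed as a lower and an upper bound on the pulse repetition frequency (PRF). The sketch below uses simplified textbook bounds: the PRF must be at least the Doppler bandwidth (about 2v/D for an antenna of length D) and at most the reciprocal of the time needed to receive echoes from the whole slant-range extent of the swath. The function names and example numbers are assumptions, and real designs include further margins and timing constraints.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def prf_lower_bound_hz(speed_mps, antenna_len_m):
    """Sample the Doppler bandwidth (~ 2 v / D) without aliasing."""
    return 2.0 * speed_mps / antenna_len_m

def prf_upper_bound_hz(swath_slant_extent_m, pulse_length_s=0.0):
    """Echoes from one pulse must clear before those of the next arrive."""
    echo_window_s = 2.0 * swath_slant_extent_m / C + pulse_length_s
    return 1.0 / echo_window_s

def prf_window_hz(speed_mps, antenna_len_m, swath_slant_extent_m):
    """Return (low, high) if a usable PRF exists, otherwise None."""
    low = prf_lower_bound_hz(speed_mps, antenna_len_m)
    high = prf_upper_bound_hz(swath_slant_extent_m)
    return (low, high) if low <= high else None

feasible = prf_window_hz(7500.0, 10.0, 50e3)    # long antenna, modest swath
clash = prf_window_hz(7500.0, 2.0, 100e3)       # fine resolution, wide swath
print(feasible, clash)
```

The second case returns None: a short antenna (finer cross-range resolution) on a fast platform with a wide swath leaves no PRF that satisfies both bounds, which is the clash described above.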

Data processing

Combining the series of observations requires significant computational resources, usually using Fourier transform techniques. The high digital computing speed now available allows such processing to be done in near-real time on board a SAR aircraft. (There is necessarily a minimum time delay until all parts of the signal have been received.) The result is a map of radar reflectivity, including both amplitude and phase.

Amplitude data

The amplitude information, when shown in a map-like display, gives information about ground cover in much the same way that a black-and-white photo does. Variations in processing may also be done in either vehicle-borne stations or ground stations for various purposes, so as to accentuate certain image features for detailed target-area analysis.

Phase data

Although the phase information in an image is generally not made available to a human observer of an image display device, it can be preserved numerically, and sometimes allows certain additional features of targets to be recognized.

Coherence speckle

Unfortunately, the phase differences between adjacent image picture elements ("pixels") also produce random interference effects called "coherence speckle", which is a sort of graininess with dimensions on the order of the resolution, causing the concept of resolution to take on a subtly different meaning. This effect is the same as is apparent both visually and photographically in laser-illuminated optical scenes. The scale of that random speckle structure is governed by the size of the synthetic aperture in wavelengths, and cannot be finer than the system's resolution. Speckle structure can be subdued at the expense of resolution.
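The trade between speckle and resolution can be demonstrated with a small simulation. It assumes a uniform scene with fully developed speckle, modeled as independent circular complex Gaussian pixels, and reduces the speckle by averaging blocks of intensity pixels (often called multilooking). The names and the 2×2 block size are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 512

# Uniform scene: each pixel is a sum of many random scatterers, modeled
# as a circular complex Gaussian with unit mean intensity.
field = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2.0)
intensity = np.abs(field) ** 2

def contrast(img):
    """Speckle contrast: standard deviation divided by mean."""
    return float(img.std() / img.mean())

def multilook(img, looks_per_axis):
    """Average non-overlapping k-by-k blocks, trading resolution for smoothness."""
    k = looks_per_axis
    rows, cols = img.shape[0] // k, img.shape[1] // k
    blocks = img[:rows * k, :cols * k].reshape(rows, k, cols, k)
    return blocks.mean(axis=(1, 3))

single_look = contrast(intensity)        # close to 1 for fully developed speckle
averaged = multilook(intensity, 2)       # 4 looks, half the resolution per axis
four_look = contrast(averaged)           # close to 1 / sqrt(4)
print(single_look, four_look, averaged.shape)
```

Averaging N independent looks lowers the contrast by about the square root of N, at the cost of coarser pixels.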

Optical holography

Before rapid digital computers were available, the data processing was done using an optical holography technique. The analog radar data were recorded as a holographic interference pattern on photographic film at a scale permitting the film to preserve the signal bandwidths (for example, 1:1,000,000 for a radar using a 0.6-meter wavelength). Then light using, for example, 0.6-micrometer waves (as from a helium–neon laser) passing through the hologram could project a terrain image at a scale recordable on another film at reasonable processor focal distances of around a meter. This worked because both SAR and phased arrays are fundamentally similar to optical holography, but using microwaves instead of light waves. The "optical data-processors" developed for this radar purpose[46][47][48] were the first effective analog optical computer systems, and were, in fact, devised before the holographic technique was fully adapted to optical imaging. Because of the different sources of range and across-range signal structures in the radar signals, optical data-processors for SAR included not only both spherical and cylindrical lenses, but sometimes conical ones.

Image appearance

The following considerations apply also to real-aperture terrain-imaging radars, but are more consequential when resolution in range is matched to a cross-beam resolution that is available only from a SAR.

Range, cross-range, and angles

The two dimensions of a radar image are range and cross-range. Radar images of limited patches of terrain can resemble oblique photographs, but not ones taken from the location of the radar. This is because the range coordinate in a radar image is perpendicular to the vertical-angle coordinate of an oblique photo. The apparent entrance-pupil position (or camera center) for viewing such an image is therefore not as if at the radar, but as if at a point from which the viewer's line of sight is perpendicular to the slant-range direction connecting radar and target, with slant-range increasing from top to bottom of the image.

Because slant ranges to level terrain vary in vertical angle, each elevation of such terrain appears as a curved surface, specifically a hyperbolic cosine one. Verticals at various ranges are perpendiculars to those curves. The viewer's apparent looking directions are parallel to the curve's "hypcos" axis. Items directly beneath the radar appear as if optically viewed horizontally (i.e., from the side) and those at far ranges as if optically viewed from directly above. These curvatures are not evident unless large extents of near-range terrain, including steep slant ranges, are being viewed.

Visibility

When viewed as specified above, fine-resolution radar images of small areas can appear most nearly like familiar optical ones, for two reasons. The first reason is easily understood by imagining a flagpole in the scene. The slant-range to its upper end is less than that to its base. Therefore, the pole can appear correctly top-end up only when viewed in the above orientation. Secondly, the radar illumination then being downward, shadows are seen in their most-familiar "overhead-lighting" direction.

The image of the pole's top will overlay that of some terrain point which is on the same slant range arc but at a shorter horizontal range ("ground-range"). Images of scene surfaces which faced both the illumination and the apparent eyepoint will have geometries that resemble those of an optical scene viewed from that eyepoint. However, slopes facing the radar will be foreshortened and ones facing away from it will be lengthened from their horizontal (map) dimensions. The former will therefore be brightened and the latter dimmed.
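The flagpole case can be worked through numerically. The sketch assumes a flat Earth, with the radar at height H above level terrain and the pole at ground range G; it computes the slant ranges to the pole's base and top, and the ground range of the terrain point that shares the top's slant range. The function names and numbers are illustrative.

```python
import math

def slant_range_m(radar_height_m, ground_range_m, target_height_m=0.0):
    """Straight-line distance from the radar to a point over flat terrain."""
    return math.hypot(radar_height_m - target_height_m, ground_range_m)

def overlay_ground_range_m(radar_height_m, ground_range_m, target_height_m):
    """Ground range of the terrain point on the same slant-range arc as the target top."""
    r_top = slant_range_m(radar_height_m, ground_range_m, target_height_m)
    if r_top < radar_height_m:
        raise ValueError("target top is nearer than the ground point directly below the radar")
    return math.sqrt(r_top ** 2 - radar_height_m ** 2)

H, G, h = 5000.0, 8000.0, 30.0      # radar height, pole ground range, pole height
r_base = slant_range_m(H, G)
r_top = slant_range_m(H, G, h)
g_overlay = overlay_ground_range_m(H, G, h)
print(r_base - r_top, G - g_overlay)
```

The top is nearer in slant range than the base, so its image falls on terrain at a shorter ground range; the displacement grows as the look direction steepens.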

Returns from slopes steeper than perpendicular to slant range will be overlaid on those of lower-elevation terrain at a nearer ground-range, both being visible but intermingled. This is especially the case for vertical surfaces like the walls of buildings. Another viewing inconvenience that arises when a surface is steeper than perpendicular to the slant range is that it is then illuminated on one face but "viewed" from the reverse face. Then one "sees", for example, the radar-facing wall of a building as if from the inside, while the building's interior and the rear wall (that nearest to, hence expected to be optically visible to, the viewer) have vanished, since they lack illumination, being in the shadow of the front wall and the roof. Some return from the roof may overlay that from the front wall, and both of those may overlay return from terrain in front of the building. The visible building shadow will include those of all illuminated items. Long shadows may exhibit blurred edges due to the illuminating antenna's movement during the "time exposure" needed to create the image.

Mirroring artefacts and shadows

Surfaces that we usually consider rough will, if that roughness consists of relief less than the radar wavelength, behave as smooth mirrors, showing, beyond such a surface, additional images of items in front of it. Those mirror images will appear within the shadow of the mirroring surface, sometimes filling the entire shadow, thus preventing recognition of the shadow.

The direction of overlay of any scene point is not directly toward the radar, but toward that point of the SAR's current path direction that is nearest to the target point. If the SAR is "squinting" forward or aft away from the exactly broadside direction, then the illumination direction, and hence the shadow direction, will not be opposite to the overlay direction, but slanted to right or left from it. An image will appear with the correct projection geometry when viewed so that the overlay direction is vertical, the SAR's flight-path is above the image, and range increases somewhat downward.

Objects in motion

Objects in motion within a SAR scene alter the Doppler frequencies of the returns. Such objects therefore appear in the image at locations offset in the across-range direction by amounts proportional to the range-direction component of their velocity. Road vehicles may be depicted off the roadway and therefore not recognized as road traffic items. Trains appearing away from their tracks are more easily properly recognized by their length parallel to known trackage as well as by the absence of an equal length of railbed signature and of some adjacent terrain, both having been shadowed by the train. While images of moving vessels can be offset from the line of the earlier parts of their wakes, the more recent parts of the wake, which still partake of some of the vessel's motion, appear as curves connecting the vessel image to the relatively quiescent far-aft wake. In such identifiable cases, speed and direction of the moving items can be determined from the amounts of their offsets. The along-track component of a target's motion causes some defocus. Random motions such as that of wind-driven tree foliage, vehicles driven over rough terrain, or humans or other animals walking or running generally render those items not focusable, resulting in blurring or even effective invisibility.
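The cross-range displacement of a moving target is often approximated as the slant range multiplied by the ratio of the target's range-direction speed to the platform speed. The sketch below applies that approximation and its inverse. It assumes straight, constant-speed, broadside geometry; the function names are illustrative, and the sign of the offset depends on the look direction and processing convention.

```python
def azimuth_offset_m(slant_range_m, radial_speed_mps, platform_speed_mps):
    """Approximate cross-range displacement of a target moving in range."""
    return slant_range_m * radial_speed_mps / platform_speed_mps

def radial_speed_from_offset_mps(offset_m, slant_range_m, platform_speed_mps):
    """Invert the relation to estimate range-direction speed from a measured offset."""
    return offset_m * platform_speed_mps / slant_range_m

# Airborne example: a vehicle with 10 m/s toward or away from the radar,
# 10 km slant range, 200 m/s platform speed.
offset = azimuth_offset_m(10_000.0, 10.0, 200.0)
speed = radial_speed_from_offset_mps(offset, 10_000.0, 200.0)
print(offset, speed)
```

Even modest target speeds give offsets of hundreds of meters in this example, which is why road vehicles can appear well away from the roadway.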

These considerations, along with the speckle structure due to coherence, take some getting used to in order to correctly interpret SAR images. To assist in that, large collections of significant target signatures have been accumulated by performing many test flights over known terrains and cultural objects.

History

Relationship to phased arrays

A technique closely related to SAR uses an array (referred to as a "phased array") of real antenna elements spatially distributed over either one or two dimensions perpendicular to the radar-range dimension. These physical arrays are truly synthetic ones, indeed being created by synthesis of a collection of subsidiary physical antennas. Their operation need not involve motion relative to targets. All elements of these arrays receive simultaneously in real time, and the signals passing through them can be individually subjected to controlled shifts of the phases of those signals. One result can be to respond most strongly to radiation received from a specific small scene area, focusing on that area to determine its contribution to the total signal received. The coherently detected set of signals received over the entire array aperture can be replicated in several data-processing channels and processed differently in each. The set of responses thus traced to different small scene areas can be displayed together as an image of the scene.

In comparison, a SAR's (commonly) single physical antenna element gathers signals at different positions at different times. When the radar is carried by an aircraft or an orbiting vehicle, those positions are functions of a single variable, distance along the vehicle's path, which is a single mathematical dimension (not necessarily the same as a linear geometric dimension). The signals are stored, thus becoming functions, no longer of time, but of recording locations along that dimension. When the stored signals are read out later and combined with specific phase shifts, the result is the same as if the recorded data had been gathered by an equally long and shaped phased array. What is thus synthesized is a set of signals equivalent to what could have been received simultaneously by such an actual large-aperture (in one dimension) phased array. The SAR simulates (rather than synthesizes) that long one-dimensional phased array. Although the term in the title of this article has thus been incorrectly derived, it is now firmly established by half a century of usage.

While operation of a phased array is readily understood as a completely geometric technique, the fact that a synthetic aperture system gathers its data as it (or its target) moves at some speed means that phases which varied with the distance traveled originally varied with time, hence constituted temporal frequencies. Temporal frequencies being the variables commonly used by radar engineers, their analyses of SAR systems are usually (and very productively) couched in such terms. In particular, the variation of phase during flight over the length of the synthetic aperture is seen as a sequence of Doppler shifts of the received frequency from that of the transmitted frequency. Once the received data have been recorded and thus have become timeless, the SAR data-processing situation is also understandable as a special type of phased array, treatable as a completely geometric process.

The core of both the SAR and the phased array techniques is that the distances that radar waves travel to and back from each scene element consist of some integer number of wavelengths plus some fraction of a "final" wavelength. Those fractions cause differences between the phases of the re-radiation received at various SAR or array positions. Coherent detection is needed to capture the signal phase information in addition to the signal amplitude information. That type of detection requires finding the differences between the phases of the received signals and the simultaneous phase of a well-preserved sample of the transmitted illumination.
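The split of the round-trip path into whole wavelengths plus a fraction can be sketched directly. The function names are illustrative, and the phase is taken as positive and proportional to the fractional part, which is one of several sign conventions in use.

```python
import math

TWO_PI = 2.0 * math.pi

def round_trip_phase(range_m, wavelength_m):
    """Return (whole wavelengths, phase in radians of the fractional remainder)."""
    cycles = 2.0 * range_m / wavelength_m      # out and back
    whole = math.floor(cycles)
    return int(whole), TWO_PI * (cycles - whole)

def wrapped_difference_rad(a_rad, b_rad):
    """Phase difference a - b wrapped into [0, 2*pi)."""
    return (a_rad - b_rad) % TWO_PI

lam = 0.056
r0 = 1000.01
_, p0 = round_trip_phase(r0, lam)
_, p_quarter = round_trip_phase(r0 + lam / 4.0, lam)   # path grows by lam / 2
_, p_half = round_trip_phase(r0 + lam / 2.0, lam)      # path grows by one wavelength

d_quarter = wrapped_difference_rad(p_quarter, p0)
d_half = wrapped_difference_rad(p_half, p0)
print(d_quarter, min(d_half, TWO_PI - d_half))
```

A range change of a quarter wavelength shifts the phase by half a cycle, while a change of half a wavelength returns the same phase; only the fractional part is observable, which is why coherent detection against a stable reference is required.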

See also

- Alaska Satellite Facility

- Aperture synthesis

- Beamforming

- Earth observation satellite

- High Resolution Wide Swath SAR imaging

- Interferometric synthetic aperture radar (InSAR)

- Inverse synthetic aperture radar (ISAR)

- Magellan space probe

- Pulse-Doppler radar

- Radar MASINT

- Remote sensing

- SAR Lupe

- Seasat

- Sentinel-1

- Speckle noise

- Synthetic aperture sonar

- Synthetic Aperture Ultrasound

- Synthetic array heterodyne detection (SAHD)

- Synthetically thinned aperture radar

- TerraSAR-X

- Terrestrial SAR Interferometry (TInSAR)

- Very long baseline interferometry (VLBI)

- Wave radar

References

- ↑ Kirscht, Martin, and Carsten Rinke. "3D Reconstruction of Buildings and Vegetation from Synthetic Aperture Radar (SAR) Images." MVA. 1998.

- ↑ "Introduction to Airborne RADAR", G. W. Stimson, Chapter 1 (13 pp).

- ↑ 3.0 3.1 3.2 3.3 Tomographic SAR. Gianfranco Fornaro. National Research Council (CNR). Institute for Electromagnetic Sensing of the Environment (IREA) Via Diocleziano, 328, I-80124 Napoli, ITALY

- ↑ Oliver, C. and Quegan, S. Understanding Synthetic Aperture Radar Images. Artech House, Boston, 1998.

- ↑ 5.00 5.01 5.02 5.03 5.04 5.05 5.06 5.07 5.08 5.09 5.10 5.11 Synthetic Aperture Radar Imaging Using Spectral Estimation Techniques. Shivakumar Ramakrishnan, Vincent Demarcus, Jerome Le Ny, Neal Patwari, Joel Gussy. University of Michigan.

- ↑ "Science Engineering & Sustainability: Bridge monitoring with satellite data SAR". https://sciengsustainability.blogspot.com/2017/02/bridge-monitoring-with-satellite-data.html.

- ↑ Wu, Xuan; Zhang, Zhijie; Xiong, Shengqing; Zhang, Wanchang; Tang, Jiakui; Li, Zhenghao; An, Bangsheng; Li, Rui (12 April 2023). "A Near-Real-Time Flood Detection Method Based on Deep Learning and SAR Images" (in en). Remote Sensing 15 (8): 2046. doi:10.3390/rs15082046. ISSN 2072-4292. Bibcode: 2023RemS...15.2046W.

- ↑ Garg, Shubhika; Feinstein, Ben; Timnat, Shahar; Batchu, Vishal; Dror, Gideon; Rosenthal, Adi Gerzi; Gulshan, Varun (7 November 2023). "Cross-modal distillation for flood extent mapping" (in en). Environmental Data Science 2: e37. doi:10.1017/eds.2023.34. ISSN 2634-4602.

- ↑ A. Maity (2016). "Supervised Classification of RADARSAT-2 Polarimetric Data for Different Land Features". arXiv:1608.00501 [cs.CV].

- ↑ 10.0 10.1 10.2 Moreira, Alberto; Prats-Iraola, Pau; Younis, Marwan; Krieger, Gerhard; Hajnsek, Irena; P. Papathanassiou, Konstantinos (2013). "A tutorial on synthetic aperture radar". IEEE Geoscience and Remote Sensing Magazine 1 (1): 6–43. doi:10.1109/MGRS.2013.2248301. Bibcode: 2013IGRSM...1a...6M. https://elib.dlr.de/82313/1/SAR-Tutorial-March-2013.pdf.

- ↑ R. Bamler; P. Hartl (August 1998). "Synthetic aperture radar interferometry". Inverse Problems 14 (4): R1–R54. doi:10.1088/0266-5611/14/4/001. Bibcode: 1998InvPr..14R...1B.

- ↑ 12.0 12.1 G. Fornaro, G. Franceschetti, "SAR Interferometry", Chapter IV in G. Franceschetti, R. Lanari, Synthetic Aperture Radar Processing, CRC-PRESS, Boca Raton, Marzo 1999.

- ↑ 13.0 13.1 Fornaro, Gianfranco; Pascazio, Vito (2014). "SAR Interferometry and Tomography: Theory and Applications". Academic Press Library in Signal Processing: Volume 2 – Communications and Radar Signal Processing. 2. pp. 1043–1117. doi:10.1016/B978-0-12-396500-4.00020-X. ISBN 9780123965004.

- ↑ Reigber, Andreas; Lombardini, Fabrizio; Viviani, Federico; Nannini, Matteo; Martinez Del Hoyo, Antonio (2015). "Three-dimensional and higher-order imaging with tomographic SAR: Techniques, applications, issues". 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS). pp. 2915–2918. doi:10.1109/IGARSS.2015.7326425. ISBN 978-1-4799-7929-5.

- ↑ Allen, Chris. "Synthetic-Aperture Radar (SAR) Implementation". https://people.eecs.ku.edu/~callen58/826/826_SAR_Implementation_03.pptx.

- ↑ Xiaoxiang Zhu, "Spectral Estimation for Synthetic Aperture Radar Tomography", Earth Oriented Space Science and Technology – ESPACE, 19 September 2008.

- ↑ 17.0 17.1 DeGraaf, S. R. (May 1998). "SAR Imaging via Modern 2-D Spectral Estimation Methods". IEEE Transactions on Image Processing 7 (5): 729–761. doi:10.1109/83.668029. PMID 18276288. Bibcode: 1998ITIP....7..729D.

- ↑ D. Rodriguez. "A computational Kronecker-core array algebra SAR raw data generation modeling system". Signals, Systems and Computers, 2001. Conference Record of the Thirty-Fifth Asilomar Conference on Year: 2001 1.

- ↑ 19.0 19.1 T. Gough, Peter (June 1994). "A Fast Spectral Estimation Algorithm Based on the FFT". IEEE Transactions on Signal Processing 42 (6): 1317–1322. doi:10.1109/78.286949. Bibcode: 1994ITSP...42.1317G.

- ↑ 20.0 20.1 Datcu, Mihai; Popescu, Anca; Gavat, Inge (2008). "Complex SAR image characterization using space variant spectral analysis". 2008 IEEE Radar Conference.

- ↑ J. Capon (August 1969). "High resolution frequency wave-number spectrum analysis". Proceedings of the IEEE 57 (8): 1408–1418. doi:10.1109/PROC.1969.7278.

- ↑ 22.0 22.1 22.2 22.3 A. Jakobsson; S. L. Marple; P. Stoica (2000). "Computationally efficient two-dimensional Capon spectrum analysis". IEEE Transactions on Signal Processing 48 (9): 2651–2661. doi:10.1109/78.863072. Bibcode: 2000ITSP...48.2651J.

- ↑ I. Yildirim; N. S. Tezel; I. Erer; B. Yazgan. "A comparison of non-parametric spectral estimators for SAR imaging". Recent Advances in Space Technologies, 2003. RAST '03. International Conference on. Proceedings of Year: 2003.

- ↑ "Iterative realization of the 2-D Capon method applied in SAR image processing", IET International Radar Conference 2015.

- ↑ 25.0 25.1 R. Alty, Stephen; Jakobsson, Andreas; G. Larsson, Erik. "Efficient implementation of the time-recursive Capon and APES spectral estimators". Signal Processing Conference, 2004 12th European.

- ↑ Li, Jian; P. Stoica (1996). "An adaptive filtering approach to spectral estimation and SAR imaging". IEEE Transactions on Signal Processing 44 (6): 1469–1484. doi:10.1109/78.506612. Bibcode: 1996ITSP...44.1469L.

- ↑ Li, Jian; E. G. Larsson; P. Stoica (2002). "Amplitude spectrum estimation for two-dimensional gapped data". IEEE Transactions on Signal Processing 50 (6): 1343–1354. doi:10.1109/tsp.2002.1003059. Bibcode: 2002ITSP...50.1343L.

- ↑ 28.0 28.1 28.2 28.3 28.4 Moreira, Alberto. "Synthetic Aperture Radar: Principles and Applications". https://earth.esa.int/documents/10174/642943/6-LTC2013-SAR-Moreira.pdf.

- ↑ 29.0 29.1 29.2 29.3 29.4 29.5 Duersch, Michael. "Backprojection for Synthetic Aperture Radar". BYU ScholarsArchive.

- ↑ 30.0 30.1 Zhuo, LI; Chungsheng, LI (2011). "Back projection algorithm for high resolution GEO-SAR image formation". 2011 IEEE International Geoscience and Remote Sensing Symposium. 336–339. doi:10.1109/IGARSS.2011.6048967. ISBN 978-1-4577-1003-2.

- ↑ Xiaoling, Zhang; Chen, Cheng. "A new super-resolution 3D-SAR imaging method based on MUSIC algorithm". 2011 IEEE RadarCon (RADAR).

- ↑ A. F. Yegulalp (1999). "Fast backprojection algorithm for synthetic aperture radar". The Record of the 1999 IEEE Radar Conference.

- ↑ Mark T. Crockett. "An Introduction to Synthetic Aperture Radar: A High-Resolution Alternative to Optical Imaging".

- ↑ Bauck, Jerald (19 October 2019). A Rationale for Backprojection in Spotlight Synthetic Aperture Radar Image Formation (Report). doi:10.31224/osf.io/5wv2d. https://engrxiv.org/preprint/view/698/1540.

- ↑ C. Romero, High Resolution Simulation of Synthetic Aperture Radar Imaging. 2010. [Online]. Available: http://digitalcommons.calpoly.edu/cgi/viewcontent.cgi?article=1364&context=theses. Accessed: 14 November 2016.

- ↑ 36.0 36.1 36.2 36.3 Y. Yamaguchi; T. Moriyama; M. Ishido; H. Yamada (2005). "Four-component scattering model for polarimetric SAR image decomposition". IEEE Transactions on Geoscience and Remote Sensing 43 (8): 1699. doi:10.1109/TGRS.2005.852084. Bibcode: 2005ITGRS..43.1699Y.

- ↑ Woodhouse, I. H. (2009). Introduction to Microwave Remote Sensing. CRC Press, Taylor & Francis Group, Special Indian Edition.

- ↑ 38.0 38.1 38.2 A. Freeman; S. L. Durden (May 1998). "A three-component scattering model for polarimetric SAR data". IEEE Transactions on Geoscience and Remote Sensing 36 (3): 963–973. doi:10.1109/36.673687. Bibcode: 1998ITGRS..36..963F.

- ↑ "PolSARpro v6.0 (Biomass Edition) Toolbox". ESA. https://earth.esa.int/documents/653194/656796/Polarimetric_Decompositions.pdf.

- ↑ Gianfranco Fornaro; Diego Reale; Francesco Serafino (2009). "Four-Dimensional SAR Imaging for Height Estimation and Monitoring of Single and Double Scatterers". IEEE Transactions on Geoscience and Remote Sensing 47 (1).

- ↑ Haijian Zhang; Wen Yang; Jiayu Chen; Hong Sun (2006). "Improved Classification of Polarimetric SAR Data Based on Four-component Scattering Model". 2006 CIE International Conference on Radar.

- ↑ Bathke, H.; Shirzaei, M.; Walter, T. R. (2011). "Inflation and deflation at the steep‐sided Llaima stratovolcano (Chile) detected by using InSAR". Geophys. Res. Lett. 38 (10): L10304. doi:10.1029/2011GL047168. Bibcode: 2011GeoRL..3810304B. http://gfzpublic.gfz-potsdam.de/pubman/item/escidoc:243727.

- ↑ Dawson, J.; Cummins, P.; Tregoning, P.; Leonard, M. (2008). "Shallow intraplate earthquakes in Western Australia observed by Interferometric Synthetic Aperture Radar". J. Geophys. Res. 113 (B11): B11408. doi:10.1029/2008JB005807. Bibcode: 2008JGRB..11311408D.

- ↑ "Volcano Monitoring: Using InSAR to see changes in volcano shape". Incorporated Research Institutions for Seismology. https://www.iris.edu/hq/inclass/animation/volcano_monitoring_using_insar_to_see_changes_in_volcano_shape.

- ↑ 45.0 45.1 45.2 Lombardini, Fabrizio; Viviani, Federico (2014). "Multidimensional SAR Tomography: Advances for Urban and Prospects for Forest/Ice Applications". 2014 11th European Radar Conference. pp. 225–228. doi:10.1109/EuRAD.2014.6991248. ISBN 978-2-8748-7037-8.

- ↑ "Synthetic Aperture Radar", L. J. Cutrona, Chapter 23 (25 pp) of the McGraw Hill "Radar Handbook", 1970. (Written while optical data processing was still the only workable method, by the person who first led that development.)

- ↑ "A short history of the Optics Group of the Willow Run Laboratories", Emmett N. Leith, in Trends in Optics: Research, Development, and Applications (book), Anna Consortini, Academic Press, San Diego: 1996.

- ↑ "Sighted Automation and Fine Resolution Imaging", W. M. Brown, J. L. Walker, and W. R. Boario, IEEE Transactions on Aerospace and Electronic Systems, Vol. 40, No. 4, October 2004, pp 1426–1445.

Bibliography

- Curlander, John C.; McDonough, Robert N. Synthetic Aperture Radar: Systems and Signal Processing. Remote Sensing and Image Processing. Wiley.

- Gart, Jason H (2006). Electronics and Aerospace Industry in Cold War Arizona, 1945–1968: Motorola, Hughes Aircraft, Goodyear Aircraft (Thesis). Arizona State University.

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K. P. (2013). "A tutorial on synthetic aperture radar". IEEE Geoscience and Remote Sensing Magazine 1 (1): 6–43. doi:10.1109/MGRS.2013.2248301. Bibcode: 2013IGRSM...1a...6M. https://elib.dlr.de/82313/1/SAR-Tutorial-March-2013.pdf.

- Woodhouse, Iain H (2006). Introduction to Microwave Remote Sensing. CRC Press.

External links

- InSAR measurements from the Space Shuttle

- Images from the Space Shuttle SAR instrument

- The Alaska Satellite Facility has numerous technical documents, including an introductory text on SAR theory and scientific applications

- SAR Journal: tracks the Synthetic Aperture Radar (SAR) industry

- NASA radar reveals hidden remains at ancient Angkor – Jet Propulsion Laboratory
