Base rate fallacy

The base rate fallacy, also called base rate neglect[2] or base rate bias, is a type of fallacy in which people tend to ignore the base rate (e.g., general prevalence) in favor of the individuating information (i.e., information pertaining only to a specific case).[3] Base rate neglect is a specific form of the more general extension neglect.

It is also called the prosecutor's fallacy or defense attorney's fallacy when applied to the results of statistical tests (such as DNA tests) in the context of law proceedings. These terms were introduced by William C. Thompson and Edward Schumann in 1987,[4][5] although it has been argued that their definition of the prosecutor's fallacy extends to many additional invalid imputations of guilt or liability that are not analyzable as errors in base rates or Bayes's theorem.[6]

False positive paradox

An example of the base rate fallacy is the false positive paradox (also known as accuracy paradox). This paradox describes situations where there are more false positive test results than true positives (this means the classifier has a low precision). For example, if a facial recognition camera can identify wanted criminals 99% accurately, but analyzes 10,000 people a day, the high accuracy is outweighed by the number of tests, and the program's list of criminals will likely have far more false positives than true. The probability of a positive test result is determined not only by the accuracy of the test but also by the characteristics of the sampled population.[7] When the prevalence, the proportion of those who have a given condition, is lower than the test's false positive rate, even tests that have a very low risk of giving a false positive in an individual case will give more false than true positives overall.[8]

It is especially counter-intuitive when interpreting a positive result in a test on a low-prevalence population after having dealt with positive results drawn from a high-prevalence population.[8] If the false positive rate of the test is higher than the proportion of the new population with the condition, then a test administrator whose experience has been drawn from testing in a high-prevalence population may conclude from experience that a positive test result usually indicates a positive subject, when in fact a false positive is far more likely to have occurred.

Examples

Example 1: Disease

High-prevalence population

| Number of people | Infected | Uninfected | Total |
|---|---|---|---|
| Test positive | 400 (true positive) | 30 (false positive) | 430 |
| Test negative | 0 (false negative) | 570 (true negative) | 570 |
| Total | 400 | 600 | 1000 |

Imagine running an infectious disease test on a population A of 1000 persons, of which 40% are infected. The test has a false positive rate of 5% (0.05) and a false negative rate of zero. The expected outcome of the 1000 tests on population A would be:

- Infected and test indicates disease (true positive)

  - 1000 × 40/100 = 400 people would receive a true positive

- Uninfected and test indicates disease (false positive)

  - 1000 × (100 − 40)/100 × 0.05 = 30 people would receive a false positive

- The remaining 570 tests are correctly negative.

So, in population A, a person receiving a positive test could be over 93% confident (400/(30 + 400)) that it correctly indicates infection.

Low-prevalence population

| Number of people | Infected | Uninfected | Total |
|---|---|---|---|
| Test positive | 20 (true positive) | 49 (false positive) | 69 |
| Test negative | 0 (false negative) | 931 (true negative) | 931 |
| Total | 20 | 980 | 1000 |

Now consider the same test applied to population B, of which only 2% are infected. The expected outcome of 1000 tests on population B would be:

- Infected and test indicates disease (true positive)

  - 1000 × 2/100 = 20 people would receive a true positive

- Uninfected and test indicates disease (false positive)

  - 1000 × (100 − 2)/100 × 0.05 = 49 people would receive a false positive

- The remaining 931 tests are correctly negative.

In population B, only 20 of the 69 total people with a positive test result are actually infected. So, the probability of actually being infected after one is told that one is infected is only 29% (20/(20 + 49)) for a test that otherwise appears to be "95% accurate".

A tester with experience of group A might find it a paradox that in group B, a result that had usually correctly indicated infection is now usually a false positive. The confusion of the posterior probability of infection with the prior probability of receiving a false positive is a natural error after receiving a health-threatening test result.
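The arithmetic for populations A and B can be reproduced with a short Python sketch. The function names `expected_counts` and `positive_predictive_value` are illustrative and not taken from any cited source; exact fractions are used only to avoid floating-point rounding in the counts.

```python
from fractions import Fraction


def expected_counts(population, prevalence, false_positive_rate,
                    false_negative_rate=Fraction(0)):
    """Expected confusion-matrix counts for one round of testing."""
    infected = population * prevalence
    uninfected = population - infected
    true_pos = infected * (1 - false_negative_rate)
    false_pos = uninfected * false_positive_rate
    return {
        "true_positive": true_pos,
        "false_positive": false_pos,
        "false_negative": infected - true_pos,
        "true_negative": uninfected - false_pos,
    }


def positive_predictive_value(counts):
    """Share of positive results that are true positives."""
    tp, fp = counts["true_positive"], counts["false_positive"]
    return tp / (tp + fp)


# Population A: 40% infected; population B: 2% infected; same 5% false positive rate.
pop_a = expected_counts(1000, Fraction(40, 100), Fraction(5, 100))
pop_b = expected_counts(1000, Fraction(2, 100), Fraction(5, 100))

print(float(positive_predictive_value(pop_a)))  # about 0.93
print(float(positive_predictive_value(pop_b)))  # about 0.29
```

Only the prevalence differs between the two calls, which is the point of the example: the test itself is unchanged.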

Example 2: Drunk drivers

Imagine that a group of police officers have breathalyzers displaying false drunkenness in 5% of the cases in which the driver is sober. However, the breathalyzers never fail to detect a truly drunk person. One in a thousand drivers is driving drunk. Suppose the police officers then stop a driver at random to administer a breathalyzer test. It indicates that the driver is drunk. No other information is known about them.

Many would estimate the probability that the driver is drunk as high as 95%, but the correct probability is about 2%.

An explanation for this is as follows: on average, for every 1,000 drivers tested,

- 1 driver is drunk, and it is 100% certain that for that driver there is a true positive test result, so there is 1 true positive test result

- 999 drivers are not drunk, and among those drivers there are 5% false positive test results, so there are 49.95 false positive test results

Therefore, the probability that any given driver among the 1 + 49.95 = 50.95 positive test results really is drunk is $1/50.95 \approx 0.019627$.

The validity of this result does, however, hinge on the validity of the initial assumption that the police officer stopped the driver truly at random, and not because of bad driving. If that or another non-arbitrary reason for stopping the driver was present, then the calculation also involves the probability of a drunk driver driving competently and a non-drunk driver driving (in-)competently.

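More formally, the same probability of roughly 0.02 can be established using Bayes's theorem. The goal is to find the probability that the driver is drunk given that the breathalyzer indicated they are drunk, which can be represented as

$$p(\mathrm{drunk} \mid D)$$

where D means that the breathalyzer indicates that the driver is drunk. Using Bayes's theorem,

$$p(\mathrm{drunk} \mid D) = \frac{p(D \mid \mathrm{drunk})\, p(\mathrm{drunk})}{p(D)}.$$

The following information is known in this scenario:

- $p(\mathrm{drunk}) = 0.001$,

- $p(\mathrm{sober}) = 0.999$,

- $p(D \mid \mathrm{drunk}) = 1.00$, and

- $p(D \mid \mathrm{sober}) = 0.05$.

As can be seen from the formula, one needs p(D) for Bayes' theorem, which can be computed from the preceding values using the law of total probability:

$$p(D) = p(D \mid \mathrm{drunk})\, p(\mathrm{drunk}) + p(D \mid \mathrm{sober})\, p(\mathrm{sober})$$

which gives

$$p(D) = (1.00 \times 0.001) + (0.05 \times 0.999) = 0.05095.$$

Plugging these numbers into Bayes' theorem, one finds that

$$p(\mathrm{drunk} \mid D) = \frac{1.00 \times 0.001}{0.05095} \approx 0.019627,$$

which is the precision of the test.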
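The same calculation can be written as a minimal Python sketch. The function name `posterior` and its parameter names are illustrative choices, not part of any cited source; the denominator is the law of total probability for a two-way split (drunk or sober).

```python
def posterior(prior, likelihood, false_alarm_rate):
    """Bayes' theorem for a binary hypothesis.

    prior            -- p(drunk)
    likelihood       -- p(D | drunk)
    false_alarm_rate -- p(D | sober)
    """
    # Law of total probability: p(D) over the two cases drunk / sober.
    evidence = likelihood * prior + false_alarm_rate * (1 - prior)
    return likelihood * prior / evidence


p_drunk_given_positive = posterior(prior=0.001, likelihood=1.0,
                                   false_alarm_rate=0.05)
print(round(p_drunk_given_positive, 4))  # 0.0196
```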

Example 3: Terrorist identification

In a city of 1 million inhabitants, let there be 100 terrorists and 999,900 non-terrorists. To simplify the example, it is assumed that all people present in the city are inhabitants. Thus, the base rate probability of a randomly selected inhabitant of the city being a terrorist is 0.0001, and the base rate probability of that same inhabitant being a non-terrorist is 0.9999. In an attempt to catch the terrorists, the city installs an alarm system with a surveillance camera and automatic facial recognition software.

The software has two failure rates of 1%:

- The false negative rate: If the camera scans a terrorist, a bell will ring 99% of the time, and it will fail to ring 1% of the time.

- The false positive rate: If the camera scans a non-terrorist, a bell will not ring 99% of the time, but it will ring 1% of the time.

Suppose now that an inhabitant triggers the alarm. Someone making the base rate fallacy would infer that there is a 99% probability that the detected person is a terrorist. Although the inference seems to make sense, it is actually bad reasoning, and a calculation below will show that the probability of a terrorist is actually near 1%, not near 99%.

The fallacy arises from confusing the natures of two different failure rates. The 'number of non-bells per 100 terrorists' (P(¬B | T), or the probability that the bell fails to ring given the inhabitant is a terrorist) and the 'number of non-terrorists per 100 bells' (P(¬T | B), or the probability that the inhabitant is a non-terrorist given the bell rings) are unrelated quantities; one need not equal, or even be close to, the other. To show this, consider what happens if an identical alarm system were set up in a second city with no terrorists at all. As in the first city, the alarm sounds for 1 out of every 100 non-terrorist inhabitants detected, but unlike in the first city, the alarm never sounds for a terrorist. Therefore, 100% of all occasions of the alarm sounding are for non-terrorists, but a false negative rate cannot even be calculated. The 'number of non-terrorists per 100 bells' in that city is 100, yet P(T | B) = 0%. There is zero chance that a terrorist has been detected given the ringing of the bell.

Imagine that the first city's entire population of one million people pass in front of the camera. About 99 of the 100 terrorists will trigger the alarm—and so will about 9,999 of the 999,900 non-terrorists. Therefore, about 10,098 people will trigger the alarm, among which about 99 will be terrorists. The probability that a person triggering the alarm actually is a terrorist is only about 99 in 10,098, which is less than 1% and very, very far below the initial guess of 99%.

The base rate fallacy is so misleading in this example because there are many more non-terrorists than terrorists, and the number of false positives (non-terrorists scanned as terrorists) is so much larger than the true positives (terrorists scanned as terrorists).
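The head count for both cities can be checked with a small Python sketch. The helper `alarm_breakdown` is a hypothetical name introduced here; it uses integer division, which is exact for the figures in this example but would round down expected counts in general.

```python
def alarm_breakdown(population, terrorists, miss_pct=1, false_alarm_pct=1):
    """Return (true alarms, false alarms, total alarms) if everyone is scanned once."""
    non_terrorists = population - terrorists
    true_alarms = terrorists * (100 - miss_pct) // 100
    false_alarms = non_terrorists * false_alarm_pct // 100
    return true_alarms, false_alarms, true_alarms + false_alarms


# First city: 100 terrorists among 1 million inhabitants.
true_1, false_1, total_1 = alarm_breakdown(1_000_000, 100)
print(true_1, false_1, total_1)       # 99 9999 10098
print(f"{true_1 / total_1:.2%}")      # 0.98%

# Second city: same system, no terrorists at all.
true_2, false_2, total_2 = alarm_breakdown(1_000_000, 0)
print(true_2 / total_2)               # 0.0
```

The system's two 1% failure rates are identical in both calls; only the number of terrorists changes, and with it the share of alarms that are genuine.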

Multiple practitioners have argued that as the base rate of terrorism is extremely low, using data mining and predictive algorithms to identify terrorists cannot feasibly work due to the false positive paradox.[9][10][11][12] Estimates of the number of false positives for each accurate result vary from over ten thousand[12] to one billion;[10] consequently, investigating each lead would be cost- and time-prohibitive.[9][11] The level of accuracy required to make these models viable is likely unachievable. Foremost, the low base rate of terrorism also means there is a lack of data with which to make an accurate algorithm.[11] Further, in the context of detecting terrorism false negatives are highly undesirable and thus must be minimised as much as possible; however, this requires increasing sensitivity at the cost of specificity, increasing false positives.[12] It is also questionable whether the use of such models by law enforcement would meet the requisite burden of proof given that over 99% of results would be false positives.[12]

Example 4: Biological testing of a suspect

A crime is committed. Forensic analysis determines that the perpetrator has a certain blood type shared by 10% of the population. A suspect is arrested, and found to have that same blood type.

A prosecutor might charge the suspect with the crime on that basis alone, and claim at trial that the probability that the defendant is guilty is 90%. However, this conclusion is only close to correct if the defendant was selected as the main suspect based on robust evidence discovered prior to the blood test and unrelated to it. Otherwise, the reasoning presented is flawed, as it overlooks the high prior probability (that is, prior to the blood test) that he is a random innocent person. Assume, for instance, that 1000 people live in the town where the crime occurred. This means that 100 people live there who have the perpetrator's blood type, of whom only one is the true perpetrator; therefore, the true probability that the defendant is guilty – based only on the fact that his blood type matches that of the killer – is only 1%, far less than the 90% argued by the prosecutor.

The prosecutor's fallacy involves assuming that the prior probability of a random match is equal to the probability that the defendant is innocent. When using it, a prosecutor questioning an expert witness may ask: "The odds of finding this evidence on an innocent man are so small that the jury can safely disregard the possibility that this defendant is innocent, correct?"[13] The claim assumes that the probability that evidence is found on an innocent man is the same as the probability that a man is innocent given that evidence was found on him, which is not true. Whilst the former is usually small (10% in the previous example) due to good forensic evidence procedures, the latter (99% in that example) does not directly relate to it and will often be much higher, since, in fact, it depends on the likely quite high prior odds of the defendant being a random innocent person.
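The gap between the two conditional probabilities can be made concrete with a short Python sketch of the town in this example. It assumes, as the example does, a town of 1000 people, a 10% match rate, and exactly one perpetrator who necessarily matches; the variable names are illustrative.

```python
from fractions import Fraction

town_size = 1000                  # town population assumed in the example
match_rate = Fraction(10, 100)    # share of people with the perpetrator's blood type
perpetrators = 1                  # one true perpetrator, who matches by definition

matching = town_size * match_rate             # 100 people share the blood type
innocent = town_size - perpetrators           # 999 innocent residents
innocent_matching = matching - perpetrators   # 99 innocent residents who match

# Probability that the evidence is found on an innocent person.
p_match_given_innocent = innocent_matching / innocent
# Probability that a person is innocent given that the evidence was found on them.
p_innocent_given_match = innocent_matching / matching
p_guilty_given_match = perpetrators / matching

print(float(p_match_given_innocent))  # roughly 0.10
print(float(p_innocent_given_match))  # 0.99
print(float(p_guilty_given_match))    # 0.01
```

With no other evidence, the first quantity is about 10% while the second is 99%, which is the distinction the prosecutor's fallacy ignores.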

Examples in law

O. J. Simpson trial

O. J. Simpson was tried and acquitted in 1995 for the murders of his ex-wife Nicole Brown Simpson and her friend Ronald Goldman.

Crime scene blood matched Simpson's with characteristics shared by 1 in 400 people. However, the defense argued that the number of people from Los Angeles matching the sample could fill a football stadium and that the figure of 1 in 400 was useless.[14][15] It would have been incorrect, and an example of prosecutor's fallacy, to rely solely on the "1 in 400" figure to deduce that a given person matching the sample would be likely to be the culprit.

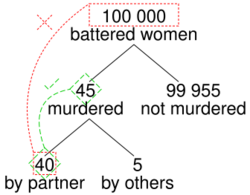

In the same trial, the prosecution presented evidence that Simpson had been violent toward his wife. The defense argued that there was only one woman murdered for every 2500 women who were subjected to spousal abuse, and that any history of Simpson being violent toward his wife was irrelevant to the trial. However, the reasoning behind the defense's calculation was fallacious. According to author Gerd Gigerenzer, the correct probability requires additional context: Simpson's wife had not only been subjected to domestic violence, but rather subjected to domestic violence (by Simpson) and killed (by someone). Gigerenzer writes "the chances that a batterer actually murdered his partner, given that she has been killed, is about 8 in 9 or approximately 90%".[16] While most cases of spousal abuse do not end in murder, most cases of murder where there is a history of spousal abuse were committed by the spouse.

Sally Clark case

Sally Clark, a British woman, was accused in 1998 of having killed her first child at 11 weeks of age and then her second child at 8 weeks of age. The prosecution had expert witness Sir Roy Meadow, a professor and consultant paediatrician,[17] testify that the probability of two children in the same family dying from SIDS is about 1 in 73 million. That was much less frequent than the actual rate measured in historical data – Meadow estimated it from single-SIDS death data, and the assumption that the probability of such deaths should be uncorrelated between infants.[18]

Meadow acknowledged that 1-in-73 million is not an impossibility, but argued that such accidents would happen "once every hundred years" and that, in a country of 15 million 2-child families, it is vastly more likely that the double-deaths are due to Münchausen syndrome by proxy than to such a rare accident. However, there is good reason to suppose that the likelihood of a death from SIDS in a family is significantly greater if a previous child has already died in these circumstances (a genetic predisposition to SIDS is likely to invalidate that assumed statistical independence[19]), making some families more susceptible to SIDS and the error an outcome of the ecological fallacy.[20] The likelihood of two SIDS deaths in the same family cannot be soundly estimated by squaring the likelihood of a single such death in all otherwise similar families.[21]

The 1-in-73 million figure greatly underestimated the chance of two successive accidents, but even if that assessment were accurate, the court seems to have missed the fact that the 1-in-73 million number meant nothing on its own. As an a priori probability, it should have been weighed against the a priori probabilities of the alternatives. Given that two deaths had occurred, one of the following explanations must be true, and all of them are a priori extremely improbable:

- Two successive deaths in the same family, both by SIDS

- Double homicide (the prosecution's case)

- Other possibilities (including one homicide and one case of SIDS)

It is unclear whether an estimate of the probability for the second possibility was ever proposed during the trial, or whether the comparison of the first two probabilities was understood to be the key estimate to make in the statistical analysis assessing the prosecution's case against the case for innocence.

Clark was convicted in 1999, resulting in a press release by the Royal Statistical Society which pointed out the mistakes.[22]

In 2002, Ray Hill (a mathematics professor at Salford) attempted to accurately compare the chances of these two possible explanations; he concluded that successive accidents are between 4.5 and 9 times more likely than are successive murders, so that the a priori odds of Clark's guilt were between 4.5 to 1 and 9 to 1 against.[23]

After the court found that the forensic pathologist who had examined both babies had withheld exculpatory evidence, a higher court later quashed Clark's conviction, on 29 January 2003.[24]

Findings in psychology

In experiments, people have been found to prefer individuating information over general information when the former is available.[25][26][27]

In some experiments, students were asked to estimate the grade point averages (GPAs) of hypothetical students. When given relevant statistics about GPA distribution, students tended to ignore them if given descriptive information about the particular student even if the new descriptive information was obviously of little or no relevance to school performance.[26] This finding has been used to argue that interviews are an unnecessary part of the college admissions process because interviewers are unable to pick successful candidates better than basic statistics.

Psychologists Daniel Kahneman and Amos Tversky attempted to explain this finding in terms of a simple rule or "heuristic" called representativeness. They argued that many judgments relating to likelihood, or to cause and effect, are based on how representative one thing is of another, or of a category.[26] Kahneman considers base rate neglect to be a specific form of extension neglect.[28] Richard Nisbett has argued that some attributional biases like the fundamental attribution error are instances of the base rate fallacy: people do not use the "consensus information" (the "base rate") about how others behaved in similar situations and instead prefer simpler dispositional attributions.[29]

There is considerable debate in psychology on the conditions under which people do or do not appreciate base rate information.[30][31] Researchers in the heuristics-and-biases program have stressed empirical findings showing that people tend to ignore base rates and make inferences that violate certain norms of probabilistic reasoning, such as Bayes' theorem. The conclusion drawn from this line of research was that human probabilistic thinking is fundamentally flawed and error-prone.[32] Other researchers have emphasized the link between cognitive processes and information formats, arguing that such conclusions are not generally warranted.[33][34]

Consider again Example 2 from above. The required inference is to estimate the (posterior) probability that a (randomly picked) driver is drunk, given that the breathalyzer test is positive. Formally, this probability can be calculated using Bayes' theorem, as shown above. However, there are different ways of presenting the relevant information. Consider the following, formally equivalent variant of the problem:

- 1 out of 1000 drivers are driving drunk. The breathalyzers never fail to detect a truly drunk person. For 50 out of the 999 drivers who are not drunk the breathalyzer falsely displays drunkenness. Suppose the policemen then stop a driver at random, and force them to take a breathalyzer test. It indicates that they are drunk. No other information is known about them. Estimate the probability the driver is really drunk.

In this case, the relevant numerical information—p(drunk), p(D | drunk), p(D | sober)—is presented in terms of natural frequencies with respect to a certain reference class (see reference class problem). Empirical studies show that people's inferences correspond more closely to Bayes' rule when information is presented this way, helping to overcome base-rate neglect in laypeople[34] and experts.[35] As a consequence, organizations like the Cochrane Collaboration recommend using this kind of format for communicating health statistics.[36] Teaching people to translate these kinds of Bayesian reasoning problems into natural frequency formats is more effective than merely teaching them to plug probabilities (or percentages) into Bayes' theorem.[37] It has also been shown that graphical representations of natural frequencies (e.g., icon arrays, hypothetical outcome plots) help people to make better inferences.[37][38][39][40]

One important reason why natural frequency formats are helpful is that this information format facilitates the required inference because it simplifies the necessary calculations. This can be seen when using an alternative way of computing the required probability p(drunk|D):

$$p(\mathrm{drunk} \mid D) = \frac{N(\mathrm{drunk} \cap D)}{N(D)} = \frac{1}{51} \approx 0.0196$$

where N(drunk ∩ D) denotes the number of drivers that are drunk and get a positive breathalyzer result, and N(D) denotes the total number of cases with a positive breathalyzer result. The equivalence of this equation to the above one follows from the axioms of probability theory, according to which N(drunk ∩ D) = N × p (D | drunk) × p (drunk). Importantly, although this equation is formally equivalent to Bayes' rule, it is not psychologically equivalent. Using natural frequencies simplifies the inference because the required mathematical operation can be performed on natural numbers, instead of normalized fractions (i.e., probabilities), because it makes the high number of false positives more transparent, and because natural frequencies exhibit a "nested-set structure".[41][42]

Not every frequency format facilitates Bayesian reasoning.[42][43] Natural frequencies refer to frequency information that results from natural sampling,[44] which preserves base rate information (e.g., number of drunken drivers when taking a random sample of drivers). This is different from systematic sampling, in which base rates are fixed a priori (e.g., in scientific experiments). In the latter case it is not possible to infer the posterior probability p(drunk | positive test) from comparing the number of drivers who are drunk and test positive compared to the total number of people who get a positive breathalyzer result, because base rate information is not preserved and must be explicitly re-introduced using Bayes' theorem.
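The difference between the two sampling schemes can be illustrated with a minimal Python sketch using expected counts. The 500/500 split is a hypothetical systematic design chosen for illustration and does not come from the cited studies; `positive_share` is likewise an illustrative name.

```python
def positive_share(n_drunk, n_sober, hit_rate=1.0, false_alarm_rate=0.05):
    """Share of positive breathalyzer results that belong to drunk drivers."""
    drunk_positive = n_drunk * hit_rate
    sober_positive = n_sober * false_alarm_rate
    return drunk_positive / (drunk_positive + sober_positive)


# Natural sampling: 1000 random drivers, so the 1-in-1000 base rate is preserved.
natural = positive_share(n_drunk=1, n_sober=999)

# Systematic sampling (hypothetical design): group sizes fixed in advance at 500 each.
systematic = positive_share(n_drunk=500, n_sober=500)

print(round(natural, 4))     # 0.0196
print(round(systematic, 4))  # 0.9524
```

Only the naturally sampled counts yield the posterior probability directly; the systematic design reproduces the test's hit and false alarm rates but discards the base rate, which would have to be reintroduced through Bayes' theorem.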

See also

- Precision and recall

- Data dredging – Misuse of data analysis

- Evidence under Bayes' theorem

- List of cognitive biases – Systematic patterns of deviation from norm or rationality in judgment

- List of paradoxes – List of statements that appear to contradict themselves

- Prevention paradox – Situation in epidemiology

- Simpson's paradox – Error in statistical reasoning with groups

- Intuitive statistics

References

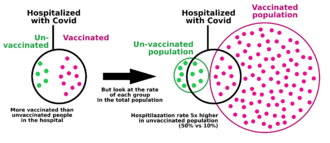

- ↑ "COVID-19 Cases, Hospitalizations, and Deaths by Vaccination Status". Washington State Department of Health. 2023-01-18. https://doh.wa.gov/sites/default/files/2022-02/421-010-CasesInNotFullyVaccinated.pdf. "If the exposure to COVID-19 stays the same, as more individuals are vaccinated, more cases, hospitalizations, and deaths will be in vaccinated individuals, as they will continue to make up more and more of the population. For example, if 100% of the population was vaccinated, 100% of cases would be among vaccinated people."

- ↑ Welsh, Matthew B.; Navarro, Daniel J. (2012). "Seeing is believing: Priors, trust, and base rate neglect". Organizational Behavior and Human Decision Processes 119 (1): 1–14. doi:10.1016/j.obhdp.2012.04.001. ISSN 0749-5978. http://dx.doi.org/10.1016/j.obhdp.2012.04.001.

- ↑ "Logical Fallacy: The Base Rate Fallacy". Fallacyfiles.org. http://www.fallacyfiles.org/baserate.html.

- ↑ Thompson, W.C.; Schumann, E.L. (1987). "Interpretation of Statistical Evidence in Criminal Trials: The Prosecutor's Fallacy and the Defense Attorney's Fallacy". Law and Human Behavior 11 (3): 167. doi:10.1007/BF01044641.

- ↑ Fountain, John; Gunby, Philip (February 2010). "Ambiguity, the Certainty Illusion, and Gigerenzer's Natural Frequency Approach to Reasoning with Inverse Probabilities". University of Canterbury. p. 6. http://uctv.canterbury.ac.nz/viewfile.php/4/sharing/55/74/74/NZEPVersionofImpreciseProbabilitiespaperVersi.pdf. [permanent dead link]

- ↑ Suss, Richard A. (October 4, 2023). "The Prosecutor's Fallacy Framed as a Sample Space Substitution" (in en). OSF Preprints. doi:10.31219/osf.io/cs248.

- ↑ Rheinfurth, M. H.; Howell, L. W. (March 1998). Probability and Statistics in Aerospace Engineering. NASA. p. 16. https://ntrs.nasa.gov/citations/19980045313. "MESSAGE: False positive tests are more probable than true positive tests when the overall population has a low prevalence of the disease. This is called the false-positive paradox."

- ↑ 8.0 8.1 Vacher, H. L. (May 2003). "Quantitative literacy - drug testing, cancer screening, and the identification of igneous rocks". Journal of Geoscience Education: 2. http://findarticles.com/p/articles/mi_qa4089/is_200305/ai_n9252796/pg_2/. "At first glance, this seems perverse: the less the students as a whole use steroids, the more likely a student identified as a user will be a non-user. This has been called the False Positive Paradox". - Citing: Gonick, L.; Smith, W. (1993). The cartoon guide to statistics. New York: Harper Collins. p. 49.

- ↑ 9.0 9.1 Munk, Timme Bisgaard (1 September 2017). "100,000 false positives for every real terrorist: Why anti-terror algorithms don't work". First Monday 22 (9). doi:10.5210/fm.v22i9.7126. https://firstmonday.org/ojs/index.php/fm/article/view/7126.

- ↑ 10.0 10.1 Schneier, Bruce. "Why Data Mining Won't Stop Terror" (in en-US). Wired. ISSN 1059-1028. https://www.wired.com/2006/03/why-data-mining-wont-stop-terror-2/. Retrieved 2022-08-30.

- ↑ 11.0 11.1 11.2 Jonas, Jeff; Harper, Jim (2006-12-11). "Effective Counterterrorism and the Limited Role of Predictive Data Mining". https://www.cato.org/policy-analysis/effective-counterterrorism-limited-role-predictive-data-mining#.

- ↑ 12.0 12.1 12.2 12.3 Sageman, Marc (2021-02-17). "The Implication of Terrorism's Extremely Low Base Rate". Terrorism and Political Violence 33 (2): 302–311. doi:10.1080/09546553.2021.1880226. ISSN 0954-6553. https://doi.org/10.1080/09546553.2021.1880226.

- ↑ Fenton, Norman; Neil, Martin; Berger, Daniel (June 2016). "Bayes and the Law". Annual Review of Statistics and Its Application 3 (1): 51–77. doi:10.1146/annurev-statistics-041715-033428. PMID 27398389. Bibcode: 2016AnRSA...3...51F.

- ↑ Robertson, B., & Vignaux, G. A. (1995). Interpreting evidence: Evaluating forensic evidence in the courtroom. Chichester: John Wiley and Sons.

- ↑ Rossmo, D. Kim (2009). Criminal Investigative Failures. CRC Press Taylor & Francis Group.

- ↑ Gigerenzer, G., Reckoning with Risk: Learning to Live with Uncertainty, Penguin, (2003)

- ↑ "Resolution adopted by the Senate (21 October 1998) on the retirement of Professor Sir Roy Meadow". Reporter (University of Leeds) (428). 30 November 1998. http://reporter.leeds.ac.uk/428/mead.htm. Retrieved 2015-10-17.

- ↑ The population-wide probability of a SIDS fatality was about 1 in 1,303; Meadow generated his 1-in-73 million estimate from the lesser probability of SIDS death in the Clark household, which had lower risk factors (e.g. non-smoking). In this sub-population he estimated the probability of a single death at 1 in 8,500. See: Joyce, H. (September 2002). "Beyond reasonable doubt" (pdf). plus.maths.org. http://plus.maths.org/issue21/features/clark/.. Professor Ray Hill questioned even this first step (1/8,500 vs 1/1,300) in two ways: firstly, on the grounds that it was biased, excluding those factors that increased risk (especially that both children were boys) and (more importantly) because reductions in SIDS risk factors will proportionately reduce murder risk factors, so that the relative frequencies of Münchausen syndrome by proxy and SIDS will remain in the same ratio as in the general population: Hill, Ray (2002). "Cot Death or Murder? – Weighing the Probabilities". http://www.mrbartonmaths.com/resources/a%20level/s1/Beyond%20reasonable%20doubt.doc. "it is patently unfair to use the characteristics which basically make her a good, clean-living, mother as factors which count against her. Yes, we can agree that such factors make a natural death less likely – but those same characteristics also make murder less likely."

- ↑ Sweeney, John; Law, Bill (July 15, 2001). "Gene find casts doubt on double 'cot death' murders". The Observer. http://observer.guardian.co.uk/print/0,,4221973-102285,00.html.

- ↑ Vincent Scheurer. "Convicted on Statistics?". http://understandinguncertainty.org/node/545#notes.

- ↑ Hill, R. (2004). "Multiple sudden infant deaths – coincidence or beyond coincidence?". Paediatric and Perinatal Epidemiology 18 (5): 321. doi:10.1111/j.1365-3016.2004.00560.x. PMID 15367318. http://www.cse.salford.ac.uk/staff/RHill/ppe_5601.pdf. Retrieved 2010-06-13.

- ↑ "Royal Statistical Society concerned by issues raised in Sally Clark case". 23 October 2001. http://www.rss.org.uk/uploadedfiles/documentlibrary/744.pdf. "Society does not tolerate doctors making serious clinical errors because it is widely understood that such errors could mean the difference between life and death. The case of R v. Sally Clark is one example of a medical expert witness making a serious statistical error, one which may have had a profound effect on the outcome of the case"

- ↑ The uncertainty in this range is mainly driven by uncertainty in the likelihood of killing a second child, having killed a first, see: Hill, R. (2004). "Multiple sudden infant deaths – coincidence or beyond coincidence?". Paediatric and Perinatal Epidemiology 18 (5): 322–323. doi:10.1111/j.1365-3016.2004.00560.x. PMID 15367318. http://www.cse.salford.ac.uk/staff/RHill/ppe_5601.pdf. Retrieved 2010-06-13.

- ↑ "R v Clark [2003] EWCA Crim 1020 (11 April 2003)". http://www.bailii.org/ew/cases/EWCA/Crim/2003/1020.html.

- ↑ Bar-Hillel, Maya (1980). "The base-rate fallacy in probability judgments". Acta Psychologica 44 (3): 211–233. doi:10.1016/0001-6918(80)90046-3. http://ratio.huji.ac.il/sites/default/files/publications/dp732.pdf.

- ↑ 26.0 26.1 26.2 Kahneman, Daniel; Amos Tversky (1973). "On the psychology of prediction". Psychological Review 80 (4): 237–251. doi:10.1037/h0034747.

- ↑ Tversky, Amos; Kahneman, Daniel (1974-09-27). "Judgment under uncertainty: Heuristics and biases". Science 185 (4157): 1124–1131. doi:10.1126/science.185.4157.1124. PMID 17835457. Bibcode: 1974Sci...185.1124T.

- ↑ Kahneman, Daniel (2000). "Evaluation by moments, past and future". in Daniel Kahneman and Amos Tversky. Choices, Values and Frames. ISBN 0-521-62749-4.

- ↑ Nisbett, Richard E.; E. Borgida; R. Crandall; H. Reed (1976). "Popular induction: Information is not always informative". in J. S. Carroll & J. W. Payne. Cognition and social behavior. 2. John Wiley & Sons, Incorporated. pp. 227–236. ISBN 0-470-99007-4.

- ↑ Koehler, J. J. (2010). "The base rate fallacy reconsidered: Descriptive, normative, and methodological challenges". Behavioral and Brain Sciences 19: 1–17. doi:10.1017/S0140525X00041157.

- ↑ Barbey, A. K.; Sloman, S. A. (2007). "Base-rate respect: From ecological rationality to dual processes". Behavioral and Brain Sciences 30 (3): 241–254; discussion 255–297. doi:10.1017/S0140525X07001653. PMID 17963533.

- ↑ Tversky, A.; Kahneman, D. (1974). "Judgment under Uncertainty: Heuristics and Biases". Science 185 (4157): 1124–1131. doi:10.1126/science.185.4157.1124. PMID 17835457. Bibcode: 1974Sci...185.1124T.

- ↑ Cosmides, Leda; John Tooby (1996). "Are humans good intuitive statisticians after all? Rethinking some conclusions of the literature on judgment under uncertainty". Cognition 58: 1–73. doi:10.1016/0010-0277(95)00664-8.

- ↑ 34.0 34.1 Gigerenzer, G.; Hoffrage, U. (1995). "How to improve Bayesian reasoning without instruction: Frequency formats". Psychological Review 102 (4): 684. doi:10.1037/0033-295X.102.4.684.

- ↑ Hoffrage, U.; Lindsey, S.; Hertwig, R.; Gigerenzer, G. (2000). "Medicine: Communicating Statistical Information". Science 290 (5500): 2261–2262. doi:10.1126/science.290.5500.2261. PMID 11188724.

- ↑ Akl, E. A.; Oxman, A. D.; Herrin, J.; Vist, G. E.; Terrenato, I.; Sperati, F.; Costiniuk, C.; Blank, D. et al. (2011). Schünemann, Holger. ed. "Using alternative statistical formats for presenting risks and risk reductions". The Cochrane Database of Systematic Reviews 2011 (3): CD006776. doi:10.1002/14651858.CD006776.pub2. PMID 21412897.

- ↑ 37.0 37.1 Sedlmeier, P.; Gigerenzer, G. (2001). "Teaching Bayesian reasoning in less than two hours". Journal of Experimental Psychology: General 130 (3): 380–400. doi:10.1037/0096-3445.130.3.380. PMID 11561916. http://edoc.mpg.de/175640.

- ↑ Brase, G. L. (2009). "Pictorial representations in statistical reasoning". Applied Cognitive Psychology 23 (3): 369–381. doi:10.1002/acp.1460.

- ↑ Edwards, A.; Elwyn, G.; Mulley, A. (2002). "Explaining risks: Turning numerical data into meaningful pictures". BMJ 324 (7341): 827–830. doi:10.1136/bmj.324.7341.827. PMID 11934777.

- ↑ Kim, Yea-Seul; Walls, Logan A.; Krafft, Peter; Hullman, Jessica (2 May 2019). "A Bayesian Cognition Approach to Improve Data Visualization". Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. pp. 1–14. doi:10.1145/3290605.3300912. ISBN 9781450359702. https://idl.cs.washington.edu/papers/bayesian-cognition-vis/.

- ↑ Girotto, V.; Gonzalez, M. (2001). "Solving probabilistic and statistical problems: A matter of information structure and question form". Cognition 78 (3): 247–276. doi:10.1016/S0010-0277(00)00133-5. PMID 11124351.

- ↑ 42.0 42.1 Hoffrage, U.; Gigerenzer, G.; Krauss, S.; Martignon, L. (2002). "Representation facilitates reasoning: What natural frequencies are and what they are not". Cognition 84 (3): 343–352. doi:10.1016/S0010-0277(02)00050-1. PMID 12044739.

- ↑ Gigerenzer, G.; Hoffrage, U. (1999). "Overcoming difficulties in Bayesian reasoning: A reply to Lewis and Keren (1999) and Mellers and McGraw (1999)". Psychological Review 106 (2): 425. doi:10.1037/0033-295X.106.2.425. http://edoc.mpg.de/2936.

- ↑ Kleiter, G. D. (1994). "Natural Sampling: Rationality without Base Rates". Contributions to Mathematical Psychology, Psychometrics, and Methodology. Recent Research in Psychology. pp. 375–388. doi:10.1007/978-1-4612-4308-3_27. ISBN 978-0-387-94169-1.

External links

- The Base Rate Fallacy The Fallacy Files
