Negative hypergeometric distribution

|

Probability mass function  | |||

|

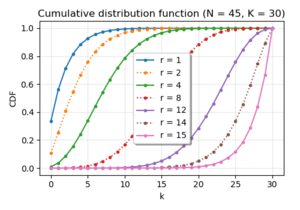

Cumulative distribution function  | |||

| Parameters |

[math]\displaystyle{ N \in \left\{0,1,2,\dots\right\} }[/math] - total number of elements | ||

|---|---|---|---|

| Support | [math]\displaystyle{ k \in \left\{0,\, \dots,\, K\right\} }[/math] - number of successes when experiment is stopped. | ||

| pmf | [math]\displaystyle{ \frac{{{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}}}{N \choose K} }[/math] | ||

| Mean | [math]\displaystyle{ r\frac{K}{N-K+1} }[/math] | ||

| Variance | [math]\displaystyle{ r\frac{(N+1)K}{(N-K+1)(N-K+2)}[1-\frac{r}{N-K+1}] }[/math] | ||

In probability theory and statistics, the negative hypergeometric distribution describes probabilities for when sampling from a finite population without replacement in which each sample can be classified into two mutually exclusive categories like Pass/Fail or Employed/Unemployed. As random selections are made from the population, each subsequent draw decreases the population causing the probability of success to change with each draw. Unlike the standard hypergeometric distribution, which describes the number of successes in a fixed sample size, in the negative hypergeometric distribution, samples are drawn until [math]\displaystyle{ r }[/math] failures have been found, and the distribution describes the probability of finding [math]\displaystyle{ k }[/math] successes in such a sample. In other words, the negative hypergeometric distribution describes the likelihood of [math]\displaystyle{ k }[/math] successes in a sample with exactly [math]\displaystyle{ r }[/math] failures.

Definition

There are [math]\displaystyle{ N }[/math] elements, of which [math]\displaystyle{ K }[/math] are defined as "successes" and the rest are "failures".

Elements are drawn one after the other, without replacements, until [math]\displaystyle{ r }[/math] failures are encountered. Then, the drawing stops and the number [math]\displaystyle{ k }[/math] of successes is counted. The negative hypergeometric distribution, [math]\displaystyle{ NHG_{N,K,r}(k) }[/math] is the discrete distribution of this [math]\displaystyle{ k }[/math].

The negative hypergeometric distribution is a special case of the beta-binomial distribution[2] with parameters [math]\displaystyle{ \alpha = r }[/math] and [math]\displaystyle{ \beta = N-K-r+1 }[/math] both being integers (and [math]\displaystyle{ n = K }[/math]).

The outcome requires that we observe [math]\displaystyle{ k }[/math] successes in [math]\displaystyle{ (k+r-1) }[/math] draws and the [math]\displaystyle{ (k+r)\text{-th} }[/math] bit must be a failure. The probability of the former can be found by the direct application of the hypergeometric distribution [math]\displaystyle{ (HG_{N,K,k+r-1}(k)) }[/math] and the probability of the latter is simply the number of failures remaining [math]\displaystyle{ (=N-K-(r-1)) }[/math] divided by the size of the remaining population [math]\displaystyle{ (=N-(k+r-1) }[/math]. The probability of having exactly [math]\displaystyle{ k }[/math] successes up to the [math]\displaystyle{ r\text{-th} }[/math] failure (i.e. the drawing stops as soon as the sample includes the predefined number of [math]\displaystyle{ r }[/math] failures) is then the product of these two probabilities:

[math]\displaystyle{ \frac{\binom{K}{k} \binom{N - K}{k+r-1-k}}{\binom{N}{k+r-1}} \cdot \frac{N-K-(r-1)}{N-(k+r-1)} =\frac{{{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}}}{N \choose K}. }[/math]

Therefore, a random variable [math]\displaystyle{ X }[/math] follows the negative hypergeometric distribution if its probability mass function (pmf) is given by

[math]\displaystyle{ f(k; N, K, r) \equiv \Pr(X = k) =\frac{{{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}}}{N \choose K}\quad\text{for }k = 0, 1, 2, \dotsc, K }[/math]

where

- [math]\displaystyle{ N }[/math] is the population size,

- [math]\displaystyle{ K }[/math] is the number of success states in the population,

- [math]\displaystyle{ r }[/math] is the number of failures,

- [math]\displaystyle{ k }[/math] is the number of observed successes,

- [math]\displaystyle{ a \choose b }[/math] is a binomial coefficient

By design the probabilities sum up to 1. However, in case we want show it explicitly we have:

[math]\displaystyle{ \sum_{k=0}^{K} \Pr(X=k) = \sum_{k=0}^{K} \frac{{{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}}}{N \choose K} = \frac{1}{N \choose K}\sum_{k=0}^{K} {{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}} = \frac{1}{N \choose K} {N \choose K} = 1, }[/math]

where we have used that,

[math]\displaystyle{ \begin{align} \sum_{j=0}^k \binom{j+m}{j} \binom{n-m-j}{k-j} &=\sum_{j=0}^k (-1)^{j} \binom{-m-1}{j} (-1)^{k-j} \binom{m+1+k-n-2}{k-j}\\ &=(-1)^{k} \binom{k-n-2}{k} = (-1)^{k} \binom{k-(n+1)-1}{k} = \binom{n+1}{k}, \end{align} }[/math]

which can be derived using the binomial identity, [math]\displaystyle{ {{n \choose k} = (-1)^k {k-n-1 \choose k}} }[/math], and the Chu–Vandermonde identity, [math]\displaystyle{ \sum_{j=0}^k \binom m j \binom{n-m}{k-j} = \binom n k }[/math], which holds for any complex-values [math]\displaystyle{ m }[/math] and [math]\displaystyle{ n }[/math] and any non-negative integer [math]\displaystyle{ k }[/math].

Expectation

When counting the number [math]\displaystyle{ k }[/math] of successes before [math]\displaystyle{ r }[/math] failures, the expected number of successes is [math]\displaystyle{ \frac{r K}{N-K+1} }[/math] and can be derived as follows.

[math]\displaystyle{ \begin{align} E[X] &= \sum_{k=0}^{K} k \Pr(X=k) = \sum_{k=0}^{K} k \frac{{{k+r-1}\choose{k}}{{N-r-k}\choose{K-k}}}{N \choose K} = \frac{r}{N \choose K}\left[\sum_{k=0}^{K} \frac{(k+r)}{r} {{k+r-1}\choose{r-1}}{{N-r-k}\choose{K-k}}\right]-r \\ &= \frac{r}{N \choose K}\left[\sum_{k=0}^{K} {{k+r}\choose{r}}{{N-r-k}\choose{K-k}}\right]-r = \frac{r}{N \choose K}\left[\sum_{k=0}^{K} {{k+r}\choose{k}}{{N-r-k}\choose{K-k}}\right]-r\\ &= \frac{r}{N \choose K}\left[{{N+1} \choose K} \right]-r = \frac{rK}{N-K+1}, \end{align} }[/math]

where we have used the relationship [math]\displaystyle{ \sum_{j=0}^k \binom{j+m}{j} \binom{n-m-j}{k-j}= \binom{n+1}{k} }[/math], that we derived above to show that the negative hypergeometric distribution was properly normalized.

Variance

The variance can be derived by the following calculation.

[math]\displaystyle{ \begin{align} E[X^2] &= \sum_{k=0}^{K} k^2 \Pr(X=k) = \left[\sum_{k=0}^{K} (k+r)(k+r+1) \Pr(X=k)\right]-(2r+1)E[X]-r^2-r \\ &=\frac{r(r+1)}{N \choose K}\left[\sum_{k=0}^{K} {{k+r+1}\choose{r+1}}{{N+1-(r+1)-k}\choose{K-k}}\right]-(2r+1)E[X]-r^2-r\\ &= \frac{r(r+1)}{N \choose K}\left[{{N+2} \choose K} \right]-(2r+1)E[X]-r^2-r = \frac{r K (N - r + K r + 1)}{(N-K+1)(N-K+2)} \end{align} }[/math]

Then the variance is [math]\displaystyle{ \textrm{Var}[X]=E[X^2]-\left(E[X]\right)^2 = \frac{r K (N + 1) (N-K-r+1)}{(N - K + 1)^2 (N - K + 2)} }[/math]

Related distributions

If the drawing stops after a constant number [math]\displaystyle{ n }[/math] of draws (regardless of the number of failures), then the number of successes has the hypergeometric distribution, [math]\displaystyle{ HG_{N,K,n}(k) }[/math]. The two functions are related in the following way:[1]

[math]\displaystyle{ NHG_{N,K,r}(k) = 1-HG_{N,N-K,k+r}(r-1) }[/math]

Negative-hypergeometric distribution (like the hypergeometric distribution) deals with draws without replacement, so that the probability of success is different in each draw. In contrast, negative-binomial distribution (like the binomial distribution) deals with draws with replacement, so that the probability of success is the same and the trials are independent. The following table summarizes the four distributions related to drawing items:

| With replacements | No replacements | |

|---|---|---|

| # of successes in constant # of draws | binomial distribution | hypergeometric distribution |

| # of successes in constant # of failures | negative binomial distribution | negative hypergeometric distribution |

Some authors[3][4] define the negative hypergeometric distribution to be the number of draws required to get the [math]\displaystyle{ r }[/math]th failure. If we let [math]\displaystyle{ Y }[/math] denote this number then it is clear that [math]\displaystyle{ Y=X+r }[/math] where [math]\displaystyle{ X }[/math] is as defined above. Hence the PMF [math]\displaystyle{ Pr(Y=y)=\binom{y-1}{r-1}\frac{\binom{N-y}{N-K-r}}{\binom{N}{N-K}} }[/math]. If we let the number of failures [math]\displaystyle{ N-K }[/math] be denoted by [math]\displaystyle{ M }[/math] means that we have [math]\displaystyle{ Pr(Y=y)=\binom{y-1}{r-1}\frac{\binom{N-y}{M-r}}{\binom{N}{M}} }[/math]. The support of [math]\displaystyle{ Y }[/math] is the set [math]\displaystyle{ \{r,r+1,\dots,N-M+r\} }[/math]. It is clear that [math]\displaystyle{ E[Y]=E[X]+r=\frac{r(N+1)}{M+1} }[/math] and that [math]\displaystyle{ \textrm{Var}[X]=\textrm{Var}[Y] }[/math].

References

- ↑ Jump up to: 1.0 1.1 Negative hypergeometric distribution in Encyclopedia of Math.

- ↑ Johnson, Norman L.; Kemp, Adrienne W.; Kotz, Samuel (2005). Univariate Discrete Distributions. Wiley. ISBN 0-471-27246-9. §6.2.2 (p.253–254)

- ↑ Rohatgi, Vijay K., and AK Md Ehsanes Saleh. An introduction to probability and statistics. John Wiley & Sons, 2015.

- ↑ Khan, RA (1994). A note on the generating function of a negative hypergeometric distribution. Sankhya: The Indian Journal of Statistics B, 56(3), 309-313.

|