Time stretch analog-to-digital converter

The time-stretch analog-to-digital converter (TS-ADC),[1][2][3] also known as the time stretch enhanced recorder (TiSER), is an analog-to-digital converter (ADC) system capable of digitizing very-high-bandwidth signals that cannot be captured by conventional electronic ADCs.[4] It is also called the photonic time stretch (PTS) digitizer,[5] since it uses an optical frontend. It relies on time stretching, which slows down the analog signal in time (equivalently, compresses its bandwidth) so that it can then be digitized by a slow electronic ADC.

Background

There is strong demand for very high speed analog-to-digital converters (ADCs), which are needed in laboratory test and measurement equipment and in high-speed data communication systems.[citation needed] Most ADCs are based purely on electronic circuits, whose limited speed and added impairments restrict both the bandwidth of the signals that can be digitized and the achievable signal-to-noise ratio. The TS-ADC overcomes this limitation by time-stretching the analog signal, which slows it down before digitization. Stretching the signal in time by a factor M compresses its bandwidth (and carrier frequency) by the same factor. Electronic ADCs that would have been too slow to digitize the original signal can therefore capture the slowed-down version.
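The bookkeeping behind this can be sketched in a few lines of Python. This is a minimal sketch assuming an ideal, distortion-free stretch by a factor M and a baseband signal (the function name and example numbers are hypothetical): every frequency is divided by M, and each sample from a slow ADC running at f_s lands M times closer together on the original time axis, for an effective rate of M·f_s.

```python
def stretched_requirements(bandwidth_hz, carrier_hz, stretch_factor, adc_rate_sps):
    """Ideal time-stretch bookkeeping (illustrative sketch, not a device model).

    Stretching time by `stretch_factor` (M) divides every frequency by M,
    so both the bandwidth and the carrier frequency shrink by M, while the
    slow ADC's samples are effectively M times denser in original time.
    """
    stretched_bw = bandwidth_hz / stretch_factor
    stretched_carrier = carrier_hz / stretch_factor
    effective_rate = adc_rate_sps * stretch_factor
    # Nyquist check on the slowed-down signal, assuming it is baseband.
    nyquist_ok = adc_rate_sps >= 2 * stretched_bw
    return {
        "stretched_bandwidth_hz": stretched_bw,
        "stretched_carrier_hz": stretched_carrier,
        "effective_rate_sps": effective_rate,
        "nyquist_ok": nyquist_ok,
    }


# Hypothetical numbers: a 50 GHz baseband signal, M = 10, and a 12 GS/s ADC.
print(stretched_requirements(50e9, 0.0, 10, 12e9))
```

With these numbers the ADC only has to handle a 5 GHz signal, yet the output corresponds to 120 GS/s on the original time scale; with M = 2 the same ADC would violate the Nyquist criterion.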

Operation principle

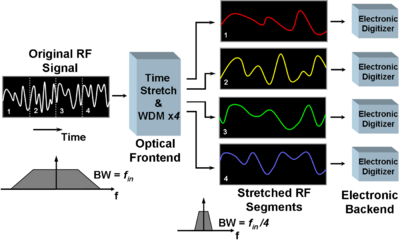

The time-stretch processor, which is generally an optical frontend, stretches the signal in time. It also divides the signal into multiple segments using a filter, for example a wavelength-division multiplexing (WDM) filter, so that the stretched replicas of the original analog signal segments do not overlap each other in time. The time-stretched, slowed-down segments are then converted into digital samples by slow electronic ADCs. Finally, a digital signal processor (DSP) collects these samples and rearranges them so that the output data are a digital representation of the original analog signal. The DSP also removes any distortion added to the signal by the time-stretch processor.
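The segment-stretch-reassemble flow above can be simulated in an idealized form. In the sketch below (all names are hypothetical), each segment is assigned to its own channel, stretched by M, sampled by a slow ADC, and scaled by a per-channel gain that stands in for front-end distortion; the gains are assumed to be known from calibration. The "DSP" step maps stretched time back to original time, divides out the gain, and interleaves the channels chronologically. Real systems must also handle non-ideal effects such as nonlinear time-to-wavelength mapping, which this sketch ignores.

```python
import math


def time_stretch_capture(analog, seg_len_s, n_segments, stretch, adc_rate_sps, channel_gains):
    """Simulate segmented time-stretch capture and DSP reassembly (idealized).

    analog        -- callable giving the input waveform at time t (seconds)
    seg_len_s     -- duration of each segment before stretching
    stretch       -- stretch factor M applied to every segment
    channel_gains -- per-channel gain standing in for front-end distortion,
                     assumed known from calibration (a hypothetical model)
    """
    samples_per_segment = int(round(seg_len_s * stretch * adc_rate_sps))
    captured = []  # (channel, time on the stretched axis, raw ADC value)
    for ch in range(n_segments):
        seg_start = ch * seg_len_s
        for j in range(samples_per_segment):
            t_stretched = j / adc_rate_sps
            # The slow ADC sees this segment slowed down by M.
            raw = channel_gains[ch] * analog(seg_start + t_stretched / stretch)
            captured.append((ch, t_stretched, raw))

    # DSP: map stretched time back to original time, undo the channel gain,
    # and interleave all channels in chronological order.
    output = []
    for ch, t_stretched, raw in captured:
        t_original = ch * seg_len_s + t_stretched / stretch
        output.append((t_original, raw / channel_gains[ch]))
    output.sort()
    return output


def tone(t):
    return math.sin(2 * math.pi * 40e9 * t)  # 40 GHz test tone


# Four 1 ns segments, M = 10, 10 GS/s ADCs: effective sample spacing is 10 ps.
samples = time_stretch_capture(tone, 1e-9, 4, 10, 10e9, [1.0, 0.8, 1.2, 0.9])
print(len(samples), samples[1][0] - samples[0][0])
```

Each 10 GS/s channel only sees a 4 GHz tone after stretching, yet the reassembled record samples the 40 GHz input every 10 ps.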

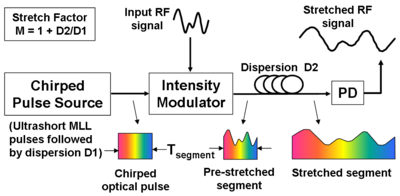

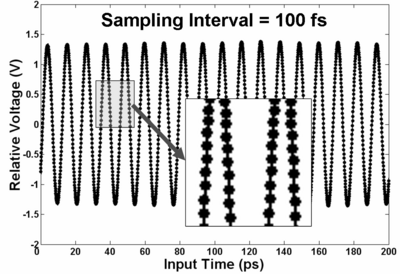

An optical front-end is commonly used to perform the time stretch. An ultrashort optical pulse (typically 100 to 200 femtoseconds long) with a broad optical bandwidth, also called a supercontinuum pulse, is stretched in time by passing it through a highly dispersive medium (such as dispersion-compensating fiber). Because different wavelengths travel at different speeds in the dispersive medium, this produces an approximately linear time-to-wavelength mapping in the stretched pulse. The resulting pulse is called a chirped pulse, since its frequency changes with time; it is typically a few nanoseconds long. The analog signal is modulated onto this chirped pulse using an electro-optic intensity modulator. The modulated pulse is then stretched further in a second dispersive medium with a much larger dispersion. Finally, a photodetector converts the optical pulse to the electrical domain, giving a stretched replica of the original analog signal.
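The two-stage dispersion sets both the chirped-pulse duration and the stretch factor. To first order, a pulse of optical bandwidth Δλ that accumulates a total dispersion D (in ps/nm) lasts about |D|·Δλ. A modulation feature riding on wavelength λ sits at a delay proportional to D1 at the modulator and to D1 + D2 at the photodetector, so time intervals grow by M = (D1 + D2)/D1 = 1 + D2/D1. The sketch below assumes same-sign dispersions and uses hypothetical values.

```python
def chirp_duration_ps(total_dispersion_ps_per_nm, optical_bandwidth_nm):
    """First-order pulse duration after dispersion: |D| * delta_lambda."""
    return abs(total_dispersion_ps_per_nm) * optical_bandwidth_nm


def stretch_factor(d1_ps_per_nm, d2_ps_per_nm):
    """M = (D1 + D2) / D1 = 1 + D2 / D1, assuming D1 and D2 share a sign."""
    return (d1_ps_per_nm + d2_ps_per_nm) / d1_ps_per_nm


# Hypothetical values: 20 nm of optical bandwidth, D1 = -100 ps/nm, D2 = -1000 ps/nm.
t1 = chirp_duration_ps(-100, 20)          # chirped pulse at the modulator: 2000 ps
m = stretch_factor(-100, -1000)           # 11.0
t2 = chirp_duration_ps(-100 - 1000, 20)   # after both dispersive media: 22000 ps
print(t1, m, t2, t2 / t1)                 # 2000 11.0 22000 11.0
```

The ratio of the final to the initial chirped-pulse duration equals M, which is one way to see why the second medium needs a much larger dispersion than the first.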

For continuous operation, a train of supercontinuum pulses is used. The chirped pulses arriving at the electro-optic modulator must be long enough that the trailing edge of one pulse overlaps the leading edge of the next. For segmentation, optical filters at the output of the second dispersive medium separate the signal into multiple wavelength channels. Each channel has its own photodetector and backend electronic ADC. Finally, the outputs of these ADCs are passed to the DSP, which generates the desired digital output.
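The non-overlap requirement can be turned into a minimum channel count. This sketch assumes equal-width wavelength channels and a perfectly linear time-to-wavelength mapping (both simplifications; the function name is hypothetical). Each of N channels carries a slice T1/N of the chirp window T1, which lasts M·T1/N after stretching; since pulses repeat every T_rep, the condition is M·T1/N ≤ T_rep, i.e. N ≥ M·T1/T_rep. Times are given in integer picoseconds so the ceiling is exact.

```python
import math


def min_wdm_channels(chirp_duration_ps, rep_period_ps, stretch):
    """Smallest channel count N so that stretched slices in a channel do not overlap.

    Each of N equal-width channels carries chirp_duration / N of the chirp
    window at the modulator, i.e. stretch * chirp_duration / N after the second
    dispersive medium, and consecutive pulses arrive every rep_period.
    """
    if chirp_duration_ps < rep_period_ps:
        # Consecutive chirped pulses would leave gaps at the modulator.
        raise ValueError("chirped pulses do not overlap: capture has gaps")
    return math.ceil(stretch * chirp_duration_ps / rep_period_ps)


# Hypothetical: 20 ns pulse period, 25 ns chirped pulses (some overlap), M = 10.
print(min_wdm_channels(25_000, 20_000, 10))  # 13
```

Larger stretch factors therefore trade ADC speed for channel count: each channel gets slower, but more of them are needed.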

Impulse response of the photonic time-stretch (PTS) system

The PTS processor is based on specialized analog optical (or microwave photonic) fiber links,[5] such as those used in cable TV distribution. Whereas fiber dispersion is a nuisance in conventional analog optical links, the time-stretch technique exploits it to slow down the electrical waveform in the optical domain. In a cable TV link the light source is a continuous-wave (CW) laser; in PTS it is a chirped pulse laser.

In a conventional analog optical link, dispersion causes the upper and lower modulation sidebands, at f_optical ± f_electrical, to slip in relative phase. At certain frequencies, their beats with the optical carrier interfere destructively, creating nulls in the frequency response of the system. For practical systems the first null lies at tens of GHz, which is sufficient for most electrical signals of interest. Although this dispersion penalty may seem to place a fundamental limit on the impulse response (or bandwidth) of the time-stretch system, it can be eliminated. The dispersion penalty vanishes with single-sideband modulation.[5] Alternatively, the modulator’s secondary (inverse) output port can be used to eliminate the dispersion penalty,[5] much as two antennas can eliminate spatial nulls in wireless communication (hence the two antennas on top of a WiFi access point). This configuration is termed phase diversity.[6] Combining the complementary outputs with a maximal ratio combining (MRC) algorithm yields a transfer function that is flat in the frequency domain. The impulse response (bandwidth) of a time-stretch system is therefore limited only by the bandwidth of the electro-optic modulator, about 120 GHz, which is adequate for capturing most electrical waveforms of interest.
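For a conventional double-sideband intensity-modulated link with a chirp-free modulator, both sidebands pick up the same dispersive phase β2·L·ω²/2, and the detected RF response is proportional to cos(β2·L·ω²/2); the first null falls at f = 1/√(4π|β2|L). The sketch below evaluates this for a typical standard-fiber β2 near 1550 nm and a hypothetical 10 km link. The phase-diversity part is deliberately idealized: it *assumes* the complementary port behaves like the quadrature (sine) of the same phase, so its nulls fall where the main port peaks, and then applies MRC for two branches with equal noise, whose amplitude gain is √(h1² + h2²). This illustrates why MRC can flatten the response; it is not a model of a specific modulator.

```python
import math


def dsb_link_response(f_hz, beta2_s2_per_km, length_km):
    """Normalized RF response cos(beta2 * L * omega^2 / 2) of a dispersive
    double-sideband intensity-modulated link (chirp-free modulator assumed)."""
    omega = 2 * math.pi * f_hz
    return math.cos(beta2_s2_per_km * length_km * omega ** 2 / 2)


def first_null_hz(beta2_s2_per_km, length_km):
    """Frequency where |beta2| * L * omega^2 / 2 = pi / 2."""
    return 1 / math.sqrt(4 * math.pi * abs(beta2_s2_per_km) * length_km)


def idealized_mrc(h1, h2):
    """Amplitude gain of maximal ratio combining two equal-noise branches."""
    return math.hypot(h1, h2)


BETA2 = -21.7e-24   # s^2/km, a typical value for standard fiber near 1550 nm
L_KM = 10.0         # hypothetical link length
f0 = first_null_hz(BETA2, L_KM)
print(f"first null near {f0 / 1e9:.1f} GHz")  # about 19 GHz for these values

for f in (5e9, f0, 30e9):
    phase = BETA2 * L_KM * (2 * math.pi * f) ** 2 / 2
    h_main = dsb_link_response(f, BETA2, L_KM)   # equals cos(phase)
    h_comp = math.sin(phase)                      # assumed complementary response
    print(f"{f / 1e9:5.1f} GHz  main={h_main:+.3f}  combined={idealized_mrc(h_main, h_comp):.3f}")
```

The main port alone drops to zero at the null, while the idealized combined response stays at 1.000 at every frequency.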

Extremely large stretch factors can be obtained using long lengths of fiber, but at the cost of greater loss. This problem has been overcome by employing Raman amplification within the dispersive fiber itself, leading to the world’s fastest real-time digitizer.[7] PTS has also been used to capture very high frequency signals with record resolution in the 10-GHz bandwidth range.[8]

Comparison with time lens imaging

Another technique, temporal imaging with a time lens, can also be used to slow down signals (mostly optical ones) in time. The time-lens concept relies on the mathematical equivalence between spatial diffraction and temporal dispersion, the so-called space-time duality.[9] A lens held at a fixed distance from an object produces a magnified image. The lens imparts a phase shift that is quadratic in the transverse spatial coordinate; combined with free-space propagation (object to lens, lens to eye), this produces a magnified image. Owing to the mathematical equivalence between paraxial diffraction and temporal dispersion, an optical waveform can be temporally imaged by a three-step process: dispersing it in time, subjecting it to a phase shift that is quadratic in time (the time lens itself), and dispersing it again. In theory, a focused, aberration-free image is obtained when the two dispersive elements and the phase shift satisfy the temporal equivalent of the classic lens equation. Alternatively, the time lens can be used without the second dispersive element to transfer the waveform’s temporal profile to the spectral domain, analogous to the way an ordinary lens produces the spatial Fourier transform of an object at its focal plane.[10]
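The temporal lens equation can be written in a form that mirrors the thin-lens law 1/s_o + 1/s_i = 1/f. Sign conventions differ across the time-lens literature, so the following is one common convention rather than the only one: φ1 and φ2 are the group-delay dispersions (β2·L) of the input and output dispersive elements, and the time lens imposes a quadratic temporal phase t²/(2φ_f), where φ_f plays the role of a focal GDD. The worked line shows that choosing φ2 = 10φ1 fixes the required lens focal GDD and gives a tenfold, time-reversed magnification.

```latex
\frac{1}{\phi_1} + \frac{1}{\phi_2} = \frac{1}{\phi_f},
\qquad
M_{\mathrm{TL}} = -\frac{\phi_2}{\phi_1}

\phi_2 = 10\,\phi_1
\;\Longrightarrow\;
\phi_f = \frac{\phi_1 \phi_2}{\phi_1 + \phi_2} = \frac{10}{11}\,\phi_1,
\qquad
M_{\mathrm{TL}} = -10
```

If the three quantities do not satisfy the first relation, the output is a defocused (temporally blurred) image. PTS has no corresponding constraint.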

In contrast to the time-lens approach, PTS is not based on the space-time duality: there is no lens equation that must be satisfied to obtain an error-free slowed-down version of the input waveform. The time-stretch technique also supports continuous-time acquisition, a feature needed for mainstream oscilloscope applications.

Another important difference between the two techniques is that the time lens requires the input signal to be subjected to a large amount of dispersion before further processing. For electrical waveforms, electronic devices with the required characteristics, namely (1) a high dispersion-to-loss ratio, (2) uniform dispersion, and (3) broad bandwidth, do not exist. This makes the time lens unsuitable for slowing down wideband electrical waveforms. PTS, in contrast, has no such requirement. It was developed specifically to slow down electrical waveforms and enable high-speed digitizers.

Relation to phase stretch transform

The phase stretch transform (PST) is a computational approach to signal and image processing. One of its uses is feature detection and classification. PST is a spin-off from research on the time stretch dispersive Fourier transform. It transforms an image by emulating propagation through a diffractive medium with an engineered 3D dispersive property (refractive index).
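A one-dimensional toy version makes the idea concrete. This sketch follows our reading of published PST descriptions and is not a reference implementation. The signal's spectrum is multiplied by a Gaussian low-pass "localization" filter and by a warped phase kernel S·[Wk·arctan(Wk) − ½ln(1 + (Wk)²)], normalized to its value at the highest frequency, and the phase of the back-transformed signal serves as the feature map. The parameter names and values (`strength`, `warp`, `lpf_sigma`) are hypothetical demo choices, a naive DFT keeps the example dependency-free, and practical PST works on 2D images with additional thresholding steps.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform; fine for short demo signals."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(v * cmath.exp(sign * 2j * math.pi * k * m / n)
                  for m, v in enumerate(values))
        out.append(acc / n if inverse else acc)
    return out


def pst_1d(signal, strength=0.5, warp=20.0, lpf_sigma=0.5):
    """1D phase-stretch-style feature map (illustrative sketch).

    strength (S), warp (W) and lpf_sigma are hypothetical demo parameters.
    """
    n = len(signal)
    # Signed, normalized frequency of each DFT bin, in [-0.5, 0.5).
    freqs = [(k if k < n // 2 else k - n) / n for k in range(n)]

    def warped_phase(k):
        wk = warp * abs(k)
        return wk * math.atan(wk) - 0.5 * math.log(1.0 + wk * wk)

    phase_max = warped_phase(0.5)
    spectrum = dft(signal)
    shaped = []
    for k, c in zip(freqs, spectrum):
        localization = math.exp(-(k / lpf_sigma) ** 2)         # Gaussian low-pass
        kernel_phase = strength * warped_phase(k) / phase_max  # warped, nonlinear phase
        shaped.append(c * localization * cmath.exp(-1j * kernel_phase))
    # The feature map is the phase of the back-transformed signal.
    return [cmath.phase(z) for z in dft(shaped, inverse=True)]


step = [1.0] * 32 + [2.0] * 32
features = pst_1d(step)
peak = max(range(len(features)), key=lambda i: abs(features[i]))
print(peak)  # lands next to an edge (index 32, or index 0 via DFT wrap-around)
```

Because the kernel leaves zero frequency untouched and adds phase mainly at high frequencies, flat regions come out with nearly zero phase while abrupt transitions produce a phase peak.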

Application to imaging and spectroscopy

In addition to wideband A/D conversion, photonic time-stretch (PTS) is also an enabling technology for high-throughput real-time instrumentation such as imaging[11] and spectroscopy.[12][13] The first artificial-intelligence-assisted high-speed phase microscope was demonstrated to improve the accuracy of distinguishing cancer cells from blood cells through simultaneous measurement of phase and intensity spatial profiles.[14] The world's fastest optical imaging method, serial time-encoded amplified microscopy (STEAM), uses PTS technology to acquire images with a single-pixel photodetector and a commercial ADC. Wavelength-time spectroscopy, which also relies on the photonic time-stretch technique, permits real-time single-shot measurement of rapidly evolving or fluctuating spectra.
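In wavelength-time spectroscopy, the dispersive mapping is read in reverse: to first order, a sample at time t in the stretched pulse corresponds to wavelength λ = λ0 + (t − t0)/D, where D is the total dispersion in ps/nm, and the detection time resolution δt sets a spectral resolution of about δt/|D|. The sketch below uses hypothetical values and ignores other resolution limits, such as whether the dispersion is large enough relative to the pulse's own duration for the linear mapping to hold.

```python
def time_to_wavelength_nm(times_ps, total_dispersion_ps_per_nm, center_wavelength_nm,
                          center_time_ps=0.0):
    """First-order time-to-wavelength mapping: lambda = lambda0 + (t - t0) / D."""
    return [center_wavelength_nm + (t - center_time_ps) / total_dispersion_ps_per_nm
            for t in times_ps]


def time_limited_resolution_nm(time_resolution_ps, total_dispersion_ps_per_nm):
    """Spectral resolution set only by the detection time resolution: dt / |D|."""
    return time_resolution_ps / abs(total_dispersion_ps_per_nm)


# Hypothetical values: 1000 ps/nm of total dispersion centered at 1550 nm.
print(time_to_wavelength_nm([-1000.0, 0.0, 1000.0], 1000.0, 1550.0))  # [1549.0, 1550.0, 1551.0]
print(time_limited_resolution_nm(50.0, 1000.0))                        # 0.05
```

The sign of D encodes whether longer wavelengths arrive later (D > 0) or earlier (D < 0), so the same function covers both cases.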

Time stretch quantitative phase imaging (TS-QPI) is an imaging technique based on time-stretch technology for simultaneous measurement of phase and intensity spatial profiles. In time-stretch imaging, the object’s spatial information is encoded in the spectrum of laser pulses within a sub-nanosecond pulse duration. Each pulse, representing one frame of the camera, is then stretched in time so that an electronic analog-to-digital converter (ADC) can digitize it in real time. The ultrafast pulsed illumination freezes the motion of fast-flowing cells or particles, giving blur-free images.[15][16]
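The "one pulse per frame" scheme leads to a simple budget: the frame rate equals the laser repetition rate, the number of samples per frame is the stretched pulse duration times the ADC rate, the stretched frame must fit within the pulse period, and motion blur is roughly flow speed times illumination duration. This back-of-envelope sketch uses hypothetical numbers and a hypothetical function name; it ignores optical resolution limits, which also bound the useful pixel count.

```python
def time_stretch_imaging_budget(rep_rate_hz, stretched_pulse_s, adc_rate_sps,
                                illumination_s, flow_speed_m_per_s):
    """Back-of-envelope numbers for pulse-per-frame time-stretch imaging."""
    return {
        # One laser pulse per frame, so the frame rate equals the repetition rate.
        "frames_per_s": rep_rate_hz,
        # Samples the ADC records across one stretched pulse (one frame).
        "samples_per_frame": round(stretched_pulse_s * adc_rate_sps),
        # Stretched frames must fit inside the pulse period to avoid overlap.
        "fits_in_period": stretched_pulse_s <= 1.0 / rep_rate_hz,
        # Motion blur: distance the object moves during the illumination.
        "motion_blur_m": flow_speed_m_per_s * illumination_s,
    }


# Hypothetical: 50 MHz pulses, 10 ns stretched frames, 50 GS/s ADC,
# 0.5 ns illumination, cells flowing at 2 m/s.
print(time_stretch_imaging_budget(50e6, 10e-9, 50e9, 0.5e-9, 2.0))
```

With these values, a cell moving at 2 m/s travels only about 1 nm during the illumination, far below optical resolution, which is why the images appear frozen.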

References

- [1] A. S. Bhushan, F. Coppinger, and B. Jalali, "Time-stretched analogue-to-digital conversion," Electronics Letters, vol. 34, no. 9, pp. 839–841, April 1998.

- [2] A. Fard, S. Gupta, and B. Jalali, "Photonic time-stretch digitizer and its extension to real-time spectroscopy and imaging," Laser & Photonics Reviews, vol. 7, no. 2, pp. 207–263, March 2013.

- [3] Y. Han and B. Jalali, "Photonic Time-Stretched Analog-to-Digital Converter: Fundamental Concepts and Practical Considerations," Journal of Lightwave Technology, vol. 21, no. 12, pp. 3085–3103, Dec. 2003.

- [4] A. Mahjoubfar, D. V. Churkin, S. Barland, N. Broderick, S. K. Turitsyn, and B. Jalali, "Time stretch and its applications," Nature Photonics, vol. 11, no. 6, pp. 341–351, June 2017. doi:10.1038/nphoton.2017.76. ISSN 1749-4885. Bibcode: 2017NaPho..11..341M.

- [5] J. Capmany and D. Novak, "Microwave photonics combines two worlds," Nature Photonics, vol. 1, pp. 319–330, 2007.

- [6] Y. Han, O. Boyraz, and B. Jalali, "Ultrawide-Band Photonic Time-Stretch A/D Converter Employing Phase Diversity," IEEE Transactions on Microwave Theory and Techniques, vol. 53, no. 4, April 2005.

- [7] J. Chou, O. Boyraz, D. Solli, and B. Jalali, "Femtosecond real-time single-shot digitizer," Applied Physics Letters, vol. 91, 161105, 2007.

- [8] S. Gupta and B. Jalali, "Time-warp correction and calibration in photonic time-stretch analog-to-digital converter," Optics Letters, vol. 33, pp. 2674–2676, 2008.

- [9] B. H. Kolner and M. Nazarathy, "Temporal imaging with a time lens," Optics Letters, vol. 14, pp. 630–632, 1989.

- [10] J. W. Goodman, Introduction to Fourier Optics, McGraw-Hill, 1968.

- [11] K. Goda, K. K. Tsia, and B. Jalali, "Serial time-encoded amplified imaging for real-time observation of fast dynamic phenomena," Nature, vol. 458, pp. 1145–1149, 2009.

- [12] D. R. Solli, J. Chou, and B. Jalali, "Amplified wavelength–time transformation for real-time spectroscopy," Nature Photonics, vol. 2, pp. 48–51, 2008.

- [13] J. Chou, D. Solli, and B. Jalali, "Real-time spectroscopy with subgigahertz resolution using amplified dispersive Fourier transformation," Applied Physics Letters, vol. 92, 111102, 2008.

- [14] C. Chen, A. Mahjoubfar, and B. Jalali, "Deep Learning in Label-free Cell Classification," Scientific Reports, vol. 6, 21471, 2016. doi:10.1038/srep21471.

- [15] C. L. Chen, A. Mahjoubfar, L.-C. Tai, I. K. Blaby, A. Huang, K. R. Niazi, and B. Jalali, "Deep Learning in Label-free Cell Classification," Scientific Reports, vol. 6, 21471, 2016. doi:10.1038/srep21471. PMID 26975219. Bibcode: 2016NatSR...621471C. Published under CC BY 4.0 licensing.

- [16] S. Michaud, "Leveraging Big Data for Cell Imaging," Optics & Photonics News, The Optical Society, 5 April 2016. http://www.osa-opn.org/home/newsroom/2016/april/leveraging_big_data_for_cell_imaging/

Further reading

- G. C. Valley, "Photonic analog-to-digital converters," Optics Express, vol. 15, no. 5, pp. 1955–1982, March 2007.

- Photonic Bandwidth Compression for Instantaneous Wideband A/D Conversion (PHOBIAC) project.

- Short time Fourier transform for time-frequency analysis of ultrawideband signals