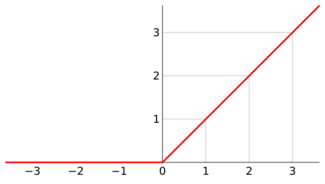

Ramp function

The ramp function is a unary real function whose graph is shaped like a ramp. It can be expressed by numerous definitions, for example "0 for negative inputs, output equals input for non-negative inputs". The term "ramp" is also used for other functions obtained by scaling and shifting; the function in this article is the unit ramp function (slope 1, starting at 0).

In mathematics, the ramp function is also known as the positive part.

In machine learning, it is commonly known as the ReLU (rectified linear unit) activation function[1][2] or as a rectifier, by analogy with half-wave rectification in electrical engineering. In statistics (when used as a likelihood function), it is known as a tobit model.

This function has numerous applications in mathematics and engineering and goes by various names depending on the context. Differentiable variants of the ramp function also exist, such as the softplus function $\ln(1 + e^x)$.

Definitions

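The ramp function ($R \colon \mathbb{R} \to \mathbb{R}_0^+$) may be defined analytically in several ways. Possible definitions are:

- A piecewise function: $R(x) := \begin{cases} x, & x \ge 0, \\ 0, & x < 0. \end{cases}$

- Using the Iverson bracket notation: $R(x) := x \cdot [x \ge 0]$ or $R(x) := x \cdot [x > 0]$

- The max function: $R(x) := \max(x, 0)$

- The mean of an independent variable and its absolute value (a straight line with unity gradient and its modulus): $R(x) := \dfrac{x + |x|}{2}$. This can be derived from the identity $\max(a, b) = \dfrac{a + b + |a - b|}{2}$ by setting $a = x$ and $b = 0$.

- The Heaviside step function multiplied by a straight line with unity gradient: $R(x) := x\,H(x)$

- The convolution of the Heaviside step function with itself: $R(x) := (H * H)(x) = \int_{-\infty}^{\infty} H(\xi)\,H(x - \xi)\,d\xi$

- The integral of the Heaviside step function:[3] $R(x) := \int_{-\infty}^{x} H(\xi)\,d\xi$

- Macaulay brackets: $R(x) := \langle x \rangle$

- The positive part of the identity function: $R(x) := x^+$

- As a limit function: $R(x) := \lim_{a \to \infty} \dfrac{1}{a} \ln\!\left(1 + e^{a x}\right)$

The ramp function can be approximated as closely as desired by taking the positive value $a > 0$ sufficiently large.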
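The definitions above can be compared numerically. The following is a minimal Python sketch (the function names are illustrative, not from any library) that checks the piecewise, max, mean and Heaviside-product definitions agree on sample points, and evaluates the limit definition $\frac{1}{a}\ln(1 + e^{ax})$ at finite $a$. Its error is $\frac{1}{a}\ln(1 + e^{-a|x|}) \le \frac{\ln 2}{a}$, largest at $x = 0$. Taking $H(0) = 1$ is an assumption; it does not affect $x\,H(x)$.

```python
import math


def ramp_piecewise(x: float) -> float:
    """Piecewise definition: x for x >= 0, otherwise 0."""
    return x if x >= 0 else 0.0


def ramp_max(x: float) -> float:
    """Max definition: max(x, 0)."""
    return max(x, 0.0)


def ramp_mean(x: float) -> float:
    """Mean of x and |x|: (x + |x|) / 2."""
    return (x + abs(x)) / 2


def heaviside(x: float) -> float:
    """Heaviside step H(x). Assumption: H(0) = 1 (conventions differ),
    which is harmless here because x * H(x) is 0 at x = 0 either way."""
    return 1.0 if x >= 0 else 0.0


def ramp_heaviside(x: float) -> float:
    """Heaviside step multiplied by the unit-slope line: x * H(x)."""
    return x * heaviside(x)


def ramp_softplus_limit(x: float, a: float) -> float:
    """Limit definition evaluated at a finite a > 0: (1/a) * ln(1 + e^(a x))."""
    t = a * x
    # For t > 0 use ln(1 + e^t) = t + ln(1 + e^-t) so math.exp never overflows.
    if t > 0:
        return (t + math.log1p(math.exp(-t))) / a
    return math.log1p(math.exp(t)) / a


if __name__ == "__main__":
    for x in (-3.0, -0.5, 0.0, 0.25, 2.0):
        exact = ramp_piecewise(x)
        # The closed-form definitions agree on every sample.
        assert exact == ramp_max(x) == ramp_mean(x) == ramp_heaviside(x)
        # The smooth approximation never undershoots R(x) and its error
        # is bounded by ln(2) / a, so it shrinks as a grows.
        for a in (1.0, 10.0, 100.0):
            error = ramp_softplus_limit(x, a) - exact
            assert 0.0 <= error <= math.log(2) / a + 1e-12
            print(f"x={x:5.2f}  a={a:6.1f}  error={error:.2e}")
```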

Applications

The ramp function has numerous applications in engineering, such as in the theory of digital signal processing.

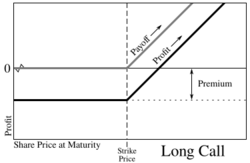

In finance, the payoff at expiry of a call option is a ramp shifted by the strike price: for underlying price $S$ and strike $K$ it is $R(S - K) = \max(S - K, 0)$. Horizontally flipping the ramp yields the payoff of a put option, $R(K - S)$, while vertically flipping it (taking the negative) corresponds to selling, or being "short", an option. In finance the shape is widely called a "hockey stick", because it resembles an ice hockey stick.
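The ramp view of option payoffs can be sketched in a few lines of Python. This is a minimal illustration that ignores the option premium and models payoff at expiry only; the function names and the strike of 100 are hypothetical.

```python
def ramp(x: float) -> float:
    """Unit ramp R(x) = max(x, 0)."""
    return max(x, 0.0)


def long_call_payoff(spot: float, strike: float) -> float:
    """Call payoff at expiry: the ramp shifted by the strike, R(S - K)."""
    return ramp(spot - strike)


def long_put_payoff(spot: float, strike: float) -> float:
    """Put payoff at expiry: the horizontally flipped ramp, R(K - S)."""
    return ramp(strike - spot)


def short_payoff(long_payoff: float) -> float:
    """Selling ("shorting") an option flips its payoff vertically."""
    return -long_payoff


if __name__ == "__main__":
    strike = 100.0  # hypothetical strike price
    for spot in (80.0, 100.0, 120.0):
        call = long_call_payoff(spot, strike)
        put = long_put_payoff(spot, strike)
        print(f"S={spot:6.1f}  call={call:5.1f}  put={put:5.1f}")
```

Subtracting the put payoff from the call payoff gives $S - K$ at every spot price, which is the ramp identity $R(x) - R(-x) = x$ with $x = S - K$.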

In statistics, the hinge functions of multivariate adaptive regression splines (MARS), of the form $\max(0, x - c)$ or $\max(0, c - x)$ for a knot $c$, are ramps and are used as basis functions to build regression models.
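A MARS-style model is a weighted sum of such ramps. The sketch below only evaluates a model of that form; it is not a MARS fitting procedure (knot selection and coefficient estimation are omitted), and the intercept, coefficients and knot are hypothetical values chosen for illustration.

```python
from typing import List, Tuple

# Each term is (coefficient, knot, side), where side is "right" or "left".
Term = Tuple[float, float, str]


def hinge_right(x: float, knot: float) -> float:
    """max(0, x - knot): a ramp shifted to start at the knot."""
    return max(0.0, x - knot)


def hinge_left(x: float, knot: float) -> float:
    """max(0, knot - x): the mirrored ramp, active left of the knot."""
    return max(0.0, knot - x)


def predict(x: float, intercept: float, terms: List[Term]) -> float:
    """Evaluate an additive model built from hinge (ramp) basis functions."""
    total = intercept
    for coef, knot, side in terms:
        basis = hinge_right(x, knot) if side == "right" else hinge_left(x, knot)
        total += coef * basis
    return total


if __name__ == "__main__":
    # Hypothetical model with one knot at x = 3:
    # slope +2 to the right of the knot and slope +1 to the left.
    intercept = 5.0
    terms: List[Term] = [(2.0, 3.0, "right"), (-1.0, 3.0, "left")]
    for x in (1.0, 3.0, 5.0):
        print(x, predict(x, intercept, terms))
```

Pairing a right and a left hinge at the same knot lets the model change slope at that point while staying continuous, which is why the ramp shape is convenient as a basis function.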

Analytic properties

Non-negativity

In the whole domain the function is non-negative, so its absolute value is itself, i.e. $\forall x \in \mathbb{R}: R(x) \ge 0$ and $|R(x)| = R(x)$.

Proof: by the piecewise definition, $R(x) = x \ge 0$ for $x \ge 0$ (the first quadrant) and $R(x) = 0$ for $x < 0$ (the second quadrant); hence $R$ is non-negative everywhere.

Derivative

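Its derivative is the Heaviside step function: $R'(x) = H(x)$ for $x \neq 0$. At $x = 0$ the left and right derivatives (0 and 1) differ, so $R$ is not differentiable there.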
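The relation $R'(x) = H(x)$ for $x \neq 0$ can be checked with finite differences. In this minimal sketch (function names are illustrative; the step size $h = 10^{-6}$ is an arbitrary choice), the backward and forward difference quotients match $H(x)$ away from the origin and disagree at $x = 0$.

```python
from typing import Callable, Tuple


def ramp(x: float) -> float:
    """Unit ramp R(x) = max(x, 0)."""
    return max(x, 0.0)


def heaviside(x: float) -> float:
    """Heaviside step H(x), used here only for x != 0."""
    return 1.0 if x > 0 else 0.0


def one_sided_slopes(
    f: Callable[[float], float], x: float, h: float = 1e-6
) -> Tuple[float, float]:
    """Backward and forward difference quotients of f at x."""
    backward = (f(x) - f(x - h)) / h
    forward = (f(x + h) - f(x)) / h
    return backward, forward


if __name__ == "__main__":
    for x in (-2.0, -0.3, 0.7, 4.0):
        backward, forward = one_sided_slopes(ramp, x)
        # Away from 0 both quotients agree with H(x) up to rounding error.
        assert abs(backward - heaviside(x)) < 1e-6
        assert abs(forward - heaviside(x)) < 1e-6
    # At 0 the slopes differ: 0 from the left, 1 from the right.
    print(one_sided_slopes(ramp, 0.0))  # prints (0.0, 1.0)
```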

Second derivative

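The ramp function satisfies the differential equation
$$\frac{d^2}{dx^2} R(x - x_0) = \delta(x - x_0),$$
where $\delta(x)$ is the Dirac delta. This means that $R(x)$ is a Green's function for the second derivative operator. Thus, any function $f(x)$ with an integrable second derivative $f''(x)$ satisfies
$$f(x) = f(a) + (x - a)\, f'(a) + \int_a^b R(x - s)\, f''(s)\, ds \quad \text{for } a < x < b.$$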
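The Green's-function identity $f(x) = f(a) + (x - a) f'(a) + \int_a^b R(x - s) f''(s)\, ds$ for $a < x < b$ can be verified numerically. This is a minimal sketch, assuming a composite midpoint rule with 20 000 panels is accurate enough; the test functions $\sin$ and $x^3$ are arbitrary choices.

```python
import math
from typing import Callable


def ramp(x: float) -> float:
    """Unit ramp R(x) = max(x, 0)."""
    return max(x, 0.0)


def reconstruct(
    f: Callable[[float], float],
    df: Callable[[float], float],
    d2f: Callable[[float], float],
    x: float,
    a: float,
    b: float,
    n: int = 20_000,
) -> float:
    """Right-hand side f(a) + (x - a) f'(a) + integral_a^b R(x - s) f''(s) ds,
    with the integral approximated by the composite midpoint rule."""
    h = (b - a) / n
    integral = 0.0
    for i in range(n):
        s = a + (i + 0.5) * h  # midpoint of the i-th panel
        # R(x - s) vanishes for s > x, so only [a, x] contributes.
        integral += ramp(x - s) * d2f(s)
    return f(a) + (x - a) * df(a) + integral * h


if __name__ == "__main__":
    x = 1.3
    approx = reconstruct(math.sin, math.cos, lambda s: -math.sin(s), x, a=0.0, b=2.0)
    print(approx, math.sin(x))
```

The ramp kernel acts as a cut-off: it weights $f''(s)$ by the distance $x - s$ for $s < x$ and ignores everything to the right of $x$, which is why the choice of $b > x$ does not affect the result.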

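Fourier transform

The Fourier transform of the ramp function is
$$\mathcal{F}\{R(x)\}(\nu) = \int_{-\infty}^{\infty} R(x)\, e^{-2\pi i \nu x}\, dx = \frac{i\,\delta'(\nu)}{4\pi} - \frac{1}{4\pi^2 \nu^2},$$
where $\delta(x)$ is the Dirac delta; its derivative $\delta'$ appears in this formula.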

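Laplace transform

The single-sided Laplace transform of $R(x)$ is given as follows:[4]
$$\mathcal{L}\{R(x)\}(s) = \int_0^{\infty} e^{-s x}\, R(x)\, dx = \frac{1}{s^2}, \qquad \operatorname{Re}(s) > 0.$$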
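The result $\mathcal{L}\{R\}(s) = 1/s^2$ can be checked by direct numerical integration for real $s > 0$. This minimal sketch assumes that truncating the infinite integral at $x_{\max} = 60/s$ is acceptable (the neglected tail is $61\,e^{-60}/s^2$, far below the reported precision) and uses the composite midpoint rule; `laplace_of_ramp` is a hypothetical helper name.

```python
import math


def ramp(x: float) -> float:
    """Unit ramp R(x) = max(x, 0)."""
    return max(x, 0.0)


def laplace_of_ramp(s: float, n: int = 100_000) -> float:
    """Approximate the one-sided Laplace transform of R at real s > 0.

    The upper limit is truncated at x_max = 60 / s, where the remaining
    tail of x * e^(-s x) is negligible, and the finite integral is
    evaluated with the composite midpoint rule.
    """
    if s <= 0:
        raise ValueError("this sketch handles real s > 0 only")
    x_max = 60.0 / s
    h = x_max / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h  # midpoint of the i-th panel
        total += math.exp(-s * x) * ramp(x)
    return total * h


if __name__ == "__main__":
    for s in (0.5, 1.0, 2.0, 5.0):
        print(f"s={s}: numeric={laplace_of_ramp(s):.8f}  1/s^2={1 / s**2:.8f}")
```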

Algebraic properties

Iteration invariance

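Every iterate of the ramp function equals the function itself, since
$$R\big(R(x)\big) = R(x),$$
and hence, by induction, $R^n = R$ for every $n \ge 1$.

Proof: using the mean definition, $R\big(R(x)\big) = \dfrac{R(x) + |R(x)|}{2} = \dfrac{R(x) + R(x)}{2} = R(x)$. This applies the non-negativity property $|R(x)| = R(x)$.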
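Iteration invariance (idempotence), $R(R(x)) = R(x)$, and the non-negativity it relies on can be spot-checked directly. The helper `iterate` below is a hypothetical name for repeated function application.

```python
from typing import Callable


def ramp(x: float) -> float:
    """Unit ramp R(x) = max(x, 0)."""
    return max(x, 0.0)


def iterate(f: Callable[[float], float], n: int, x: float) -> float:
    """Apply f to x n times, i.e. compute f^n(x); n = 0 returns x."""
    for _ in range(n):
        x = f(x)
    return x


if __name__ == "__main__":
    for x in (-5.0, -0.01, 0.0, 0.5, 7.0):
        r = ramp(x)
        assert r >= 0 and abs(r) == r  # non-negativity: |R(x)| = R(x)
        assert ramp(r) == r  # R(R(x)) = R(x)
        assert all(iterate(ramp, n, x) == r for n in range(1, 6))
    print("R^n(x) == R(x) for n = 1..5 on all samples")
```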


References

- Brownlee, Jason (8 January 2019). "A Gentle Introduction to the Rectified Linear Unit (ReLU)". https://machinelearningmastery.com/rectified-linear-activation-function-for-deep-learning-neural-networks/

- Liu, Danqing (30 November 2017). "A Practical Guide to ReLU". https://medium.com/@danqing/a-practical-guide-to-relu-b83ca804f1f7

- Weisstein, Eric W. "Ramp Function". http://mathworld.wolfram.com/RampFunction.html

- "The Laplace Transform of Functions". https://lpsa.swarthmore.edu/LaplaceXform/FwdLaplace/LaplaceFuncs.html#Ramp
