Elliptic boundary value problem

In mathematics, an elliptic boundary value problem is a special kind of boundary value problem which can be thought of as the steady state of an evolution problem. For example, the Dirichlet problem for the Laplacian gives the eventual distribution of heat in a room several hours after the heating is turned on.

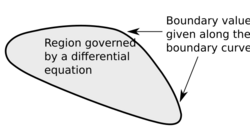

Differential equations describe a large class of natural phenomena, from the heat equation describing the evolution of heat in (for instance) a metal plate, to the Navier–Stokes equations describing the movement of fluids, to Einstein's equations describing the physical universe in a relativistic way. Although all these equations give rise to boundary value problems, they are further subdivided into categories. This is necessary because each category must be analyzed using different techniques. The present article deals with the category of boundary value problems known as linear elliptic problems.

Boundary value problems and partial differential equations specify relations between two or more quantities. For instance, in the heat equation, the rate of change of temperature at a point is related to the difference of temperature between that point and the nearby points so that, over time, the heat flows from hotter points to cooler points. Boundary value problems can involve space, time and other quantities such as temperature, velocity, pressure, magnetic field, etc.

Some problems do not involve time. For instance, if one hangs a clothesline between the house and a tree, then in the absence of wind, the clothesline will not move and will adopt a gentle hanging curved shape known as the catenary.[1] This curved shape can be computed as the solution of a differential equation relating position, tension, angle and gravity, but since the shape does not change over time, there is no time variable.

Elliptic boundary value problems are a class of problems which do not involve the time variable, and instead only depend on space variables.

The main example

In two dimensions, let [math]\displaystyle{ x,y }[/math] be the coordinates. We will use the notation [math]\displaystyle{ u_x, u_{xx} }[/math] for the first and second partial derivatives of [math]\displaystyle{ u }[/math] with respect to [math]\displaystyle{ x }[/math], and a similar notation for [math]\displaystyle{ y }[/math]. We will use the symbols [math]\displaystyle{ D_x }[/math] and [math]\displaystyle{ D_y }[/math] for the partial differential operators in [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math]. The second partial derivatives will be denoted [math]\displaystyle{ D_x^2 }[/math] and [math]\displaystyle{ D_y^2 }[/math]. We also define the gradient [math]\displaystyle{ \nabla u = (u_x,u_y) }[/math], the Laplace operator [math]\displaystyle{ \Delta u = u_{xx}+u_{yy} }[/math] and the divergence [math]\displaystyle{ \nabla \cdot (u,v) = u_x + v_y }[/math]. Note from the definitions that [math]\displaystyle{ \Delta u = \nabla \cdot (\nabla u) }[/math].

The main example of an elliptic boundary value problem is the Dirichlet problem for the Laplace operator,

- [math]\displaystyle{ \Delta u = f \text{ in }\Omega, }[/math]

- [math]\displaystyle{ u = 0 \text { on }\partial \Omega; }[/math]

where [math]\displaystyle{ \Omega }[/math] is a region in the plane and [math]\displaystyle{ \partial \Omega }[/math] is the boundary of that region. The function [math]\displaystyle{ f }[/math] is known data and the solution [math]\displaystyle{ u }[/math] is what must be computed. This example has the same essential properties as all other elliptic boundary value problems.

The solution [math]\displaystyle{ u }[/math] can be interpreted as the stationary or limit distribution of heat in a metal plate shaped like [math]\displaystyle{ \Omega }[/math], if this metal plate has its boundary adjacent to ice (which is kept at zero degrees, thus the Dirichlet boundary condition.) The function [math]\displaystyle{ f }[/math] represents the intensity of heat generation at each point in the plate (perhaps there is an electric heater resting on the metal plate, pumping heat into the plate at rate [math]\displaystyle{ f(x) }[/math], which does not vary over time, but may be nonuniform in space on the metal plate.) After waiting for a long time, the temperature distribution in the metal plate will approach [math]\displaystyle{ u }[/math].

Nomenclature

Let [math]\displaystyle{ Lu=a u_{xx} + b u_{yy} }[/math] where [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] are constants. [math]\displaystyle{ L=aD_x^2+bD_y^2 }[/math] is called a second order differential operator. If we formally replace the derivatives [math]\displaystyle{ D_x }[/math] by [math]\displaystyle{ x }[/math] and [math]\displaystyle{ D_y }[/math] by [math]\displaystyle{ y }[/math], we obtain the expression

- [math]\displaystyle{ a x^2 + b y^2 }[/math].

If we set this expression equal to some constant [math]\displaystyle{ k }[/math], then we obtain either an ellipse (if [math]\displaystyle{ a,b,k }[/math] are all the same sign) or a hyperbola (if [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] are of opposite signs.) For that reason, [math]\displaystyle{ L }[/math] is said to be elliptic when [math]\displaystyle{ ab\gt 0 }[/math] and hyperbolic if [math]\displaystyle{ ab\lt 0 }[/math]. Similarly, the operator [math]\displaystyle{ L=D_x+D_y^2 }[/math] leads to a parabola, and so this [math]\displaystyle{ L }[/math] is said to be parabolic.
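The sign test above can be written as a minimal sketch. The function name `classify` and the label `"degenerate"` for the case [math]\displaystyle{ ab=0 }[/math] are illustrative choices, not terminology from this article.

```python
def classify(a: float, b: float) -> str:
    """Classify L = a*D_x^2 + b*D_y^2 by the sign of the product a*b."""
    if a * b > 0:
        return "elliptic"
    if a * b < 0:
        return "hyperbolic"
    # a*b == 0: the symbol a*x^2 + b*y^2 is neither an ellipse nor a hyperbola.
    return "degenerate"


print(classify(1.0, 1.0))   # the Laplace operator D_x^2 + D_y^2
print(classify(1.0, -1.0))  # the operator D_x^2 - D_y^2
```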

We now generalize the notion of ellipticity. While it may not be obvious that our generalization is the right one, it turns out that it does preserve most of the necessary properties for the purpose of analysis.

General linear elliptic boundary value problems of the second degree

Let [math]\displaystyle{ x_1,...,x_n }[/math] be the space variables. Let [math]\displaystyle{ a_{ij}(x), b_i(x), c(x) }[/math] be real valued functions of [math]\displaystyle{ x=(x_1,...,x_n) }[/math]. Let [math]\displaystyle{ L }[/math] be a second degree linear operator. That is,

- [math]\displaystyle{ Lu(x)=\sum_{i,j=1}^n (a_{ij} (x) u_{x_i})_{x_j} + \sum_{i=1}^n b_i(x) u_{x_i}(x) + c(x) u(x) }[/math] (divergence form).

- [math]\displaystyle{ Lu(x)=\sum_{i,j=1}^n a_{ij} (x) u_{x_i x_j} + \sum_{i=1}^n \tilde b_i(x) u_{x_i}(x) + c(x) u(x) }[/math] (nondivergence form)

We have used the subscript [math]\displaystyle{ \cdot_{x_i} }[/math] to denote the partial derivative with respect to the space variable [math]\displaystyle{ x_i }[/math]. The two formulae are equivalent, provided that

- [math]\displaystyle{ \tilde b_i(x) = b_i(x) + \sum_j a_{ij,x_j}(x) }[/math].
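To see where the extra term comes from, assume the coefficients [math]\displaystyle{ a_{ij} }[/math] are differentiable and expand the leading part of the divergence form with the product rule:

- [math]\displaystyle{ \sum_{i,j=1}^n (a_{ij} u_{x_i})_{x_j} = \sum_{i,j=1}^n a_{ij} u_{x_i x_j} + \sum_{i=1}^n \Big( \sum_{j=1}^n a_{ij,x_j} \Big) u_{x_i}. }[/math]

Adding the first-order term [math]\displaystyle{ \sum_i b_i u_{x_i} }[/math] and collecting the coefficients of [math]\displaystyle{ u_{x_i} }[/math] gives the stated relation between [math]\displaystyle{ \tilde b_i }[/math] and [math]\displaystyle{ b_i }[/math].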

In matrix notation, we can let [math]\displaystyle{ a(x) }[/math] be an [math]\displaystyle{ n \times n }[/math] matrix valued function of [math]\displaystyle{ x }[/math] and [math]\displaystyle{ b(x) }[/math] be an [math]\displaystyle{ n }[/math]-dimensional column vector-valued function of [math]\displaystyle{ x }[/math], and then we may write

- [math]\displaystyle{ Lu = \nabla \cdot (a \nabla u) + b^T \nabla u + c u }[/math] (divergence form).

One may assume, without loss of generality, that the matrix [math]\displaystyle{ a }[/math] is symmetric (that is, for all [math]\displaystyle{ i,j,x }[/math], [math]\displaystyle{ a_{ij}(x)=a_{ji}(x) }[/math]). We make that assumption in the rest of this article.

We say that the operator [math]\displaystyle{ L }[/math] is elliptic if, for some constant [math]\displaystyle{ \alpha\gt 0 }[/math], any of the following equivalent conditions holds:

- [math]\displaystyle{ \lambda_{\min} (a(x)) \gt \alpha \;\;\; \forall x }[/math] (see eigenvalue).

- [math]\displaystyle{ u^T a(x) u \gt \alpha u^T u \;\;\; \forall x, \; \forall u \in \mathbb{R}^n \setminus \{0\} }[/math].

- [math]\displaystyle{ \sum_{i,j=1}^n a_{ij}(x) u_i u_j \gt \alpha \sum_{i=1}^n u_i^2 \;\;\; \forall x, \; \forall u \in \mathbb{R}^n \setminus \{0\} }[/math].
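As a rough numerical illustration of the eigenvalue condition, the sketch below samples a coefficient matrix on a grid and reports the smallest eigenvalue found. The matrix `a_example` and the helper `min_eigenvalue_on_samples` are hypothetical, chosen only for this example. Sampling gives evidence for ellipticity on the sampled points, not a proof for all [math]\displaystyle{ x }[/math].

```python
import numpy as np


def min_eigenvalue_on_samples(a, points):
    """Smallest eigenvalue of the symmetric matrix a(x) over the sample points."""
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
    return min(np.linalg.eigvalsh(a(x))[0] for x in points)


def a_example(x):
    # Hypothetical coefficient matrix, symmetric for every x in the unit square.
    return np.array([[2.0 + x[0], 0.5],
                     [0.5, 1.0 + x[1] ** 2]])


# Sample points on a 21 x 21 grid over the unit square.
grid = np.linspace(0.0, 1.0, 21)
points = [np.array([s, t]) for s in grid for t in grid]

alpha_estimate = min_eigenvalue_on_samples(a_example, points)
print(alpha_estimate)  # positive, so any smaller positive constant works as alpha on the samples
```

Any constant strictly between zero and the printed value then satisfies the strict inequality at every sampled point.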

An elliptic boundary value problem is then a system of equations like

- [math]\displaystyle{ Lu=f \text{ in } \Omega }[/math] (the PDE) and

- [math]\displaystyle{ u=0 \text{ on } \partial \Omega }[/math] (the boundary value).

This particular example is the Dirichlet problem. The Neumann problem is

- [math]\displaystyle{ Lu=f \text{ in } \Omega }[/math] and

- [math]\displaystyle{ u_\nu = g \text{ on } \partial \Omega }[/math]

where [math]\displaystyle{ u_\nu }[/math] is the derivative of [math]\displaystyle{ u }[/math] in the direction of the outwards pointing normal of [math]\displaystyle{ \partial \Omega }[/math]. In general, if [math]\displaystyle{ B }[/math] is any trace operator, one can construct the boundary value problem

- [math]\displaystyle{ Lu=f \text{ in } \Omega }[/math] and

- [math]\displaystyle{ Bu=g \text{ on } \partial \Omega }[/math].

In the rest of this article, we assume that [math]\displaystyle{ L }[/math] is elliptic and that the boundary condition is the Dirichlet condition [math]\displaystyle{ u=0 \text{ on }\partial \Omega }[/math].

Sobolev spaces

The analysis of elliptic boundary value problems requires some fairly sophisticated tools of functional analysis. We require the space [math]\displaystyle{ H^1(\Omega) }[/math], the Sobolev space of "once-differentiable" functions on [math]\displaystyle{ \Omega }[/math], such that both the function [math]\displaystyle{ u }[/math] and its partial derivatives [math]\displaystyle{ u_{x_i} }[/math], [math]\displaystyle{ i=1,\dots,n }[/math] are all square integrable. There is a subtlety here in that the partial derivatives must be defined "in the weak sense" (see the article on Sobolev spaces for details.) The space [math]\displaystyle{ H^1 }[/math] is a Hilbert space, which accounts for much of the ease with which these problems are analyzed.

A detailed discussion of Sobolev spaces is beyond the scope of this article, but we will quote required results as they arise.

Unless otherwise noted, all derivatives in this article are to be interpreted in the weak, Sobolev sense. We use the term "strong derivative" to refer to the classical derivative of calculus. We also specify that the spaces [math]\displaystyle{ C^k }[/math], [math]\displaystyle{ k=0,1,\dots }[/math] consist of functions that are [math]\displaystyle{ k }[/math] times strongly differentiable, and that the [math]\displaystyle{ k }[/math]th derivative is continuous.

Weak or variational formulation

The first step in casting the boundary value problem in the language of Sobolev spaces is to rephrase it in its weak form. Consider the Laplace problem [math]\displaystyle{ \Delta u = f }[/math]. Multiply each side of the equation by a "test function" [math]\displaystyle{ \varphi }[/math] and integrate by parts using Green's theorem to obtain

- [math]\displaystyle{ -\int_\Omega \nabla u \cdot \nabla \varphi + \int_{\partial \Omega} u_\nu \varphi = \int_\Omega f \varphi }[/math].

We will be solving the Dirichlet problem, so that [math]\displaystyle{ u=0\text{ on }\partial \Omega }[/math]. For technical reasons, it is useful to take [math]\displaystyle{ \varphi }[/math] from the same space of functions as [math]\displaystyle{ u }[/math], so we also assume that [math]\displaystyle{ \varphi=0\text{ on }\partial \Omega }[/math]. This gets rid of the [math]\displaystyle{ \int_{\partial \Omega} }[/math] term, yielding

- [math]\displaystyle{ A(u,\varphi) = F(\varphi) }[/math] (*)

where

- [math]\displaystyle{ A(u,\varphi) = \int_\Omega \nabla u \cdot \nabla \varphi }[/math] and

- [math]\displaystyle{ F(\varphi) = -\int_\Omega f \varphi }[/math].

If [math]\displaystyle{ L }[/math] is a general elliptic operator, the same reasoning leads to the bilinear form

- [math]\displaystyle{ A(u,\varphi) = \int_\Omega \nabla u ^T a \nabla \varphi - \int_\Omega b^T \nabla u \varphi - \int_\Omega c u \varphi }[/math].
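As a sketch of that reasoning, assume enough smoothness to integrate by parts, multiply [math]\displaystyle{ Lu=f }[/math] in divergence form by a test function [math]\displaystyle{ \varphi }[/math] that vanishes on [math]\displaystyle{ \partial \Omega }[/math], and apply the divergence theorem to the leading term (here [math]\displaystyle{ \nu }[/math] is the outward normal and the symmetry of [math]\displaystyle{ a }[/math] is used):

- [math]\displaystyle{ \int_\Omega \nabla \cdot (a \nabla u) \, \varphi = -\int_\Omega \nabla u^T a \nabla \varphi + \int_{\partial \Omega} (a \nabla u) \cdot \nu \, \varphi = -\int_\Omega \nabla u^T a \nabla \varphi. }[/math]

The lower order terms are left as they are, so the equation becomes

- [math]\displaystyle{ -\int_\Omega \nabla u^T a \nabla \varphi + \int_\Omega b^T \nabla u \, \varphi + \int_\Omega c u \varphi = \int_\Omega f \varphi, }[/math]

and multiplying by [math]\displaystyle{ -1 }[/math] gives [math]\displaystyle{ A(u,\varphi) = F(\varphi) }[/math] with the same [math]\displaystyle{ F(\varphi) = -\int_\Omega f \varphi }[/math] as in the Laplace case.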

We do not discuss the Neumann problem but note that it is analyzed in a similar way.

Continuous and coercive bilinear forms

The map [math]\displaystyle{ A(u,\varphi) }[/math] is defined on the Sobolev space [math]\displaystyle{ H^1_0\subset H^1 }[/math] of functions which are once differentiable and zero on the boundary [math]\displaystyle{ \partial \Omega }[/math], provided we impose some conditions on [math]\displaystyle{ a,b,c }[/math] and [math]\displaystyle{ \Omega }[/math]. There are many possible choices, but for the purpose of this article, we will assume that

- [math]\displaystyle{ a_{ij}(x) }[/math] is continuously differentiable on [math]\displaystyle{ \bar\Omega }[/math] for [math]\displaystyle{ i,j=1,\dots,n, }[/math]

- [math]\displaystyle{ b_i(x) }[/math] is continuous on [math]\displaystyle{ \bar\Omega }[/math] for [math]\displaystyle{ i=1,\dots,n, }[/math]

- [math]\displaystyle{ c(x) }[/math] is continuous on [math]\displaystyle{ \bar\Omega }[/math] and

- [math]\displaystyle{ \Omega }[/math] is bounded.

The reader may verify that the map [math]\displaystyle{ A(u,\varphi) }[/math] is furthermore bilinear and continuous, and that the map [math]\displaystyle{ F(\varphi) }[/math] is linear in [math]\displaystyle{ \varphi }[/math], and continuous if (for instance) [math]\displaystyle{ f }[/math] is square integrable.

We say that the map [math]\displaystyle{ A }[/math] is coercive if there is an [math]\displaystyle{ \alpha\gt 0 }[/math] such that, for all [math]\displaystyle{ u \in H_0^1(\Omega) }[/math],

- [math]\displaystyle{ A(u,u) \geq \alpha \int_\Omega \nabla u \cdot \nabla u. }[/math]

This is trivially true for the Laplacian (with [math]\displaystyle{ \alpha=1 }[/math]) and is also true for an elliptic operator if we assume [math]\displaystyle{ b = 0 }[/math] and [math]\displaystyle{ c \leq 0 }[/math]. (Recall that [math]\displaystyle{ u^T a u \gt \alpha u^T u }[/math] when [math]\displaystyle{ L }[/math] is elliptic.)
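To spell out the step for an elliptic operator with [math]\displaystyle{ b=0 }[/math] and [math]\displaystyle{ c \leq 0 }[/math], take [math]\displaystyle{ \varphi = u }[/math] in the bilinear form and apply the ellipticity inequality at each point [math]\displaystyle{ x }[/math] to the vector [math]\displaystyle{ \nabla u(x) }[/math]:

- [math]\displaystyle{ A(u,u) = \int_\Omega \nabla u^T a \nabla u - \int_\Omega c u^2 \geq \alpha \int_\Omega \nabla u \cdot \nabla u, }[/math]

where the second integral was dropped because [math]\displaystyle{ -c u^2 \geq 0 }[/math].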

Existence and uniqueness of the weak solution

One may show, via the Lax–Milgram lemma, that whenever [math]\displaystyle{ A(u,\varphi) }[/math] is coercive and [math]\displaystyle{ F(\varphi) }[/math] is continuous, then there exists a unique solution [math]\displaystyle{ u\in H_0^1(\Omega) }[/math] to the weak problem (*).

If further [math]\displaystyle{ A(u,\varphi) }[/math] is symmetric (i.e., [math]\displaystyle{ b=0 }[/math]), one can show the same result using the Riesz representation theorem instead.

This relies on the fact that [math]\displaystyle{ A(u,\varphi) }[/math] forms an inner product on [math]\displaystyle{ H_0^1(\Omega) }[/math], which itself depends on Poincaré's inequality.

Strong solutions

We have shown that there is a [math]\displaystyle{ u\in H_0^1(\Omega) }[/math] which solves the weak system, but we do not know if this [math]\displaystyle{ u }[/math] solves the strong system

- [math]\displaystyle{ Lu=f\text{ in }\Omega, }[/math]

- [math]\displaystyle{ u=0\text{ on }\partial \Omega. }[/math]

Even more vexing is that we are not even sure that [math]\displaystyle{ u }[/math] is twice differentiable, rendering the expressions [math]\displaystyle{ u_{x_i x_j} }[/math] in [math]\displaystyle{ Lu }[/math] apparently meaningless. There are many ways to remedy the situation, the main one being regularity.

Regularity

A regularity theorem for a linear elliptic boundary value problem of the second order takes the form

Theorem If (some condition), then the solution [math]\displaystyle{ u }[/math] is in [math]\displaystyle{ H^2(\Omega) }[/math], the space of "twice differentiable" functions whose second derivatives are square integrable.

There is no known simple condition necessary and sufficient for the conclusion of the theorem to hold, but the following conditions are known to be sufficient:

- The boundary of [math]\displaystyle{ \Omega }[/math] is [math]\displaystyle{ C^2 }[/math], or

- [math]\displaystyle{ \Omega }[/math] is convex.

It may be tempting to infer that if [math]\displaystyle{ \partial \Omega }[/math] is piecewise [math]\displaystyle{ C^2 }[/math] then [math]\displaystyle{ u }[/math] is indeed in [math]\displaystyle{ H^2 }[/math], but that is unfortunately false.

Almost everywhere solutions

In the case that [math]\displaystyle{ u \in H^2(\Omega) }[/math] then the second derivatives of [math]\displaystyle{ u }[/math] are defined almost everywhere, and in that case [math]\displaystyle{ Lu=f }[/math] almost everywhere.

Strong solutions

One may further prove that if the boundary of [math]\displaystyle{ \Omega \subset \mathbb{R}^n }[/math] is a smooth manifold and [math]\displaystyle{ f }[/math] is infinitely differentiable in the strong sense, then [math]\displaystyle{ u }[/math] is also infinitely differentiable in the strong sense. In this case, [math]\displaystyle{ Lu=f }[/math] with the strong definition of the derivative.

The proof of this relies upon an improved regularity theorem that says that if [math]\displaystyle{ \partial \Omega }[/math] is [math]\displaystyle{ C^k }[/math] and [math]\displaystyle{ f \in H^{k-2}(\Omega) }[/math], [math]\displaystyle{ k\geq 2 }[/math], then [math]\displaystyle{ u\in H^k(\Omega) }[/math], together with a Sobolev imbedding theorem saying that functions in [math]\displaystyle{ H^k(\Omega) }[/math] are also in [math]\displaystyle{ C^m(\bar \Omega) }[/math] whenever [math]\displaystyle{ 0 \leq m \lt k-n/2 }[/math].
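The index condition [math]\displaystyle{ 0 \leq m \lt k-n/2 }[/math] is easy to misread, so here is a small sketch that computes the largest admissible [math]\displaystyle{ m }[/math] for given [math]\displaystyle{ k }[/math] and [math]\displaystyle{ n }[/math]. The function name `max_classical_order` is an illustrative choice.

```python
import math


def max_classical_order(k: int, n: int):
    """Largest integer m >= 0 with m < k - n/2, or None if no such m exists."""
    # The inequality is strict, so subtract one from the ceiling of k - n/2.
    m = math.ceil(k - n / 2) - 1
    return m if m >= 0 else None


print(max_classical_order(2, 2))  # 0: in the plane, H^2 functions are continuous
print(max_classical_order(2, 3))  # 0: also in three dimensions
print(max_classical_order(1, 2))  # None: the condition gives no continuity for H^1 in the plane
```

Since [math]\displaystyle{ k - n/2 }[/math] grows without bound as [math]\displaystyle{ k }[/math] increases, applying the regularity theorem for every [math]\displaystyle{ k }[/math] yields membership in every [math]\displaystyle{ C^m }[/math].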

Numerical solutions

Although elliptic problems can be solved explicitly in exceptional circumstances, in general this is not possible. The natural approach is to approximate the elliptic problem with a simpler one and to solve this simpler problem on a computer.

Because of the good properties we have enumerated (as well as many we have not), there are extremely efficient numerical solvers for linear elliptic boundary value problems (see finite element method, finite difference method and spectral method for examples.)
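As one illustration, the following is a minimal finite difference sketch for [math]\displaystyle{ \Delta u = f }[/math] on the unit square with zero Dirichlet data. It uses the standard five-point stencil and dense matrices, which is only reasonable for small grids. The function name `solve_poisson_dirichlet` and the test solution [math]\displaystyle{ \sin(\pi x)\sin(\pi y) }[/math] are assumptions made for this example.

```python
import numpy as np


def solve_poisson_dirichlet(f, n):
    """Solve Laplace(u) = f on the unit square with u = 0 on the boundary.

    Uses the 5-point finite difference stencil on an n x n grid of interior
    points and returns the grid coordinates together with the values of u.
    """
    h = 1.0 / (n + 1)
    x = np.linspace(h, 1.0 - h, n)
    # 1D second-difference matrix approximating d^2/dx^2 with zero boundary values.
    d2 = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
          + np.diag(np.ones(n - 1), -1)) / h**2
    eye = np.eye(n)
    # 2D Laplacian assembled as a Kronecker sum of the 1D operators.
    lap = np.kron(d2, eye) + np.kron(eye, d2)
    X, Y = np.meshgrid(x, x, indexing="ij")
    u = np.linalg.solve(lap, f(X, Y).ravel()).reshape(n, n)
    return X, Y, u


# Manufactured solution: u = sin(pi x) sin(pi y) satisfies Laplace(u) = -2 pi^2 u.
def f_test(X, Y):
    return -2.0 * np.pi**2 * np.sin(np.pi * X) * np.sin(np.pi * Y)


X, Y, u = solve_poisson_dirichlet(f_test, 31)
error = np.abs(u - np.sin(np.pi * X) * np.sin(np.pi * Y)).max()
print(error)  # shrinks roughly like h^2 as the grid is refined
```

For larger grids one would switch to sparse matrices and an iterative or multigrid solver; the dense solve here is chosen for brevity.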

Eigenvalues and eigensolutions

Another Sobolev imbedding theorem states that the inclusion [math]\displaystyle{ H^1\subset L^2 }[/math] is a compact linear map. Equipped with the spectral theorem for compact linear operators, one obtains the following result.

Theorem Assume that [math]\displaystyle{ A(u,\varphi) }[/math] is coercive, continuous and symmetric. The map [math]\displaystyle{ S : f \rightarrow u }[/math] from [math]\displaystyle{ L^2(\Omega) }[/math] to [math]\displaystyle{ L^2(\Omega) }[/math] is a compact linear map. It has a basis of eigenvectors [math]\displaystyle{ u_1, u_2, \dots \in H^1(\Omega) }[/math] and matching eigenvalues [math]\displaystyle{ \lambda_1,\lambda_2,\dots \in \mathbb{R} }[/math] such that

- [math]\displaystyle{ Su_k = \lambda_k u_k, k=1,2,\dots, }[/math]

- [math]\displaystyle{ \lambda_k \rightarrow 0 }[/math] as [math]\displaystyle{ k \rightarrow \infty }[/math],

- [math]\displaystyle{ \lambda_k \gneqq 0\;\;\forall k }[/math],

- [math]\displaystyle{ \int_\Omega u_j u_k = 0 }[/math] whenever [math]\displaystyle{ j \neq k }[/math] and

- [math]\displaystyle{ \int_\Omega u_j u_j = 1 }[/math] for all [math]\displaystyle{ j=1,2,\dots\,. }[/math]
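The behaviour described by the theorem can be observed numerically. The sketch below assumes, purely for illustration, the one-dimensional model problem [math]\displaystyle{ u - u_{xx} = f }[/math] on [math]\displaystyle{ (0,1) }[/math] with zero boundary values, for which the solution operator has eigenvalues [math]\displaystyle{ 1/(1+\pi^2 k^2) }[/math]. It discretizes the operator with finite differences and compares the largest eigenvalues of the discrete solution operator with these values.

```python
import numpy as np

n = 200
h = 1.0 / (n + 1)
# Second-difference matrix approximating d^2/dx^2 with zero boundary values.
d2 = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
      + np.diag(np.ones(n - 1), -1)) / h**2
# Discrete version of the operator u -> u - u''.
op = np.eye(n) - d2
# S is the discrete solution operator f -> u; it is symmetric positive definite.
S = np.linalg.inv(op)
eigenvalues = np.linalg.eigvalsh(S)[::-1]  # sorted in decreasing order
expected = 1.0 / (1.0 + (np.pi * np.arange(1, 4)) ** 2)

print(eigenvalues[:3])
print(expected)
```

The computed eigenvalues are positive and decrease towards zero, in line with the statement of the theorem.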

Series solutions and the importance of eigensolutions

If one has computed the eigenvalues and eigenvectors, then one may find the "explicit" solution of [math]\displaystyle{ Lu=f }[/math],

- [math]\displaystyle{ u=\sum_{k=1}^\infty \hat u(k) u_k }[/math]

via the formula

- [math]\displaystyle{ \hat u(k) = \lambda_k \hat f(k) ,\;\;k=1,2,\dots }[/math]

where

- [math]\displaystyle{ \hat f(k) = \int_{\Omega} f(x) u_k(x) \, dx. }[/math]

(See Fourier series.)

The series converges in [math]\displaystyle{ L^2 }[/math]. Implemented on a computer using numerical approximations, this is known as the spectral method.

An example

Consider the problem

- [math]\displaystyle{ u-u_{xx}-u_{yy}=f(x,y)=xy }[/math] on [math]\displaystyle{ (0,1)\times(0,1), }[/math]

- [math]\displaystyle{ u(x,0)=u(x,1)=u(0,y)=u(1,y)=0 \;\;\forall (x,y)\in(0,1)\times(0,1) }[/math] (Dirichlet conditions).

The reader may verify that the eigenvectors, normalized so that [math]\displaystyle{ \int_\Omega u_{jk}^2 = 1 }[/math], are exactly

- [math]\displaystyle{ u_{jk}(x,y)=2\sin(\pi jx)\sin(\pi ky) }[/math], [math]\displaystyle{ j,k\in \mathbb{N} }[/math]

with eigenvalues

- [math]\displaystyle{ \lambda_{jk}={ 1 \over 1+\pi^2 j^2+\pi^2 k^2 }. }[/math]

The Fourier coefficients of [math]\displaystyle{ g(x)=x }[/math] with respect to the orthonormal functions [math]\displaystyle{ \sqrt{2}\sin(\pi n x) }[/math] can be looked up in a table, getting [math]\displaystyle{ \hat g(n) = { \sqrt{2}\,(-1)^{n+1} \over \pi n } }[/math]. Therefore,

- [math]\displaystyle{ \hat f(j,k) = \hat g(j) \hat g(k) = { 2 (-1)^{j+k} \over \pi^2 jk } }[/math]

yielding the solution

- [math]\displaystyle{ u(x,y) = \sum_{j,k=1}^\infty { 4 (-1)^{j+k} \over \pi^2 jk (1+\pi^2 j^2+\pi^2 k^2) } \sin(\pi jx) \sin (\pi ky). }[/math]
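A truncated version of such a series is straightforward to evaluate. The sketch below makes its own assumptions explicit: it uses the orthonormal basis [math]\displaystyle{ \sqrt{2}\sin(\pi n x) }[/math] in each variable, computes the coefficients of [math]\displaystyle{ g(x)=x }[/math] by the trapezoid rule rather than from a table, and keeps 30 modes per direction. The function names are illustrative.

```python
import numpy as np


def sine_coefficient(n, samples=20001):
    """Trapezoid-rule value of the integral of x * sqrt(2) * sin(pi n x) over (0, 1)."""
    x = np.linspace(0.0, 1.0, samples)
    y = x * np.sqrt(2.0) * np.sin(np.pi * n * x)
    h = x[1] - x[0]
    return h * (y.sum() - 0.5 * (y[0] + y[-1]))


def series_solution(x, y, modes=30):
    """Truncated eigenfunction series for u - u_xx - u_yy = x*y with u = 0 on the boundary."""
    n = np.arange(1, modes + 1)
    g = np.array([sine_coefficient(k) for k in n])   # 1D coefficients of g(x) = x
    f_hat = np.outer(g, g)                           # coefficients of f(x, y) = x*y
    lam = 1.0 / (1.0 + np.pi**2 * (n[:, None] ** 2 + n[None, :] ** 2))
    ex = np.sqrt(2.0) * np.sin(np.pi * n * x)        # orthonormal basis evaluated at x
    ey = np.sqrt(2.0) * np.sin(np.pi * n * y)        # orthonormal basis evaluated at y
    # Sum over j, k of lambda_jk * f_hat_jk * e_j(x) * e_k(y).
    return ex @ (lam * f_hat) @ ey


print(series_solution(0.5, 0.5))  # value of the truncated series at the centre of the square
```

Because the terms decay like [math]\displaystyle{ 1/(jk(j^2+k^2)) }[/math], a modest number of modes already gives a stable value away from the boundary.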

Maximum principle

There are many variants of the maximum principle. We give a simple one.

Theorem. (Weak maximum principle.) Let [math]\displaystyle{ u \in C^2(\Omega) \cap C^1(\bar \Omega) }[/math], and assume that [math]\displaystyle{ c(x)=0\;\forall x\in\Omega }[/math]. Say that [math]\displaystyle{ Lu \geq 0 }[/math] in [math]\displaystyle{ \Omega }[/math] (with the sign convention of this article, in which the Laplacian [math]\displaystyle{ \Delta }[/math] is an instance of [math]\displaystyle{ L }[/math]). Then [math]\displaystyle{ \max_{x \in \bar \Omega} u(x) = \max_{x \in \partial \Omega} u(x) }[/math]. In other words, the maximum is attained on the boundary.

A strong maximum principle would conclude that [math]\displaystyle{ u(x) \lneqq \max_{y \in \partial \Omega} u(y) }[/math] for all [math]\displaystyle{ x \in \Omega }[/math] unless [math]\displaystyle{ u }[/math] is constant.
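A quick numerical illustration, assuming [math]\displaystyle{ L=\Delta }[/math] on the unit square and the harmonic test function [math]\displaystyle{ u(x,y)=x^2-y^2 }[/math] (so that [math]\displaystyle{ Lu=0 }[/math]): the sketch samples [math]\displaystyle{ u }[/math] on a grid and compares the largest interior value with the largest boundary value. The test function and grid size are arbitrary choices for this example.

```python
import numpy as np


# Harmonic test function on the closed unit square: u_xx + u_yy = 2 - 2 = 0.
def u(x, y):
    return x**2 - y**2


grid = np.linspace(0.0, 1.0, 101)
X, Y = np.meshgrid(grid, grid, indexing="ij")
values = u(X, Y)

# Largest value over interior grid points and over the four boundary edges.
interior_max = values[1:-1, 1:-1].max()
boundary = np.concatenate([values[0, :], values[-1, :], values[:, 0], values[:, -1]])
boundary_max = boundary.max()

print(interior_max, boundary_max)
```

Since this [math]\displaystyle{ u }[/math] is not constant, the interior values stay strictly below the boundary maximum, as the strong form of the principle predicts.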

References

Further reading

- Evans, Lawrence C. (1998). Partial Differential Equations. Graduate Studies in Mathematics. 19. Providence, RI: American Mathematical Society. ISBN 0-8218-0772-2.
