Deming regression

In statistics, Deming regression, named after W. Edwards Deming, is an errors-in-variables model that finds the line of best fit for a two-dimensional dataset. It differs from simple linear regression in that it accounts for errors in observations on both the x- and y-axes. It is a special case of total least squares, which allows for any number of predictors and a more complicated error structure.

Deming regression is equivalent to the maximum likelihood estimation of an errors-in-variables model in which the errors for the two variables are assumed to be independent and normally distributed, and the ratio of their variances, denoted δ, is known.[1] In practice, this ratio might be estimated from related data sources; however, the regression procedure takes no account of possible errors in estimating this ratio.

Deming regression is only slightly more difficult to compute than simple linear regression. Most statistical software packages used in clinical chemistry offer Deming regression.

The model was originally introduced by (Adcock 1878), who considered the case δ = 1, and then more generally by (Kummell 1879) with arbitrary δ. However, their ideas remained largely unnoticed for more than 50 years, until they were revived by (Koopmans 1936) and later popularized further by (Deming 1943). The latter book became so popular in clinical chemistry and related fields that the method came to be called Deming regression in those fields.[2]

Specification

Assume that the available data [math]\displaystyle{ (y_i, x_i) }[/math] are measured observations of the "true" values [math]\displaystyle{ (y_i^*, x_i^*) }[/math], which lie on the regression line:

- [math]\displaystyle{ \begin{align} y_i &= y^*_i + \varepsilon_i, \\ x_i &= x^*_i + \eta_i, \end{align} }[/math]

where errors ε and η are independent and the ratio of their variances is assumed to be known:

- [math]\displaystyle{ \delta = \frac{\sigma_\varepsilon^2}{\sigma_\eta^2}. }[/math]

In practice, the error variances of the [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] measurements are often unknown, which complicates the estimation of [math]\displaystyle{ \delta }[/math]. Note that when the measurement method for [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] is the same, these variances are likely to be equal, so [math]\displaystyle{ \delta = 1 }[/math] in this case.

We seek to find the line of "best fit"

- [math]\displaystyle{ y^* = \beta_0 + \beta_1 x^*, }[/math]

such that the weighted sum of squared residuals of the model is minimized:[3]

- [math]\displaystyle{ SSR = \sum_{i=1}^n\bigg(\frac{\varepsilon_i^2}{\sigma_\varepsilon^2} + \frac{\eta_i^2}{\sigma_\eta^2}\bigg) = \frac{1}{\sigma_\varepsilon^2} \sum_{i=1}^n\Big((y_i-\beta_0-\beta_1x^*_i)^2 + \delta(x_i-x^*_i)^2\Big) \ \to\ \min_{\beta_0,\beta_1,x_1^*,\ldots,x_n^*} SSR }[/math]

See (Jensen 2007) for a full derivation.
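
As a sketch of how the minimization proceeds (a standard calculation, not quoted from the cited derivation), the unknown true values [math]\displaystyle{ x_i^* }[/math] can be eliminated first. For fixed [math]\displaystyle{ \beta_0 }[/math] and [math]\displaystyle{ \beta_1 }[/math], setting the derivative of the i-th summand with respect to [math]\displaystyle{ x_i^* }[/math] to zero gives

- [math]\displaystyle{ \hat{x}_i^* = x_i + \frac{\beta_1}{\beta_1^2+\delta}\,(y_i-\beta_0-\beta_1x_i), }[/math]

and substituting this back collapses the summand to a function of the ordinary residual alone:

- [math]\displaystyle{ (y_i-\beta_0-\beta_1\hat{x}^*_i)^2 + \delta(x_i-\hat{x}^*_i)^2 = \frac{\delta\,(y_i-\beta_0-\beta_1x_i)^2}{\beta_1^2+\delta}. }[/math]

What remains is a minimization over [math]\displaystyle{ \beta_0 }[/math] and [math]\displaystyle{ \beta_1 }[/math] only.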

Solution

The solution can be expressed in terms of the second-degree sample moments. That is, we first calculate the following quantities (all sums go from i = 1 to n):

- [math]\displaystyle{ \begin{align} \overline{x} &= \tfrac{1}{n}\sum x_i & \overline{y} &= \tfrac{1}{n}\sum y_i,\\ s_{xx} &= \tfrac{1}{n}\sum (x_i-\overline{x})^2 &&= \overline{x^2} - \overline{x}^2, \\ s_{xy} &= \tfrac{1}{n}\sum (x_i-\overline{x})(y_i-\overline{y}) &&= \overline{x y} - \overline{x} \, \overline{y}, \\ s_{yy} &= \tfrac{1}{n}\sum (y_i-\overline{y})^2 &&= \overline{y^2} - \overline{y}^2. \end{align}\, }[/math]

Finally, the least-squares estimates of the model's parameters are[4]

- [math]\displaystyle{ \begin{align} & \hat\beta_1 = \frac{s_{yy}-\delta s_{xx} + \sqrt{(s_{yy}-\delta s_{xx})^2 + 4\delta s_{xy}^2}}{2s_{xy}}, \\ & \hat\beta_0 = \overline{y} - \hat\beta_1\overline{x}, \\ & \hat{x}_i^* = x_i + \frac{\hat\beta_1}{\hat\beta_1^2+\delta}(y_i-\hat\beta_0-\hat\beta_1x_i). \end{align} }[/math]

- The slope equation has been questioned; see Linnet, K. Estimation of the linear relationship between the measurements of two methods with proportional errors. Statistics in Medicine 1990, 9 (12), 1463-1473. DOI: https://doi.org/10.1002/sim.4780091210 and NCSS Statistical Software. Deming Regression. https://www.ncss.com/wp-content/themes/ncss/pdf/Procedures/NCSS/Deming_Regression.pdf https://www.ncss.com/wp-content/themes/ncss/pdf/Procedures/PASS/Deming_Regression.pdf (accessed December, 2023). As written, the equation follows from minimizing the SSR above with [math]\displaystyle{ \delta = \sigma_\varepsilon^2/\sigma_\eta^2 }[/math]; references that define the variance ratio differently state the slope in a different form, so the convention should be checked before formulas are compared.
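
The closed-form solution can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `deming_fit` and its argument names are hypothetical, and `delta` is assumed to be the ratio of the y-error variance to the x-error variance, as defined in the Specification section.

```python
import math


def deming_fit(x, y, delta=1.0):
    """Return (beta0, beta1, x_star) for Deming regression.

    delta is the assumed-known ratio var(eps) / var(eta), i.e. the
    y-error variance divided by the x-error variance.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    x_bar = sum(x) / n
    y_bar = sum(y) / n

    # Second-degree sample moments with 1/n normalisation, as in the text.
    s_xx = sum((xi - x_bar) ** 2 for xi in x) / n
    s_yy = sum((yi - y_bar) ** 2 for yi in y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / n
    if s_xy == 0:
        raise ValueError("s_xy is zero; the slope formula is undefined")

    # Slope, intercept, and fitted "true" x-values from the closed form.
    d = s_yy - delta * s_xx
    beta1 = (d + math.sqrt(d * d + 4 * delta * s_xy ** 2)) / (2 * s_xy)
    beta0 = y_bar - beta1 * x_bar
    x_star = [
        xi + beta1 / (beta1 ** 2 + delta) * (yi - beta0 - beta1 * xi)
        for xi, yi in zip(x, y)
    ]
    return beta0, beta1, x_star
```

For data lying exactly on a line, such as `deming_fit([0, 1, 2, 3], [1, 3, 5, 7])`, the fit recovers that line and the fitted values `x_star` coincide with the observed `x`. With `delta=1`, swapping the roles of `x` and `y` yields the reciprocal slope, which reflects the symmetry of orthogonal regression.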

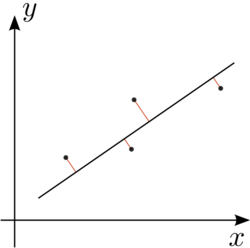

Orthogonal regression

For the case of equal error variances, i.e., when [math]\displaystyle{ \delta=1 }[/math], Deming regression becomes orthogonal regression: it minimizes the sum of squared perpendicular distances from the data points to the regression line. In this case, denote each observation as a point [math]\displaystyle{ z_j = x_j +i y_j }[/math] in the complex plane (i.e., the point [math]\displaystyle{ (x_j, y_j) }[/math] is identified with the complex number [math]\displaystyle{ z_j }[/math], where [math]\displaystyle{ i }[/math] is the imaginary unit). Denote as [math]\displaystyle{ S=\sum{(z_j - \overline z)^2} }[/math] the sum of the squared differences of the data points from the centroid [math]\displaystyle{ \overline z = \tfrac{1}{n} \sum z_j }[/math] (also denoted in complex coordinates), which is the point whose horizontal and vertical locations are the averages of those of the data points. Then:[5]

- If [math]\displaystyle{ S=0 }[/math], then every line through the centroid is a line of best orthogonal fit.

- If [math]\displaystyle{ S \neq 0 }[/math], the orthogonal regression line goes through the centroid and is parallel to the vector from the origin to [math]\displaystyle{ \sqrt{S} }[/math].
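
The complex-number formulation above translates directly into code. The following is a minimal sketch using Python's standard `cmath` module; the function name `orthogonal_line` and the tolerance `tol` used to decide whether [math]\displaystyle{ S }[/math] is numerically zero are assumptions made for illustration.

```python
import cmath


def orthogonal_line(points, tol=1e-12):
    """Return (centroid, direction) for the orthogonal regression line.

    points is a sequence of (x, y) pairs. Both results are complex
    numbers. direction is None when S is (numerically) zero, in which
    case every line through the centroid fits equally well.
    """
    z = [complex(px, py) for px, py in points]
    centroid = sum(z) / len(z)

    # S is the sum of squared complex deviations from the centroid.
    s = sum((zj - centroid) ** 2 for zj in z)
    if abs(s) <= tol:
        return centroid, None

    # The line passes through the centroid, parallel to sqrt(S).
    return centroid, cmath.sqrt(s)
```

When the returned direction has a non-zero real part, the slope of the line is `direction.imag / direction.real`. Expanding the square shows that the real part of [math]\displaystyle{ S }[/math] equals [math]\displaystyle{ n(s_{xx}-s_{yy}) }[/math] and the imaginary part equals [math]\displaystyle{ 2ns_{xy} }[/math], which ties this representation back to the sample moments used in the Solution section.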

A trigonometric representation of the orthogonal regression line was given by Coolidge in 1913.[6]

Application

In the case of three non-collinear points in the plane, the triangle with these points as its vertices has a unique Steiner inellipse that is tangent to the triangle's sides at their midpoints. The major axis of this ellipse falls on the orthogonal regression line for the three vertices.[7] Separately, a biological cell's intrinsic noise can be quantified by applying Deming regression to the observed behavior of a two-reporter synthetic biological circuit.[8]
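
The Steiner inellipse statement can be checked numerically. The sketch below relies on Marden's theorem, which is not stated in this article and is brought in here as an assumption: the foci of the Steiner inellipse are the roots of the derivative of the cubic whose roots are the triangle's vertices. The function names are hypothetical, and the vertices are passed as complex numbers.

```python
import cmath


def steiner_foci(z1, z2, z3):
    """Foci of the Steiner inellipse (via Marden's theorem).

    They are the roots of p'(z), where p(z) = (z - z1)(z - z2)(z - z3).
    """
    g = (z1 + z2 + z3) / 3                  # centroid of the triangle
    e2 = z1 * z2 + z1 * z3 + z2 * z3        # sum of pairwise products
    r = cmath.sqrt(g * g - e2 / 3)
    return g + r, g - r


def triangle_fit_direction(z1, z2, z3):
    """Direction sqrt(S) of the orthogonal regression line of the vertices."""
    g = (z1 + z2 + z3) / 3
    return cmath.sqrt(sum((z - g) ** 2 for z in (z1, z2, z3)))


def cross(a, b):
    """2-D cross product of two complex numbers viewed as plane vectors."""
    return (a.conjugate() * b).imag


# Example triangle with vertices (0, 0), (4, 0) and (1, 3).
f1, f2 = steiner_foci(0j, 4 + 0j, 1 + 3j)
direction = triangle_fit_direction(0j, 4 + 0j, 1 + 3j)
parallel = abs(cross(f1 - f2, direction)) < 1e-9
```

The major axis passes through both foci, so it has direction `f1 - f2`, and the midpoint of the foci is the centroid. A vanishing cross product with `direction` therefore indicates that the axis lies on the orthogonal regression line. For an equilateral triangle the two foci coincide and [math]\displaystyle{ S=0 }[/math], matching the degenerate case in which every line through the centroid is a best fit.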

York regression

The York regression extends Deming regression by allowing correlated errors in x and y.[9]

References

- Notes

- ↑ Linnet 1993.

- ↑ Cornbleet & Gochman 1979.

- ↑ Fuller 1987, Ch. 1.3.3.

- ↑ Glaister 2001.

- ↑ Minda & Phelps 2008, Theorem 2.3.

- ↑ Coolidge 1913.

- ↑ Minda & Phelps 2008, Corollary 2.4.

- ↑ Quarton 2020.

- ↑ York, D., Evensen, N. M., Martínez, M. L., and Delgado, J. D. B.: Unified equations for the slope, intercept, and standard errors of the best straight line, Am. J. Phys., 72, 367–375, https://doi.org/10.1119/1.1632486, 2004.

- Bibliography

- Adcock, R. J. (1878). "A problem in least squares". The Analyst 5 (2): 53–54. doi:10.2307/2635758.

- Coolidge, J. L. (1913). "Two geometrical applications of the mathematics of least squares". The American Mathematical Monthly 20 (6): 187–190. doi:10.2307/2973072.

- Cornbleet, P.J.; Gochman, N. (1979). "Incorrect Least–Squares Regression Coefficients". Clinical Chemistry 25 (3): 432–438. doi:10.1093/clinchem/25.3.432. PMID 262186.

- Deming, W. E. (1943). Statistical adjustment of data. Wiley, NY (Dover Publications edition, 1985). ISBN 0-486-64685-8.

- Fuller, Wayne A. (1987). Measurement error models. John Wiley & Sons, Inc. ISBN 0-471-86187-1.

- Glaister, P. (2001). "Least squares revisited". The Mathematical Gazette 85: 104–107. doi:10.2307/3620485.

- Jensen, Anders Christian (2007). "Deming regression, MethComp package". Gentofte, Denmark: Steno Diabetes Center. https://r-forge.r-project.org/scm/viewvc.php/*checkout*/pkg/vignettes/Deming.pdf?root=methcomp.

- Koopmans, T. C. (1936). Linear regression analysis of economic time series. DeErven F. Bohn, Haarlem, Netherlands.

- Kummell, C. H. (1879). "Reduction of observation equations which contain more than one observed quantity". The Analyst 6 (4): 97–105. doi:10.2307/2635646.

- Linnet, K. (1993). "Evaluation of regression procedures for method comparison studies". Clinical Chemistry 39 (3): 424–432. doi:10.1093/clinchem/39.3.424. PMID 8448852. http://www.clinchem.org/cgi/reprint/39/3/424.

- Minda, D.; Phelps, S. (2008). "Triangles, ellipses, and cubic polynomials". American Mathematical Monthly 115 (8): 679–689. doi:10.1080/00029890.2008.11920581.

- Quarton, T. G. (2020). "Uncoupling gene expression noise along the central dogma using genome engineered human cell lines". Nucleic Acids Research 48 (16): 9406–9413. doi:10.1093/nar/gkaa668. PMID 32810265.
