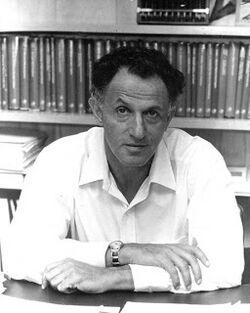

Richard E. Bellman

| Richard Ernest Bellman | |
|---|---|
| Born | Richard Ernest Bellman, August 26, 1920, New York City, New York, U.S. |
| Died | March 19, 1984 (aged 63), Los Angeles, California, U.S. |
| Alma mater | Brooklyn College (BA); University of Wisconsin (MA); Princeton University (PhD) |
| Known for | Dynamic programming; Stochastic dynamic programming; Curse of dimensionality; Linear search problem; Bellman equation; Bellman–Ford algorithm; Bellman's lost in a forest problem; Bellman–Held–Karp algorithm; Grönwall–Bellman inequality; Hamilton–Jacobi–Bellman equation |
| Awards | John von Neumann Theory Prize (1976); IEEE Medal of Honor (1979); Richard E. Bellman Control Heritage Award (1984) |
| Scientific career | |
| Fields | Mathematics and control theory |
| Institutions | University of Southern California; RAND Corporation; Stanford University |
| Thesis | On the Boundedness of Solutions of Non-Linear Differential and Difference Equations[1] |
| Doctoral advisor | Solomon Lefschetz[1] |
| Doctoral students | Christine Shoemaker[1] |

Richard Ernest Bellman[2] (August 26, 1920 – March 19, 1984) was an American applied mathematician who introduced dynamic programming in 1953 and made important contributions to other fields of mathematics, such as biomathematics. He founded the leading biomathematical journal Mathematical Biosciences.

Biography

Bellman was born in 1920 in New York City to non-practising[3] Jewish parents of Polish and Russian descent, Pearl (née Saffian) and John James Bellman,[4] who ran a small grocery store on Bergen Street near Prospect Park, Brooklyn.[5] On his religious views, he was an atheist.[6] He attended Abraham Lincoln High School, Brooklyn in 1937,[4] and studied mathematics at Brooklyn College, where he earned a BA in 1941. He later earned an MA from the University of Wisconsin. During World War II, he worked for a Theoretical Physics Division group in Los Alamos. In 1946, he received his Ph.D. at Princeton University under the supervision of Solomon Lefschetz.[7] Beginning in 1949, Bellman worked for many years at the RAND Corporation, and it was during this time that he developed dynamic programming.[8]

Later in life, Richard Bellman's interests began to emphasize biology and medicine, which he identified as "the frontiers of contemporary science". In 1967, he became founding editor of the journal Mathematical Biosciences, which rapidly became (and remains) one of the most important journals in the field of Mathematical Biology. In 1985, the Bellman Prize in Mathematical Biosciences was created in his honor, being awarded biannually to the journal's best research paper.

Bellman was diagnosed with a brain tumor in 1973, which was removed but resulted in complications that left him severely disabled. He was a professor at the University of Southern California, a Fellow in the American Academy of Arts and Sciences (1975),[9] a member of the National Academy of Engineering (1977),[10] and a member of the National Academy of Sciences (1983).

He was awarded the IEEE Medal of Honor in 1979, "for contributions to decision processes and control system theory, particularly the creation and application of dynamic programming".[11] His key work is the Bellman equation.

Work

Bellman equation

A Bellman equation, also known as a dynamic programming equation, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. Almost any problem which can be solved using optimal control theory can also be solved by analyzing the appropriate Bellman equation. The Bellman equation was first applied to engineering control theory and to other topics in applied mathematics, and subsequently became an important tool in economic theory.[12]
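As an illustration only, the sketch below solves a discounted Bellman equation of the form V(s) = max over a of [ r(s, a) + γ·V(s') ] by value iteration on a toy four-state chain. The function name `value_iteration`, the chain problem, the step reward of -1, and the discount factor γ = 0.9 are all hypothetical choices made for this example and do not come from Bellman's own work.

```python
def value_iteration(states, actions, transition, reward, gamma=0.9,
                    tol=1e-9, max_iter=10_000):
    """Repeat V(s) <- max_a [ r(s, a) + gamma * V(next(s, a)) ] until stable."""
    V = {s: 0.0 for s in states}
    for _ in range(max_iter):
        delta = 0.0
        for s in states:
            best = max(reward(s, a) + gamma * V[transition(s, a)]
                       for a in actions(s))
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:  # no value changed noticeably: fixed point reached
            break
    return V


# Hypothetical toy problem: states 0..3 on a line, state 3 is an absorbing goal.
GOAL = 3
states = [0, 1, 2, 3]

def actions(s):
    return ["stay"] if s == GOAL else ["left", "right"]

def transition(s, a):
    if a == "stay":
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)

def reward(s, a):
    return 0.0 if s == GOAL else -1.0  # every move before the goal costs 1

V = value_iteration(states, actions, transition, reward)
```

In this toy problem the values fall off with distance from the goal: V[3] is 0, V[2] is -1, V[1] is about -1.9 and V[0] is about -2.71, each satisfying the equation above with the optimal action "right".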

Hamilton–Jacobi–Bellman equation

The Hamilton–Jacobi–Bellman equation (HJB) is a partial differential equation which is central to optimal control theory. The solution of the HJB equation is the 'value function', which gives the optimal cost-to-go for a given dynamical system with an associated cost function. Classical variational problems, for example the brachistochrone problem, can be solved using this method as well. The equation is a result of the theory of dynamic programming which was pioneered in the 1950s by Richard Bellman and coworkers. The corresponding discrete-time equation is usually referred to as the Bellman equation. In continuous time, the result can be seen as an extension of earlier work in classical physics on the Hamilton–Jacobi equation by William Rowan Hamilton and Carl Gustav Jacob Jacobi.[13]
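For concreteness, one common textbook form of the equation is sketched here. The notation is an assumption of this example rather than something fixed by the article: the state $x(t)$ evolves as $\dot{x} = f(x, u)$ under a control $u$, the running cost is $C(x, u)$, the terminal cost at the final time $T$ is $D(x)$, and $V(x, t)$ is the value function (the optimal cost-to-go from state $x$ at time $t$).

$$
-\frac{\partial V}{\partial t}(x, t) = \min_{u} \left\{ C(x, u) + \nabla_x V(x, t) \cdot f(x, u) \right\}, \qquad V(x, T) = D(x).
$$

Under these assumptions, the minimizing $u$ at each $(x, t)$ gives the optimal feedback control, and the terminal condition plays the role that the final-stage value plays in the discrete-time Bellman equation.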

Curse of dimensionality

The curse of dimensionality is an expression coined by Bellman to describe the problem caused by the exponential increase in volume associated with adding extra dimensions to a (mathematical) space. One implication of the curse of dimensionality is that some methods for numerical solution of the Bellman equation require vastly more computer time when there are more state variables in the value function. For example, 100 evenly spaced sample points suffice to sample a unit interval with no more than 0.01 distance between points; an equivalent sampling of a 10-dimensional unit hypercube with a lattice with a spacing of 0.01 between adjacent points would require $10^{20}$ sample points: thus, in some sense, the 10-dimensional hypercube can be said to be a factor of $10^{18}$ "larger" than the unit interval. (Adapted from an example by R. E. Bellman, see below.)[14]
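The arithmetic behind this sampling example can be checked with a few lines of Python. This is a minimal sketch; the helper name `lattice_points` is hypothetical and simply counts the points of a regular lattice with the same number of samples along each axis.

```python
def lattice_points(points_per_axis: int, dimensions: int) -> int:
    """Number of points in a regular lattice with the given resolution per axis."""
    return points_per_axis ** dimensions


interval = lattice_points(100, 1)    # unit interval, spacing 0.01
hypercube = lattice_points(100, 10)  # 10-dimensional unit hypercube, same spacing
ratio = hypercube // interval        # how many times more points are needed

print(interval, hypercube, ratio)
```

Because the count grows as a power of the number of dimensions, each additional state variable multiplies the work of a grid-based method by the per-axis resolution.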

Bellman–Ford algorithm

Although Bellman discovered the algorithm after Ford, his name is attached to the Bellman–Ford algorithm, sometimes also called the Label Correcting Algorithm, which computes single-source shortest paths in a weighted digraph where some of the edge weights may be negative. Dijkstra's algorithm solves the same problem with a lower running time, but requires edge weights to be non-negative.
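A minimal sketch of the algorithm follows, assuming the graph is given as a list of `(u, v, weight)` edges over vertices numbered from 0. The function name, the input format, and the choice to raise `ValueError` on a negative-weight cycle are illustrative assumptions, not part of any particular library.

```python
import math


def bellman_ford(num_vertices, edges, source):
    """Single-source shortest paths; edge weights may be negative.

    edges is a list of (u, v, weight) tuples for directed edges u -> v.
    Raises ValueError if a negative-weight cycle is reachable from source.
    """
    dist = [math.inf] * num_vertices
    dist[source] = 0.0

    # A shortest path uses at most num_vertices - 1 edges, so that many
    # rounds of relaxing every edge are enough.
    for _ in range(num_vertices - 1):
        changed = False
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                changed = True
        if not changed:  # early exit: distances have stabilised
            break

    # If any edge can still be relaxed, a negative cycle is reachable.
    for u, v, w in edges:
        if dist[u] + w < dist[v]:
            raise ValueError("negative-weight cycle reachable from source")
    return dist


# Hypothetical example graph with one negative edge (2 -> 1).
example_edges = [(0, 1, 4), (0, 2, 5), (2, 1, -3), (1, 3, 2)]
distances = bellman_ford(4, example_edges, source=0)
```

In this example the negative edge makes the route 0 → 2 → 1 (total weight 2) cheaper than the direct edge 0 → 1 (weight 4), a case that Dijkstra's algorithm is not designed to handle. The running time of this sketch is proportional to the number of vertices times the number of edges.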

Publications

Over the course of his career he published 619 papers and 39 books. During the last 11 years of his life he published over 100 papers despite suffering from crippling complications of brain surgery (Dreyfus, 2003). A selection:[4]

- 1957. Dynamic Programming

- 1959. Asymptotic Behavior of Solutions of Differential Equations

- 1961. An Introduction to Inequalities

- 1961. Adaptive Control Processes: A Guided Tour

- 1962. Applied Dynamic Programming

- 1967. Introduction to the Mathematical Theory of Control Processes

- 1970. Algorithms, Graphs and Computers

- 1972. Dynamic Programming and Partial Differential Equations

- 1982. Mathematical Aspects of Scheduling and Applications

- 1983. Mathematical Methods in Medicine

- 1984. Partial Differential Equations

- 1984. Eye of the Hurricane: An Autobiography, World Scientific Publishing.

- 1985. Artificial Intelligence

- 1995. Modern Elementary Differential Equations

- 1997. Introduction to Matrix Analysis

- 2003. Dynamic Programming

- 2003. Perturbation Techniques in Mathematics, Engineering and Physics

- 2003. Stability Theory of Differential Equations (originally publ. 1953)[15]

References

1. Richard E. Bellman at the Mathematics Genealogy Project

2. Richard Bellman's Biography

3. Robert S. Roth, ed (1986). The Bellman Continuum: A Collection of the Works of Richard E. Bellman. World Scientific. p. 4. ISBN 9789971500900. "He was raised by his father to be a religious skeptic. He was taken to a different church every week to observe different ceremonies. He was struck by the contrast between the ideals of various religions and the history of cruelty and hypocrisy done in God's name. He was well aware of the intellectual giants who believed in God, but if asked, he would say that each person had to make their own choice. Statements such as "By the State of New York and God ..." struck him as ludicrous. From his childhood he recalled a particularly unpleasant scene between his parents just before they sent him to the store. He ran down the street saying over and over again, "I wish there was a God, I wish there was a God.""

4. Salvador Sanabria. Richard Bellman profile at http://www-math.cudenver.edu; retrieved October 3, 2008.

5. Bellman biodata at history.mcs.st-andrews.ac.uk; retrieved August 10, 2013.

6. Richard Bellman (June 1984). "Growing Up in New York City". Eye Of The Hurricane. World Scientific Publishing Company. p. 7. ISBN 9789814635707. https://books.google.com/books?id=4FwGCwAAQBAJ&dq=%22Naturally,+I+was+raised+as+an+atheist.%22&pg=PA7. Retrieved 5 July 2021. "Naturally, I was raised as an atheist. This was quite easy since the only one in the family that had any religion was my grandmother, and she was of German stock. Although she believed in God, and went to the synagogue on the high holy days, there was no nonsense about ritual. I well remember when I went off to the army, she said, "God will protect you." I smiled politely. She added, “I know you don't believe in God, but he will protect you anyway.” I know many sophisticated and highly intelligent people who are practicing Catholics, Protestants, Jews, Mormons, Hindus, Buddhists, etc., feel strongly that religion, or lack of it, is a highly personal matter. My own attitude is like Lagrange's. One day, he was asked by Napoleon whether he believed in God. “Sire,” he said, “I have no need of that hypothesis.”"

7. Mathematics Genealogy Project

8. Bellman R: An introduction to the theory of dynamic programming RAND Corp. Report 1953 (Based on unpublished researches from 1949. It contained the first statement of the principle of optimality)

9. "Book of Members, 1780–2010: Chapter B". American Academy of Arts and Sciences. http://www.amacad.org/publications/BookofMembers/ChapterB.pdf.

10. "NAE Members Directory – Dr. Richard Bellman profile". NAE. http://www.nae.edu/MembersSection/Directory20412/29705.aspx.

11. "IEEE Medal of Honor Recipients". IEEE. http://www.ieee.org/documents/moh_rl.pdf.

12. Ljungqvist, Lars; Sargent, Thomas J. (2012). Recursive Macroeconomic Theory (3rd ed.). MIT Press. ISBN 978-0-262-31202-8. https://books.google.com/books?id=H-PxCwAAQBAJ.

13. Kamien, Morton I.; Schwartz, Nancy L. (1991). Dynamic Optimization: The Calculus of Variations and Optimal Control in Economics and Management (2nd ed.). Amsterdam: Elsevier. pp. 259–263. ISBN 9780486488561. https://books.google.com/books?id=0IoGUn8wjDQC&pg=PA259.

14. Richard Bellman (1961). Adaptive control processes: a guided tour. Princeton University Press. https://archive.org/details/adaptivecontrolp0000bell.

15. Haas, F. (1954). "Review: Stability theory of differential equations, by R. Bellman". Bull. Amer. Math. Soc. 60 (4): 400–401. doi:10.1090/s0002-9904-1954-09830-0. https://www.ams.org/journals/bull/1954-60-04/S0002-9904-1954-09830-0/.

Further reading

- Bellman, Richard (1984). Eye of the Hurricane: An Autobiography, World Scientific.

- Stuart Dreyfus (2002). "Richard Bellman on the Birth of Dynamic Programming". In: Operations Research. Vol. 50, No. 1, Jan–Feb 2002, pp. 48–51.

- J.J. O'Connor and E.F. Robertson (2005). Biography of Richard Bellman from the MacTutor History of Mathematics.

- Stuart Dreyfus (2003) "Richard Ernest Bellman". In: International Transactions in Operational Research. Vol 10, no. 5, pp. 543–545.

Articles

- Bellman, R.E, Kalaba, R.E, Dynamic Programming and Feedback Control, RAND Corporation, P-1778, 1959.

External links

- "IEEE Global History Network – Richard Bellman". IEEE. 14 August 2017. http://www.ieeeghn.org/wiki/index.php/Richard_Bellman.

- Harold J. Kushner's speech on Richard Bellman, when accepting the Richard E. Bellman Control Heritage Award (2004)

- IEEE biography

- Richard E. Bellman at the Mathematics Genealogy Project

- Author profile in the database zbMATH

- Biography of Richard Bellman from the Institute for Operations Research and the Management Sciences (INFORMS)
