Copying network models

| Network science | ||||

|---|---|---|---|---|

| Network types | ||||

| Graphs | ||||

|

||||

| Models | ||||

|

||||

| ||||

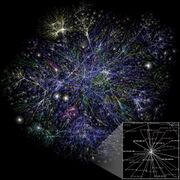

Copying network models are network generation models that use a copying mechanism to form a network, by repeatedly duplicating and mutating existing nodes of the network. Such a network model has first been proposed in 1999 to explain the network of links between web pages, but since has been used to model biological and citation networks as well.

Origins

In 1999 Jon Kleinberg and 3 co-authors published an article to Computing and combinatorics attempting to construct a network model that explains patterns found in an analysis of the World Wide Web. The intuition behind the model was that when a user decides to build and publish her own web page, she encounters a list of links for her topic of interest on the web and ends up copying this collection, or many such collections to her own web page. This creating a new node in the network - the new page - and copying edges from already existing nodes in some fashion.

They outlined a model very generally, but didn't analyse the predictions of an exact model in detail, mostly due to computational limitations, but suggested that copying nodes randomly is a simple, model worthy mechanism for creating Zipfian distribution networks.[1]

This paper since, has been cited over 1200 times, which is a number comparable to significant papers contributing to network science, like the one describing the Erdős–Rényi model (about 8300) and includes notable network science books like Mark Newman's.[2]

Description

General model

To understand a general model, take a basic network growth model, which is characterized by four stochastic processes. Creation processes and for node- and edge-creation, and deletion processes and for node- and edge-deletion.

Take a discrete time timeframe, where consists of simply at each step, creating a node with probability , and similarly is deleting a node with probability ad(t). Consequently, this also means includes removing all edges that belonged to a node that was removed.

is where the essence of the copying model is. In the original article, they characterize with a probability distribution, that determines a node to add edges out of, and a number of edges that will be added. And with probability that the k edges are either copied or added randomly. With probability , all edges from v are drawn to nodes chosen independently and uniformly at random. With probability , the k edges are copied from a randomly chosen node . Meaning that neighbours of become neighbours of . If has a degree higher than , edges are selected randomly and if it has a lower degree , a next node is randomly selected and of its edges are copied, and so on.

It can be shown, that such a network produces a power law degree distribution, with an exponent where is the ratio of number of the randomly added edges to the number of the copied edges.[3] So with a ratio between zero and 0.5 a power law distribution with an exponent of can be achieved. Also note that as the ratio approaches 1, the exponent goes to infinity.

GNC model

Another simple model, proposed to explain the observation that the average node degree grows with system size adds nodes one at a time. A new node randomly selects a node from the existing ones and in addition to copying all the edges the target node was assigned at its introduction, it connects to the node itself, thus slightly increasing average degree. For example if the target node is the very first one in the network, no additional edges are added, just the one between the first node and the last. Take the two extreme cases. If a new node always connects to the first node, the model forms a star graph, where each node has degree one, but the first node has increasing degree count. In this case, the average degree increases by with each additional node, where is the number of nodes. In the other extreme case, where the new nodes connects to the one added before it, a complete graph is formed and the average degree increases 1 with every new node. [4]

Walking model

A copying model that is somewhat of a mixture of the two was introduced by Vazquez. In this model, a when a new node is added, it connects to one randomly selected node that is already present in the network, and to each of its neighbors with possibility. So with this graph creates a chain and creates a complete graph. Depending on this graph can produce a number of power-law degree distributions with certain cutoffs that characterize real world networks well. [5]

Biological networks

There has been considerable interest in modeling biological networks, such as protein interaction networks and genetic regulatory networks using copying network models. Genes that contain information about how a node in a network should interact with others tend to duplicate in evolution, thus duplicating the edges that were present in the network. Also, preferential attachment networks can not really model biological networks well, both because they are not plausible, and because a number of biological networks have power law degree distribution with exponent which is not produced by such prefernital network models.

Take a node duplication process, in which initially each node has equal probability of being duplicated in a single time step. However, the probability of duplication is influenced by the history of prior duplications, and not all edges get duplicated, only a randomly selected subset of them. Such a partial duplication model, can produce power-law distributions with exponents , consistent with the degree distribution of a number of biological networks, regardless of the starting graph.[6]

Notes

- ↑ Kleinberg, Jon (1999), "The web as a graph: measurements, models, and methods.", Computing and Combinatorics: 1–17

- ↑ Newman, Mark (2003), "The Structure and Function of Complex Networks", SIAM Review 45 (2): 167–256, doi:10.1137/S003614450342480, Bibcode: 2003SIAMR..45..167N

- ↑ Kumar, Ravi (2000), "Stochastic models for the web graph.", Foundations of Computer Science.

- ↑ Krapivsky, Pavel L; Redner, Sidney (2005), "Network growth by copying.", Physical Review 71 (3): 036118, doi:10.1103/PhysRevE.71.036118, PMID 15903504, Bibcode: 2005PhRvE..71c6118K

- ↑ Vazquez, Alexei L (2000), "Knowing a network by walking on it: emergence of scaling", arXiv:cond-mat/0006132

- ↑ Chung, Fan (2003), "Duplication models for biological networks.", Journal of Computational Biology 10 (5): 677–687, doi:10.1089/106652703322539024, PMID 14633392.

|