Evidence-based policy

Evidence-based policy (also known as evidence-based governance) is a concept in public policy that advocates for policy decisions to be grounded on, or influenced by, rigorously established objective evidence. This concept presents a stark contrast to policymaking predicated on ideology, 'common sense,' anecdotes, or personal intuitions. The methodology employed in evidence-based policy often includes comprehensive research methods such as randomized controlled trials (RCTs).[1] Good data, analytical skills, and political support for the use of scientific information are typically seen as the crucial elements of an evidence-based approach.[2]

An individual or organisation is justified in claiming that a specific policy is evidence-based if, and only if, three conditions are met. First, the individual or organisation possesses comparative evidence about the effects of the specific policy in comparison to the effects of at least one alternative policy. Second, the specific policy is supported by this evidence according to at least one of the individual's or organisation's preferences in the given policy area. Third, the individual or organisation can provide a sound account for this support by explaining the evidence and preferences that lay the foundation for the claim.[3]

The effectiveness of evidence-based policy hinges upon the presence of quality data, proficient analytical skills, and political backing for the utilization of scientific information.[4]

While proponents of evidence-based policy have identified certain types of evidence, such as scientifically rigorous evaluation studies like randomized controlled trials, as optimal for policymakers to consider, others argue that not all policy-relevant areas are best served by quantitative research. This discrepancy has sparked debates about the types of evidence that should be utilized. For example, policies concerning human rights, public acceptability, or social justice may necessitate different forms of evidence than what randomized trials provide. Furthermore, evaluating policy often demands moral philosophical reasoning in addition to the assessment of intervention effects, which randomized trials primarily aim to provide.[5]

In response to such complexities, some policy scholars have moved away from using the term evidence-based policy, adopting alternatives like evidence-informed. This semantic shift allows for continued reflection on the need to elevate the rigor and quality of evidence used, while sidestepping some of the limitations or reductionist notions occasionally associated with the term evidence-based. Despite these nuances, the phrase "evidence-based policy" is still widely employed, generally signifying a desire for evidence to be used in a rigorous, high-quality, and unbiased manner, while avoiding its misuse for political ends.[6]

History

The shift towards contemporary evidence-based policy is deeply rooted in the broader movement towards evidence-based practice. This shift was largely influenced by the emergence of evidence-based medicine during the 1980s.[1] However, the term 'evidence-based policy' was not adopted in the medical field until the 1990s.[7] In social policy, the term was not employed until the early 2000s.[8]

The first instance of evidence-based policy was tariff-making in Australia, where legislation required that tariffs be informed by a public report issued by the Tariff Board. This report would cover the tariff, industrial, and economic implications.[9]

History of evidence-based medicine

Evidence-based medicine (EBM) is a term that was first introduced by Gordon Guyatt.[10] Nevertheless, examples of EBM can be traced back to the early 1900s. Some contend that the earliest instance of EBM dates back to the 11th century, when the Ben Cao Tu Jing, a text from the Song Dynasty, suggested a method to evaluate the efficacy of ginseng.[11]

Many scholars regard evidence-based policy as an evolution from "evidence-based medicine", where research findings are utilized to support clinical decisions. In this model, evidence is collected through randomized controlled trials (RCTs) which compare a treatment group with a placebo group to measure outcomes.[12]

While the earliest published RCTs in medicine date back to the 1940s and 1950s,[1] the term 'evidence-based medicine' did not appear in published medical research until 1993.[7] In the same year, the Cochrane Collaboration was established in the UK. This organization works to keep evidence from RCTs up to date and provides "Cochrane reviews", which synthesize primary research in human health and health policy.[13]

The usage of the keyword EBM has seen a significant increase since the 2000s, and the influence of EBM has substantially expanded within the field of medicine.[14]

History of evidence-based policy making

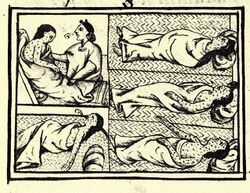

The application of randomized controlled trials in social policy came notably later than in the medical field. Although elements of an evidence-based approach can be traced back as far as the fourteenth century, it was popularized more recently during the tenure of the Blair Government in the United Kingdom.[9] This government expressed a desire to shift away from ideological decision-making in policy formulation.[9] For instance, a 1999 UK Government white paper, Modernising Government, emphasized the need for policies that "really deal with problems, are forward-looking and shaped by evidence rather than a response to short-term pressures; [and] tackle causes not symptoms."[15]

This shift in policy formulation led to an upswing in research and activism advocating for more evidence-based policy-making. As a result, the Campbell Collaboration was established in 1999 as a sibling organization to the Cochrane Collaboration.[12][16] The Campbell Collaboration undertakes reviews of the most robust evidence, analyzing the impacts of social and educational policies and practices.

The Economic and Social Research Council (ESRC) furthered the drive for more evidence-based policymaking by granting £1.3 million to the Evidence Network in 1999. Similar to both the Campbell and Cochrane Collaborations, the Evidence Network functions as a hub for evidence-based policy and practice.[12] More recently, the Alliance for Useful Evidence was established, funded by the ESRC, Big Lottery, and Nesta, to advocate for the use of evidence in social policy and practice. The Alliance, operating throughout the UK, promotes the use of high-quality evidence to inform decisions on strategy, policy, and practice through advocacy, research publication, idea sharing, advice, event hosting, and training.

The application of evidence-based policy varies among practitioners. For instance, Michael Kremer and Rachel Glennerster, curious about strategies to enhance students' test scores, conducted randomized controlled trials in Kenya. They experimented with new textbooks, flip charts, and smaller class sizes, but found that the only intervention that boosted school attendance was treating intestinal worms in children.[17] Their findings led to the establishment of the Deworm the World Initiative, a charity highly rated by GiveWell for its cost-effectiveness.[17]

Recent discussions have emerged about the potential conflicts of interest in evidence-based decision-making applied to public policy development. In their analysis of vocational education in prisons run by the California Department of Corrections, researchers Andrew J. Dick, William Rich, and Tony Waters found that political factors inevitably influenced "evidence-based decisions," which were ostensibly neutral and technocratic. They argue that when policymakers, who have a vested interest in validating previous political judgments, fund evidence, there is a risk of corruption, leading to policy-based evidence making.[18]

Methodology

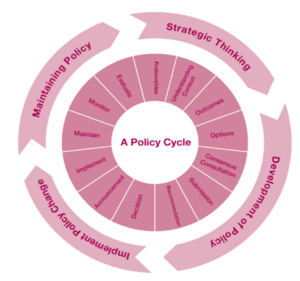

Evidence-based policy employs various methodologies, but they all commonly share the following characteristics:

- They test a theory as to why the policy will be effective and what the impacts of the policy will be if it is successful.

- They include a counterfactual: an analysis of what would have occurred if the policy had not been implemented.

- They incorporate some measurement of the impact.

- They examine both direct and indirect effects that occur because of the policy.

- They identify uncertainties and control for external influences outside of the policy that may affect the outcome.

- They can be tested and replicated by a third party.[citation needed]
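Several of these characteristics (a counterfactual, a measurement of impact, and an explicit statement of uncertainty) can be illustrated with a minimal Python sketch. It assumes a randomized trial in which the control group stands in for the counterfactual, and it uses a normal-approximation interval, which is only one of several ways to express uncertainty. The function name `estimate_impact` and the attendance figures are hypothetical and are not drawn from any study cited in this article.

```python
import math
import statistics


def estimate_impact(treated, control):
    """Estimate a policy's impact as the difference in mean outcomes.

    The control group approximates the counterfactual: what would have
    happened to the treated group without the policy. Returns the point
    estimate and an approximate 95% interval (normal approximation).
    """
    effect = statistics.mean(treated) - statistics.mean(control)
    # Standard error of a difference between two independent sample means.
    standard_error = math.sqrt(
        statistics.variance(treated) / len(treated)
        + statistics.variance(control) / len(control)
    )
    margin = 1.96 * standard_error
    return effect, (effect - margin, effect + margin)


# Hypothetical school attendance rates (percent), for illustration only.
treated_schools = [88, 92, 85, 90, 95, 87]
control_schools = [80, 84, 79, 83, 86, 78]

effect, (low, high) = estimate_impact(treated_schools, control_schools)
print(f"Estimated impact: {effect:.1f} points (95% interval {low:.1f} to {high:.1f})")
```

Because the calculation is fully specified by the data and the function, a third party could rerun it on the same or new data, which is the sense of replication referred to in the list above. Real evaluations would typically also adjust for external influences rather than rely on a raw difference in means.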

The methodology used in evidence-based policy aligns with the cost-benefit framework. It is designed to estimate a net payoff if the policy is implemented. Due to the difficulty in quantifying some effects and outcomes of the policy, the focus is primarily on whether benefits will outweigh costs, rather than assigning specific values.[9]

Types of evidence in evidence-based policy making

Various types of data can be considered evidence in evidence-based policy making.[19] The scientific method organizes this data into tests to validate or challenge specific beliefs or hypotheses. The outcomes of various tests may hold varying degrees of credibility within the scientific community, influenced by factors such as the type of blind experiment (blind vs. double-blind), sample size, and replication. Advocates for evidence-based policy strive to align societal needs (as framed within Maslow's hierarchy of needs) with outcomes that the scientific method indicates as most probable.[20]

Quantitative evidence

Quantitative evidence for policymaking includes numerical data from peer-reviewed journals, public surveillance systems, or individual programs. Quantitative data can also be collected by the government or policymakers themselves through surveys.[19] Both evidence-based medicine (EBM) and evidence-based public health policy constructions extensively utilize quantitative evidence.

Qualitative evidence

Qualitative evidence comprises non-numerical data gathered through methods such as observations, interviews, or focus groups. It is often used to craft compelling narratives to influence decision-makers.[19] The distinction between qualitative and quantitative data does not imply a hierarchy; both types of evidence can be effective in different contexts. Policymaking often involves a combination of qualitative and quantitative evidence.[20]

Prioritization of causes

Adherents of evidence-based policy often approach their work with a principle of cause neutrality:[citation needed][21] they first establish a human interest or goal and then use evidence-based methods to identify the most effective way to achieve it. Some of the causes pursued under this approach include addressing hunger, protecting endangered species, combating climate change, reforming immigration policy, researching cures for diseases and making them accessible, preventing sexual violence, alleviating poverty, addressing intensive factory farming, and preventing nuclear warfare.[22][23] Many participants in the effective policy movement have prioritized global health and development, animal welfare, and risk mitigation strategies that aim to safeguard humanity's future.[24]

Scholarly communication in policy-making

Beyond producing research on the issues that policy addresses, academics provide input to policy through various channels:

- some studies investigate existing policies (policy studies)[25]

- some studies include policy options with varying levels of specificity or detail[26] or compare possible rough pathway-options[27]

- some science-related organizations devise concrete policy proposals[28]

- some academics engage in science communication or activism in various ways, such as holding press conferences, actively engaging with news media, taking direct action themselves to attract media attention,[29] writing collectively signed public documents,[30] posting on social media,[31] or creating open letters.

Global development and health

The mitigation of global poverty and neglected tropical diseases has been a primary focus of several pioneering organizations dedicated to data-driven decision-making.

GiveWell, a charity evaluator, was established by Holden Karnofsky and Elie Hassenfeld in 2007 to combat poverty.[32][33] GiveWell contends that donations have the greatest marginal impact when aimed at global poverty and health. Its top recommendations have included: malaria prevention charities such as the Against Malaria Foundation and Malaria Consortium, deworming charities like the Schistosomiasis Control Initiative and Deworm the World Initiative, and GiveDirectly, which facilitates direct unconditional cash transfers.[34][35]

The Life You Can Save, which originated from Peter Singer's book of the same name,[36] works to alleviate global poverty by promoting evidence-backed charities, conducting philanthropy education, and changing the culture of giving in affluent countries.[37][38]

The initial focus of many effective altruism projects has largely been on direct strategies, such as health interventions and cash transfers. However, more comprehensive social, economic, and political reforms aimed at facilitating long-term poverty reduction have also garnered attention.[39] In 2011, GiveWell launched GiveWell Labs, which was later renamed the Open Philanthropy Project. The project aims to research and fund more speculative and diverse causes such as policy reform, global catastrophic risk reduction, and scientific research.[40][41] The Open Philanthropy Project is a collaboration between GiveWell and Good Ventures.[42][43][44]

Long-term future and global catastrophic risks

The concept of focusing on the long-term future suggests that the cumulative value of meaningful metrics (like wealth, potential for suffering or happiness, etc.) over future generations exceeds the current value for existing populations. However, some researchers find it challenging to make such trade-offs. For example, Toby Ord stated, "Given the urgent need to address the avoidable suffering in our present, I was slow to turn to the future."[45]: 8 Ord justified his work on long-term issues by stating that mitigating future suffering is even more overlooked than current causes of suffering. Furthermore, he believes that future populations are more vulnerable to risks induced by present actions than currently disadvantaged populations.[45] A large body of scientific research suggests that near-term decisions and actions have a large long-term impact on humanity's future, which would support prioritizing near-term action over a purely long-term focus. Examples include the 2023 synthesis of the IPCC Sixth Assessment Report, which concluded that the extent to which both current and future generations will be impacted by climate change depends on choices made now and in the near term,[26] and the book Our Final Century, which addresses issues such as risks from novel and relatively novel technologies.[46]

There are several philosophical considerations when evaluating the suffering of future populations. First, the nonexistence of humans (and other animals) would eliminate the possibility of suffering, assuming that the process of extinction doesn't involve suffering itself. Second, the cost of reducing suffering in the future could be higher or lower, influenced by factors like rising healthcare costs or decreasing costs of computing and renewable energy. Third, the value of a cost or benefit is shaped by the time preferences of the recipient and the payer. Fourth, current expenditures may alleviate future suffering, possibly at a reduced cost. Fifth, early alleviation of suffering might influence future suffering. Sixth, investments with high returns could potentially reduce total suffering more than immediate donations. Seventh, future populations might be so affluent that despite a potential increase in the cost of reducing suffering, they could be better off waiting.[47] Singer suggested that existential risk should not dominate the public image of the effective altruism movement to avoid limiting its outreach.[48]

In particular, the importance of addressing existential risks, such as dangers associated with biotechnology and possibly advanced synthetic artificial intelligence, is often highlighted and is the subject of active research.[49] Because it is generally infeasible to use traditional research techniques such as randomized controlled trials to analyze existential risks, researchers such as Nick Bostrom have used methods such as expert opinion elicitation to estimate their importance.[50] Ord offered probability estimates for a number of existential risks in his 2020 book The Precipice.[51]

The Future of Humanity Institute at the University of Oxford, the Centre for the Study of Existential Risk at the University of Cambridge, and the Future of Life Institute are organizations actively engaged in research and advocacy aimed at improving the long-term future.[52] Moreover, the Machine Intelligence Research Institute has a specific focus on managing advanced artificial intelligence.[53][54]

Evidence-based policy initiatives by non-governmental organizations

Overseas Development Institute

The Overseas Development Institute (ODI) asserts that research-based evidence can significantly influence policies that have profound impacts on lives. Illustrative examples mentioned in the UK's Department for International Development's (DFID) new research strategy include a 22% reduction in neonatal mortality in Ghana, achieved by encouraging women to initiate breastfeeding within one hour of childbirth, and a 43% decrease in mortality among HIV-positive children due to the use of a widely accessible antibiotic.

Following numerous policy initiatives, the ODI evaluated its evidence-based policy efforts. The analysis identified several factors that lead to policy decisions being only weakly informed by research-based evidence: information gaps, secrecy, the need for rapid responses when data becomes available only slowly, political expediency (what is popular), and a lack of interest among policy-makers in making policies more scientifically grounded. Policy development processes are also complex and seldom linear or logical, so policy-makers are unlikely to apply presented information directly. When a discrepancy is identified between the scientific process and the political process, those seeking to reduce this gap face a choice: either to encourage politicians to adopt more scientific methods or to prompt scientists to employ more political strategies.

The ODI suggested that, in the face of limited progress in evidence-based policy, individuals and organizations possessing relevant data should leverage the emotional appeal and narrative power typically associated with politics and advertising to influence decision-makers. Instead of relying solely on tools like cost–benefit analysis and logical frameworks,[55] the ODI recommended identifying key players, crafting compelling narratives, and simplifying complex research data into clear, persuasive stories. Rather than advocating for systemic changes to promote evidence-based policy, the ODI encouraged data holders to actively engage in the political process.

Furthermore, the ODI posited that transforming a person who merely 'finds' data into someone who actively 'uses' data within our current system necessitates a fundamental shift towards policy engagement over academic achievement. This shift implies greater involvement with the policy community, the development of a research agenda centered on policy issues instead of purely academic interests, the acquisition of new skills or the formation of multidisciplinary teams, the establishment of new internal systems and incentives, increased investment in communications, the production of a different range of outputs, and enhanced collaboration within partnerships and networks.

The Future Health Systems consortium, based on research undertaken in six countries across Asia and Africa, has identified several key strategies to enhance the incorporation of evidence into policy-making.[56] These strategies include enhancing the technical capacity of policy-makers; refining the presentation of research findings; leveraging social networks; and establishing forums to facilitate the connection between evidence and policy outcomes.[57][58]

The Pew Charitable Trusts

The Pew Charitable Trusts is a non-governmental organization dedicated to using data, science, and facts to serve the public good.[59] One of its initiatives, Results First, collaborates with different US states to promote the use of evidence-based policymaking in the development of their laws.[60] The initiative has created a framework that serves as an example of how to implement evidence-based policy.

Pew's five key components of evidence-based policy are:[59]

- Program Assessment: This involves systematic reviews of the available evidence on the effectiveness of public programs, the development of a comprehensive inventory of funded programs, categorization of these programs by their proven effectiveness, and identification of their potential return on investment.

- Budget Development: This process incorporates the evidence of program effectiveness into budget and policy decisions, prioritizing funding for programs that deliver a high return on investment. It involves integrating program performance information into the budget development process, presenting information to policymakers in user-friendly formats, including relevant studies in budget hearings and committee meetings, establishing incentives for implementing evidence-based programs and practices, and building performance requirements into grants and contracts.

- Implementation Oversight: This ensures that programs are effectively delivered and remain faithful to their intended design. Key aspects include establishing quality standards for program implementation, building and maintaining capacity for ongoing quality improvement and monitoring of fidelity to program design, balancing program fidelity requirements with local needs, and conducting data-driven reviews to improve program performance.

- Outcome Monitoring: This involves routinely measuring and reporting outcome data to determine whether programs are achieving their desired results. It includes developing meaningful outcome measures for programs, agencies, and the community, conducting regular audits of systems for collecting and reporting performance data, and regularly reporting performance data to policymakers.

- Targeted Evaluation: This process involves conducting rigorous evaluations of new and untested programs to ensure they warrant continued funding. This includes leveraging available resources to conduct evaluations, targeting evaluations to high-priority programs, making better use of administrative data for program evaluations, requiring evaluations as a condition for continued funding for new initiatives, and developing a centralized repository for program evaluations.

The Coalition for Evidence-Based Policy

The Coalition for Evidence-Based Policy was a nonprofit, nonpartisan organization, whose mission was to increase government effectiveness through the use of rigorous evidence about "what works." Since 2001, the Coalition worked with U.S. Congressional and Executive Branch officials and advanced evidence-based reforms in U.S. social programs, which have been enacted into law and policy. The Coalition claimed to have no affiliation with any programs or program models, and no financial interest in the policy ideas it supported, enabling it to serve as an independent, objective source of expertise to government officials on evidence-based policy.[61][unreliable source]

Major policy initiatives enacted into law through the Coalition's work with congressional and executive branch officials include:[62]

- Evidence-Based Home Visitation Program for at-risk families with young children (Department of Health and Human Services – HHS, $1.5 billion over 2010–2014)

- Evidence-Based Teen Pregnancy Prevention Program (HHS, $109 million in FY14)

- Investing in Innovation Fund, to fund development and scale-up of evidence-based K-12 educational interventions (Department of Education, $142 million in FY14)

- First in the World Initiative, to fund development and scale-up of evidence-based interventions in postsecondary education (Department of Education, $75 million in FY14)

- Social Innovation Fund, to support public/private investment in evidence-based programs in low-income communities (Corporation for National and Community Service, $70 million in FY14)

- Trade Adjustment Assistance Community College and Career Training Grants Program, to fund development and scale-up of evidence-based education and career training programs for dislocated workers (Department of Labor – DOL, $2 billion over 2011–2014)

- Workforce Innovation Fund, to fund development and scale-up of evidence-based strategies to improve education/employment outcomes for U.S. workers (DOL, $47 million in FY14)

The Coalition's website states that "The Coalition wound down its operations in the spring of 2015, and the Coalition's leadership and core elements of the group's work have been integrated into the Laura and John Arnold Foundation".[63] In 2003 the Coalition published a guide on evidence-based educational practices.[64]

Cost-benefit analysis in evidence-based policy

Cost-benefit analysis (CBA) is a method used in evidence-based policy. It is an economic tool used to assess the economic, social, and environmental impacts of policies. The aim is to guide policymakers toward decisions that increase societal welfare.[65]
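As a rough illustration of the arithmetic behind such an analysis, the Python sketch below computes a net present value: the yearly benefits minus costs, each discounted back to the present. Discounting is a common convention in cost-benefit work, but its use here is an assumption of the sketch and is not prescribed by the sources cited in this article. The function name `net_present_value`, the 5% discount rate, and all monetary figures are hypothetical.

```python
def net_present_value(benefits, costs, discount_rate):
    """Sum of discounted net benefits; year 0 is not discounted.

    A positive result means the discounted benefits outweigh the
    discounted costs under the chosen (assumed) discount rate.
    """
    return sum(
        (benefit - cost) / (1 + discount_rate) ** year
        for year, (benefit, cost) in enumerate(zip(benefits, costs))
    )


# Hypothetical program: an up-front cost in year 0, then three years of
# benefits with smaller running costs (all figures in millions).
benefits = [0.0, 60.0, 60.0, 60.0]
costs = [100.0, 10.0, 10.0, 10.0]

npv = net_present_value(benefits, costs, discount_rate=0.05)
verdict = "outweigh" if npv > 0 else "do not outweigh"
print(f"Net present value: {npv:.2f} million; benefits {verdict} costs")
```

Where some effects are hard to quantify, an analyst might run the same calculation over a range of plausible values and check only whether the sign of the result changes, rather than reporting a single figure.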

In the United States, the use of cost-benefit analysis in policy-making was first mandated by President Ronald Reagan's Executive Order 12291 in 1981. This order stated that administrative decisions should use sufficient information regarding the potential impacts of regulation. Maximizing the net benefits to society was a primary focus among the five general requirements of the order.[66]

Later presidents, including Bill Clinton and Barack Obama, modified but still emphasized the importance of cost-benefit analysis in their executive orders. For example, Clinton's Executive Order 12866 kept the need for cost-benefit analysis but also stressed the importance of flexibility, public involvement, and coordination among agencies.[67]

During Obama's administration, Executive Order 13563 further strengthened the role of cost-benefit analysis in regulatory review. It encouraged agencies to consider values that are hard or impossible to quantify, like equity, human dignity, and fairness.[68]

The use of cost-benefit analysis in these executive orders highlights its importance in evidence-based policy. By comparing the potential impacts of different policy options, cost-benefit analysis aids in making policy decisions that are based on empirical evidence and designed to maximize societal benefits.

Critiques

Evidence-based policy has faced several critiques. Paul Cairney, a professor of politics and public policy at the University of Stirling in Scotland, contends[69] that proponents of the approach often underestimate the complexity of policy-making and misconstrue how policy decisions are typically made. Nancy Cartwright and Jeremy Hardie[70] question the emphasis on randomized controlled trials (RCTs), arguing that evidence from RCTs is not always sufficient for making decisions. They suggest that applying experimental evidence to a policy context requires an understanding of the conditions present within the experimental setting and an assertion that these conditions also exist in the target environment of the proposed intervention. Additionally, they argue that the prioritization of RCTs could lead to the criticism of evidence-based policy being overly focused on narrowly defined 'interventions', which implies surgical actions on one causal factor to influence its effect.

The concept of intervention within the evidence-based policy movement aligns with James Woodward's interventionist theory of causality.[71] However, policy-making also involves other types of decisions, such as institutional reforms and predictive actions. These other forms of evidence-based decision-making do not necessitate evidence of an invariant causal relationship under intervention. Hence, mechanistic evidence and observational studies are often adequate for implementing institutional reforms and actions that do not alter the causes of a causal claim.[72]

Furthermore, there have been reports[73] of frontline public servants, such as hospital managers, making decisions that detrimentally affect patient care to meet predetermined targets. This argument was presented by Professor Jerry Muller of the Catholic University of America in his book The Tyranny of Metrics.[74]

See also

- Argument map

- Effective altruism

- Evidence-based legislation

- Evidence-based management

- Evidence-based policing

- Evidence-based practices

- Evidence-based research

- Inverse benefit law

- Knowledge-based decision making

- Libertarian paternalism

- March for Science

- Nudge theory

- Policy-based evidence making

- Politicization of science

- Science policy

- Scientocracy

- Technocracy

- Wildlife Enforcement Monitoring System

References

- ↑ 1.0 1.1 1.2 Baron, Jon (2018-07-01). "A Brief History of Evidence-Based Policy". The Annals of the American Academy of Political and Social Science 678 (1): 40–50. doi:10.1177/0002716218763128. ISSN 0002-7162.

- ↑ Head, Brian. (2009). Evidence-based policy: principles and requirements. University of Queensland. Retrieved 4 June 2010.

- ↑ Gade, Christian (2023). "When is it justified to claim that a practice or policy is evidence-based? Reflections on evidence and preferences". Evidence & Policy: 1–10. doi:10.1332/174426421X16905606522863.

This article incorporates text available under the CC BY 4.0 license.

- ↑ Head, Brian. (2009). Evidence-based policy: principles and requirements. University of Queensland. Retrieved 4 June 2010.

- ↑ Petticrew, M (2003). "Evidence, hierarchies, and typologies: Horses for courses". Journal of Epidemiology & Community Health 57 (7): 527–529. doi:10.1136/jech.57.7.527. PMID 12821702.

- ↑ Parkhurst, Justin (2017). The Politics of Evidence: from Evidence Based Policy to the Good Governance of Evidence. London: Routledge. doi:10.4324/9781315675008. ISBN 978-1138939400. http://eprints.lse.ac.uk/68604/1/Parkhurst_The%20Politics%20of%20Evidence.pdf.[page needed]

- ↑ 7.0 7.1 Guyatt, G. H. (1993-12-01). "Users' guides to the medical literature. II. How to use an article about therapy or prevention. A. Are the results of the study valid? Evidence-Based Medicine Working Group". JAMA: The Journal of the American Medical Association 270 (21): 2598–2601. doi:10.1001/jama.270.21.2598. ISSN 0098-7484. PMID 8230645. http://dx.doi.org/10.1001/jama.270.21.2598.

- ↑ Hammersley, M. (2013) The Myth of Research-Based Policy and Practice, London, Sage.

- ↑ 9.0 9.1 9.2 9.3 Banks, Gary (2009). Evidence-based policy making: What is it? How do we get it?. Australian Government, Productivity Commission. Retrieved 4 June 2010.

- ↑ Guyatt, Gordon H. (1991-03-01). "Evidence-based medicine". ACP Journal Club 114 (2): A16. doi:10.7326/ACPJC-1991-114-2-A16. ISSN 1056-8751. https://www.acpjournals.org/doi/10.7326/ACPJC-1991-114-2-A16.

- ↑ Payne-Palacio, June R.; Canter, Deborah D. (2016) (in en). The Profession of Dietetics: A Team Approach. Jones & Bartlett Learning. ISBN 978-1284126358. https://books.google.com/books?id=aFf_DAAAQBAJ&dq=In+order+to+evaluate+the+efficacy+of+ginseng%2C+find+two+people+and+let+one+eat+ginseng+and+run%2C+the+other+run+without+ginseng.+The+one+that+did+not+eat+ginseng+will+develop+shortness+of+breath+sooner&pg=PA4.

- ↑ 12.0 12.1 12.2 Marston & Watts. Tampering with the Evidence: A Critical Appraisal of Evidence-Based Policy-Making. RMIT University. Retrieved 10 September 2014.

- ↑ The Cochrane Collaboration. Retrieved 10 September 2014.

- ↑ Claridge, Jeffrey A.; Fabian, Timothy C. (2005-05-01). "History and Development of Evidence-based Medicine" (in en). World Journal of Surgery 29 (5): 547–553. doi:10.1007/s00268-005-7910-1. ISSN 1432-2323. PMID 15827845. https://doi.org/10.1007/s00268-005-7910-1.

- ↑ "Evidence-based policy making". Department for Environment, Food and Rural Affairs. 21 September 2006. http://www.defra.gov.uk/corporate/policy/evidence/index.htm.

- ↑ The Campbell Collaboration. Retrieved 10 September 2014.

- ↑ 17.0 17.1 Thompson, Derek (15 June 2015). "The Greatest Good". The Atlantic. https://www.theatlantic.com/business/archive/2015/06/what-is-the-greatest-good/395768/.

- ↑ Dick, Andrew J.; Rich, William; Waters, Tony (2016). Prison Vocational Education and Policy in the United States. New York: Palgrave Macmillan. pp. 11–40, 281–306.

- ↑ 19.0 19.1 19.2 Brownson, Ross C.; Chriqui, Jamie F.; Stamatakis, Katherine A. (2009). "Understanding Evidence-Based Public Health Policy". American Journal of Public Health 99 (9): 1576–1583. doi:10.2105/AJPH.2008.156224. ISSN 0090-0036. PMID 19608941.

- ↑ 20.0 20.1 Court, Julius; Sutcliffe, Sophie (November 2005). "Evidence-Based Policymaking: What is it? How does it work? What relevance for developing countries?". Overseas Development Institute. https://cdn.odi.org/media/documents/3683.pdf.

- ↑ Kissel, Joshua (31 January 2017). "Effective Altruism and Anti-Capitalism: An Attempt at Reconciliation". Essays in Philosophy 18 (1): 68–90. doi:10.7710/1526-0569.1573.

- ↑ Pummer, Theron; MacAskill, William (June 2020). "Effective altruism". in LaFollette, Hugh. International Encyclopedia of Ethics. Hoboken, NJ: John Wiley & Sons. pp. 1–9. doi:10.1002/9781444367072.wbiee883. ISBN 978-1444367072. OCLC 829259960. https://philarchive.org/archive/PUMEA.

- ↑ MacAskill, William (2019a). "The definition of effective altruism". in Greaves, Hilary; Pummer, Theron. Effective Altruism: Philosophical Issues. Engaging philosophy. Oxford; New York: Oxford University Press. pp. 10–28. doi:10.1093/oso/9780198841364.003.0001. ISBN 978-0198841364. OCLC 1101772304.

- ↑ You have $8 billion. You want to do as much good as possible. What do you do? Inside the Open Philanthropy Project

- ↑ Hoffman, Steven J.; Baral, Prativa; Rogers Van Katwyk, Susan; Sritharan, Lathika; Hughsam, Matthew; Randhawa, Harkanwal; Lin, Gigi; Campbell, Sophie et al. (9 August 2022). "International treaties have mostly failed to produce their intended effects" (in en). Proceedings of the National Academy of Sciences 119 (32): e2122854119. doi:10.1073/pnas.2122854119. ISSN 0027-8424. PMID 35914153. Bibcode: 2022PNAS..11922854H.

- University press release: "Do international treaties actually work? Study says they mostly don't" (in en). York University. https://phys.org/news/2022-08-international-treaties-dont.html.

- ↑ 26.0 26.1 "AR6 Synthesis Report: Climate Change 2023 — IPCC". https://www.ipcc.ch/report/sixth-assessment-report-cycle/.

- ↑ Weidner, Till; Guillén-Gosálbez, Gonzalo (15 February 2023). "Planetary boundaries assessment of deep decarbonisation options for building heating in the European Union" (in en). Energy Conversion and Management 278: 116602. doi:10.1016/j.enconman.2022.116602. ISSN 0196-8904.

- ↑ "GermanZero - Creating a better climate". https://germanzero.de/english#.

- ↑ Capstick, Stuart; Thierry, Aaron; Cox, Emily; Berglund, Oscar; Westlake, Steve; Steinberger, Julia K. (September 2022). "Civil disobedience by scientists helps press for urgent climate action" (in en). Nature Climate Change 12 (9): 773–774. doi:10.1038/s41558-022-01461-y. ISSN 1758-6798. Bibcode: 2022NatCC..12..773C. https://www.nature.com/articles/s41558-022-01461-y.

- ↑ Ripple, William J; Wolf, Christopher; Newsome, Thomas M; Barnard, Phoebe; Moomaw, William R (5 November 2019). "World Scientists' Warning of a Climate Emergency". BioScience. doi:10.1093/biosci/biz088.

- ↑ Bik, Holly M.; Goldstein, Miriam C. (23 April 2013). "An Introduction to Social Media for Scientists" (in en). PLOS Biology 11 (4): e1001535. doi:10.1371/journal.pbio.1001535. ISSN 1545-7885. PMID 23630451.

- ↑ Karnofsky, Holden (13 August 2013). "Effective Altruism". GiveWell. http://blog.givewell.org/2013/08/13/effective-altruism/.

- ↑ Konduri, Vimal. "GiveWell Co-Founder Explains Effective Altruism Frameworks" (in en). http://www.thecrimson.com/article/2015/12/4/givewell-founder-effective-altruism/.

- ↑ "Doing good by doing well". The Economist. https://www.economist.com/news/international/21651815-lessons-business-charities-doing-good-doing-well.

- ↑ Pitney, Nico (26 March 2015). "That Time A Hedge Funder Quit His Job And Then Raised $60 Million For Charity". Huffington Post. https://www.huffingtonpost.com/2015/03/26/elie-hassenfeld-givewell_n_6927320.html.

- ↑ Singer, Peter (2009). The Life You Can Save: Acting Now to End World Poverty. New York: Random House. ISBN 978-1400067107. OCLC 232980306.

- ↑ Zhang, Linch (17 March 2017). "How To Do Good: A Conversation with the World's Leading Ethicist" (in en-US). https://www.huffingtonpost.com/entry/how-to-do-good-a-conversation-with-the-worlds-leading_us_58cb8dcee4b0537abd956f91.

- ↑ The Life You Can Save. "Our story – The Life You Can Save". https://www.thelifeyoucansave.org/our-story/.

- ↑ Weathers, Scott (29 February 2016). "Can 'effective altruism' change the world? It already has." (in en). https://www.opendemocracy.net/transformation/scott-weathers/can-effective-altruism-change-world-it-already-has.

- ↑ "Focus Areas | Open Philanthropy Project". https://www.openphilanthropy.org/focus.

- ↑ Karnofsky, Holden (8 September 2011). "Announcing GiveWell Labs". GiveWell. http://blog.givewell.org/2011/09/08/announcing-givewell-labs/.

- ↑ "Who We Are | Open Philanthropy Project". https://www.openphilanthropy.org/about/who-we-are.

- ↑ Karnofsky, Holden (20 August 2014). "Open Philanthropy Project (formerly GiveWell Labs)". GiveWell. http://blog.givewell.org/2014/08/20/open-philanthropy-project-formerly-givewell-labs/.

- ↑ Moses, Sue-Lynn (9 March 2016). "Leverage: Why This Silicon Valley Funder Is Doubling Down on a Beltway Think Tank". Inside Philanthropy. https://www.insidephilanthropy.com/home/2016/3/9/leverage-why-this-silicon-valley-funder-is-doubling-down-on.html.

- ↑ 45.0 45.1 Ord, Toby (2020). "Introduction". The Precipice: Existential Risk and the Future of Humanity. London: Bloomsbury Publishing. ISBN 978-1526600196. OCLC 1143365836. https://cdn.80000hours.org/wp-content/uploads/2020/03/The-Precipice-Introduction-Chapter-1.pdf. Retrieved 2020-04-06.

- ↑ Rees, Martin (2003). Our final century: a scientist's warning; how terror, error, and environmental disaster threaten humankind's future in this century - on Earth and beyond (1st ed.). London: Heinemann. ISBN 0-434-00809-5.

- ↑ Bostrom, Nick (February 2013). "Existential risk prevention as global priority". Global Policy 4: 15–31. doi:10.1111/1758-5899.12002.

- ↑ Klein, Ezra (6 December 2019). "Peter Singer on the lives you can save" (in en). Vox. https://www.vox.com/future-perfect/2019/12/6/20992100/peter-singer-effective-altruism-lives-you-can-save-animal-liberation.

- ↑ Todd, Benjamin (2017). "Why despite global progress, humanity is probably facing its most dangerous time ever" (in en-US). https://80000hours.org/articles/extinction-risk/.

- ↑ Rowe, Thomas; Beard, Simon (2018). "Probabilities, methodologies and the evidence base in existential risk assessments". Working Paper, Centre for the Study of Existential Risk. http://eprints.lse.ac.uk/89506/1/Beard_Existential-Risk-Assessments_Accepted.pdf. Retrieved 26 August 2018.

- ↑ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. London: Bloomsbury Publishing. p. 167. ISBN 978-1526600196. OCLC 1143365836.

- ↑ Guan, Melody (19 April 2015). "The New Social Movement of our Generation: Effective Altruism". Harvard Political Review. http://harvardpolitics.com/harvard/new-social-movement-generation-effective-altruism/.

- ↑ Piper, Kelsey (2018-12-21). "The case for taking AI seriously as a threat to humanity". https://www.vox.com/future-perfect/2018/12/21/18126576/ai-artificial-intelligence-machine-learning-safety-alignment.

- ↑ Basulto, Dominic (7 July 2015). "The very best ideas for preventing artificial intelligence from wrecking the planet". The Washington Post. https://www.washingtonpost.com/news/innovations/wp/2015/07/07/the-very-best-ideas-for-preventing-artificial-intelligence-from-wrecking-the-planet/.

- ↑ "Policy Entrepreneurs: Their Activity Structure and Function in the Policy Process". Journal of Public Administration Research and Theory. 1991. doi:10.1093/oxfordjournals.jpart.a037081.

- ↑ Syed, Shamsuzzoha B; Hyder, Adnan A; Bloom, Gerald; Sundaram, Sandhya; Bhuiya, Abbas; Zhenzhong, Zhang; Kanjilal, Barun; Oladepo, Oladimeji et al. (2008). "Exploring evidence-policy linkages in health research plans: A case study from six countries". Health Research Policy and Systems 6: 4. doi:10.1186/1478-4505-6-4. PMID 18331651.

- ↑ Hyder, A (14 June 2010). "National Policy-Makers Speak Out: Are Researchers Giving Them What They Need?". Health Policy and Planning. http://www.futurehealthsystems.org/publications/national-policy-makers-speak-out-are-researchers-giving-them.html. Retrieved 26 May 2012.

- ↑ Hyder, A; Syed, S; Puvanachandra, P; Bloom, G; Sundaram, S; Mahmood, S; Iqbal, M; Hongwen, Z et al. (2010). "Stakeholder analysis for health research: Case studies from low- and middle-income countries". Public Health 124 (3): 159–166. doi:10.1016/j.puhe.2009.12.006. PMID 20227095.

- ↑ 59.0 59.1 Evidence-Based Policymaking. 2014. https://www.pewtrusts.org/~/media/assets/2014/11/-evidencebasedpolicymakingaguideforeffectivegovernment.pdf.

- ↑ "About The Pew Charitable Trusts" (in en). https://pew.org/OCwZsy.

- ↑ The Coalition for Evidence-Based Policy. Retrieved 18 September 2014.

- ↑ "Coalition for Evidence-Based Policy" (in en-US). http://coalition4evidence.org/.

- ↑ "Coalition for Evidence-Based Policy". http://coalition4evidence.org.

- ↑ "Identifying and Implementing Educational Practices Supported By Rigorous Evidence: A User-Friendly Guide, 2003". https://ies.ed.gov/ncee/pdf/evidence_based.pdf.

- ↑ Boardman, Anthony E. "Cost-Benefit Analysis: Concepts and Practice". https://www.cambridge.org/core/books/costbenefit-analysis/89B06B085717BBADC68B2B568F8B81EC.

- ↑ "Executive Order 12291". https://en.wikisource.org/wiki/Executive_Order_12291.

- ↑ "Executive Orders Disposition Tables Clinton - 1993". 15 August 2016. https://www.archives.gov/federal-register/executive-orders/1993-clinton.html.

- ↑ "Executive Order 13563 - Improving Regulation and Regulatory Review". 18 January 2011. https://obamawhitehouse.archives.gov/the-press-office/2011/01/18/executive-order-13563-improving-regulation-and-regulatory-review.

- ↑ Cairney, Paul (2016). The politics of evidence-based policy making. New York. ISBN 978-1137517814. OCLC 946724638.

- ↑ Cartwright, Nancy; Hardie, Jeremy (2012) (in en). Evidence-Based Policy: A Practical Guide to Doing It Better. Oxford University Press. ISBN 978-0199986705. https://books.google.com/books?id=JtsFOZRCY0EC&q=cartwright+and+hardie+evidence+based+policy.

- ↑ Woodward, James (2005) (in en). Making Things Happen: A Theory of Causal Explanation. Oxford University Press. ISBN 978-0198035336. https://books.google.com/books?id=vvj7frYow6IC&q=james+woodward+causality.

- ↑ Maziarz, Mariusz (2020). The Philosophy of Causality in Economics: Causal Inferences and Policy Proposals. London & New York: Routledge.

- ↑ "Government by numbers: how data is damaging our public services" (in en-US). Apolitical. https://apolitical.co/solution_article/government-numbers-data-damaging-public-services/.

- ↑ Muller, Jerry Z. (2017). The tyranny of metrics. Princeton. ISBN 978-0691174952. OCLC 1005121833.

Further reading

- Cartwright, Nancy; Stegenga, Jacob (2011). "A theory of evidence for evidence-based policy". Proceedings of the British Academy 171: 291–322. https://www.academia.edu/2310181.

- Davies, H. T. O.; Nutley, S. M.; Smith, P. C. (Eds.). (2000). What Works? Evidence-based Policy and Practice in the Public Services. Bristol: Policy Press.

- Hammersley, M. (2002). Educational Research, Policymaking and Practice. Paul Chapman/Sage. ISBN 9781847876454.

- Hammersley, M. (2013). The Myth of Research-Based Policy and Practice. London: Sage. ISBN 1446291715.

- McKinnon, Madeleine C.; Cheng, Samantha H.; Garside, Ruth; Masuda, Yuta J.; Miller, Daniel C. (2015). "Sustainability: Map the evidence". Nature 528 (7581): 185–187. doi:10.1038/528185a. PMID 26659166. Bibcode: 2015Natur.528..185M.

External links

- "Modernising Government". http://www.archive.official-documents.co.uk/document/cm43/4310/4310.htm.

- "U.S. Evidence-Based Policymaking Commission Act of 2016". https://www.congress.gov/114/plaws/publ140/PLAW-114publ140.pdf.

- "U.S. Commission on Evidence-based Policy". https://www.cep.gov/.

- "U.S. House bill passed to enact some of CEP recommendations". November 2017. https://www.congress.gov/bill/115th-congress/house-bill/4174.
