Smooth maximum

In mathematics, a smooth maximum of an indexed family $x_1, \ldots, x_n$ of numbers is a smooth approximation to the maximum function $\max(x_1, \ldots, x_n)$, meaning a parametric family of functions $m_\alpha(x_1, \ldots, x_n)$ such that for every $\alpha$ the function $m_\alpha$ is smooth, and the family converges to the maximum function, $m_\alpha \to \max$, as $\alpha \to \infty$. The concept of smooth minimum is defined similarly. In many cases, a single family approximates both: the maximum as the parameter goes to positive infinity and the minimum as it goes to negative infinity; in symbols, $m_\alpha \to \max$ as $\alpha \to \infty$ and $m_\alpha \to \min$ as $\alpha \to -\infty$. The term can also be used loosely for a specific smooth function that behaves similarly to a maximum, without necessarily being part of a parametrized family.

Examples

Boltzmann operator

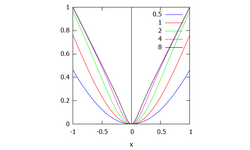

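For large positive values of the parameter $\alpha$, the following formulation is a smooth, differentiable approximation of the maximum function. For negative values of the parameter that are large in absolute value, it approximates the minimum.

$$\mathcal{S}_\alpha(x_1, \ldots, x_n) = \frac{\sum_{i=1}^n x_i e^{\alpha x_i}}{\sum_{i=1}^n e^{\alpha x_i}}$$

$\mathcal{S}_\alpha$ has the following properties:

- $\mathcal{S}_\alpha \to \max$ as $\alpha \to \infty$

- $\mathcal{S}_0$ is the arithmetic mean of its inputs

- $\mathcal{S}_\alpha \to \min$ as $\alpha \to -\infty$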
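The three properties can be checked numerically. The following is a minimal sketch, assuming the operator is the exponentially weighted average of the inputs with weights $e^{\alpha x_i}$; the function name `boltzmann` and the sample values are illustrative choices, not part of any library.

```python
import math

def boltzmann(xs, alpha):
    """Average of xs weighted by exp(alpha * x) (illustrative helper)."""
    # Subtract the largest exponent before calling exp() to avoid overflow;
    # the common factor cancels between numerator and denominator.
    shift = max(alpha * x for x in xs)
    weights = [math.exp(alpha * x - shift) for x in xs]
    return sum(w * x for w, x in zip(weights, xs)) / sum(weights)

xs = [1.0, 2.0, 3.0]
print(boltzmann(xs, 50.0))   # close to max(xs)
print(boltzmann(xs, 0.0))    # arithmetic mean of xs
print(boltzmann(xs, -50.0))  # close to min(xs)
```

The shift by the largest exponent is a common stabilization trick and does not change the value of the ratio.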

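The gradient of $\mathcal{S}_\alpha$ is closely related to softmax and is given by

$$\nabla_{x_i} \mathcal{S}_\alpha(x_1, \ldots, x_n) = \frac{e^{\alpha x_i}}{\sum_{j=1}^n e^{\alpha x_j}} \left[ 1 + \alpha \left( x_i - \mathcal{S}_\alpha(x_1, \ldots, x_n) \right) \right].$$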

This makes the softmax function useful for optimization techniques that use gradient descent.
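As a sanity check on the gradient, the sketch below compares a closed form (softmax weight times $1 + \alpha(x_i - \mathcal{S}_\alpha)$, obtained by differentiating the weighted average directly) against a central finite difference. All function names here are hypothetical helpers written for this illustration.

```python
import math

def softmax(xs, alpha):
    """Softmax weights exp(alpha * x_i) / sum_j exp(alpha * x_j)."""
    shift = max(alpha * x for x in xs)
    weights = [math.exp(alpha * x - shift) for x in xs]
    total = sum(weights)
    return [w / total for w in weights]

def boltzmann(xs, alpha):
    """Softmax-weighted average of xs."""
    return sum(p * x for p, x in zip(softmax(xs, alpha), xs))

def boltzmann_grad(xs, alpha):
    """Closed-form partial derivatives of boltzmann with respect to each x_i."""
    s = boltzmann(xs, alpha)
    return [p * (1.0 + alpha * (x - s)) for p, x in zip(softmax(xs, alpha), xs)]

def finite_difference_grad(xs, alpha, h=1e-6):
    """Central-difference approximation, used only to check the closed form."""
    grad = []
    for i in range(len(xs)):
        up = xs[:i] + [xs[i] + h] + xs[i + 1:]
        down = xs[:i] + [xs[i] - h] + xs[i + 1:]
        grad.append((boltzmann(up, alpha) - boltzmann(down, alpha)) / (2 * h))
    return grad

xs = [0.5, -1.0, 2.0]
print(boltzmann_grad(xs, 1.5))
print(finite_difference_grad(xs, 1.5))
```

The two printed lists should agree to several decimal places. The partial derivatives also sum to 1, since adding a constant to every input shifts the output by the same constant.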

This operator is sometimes called the Boltzmann operator,[1] after the Boltzmann distribution.

LogSumExp

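Another smooth maximum is LogSumExp:

$$\mathrm{LSE}_\alpha(x_1, \ldots, x_n) = \frac{1}{\alpha} \log \sum_{i=1}^n \exp(\alpha x_i)$$

This can also be normalized if the $x_i$ are all non-negative, yielding a function with domain $[0, \infty)^n$ and range $[0, \infty)$:

$$g(x_1, \ldots, x_n) = \log\left( \sum_{i=1}^n \exp(x_i) - (n - 1) \right)$$

The $(n - 1)$ term corrects for the fact that $\exp(0) = 1$ by canceling out all but one zero exponential, so that $g = \log 1 = 0$ if all $x_i$ are zero.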
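A minimal sketch of both variants follows, assuming the scaled form $\frac{1}{\alpha}\log\sum_i \exp(\alpha x_i)$ with $\alpha \neq 0$ and the normalized form $\log(\sum_i \exp(x_i) - (n-1))$ for non-negative inputs. The function names are illustrative, not taken from a library.

```python
import math

def logsumexp(xs, alpha=1.0):
    """Scaled LogSumExp; alpha must be nonzero."""
    # Factor out the largest exponent so exp() cannot overflow.
    shift = max(alpha * x for x in xs)
    total = sum(math.exp(alpha * x - shift) for x in xs)
    return (shift + math.log(total)) / alpha

def normalized_logsumexp(xs):
    """Normalized variant for non-negative inputs; maps all zeros to zero."""
    n = len(xs)
    return math.log(sum(math.exp(x) for x in xs) - (n - 1))

xs = [1.0, 2.0, 3.0]
print(logsumexp(xs, 2.0))                     # slightly above max(xs)
print(logsumexp(xs, 100.0))                   # very close to max(xs)
print(normalized_logsumexp([0.0, 0.0, 0.0]))  # log(1), i.e. zero
```

For $\alpha > 0$ the scaled value lies between $\max_i x_i$ and $\max_i x_i + \frac{\log n}{\alpha}$, so increasing $\alpha$ tightens the approximation.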

Mellowmax

The mellowmax operator[1] is defined as follows:

$$\mathrm{mm}_\alpha(x) = \frac{1}{\alpha} \log\left( \frac{1}{n} \sum_{i=1}^n \exp(\alpha x_i) \right)$$

It is a non-expansive operator. As $\alpha \to \infty$, it acts like a maximum. As $\alpha \to 0$, it acts like an arithmetic mean. As $\alpha \to -\infty$, it acts like a minimum. This operator can be viewed as a particular instantiation of the quasi-arithmetic mean. It can also be derived from information-theoretic principles as a way of regularizing policies with a cost function defined by KL divergence. The operator has previously been used in other areas, such as power engineering.[2]
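The three limiting behaviours can be observed numerically. This is a sketch only, assuming mellowmax is the log of the mean (rather than the sum) of $\exp(\alpha x_i)$, divided by $\alpha$; the name `mellowmax` and the test values are illustrative.

```python
import math

def mellowmax(xs, alpha):
    """(1/alpha) * log(mean(exp(alpha * x))); alpha must be nonzero."""
    n = len(xs)
    # Factor out the largest exponent so exp() cannot overflow.
    shift = max(alpha * x for x in xs)
    mean_exp = sum(math.exp(alpha * x - shift) for x in xs) / n
    return (shift + math.log(mean_exp)) / alpha

xs = [1.0, 2.0, 3.0]
print(mellowmax(xs, 200.0))   # approaches max(xs)
print(mellowmax(xs, 1e-4))    # approaches the arithmetic mean
print(mellowmax(xs, -200.0))  # approaches min(xs)

# Non-expansion: outputs differ by no more than the largest input difference.
ys = [1.5, 1.0, 3.2]
print(abs(mellowmax(xs, 2.0) - mellowmax(ys, 2.0))
      <= max(abs(a - b) for a, b in zip(xs, ys)))
```

The value at $\alpha = 0$ is undefined in this form, so the mean is probed with a small nonzero $\alpha$ instead.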

Connection between LogSumExp and Mellowmax

LogSumExp and Mellowmax are the same function up to an additive constant: $\mathrm{LSE}_\alpha - \mathrm{mm}_\alpha = \frac{\log n}{\alpha}$. For $\alpha > 0$, LogSumExp is never smaller than the true max; it exceeds the true max by at most $\frac{\log n}{\alpha}$, which occurs when all $n$ arguments are equal, and it equals the true max exactly when all but one argument is $-\infty$. Similarly, Mellowmax is never larger than the true max; it falls short of the true max by at most $\frac{\log n}{\alpha}$, which occurs when all but one argument is $-\infty$, and it equals the true max exactly when all $n$ arguments are equal.
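The constant offset and the two extreme cases can be verified directly. The sketch below assumes $\alpha > 0$ and implements both functions independently (sum versus mean inside the logarithm); the helper names are illustrative.

```python
import math

def logsumexp(xs, alpha):
    """(1/alpha) * log(sum(exp(alpha * x)))."""
    shift = max(alpha * x for x in xs)
    return (shift + math.log(sum(math.exp(alpha * x - shift) for x in xs))) / alpha

def mellowmax(xs, alpha):
    """(1/alpha) * log(mean(exp(alpha * x)))."""
    shift = max(alpha * x for x in xs)
    mean_exp = sum(math.exp(alpha * x - shift) for x in xs) / len(xs)
    return (shift + math.log(mean_exp)) / alpha

alpha = 4.0
xs = [0.3, -1.2, 2.5, 2.4]
offset = math.log(len(xs)) / alpha

# The two functions differ by log(n) / alpha for any input.
print(logsumexp(xs, alpha) - mellowmax(xs, alpha), offset)

# All arguments equal: mellowmax is exact, LogSumExp overshoots by log(n) / alpha.
equal = [5.0, 5.0, 5.0]
print(mellowmax(equal, alpha), logsumexp(equal, alpha))

# All but one argument at -infinity: LogSumExp is exact, mellowmax undershoots.
lone = [5.0, -math.inf, -math.inf]
print(logsumexp(lone, alpha), mellowmax(lone, alpha))
```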

p-Norm

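Another smooth maximum is the p-norm:

$$\|(x_1, \ldots, x_n)\|_p = \left( \sum_{i=1}^n |x_i|^p \right)^{1/p}$$

which converges to $\|(x_1, \ldots, x_n)\|_\infty = \max_{1 \le i \le n} |x_i|$ as $p \to \infty$.

An advantage of the p-norm is that it is a norm. As such it is scale invariant (homogeneous): $\|(\lambda x_1, \ldots, \lambda x_n)\|_p = |\lambda| \cdot \|(x_1, \ldots, x_n)\|_p$, and it satisfies the triangle inequality.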
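The convergence and the homogeneity property can be illustrated with a short sketch. It assumes the usual definition $(\sum_i |x_i|^p)^{1/p}$ with $p \ge 1$; note that the limit is the largest absolute value, so the result approximates the maximum itself only for non-negative inputs. The function name `p_norm` is illustrative.

```python
def p_norm(xs, p):
    """p-norm of xs, rescaled by the largest magnitude for numerical safety."""
    scale = max(abs(x) for x in xs)
    if scale == 0.0:
        return 0.0
    # Every ratio is at most 1, so raising it to a large p cannot overflow.
    return scale * sum((abs(x) / scale) ** p for x in xs) ** (1.0 / p)

xs = [1.0, -2.0, 3.0]
for p in (1, 2, 10, 200):
    print(p, p_norm(xs, p))  # decreases toward max(|x_i|) as p grows

# Homogeneity: scaling every input by lam scales the norm by |lam|.
lam = -2.0
print(p_norm([lam * x for x in xs], 3), abs(lam) * p_norm(xs, 3))
```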

Smooth maximum unit

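The following binary operator is called the Smooth Maximum Unit (SMU):[3]

$$\max\nolimits_\varepsilon(a, b) = \frac{a + b + |a - b|_\varepsilon}{2} = \frac{a + b + \sqrt{(a - b)^2 + \varepsilon}}{2}$$

where $\varepsilon \ge 0$ is a parameter. As $\varepsilon \to 0$, $|\cdot|_\varepsilon \to |\cdot|$ and thus $\max\nolimits_\varepsilon \to \max$.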
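A minimal sketch of this operator, assuming the smoothed absolute value is $\sqrt{(a-b)^2 + \varepsilon}$ so that the exact maximum $\frac{a + b + |a - b|}{2}$ is recovered at $\varepsilon = 0$. The function name `smooth_max_unit` is an illustrative choice.

```python
import math

def smooth_max_unit(a, b, eps):
    """Binary smooth maximum: (a + b + sqrt((a - b)^2 + eps)) / 2, eps >= 0."""
    return (a + b + math.sqrt((a - b) ** 2 + eps)) / 2.0

a, b = 1.0, 3.0
for eps in (1.0, 1e-2, 1e-4, 0.0):
    print(eps, smooth_max_unit(a, b, eps))  # approaches max(a, b) as eps shrinks

# The kink of max at a == b is rounded off for eps > 0:
# the value there is a + sqrt(eps) / 2.
print(smooth_max_unit(2.0, 2.0, 0.04))
```

For any $\varepsilon > 0$ the result is slightly larger than the true maximum, with the largest gap where the two arguments coincide.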

References

- [1] Asadi, Kavosh; Littman, Michael L. (2017). "An Alternative Softmax Operator for Reinforcement Learning". PMLR 70: 243–252. https://proceedings.mlr.press/v70/asadi17a.html. Retrieved January 6, 2023.

- [2] Safak, Aysel (February 1993). "Statistical analysis of the power sum of multiple correlated log-normal components". IEEE Transactions on Vehicular Technology 42 (1): 58–61. doi:10.1109/25.192387.

- [3] Biswas, Koushik; Kumar, Sandeep; Banerjee, Shilpak; Pandey, Ashish Kumar (2021). "SMU: Smooth activation function for deep networks using smoothing maximum technique". arXiv:2111.04682 [cs.LG].

https://www.johndcook.com/soft_maximum.pdf

M. Lange, D. Zühlke, O. Holz, and T. Villmann, "Applications of lp-norms and their smooth approximations for gradient based learning vector quantization," in Proc. ESANN, Apr. 2014, pp. 271-276. (https://www.elen.ucl.ac.be/Proceedings/esann/esannpdf/es2014-153.pdf)
