Prosecutor's fallacy

The prosecutor's fallacy is a fallacy of statistical reasoning involving a test for an occurrence, such as a DNA match. A positive result in the test may paradoxically be more likely to be an erroneous result than an actual occurrence, even if the test is very accurate. The fallacy is so named because it is typically used by a prosecutor to exaggerate the probability of a criminal defendant's guilt. The fallacy can be used to support other claims as well, including the innocence of a defendant.

For instance, if a perpetrator were known to have the same blood type as a given defendant, and 10% of the population were known to share that blood type, then one version of the prosecutor's fallacy would be to claim that, on that basis alone, the probability that the defendant is guilty is 90%. However, this conclusion is only close to correct if the defendant was selected as the main suspect based on robust evidence discovered prior to the blood test and unrelated to it (the blood match may then be an "unexpected coincidence"). Otherwise, the reasoning presented is flawed, as it overlooks the high prior probability (that is, prior to the blood test) that he is a random innocent person. Assume, for instance, that 1000 people live in the town where the murder occurred and that the perpetrator is one of them. Then 100 people living there have the perpetrator's blood type, so the true probability that the defendant is guilty, based only on the fact that his blood type matches that of the killer, is 1 in 100, or 1%, far less than the 90% argued by the prosecutor.

At its heart, therefore, the fallacy involves assuming that the prior probability of a random match is equal to the probability that the defendant is innocent. When using it, a prosecutor questioning an expert witness may ask: "The odds of finding this evidence on an innocent man are so small that the jury can safely disregard the possibility that this defendant is innocent, correct?"[1] The claim assumes that the probability that evidence is found on an innocent man is the same as the probability that a man is innocent given that evidence was found on him, which is not true. Whilst the former is usually small (approximately 10% in the previous example) due to good forensic evidence procedures, the latter (99% in that example) does not directly relate to it and will often be much higher, since, in fact, it depends on the likely quite high prior odds of the defendant being a random innocent person.

Mathematically, the fallacy results from misunderstanding the concept of a conditional probability, which is defined as the probability that an event A occurs given that event B is known – or assumed – to have occurred, and it is written as [math]\displaystyle{ P(A|B) }[/math]. The error is based on assuming that [math]\displaystyle{ P(A|B) = P(B|A) }[/math], where A represents the event of finding evidence on the defendant, and B the event that the defendant is innocent. But this equality is not true: in fact, although [math]\displaystyle{ P(A|B) }[/math] is usually very small, [math]\displaystyle{ P(B|A) }[/math] may still be much higher.
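The town example above can be checked by counting. The following Python sketch assumes, as the example does, that exactly one perpetrator lives in a town of 1000, that 10% of residents (the perpetrator included) share the blood type, and that without other evidence the defendant is a uniformly random resident; the function name `blood_type_example` is hypothetical. It puts P(match | innocent) and P(innocent | match), the two quantities the fallacy conflates, side by side.

```python
from __future__ import annotations

from fractions import Fraction


def blood_type_example(town_size: int, match_rate: Fraction) -> dict[str, Fraction]:
    """Exact probabilities for the town example, assuming exactly one perpetrator
    lives in the town and the defendant is a uniformly random resident."""
    matching = town_size * match_rate   # residents with the blood type, perpetrator included
    innocents = town_size - 1           # everyone except the single perpetrator
    innocent_matches = matching - 1     # residents who match but are innocent
    return {
        "P(match | innocent)": innocent_matches / innocents,
        "P(innocent | match)": innocent_matches / matching,
        "P(guilty | match)": 1 / matching,
    }


for name, value in blood_type_example(1000, Fraction(1, 10)).items():
    print(f"{name} = {float(value):.3f}")
```

Here P(match | innocent) comes out near 0.099 while P(innocent | match) is 0.99: a small value for the first says nothing about the second until the prior is taken into account.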

Concept

The terms "prosecutor's fallacy" and "defense attorney's fallacy" were coined by William C. Thompson and Edward Schumann in 1987.[2][3] The fallacy can also arise from multiple testing, such as when evidence is compared against a large database: the larger the database, the more likely it is that a match will be found by chance alone. DNA evidence is therefore soundest when a match is found after a single directed comparison, because matches found by searching a large database, especially with a poor-quality test sample, are more likely to have arisen by chance.

The basic fallacy results from misunderstanding conditional probability and neglecting the prior odds of a defendant being guilty before that evidence was introduced. When a prosecutor has collected some evidence (for instance a DNA match) and has an expert testify that the probability of finding this evidence if the accused were innocent is tiny, the fallacy occurs if it is concluded that the probability of the accused being innocent must be comparably tiny. If the DNA match is used to confirm guilt that is already suspected on other grounds, then it is indeed strong evidence. However, if the DNA evidence is the sole evidence against the accused and the accused was picked out of a large database of DNA profiles, the chance that the match arose at random is higher, and the match is correspondingly less damaging to the defendant. The odds in this scenario relate not to the odds of being guilty but to the odds of being picked at random. While the odds of being picked at random may be low for a single condition implying guilt, such as a positive DNA match, the probability that someone is picked at random for at least one condition approaches 1 as more conditions are considered, as is the case in multiple testing. It is often the case that innocence and guilt (e.g., accidental death and murder) are both highly improbable, though naturally one must be true, so the ratio of the likelihood of the "innocent scenario" to the "guilty scenario" is much more informative than the probability of the "guilty scenario" alone.

Examples

Conditional probability

In the fallacy of argument from rarity, an explanation for an observed event is said to be unlikely because the prior probability of that explanation is low. Consider this case: a lottery winner is accused of cheating, based on the improbability of winning. At the trial, the prosecutor calculates the (very small) probability of winning the lottery without cheating and argues that this is the chance of innocence. The logical flaw is that the prosecutor has failed to account for the large number of people who play the lottery. While the probability of any single person winning is quite low, the probability that someone wins the lottery, given the number of people who play it, is very high.
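A short numerical sketch of the lottery case in Python. The figures are hypothetical and do not come from the text (odds of 1 in 14 million per ticket and 30 million tickets sold), and tickets are assumed to win independently. The chance that a particular honest ticket wins is tiny, yet the chance that some honest ticket wins is high.

```python
def prob_at_least_one(p_single: float, trials: int) -> float:
    """Probability of at least one success among independent trials,
    each succeeding with probability p_single."""
    return 1.0 - (1.0 - p_single) ** trials


# Hypothetical figures chosen only for illustration.
P_TICKET = 1 / 14_000_000   # chance that one honestly bought ticket wins
TICKETS = 30_000_000        # number of tickets in the draw

print(f"P(this honest ticket wins) = {P_TICKET:.1e}")
print(f"P(some honest ticket wins) = {prob_at_least_one(P_TICKET, TICKETS):.2f}")
```

Under these assumptions the second probability is close to 0.88, so a winner existing at all is unremarkable, and the rarity of one particular win is not evidence of cheating.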

In Berkson's paradox, conditional probability is mistaken for unconditional probability. This has led to several wrongful convictions of British mothers, accused of murdering two of their children in infancy, where the primary evidence against them was the statistical improbability of two children dying accidentally in the same household (under "Meadow's law"). Though multiple accidental (SIDS) deaths are rare, so are multiple murders; with only the facts of the deaths as evidence, it is the ratio of these (prior) improbabilities that gives the correct "posterior probability" of murder.[4]

Multiple testing

In another scenario, a crime-scene DNA sample is compared against a database of 20,000 men. A match is found and that man is accused; at his trial, it is testified that the probability that two DNA profiles match by chance is only 1 in 10,000. This does not mean that the probability that the suspect is innocent is 1 in 10,000. Since 20,000 men were tested, there were 20,000 opportunities to find a match by chance.

Even if none of the men in the database left the crime-scene DNA, a match by chance to an innocent is more likely than not. The chance of getting at least one match among the records is:

[math]\displaystyle{ 1 - \left(1-\frac{1}{10000}\right)^{20000} \approx 86\%\,, }[/math] where, explicitly:

- [math]\displaystyle{ 1/10000 }[/math] = probability of two DNA profiles matching by chance, after one check,

- [math]\displaystyle{ (1 - 1/10000) }[/math] = probability of not matching, after one check,

- [math]\displaystyle{ (1 - 1/10000)^{20000} }[/math] = probability of not matching, after 20,000 checks, and

- [math]\displaystyle{ 1 - (1 - 1/10000)^{20000} }[/math] = probability of matching, after 20,000 checks.
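The same figure can be reproduced in Python. This sketch assumes, as the calculation above does, that no record in the database is the true source and that the 20,000 comparisons are independent; the function name `chance_matches` is hypothetical. It also reports the expected number of chance matches, which is why a guilty man in the database would probably be matched alongside one or more innocent men.

```python
from __future__ import annotations

import math


def chance_matches(match_prob: float, database_size: int) -> tuple[float, float]:
    """Return (P(at least one chance match), expected number of chance matches)
    for a search in which no record is the true source and checks are independent."""
    p_no_match = (1.0 - match_prob) ** database_size   # every single check fails
    p_any_match = 1.0 - p_no_match                     # complement: at least one hit
    expected = match_prob * database_size              # mean number of chance hits
    return p_any_match, expected


p_any, expected = chance_matches(1 / 10_000, 20_000)
print(f"P(at least one chance match) = {p_any:.1%}")
print(f"Expected chance matches      = {expected:.1f}")
# For rare matches the count is roughly Poisson, so 1 - exp(-expected) is close to p_any.
print(f"Poisson approximation        = {1.0 - math.exp(-expected):.1%}")
```

The expected count of about 2 chance matches makes the point directly: a single hit from such a search is not surprising even if every man in the database is innocent.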

So, this evidence alone is an uncompelling data dredging result. If the culprit were in the database then he and one or more other men would probably be matched; in either case, it would be a fallacy to ignore the number of records searched when weighing the evidence. "Cold hits" like this on DNA databanks are now understood to require careful presentation as trial evidence.

Mathematical analysis

| Innocence / Evidence | Has evidence E | No evidence ~E | Total |
|---|---|---|---|
| Is innocent I | P(I\|E)·P(E) = P(E\|I)·P(I) | P(I\|~E)·P(~E) = P(~E\|I)·P(I) | P(I) |
| Not innocent ~I | P(~I\|E)·P(E) = P(E\|~I)·P(~I) | P(~I\|~E)·P(~E) = P(~E\|~I)·P(~I) | P(~I) = 1 − P(I) |
| Total | P(E) | P(~E) = 1 − P(E) | 1 |

Finding a person innocent or guilty can be viewed in mathematical terms as a form of binary classification. If E is the observed evidence, and I stands for "accused is innocent" then consider the conditional probabilities:

- [math]\displaystyle{ P(E|I) }[/math] is the probability that the "damning evidence" would be observed even when the accused is innocent (a "false positive").

- [math]\displaystyle{ P(I|E) }[/math] is the probability that the accused is innocent, despite the evidence E.

With forensic evidence, [math]\displaystyle{ P(E|I) }[/math] is tiny. The prosecutor wrongly concludes that [math]\displaystyle{ P(I|E) }[/math] is comparatively tiny. (The Lucia de Berk prosecution is accused of exactly this error,[5] for example.) In fact, [math]\displaystyle{ P(E|I) }[/math] and [math]\displaystyle{ P(I|E) }[/math] are quite different; Bayes' theorem states, [math]\displaystyle{ P(I|E) = P(E|I) \cdot \frac{P(I)}{P(E)}\,, }[/math] where:

- [math]\displaystyle{ P(I) }[/math] is the probability of innocence independent of the test result (i.e. from all other evidence); and

- [math]\displaystyle{ P(E) }[/math] is the prior probability that the evidence would be observed, regardless of innocence.

This equation shows that a small [math]\displaystyle{ P(E|I) }[/math] does not imply a small [math]\displaystyle{ P(I|E) }[/math] when [math]\displaystyle{ P(I) }[/math] is large and [math]\displaystyle{ P(E) }[/math] is small, that is, when the accused is otherwise likely to be innocent and it is unlikely for anyone (guilty or innocent) to exhibit the observed evidence.

Note that [math]\displaystyle{ P(E) = P(E|I) \cdot P(I) + P(E|\sim I) \cdot [1 - P(I)]\,. }[/math]
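Bayes' theorem and the expansion of P(E) above translate directly into a few lines of Python. The sketch below uses hypothetical numbers: a probability P(E|I) of 1 in 10,000, a test that never misses a guilty person (P(E|~I) = 1), and three illustrative priors P(I). The evidence is equally rare in every case, but P(I|E) ranges from negligible to roughly even, depending on P(I).

```python
def posterior_innocence(p_e_given_i: float, p_i: float, p_e_given_not_i: float = 1.0) -> float:
    """P(I|E) = P(E|I) * P(I) / P(E), where
    P(E) = P(E|I) * P(I) + P(E|~I) * (1 - P(I))."""
    p_e = p_e_given_i * p_i + p_e_given_not_i * (1.0 - p_i)
    return p_e_given_i * p_i / p_e


P_E_GIVEN_I = 1e-4  # hypothetical: 1-in-10,000 chance of the evidence if innocent
for prior in (0.5, 0.99, 0.9999):
    print(f"P(I) = {prior:<7} P(E|I) = {P_E_GIVEN_I:.4f}  P(I|E) = "
          f"{posterior_innocence(P_E_GIVEN_I, prior):.4f}")
```

With P(I) = 0.9999, for example a suspect drawn from a population of 10,000 with nothing else against him, P(I|E) is about 0.5, thousands of times larger than P(E|I).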

[math]\displaystyle{ P(E|{\sim}I) }[/math] is the probability that the evidence would identify a guilty suspect (that is, not give a false negative). This is usually close to 100%. In terms of odds, Bayes' theorem reads [math]\displaystyle{ \operatorname{Odds}(I|E) = \operatorname{Odds}(I)\cdot \frac{P(E|I)}{P(E|{\sim}I)}\,, }[/math] and since [math]\displaystyle{ P(E|{\sim}I) \le 1 }[/math], a test with false negatives can only raise the posterior odds of innocence. This gives the inequality:

[math]\displaystyle{ \operatorname{Odds}(I|E) \ge \operatorname{Odds}(I)\cdot P(E|I)\,. }[/math] The prosecutor is claiming a negligible chance of innocence, given the evidence; for small probabilities [math]\displaystyle{ \operatorname{Odds}(I|E) \approx P(I|E), }[/math] so with [math]\displaystyle{ P(E|{\sim}I) \approx 1 }[/math] this amounts to:

[math]\displaystyle{ P(I|E) \approx P(E|I)\cdot \operatorname{Odds}(I)\,. }[/math]

A prosecutor conflating [math]\displaystyle{ P(I|E) }[/math] with [math]\displaystyle{ P(E|I) }[/math] makes a technical error whenever [math]\displaystyle{ \operatorname{Odds}(I) \gg 1. }[/math] This may be a harmless error if [math]\displaystyle{ P(I|E) }[/math] is still negligible, but it is especially misleading otherwise (mistaking low statistical significance for high confidence).
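The approximation can be compared with the exact odds-form calculation. In this Python sketch, assuming P(E|~I) = 1 and using hypothetical inputs, the first case is one where conflating P(I|E) with P(E|I) is harmless (both are negligible). The second is one where it is badly misleading, which is also where the approximation itself stops being accurate because P(I|E) is no longer small.

```python
def odds(p: float) -> float:
    """Convert a probability to odds in favour: p / (1 - p)."""
    return p / (1.0 - p)


def innocence_given_evidence(p_e_given_i: float, p_i: float, p_e_given_not_i: float = 1.0):
    """Return (exact P(I|E), approximation P(E|I) * Odds(I)).

    The exact value uses the odds form of Bayes' theorem:
    Odds(I|E) = Odds(I) * P(E|I) / P(E|~I)."""
    post_odds = odds(p_i) * p_e_given_i / p_e_given_not_i
    exact = post_odds / (1.0 + post_odds)   # convert odds back to a probability
    approx = p_e_given_i * odds(p_i)        # valid when P(E|~I) ~ 1 and P(I|E) is small
    return exact, approx


# Hypothetical inputs: (P(E|I), P(I)).
for p_e_given_i, p_i in ((1e-6, 0.9), (1e-4, 0.9999)):
    exact, approx = innocence_given_evidence(p_e_given_i, p_i)
    print(f"P(E|I) = {p_e_given_i:.0e}, P(I) = {p_i}: "
          f"exact P(I|E) = {exact:.2e}, approximation = {approx:.2e}")
```

In the first case P(I|E) is about 9 in a million, so quoting 1 in a million understates it ninefold but changes nothing in practice; in the second, P(I|E) is about 0.5 while P(E|I) is 1 in 10,000.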

Legal impact

Though the prosecutor's fallacy typically happens by mistake,[6] in the adversarial system lawyers are usually free to present statistical evidence as best suits their case; retrials are more commonly the result of the prosecutor's fallacy in expert witness testimony or in the judge's summation.[7]

Defense attorney's fallacy

| Innocence / Test result | Match | No match | Total |
|---|---|---|---|
| Guilty | 1 | 0 | 1 |
| Innocent | 10 | 9 999 990 | 10 000 000 |
| Total | 11 | 9 999 990 | 10 000 001 |

Suppose there is a one-in-a-million chance of a match given that the accused is innocent. The prosecutor says this means there is only a one-in-a-million chance of innocence. But if everyone in a community of 10 million people is tested, one expects 10 matches even if all are innocent. The defense fallacy would be to reason that "10 matches were expected, so the accused is no more likely to be guilty than any of the other matches, thus the evidence suggests a 90% chance that the accused is innocent," and that "as such, this evidence is irrelevant." The first part of the reasoning would be correct only if there were no further evidence pointing to the defendant. On the second part, Thompson & Schumann wrote that the evidence should still be highly relevant because it "drastically narrows the group of people who are or could have been suspects, while failing to exclude the defendant" (page 171).[2][8]
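The counts in the table can be reproduced with a short Python sketch. It assumes, as the table does, one guilty person who always matches, 10 million innocent people who each match with probability one in a million, and no other evidence distinguishing the people who match; `match_breakdown` is a hypothetical name. Under exactly these assumptions the defense's figure is about right (10 of 11 matches are innocent, roughly 91%); the error lies in applying it to a defendant against whom there is other evidence.

```python
from fractions import Fraction


def match_breakdown(innocent_population: int, false_match_rate: Fraction, guilty: int = 1):
    """Expected matches when a whole population is tested, assuming every
    guilty person matches and nothing else distinguishes the people who match."""
    innocent_matches = innocent_population * false_match_rate
    total_matches = innocent_matches + guilty
    p_innocent_given_match = innocent_matches / total_matches
    return innocent_matches, total_matches, p_innocent_given_match


innocent, total, p_innocent = match_breakdown(10_000_000, Fraction(1, 1_000_000))
print(f"expected innocent matches: {innocent}, total matches: {total}")
print(f"P(innocent | match) = {p_innocent} ~ {float(p_innocent):.1%}")
```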

Another way of saying this would be to point out that the defense attorney's calculation failed to take into account the prior probability of the defendant's guilt. If, for example, the police came up with a list of 10 suspects, all of whom had access to the crime scene, then it would be very illogical indeed to suggest that a test that offers a one-in-a-million chance of a match would change the defendant's prior probability from 1 in 10 (10 percent) to 1 in a million (0.0001 percent). If nine innocent people were to be tested, the likelihood that the test would incorrectly match one (or more) of those people can be calculated as

[math]\displaystyle{ 1 - ((1 - 1/1000000)^9)\,, }[/math]

or approximately 0.0009%. Put the other way, starting from the prior probability of 10% (1 in 10 suspects), the defendant's match raises the probability of his guilt to about 99.9991% on the basis of the test; if the other 9 suspects were also tested and none of them matched, his guilt would be practically certain, assuming the guilty person is on the list and the test never misses a true match. The defendant might argue that "lists of suspects compiled by police fail to include the guilty person in 50% of cases". If that were true, the prior probability of his guilt would be only 5% (50% of 10%), but the match would still raise it to about 99.998%, because an innocent person matches with a probability of only one in a million. The incompleteness of the list therefore does not by itself create "reasonable doubt" once the positive test result is taken into account.
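The figures for the ten-suspect list can be checked in Python. This sketch assumes the guilty person is on the list (so the defendant's prior is 1 in 10), that a guilty person always matches, and a one-in-a-million false-match rate; `posterior_guilt` is a hypothetical helper. The defendant's posterior probability of innocence, about 9 in a million, is essentially the same number as the chance that at least one of nine innocent suspects would match falsely.

```python
P_FALSE_MATCH = 1e-6  # one-in-a-million chance that an innocent person matches


def posterior_guilt(prior_guilt: float, p_false_match: float) -> float:
    """P(guilty | match), assuming a guilty person always matches (P(match | guilty) = 1)."""
    post_odds = prior_guilt / (1.0 - prior_guilt) / p_false_match  # prior odds x likelihood ratio
    return post_odds / (1.0 + post_odds)


p_any_false_among_nine = 1.0 - (1.0 - P_FALSE_MATCH) ** 9
print(f"P(at least one of 9 innocents matches) = {p_any_false_among_nine:.4%}")
print(f"P(guilty | match), prior 1 in 10       = {posterior_guilt(0.1, P_FALSE_MATCH):.4%}")
```

The two printed values are 0.0009% and 99.9991%, matching the figures in the text.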

Possible examples of fallacious defense arguments

Authors have cited defense arguments in the O. J. Simpson murder trial as an example of this fallacy regarding the context in which the accused had been brought to court: crime scene blood matched Simpson's, with characteristics shared by 1 in 400 people. The defense argued that a football stadium could be filled with Los Angeles residents matching the sample and that the figure of 1 in 400 was therefore useless.[9][10]

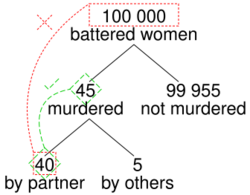

Also at the O. J. Simpson murder trial, the prosecution presented evidence that Simpson had been violent toward his wife, while the defense argued that there was only one woman murdered for every 2500 women who were subjected to spousal abuse, and that any history of Simpson being violent toward his wife was therefore irrelevant to the trial. However, the reasoning behind the defense's calculation was fallacious. According to author Gerd Gigerenzer, the correct probability must take the full context into account: Simpson's wife had not only been subjected to domestic violence (by Simpson) but had also been killed (by someone). Gigerenzer writes "the chances that a batterer actually murdered his partner, given that she has been killed, is about 8 in 9 or approximately 90%".[11] While most cases of spousal abuse do not end in murder, most cases of murder where there is a history of spousal abuse were committed by the spouse.
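Gigerenzer's point is easiest to see with natural frequencies. The Python sketch below imagines a hypothetical cohort of 100,000 abused women, treats the defense's 1-in-2,500 figure as murders by the abusive partner (an assumption), and takes 5 murders by someone else, the hypothetical count implied by an 8-in-9 ratio; none of these counts are data. Conditioning on the fact that the woman was killed changes the relevant denominator from all abused women to abused women who were killed.

```python
# Natural-frequency sketch; all counts are hypothetical, not data from the trial.
COHORT = 100_000                        # abused women in the imagined cohort
killed_by_partner = COHORT // 2_500     # defense's 1-in-2,500 figure -> 40 women
killed_by_someone_else = 5              # hypothetical count implied by an 8-in-9 ratio

# Defense's framing: chance that an abused woman is murdered by her partner.
p_murder_given_abuse = killed_by_partner / COHORT
# Relevant framing: chance the partner was the killer, given abuse AND murder.
p_partner_given_abuse_and_murder = killed_by_partner / (killed_by_partner + killed_by_someone_else)

print(f"P(murdered by partner | abused)            = {p_murder_given_abuse:.2%}")
print(f"P(partner is killer | abused and murdered) = {p_partner_given_abuse_and_murder:.1%}")
```

The first figure is 0.04%, the second about 88.9%: the same counts answer two different questions.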

The Sally Clark case

Sally Clark, a British woman, was accused in 1998 of having killed her first child at 11 weeks of age and then her second child at 8 weeks of age. The prosecution had expert witness Sir Roy Meadow, a professor and consultant paediatrician,[12] testify that the probability of two children in the same family dying from SIDS is about 1 in 73 million. That figure made double SIDS deaths far rarer than the rate measured in historical data: Meadow estimated it from data on single SIDS deaths, assuming that the probability of such deaths is uncorrelated between infants in the same family.[13]

Meadow acknowledged that 1 in 73 million is not an impossibility, but argued that such accidents would happen "once every hundred years" and that, in a country of 15 million 2-child families, it is vastly more likely that the double deaths are due to Münchausen syndrome by proxy than to such a rare accident. However, there is good reason to suppose that the likelihood of a death from SIDS in a family is significantly greater if a previous child has already died in these circumstances. A genetic predisposition to SIDS is likely to invalidate the assumed statistical independence,[14] making some families more susceptible to SIDS and the error an instance of the ecological fallacy.[15] The likelihood of two SIDS deaths in the same family cannot be soundly estimated by squaring the likelihood of a single such death in all otherwise similar families.[16]
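The effect of the independence assumption can be sketched in Python. Squaring the rounded single-death probability of 1 in 8,500 quoted in the references gives about 1 in 72 million, close to the 1-in-73-million figure. The recurrence risk of 1 in 100 used for comparison is purely hypothetical and only shows how sensitive the result is to dependence between the two deaths.

```python
P_SINGLE = 1 / 8_500              # single-SIDS probability for a low-risk family (rounded figure)
P_SECOND_GIVEN_FIRST = 1 / 100    # hypothetical recurrence risk, for illustration only

p_double_independent = P_SINGLE ** 2                   # squaring: assumes independence
p_double_dependent = P_SINGLE * P_SECOND_GIVEN_FIRST   # chain rule: P(first) * P(second | first)

print(f"Assuming independence: 1 in {1 / p_double_independent:,.0f}")
print(f"With dependence:       1 in {1 / p_double_dependent:,.0f}")
```

Under this hypothetical dependence the double-death figure rises from about 1 in 72 million to 1 in 850,000, which is why the squaring step matters so much.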

The 1-in-73-million figure greatly underestimated the chance of two successive accidents, but, even if that assessment were accurate, the court seems to have missed the fact that the figure meant nothing on its own. As an a priori probability, it should have been weighed against the a priori probabilities of the alternatives. Given that two deaths had occurred, one of the following explanations must be true, and all of them are a priori extremely improbable:

- Two successive deaths in the same family, both by SIDS

- Double homicide (the prosecution's case)

- Other possibilities (including one homicide and one case of SIDS)

It is unclear whether an estimate of the probability for the second possibility was ever proposed during the trial, or whether the comparison of the first two probabilities was understood to be the key estimate to make in the statistical analysis assessing the prosecution's case against the case for innocence.

Clark was convicted in 1999, resulting in a press release by the Royal Statistical Society which pointed out the mistakes.[17]

In 2002, Ray Hill, a mathematics professor at Salford, attempted to compare accurately the chances of the first two explanations; he concluded that successive accidents are between 4.5 and 9 times more likely than successive murders, so that the a priori odds of Clark's guilt were between 4.5 to 1 and 9 to 1 against.[18]
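Odds of x to 1 against an event correspond to a probability of 1/(x + 1). A Python sketch applying this to Hill's range shows that, on his figures alone and before any other evidence, the a priori probability of Clark's guilt was roughly between 10% and 18%.

```python
def probability_from_odds_against(x: float) -> float:
    """Odds of x to 1 against an event correspond to a probability of 1 / (x + 1)."""
    return 1.0 / (x + 1.0)


for odds_against in (4.5, 9.0):  # Hill's range for the a priori odds against guilt
    p = probability_from_odds_against(odds_against)
    print(f"{odds_against} to 1 against -> P(guilt) = {p:.1%}")
```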

After it emerged that the forensic pathologist who had examined both babies had withheld exculpatory evidence, the Court of Appeal quashed Clark's conviction on 29 January 2003.[19]

See also

- Base rate fallacy – Error in thinking which involves under-valuing base rate information

- Confusion of the inverse

- Data dredging – Misuse of data analysis

- Ethics in mathematics – Emerging field of applied ethics

- False positive paradox

- Jurimetrics

- Likelihood function – Function related to statistics and probability theory

- Simpson's paradox – Error in statistical reasoning with groups

References

- ↑ Fenton, Norman; Neil, Martin; Berger, Daniel (June 2016). "Bayes and the Law". Annual Review of Statistics and Its Application 3 (1): 51–77. doi:10.1146/annurev-statistics-041715-033428. PMID 27398389. Bibcode: 2016AnRSA...3...51F.

- ↑ Thompson, W.C.; Schumann, E.L. (1987). "Interpretation of Statistical Evidence in Criminal Trials: The Prosecutor's Fallacy and the Defense Attorney's Fallacy". Law and Human Behavior 11 (3): 167. doi:10.1007/BF01044641.

- ↑ Fountain, John; Gunby, Philip (February 2010). "Ambiguity, the Certainty Illusion, and Gigerenzer's Natural Frequency Approach to Reasoning with Inverse Probabilities". University of Canterbury. p. 6. http://uctv.canterbury.ac.nz/viewfile.php/4/sharing/55/74/74/NZEPVersionofImpreciseProbabilitiespaperVersi.pdf.

- ↑ Goldacre, Ben (2006-10-28). "Prosecuting and defending by numbers". The Guardian. https://www.theguardian.com/science/2006/oct/28/uknews1. "rarity is irrelevant, because double murder is rare too. An entire court process failed to spot the nuance of how the figure should be used. Twice."

- ↑ Meester, R.; Collins, M.; Gill, R.; van Lambalgen, M. (2007-05-05). "On the (ab)use of statistics in the legal case against the nurse Lucia de B". Law, Probability & Risk 5 (3–4): 233–250. doi:10.1093/lpr/mgm003. "[page 11] Writing E for the observed event, and H for the hypothesis of chance, Elffers calculated P(E | H) < 0.0342%, while the court seems to have concluded that P(H | E) < 0.0342%".

- ↑ Rossmo, D.K. (October 2009). "Failures in Criminal Investigation: Errors of Thinking". The Police Chief LXXVI (10). http://policechiefmagazine.org/magazine/index.cfm?fuseaction=display_arch&article_id=1922&issue_id=102009. Retrieved 2010-05-21. "The prosecutor's fallacy is more insidious because it typically happens by mistake."

- ↑ "DNA Identification in the Criminal Justice System". Australian Institute of Criminology. 2002-05-01. http://www.denverda.org/DNA_Documents/DNA%20in%20Australia.pdf.

- ↑ N. Scurich (2010). "Interpretative Arguments of Forensic Match Evidence: An Evidentiary Analysis". The Dartmouth Law Journal 8 (2): 31–47. "The idea is that each piece of evidence need not conclusively establish a proposition, but that all the evidence can be used as a mosaic to establish the proposition".

- ↑ Robertson, B., & Vignaux, G. A. (1995). Interpreting evidence: Evaluating forensic evidence in the courtroom. Chichester: John Wiley and Sons.

- ↑ Rossmo, D. Kim (2009). Criminal Investigative Failures. CRC Press Taylor & Francis Group.

- ↑ Gigerenzer, G., Reckoning with Risk: Learning to Live with Uncertainty, Penguin, (2003)

- ↑ "Resolution adopted by the Senate (21 October 1998) on the retirement of Professor Sir Roy Meadow". Reporter (University of Leeds) (428). 30 November 1998. http://reporter.leeds.ac.uk/428/mead.htm. Retrieved 2015-10-17.

- ↑ The population-wide probability of a SIDS fatality was about 1 in 1,303; Meadow generated his 1-in-73 million estimate from the lesser probability of SIDS death in the Clark household, which had lower risk factors (e.g. non-smoking). In this sub-population he estimated the probability of a single death at 1 in 8,500. See: Joyce, H. (September 2002). "Beyond reasonable doubt" (pdf). plus.maths.org. http://plus.maths.org/issue21/features/clark/. Professor Ray Hill questioned even this first step (1/8,500 vs 1/1,300) in two ways: firstly, on the grounds that it was biased, excluding those factors that increased risk (especially that both children were boys) and (more importantly) because reductions in SIDS risk factors will proportionately reduce murder risk factors, so that the relative frequencies of Münchausen syndrome by proxy and SIDS will remain in the same ratio as in the general population: Hill, Ray (2002). "Cot Death or Murder? – Weighing the Probabilities". http://www.mrbartonmaths.com/resources/a%20level/s1/Beyond%20reasonable%20doubt.doc. "it is patently unfair to use the characteristics which basically make her a good, clean-living, mother as factors which count against her. Yes, we can agree that such factors make a natural death less likely – but those same characteristics also make murder less likely."

- ↑ Sweeney, John; Law, Bill (July 15, 2001). "Gene find casts doubt on double 'cot death' murders". The Observer. http://observer.guardian.co.uk/print/0,,4221973-102285,00.html.

- ↑ Vincent Scheurer. "Convicted on Statistics?". http://understandinguncertainty.org/node/545#notes.

- ↑ Hill, R. (2004). "Multiple sudden infant deaths – coincidence or beyond coincidence?". Paediatric and Perinatal Epidemiology 18 (5): 321. doi:10.1111/j.1365-3016.2004.00560.x. PMID 15367318. http://www.cse.salford.ac.uk/staff/RHill/ppe_5601.pdf. Retrieved 2010-06-13.

- ↑ "Royal Statistical Society concerned by issues raised in Sally Clark case". 23 October 2001. http://www.rss.org.uk/uploadedfiles/documentlibrary/744.pdf. "Society does not tolerate doctors making serious clinical errors because it is widely understood that such errors could mean the difference between life and death. The case of R v. Sally Clark is one example of a medical expert witness making a serious statistical error, one which may have had a profound effect on the outcome of the case"

- ↑ The uncertainty in this range is mainly driven by uncertainty in the likelihood of killing a second child, having killed a first, see: Hill, R. (2004). "Multiple sudden infant deaths – coincidence or beyond coincidence?". Paediatric and Perinatal Epidemiology 18 (5): 322–323. doi:10.1111/j.1365-3016.2004.00560.x. PMID 15367318. http://www.cse.salford.ac.uk/staff/RHill/ppe_5601.pdf. Retrieved 2010-06-13.

- ↑ "R v Clark [2003] EWCA Crim 1020 (11 April 2003)". http://www.bailii.org/ew/cases/EWCA/Crim/2003/1020.html.

External links

- Discussion of the prosecutor's fallacy

- Forensic mathematics of DNA matching

- A British statistician on the fallacy