Multidimensional filter design and implementation

Many concepts in one–dimensional signal processing are similar to concepts in multidimensional signal processing. However, many familiar one–dimensional procedures do not readily generalize to the multidimensional case and some important issues associated with multidimensional signals and systems do not appear in the one–dimensional special case.

Motivation and applications

Most signals encountered in practice, such as images, video and sound fields, vary over more than one dimension. A multidimensional (M-D) signal can be modeled as a function of $M$ independent variables, where $M \ge 2$. Some concepts of multidimensional signal processing differ from their one-dimensional counterparts. For example, the computational complexity is higher because more dimensions are involved, and the assumption of causality that is natural for time signals generally does not carry over to the multidimensional case.

Multidimensional signals may be categorized as continuous, discrete, or mixed. A continuous signal can be modeled as a function of independent variables that range over a continuum of values, for example an audio wave traveling in space. A continuous signal in the multidimensional case can be represented in the time domain as $x(t_1, t_2, \ldots, t_n)$. The number of arguments within the parentheses indicates the number of dimensions of the signal; the signal in this case has $n$ dimensions. A discrete signal, on the other hand, can be modeled as a function defined only on a set of points, such as the set of integers. A discrete signal of $M$ dimensions can be represented in the spatial domain as $x(n_1, n_2, \ldots, n_M)$. A mixed signal is a multidimensional signal that is modeled as a function of some continuous variables and some discrete ones. For example, an ensemble of time waveforms recorded from an array of electrical transducers is a mixed signal: the ensemble can be modeled with one continuous variable, time, and one or more discrete variables that index the transducers.

Filtering is applied to signals whenever certain frequencies are to be removed, for instance to suppress interfering signals and reduce background noise. A mixed filter differs from the traditional finite impulse response (FIR) and infinite impulse response (IIR) filters; all three kinds are explained here in detail. The M-D discrete Fourier transform (DFT) method and the M-D linear difference equation (LDE) method can be combined to filter M-D signals. This results in the so-called combined DFT/LDE filtering technique, in which the DFT is performed over some of the dimensions and LDE filtering is then performed over the remaining dimensions. Such filtering of M-D signals is referred to as mixed-domain (MixeD) filtering, and the filters that perform it are referred to as MixeD multidimensional filters.[1]

Combining discrete transforms with linear difference equations to implement multidimensional filters is computationally efficient, straightforward to design, and has low memory requirements for spatio-temporal applications such as video processing. In addition, the linear difference equations of MixeD filters are of lower dimensionality than those of ordinary multidimensional filters, which simplifies the design and makes stability easier to achieve.[2]

Multidimensional digital filters find applications in many fields, such as image processing, video processing, seismic tomography, magnetic data processing, computed tomography (CT), radar and sonar.[3] The 1-D and M-D digital filter design problems differ in an important way. In the 1-D case, filter design and filter implementation are distinct and decoupled: the filter can first be designed, and a particular network structure can then be determined through appropriate manipulation of the transfer function. In the M-D case, multidimensional polynomials cannot be factored in general. This means that an arbitrary transfer function generally cannot be manipulated into the form required by a particular implementation, which makes the design and implementation of M-D filters more complex than that of 1-D filters.

Problem statement and basic concepts[4]

Multidimensional filters, like their one-dimensional counterparts, can be categorized as

- Finite impulse response filters

- Infinite impulse response filters

- Mixed filters

To understand these concepts, it is necessary to understand what an impulse response is. The impulse response is the response of a system when its input is a unit impulse function. An impulse response in the spatial domain can be represented as $h(n_1, n_2, \ldots, n_M)$.

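A finite impulse response (FIR), or non-recursive, filter has an impulse response with a finite number of non-zero samples. Its impulse response is therefore always absolutely summable, so FIR filters are always stable. Let $x(n_1, \ldots, n_M)$ be the multidimensional input signal and $y(n_1, \ldots, n_M)$ the multidimensional output signal. For an $M$-dimensional spatial domain, the output can be represented as

$$y(n_1, \ldots, n_M) = \sum_{l_1} \cdots \sum_{l_M} h(l_1, \ldots, l_M)\, x(n_1 - l_1, \ldots, n_M - l_M),$$

where the sums run over the finite support of $h$.

The above difference equation can be represented in the Z-domain as follows

$$Y(z_1, \ldots, z_M) = \left[\sum_{l_1} \cdots \sum_{l_M} h(l_1, \ldots, l_M)\, z_1^{-l_1} \cdots z_M^{-l_M}\right] X(z_1, \ldots, z_M),$$

where $X(z_1, \ldots, z_M)$ and $Y(z_1, \ldots, z_M)$ are the Z-transforms of $x(n_1, \ldots, n_M)$ and $y(n_1, \ldots, n_M)$ respectively.

The transfer function is given by

$$H(z_1, \ldots, z_M) = \frac{Y(z_1, \ldots, z_M)}{X(z_1, \ldots, z_M)} = \sum_{l_1} \cdots \sum_{l_M} h(l_1, \ldots, l_M)\, z_1^{-l_1} \cdots z_M^{-l_M}.$$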

In the case of FIR filters the transfer function consists of only numerator terms as the denominator is unity due to the absence of feedback.

An infinite impulse response (IIR), or recursive, filter (recursive because of feedback) has an impulse response of infinite extent. Its input and output satisfy a multidimensional difference equation of finite order. IIR filters may or may not be stable, and in many cases they are less complex to realize than FIR filters. The promise of IIR filters is a potential reduction in computation compared to FIR filters performing comparable filtering operations: by feeding back output samples, we can use a filter with fewer coefficients (hence fewer computations) to implement a desired operation. On the other hand, IIR filters pose some potentially significant implementation and stabilization problems not encountered with FIR filters. The design of an M-D recursive filter is quite different from the design of a 1-D filter because of the increased difficulty of assuring stability. For an $M$-dimensional domain, the output can be represented as

$$y(\mathbf{n}) = \sum_{\mathbf{l}} a(\mathbf{l})\, x(\mathbf{n} - \mathbf{l}) - \sum_{\mathbf{k} \neq \mathbf{0}} b(\mathbf{k})\, y(\mathbf{n} - \mathbf{k}),$$

where $\mathbf{n} = (n_1, \ldots, n_M)$, $a$ and $b$ are finite-extent coefficient arrays, and $b(\mathbf{0}) = 1$.

The transfer function in this case will have both numerator and denominator terms due to the presence of feedback.

Although multidimensional difference equations represent a generalization of 1-D difference equations, they are considerably more complex and quite different. A number of important issues associated with multidimensional difference equations, such as the direction of recursion and the ordering relation, do not arise in the 1-D case. Other issues, such as stability, are present in the 1-D case but are far more difficult to understand for multidimensional systems.

Multidimensional (M-D) filtering may also be achieved by carrying out a P-dimensional discrete Fourier transform (DFT) over P of the dimensions, where P < M, and spatio-temporal (M − P)-dimensional linear difference equation (LDE) filtering over the remaining dimensions. This is referred to as the combined DFT/LDE method, and such an implementation is referred to as a mixed filter implementation. Instead of the DFT, other transforms such as the discrete cosine transform (DCT) and the discrete Hartley transform (DHT) can be used, depending on the application. The DCT is often preferred for the transform coding of images because of its superior energy-compaction property, while the DHT is useful when a real-valued sequence is to be mapped onto a real-valued spectrum.[2]

In general, the M-D linear difference equation (LDE) filter method convolves a real M-D input sequence x(n(M)) with an M-D unit impulse response sequence h(n(M)) to obtain a desired M-D output sequence y(n(M)):

y(n(M)) = x(n(M)) ∗ h(n(M)),

where ∗ refers to the M-D convolution operator and n(M) = (n1, n2, ..., nM) refers to the M-D signal-domain index vector.

If a sequence x(n(M)) is zero for some i and for all n_i > L_i, where L_i is finite, that sequence is said to be duration bounded in the ith dimension. A multiplicative operator R(n(M)) may be used to obtain from x(n(M)) an M-D sequence that is duration bounded. An M-D sequence

x̂(n(M)) = x(n(M)) R(n(M))

is duration bounded in the first P dimensions if R(n(M)) = 1 for 0 ≤ n_i ≤ L_i, i = 1, 2, ..., P, where P ≤ M, and R(n(M)) = 0 otherwise.

The MixeD filter method requires that the M-D input sequence be duration bounded in P of the M dimensions.

The index n(M) is then ordered so that the first P indices, n1, ..., nP, correspond to the duration-bounded dimensions. The MixeD filtering method involves a P-dimensional (P-D) discrete forward transform operation F(k(P))[.] on the duration-bounded input x̂(n(M)) over the first P variables n1, ..., nP, which we can write as

X(k(P),n(M-P)) = F(k(P))[x̂(n(M))].

Examples of F(k(P))[.] are the P-dimensional DFT, DCT and DHT. According to the MixeD filter method, each complex sequence X(k(P),n(M-P)) is filtered by an (M − P)-dimensional LDE. The LDE filters have impulse responses h(k(P),n(M-P)). Finally, the complex output sequences of the LDE filters, Y(k(P),n(M-P)), are inverse transformed using the operator F−1(k(P))[.] to give the final M-D filtered output signal, ŷ(n(M)).

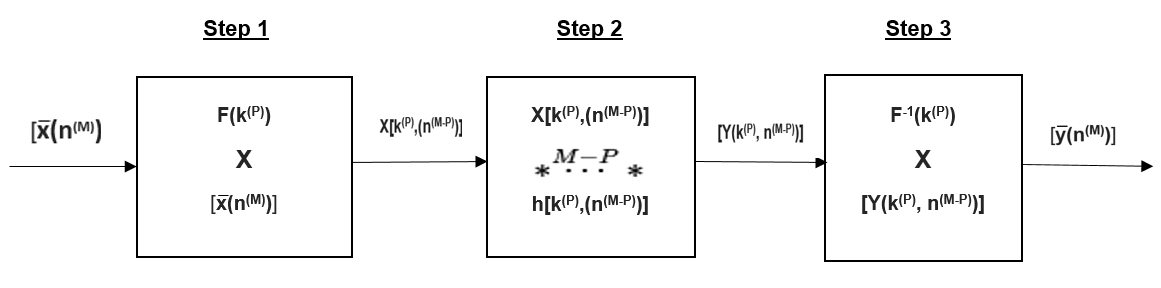

The three step process can be summarized as follows,[1]

Step 1. X(k(P),n(M-P)) = F(k(P))[x̂(n(M))]

Step 2. Y(k(P),n(M-P)) = X(k(P),n(M-P)) ∗ h(k(P),n(M-P)), where ∗ is the (M − P)-dimensional convolution over n(M-P)

Step 3. ŷ(n(M)) = F−1(k(P))[Y(k(P),n(M-P))]

The crucial step in the MixeD filter design is the 2nd step. This is because filtering takes place in this step. Since there is no filtering involved in Steps 1 and 3, there is no need to weigh the transform coefficients.
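The three steps can be illustrated with a minimal NumPy sketch for M = 3 and P = 2 (two spatial dimensions plus time). Everything specific here is an assumption made for illustration and is not taken from the cited references: the function name `mixed_filter`, the choice of the DFT as the transform, and the use of a first-order recursive LDE with one coefficient `pole[k1, k2]` per transform bin.

```python
import numpy as np

def mixed_filter(x, pole):
    """Hypothetical MixeD filter sketch for M = 3, P = 2.

    x    : real array of shape (N1, N2, T), duration bounded on axes 0 and 1
    pole : array of shape (N1, N2); one first-order LDE coefficient per
           transform bin (|pole| < 1 keeps each recursion stable)
    """
    # Step 1: forward P-D transform over the duration-bounded dimensions.
    X = np.fft.fft2(x, axes=(0, 1))

    # Step 2: one (M - P) = 1-D LDE per bin k = (k1, k2):
    #   Y[k, n] = X[k, n] + pole[k] * Y[k, n - 1]
    Y = np.zeros_like(X)
    prev = np.zeros(X.shape[:2], dtype=complex)
    for n in range(X.shape[2]):
        prev = X[:, :, n] + pole * prev
        Y[:, :, n] = prev

    # Step 3: inverse P-D transform back to the signal domain.
    return np.fft.ifft2(Y, axes=(0, 1))
```

The result is complex in general; it is real (up to rounding) only when the per-bin LDEs respect the conjugate symmetry of the DFT of a real input, for example when `pole` is the same real constant in every bin.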

The block diagram which shows the MixeD filter method can be seen below.

FIR filter implementation

Direct convolution

The output of any linear shift-invariant (LSI) filter can be determined from its input by means of the convolution sum. For an FIR filter there are a finite number of non-zero samples, so the limits of summation are finite. The convolution sum serves as an algorithm that enables us to compute the successive output samples of the filter. As an example, let us assume that the filter has support over the region $\{(n_1, n_2, \ldots, n_M): 0 \le n_1 < N_1,\ 0 \le n_2 < N_2, \ldots,\ 0 \le n_M < N_M\}$; the output samples can then be computed using[4]

$$y(n_1, \ldots, n_M) = \sum_{k_1=0}^{N_1-1} \cdots \sum_{k_M=0}^{N_M-1} h(k_1, \ldots, k_M)\, x(n_1 - k_1, \ldots, n_M - k_M).$$

If all input samples are available, the output samples can be computed in any order, or simultaneously. If only selected samples of the output are desired, only those samples need to be computed. The number of multiplications and additions for one desired output sample is $N_1 N_2 \cdots N_M$ and $N_1 N_2 \cdots N_M - 1$ respectively.
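As an illustration of the convolution sum in the 2-D case, the following NumPy sketch computes either the full output or a single selected output sample. The function names are hypothetical, and the input is assumed to be zero outside its stored array.

```python
import numpy as np

def fir_direct_2d(x, h):
    """Full linear convolution y = x * h by direct evaluation."""
    n1, n2 = x.shape
    m1, m2 = h.shape
    y = np.zeros((n1 + m1 - 1, n2 + m2 - 1))
    for k1 in range(m1):
        for k2 in range(m2):
            # Each filter tap adds a shifted, scaled copy of the input.
            y[k1:k1 + n1, k2:k2 + n2] += h[k1, k2] * x
    return y

def fir_sample(x, h, n1, n2):
    """One output sample y(n1, n2); at most h.size multiplications."""
    acc = 0.0
    for k1 in range(h.shape[0]):
        for k2 in range(h.shape[1]):
            i1, i2 = n1 - k1, n2 - k2
            # Input samples outside the stored array are taken as zero.
            if 0 <= i1 < x.shape[0] and 0 <= i2 < x.shape[1]:
                acc += h[k1, k2] * x[i1, i2]
    return acc
```

`fir_sample` mirrors the remark that only the desired output samples need to be computed, while `fir_direct_2d` evaluates every output sample.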

For the 2-D case, the computation of $y(n_1, n_2)$ depends on input samples from the $(N_1 - 1)$ previous columns of the input and the $(N_2 - 1)$ previous rows. If the input samples arrive row by row, we need sufficient storage for $N_2$ rows of the input sequence. If the input is available column by column instead, we need to store $N_1$ columns of the input. A zero-phase filter with a real impulse response satisfies $h(n_1, n_2) = h(-n_1, -n_2)$, which means that each sample can be paired with another of identical value. In this case we can use the distributive law to interchange some of the multiplications and additions and so reduce the number of multiplications needed to implement the filter, although that number remains proportional to the filter order. Specifically, if the region of support for the filter is assumed to be rectangular and centered at the origin, $-N_1 \le n_1 \le N_1$, $-N_2 \le n_2 \le N_2$ (so that $N_1$ and $N_2$ here denote half-widths), we have

$$y(n_1, n_2) = \sum_{k_1=-N_1}^{N_1} \sum_{k_2=1}^{N_2} h(k_1, k_2)\left[x(n_1 - k_1, n_2 - k_2) + x(n_1 + k_1, n_2 + k_2)\right] + \sum_{k_1=1}^{N_1} h(k_1, 0)\left[x(n_1 - k_1, n_2) + x(n_1 + k_1, n_2)\right] + h(0, 0)\, x(n_1, n_2).$$

Using the above equation to implement an FIR filter requires roughly one-half the number of multiplications of a direct implementation of the convolution sum, although both implementations require the same number of additions and the same amount of storage. If the impulse response of an FIR filter possesses other symmetries, they can be exploited in a similar fashion to reduce the number of required multiplications further.

Discrete Fourier transform implementations of FIR filters

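An FIR filter can also be implemented by means of the discrete Fourier transform (DFT). This can be particularly appealing for high-order filters because the various fast Fourier transform (FFT) algorithms permit efficient evaluation of the DFT. The general form of the DFT for multidimensional signals is

$$X(\mathbf{k}) = \sum_{\mathbf{n} \in I_N} x(\mathbf{n})\, \exp\left[-j\, \mathbf{k}^T (2\pi \mathbf{N}^{-1})\, \mathbf{n}\right], \quad \mathbf{k} \in J_N,$$

where $\mathbf{N}$ is the periodicity matrix, $x(\mathbf{n})$ is the multidimensional signal in the space domain, $X(\mathbf{k})$ is the DFT of $x(\mathbf{n})$ in the frequency domain, $I_N$ is a region containing $|\det \mathbf{N}|$ samples in the $\mathbf{n}$ domain, and $J_N$ is a region containing $|\det \mathbf{N}^T|$ ($= |\det \mathbf{N}|$) frequency samples.[4]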

Let $y(n_1, \ldots, n_M)$ be the linear convolution of a finite-extent sequence $x(n_1, \ldots, n_M)$ with the impulse response $h(n_1, \ldots, n_M)$ of an FIR filter:

$$y(n_1, \ldots, n_M) = x(n_1, \ldots, n_M) \ast h(n_1, \ldots, n_M).$$

On computing the Fourier transform of both sides of this expression, we get

$$Y(\omega_1, \ldots, \omega_M) = X(\omega_1, \ldots, \omega_M)\, H(\omega_1, \ldots, \omega_M).$$

There are many possible definitions of the M-D discrete Fourier transform, and all of them correspond to sets of samples of the M-D Fourier transform; these DFTs can be used to perform convolutions as long as their assumed region of support contains the support of $y$. Let us assume that $Y$ is sampled on an $N_1 \times N_2 \times \cdots \times N_M$ rectangular lattice of samples, and let

$$Y(k_1, \ldots, k_M) = Y(\omega_1, \ldots, \omega_M)\Big|_{\omega_i = 2\pi k_i / N_i}.$$

Therefore, $Y(k_1, \ldots, k_M) = X(k_1, \ldots, k_M)\, H(k_1, \ldots, k_M)$.

Computing $(N_1 \times N_2 \times \cdots \times N_M)$-point DFTs of $h$ and $x$ requires that both sequences have their regions of support extended with samples of value zero. If $\hat{y}$ results from the inverse DFT of the product $X(k_1, \ldots, k_M)\, H(k_1, \ldots, k_M)$, then $\hat{y}$ will be the circular convolution of $h$ and $x$. If $N_1, N_2, \ldots, N_M$ are chosen to be at least equal to the size of $y$, then $\hat{y} = y$. This implementation technique is efficient with respect to computation, but it is prodigal with respect to storage, since it requires sufficient storage to contain all $N_1 \times N_2 \times \cdots \times N_M$ points of the signal $x$. In addition, we must store the filter response coefficients $H(k_1, \ldots, k_M)$. With direct convolution, the number of rows of the input that need to be stored depends on the order of the filter; with the DFT, the whole input must be stored regardless of the filter order.
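The zero-padding argument can be sketched in NumPy for the 2-D case. The function name is hypothetical, and real-input FFTs (`rfft2`/`irfft2`) are used on the assumption that both the signal and the impulse response are real.

```python
import numpy as np

def fir_fft_2d(x, h):
    """Linear convolution of x and h through zero-padded 2-D DFTs."""
    # DFT size at least the support of y = x * h, so that the circular
    # convolution equals the linear convolution.
    n1 = x.shape[0] + h.shape[0] - 1
    n2 = x.shape[1] + h.shape[1] - 1
    X = np.fft.rfft2(x, s=(n1, n2))   # s zero-pads to the DFT size
    H = np.fft.rfft2(h, s=(n1, n2))   # could be pre-computed and stored
    return np.fft.irfft2(X * H, s=(n1, n2))
```

Choosing a DFT size smaller than `(n1, n2)` would make the result a circular convolution in which some output samples are corrupted by spatial aliasing.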

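For the 2-D case, and assuming that $H(k_1, k_2)$ is pre-computed, the number of real multiplications needed to compute $y(n_1, n_2)$ consists of a term proportional to $N_1 N_2 \log_2 (N_1 N_2)$ for the forward and inverse FFTs plus a term proportional to $N_1 N_2$ for the spectral product, when $N_1$ and $N_2$ are powers of 2.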

Block convolution

The arithmetic complexity of the DFT implementation of an FIR filter is effectively independent of the order of the filter, while the complexity of a direct convolution implementation is proportional to the filter order. Direct convolution is therefore more efficient for low filter orders, and the DFT implementation eventually becomes more efficient as the filter order increases.[4]

The problem with the DFT implementation is that it requires a large amount of storage. Block convolution methods offer a compromise. With these approaches the convolutions are performed on sections, or blocks, of data using DFT methods. Limiting the size of the blocks limits the amount of storage required, and using transform methods maintains the efficiency of the procedure.

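The simplest block convolution method is called the overlap-add technique. We begin by partitioning the 2-D array $x(n_1, n_2)$ into $(N_1 \times N_2)$-point sections, where the section indexed by the pair $(k_1, k_2)$ is defined as

$$x_{k_1 k_2}(n_1, n_2) = \begin{cases} x(n_1, n_2), & k_1 N_1 \le n_1 < (k_1 + 1) N_1,\ k_2 N_2 \le n_2 < (k_2 + 1) N_2 \\ 0, & \text{otherwise.} \end{cases}$$

The regions of support of the different sections do not overlap, and collectively they cover the entire region of support of the array $x(n_1, n_2)$. Thus,

$$x(n_1, n_2) = \sum_{k_1} \sum_{k_2} x_{k_1 k_2}(n_1, n_2).$$

Because discrete convolution distributes over addition, $y(n_1, n_2)$ can be written as

$$y(n_1, n_2) = x(n_1, n_2) \ast h(n_1, n_2) = \sum_{k_1} \sum_{k_2} \left[x_{k_1 k_2}(n_1, n_2) \ast h(n_1, n_2)\right] = \sum_{k_1} \sum_{k_2} y_{k_1 k_2}(n_1, n_2).$$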

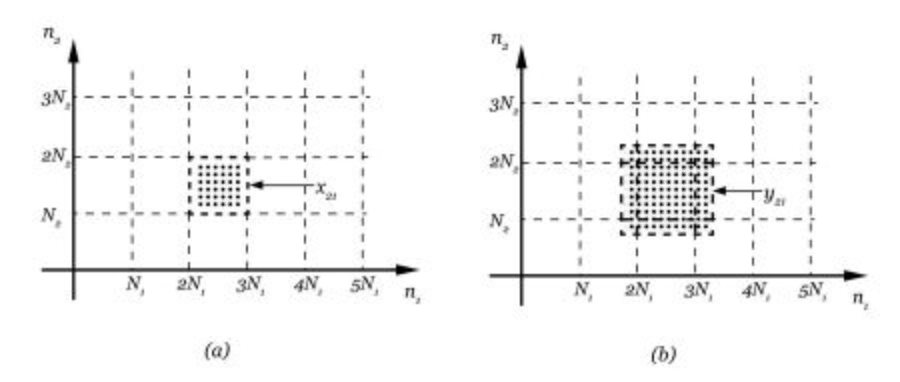

Figure (a) shows the section $x_{k_1 k_2}$ of the input array $x$. Figure (b) shows the region of support of the convolution of that section with $h$, that is, $y_{k_1 k_2}$.

The block output $y_{k_1 k_2}$ is the convolution of $h$ with the block $x_{k_1 k_2}$ of $x$. The results of the block convolutions must be added together to produce the complete filter output $y$. As the support of $y_{k_1 k_2}$ is greater than the support of $x_{k_1 k_2}$, the output blocks will of necessity overlap, but the degree of that overlap is limited.

The convolutions of $x_{k_1 k_2}$ and $h$ can be evaluated by means of discrete Fourier transforms, provided that the size of the transform is large enough to support $y_{k_1 k_2}$. By controlling the block size we can limit the size of the DFTs, which reduces the required storage.
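A minimal NumPy sketch of 2-D overlap-add, under the assumptions that the signal and impulse response are real and that the block size is a free parameter; the function name and default block size are illustrative only.

```python
import numpy as np

def overlap_add_2d(x, h, block=(8, 8)):
    """Overlap-add FIR filtering: y = x * h built from block convolutions."""
    l1, l2 = block
    m1, m2 = h.shape
    f1, f2 = l1 + m1 - 1, l2 + m2 - 1          # DFT size supports one output block
    H = np.fft.rfft2(h, s=(f1, f2))            # computed once, reused per block
    y = np.zeros((x.shape[0] + m1 - 1, x.shape[1] + m2 - 1))
    for i in range(0, x.shape[0], l1):
        for j in range(0, x.shape[1], l2):
            xb = x[i:i + l1, j:j + l2]         # non-overlapping input section
            yb = np.fft.irfft2(np.fft.rfft2(xb, s=(f1, f2)) * H, s=(f1, f2))
            r1 = xb.shape[0] + m1 - 1          # support of this output block
            r2 = xb.shape[1] + m2 - 1
            # Output blocks overlap their neighbours, so they are accumulated.
            y[i:i + r1, j:j + r2] += yb[:r1, :r2]
    return y
```

Only one block of the input and one DFT of size `(f1, f2)` need to be held at a time, which is the storage advantage over a single large DFT.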

The overlap-save method is an alternative block convolution technique. When the block size is considerably larger than the support of $h$, the samples of $y$ in the center of each block are not overlapped by samples from neighboring blocks. Similarly, when a sequence $x$ is circularly convolved with another, $h$, which has a much smaller region of support, only a subset of the samples of that circular convolution will show the effects of spatial aliasing. The remaining samples of the circular convolution will be identical to the samples of the linear convolution. Thus, if an $(N_1 \times N_2)$-point section of $x$ is circularly convolved with an $(M_1 \times M_2)$-point impulse response using an $(N_1 \times N_2)$-point DFT, the resulting circular convolution will contain a cluster of $(N_1 - M_1 + 1) \times (N_2 - M_2 + 1)$ samples which are identical to samples of the linear convolution $y$. The whole output array can be constructed from these "good" samples by carefully choosing the regions of support for the input sections. If the input sections are allowed to overlap, the "good" samples of the various blocks can be made to abut. The overlap-save method thus involves overlapping input sections, whereas the overlap-add method involves overlapping output sections.

The figure above shows the overlap-save method. The shaded region gives those samples of $y$ for which the $(N_1 \times N_2)$ circular convolution and the linear convolution of $x$ with $h$ are identical.
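The overlap-save bookkeeping can be sketched as follows in NumPy. The function name and the default DFT size are assumptions; the DFT size must be at least the size of the impulse response in each dimension.

```python
import numpy as np

def overlap_save_2d(x, h, fft_size=(16, 16)):
    """Overlap-save FIR filtering with (N1 x N2)-point circular convolutions."""
    n1, n2 = fft_size
    m1, m2 = h.shape
    s1, s2 = n1 - m1 + 1, n2 - m2 + 1          # "good" samples per block
    out = (x.shape[0] + m1 - 1, x.shape[1] + m2 - 1)
    nb1 = -(-out[0] // s1)                     # number of blocks (ceiling division)
    nb2 = -(-out[1] // s2)
    # Pad M - 1 zeros in front so the first good sample is y(0, 0).
    xp = np.zeros((nb1 * s1 + m1 - 1, nb2 * s2 + m2 - 1))
    xp[m1 - 1:m1 - 1 + x.shape[0], m2 - 1:m2 - 1 + x.shape[1]] = x
    H = np.fft.rfft2(h, s=(n1, n2))
    y = np.zeros((nb1 * s1, nb2 * s2))
    for b1 in range(nb1):
        for b2 in range(nb2):
            # Input sections overlap by (M1 - 1, M2 - 1) samples.
            sec = xp[b1 * s1:b1 * s1 + n1, b2 * s2:b2 * s2 + n2]
            c = np.fft.irfft2(np.fft.rfft2(sec) * H, s=(n1, n2))
            # Discard the aliased samples; the good ones abut in the output.
            y[b1 * s1:(b1 + 1) * s1, b2 * s2:(b2 + 1) * s2] = c[m1 - 1:, m2 - 1:]
    return y[:out[0], :out[1]]
```

Compared with overlap-add, no accumulation of output blocks is needed, at the cost of re-reading the overlapping input samples.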

For both the overlap-add and overlap-save procedures, the choice of block size affects the efficiency of the resulting implementation. It affects the amount of storage needed, and also affects the amount of computation.

FIR filter design

Minimax design of FIR filters

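The frequency response of a multidimensional filter is given by

$$H(\omega_1, \ldots, \omega_M) = \sum_{n_1=0}^{N_1-1} \cdots \sum_{n_M=0}^{N_M-1} h(n_1, \ldots, n_M)\, e^{-j(\omega_1 n_1 + \cdots + \omega_M n_M)},$$

where $h(n_1, \ldots, n_M)$ is the impulse response of the designed filter, of size $N_1 \times N_2 \times \cdots \times N_M$.

The frequency response of the ideal filter is given by

$$I(\omega_1, \ldots, \omega_M) = \sum_{n_1=-\infty}^{\infty} \cdots \sum_{n_M=-\infty}^{\infty} i(n_1, \ldots, n_M)\, e^{-j(\omega_1 n_1 + \cdots + \omega_M n_M)},$$

where $i(n_1, \ldots, n_M)$ is the impulse response of the ideal filter.

The error measure is given by subtracting the above two results, i.e.

$$E(\omega_1, \ldots, \omega_M) = H(\omega_1, \ldots, \omega_M) - I(\omega_1, \ldots, \omega_M).$$

In minimax design, the maximum of this error measure is what needs to be minimized. Different norms are available for measuring the error, namely:

the $L_2$ norm, given by the formula

$$E_2 = \left[\frac{1}{(2\pi)^M} \int_{-\pi}^{\pi} \cdots \int_{-\pi}^{\pi} |E(\omega_1, \ldots, \omega_M)|^2\, d\omega_1 \cdots d\omega_M\right]^{1/2},$$

and the $L_p$ norm, given by the formula

$$E_p = \left[\frac{1}{(2\pi)^M} \int_{-\pi}^{\pi} \cdots \int_{-\pi}^{\pi} |E(\omega_1, \ldots, \omega_M)|^p\, d\omega_1 \cdots d\omega_M\right]^{1/p}.$$

If $p = 2$ we get the $L_2$ norm, and if $p$ tends to $\infty$ we get the $L_\infty$ norm. The $L_\infty$ norm is given by

$$E_\infty = \max_{(\omega_1, \ldots, \omega_M)} |E(\omega_1, \ldots, \omega_M)|.$$

Minimax design refers to minimizing the $L_\infty$ norm.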
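To make the norms concrete, the sketch below estimates the $L_p$ and $L_\infty$ errors of a 2-D FIR filter on a uniform frequency grid. It is only an illustration: the function name, the grid size, and the convention that the impulse response array has its origin at index (0, 0) are assumptions, and the integral is replaced by a Riemann sum over the DFT frequencies.

```python
import numpy as np

def error_norms(h, ideal, p=2, grid=64):
    """Grid estimates of the L_p and L_inf norms of E = H - I (2-D case).

    h     : impulse response array, origin at index (0, 0)
    ideal : callable I(w1, w2) evaluated on arrays of frequencies
    """
    H = np.fft.fft2(h, s=(grid, grid))             # H sampled at w = 2*pi*k/grid
    w = 2 * np.pi * np.fft.fftfreq(grid)           # same ordering as the FFT bins
    w1, w2 = np.meshgrid(w, w, indexing="ij")
    err = np.abs(H - ideal(w1, w2))
    e_p = np.mean(err ** p) ** (1.0 / p)           # Riemann sum for the L_p norm
    e_inf = err.max()                              # grid estimate of the L_inf norm
    return e_p, e_inf
```

Because the maximum is taken only over grid points, `e_inf` is a lower bound on the true $L_\infty$ error; a denser grid tightens the estimate.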

Design using transformation

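Another method to design a multidimensional FIR filter is by transformation from 1-D filters. This method was first developed by McClellan, as other methods were time consuming and cumbersome. The first successful implementation was achieved by Mecklenbrauker and Mersereau[5][6] and was later revised by McClellan and Chan.[7] For a zero-phase filter the impulse response satisfies[4]

$$h(n) = h^*(-n),$$

where $h^*(n)$ represents the complex conjugate of $h(n)$.

Let $H(\omega)$ be the frequency response of $h(n)$. Assuming $h(n)$ is even, we can write

$$H(\omega) = \sum_{n=0}^{N} a(n) \cos(\omega n),$$

where $a(n)$ is defined as $a(n) = h(0)$ if $n = 0$ and $a(n) = 2h(n)$ if $n \neq 0$. Also, $\cos(\omega n)$ is a polynomial of degree $n$ in $\cos \omega$, known as the Chebyshev polynomial.

The variable is $\cos \omega$ and the polynomial can be represented by $T_n[\cos \omega]$.

Thus $H(\omega) = \sum_{n=0}^{N} a(n)\, T_n[\cos \omega]$ is the required 1-D frequency response in terms of the Chebyshev polynomial $T_n$.

If we consider $F(\omega_1, \omega_2)$ to be a transformation function that is substituted for $\cos \omega$, then we get

$$H(\omega_1, \omega_2) = \sum_{n=0}^{N} a(n)\, T_n[F(\omega_1, \omega_2)].$$

The contours and the symmetry of $H(\omega_1, \omega_2)$ depend on those of $F(\omega_1, \omega_2)$. $F(\omega_1, \omega_2)$ is also called the mapping function.

The values of $H(\omega_1, \omega_2)$ can be obtained from the values of the 1-D prototype $H(\omega)$.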

The conditions for choosing the mapping function are

- It must be a valid frequency response of an M-D filter

- It should be real

- It should lie between -1 and 1.

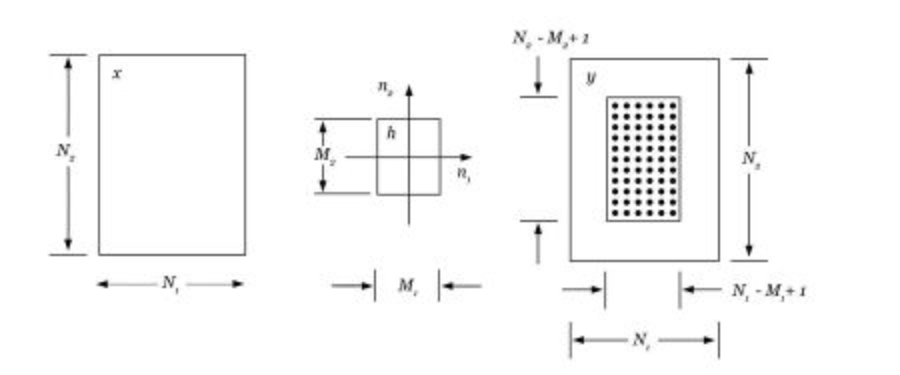

Consider the two-dimensional case to compute the size of $h(n_1, n_2)$. If the 1-D prototype has size $(2N + 1)$ and the mapping function has size $(2Q + 1) \times (2Q + 1)$, then the size of the desired $h(n_1, n_2)$ will be $(2NQ + 1) \times (2NQ + 1)$.

The main advantages of this method are

- Easy to implement

- Builds on easily understood 1-D concepts

- Optimal filter design is possible

Implementation of filters designed using transformations

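Methods such as direct convolution or DFT-based implementation can be used to implement FIR filters. For filters of moderate order designed by transformation, however, another method can be used that exploits the structure of the design. Consider the equation for the 2-dimensional case,

$$H(\omega_1, \omega_2) = \sum_{n=0}^{N} a(n)\, T_n[F(\omega_1, \omega_2)],$$

where $T_n$ is a Chebyshev polynomial. These polynomials are defined as

$$T_0[x] = 1, \quad T_1[x] = x, \quad T_n[x] = 2x\, T_{n-1}[x] - T_{n-2}[x].$$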

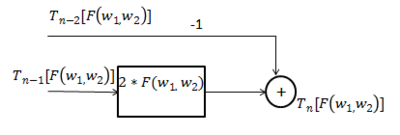

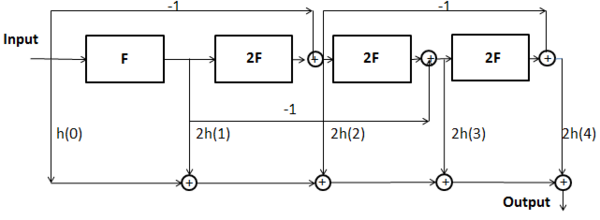

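Using this recursion we can form a digital network to realize the 2-D frequency response, as shown in the figure below. Replacing $x$ by $F(\omega_1, \omega_2)$ we get

$$T_n[F(\omega_1, \omega_2)] = 2 F(\omega_1, \omega_2)\, T_{n-1}[F(\omega_1, \omega_2)] - T_{n-2}[F(\omega_1, \omega_2)].$$

Since each of these signals can be generated from two lower-order signals, a ladder network of $N$ outputs can be formed such that the frequency response between the input and the $n$th output is $T_n[F(\omega_1, \omega_2)]$. By weighting these outputs with the coefficients $a(n)$ according to $H(\omega_1, \omega_2) = \sum_{n=0}^{N} a(n)\, T_n[F(\omega_1, \omega_2)]$, the filter $H(\omega_1, \omega_2)$ can be realized.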

This realization is as shown in the figure below.

In the figure, the filters F define the transformation function and h(n) is the impulse response of the 1-D prototype filter.
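The Chebyshev recursion behind this ladder structure can be sketched numerically. The example below evaluates the transformed 2-D frequency response rather than building the network itself. The function name is hypothetical, and the mapping function $F(\omega_1, \omega_2) = \tfrac{1}{2}(-1 + \cos\omega_1 + \cos\omega_2 + \cos\omega_1 \cos\omega_2)$ is the commonly quoted original McClellan transformation, used here as an assumed choice of $F$.

```python
import numpy as np

def mcclellan_response(h_half, w1, w2):
    """2-D response H(w1, w2) = sum_n a(n) T_n[F(w1, w2)].

    h_half : samples h(0), ..., h(N) of a zero-phase 1-D prototype
             with h(n) = h(-n)
    """
    a = np.array(h_half, dtype=float)     # copy, so the input is not modified
    a[1:] *= 2.0                          # a(0) = h(0), a(n) = 2 h(n) for n > 0
    # Assumed mapping function: the original McClellan transformation.
    F = 0.5 * (-1 + np.cos(w1) + np.cos(w2) + np.cos(w1) * np.cos(w2))
    t_prev, t_cur = np.ones_like(F), F    # T_0[F] and T_1[F]
    H = a[0] * t_prev
    for n in range(1, len(a)):
        H = H + a[n] * t_cur
        # Chebyshev recursion: T_{n+1} = 2 F T_n - T_{n-1}
        t_prev, t_cur = t_cur, 2 * F * t_cur - t_prev
    return H
```

With this mapping, $F(\omega_1, 0) = \cos\omega_1$, so the 2-D response along the $\omega_1$ axis reproduces the 1-D prototype response.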

Trigonometric sum-of-squares optimization

Here we discuss a method for multidimensional FIR filter design via sum-of-squares formulations of spectral mask constraints. The sum-of-squares optimization problem is expressed as a semidefinite program with low-rank structure, by sampling the constraints using discrete sine and cosine transforms. The resulting semidefinite program is then solved by a customized primal-dual interior-point method that exploits low-rank structure. This leads to substantial reduction in the computational complexity, compared to general-purpose semidefinite programming methods that exploit sparsity.[8]

A variety of one-dimensional FIR filter design problems can be expressed as convex optimization problems over real trigonometric polynomials, subject to spectral mask constraints. These optimization problems can be formulated as semidefinite programs (SDPs) using classical sum-of-squares (SOS) characterizations of nonnegative polynomials, and solved efficiently via interior-point methods for semidefinite programming.

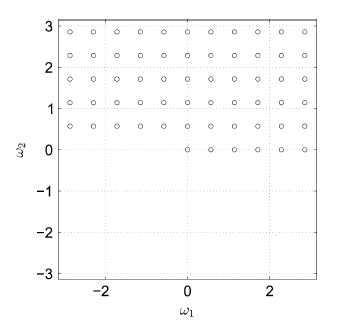

The figure above shows an FIR filter in the frequency domain with $d = 2$, $n_1 = n_2 = 5$ and 61 sampling points. The extension of these techniques to multidimensional filter design poses several difficulties. First, the SOS characterization of multivariate positive trigonometric polynomials may require factors of arbitrarily high degree. Second, the semidefinite programming problems obtained from multivariate SOS programs are large. Most recent research on exploiting structure in semidefinite programming has focused on exploiting sparsity of the coefficient matrices. These techniques are very useful for SDPs derived from SOS programs and are included in several general-purpose semidefinite programming packages.

Let $\mathbb{Z}^d$ and $\mathbb{N}^d$ denote the sets of $d$-vectors of integers and natural numbers, respectively. For a vector $x$, we define $\operatorname{diag}(x)$ as the diagonal matrix with $x_i$ as its $i$th diagonal entry. For a square matrix $X$, $\operatorname{diag}(X)$ is a vector with $i$th entry $X_{ii}$. The matrix inequality $A \succ (\succeq)\ B$ denotes that $A - B$ is positive definite (semidefinite). $\operatorname{tr}(A)$ denotes the trace of a symmetric matrix $A$.

$R$ is a $d$-variate trigonometric polynomial of degree $n \in \mathbb{N}^d$, with real symmetric coefficients $x_k = x_{-k}$:

$$R(\omega) = \sum_{k=-n}^{n} x_k\, e^{-j k^T \omega}.$$

The above summation is over all integer vectors $k$ that satisfy $-n \le k \le n$, where the inequalities between the vectors are interpreted element-wise. If $R$ is positive on $[-\pi, \pi]^d$, then it can be expressed as a sum of squares of trigonometric polynomials $P_l$:

$$R(\omega) = \sum_{l=1}^{r} |P_l(\omega)|^2.$$

2-D FIR Filter Design as SOS Program: $H$ is taken to be the frequency response of a 2-D linear-phase FIR filter with filter order $n = (n_1, n_2)$, with filter coefficients $h_k = h_{-k}$.

We want to determine the filter coefficients $h_k$ that maximize the attenuation $\delta_s$ in the stopband $D_s$ for a given maximum allowable ripple ($\delta_p$) in the passband $D_p$. The optimization problem is to minimize $\delta_s$ subject to the following conditions:

$$|1 - H(\omega)| \le \delta_p,\ \omega \in D_p; \qquad |H(\omega)| \le \delta_s,\ \omega \in D_s,$$

where the scalar $\delta_s$ and the filter coefficients $h_k$ are the problem variables. These constraints are as shown below.

If the passband and stopband are defined by trigonometric polynomial inequalities, then we can replace each positive polynomial by a weighted sum-of-squares expression. Limiting the degrees of the sum-of-squares polynomials to $n$ then gives sufficient conditions for feasibility. We call the resulting optimization problem a sum-of-squares program; it can be solved via semidefinite programming.

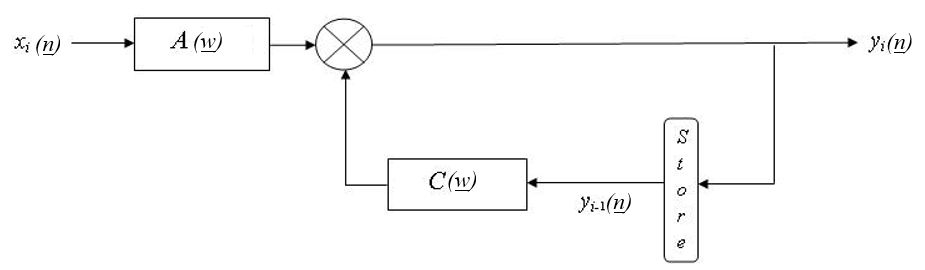

Iterative implementation for M-D IIR filters

In some applications, where access to all values of signal is available (i.e. where entire signal is stored in memory and is available for processing), the concept of "feedback" can be realized. The iterative approach uses the previous output as feedback to generate successively better approximations to the desired output signal.[4]

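In general, the IIR frequency response can be expressed as

$$H(\omega_1, \ldots, \omega_M) = \frac{A(\omega_1, \ldots, \omega_M)}{B(\omega_1, \ldots, \omega_M)} = \frac{\sum_{l_1} \cdots \sum_{l_M} a(l_1, \ldots, l_M)\, e^{-j(\omega_1 l_1 + \cdots + \omega_M l_M)}}{\sum_{k_1} \cdots \sum_{k_M} b(k_1, \ldots, k_M)\, e^{-j(\omega_1 k_1 + \cdots + \omega_M k_M)}},$$

where $a(l_1, \ldots, l_M)$ and $b(k_1, \ldots, k_M)$ are M-D finite-extent coefficient arrays. The ratio is normalized so that

$$b(0, \ldots, 0) = 1.$$

Now, let $X(\omega_1, \ldots, \omega_M)$ represent the spectrum of an M-D input signal $x(n_1, \ldots, n_M)$ and $Y(\omega_1, \ldots, \omega_M)$ the spectrum of the M-D output signal $y(n_1, \ldots, n_M)$. Since $BY = AX$, we can write

$$Y(\omega_1, \ldots, \omega_M) = A(\omega_1, \ldots, \omega_M)\, X(\omega_1, \ldots, \omega_M) + C(\omega_1, \ldots, \omega_M)\, Y(\omega_1, \ldots, \omega_M),$$

where $C$ is a trigonometric polynomial defined as

$$C(\omega_1, \ldots, \omega_M) = 1 - B(\omega_1, \ldots, \omega_M).$$

In the signal domain, the equation becomes

$$y(n_1, \ldots, n_M) = a(n_1, \ldots, n_M) \ast x(n_1, \ldots, n_M) + c(n_1, \ldots, n_M) \ast y(n_1, \ldots, n_M).$$

After making an initial guess and substituting it into the above equation iteratively, successively better approximations of $y$ can be obtained:

$$y_i(n_1, \ldots, n_M) = a(n_1, \ldots, n_M) \ast x(n_1, \ldots, n_M) + c(n_1, \ldots, n_M) \ast y_{i-1}(n_1, \ldots, n_M),$$

where $i$ denotes the iteration index.

In the frequency domain, the above equation becomes

$$Y_i(\omega_1, \ldots, \omega_M) = A(\omega_1, \ldots, \omega_M)\, X(\omega_1, \ldots, \omega_M) + C(\omega_1, \ldots, \omega_M)\, Y_{i-1}(\omega_1, \ldots, \omega_M).$$

An IIR filter is BIBO stable if its impulse response is absolutely summable,

$$\sum_{n_1} \cdots \sum_{n_M} |h(n_1, \ldots, n_M)| < \infty.$$

If we assume that $|C(\omega_1, \ldots, \omega_M)| < 1$ for all frequencies, then

$$\lim_{I \to \infty} Y_I = A X \sum_{i=0}^{\infty} C^i = \frac{A X}{1 - C} = \frac{A X}{B} = Y.$$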

Thus the frequency response of an M-D IIR filter can be obtained by an infinite number of M-D FIR filtering operations. The store operator stores the result of the previous iteration.

To be practical, an iterative IIR filter should require fewer computations, counting all iterations to achieve an acceptable error, compared to an FIR filter with similar performance.
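The iteration can be sketched in NumPy for the 2-D case. This is a simplified illustration rather than a definitive implementation: the function name is hypothetical, the FIR filtering steps are carried out as circular convolutions on the DFT grid of the input (an assumption made to keep the example short), and the iteration is started from a zero initial guess.

```python
import numpy as np

def iterative_iir(x, a, b, iterations=60):
    """Approximate Y = (A / B) X by iterating Y_i = A X + C Y_{i-1}.

    a, b : 2-D numerator and denominator coefficient arrays, b[0, 0] == 1
    Returns the filtered signal and max |C| on the DFT grid.
    """
    shape = x.shape
    A = np.fft.fft2(a, s=shape)
    B = np.fft.fft2(b, s=shape)
    C = 1.0 - B                          # C = 1 - B
    AX = A * np.fft.fft2(x)
    Y = np.zeros_like(AX)                # zero initial guess
    for _ in range(iterations):
        Y = AX + C * Y                   # one FIR filtering pass per iteration
    # Real output assumes real x, a and b.
    return np.real(np.fft.ifft2(Y)), np.max(np.abs(C))
```

The second return value lets the caller check the convergence condition $|C| < 1$ on the grid; the error after $I$ iterations shrinks roughly like $\max|C|^I$.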

Existing approaches for IIR filter design

As in the 1-D special case, M-D IIR filters can have dramatically lower order than FIR filters with similar performance. This motivates the development of design techniques for M-D IIR filtering algorithms. This section presents a brief overview of approaches for designing M-D IIR filters.

Shanks' method

This technique is based on minimizing the error functionals in the space domain. The coefficient arrays and are determined such that the output response of a filter matches the desired response .[4]

Let us denote the error signal as

And let denote it's the Fourier transform

By multiplying both sides by , we get the modified error spectrum, converted in discrete domain as

The total mean-squared error is obtained as

Let the input signal be . Now, the numerator coefficient is zero outside region because of the ROS of input signal.Then equation becomes

for or or ...

Substituting the result into and differentiating with respect to denominator coefficients , the linear set of equations is obtained as

for

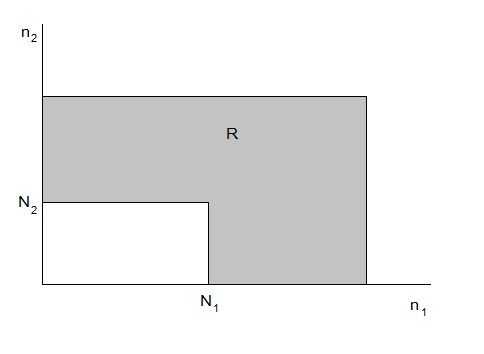

Now, taking the double summation for the region "R" i.e. for and and ... shown in figure (shown for 2-D case), the coefficients are obtained.

The coefficients can be obtained from

The major advantage of Shanks' method is that the IIR filter coefficients can be obtained by solving linear equations. The disadvantage is that the mean-squared error between $y$ and $d$ itself is not minimized; only the modified error is. Also, stability is not guaranteed.
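The linear structure of the method can be illustrated with a small 2-D least-squares sketch. It is written under several assumptions: the input is a unit impulse, both coefficient arrays have first-quadrant support, the desired response `d` is known on a finite grid, and the normal equations are solved implicitly through `numpy.linalg.lstsq`. The function name is hypothetical.

```python
import numpy as np

def shanks_2d(d, na, nb):
    """Least-squares sketch of Shanks' method for a unit-impulse input.

    d  : desired impulse response on a first-quadrant grid (2-D float array)
    na : (rows, cols) support of the numerator array a
    nb : (rows, cols) support of the denominator array b, with b[0, 0] = 1
    """
    n1, n2 = d.shape

    def shifted(k1, k2):
        # d(n1 - k1, n2 - k2), taken as zero outside the grid
        s = np.zeros_like(d)
        s[k1:, k2:] = d[:n1 - k1, :n2 - k2]
        return s

    # Region R: grid points outside the numerator's region of support.
    outside = np.ones(d.shape, dtype=bool)
    outside[:na[0], :na[1]] = False

    # On R, sum_k b(k) d(n - k) should vanish; with b(0, 0) = 1 this is a
    # linear least-squares problem in the remaining denominator coefficients.
    ks = [(k1, k2) for k1 in range(nb[0]) for k2 in range(nb[1]) if (k1, k2) != (0, 0)]
    G = np.stack([shifted(k1, k2)[outside] for k1, k2 in ks], axis=1)
    coef, *_ = np.linalg.lstsq(G, -d[outside], rcond=None)

    b = np.zeros(nb)
    b[0, 0] = 1.0
    for (k1, k2), c in zip(ks, coef):
        b[k1, k2] = c

    # Numerator: a = b * d restricted to the numerator's support.
    bd = sum(b[k1, k2] * shifted(k1, k2) for k1 in range(nb[0]) for k2 in range(nb[1]))
    return bd[:na[0], :na[1]], b
```

When `d` is exactly the impulse response of a rational filter with the assumed supports, the modified error can be driven to zero and the coefficients are recovered; for a general `d`, the result minimizes only the modified error, and the stability of the designed filter still has to be checked separately.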

Frequency-domain designs for IIR[4]

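Shanks' method is a spatial-domain design method. It is also possible to design IIR filters in the frequency domain, where the aim is to minimize the error in the frequency domain rather than in the spatial domain. By Parseval's theorem, the mean-squared error in the frequency domain is identical to that in the spatial domain. Parseval's theorem states that[4]

$$\sum_{n_1} \cdots \sum_{n_M} |e(n_1, \ldots, n_M)|^2 = \frac{1}{(2\pi)^M} \int_{-\pi}^{\pi} \cdots \int_{-\pi}^{\pi} |E(\omega_1, \ldots, \omega_M)|^2\, d\omega_1 \cdots d\omega_M.$$

The different norms used for FIR filter design, such as $L_2$, $L_p$ and $L_\infty$, can also be used for the design of IIR filters:

$$E_p = \left[\frac{1}{(2\pi)^M} \int_{-\pi}^{\pi} \cdots \int_{-\pi}^{\pi} |E(\omega_1, \ldots, \omega_M)|^p\, d\omega_1 \cdots d\omega_M\right]^{1/p}$$

is the required equation for the $L_p$ norm, and when $p$ tends to $\infty$ we get the $L_\infty$ norm, as explained above.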

The main advantages of design in the frequency domain are

- If there is any specification which is only partially complete, such as approximation of a magnitude response without the specification of phase response, this can be implemented with greater ease in the frequency domain rather than the space domain.

- Also the approximating function can be written in closed form as a function of the filter parameters, thus facilitating simpler derivation of partial derivatives.

The main disadvantage of this technique is that there is no guarantee for stability.

General minimization techniques as seen in the design of IIR filters in the spatial domain can be used in the frequency domain too.

One popular family of frequency-domain design methods is the magnitude and magnitude-squared algorithms.

Magnitude and magnitude squared design algorithm

In this section, we examine the technique for designing 2-D IIR filters based on minimizing error functionals in the frequency domain. The mean-squared error is given as

$$J_2 = \frac{1}{4\pi^2} \int_{-\pi}^{\pi} \int_{-\pi}^{\pi} |Y(\omega_1, \omega_2) - D(\omega_1, \omega_2)|^2\, d\omega_1\, d\omega_2.$$

The function $J_f$ below is a measure of the difference between two complex functions, the desired response $D(\omega_1, \omega_2)$ and the actual response $Y(\omega_1, \omega_2)$. More generally, we can define a function $f$ of $Y$ to approximate the desired response $D$. Hence, the optimization task boils down to minimizing $J_f$,

$$J_f = \sum_{k} W(\omega_{1k}, \omega_{2k}) \left| f\big(Y(\omega_{1k}, \omega_{2k})\big) - D(\omega_{1k}, \omega_{2k}) \right|^2,$$

where $W$ is the weighting function and $(\omega_{1k}, \omega_{2k})$ are the frequency-domain samples selected for minimization. Now consider the case where we ignore the phase response of the filter, i.e. we concentrate only on matching the magnitude (or the square of the magnitude) of the desired and actual filter responses. Linearization can be used to find the coefficients $\{a, b\}$ that result in the minimum value of $J_f$. This ensures that the filter can be represented by a finite-order difference equation. The stability of the filter depends on the function $f$.

The disadvantages of this method are,

- Stability cannot be guaranteed

- Depending on the function $f$, the computation of the partial derivatives can be cumbersome.

Magnitude design with stability constraint

This design procedure includes a stability error $J_s$, which is to be minimized together with the usual approximation error $J_a$.[9] The stability error is a crude measure of how unstable a filter is. It is a type of penalty function: it should be zero for stable filters and large for unstable filters. The filters can be designed by minimizing[4]

$$J = J_a + \alpha J_s.$$

$\alpha$ is a positive constant which weights the relative importance of $J_a$ and $J_s$. Ekstrom et al.[9] used nonlinear optimization techniques to minimize $J$. Their stability error was based on the difference between the denominator coefficient array $b(n_1, n_2)$ and the minimum-phase array with the same autocorrelation function.

The minimum-phase array can be determined by first computing the autocorrelation function of the denominator coefficient array $b(n_1, n_2)$,

$$r_b(n_1, n_2) = \sum_{k_1} \sum_{k_2} b(k_1, k_2)\, b(k_1 + n_1, k_2 + n_2).$$

After computing $r_b(n_1, n_2)$, its Fourier transform must be split into its minimum- and maximum-phase components. This is accomplished by spectral factorization using the complex cepstrum.

The cepstrum $\hat{r}_b(n_1, n_2)$ of the autocorrelation function is formed and multiplied by a non-symmetric half-plane window $w(n_1, n_2)$ to obtain the cepstrum

$$\hat{b}_{mp}(n_1, n_2) = \hat{r}_b(n_1, n_2)\, w(n_1, n_2).$$

The subscript "mp" denotes that this cepstrum corresponds to a minimum-phase sequence $b_{mp}(n_1, n_2)$.

If the designed filter is stable, its denominator coefficient array $b(n_1, n_2)$ is a minimum-phase sequence with non-symmetric half-plane support. In this case, $b(n_1, n_2)$ is equal to $b_{mp}(n_1, n_2)$; otherwise it is not.

$J_s$ can be written as

$$J_s = \sum_{n_1} \sum_{n_2} \left[b(n_1, n_2) - b_{mp}(n_1, n_2)\right]^2.$$

In practice, $J_s$ is rarely driven to zero because of numerical errors in computing the cepstrum $\hat{r}_b(n_1, n_2)$. In general, $\hat{r}_b(n_1, n_2)$ has infinite extent, and spatial aliasing results when the FFT is used to compute it. The degree of aliasing can be controlled by increasing the size of the FFT.

Design and implementation of M-D zero-phase IIR filters

Often, especially in applications such as image processing, one may be required to design a filter with a symmetric impulse response. Such filters have a real-valued, or zero-phase, frequency response. A zero-phase IIR filter can be implemented in two ways, cascade or parallel.[4]

- 1. The cascade approach

In the cascade approach, a filter whose impulse response is is cascaded with a filter whose impulse response is . The overall impulse response of the cascade is . The overall frequency response is a real and non-negative function,

The cascade approach suffers from some computational problems due to transient effects. The output samples of the second filter in the cascade are computed by a recursion which runs in the opposite direction from that of the first filter. The output of an IIR filter has infinite extent, so in theory an infinite number of output samples of the first filter must be evaluated before filtering with the second filter can begin, even if the final output is desired only over a limited region. Truncating the computations from the first filter can introduce errors. As a practical approach, the output from the first filter must be computed far enough out in space, depending on the region of support of the numerator coefficient array and on the location of the second filter's output sample to be computed, so that any initial transient from the second filter will have effectively died out in the region of interest of the final output.
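The zero-phase property of the cascade can be checked numerically. The sketch below uses a small finite-support kernel as a stand-in for an IIR impulse response; the kernel values and the helper names (`freq_response`, `reverse`, `convolve`) are assumptions made for illustration only.

```python
import cmath

def freq_response(h, w1, w2):
    """H(w1, w2) = sum of h(n1, n2) exp(-j (w1 n1 + w2 n2)) for a dict {(n1, n2): value}."""
    return sum(v * cmath.exp(-1j * (w1 * n1 + w2 * n2)) for (n1, n2), v in h.items())

def reverse(h):
    """Space-reversed impulse response h(-n1, -n2)."""
    return {(-n1, -n2): v for (n1, n2), v in h.items()}

def convolve(f, g):
    """2-D convolution of two finite-support impulse responses."""
    out = {}
    for (a1, a2), fv in f.items():
        for (b1, b2), gv in g.items():
            key = (a1 + b1, a2 + b2)
            out[key] = out.get(key, 0.0) + fv * gv
    return out

# Hypothetical kernel: (1 + 0.5 z1^-1)(1 - 0.25 z2^-1)
h = {(0, 0): 1.0, (1, 0): 0.5, (0, 1): -0.25, (1, 1): -0.125}
c = convolve(h, reverse(h))  # overall impulse response of the cascade

test_points = [(0.0, 0.0), (0.7, -1.3), (2.0, 3.0)]
for w1, w2 in test_points:
    C = freq_response(c, w1, w2)
    print(C.real, C.imag, abs(freq_response(h, w1, w2)) ** 2)
```

At every test frequency the imaginary part vanishes and the real part equals the squared magnitude of the single-filter response. The overall impulse response `c` is also symmetric about the origin.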

- 2. The parallel approach

In the parallel approach, the outputs of two nonsymmetric half-plane (or four nonsymmetric quarter-plane) IIR filters are added to form the final output signal. As in the cascade approach, the second filter is a space-reversed version of the first. The overall frequency response is given by

$$C(\omega_1,\omega_2) = H(\omega_1,\omega_2) + H^{*}(\omega_1,\omega_2) = 2\,\mathrm{Re}[H(\omega_1,\omega_2)]$$

This approach avoids the transient problems of the cascade approach for zero-phase implementation. However, it is best suited to 2-D IIR filters designed in the space domain, where the desired filter response can be partitioned into the proper regions of support.
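A short numerical check of the parallel combination follows. As before, a small finite-support kernel stands in for an IIR impulse response, and the kernel values and function name are hypothetical.

```python
import cmath

def freq_response(h, w1, w2):
    """H(w1, w2) = sum of h(n1, n2) exp(-j (w1 n1 + w2 n2)) for a dict {(n1, n2): value}."""
    return sum(v * cmath.exp(-1j * (w1 * n1 + w2 * n2)) for (n1, n2), v in h.items())

# Hypothetical kernel with first-quadrant support
h = {(0, 0): 1.0, (1, 0): 0.5, (0, 1): -0.25, (1, 1): -0.125}

# Parallel combination: h(n1, n2) + h(-n1, -n2)
parallel = dict(h)
for (n1, n2), v in h.items():
    key = (-n1, -n2)
    parallel[key] = parallel.get(key, 0.0) + v

test_points = [(0.0, 0.0), (0.7, -1.3), (2.0, 3.0)]
for w1, w2 in test_points:
    C = freq_response(parallel, w1, w2)
    print(C.real, C.imag, 2 * freq_response(h, w1, w2).real)
```

The combined response is real and equals twice the real part of the single-filter response. Unlike the cascade response, nothing forces it to be non-negative.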

For a symmetric zero-phase 2-D IIR filter, the denominator has a real positive frequency response.

For a 2-D zero-phase IIR filter, since

We can write,

A mean-squared error functional can then be formulated and minimized by various techniques. The minimization yields the zero-phase filter coefficients, which can then be used to implement the designed filter.

Implementation of Mixed Multidimensional Filters

If the M-D transform transfer function H(n(M)) = Y(n(M))/X(n(M)) is known for a particular class of inputs x(n(M)) and a particular transform, the design approximation problem becomes simple: we have to find the (M-P)-dimensional linear difference equations (LDEs), one for each P-tuple, that approximate the complex (M-P)-dimensional transform transfer functions H(k(P),k(M-P)). However, because multidimensional approximation theory for dimensions greater than 2 is not well developed, the (M-P)-dimensional approximation may be a problem. The input and output sequences of each (M-P)-dimensional LDE are complex and are given by X(k(P),k(M-P)) and Y(k(P),k(M-P)) respectively. The main design objective is to choose the coefficients of each LDE so that the complex (M-P)-dimensional transform transfer function relating the sequences X(k(P),k(M-P)) and Y(k(P),k(M-P)) is approximately H(k(P),k(M-P)) = H(n(M)). This can be very difficult unless certain transforms such as the DFT, DCT and DHT are used. For these transforms, it is possible to find the (M-P)-dimensional impulse response h(k(P),n(M-P)) by approximating the linear difference equations.
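The structure described above can be sketched for the simplest case, M = 2 and P = 1: a DFT along one axis followed by one LDE per transform index along the other axis. This is only an illustrative sketch. The function `mixed_filter`, the first-order form of the LDE, and the coefficient pairs are assumptions, not the design of the cited authors.

```python
import cmath
import math

def dft(x, inverse=False):
    """Naive 1-D DFT (or inverse DFT)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out

def mixed_filter(x, ldes):
    """Filter x[n1][n2]: DFT along n1, then one first-order LDE along n2 per k1.

    ldes maps k1 -> (b, a) for the recursion y[n2] = b * x[n2] + a * y[n2 - 1].
    Any k1 missing from ldes is omitted, i.e. treated as stopband.
    """
    size1, size2 = len(x), len(x[0])
    # P = 1 transform: one DFT over n1 for every n2, giving X(k1, n2)
    spectra = [dft([x[n1][n2] for n1 in range(size1)]) for n2 in range(size2)]
    filtered = [[0j] * size1 for _ in range(size2)]
    for k1, (b, a) in ldes.items():
        state = 0j
        for n2 in range(size2):  # complex LDE running along the untransformed axis
            state = b * spectra[n2][k1] + a * state
            filtered[n2][k1] = state
    back = [dft(row, inverse=True) for row in filtered]
    return [[back[n2][n1].real for n2 in range(size2)] for n1 in range(size1)]

x = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 2.0, 1.0], [1.0, 0.0, 4.0]]
identity = mixed_filter(x, {k1: (1.0, 0.0) for k1 in range(4)})
dc_only = mixed_filter(x, {0: (1.0, 0.0)})
print(identity)
print(dc_only)
```

With pass-through LDEs at every k1 the input is reproduced. Keeping only k1 = 0 leaves the average over n1 in each column, which shows how omitting P-tuples shapes the passband in the transformed dimensions.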

The following approaches can be used to implement Mixed Multidimensional filters:

Using discrete Fourier transform (DFT)

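For a multidimensional array $x_{n_1,n_2,\ldots,n_p}$ that is a function of p discrete variables $n_\ell = 0,1,\ldots,N_\ell-1$ for $\ell$ in $1,2,\ldots,p$, the P-dimensional forward discrete Fourier transform is defined by:

$$F(k(P)) = \sum_{n_1=0}^{N_1-1}\left(\omega_{N_1}^{k_1 n_1}\sum_{n_2=0}^{N_2-1}\left(\omega_{N_2}^{k_2 n_2}\cdots\sum_{n_p=0}^{N_p-1}\omega_{N_p}^{k_p n_p}\,x_{n_1,n_2,\ldots,n_p}\right)\right),$$

where $\omega_{N_\ell} = \exp(-2\pi i/N_\ell)$ and the p output indices run from $k_\ell = 0,1,\ldots,N_\ell-1$. In vector notation, where $\mathbf{n} = (n_1,n_2,\ldots,n_p)$ and $\mathbf{k} = (k_1,k_2,\ldots,k_p)$ are p-dimensional vectors of indices from 0 to $\mathbf{N}-1$, with $\mathbf{N}-1 = (N_1-1,N_2-1,\ldots,N_p-1)$, the P-dimensional forward discrete Fourier transform is given by:

$$F(k(P)) = \sum_{\mathbf{n}=0}^{\mathbf{N}-1} e^{-2\pi i\,\mathbf{k}\cdot(\mathbf{n}/\mathbf{N})}\,x_{\mathbf{n}},$$

where the division $\mathbf{n}/\mathbf{N} = (n_1/N_1,\ldots,n_p/N_p)$ is performed element-wise, and the sum denotes the set of nested summations above.

The P-dimensional inverse discrete Fourier transform is given by:

$$F^{-1}(k(P)) = \frac{1}{\prod_{\ell=1}^{p} N_\ell}\sum_{\mathbf{k}=0}^{\mathbf{N}-1} e^{2\pi i\,\mathbf{n}\cdot(\mathbf{k}/\mathbf{N})}\,F(k(P)).$$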
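The vector form of the forward and inverse transforms can be evaluated directly for a small array. The sketch below is a brute-force evaluation for illustration only; the function name `mdft`, the dictionary layout and the example shape are assumptions.

```python
import cmath
import itertools
import math

def mdft(x, shape, inverse=False):
    """P-dimensional DFT of a dict x[(n_1, ..., n_P)] with axis lengths `shape`."""
    sign = 1 if inverse else -1
    grid = list(itertools.product(*(range(n) for n in shape)))
    scale = 1.0 / math.prod(shape) if inverse else 1.0
    out = {}
    for k in grid:
        total = 0j
        for n in grid:
            # k . (n / N), with the division performed element-wise
            phase = sum(kl * nl / Nl for kl, nl, Nl in zip(k, n, shape))
            total += x[n] * cmath.exp(sign * 2j * math.pi * phase)
        out[k] = scale * total
    return out

shape = (2, 3, 4)  # hypothetical N_1, N_2, N_3
x = {n: float(sum(n) % 3) for n in itertools.product(*(range(s) for s in shape))}
X = mdft(x, shape)
x_back = mdft(X, shape, inverse=True)
print(max(abs(x_back[n] - x[n]) for n in x))
```

Applying the inverse to the forward transform recovers the original array up to rounding error. The double loop over the grid makes the cost of this direct evaluation grow with the square of the number of samples, which is why fast algorithms are used in practice.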

The discrete Fourier transform is useful in applications such as data compression, spectral analysis and polynomial multiplication. The DFT is also used as a building block for techniques that exploit properties of a signal's frequency-domain representation, such as the overlap-save and overlap-add fast convolution algorithms. However, direct evaluation of the DFT is computationally more expensive than the other discrete transforms; for the P-D DFT, the computational complexity is given by O(...). Therefore, the fast Fourier transform (FFT) is used to compute the DFT, as it reduces the computational complexity significantly to O(......). At the same time, other discrete transforms are preferred over the DFT.

Using discrete cosine transform (DCT)

For a multidimensional array $x_{n_1,n_2,\ldots,n_p}$ that is a function of p discrete variables $n_\ell = 0,1,\ldots,N_\ell-1$ for $\ell$ in $1,2,\ldots,p$, the P-dimensional forward discrete cosine transform is defined by:

F(k(P))

The P-Dimensional inverse Discrete Cosine Transform is given by:

F−1(k(P))

The DCT is used in data compression applications such as the JPEG image format. The DCT has a high degree of spectral compaction, which makes it well suited to compression applications. A signal's DCT representation tends to have more of its energy concentrated in a small number of coefficients than other transforms such as the DFT. Data storage requirements can therefore be reduced by storing only the DCT outputs that contain significant amounts of energy. The computational complexity of the P-D DCT is O(......). The number of operations required to compute the DCT is less than that required to compute the DFT without the FFT, and fast algorithms for computing the DCT are known as fast cosine transforms (FCT).
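The energy-compaction claim can be illustrated on a small example. The sketch below assumes the orthonormal DCT-II applied separably along each axis, which is one common convention; the text does not fix the variant. The helper names and the 8 x 8 ramp test image are hypothetical.

```python
import cmath
import math

def dct_1d(x):
    """Orthonormal 1-D DCT-II (assumed convention)."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[m] * math.cos(math.pi * (m + 0.5) * k / n) for m in range(n))
        out.append(s * math.sqrt((1.0 if k == 0 else 2.0) / n))
    return out

def dft_1d(x):
    """Unitary 1-D DFT, scaled so that energy is preserved."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            / math.sqrt(n) for k in range(n)]

def separable(transform, x):
    """Apply a 1-D transform along every row, then along every column."""
    rows = [transform(r) for r in x]
    cols = [transform(c) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def top_energy_fraction(coeffs, count):
    """Fraction of total energy held by the `count` largest coefficients."""
    energies = sorted((abs(v) ** 2 for row in coeffs for v in row), reverse=True)
    return sum(energies[:count]) / sum(energies)

ramp = [[float(n1 + n2) for n2 in range(8)] for n1 in range(8)]
dct_fraction = top_energy_fraction(separable(dct_1d, ramp), 3)
dft_fraction = top_energy_fraction(separable(dft_1d, ramp), 3)
print(dct_fraction, dft_fraction)
```

For this smooth ramp, the three largest DCT coefficients hold more than 99% of the energy, while the three largest DFT coefficients hold noticeably less, because the DFT sees a discontinuity at the periodic boundary.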

Using discrete Hartley transform (DHT)

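For a multidimensional array $x_{n_1,n_2,\ldots,n_p}$ that is a function of p discrete variables $n_\ell = 0,1,\ldots,N_\ell-1$ for $\ell$ in $1,2,\ldots,p$, the P-dimensional forward discrete Hartley transform is defined by:

$$F(k(P)) = \sum_{n_1=0}^{N_1-1}\cdots\sum_{n_p=0}^{N_p-1} x_{n_1,n_2,\ldots,n_p}\,\mathrm{cas}\!\left(\frac{2\pi n_1 k_1}{N_1}+\cdots+\frac{2\pi n_p k_p}{N_p}\right)$$

where $k_\ell = 0,1,\ldots,N_\ell-1$ and where $\mathrm{cas}(x) = \cos(x)+\sin(x)$.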

If the discrete Hartley transform is used, complex arithmetic can be avoided. The overall computational complexity of the P-D discrete Hartley transform is O(......) if algorithms similar to the FFT are used; such algorithms are referred to as the fast Hartley transform (FHT).
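A brute-force sketch of the multidimensional DHT with the cas kernel is given below. It is meant only to show that the transform of real data stays real and that the transform is its own inverse up to a scale factor. The function names and the example shape are assumptions.

```python
import itertools
import math

def cas(t):
    """cas(t) = cos(t) + sin(t)."""
    return math.cos(t) + math.sin(t)

def dht(x, shape):
    """P-dimensional DHT of a dict x[(n_1, ..., n_P)] with axis lengths `shape`."""
    grid = list(itertools.product(*(range(n) for n in shape)))
    return {k: sum(x[n] * cas(2 * math.pi * sum(kl * nl / Nl
                                                for kl, nl, Nl in zip(k, n, shape)))
                   for n in grid)
            for k in grid}

shape = (3, 4)  # hypothetical N_1, N_2
x = {n: float((2 * n[0] + n[1] * n[1]) % 5)
     for n in itertools.product(*(range(s) for s in shape))}
X = dht(x, shape)
# Applying the same transform again and dividing by N_1 * N_2 recovers x.
x_back = {n: v / math.prod(shape) for n, v in dht(X, shape).items()}
print(max(abs(x_back[n] - x[n]) for n in x))
```

Every intermediate value is an ordinary real number, so no complex arithmetic is involved at any stage.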

The Discrete Hartley Transform is used in various applications in communications and signal processing areas. Some of these applications include multidimensional filtering, multidimensional spectral analysis, error control coding, adaptive digital filters, image processing etc.

Applications of MixeD Multidimensional Filters

Mixed 3-D filters can be used for enhancement of 3-D spatially planar signals. A 3-D MixeD Cone filter can be designed using 2-D DHT and is shown below.

Review of 3-D Spatially Planar Signals[1]

An M-D signal x(n(M)) is considered to be spatially planar (SP) if it is constant on all surfaces of the form

$\alpha_1 n_1 + \alpha_2 n_2 + \cdots + \alpha_M n_M = d$, for $d \in \mathbb{R}$, where $\mathbb{R}$ is the set of real numbers.

Therefore, a 3-D spatially planar signal x(n(3)) is constant on the planes given by $\alpha_1 n_1 + \alpha_2 n_2 + \alpha_3 n_3 = d$, for $d \in \mathbb{R}$.

It may be shown that the 3-D DFT of an SP signal x(n(3)) yields 3-D DFT frequency-domain coefficients X(k(3)) that are zero everywhere except on the line L(k(3)), where

$L(k(3)) = \{k(3) \in \mathbb{Z} \mid k_1/\alpha_1 = k_2/\alpha_2 = k_3/\alpha_3\}$, where $\mathbb{Z}$ refers to the set of integers.

A 3-D input sequence will be selectively enhanced by a 3-D passband enclosing this line. A cone filter with a thin pyramidal passband is therefore used, approximated by a MixeD filter constructed using the 2-D DHT.
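The concentration of the spectrum on a line can be checked on a small periodic example. In the sketch below, the plane normal `ALPHA`, the grid size `N` and the one-dimensional `profile` are hypothetical. With integer coefficients and a periodic grid, the line condition becomes "k is an integer multiple of the normal, modulo N".

```python
import cmath
import itertools
import math

N = 6
ALPHA = (1, 2, 1)             # hypothetical integer plane normal
profile = [3, 1, 4, 1, 5, 9]  # arbitrary values, one per plane index

grid = list(itertools.product(range(N), repeat=3))
# Spatially planar signal: constant wherever ALPHA . n is constant (mod N)
x = {n: float(profile[sum(a * m for a, m in zip(ALPHA, n)) % N]) for n in grid}

# Brute-force 3-D DFT
X = {k: sum(x[n] * cmath.exp(-2j * math.pi * sum(ki * ni for ki, ni in zip(k, n)) / N)
            for n in grid)
     for k in grid}

support = [k for k, v in X.items() if abs(v) > 1e-6]
line = {tuple(m * a % N for a in ALPHA) for m in range(N)}
print(sorted(support))
```

Every non-zero coefficient falls on the set `line`, the discrete counterpart of the line through the origin along the plane normal. All other coefficients vanish to within rounding error.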

Design of a 3-D MixeD cone filter using 2-D DHT[1]

First, the passband regions on {k1,k2} have to be selected. Close examination of the DFT and the DHT shows that the 3-D DHT of a spatially planar signal x(n(3)) is zero outside a straight line passing through the origin of 3-D DHT (k(3)) space. As for the DFT, this line is $\{k(3) \in \mathbb{Z} \mid k_1/\alpha_1 = k_2/\alpha_2 = k_3/\alpha_3\}$, where $\mathbb{Z}$ refers to the set of integers. All the LDEs that correspond to 2-tuples {k1,k2} lying outside the fan-shaped projection of the thin pyramidal passband on the k1-k2 plane have to be omitted. The half angle of this fan determines the k1-k2 plane bandwidth of the MixeD filter.

Second, the characteristics of the LDE input sequences X(k1,k2,n3) have to be found. The LDE input sequences computed from the 2-D DHT of an SP signal x(n(3)) are real and sinusoidal in the steady state. Since a spatially planar signal is also a linear trajectory signal, it may be written in the form x(n(3)) = x( - , - , ), where = / and = /.

Now, using the shift property of 2-D DHT, we get,

X(k1,k2,n3) = X(k1,k2,0)cosW + X(N - k1, N - k2, 0)sinW, where W = cos2( + )()/N. This equation implies that the passband sequences X(k1,k2,n3) at each tuple {k1,k2} are real sampled sinusoids that may be selectively transmitted by employing LDEs characterized by narrowband bandpass magnitude frequency responses with normalized frequencies given by

v = 2( + )/N. If we choose the bandwidths B{k1,k2} of these narrowband bandpass LDEs to be proportional to the centre frequencies v, so that B{k1,k2} = Kv with K > 0 a constant, the required 3-D pyramidal passband is realized.

This shows that the 2-D DHT can be used to construct a MixeD 3-D filter.
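The sinusoidal behaviour of the LDE input sequences follows from the shift property of the 2-D DHT and can be verified numerically. The sketch below assumes a periodic N x N frame that translates by integer steps `S1` and `S2` per unit of n3. These shifts, the test frame and the helper names are hypothetical and stand in for the trajectory parameters of the text.

```python
import math

N = 8
S1, S2 = 1, 2  # hypothetical integer shifts per step in n3

def cas(t):
    """cas(t) = cos(t) + sin(t)."""
    return math.cos(t) + math.sin(t)

def dht2(frame):
    """2-D DHT over (n1, n2) with a cas kernel."""
    return [[sum(frame[n1][n2] * cas(2 * math.pi * (k1 * n1 + k2 * n2) / N)
                 for n1 in range(N) for n2 in range(N))
             for k2 in range(N)] for k1 in range(N)]

frame0 = [[float((3 * n1 + n2 * n2) % 7) for n2 in range(N)] for n1 in range(N)]

def frame(n3):
    """Linear-trajectory signal x(n1 - S1*n3, n2 - S2*n3, 0), taken periodically."""
    return [[frame0[(n1 - S1 * n3) % N][(n2 - S2 * n3) % N] for n2 in range(N)]
            for n1 in range(N)]

X0 = dht2(frame0)
max_err = 0.0
for n3 in range(1, 5):
    Xn = dht2(frame(n3))
    for k1 in range(N):
        for k2 in range(N):
            W = 2 * math.pi * (k1 * S1 + k2 * S2) * n3 / N
            predicted = (X0[k1][k2] * math.cos(W)
                         + X0[(N - k1) % N][(N - k2) % N] * math.sin(W))
            max_err = max(max_err, abs(Xn[k1][k2] - predicted))
print(max_err)
```

For each fixed tuple {k1,k2}, the sequence in n3 is a real sinusoid whose frequency grows linearly with k1 and k2. This is why narrowband bandpass LDEs with bandwidths proportional to their centre frequencies trace out a pyramidal passband.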

References

- 1. K. S. Knudsen and L. T. Bruton, "Mixed Multidimensional Filters," IEEE Transactions on Circuits and Systems for Video Technology.

- 2. K. S. Knudsen and L. T. Bruton, "Mixed Domain Filtering of Multidimensional Signals," IEEE Transactions on Circuits and Systems for Video Technology, vol. 1, no. 3, September 1991.

- 3. H. K. Kwan and C. L. Chan, "Multidimensional spherically symmetric recursive digital filter design satisfying prescribed magnitude and constant group delay responses," IEE Proceedings G (Electronic Circuits and Systems), vol. 134, no. 4, pp. 187-193, 1987.

- 4. D. E. Dudgeon and R. M. Mersereau, "Multidimensional Digital Signal Processing," Prentice-Hall Signal Processing Series, 1983, ISBN 0136049591.

- 5. R. M. Mersereau, W. F. G. Mecklenbrauker, and T. F. Quatieri, Jr., "McClellan Transformations for 2-D Digital Filtering: I-Design," IEEE Trans. Circuits and Systems, CAS-23, no. 7 (July 1976), 405-14.

- 6. W. F. G. Mecklenbrauker and R. M. Mersereau, "McClellan Transformations for 2-D Digital Filtering: II-Implementation," IEEE Trans. Circuits and Systems, CAS-23, no. 7 (July 1976), 414-22.

- 7. J. H. McClellan and D. S. K. Chan, "A 2-D FIR Filter Structure Derived from the Chebyshev Recursion," IEEE Trans. Circuits and Systems, CAS-24, no. 7 (July 1977), 372-78.

- 8. T. Roh, B. Dumitrescu, and L. Vandenberghe, "Multidimensional FIR Filter Design Via Trigonometric Sum-of-Squares Optimization," IEEE Journal of Selected Topics in Signal Processing, vol. 1, no. 4 (December 2007), 1-10.

- 9. M. P. Ekstrom, R. E. Twogood, and J. W. Woods, "Two-dimensional recursive filter design--A spectral factorization approach," IEEE Transactions on Acoustics, Speech and Signal Processing, vol. 28, no. 1 (1980), 16-26.