Probabilistic metric space

In mathematics, probabilistic metric spaces are a generalization of metric spaces in which the distance no longer takes values in the non-negative real numbers $\mathbb{R}_{\geq 0}$, but in distribution functions.[1]

Let $D^+$ be the set of all probability distribution functions $F$ such that $F(0) = 0$ (here $F$ is a nondecreasing, left-continuous mapping from $\mathbb{R}$ into $[0, 1]$ such that $\sup F = 1$).

Then, given a non-empty set $S$ and a function $F\colon S \times S \to D^+$, where we denote $F(p, q)$ by $F_{p,q}$ for every $(p, q) \in S \times S$, the ordered pair $(S, F)$ is said to be a probabilistic metric space if:

- For all $u$ and $v$ in $S$, $u = v$ if and only if $F_{u,v}(x) = 1$ for all $x > 0$.

- For all $u$ and $v$ in $S$, $F_{u,v} = F_{v,u}$.

- For all $u$, $v$ and $w$ in $S$, $F_{u,v}(x) = 1$ and $F_{v,w}(y) = 1 \Rightarrow F_{u,w}(x + y) = 1$ for $x, y > 0$.[2]
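The three conditions can be spot-checked on a simple case. The sketch below assumes a standard construction that is not spelled out in the text above: an ordinary metric $d$ induces distance distribution functions $F_{p,q}(x) = H(x - d(p, q))$, where $H$ is the left-continuous unit step. The names `step`, `induced_distance_distribution` and `check_axioms` are hypothetical, and the check covers only finitely many points and thresholds, so it illustrates the axioms rather than proving them.

```python
from itertools import product

def step(x):
    """Left-continuous unit step: 0 for x <= 0, 1 for x > 0."""
    return 1.0 if x > 0 else 0.0

def induced_distance_distribution(d):
    """Return F such that F(p, q) is the function x -> step(x - d(p, q))."""
    def F(p, q):
        return lambda x: step(x - d(p, q))
    return F

def check_axioms(points, F, thresholds):
    """Spot-check the three conditions on finite samples (not a proof)."""
    for u, v in product(points, repeat=2):
        # Condition 1: u == v exactly when F(u, v)(x) == 1 for every sampled x > 0.
        always_one = all(F(u, v)(x) == 1.0 for x in thresholds)
        if (u == v) != always_one:
            return False
        # Condition 2: symmetry of the distance distribution.
        if any(F(u, v)(x) != F(v, u)(x) for x in thresholds):
            return False
    for u, v, w in product(points, repeat=3):
        for x, y in product(thresholds, repeat=2):
            # Condition 3: the weak triangle condition.
            if F(u, v)(x) == 1.0 and F(v, w)(y) == 1.0 and F(u, w)(x + y) != 1.0:
                return False
    return True

# Assumed example: three points on the real line with d(p, q) = |p - q|.
points = [0.0, 1.0, 2.5]
thresholds = [0.01, 0.5, 1.0, 2.0, 3.0]
F = induced_distance_distribution(lambda p, q: abs(p - q))
print(check_axioms(points, F, thresholds))
```

In this construction $F_{p,q}(x) = 1$ exactly when $d(p, q) < x$, so the third condition reduces to the ordinary triangle inequality.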

History

Probabilistic metric spaces were initially introduced by Menger, who termed them statistical metrics.[3] Shortly after, Wald criticized the generalized triangle inequality and proposed an alternative one.[4] However, both authors came to the conclusion that in some respects the Wald inequality was too stringent a requirement to impose on all probabilistic metric spaces, a conclusion partly reflected in the work of Schweizer and Sklar.[5] Later, probabilistic metric spaces were found to be well suited for use with fuzzy sets[6] and were further called fuzzy metric spaces.[7]

Probability metric of random variables

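A probability metric $D$ between two random variables $X$ and $Y$ may be defined, for example, as

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, F(x, y) \, dx \, dy$$

where $F(x, y)$ denotes the joint probability density function of the random variables $X$ and $Y$. If $X$ and $Y$ are independent of each other, then the equation above becomes

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, f(x) \, g(y) \, dx \, dy$$

where $f(x)$ and $g(y)$ are the probability density functions of $X$ and $Y$ respectively.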
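The independent case can be approximated numerically. The following is a minimal sketch, assuming $D(X, Y)$ is the double integral of $|x - y| \, f(x) \, g(y)$ and that both densities vanish outside a bounded interval $[lo, hi]$. It uses a plain midpoint rule; the names `probability_metric` and `uniform_density` are hypothetical helpers, not part of any library.

```python
def probability_metric(f, g, lo, hi, n=200):
    """Midpoint-rule approximation of the double integral of
    |x - y| * f(x) * g(y) over the square [lo, hi] x [lo, hi]."""
    h = (hi - lo) / n
    mids = [lo + (i + 0.5) * h for i in range(n)]
    fx = [f(x) for x in mids]  # density of X at the cell midpoints
    gy = [g(y) for y in mids]  # density of Y at the cell midpoints
    total = 0.0
    for x, wx in zip(mids, fx):
        for y, wy in zip(mids, gy):
            total += abs(x - y) * wx * wy
    return total * h * h

def uniform_density(a, b):
    """Density of the uniform distribution on [a, b] (assumed example)."""
    return lambda t: 1.0 / (b - a) if a <= t <= b else 0.0

# Two independent variables, both uniform on [0, 1].
same = probability_metric(uniform_density(0.0, 1.0), uniform_density(0.0, 1.0), 0.0, 1.0)
print(same)
```

For two independent variables that are both uniform on $[0, 1]$, the result is approximately $1/3$, so the value is nonzero even though the two distributions coincide.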

One may easily show that such probability metrics do not satisfy the first metric axiom, or satisfy it if and only if both arguments $X$ and $Y$ are certain events described by Dirac delta probability density functions. In this case:

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, \delta(x - \mu_x) \, \delta(y - \mu_y) \, dx \, dy = |\mu_x - \mu_y|$$

the probability metric simply reduces to the metric between the expected values $\mu_x$, $\mu_y$ of the variables $X$ and $Y$.

For all other random variables $X$, $Y$ the probability metric does not satisfy the identity of indiscernibles condition required of the metric of a metric space. That is, with the two arguments taken as independent and identically distributed:

$$D(X, X) > 0.$$

Example

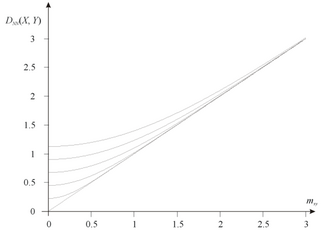

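For example, if the probability distributions of both random variables $X$ and $Y$ are normal distributions (N) with the same standard deviation $\sigma$, integrating $D(X, Y)$ for independent $X$ and $Y$ yields:

$$D_{NN}(X, Y) = \mu_{xy} + \frac{2\sigma}{\sqrt{\pi}} \exp\left(-\frac{\mu_{xy}^2}{4\sigma^2}\right) - \mu_{xy} \operatorname{erfc}\left(\frac{\mu_{xy}}{2\sigma}\right)$$

where $\mu_{xy} = |\mu_x - \mu_y|$ and $\operatorname{erfc}$ is the complementary error function.

In this case:

$$\lim_{\mu_{xy} \to 0} D_{NN}(X, Y) = D_{NN}(X, X) = \frac{2\sigma}{\sqrt{\pi}}.$$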
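The normal case can be evaluated directly. This sketch assumes the closed form obtained by computing $E|X - Y|$ for independent normal variables with means $\mu_x$, $\mu_y$ and common standard deviation $\sigma$, namely $\mu_{xy} + \frac{2\sigma}{\sqrt{\pi}} \exp(-\mu_{xy}^2 / 4\sigma^2) - \mu_{xy} \operatorname{erfc}(\mu_{xy} / 2\sigma)$ with $\mu_{xy} = |\mu_x - \mu_y|$. The function name `d_nn` is hypothetical.

```python
import math

def d_nn(mu_x, mu_y, sigma):
    """Probability metric between two independent normal variables
    with means mu_x, mu_y and a shared standard deviation sigma > 0."""
    mu_xy = abs(mu_x - mu_y)
    gaussian_term = 2.0 * sigma / math.sqrt(math.pi) * math.exp(-mu_xy ** 2 / (4.0 * sigma ** 2))
    tail_term = mu_xy * math.erfc(mu_xy / (2.0 * sigma))
    return mu_xy + gaussian_term - tail_term

# Equal means: the value is 2 * sigma / sqrt(pi), not zero.
print(d_nn(0.0, 0.0, 1.0), 2.0 / math.sqrt(math.pi))

# Means far apart relative to sigma: the value approaches |mu_x - mu_y|.
print(d_nn(10.0, 0.0, 1.0))
```

The first call illustrates that the value stays strictly positive for equal means, while the second shows it approaching the ordinary distance between the means when the distributions barely overlap.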

Probability metric of random vectors

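The probability metric of random variables may be extended to a metric $D(\mathbf{X}, \mathbf{Y})$ of random vectors $\mathbf{X}$, $\mathbf{Y}$ by replacing $|x - y|$ with any metric operator $d(\mathbf{x}, \mathbf{y})$:

$$D(\mathbf{X}, \mathbf{Y}) = \int_{\Omega} \int_{\Omega} d(\mathbf{x}, \mathbf{y}) \, F(\mathbf{x}, \mathbf{y}) \, d\Omega_x \, d\Omega_y$$

where $F(\mathbf{x}, \mathbf{y})$ is the joint probability density function of the random vectors $\mathbf{X}$ and $\mathbf{Y}$. For example, substituting the Euclidean metric for $d(\mathbf{x}, \mathbf{y})$ and assuming the vectors $\mathbf{X}$ and $\mathbf{Y}$ are mutually independent yields:

$$D(\mathbf{X}, \mathbf{Y}) = \int_{\Omega} \int_{\Omega} \sqrt{\sum_i |x_i - y_i|^2} \, f(\mathbf{x}) \, g(\mathbf{y}) \, d\Omega_x \, d\Omega_y$$

where $f(\mathbf{x})$ and $g(\mathbf{y})$ are the probability density functions of $\mathbf{X}$ and $\mathbf{Y}$ respectively.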
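For random vectors the integral is usually easier to estimate by sampling than by quadrature. The sketch below is a Monte Carlo estimate under the assumption that $\mathbf{X}$ and $\mathbf{Y}$ are independent, so that $D(\mathbf{X}, \mathbf{Y})$ is the expected value of $d(\mathbf{x}, \mathbf{y})$ over independent draws. The names `estimate_vector_metric` and `standard_normal_vector` are hypothetical, and the Euclidean metric `math.dist` is only the default choice of $d$.

```python
import math
import random

def estimate_vector_metric(sample_x, sample_y, d=math.dist, n=100_000, seed=0):
    """Monte Carlo estimate of E[d(X, Y)] for independent random vectors.

    sample_x and sample_y each take a random.Random instance and
    return one draw of the corresponding vector as a sequence of floats."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        total += d(sample_x(rng), sample_y(rng))
    return total / n

def standard_normal_vector(dim):
    """Sampler for a vector of dim independent standard normal components."""
    return lambda rng: [rng.gauss(0.0, 1.0) for _ in range(dim)]

# Two independent, identically distributed 2-D standard normal vectors.
estimate = estimate_vector_metric(standard_normal_vector(2), standard_normal_vector(2))
print(estimate)
```

Any other metric can be passed as `d`, for example a Manhattan distance written as `lambda x, y: sum(abs(a - b) for a, b in zip(x, y))`.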

References

- Sherwood, H. (1971). "Complete probabilistic metric spaces". Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 20 (2): 117–128. doi:10.1007/bf00536289. ISSN 0044-3719.

- Schweizer, Berthold; Sklar, Abe (1983). Probabilistic metric spaces. North-Holland series in probability and applied mathematics. New York: North-Holland. ISBN 978-0-444-00666-0.

- Menger, Karl (2003). "Statistical Metrics". Selecta Mathematica. Springer Vienna. pp. 433–435. doi:10.1007/978-3-7091-6045-9_35. ISBN 978-3-7091-7294-0. http://dx.doi.org/10.1007/978-3-7091-6045-9_35.

- Wald, A. (1943). "On a Statistical Generalization of Metric Spaces". Proceedings of the National Academy of Sciences 29 (6): 196–197. doi:10.1073/pnas.29.6.196. PMID 16578072. Bibcode: 1943PNAS...29..196W.

- Menger, Karl (2003). "Statistical Metrics". Selecta Mathematica. Springer Vienna. pp. 433–435. doi:10.1007/978-3-7091-6045-9_35. ISBN 978-3-7091-7294-0. http://dx.doi.org/10.1007/978-3-7091-6045-9_35.

- Mathematics of Fuzzy Sets and Fuzzy Logic. Studies in Fuzziness and Soft Computing. 295. Springer Berlin Heidelberg. 2013. doi:10.1007/978-3-642-35221-8. ISBN 978-3-642-35220-1. http://dx.doi.org/10.1007/978-3-642-35221-8.

- Kramosil, Ivan; Michálek, Jiří (1975). "Fuzzy metrics and statistical metric spaces". Kybernetika 11 (5): 336–344. https://www.kybernetika.cz/content/1975/5/336/paper.pdf.
