Static random-access memory

Static random-access memory (static RAM or SRAM) is a type of random-access memory (RAM) that uses latching circuitry (flip-flop) to store each bit. SRAM is volatile memory; data is lost when power is removed.

The static qualifier differentiates SRAM from dynamic random-access memory (DRAM):

- SRAM will hold its data permanently in the presence of power, while data in DRAM decays in seconds and thus must be periodically refreshed.

- SRAM is faster than DRAM but it is more expensive in terms of silicon area and cost.

- Typically, SRAM is used for the cache and internal registers of a CPU while DRAM is used for a computer's main memory.

History

Semiconductor bipolar SRAM was invented in 1963 by Robert Norman at Fairchild Semiconductor.[1] Metal–oxide–semiconductor SRAM (MOS-SRAM) was invented in 1964 by John Schmidt at Fairchild Semiconductor. The first device was a 64-bit MOS p-channel SRAM.[2][3]

SRAM has been the main driver behind each new CMOS-based fabrication process since the 1960s, when CMOS was invented.[4]

In 1964, Arnold Farber and Eugene Schlig, working for IBM, created a hard-wired memory cell, using a transistor gate and tunnel diode latch. They replaced the latch with two transistors and two resistors, a configuration that became known as the Farber-Schlig cell. That year they submitted an invention disclosure, but it was initially rejected.[5][6] In 1965, Benjamin Agusta and his team at IBM created a 16-bit silicon memory chip based on the Farber-Schlig cell, with 84 transistors, 64 resistors, and 4 diodes.

In April 1969, Intel introduced its first product, the Intel 3101, an SRAM chip intended to replace bulky magnetic-core memory modules. Its capacity was 64 bits[a][7] and it was based on bipolar junction transistors.[8] It was designed using rubylith.[9]

Characteristics

Though it can be characterized as volatile memory, SRAM exhibits data remanence.[10]

SRAM offers a simple data access model and does not require a refresh circuit. Performance and reliability are good and power consumption is low when idle. Since SRAM requires more transistors per bit to implement, it is less dense and more expensive than DRAM and also has a higher power consumption during read or write access. The power consumption of SRAM varies widely depending on how frequently it is accessed.[11]

Applications

Embedded use

Many categories of industrial and scientific subsystems, automotive electronics, and similar embedded systems contain SRAM, which in this context may be referred to as embedded SRAM (ESRAM).[12] Some amount is also embedded in practically all modern appliances, toys, etc. that implement an electronic user interface.

SRAM in its dual-ported form is sometimes used for real-time digital signal processing circuits.[13]

In computers

SRAM is used in personal computers, workstations and peripheral equipment: CPU register files, internal CPU caches and GPU caches, hard disk buffers, etc. LCD screens also may employ SRAM to hold the image displayed. SRAM was used for the main memory of many early personal computers such as the ZX80, TRS-80 Model 100, and VIC-20.

Some early memory cards in the late 1980s to early 1990s used SRAM as a storage medium, which required a lithium battery to retain the contents of the SRAM.[14][15]

Integrated on chip

SRAM may be integrated on chip for:

- the RAM in microcontrollers (usually from around 32 bytes to a megabyte),

- the on-chip caches in most modern processors, like CPUs and GPUs, from a few kilobytes and up to more than a hundred megabytes,

- the registers and parts of the state-machines used in CPUs, GPUs, chipsets and peripherals (see register file),

- scratchpad memory,

- application-specific integrated circuits (ASICs) (usually in the order of kilobytes),

- and in field-programmable gate arrays (FPGAs) and complex programmable logic devices (CPLDs).

Hobbyists

Hobbyists, specifically home-built processor enthusiasts, often prefer SRAM due to the ease of interfacing. It is much easier to work with than DRAM as there are no refresh cycles[16] and the address and data buses are often directly accessible. In addition to buses and power connections, SRAM usually requires only three controls: Chip Enable (CE), Write Enable (WE) and Output Enable (OE). In synchronous SRAM, Clock (CLK) is also included.[17]
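As a rough illustration of why this interface is easy to work with, the sketch below models an asynchronous SRAM driven only by an address, a data word and the three control signals. It is a behavioural toy, not a model of any particular part: the class name `AsyncSram` is hypothetical, the signals are treated as active-high booleans for readability (real chips commonly use active-low pins), and giving a write priority over output enable is an assumption.

```python
class AsyncSram:
    """Toy behavioural model of an asynchronous SRAM (hypothetical, untimed)."""

    def __init__(self, address_lines: int, data_lines: int = 8):
        self.size = 2 ** address_lines        # number of addressable words
        self.mask = (1 << data_lines) - 1     # keeps stored values to the word width
        self.cells = [0] * self.size

    def access(self, address: int, ce: bool, we: bool, oe: bool, data_in=None):
        """Return the word driven onto the data bus, or None if the bus is not driven."""
        if not ce:
            return None                       # chip not selected: ignore everything
        if not 0 <= address < self.size:
            raise ValueError("address out of range")
        if we:                                # assumption: write wins over output enable
            if data_in is None:
                raise ValueError("a write needs data_in")
            self.cells[address] = data_in & self.mask
            return None
        if oe:
            return self.cells[address]        # read: drive the stored word
        return None                           # selected, but outputs disabled


ram = AsyncSram(address_lines=13)             # 8K x 8, as in the common 28-pin parts
ram.access(0x0010, ce=True, we=True, oe=False, data_in=0xA5)
value = ram.access(0x0010, ce=True, we=False, oe=True)
```

No refresh logic or address multiplexing appears anywhere in the model, which is the point of the comparison with DRAM.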

Types of SRAM

Non-volatile SRAM

Non-volatile SRAM (nvSRAM) has standard SRAM functionality, but retains data when power is lost. nvSRAMs are used in networking, aerospace, and medical applications, among others,[18] where the preservation of data is critical and where batteries are impractical.

Pseudostatic RAM

Pseudostatic RAM (PSRAM) is DRAM combined with a self-refresh circuit.[19] It appears externally as slower SRAM, albeit with a density and cost advantage over true SRAM, and without the access complexity of DRAM.

By transistor type

- Bipolar junction transistor (used in TTL and ECL) – very fast but with high power consumption

- MOSFET (used in CMOS) – low power

By numeral system

- Binary

- Ternary

By function

- Asynchronous – independent of clock frequency; data in and data out are controlled by address transition. Examples include the ubiquitous 28-pin 8K × 8 and 32K × 8 chips (often but not always named something along the lines of 6264 and 62C256 respectively), as well as similar products up to 16 Mbit per chip.

- Synchronous – all timings are initiated by the clock edges. Address, data in and other control signals are associated with the clock signals.

In the 1990s, asynchronous SRAM was employed where fast access time was needed. It served as main memory for small cache-less embedded processors used in everything from industrial electronics and measurement systems to hard disks and networking equipment, among many other applications. Today, synchronous SRAM (e.g. DDR SRAM) is generally used instead, much as synchronous DRAM (DDR SDRAM) has displaced asynchronous DRAM. A synchronous memory interface is much faster, as access time can be significantly reduced by employing a pipelined architecture. Furthermore, as DRAM is much cheaper than SRAM, SRAM is often replaced by DRAM, especially when a large volume of data must be stored. SRAM is, however, much faster for random (not block or burst) access. Therefore, SRAM is mainly used for CPU caches, small on-chip memories, FIFOs and other small buffers.

By feature

- Zero bus turnaround (ZBT) – the turnaround is the number of clock cycles it takes to change access to SRAM from write to read and vice versa. The turnaround for ZBT SRAMs or the latency between read and write cycle is zero.

- syncBurst (syncBurst SRAM or synchronous-burst SRAM) – features synchronous burst write access to speed up write operations.

- DDR SRAM – synchronous, single read/write port, double data rate I/O.

- Quad Data Rate SRAM – synchronous, separate read and write ports, quadruple data rate I/O.
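To make the turnaround idea in the ZBT entry concrete, the following sketch counts bus cycles for a sequence of reads and writes, charging a fixed number of idle cycles whenever the direction changes. The function name and the one-cycle-per-operation cost are simplifying assumptions, and the non-zero turnaround of 2 cycles is only an illustrative value, not a figure from any datasheet.

```python
def bus_cycles(ops: str, turnaround: int) -> int:
    """Count cycles for a string of 'R'/'W' operations.

    Assumes each operation occupies one cycle and that every change of
    direction (read to write or write to read) costs `turnaround` idle cycles.
    """
    cycles = 0
    previous = None
    for op in ops:
        if op not in "RW":
            raise ValueError("ops may contain only 'R' and 'W'")
        if previous is not None and op != previous:
            cycles += turnaround              # idle cycles while the bus turns around
        cycles += 1                           # the operation itself
        previous = op
    return cycles


alternating = "RWRWRWRW"
zbt_cycles = bus_cycles(alternating, turnaround=0)           # ZBT: no penalty
conventional_cycles = bus_cycles(alternating, turnaround=2)  # hypothetical penalty
```

The gap between the two counts grows with how often reads and writes are interleaved; for long runs in one direction it almost disappears.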

By stacks

- Single-stack SRAM

- 2.5D SRAM – as of 2025, 3D SRAM technology is still expensive, so SRAM with 2.5D integrated circuit technology may be used.

- 3D SRAM – used on various performance-oriented models of AMD processors.

Design

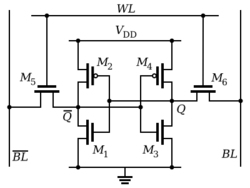

A typical SRAM cell is made up of six MOSFETs, and is often called a 6T SRAM cell. Each bit is stored on four transistors (M1, M2, M3, M4) that form two cross-coupled inverters. This storage cell has two stable states, which are used to denote 0 and 1. Two additional access transistors control access to the storage cell during read and write operations. 6T SRAM is the most common kind of SRAM.[20] In addition to 6T SRAM, other kinds of SRAM use 4, 5, 7,[21] 8, 9,[20] 10[22] (4T, 5T, 7T, 8T, 9T, 10T SRAM), or more transistors per bit.[23][24][25] Four-transistor SRAM is quite common in stand-alone SRAM devices (as opposed to SRAM used for CPU caches), implemented in special processes with an extra layer of polysilicon, allowing for very high-resistance pull-up resistors.[26] The principal drawback of using 4T SRAM is increased static power due to the constant current flow through one of the pull-down transistors (M1 or M2).

Additional transistors per cell are sometimes used to implement more than one (read and/or write) port, which may be useful in certain types of video memory and register files implemented with multi-ported SRAM circuitry.

Generally, the fewer transistors needed per cell, the smaller each cell can be. Since the cost of processing a silicon wafer is relatively fixed, using smaller cells and so packing more bits on one wafer reduces the cost per bit of memory.

Memory cells that use fewer than four transistors are possible; however, such 3T[27][28] or 1T cells are DRAM, not SRAM (even the so-called 1T-SRAM).

Access to the cell is enabled by the word line (WL in the figure), which controls the two access transistors M5 and M6 in the 6T SRAM figure (or M3 and M4 in the 4T SRAM figure). These, in turn, control whether the cell is connected to the bit lines, BL and its complement B̅L̅. The bit lines are used to transfer data for both read and write operations. Although it is not strictly necessary to have two bit lines, both the signal and its inverse are typically provided in order to improve noise margins and speed.

During read accesses, the bit lines are actively driven high and low by the inverters in the SRAM cell. This improves SRAM bandwidth compared to DRAMs – in a DRAM, the bit line is connected to storage capacitors and charge sharing causes the bit line to swing upwards or downwards. The symmetric structure of SRAMs also allows for differential signaling, which makes small voltage swings more easily detectable. Another difference with DRAM that contributes to making SRAM faster is that commercial chips accept all address bits at a time. By comparison, commodity DRAMs have the address multiplexed in two halves, i.e. higher bits followed by lower bits, over the same package pins in order to keep their size and cost down.
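The address multiplexing mentioned above can be sketched as follows: a full address is split into an upper and a lower half that would be sent one after the other over the same pins, whereas an SRAM would simply receive the whole address at once. The function name is hypothetical and an even number of address bits is assumed for simplicity.

```python
def split_address(address: int, address_bits: int) -> tuple[int, int]:
    """Split an address into (upper_half, lower_half) for multiplexed delivery.

    Assumes an even number of address bits so both halves share the same pins.
    """
    if address_bits % 2:
        raise ValueError("this sketch assumes an even number of address bits")
    if not 0 <= address < (1 << address_bits):
        raise ValueError("address out of range")
    half = address_bits // 2
    upper = address >> half                   # sent first
    lower = address & ((1 << half) - 1)       # sent second, over the same pins
    return upper, lower


upper, lower = split_address(0x2A5, address_bits=10)
pins_multiplexed = 10 // 2                    # 5 address pins, used twice
pins_direct = 10                              # 10 address pins, used once
```

The multiplexed form halves the number of address pins at the cost of a second transfer step per access.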

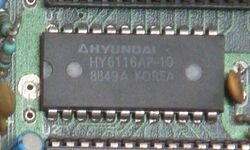

The size of an SRAM with m address lines and n data lines is 2^m words, or 2^m × n bits. The most common word size is 8 bits, meaning that a single byte can be read from or written to each of 2^m different words within the SRAM chip. Several common SRAM chips have 11 address lines (thus a capacity of 2^11 = 2,048 = 2k words) and an 8-bit word, so they are referred to as 2k × 8 SRAM.
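The capacity relation (two to the power of the number of address lines, times the word width) can be checked with a few lines of code. The helper name `sram_capacity` is illustrative only.

```python
def sram_capacity(address_lines: int, data_lines: int) -> tuple[int, int]:
    """Return (words, bits) for an SRAM with the given numbers of lines."""
    if address_lines < 0 or data_lines < 1:
        raise ValueError("need at least 0 address lines and 1 data line")
    words = 2 ** address_lines                # one word per distinct address
    return words, words * data_lines          # total bits = words x word width


words, bits = sram_capacity(address_lines=11, data_lines=8)
print(f"{words} words x 8 bits = {bits} bits")
```

With 13 address lines and 8 data lines the same function gives 8,192 words, matching the 8K × 8 organisation of the asynchronous chips mentioned earlier.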

The dimensions of an SRAM cell on an IC are determined by the minimum feature size of the process used to make the IC.

SRAM operation

An SRAM cell has three states:

- Standby: The circuit is idle.

- Reading: The data has been requested.

- Writing: Updating the contents.

SRAM operating in read and write modes should have readability and write stability, respectively. The three different states work as follows:

Standby

If the word line is not asserted, the access transistors M5 and M6 disconnect the cell from the bit lines. The two cross-coupled inverters formed by M1 – M4 will continue to reinforce each other as long as they are connected to the supply.

Reading

In theory, reading only requires asserting the word line WL and reading the SRAM cell state through a single access transistor and bit line, e.g. M6 and BL. However, bit lines are relatively long and have large parasitic capacitance. To speed up reading, a more complex process is used in practice: the read cycle is started by precharging both bit lines, BL and B̅L̅, to a high (logic 1) voltage. Asserting the word line WL then enables both access transistors M5 and M6, which causes the voltage on one of the bit lines to drop slightly. The BL and B̅L̅ lines then have a small voltage difference between them. A sense amplifier senses which line has the higher voltage and thus determines whether a 1 or a 0 was stored. The higher the sensitivity of the sense amplifier, the faster the read operation. Because NMOS transistors are stronger than PMOS transistors, pulling a bit line down is easier than pulling it up; bit lines are therefore traditionally precharged to a high voltage. Researchers have also investigated precharging to a slightly lower voltage to reduce power consumption.[29][30]
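The read sequence can be sketched numerically: both bit lines start at the precharge voltage, the side of the cell holding a 0 pulls its bit line down slightly, and a comparison stands in for the sense amplifier. The supply voltage, the size of the swing, and the convention that the true storage node connects to the true bit line are all assumptions made for illustration.

```python
def bit_line_voltages(stored_bit: int, vdd: float = 1.0, swing: float = 0.1):
    """Return (bl, bl_bar) after precharge and word-line assertion.

    Assumes the true storage node connects to bl and its complement to bl_bar.
    """
    if stored_bit not in (0, 1):
        raise ValueError("stored_bit must be 0 or 1")
    bl = vdd                                  # precharge both lines high
    bl_bar = vdd
    if stored_bit == 1:
        bl_bar -= swing                       # complement node holds 0: bl_bar droops
    else:
        bl -= swing                           # true node holds 0: bl droops
    return bl, bl_bar


def sense(bl: float, bl_bar: float) -> int:
    """Idealised sense amplifier: report which line is at the higher voltage."""
    return 1 if bl > bl_bar else 0


read_one = sense(*bit_line_voltages(1))
read_zero = sense(*bit_line_voltages(0))
```

Because only the sign of the difference matters, a smaller swing still yields the correct bit in this idealised model; in a real circuit the usable swing is limited by the sensitivity of the sense amplifier.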

Writing

The write cycle begins by applying the value to be written to the bit lines. To write a 0, a 0 is applied to the bit lines, i.e. B̅L̅ is set to 1 and BL to 0. This is similar to applying a reset pulse to an SR latch, which causes the flip-flop to change state. A 1 is written by inverting the values of the bit lines. WL is then asserted and the value that is to be stored is latched in. This works because the bit line input drivers are designed to be much stronger than the relatively weak transistors in the cell itself, so they can easily override the previous state of the cross-coupled inverters. In practice, the access NMOS transistors M5 and M6 have to be stronger than either the bottom NMOS (M1, M3) or the top PMOS (M2, M4) transistors. This is easily achieved, as PMOS transistors are much weaker than NMOS transistors of the same size. Consequently, when one transistor pair (e.g. M3 and M4) is only slightly overridden by the write process, the gate voltage of the opposite transistor pair (M1 and M2) also changes. This means that the M1 and M2 transistors can be overridden more easily, and so on. Thus, the cross-coupled inverters amplify the writing process.
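The three states can be summarised in a small logic-level model of one cell. This is only a sketch: the class and method names are hypothetical, undriven bit lines are represented by `None`, and all analogue effects (drive strength, precharge, sensing) are abstracted away.

```python
class SramCell:
    """Logic-level toy model of a single SRAM cell (hypothetical)."""

    def __init__(self, q: int = 0):
        self.q = q                            # value held by the cross-coupled inverters

    def step(self, word_line: bool, bl=None, bl_bar=None):
        """Apply one access; return the stored bit on a read, otherwise None."""
        if not word_line:
            return None                       # standby: cell isolated, state retained
        if bl is None and bl_bar is None:
            return self.q                     # read: bit lines are not driven externally
        if bl not in (0, 1) or bl_bar != 1 - bl:
            raise ValueError("bit lines must be driven with complementary values")
        self.q = bl                           # write: strong drivers override the cell
        return None


cell = SramCell()
cell.step(word_line=True, bl=1, bl_bar=0)     # write a 1
cell.step(word_line=False, bl=0, bl_bar=1)    # standby: this attempted write is ignored
after_standby = cell.step(word_line=True)     # read back
cell.step(word_line=True, bl=0, bl_bar=1)     # write a 0
after_write = cell.step(word_line=True)
```

The ignored write during standby mirrors the role of the access transistors, which disconnect the cell from the bit lines whenever the word line is not asserted.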

Bus behavior

An SRAM with an access time of 70 ns will output valid data within 70 ns from the time that the address lines are valid. Some SRAM devices have a page mode, where words of a page (256, 512, or 1024 words) can be read sequentially with a significantly shorter access time (typically approximately 30 ns). The page is selected by setting the upper address lines, and words are then read sequentially by stepping through the lower address lines.
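Using the figures quoted above, a back-of-the-envelope comparison of sequential reads with and without page mode might look like the sketch below. It assumes the read starts on a page boundary and that the first word of each page costs the full access time while every other word in the page costs the shorter page access time; the function name is illustrative.

```python
def sequential_read_time_ns(words: int, page_size: int = 256,
                            access_ns: int = 70, page_access_ns: int = 30,
                            page_mode: bool = True) -> int:
    """Estimate the time to read `words` consecutive words.

    Assumes the read starts at a page boundary; the first word of each page
    takes `access_ns`, the remaining words of that page take `page_access_ns`.
    """
    if words < 0 or page_size < 1:
        raise ValueError("words must be >= 0 and page_size >= 1")
    if not page_mode:
        return words * access_ns              # every word pays the full access time
    pages_opened = -(-words // page_size)     # ceiling division
    return pages_opened * access_ns + (words - pages_opened) * page_access_ns


with_page_mode = sequential_read_time_ns(256)
without_page_mode = sequential_read_time_ns(256, page_mode=False)
print(with_page_mode, without_page_mode)
```

Under these assumptions, reading one 256-word page takes a little under half the time it would take with a full access for every word.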

Production challenges

Over 30 years (from 1987 to 2017), with a steadily decreasing transistor size (node size), the footprint-shrinking of the SRAM cell topology itself slowed down, making it harder to pack the cells more densely.[4] One of the reasons is that scaling down transistor size leads to SRAM reliability issues. Careful cell design is necessary to achieve SRAM cells that do not suffer from stability problems, especially when they are being read.[31] With the introduction of FinFET transistors, SRAM cells started to suffer from increasing inefficiencies in cell size.

Besides issues with size, a significant challenge for modern SRAM cells is static current leakage. The current that flows from the positive supply (Vdd) through the cell to ground increases exponentially as the cell's temperature rises. The cell drains power in both active and idle states, wasting energy without doing any useful work. Although over the last 20 years the issue was partially addressed by the data retention voltage (DRV) technique, with reduction rates ranging from 5 to 10, the decrease in node size caused reduction rates to fall to about 2.[4]

With these two issues, it became more challenging to develop energy-efficient and dense SRAM memories, prompting the semiconductor industry to look for alternatives such as STT-MRAM and F-RAM.[4][32]

Research

In 2019, a French institute reported research on an IC for IoT applications fabricated in a 28 nm process.[33] It was based on fully depleted silicon-on-insulator (FD-SOI) transistors, had a two-ported SRAM memory rail for synchronous and asynchronous accesses, and used a selective virtual ground (SVGND). The study claimed to reach an ultra-low SVGND current in sleep and read modes by finely tuning its voltage.[33]

See also

- Flash memory

- Miniature Card, a discontinued SRAM memory card standard

- In-memory processing

Notes

- [a] In the first versions, only 63 bits were usable due to a bug.

References

- [1] "1966: Semiconductor RAMs Serve High-speed Storage Needs". https://www.computerhistory.org/siliconengine/semiconductor-rams-serve-high-speed-storage-needs/.

- [2] "1970: MOS dynamic RAM competes with magnetic core memory on price". https://www.computerhistory.org/siliconengine/mos-dynamic-ram-competes-with-magnetic-core-memory-on-price/.

- [3] "Memory lectures". https://faculty.kfupm.edu.sa/COE/mudawar/coe501/lectures/05-MainMemory.pdf.

- [4] Walker, Andrew (December 17, 2018). "The Trouble with SRAM". EE Times. https://www.eetimes.com/the-trouble-with-sram/.

- [5] Eugene S. Schlig, "Nondestructive memory array", US patent 3354440A, issued 1967-11-21, assigned to IBM.

- [6] Emerson W. Pugh; Lyle R. Johnson; John H. Palmer (1991). IBM's 360 and Early 370 Systems. MIT Press. p. 462. ISBN 9780262161237. https://books.google.com/books?id=MFGj_PT_clIC.

- [7] Volk, Andrew M.; Stoll, Peter A.; Metrovich, Paul (First Quarter 2001). "Recollections of Early Chip Development at Intel". Intel Technology Journal 5 (1): 11. https://www.intel.com/content/dam/www/public/us/en/documents/research/2001-vol05-iss-1-intel-technology-journal.pdf#page=11.

- [8] "Intel at 50: Intel's First Product – the 3101". 2018-05-14. https://newsroom.intel.com/news/intel-at-50-intels-first-product-3101/.

- [9] Intel 64 bit static RAM rubylith : 6, c. 1970, https://www.computerhistory.org/collections/catalog/102718783, retrieved 2023-01-28.

- [10] Sergei Skorobogatov (June 2002). Low temperature data remanence in static RAM. doi:10.48456/tr-536. http://www.cl.cam.ac.uk/techreports/UCAM-CL-TR-536.html. Retrieved 2008-02-27.

- [11] Null, Linda; Lobur, Julia (2006). The Essentials of Computer Organization and Architecture. Jones and Bartlett Publishers. p. 282. ISBN 978-0763737696. https://books.google.com/books?id=QGPHAl9GE-IC. Retrieved 2021-09-14.

- [12] Fahad Arif (Apr 5, 2014). "Microsoft Says Xbox One's ESRAM is a "Huge Win" – Explains How it Allows Reaching 1080p/60 FPS". https://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/.

- [13] Shared Memory Interface with the TMS320C54x DSP, https://www.ti.com/lit/an/spra441/spra441.pdf, retrieved 2019-05-04.

- [14] Stam, Nick (December 21, 1993). "PCMCIA's System Architecture". PC Mag (Ziff Davis, Inc.). https://books.google.com/books?id=x2Fa5SDi0G8C&dq=memory+cards+SRAM+battery&pg=PA270.

- [15] Matzkin, Jonathan (December 26, 1989). "$399 Atari Portfolio Takes on Hand-held Poqet PC". PC Mag (Ziff Davis, Inc.). https://books.google.com/books?id=-Xr7Ic-ivyMC&dq=atari+portfolio+memory+card&pg=PT318.

- [16] "Homemade CPU – from scratch : Svarichevsky Mikhail". https://3.14.by/en/read/homemade-cpus.

- [17] "Embedded Systems Course- module 15: SRAM memory interface to microcontroller in embedded systems". https://www.eeherald.com/section/design-guide/esmod15.html.

- [18] Computer organization (4th ed.). [S.l.]: McGraw-Hill. 1996-07-01. ISBN 978-0-07-114323-3. https://archive.org/details/isbn_9780071143097.

- [19] "3.0V Core Async/Page PSRAM Memory". Micron. https://media.digikey.com/pdf/Data%20Sheets/Micron%20Technology%20Inc%20PDFs/MT45V256KW16PEGA.pdf.

- [20] Rathi, Neetu; Kumar, Anil; Gupta, Neeraj; Singh, Sanjay Kumar (2023). "A Review of Low-Power Static Random Access Memory (SRAM) Designs". 2023 IEEE Devices for Integrated Circuit (DevIC). pp. 455–459. doi:10.1109/DevIC57758.2023.10134887. ISBN 979-8-3503-4726-5.

- [21] Chen, Wai-Kai (October 3, 2018). The VLSI Handbook. CRC Press. ISBN 978-1-4200-0596-7. https://books.google.com/books?id=rMsqBgAAQBAJ&dq=5+transistor+sram&pg=SA1-PA35.

- [22] Kulkarni, Jaydeep P.; Kim, Keejong; Roy, Kaushik (2007). "A 160 mV Robust Schmitt Trigger Based Subthreshold SRAM". IEEE Journal of Solid-State Circuits 42 (10): 2303. doi:10.1109/JSSC.2007.897148. Bibcode: 2007IJSSC..42.2303K.

- [23] "0.45-V operating Vt-variation tolerant 9T/18T dual-port SRAM". March 2011. pp. 1–4. doi:10.1109/ISQED.2011.5770728.

- [24] United States Patent 6975532: Quasi-static random access memory.

- [25] "Area Optimization in 6T and 8T SRAM Cells Considering Vth Variation in Future Processes -- MORITA et al. E90-C (10): 1949 -- IEICE Transactions on Electronics". http://ietele.oxfordjournals.org/cgi/content/abstract/E90-C/10/1949.

- [26] Preston, Ronald P. (2001). "14: Register Files and Caches". The Design of High Performance Microprocessor Circuits. IEEE Press. p. 290. http://courses.engr.illinois.edu/ece512/Papers/Preston_2001_CBF.pdf. Retrieved 2013-02-01.

- [27] United States Patent 6975531: 6F2 3-transistor DRAM gain cell.

- [28] 3T-iRAM(r) Technology.

- [29] Kabir, Hussain Mohammed Dipu; Chan, Mansun (January 2, 2015). "SRAM precharge system for reducing write power". HKIE Transactions 22 (1): 1–8. doi:10.1080/1023697X.2014.970761. https://www.tandfonline.com/doi/full/10.1080/1023697X.2014.970761.

- [30] "CiteSeerX". https://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.119.3735.

- [31] Torrens, Gabriel; Alorda, Bartomeu; Carmona, Cristian; Malagon-Perianez, Daniel; Segura, Jaume; Bota, Sebastia (2019). "A 65-nm Reliable 6T CMOS SRAM Cell with Minimum Size Transistors". IEEE Transactions on Emerging Topics in Computing 7 (3): 447–455. doi:10.1109/TETC.2017.2721932. ISSN 2168-6750. Bibcode: 2019ITETC...7..447T.

- [32] Walker, Andrew (February 6, 2019). "The Race is On". EE Times. https://www.eetimes.com/the-race-is-on/.

- [33] Reda, Boumchedda (May 20, 2019). "Ultra-low voltage and energy efficient SRAM design with new technologies for IoT applications". Grenoble Alpes University. https://tel.archives-ouvertes.fr/tel-03359929/document.