Variation of information

In probability theory and information theory, the variation of information or shared information distance is a measure of the distance between two clusterings (partitions of elements). It is closely related to mutual information; indeed, it is a simple linear expression involving the mutual information. Unlike the mutual information, however, the variation of information is a true metric, in that it obeys the triangle inequality.[1][2][3]

Definition

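Suppose we have two partitions $X$ and $Y$ of a set $A$ into disjoint subsets, namely $X = \{X_1, X_2, \ldots, X_k\}$ and $Y = \{Y_1, Y_2, \ldots, Y_l\}$.

Let:

- $n = \sum_i |X_i| = \sum_j |Y_j| = |A|$

- $p_i = |X_i| / n$ and $q_j = |Y_j| / n$

- $r_{ij} = |X_i \cap Y_j| / n$

Then the variation of information between the two partitions is:

- $VI(X;Y) = -\sum_{i,j} r_{ij} \left[ \log(r_{ij}/p_i) + \log(r_{ij}/q_j) \right]$.

This is equivalent to the shared information distance between the random variables $i$ and $j$ with respect to the uniform probability measure on $A$ defined by $\mu(B) := |B|/n$ for $B \subseteq A$.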
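As a minimal sketch of this definition, the following Python function computes the variation of information directly from the relative block sizes and the relative sizes of the pairwise block intersections. The function name and the representation of a partition as a list of per-element cluster labels are assumptions made for illustration, and the natural logarithm is used (so the result is in nats).

```python
from collections import Counter
from math import log

def variation_of_information(x_labels, y_labels):
    """VI between two clusterings given as per-element cluster labels (natural log)."""
    if len(x_labels) != len(y_labels):
        raise ValueError("both labelings must cover the same elements")
    n = len(x_labels)
    x_sizes = Counter(x_labels)                  # block sizes of the first partition
    y_sizes = Counter(y_labels)                  # block sizes of the second partition
    overlaps = Counter(zip(x_labels, y_labels))  # sizes of non-empty block intersections
    vi = 0.0
    for (i, j), count in overlaps.items():
        r = count / n        # relative size of the intersection
        p = x_sizes[i] / n   # relative size of the block in the first partition
        q = y_sizes[j] / n   # relative size of the block in the second partition
        vi -= r * (log(r / p) + log(r / q))
    return vi

# Two labelings of the same four elements that cut the set in "orthogonal" ways.
example_vi = variation_of_information([0, 0, 1, 1], [0, 1, 0, 1])
```

Only non-empty intersections are visited, so the convention $0 \log 0 = 0$ never has to be handled explicitly. Relabeling the clusters of either partition does not change the result, because only block sizes enter the sum.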

Explicit information content

We can rewrite this definition in terms that explicitly highlight the information content of this metric.

The set of all partitions of a set forms a compact lattice in which the partial order induces two operations, the meet $\wedge$ and the join $\vee$. The maximum $\overline{1}$ is the partition with only one block, i.e., all elements grouped together, and the minimum $\overline{0}$ is the partition consisting of all elements as singletons. The meet of two partitions $X$ and $Y$ is the partition formed by all non-empty pairwise intersections of a block $X_i$ of $X$ with a block $Y_j$ of $Y$. It then follows that $X \wedge Y \subseteq X$ and $X \wedge Y \subseteq Y$, where $\subseteq$ denotes refinement.

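Define the entropy of a partition $X$ as

- $H(X) = -\sum_i p_i \log p_i$,

where $p_i = |X_i| / n$. Clearly, $H(\overline{1}) = 0$ and $H(\overline{0}) = \log n$. The entropy of a partition is a monotone function on the lattice of partitions in the sense that $X \subseteq Y \Rightarrow H(X) \geq H(Y)$, that is, a finer partition never has lower entropy.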

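Then the VI distance between $X$ and $Y$ is given by

- $VI(X;Y) = 2H(X \wedge Y) - H(X) - H(Y)$.

The difference $d(X,Y) \equiv |H(X) - H(Y)|$ is a pseudo-metric, as $d(X,Y) = 0$ does not necessarily imply that $X = Y$. From the definition of $\overline{1}$, it follows that $VI(X;\overline{1}) = H(X)$.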
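The lattice formulation can be sketched in a few lines of Python. Here a partition is represented as a list of disjoint Python sets, the meet is built from all non-empty pairwise block intersections, and the VI distance is taken as twice the entropy of the meet minus the entropies of the two partitions. The helper names (`entropy`, `meet`, `vi_via_meet`) and the example partitions are illustrative assumptions, not part of any library.

```python
from math import log

def entropy(partition, n):
    """Entropy of a partition: -sum over blocks of p log p, with p = block size / n."""
    return -sum((len(block) / n) * log(len(block) / n) for block in partition)

def meet(x, y):
    """Common refinement: all non-empty pairwise intersections of blocks."""
    return [xi & yj for xi in x for yj in y if xi & yj]

def vi_via_meet(x, y):
    """VI as 2 H(meet) - H(x) - H(y)."""
    n = sum(len(block) for block in x)
    return 2 * entropy(meet(x, y), n) - entropy(x, n) - entropy(y, n)

elements = set(range(6))
one_block = [elements]                  # the maximum of the lattice
singletons = [{e} for e in elements]    # the minimum of the lattice

x = [{0, 1, 2}, {3, 4, 5}]
y = [{0, 1}, {2, 3}, {4, 5}]
z = [{0, 1, 3}, {2, 4, 5}]              # same block sizes as x, different blocks

vi_xy = vi_via_meet(x, y)
vi_x_to_max = vi_via_meet(x, one_block)             # reduces to the entropy of x
entropy_gap_xz = abs(entropy(x, 6) - entropy(z, 6)) # pseudo-metric value for x, z
vi_xz = vi_via_meet(x, z)
```

The pair `x`, `z` illustrates why the entropy difference alone is only a pseudo-metric: both partitions have two blocks of three elements, so their entropies coincide, yet they are different partitions and their VI distance is strictly positive.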

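If in the Hasse diagram we draw an edge from every partition to the maximum $\overline{1}$ and assign it a weight equal to the VI distance between the given partition and $\overline{1}$, we can interpret the VI distance as a sum of differences of edge weights to the maximum

- $VI(X;Y) = |VI(X;\overline{1}) - VI(X \wedge Y;\overline{1})| + |VI(Y;\overline{1}) - VI(X \wedge Y;\overline{1})| = d(X, X \wedge Y) + d(Y, X \wedge Y)$.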

For $H(X)$ as defined above, the joint entropy of two partitions coincides with the entropy of the meet

- $H(X,Y) = H(X \wedge Y)$,

and $d(X, X \wedge Y) = H(X \wedge Y | X)$ coincides with the conditional entropy of the meet (intersection) $X \wedge Y$ relative to $X$.

Identities

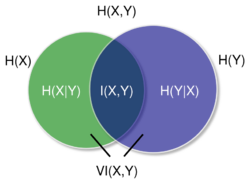

The variation of information satisfies

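- $VI(X;Y) = H(X) + H(Y) - 2I(X,Y)$,

where $H(X)$ is the entropy of $X$, and $I(X,Y)$ is the mutual information between $X$ and $Y$ with respect to the uniform probability measure on $A$. This can be rewritten as

- $VI(X;Y) = H(X,Y) - I(X,Y)$,

where $H(X,Y)$ is the joint entropy of $X$ and $Y$, or

- $VI(X;Y) = H(X|Y) + H(Y|X)$,

where $H(X|Y)$ and $H(Y|X)$ are the respective conditional entropies.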
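A small numerical check can make the equivalence of these three forms concrete. The sketch below computes the entropies, the joint entropy, the mutual information and the two conditional entropies each from its own defining sum, and then evaluates the variation of information in the three ways described above. The function name `vi_three_ways` and the label-list representation of partitions are assumptions for illustration.

```python
from collections import Counter
from math import log

def vi_three_ways(x_labels, y_labels):
    """Return VI computed from three different identities (natural log)."""
    n = len(x_labels)
    px = {i: c / n for i, c in Counter(x_labels).items()}
    qy = {j: c / n for j, c in Counter(y_labels).items()}
    rxy = {ij: c / n for ij, c in Counter(zip(x_labels, y_labels)).items()}

    # Each quantity is computed from its own definition, not derived from the others.
    h_x = -sum(p * log(p) for p in px.values())
    h_y = -sum(q * log(q) for q in qy.values())
    h_xy = -sum(r * log(r) for r in rxy.values())
    mutual = sum(r * log(r / (px[i] * qy[j])) for (i, j), r in rxy.items())
    h_x_given_y = -sum(r * log(r / qy[j]) for (i, j), r in rxy.items())
    h_y_given_x = -sum(r * log(r / px[i]) for (i, j), r in rxy.items())

    return (
        h_x + h_y - 2 * mutual,      # entropies minus twice the mutual information
        h_xy - mutual,               # joint entropy minus the mutual information
        h_x_given_y + h_y_given_x,   # sum of the two conditional entropies
    )

via_entropies, via_joint, via_conditionals = vi_three_ways(
    [0, 0, 0, 1, 1, 2],
    [0, 0, 1, 1, 2, 2],
)
```

The three returned values should agree up to floating-point rounding for any pair of labelings of the same elements.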

The variation of information can also be bounded, either in terms of the number of elements $n$:

- $VI(X;Y) \leq \log(n)$,

or with respect to a maximum number of clusters, $K^*$, in either partition:

- $VI(X;Y) \leq 2\log(K^*)$.

Triangle inequality

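To verify the triangle inequality $VI(X;Z) \leq VI(X;Y) + VI(Y;Z)$, expand using the identity $VI(X;Y) = H(X|Y) + H(Y|X)$. It suffices to prove $H(X|Z) \leq H(X|Y) + H(Y|Z)$, since adding this inequality to the one obtained by exchanging $X$ and $Z$ gives the claim. The right side is bounded below by $H(X|Y,Z) + H(Y|Z) = H(X,Y|Z)$, because conditioning on an additional variable cannot increase entropy, and $H(X,Y|Z)$ is no less than the left side. This is intuitive, as $X,Y|Z$ contains no less randomness than $X|Z$.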
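The argument can also be checked by brute force on a small set. The following sketch enumerates every partition of a four-element set (as tuples of cluster labels), computes the VI distance as the sum of the two conditional entropies, and records the smallest slack in the triangle inequality and in the conditional-entropy inequality used in the proof, over all triples of partitions. The function names and the choice of four elements are assumptions made for illustration; this is a sanity check, not a proof.

```python
from collections import Counter
from itertools import product
from math import log

def conditional_entropy(x_labels, y_labels):
    """Conditional entropy of the first labeling given the second (uniform measure)."""
    n = len(x_labels)
    joint = Counter(zip(x_labels, y_labels))
    y_sizes = Counter(y_labels)
    return -sum((c / n) * log(c / y_sizes[j]) for (_, j), c in joint.items())

def vi(x_labels, y_labels):
    """VI as the sum of the two conditional entropies."""
    return (conditional_entropy(x_labels, y_labels)
            + conditional_entropy(y_labels, x_labels))

def all_partitions(n):
    """Every partition of n elements, encoded as a restricted-growth label tuple."""
    def extend(prefix, max_label):
        if len(prefix) == n:
            yield tuple(prefix)
            return
        # An element may join any existing block or open exactly one new block.
        for label in range(max_label + 2):
            yield from extend(prefix + [label], max(max_label, label))
    yield from extend([], -1)

partitions = list(all_partitions(4))

# Smallest value of the right side minus the left side over all triples.
triangle_slack = min(
    vi(x, y) + vi(y, z) - vi(x, z)
    for x, y, z in product(partitions, repeat=3)
)
conditional_slack = min(
    conditional_entropy(x, y) + conditional_entropy(y, z) - conditional_entropy(x, z)
    for x, y, z in product(partitions, repeat=3)
)
```

Both slack values should be non-negative up to floating-point rounding. A negative value well beyond rounding error would indicate a violation of the inequality.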

References

- P. Arabie, S. A. Boorman, "Multidimensional scaling of measures of distance between partitions", Journal of Mathematical Psychology (1973), vol. 10, no. 2, pp. 148–203, doi:10.1016/0022-2496(73)90012-6

- W. H. Zurek, Nature, vol. 341, p. 119 (1989); W. H. Zurek, Physical Review A, vol. 40, p. 4731 (1989)

- Marina Meila, "Comparing Clusterings by the Variation of Information", Learning Theory and Kernel Machines (2003), Lecture Notes in Computer Science, vol. 2777, pp. 173–187, doi:10.1007/978-3-540-45167-9_14, ISBN 978-3-540-40720-1

Further reading

- Arabie, P.; Boorman, S. A. (1973). "Multidimensional scaling of measures of distance between partitions". Journal of Mathematical Psychology 10 (2): 148–203. doi:10.1016/0022-2496(73)90012-6.

- Meila, Marina (2003). "Comparing Clusterings by the Variation of Information". Learning Theory and Kernel Machines. Lecture Notes in Computer Science 2777: 173–187. doi:10.1007/978-3-540-45167-9_14. ISBN 978-3-540-40720-1.

- Meila, M. (2007). "Comparing clusterings—an information based distance". Journal of Multivariate Analysis 98 (5): 873–895. doi:10.1016/j.jmva.2006.11.013.

- Kingsford, Carl (2009). "Information Theory Notes". http://www.cs.umd.edu/class/spring2009/cmsc858l/InfoTheoryHints.pdf.

- Kraskov, Alexander; Harald Stögbauer; Ralph G. Andrzejak; Peter Grassberger (2003). "Hierarchical Clustering Based on Mutual Information". arXiv:q-bio/0311039.

External links

- Partanalyzer includes a C++ implementation of VI and other metrics and indices for analyzing partitions and clusterings

- C++ implementation with MATLAB mex files
