Singular spectrum analysis

In time series analysis, singular spectrum analysis (SSA) is a nonparametric spectral estimation method. It combines elements of classical time series analysis, multivariate statistics, multivariate geometry, dynamical systems and signal processing. Its roots lie in the classical Karhunen (1946)–Loève (1945, 1978) spectral decomposition of time series and random fields and in the Mañé (1981)–Takens (1981) embedding theorem. SSA can be an aid in the decomposition of time series into a sum of components, each having a meaningful interpretation. The name "singular spectrum analysis" relates to the spectrum of eigenvalues in a singular value decomposition of a covariance matrix, and not directly to a frequency domain decomposition.

Brief history

The origins of SSA and, more generally, of subspace-based methods for signal processing, go back to the eighteenth century (Prony's method). A key development was the formulation of the spectral decomposition of the covariance operator of stochastic processes by Kari Karhunen and Michel Loève in the late 1940s (Loève, 1945; Karhunen, 1947).

Broomhead and King (1986a, b) and Fraedrich (1986) proposed to use SSA and multichannel SSA (M-SSA) in the context of nonlinear dynamics for the purpose of reconstructing the attractor of a system from measured time series. These authors provided an extension and a more robust application of the idea of reconstructing dynamics from a single time series based on the embedding theorem. Several other authors had already applied simple versions of M-SSA to meteorological and ecological data sets (Colebrook, 1978; Barnett and Hasselmann, 1979; Weare and Nasstrom, 1982).

Ghil, Vautard and their colleagues (Vautard and Ghil, 1989; Ghil and Vautard, 1991; Vautard et al., 1992; Ghil et al., 2002) noticed the analogy between the trajectory matrix of Broomhead and King, on the one hand, and the Karhunen–Loeve decomposition (Principal component analysis in the time domain), on the other. Thus, SSA can be used as a time-and-frequency domain method for time series analysis — independently from attractor reconstruction and including cases in which the latter may fail. The survey paper of Ghil et al. (2002) is the basis of the § Methodology section of this article. A crucial result of the work of these authors is that SSA can robustly recover the "skeleton" of an attractor, including in the presence of noise. This skeleton is formed by the least unstable periodic orbits, which can be identified in the eigenvalue spectra of SSA and M-SSA. The identification and detailed description of these orbits can provide highly useful pointers to the underlying nonlinear dynamics.

The so-called ‘Caterpillar’ methodology is a version of SSA that was developed in the former Soviet Union, independently of the mainstream SSA work in the West. This methodology became known in the rest of the world more recently (Danilov and Zhigljavsky, Eds., 1997; Golyandina et al., 2001; Zhigljavsky, Ed., 2010; Golyandina and Zhigljavsky, 2013; Golyandina et al., 2018). ‘Caterpillar-SSA’ emphasizes the concept of separability, a concept that leads, for example, to specific recommendations concerning the choice of SSA parameters. This method is thoroughly described in § SSA as a model-free tool of this article.

Methodology

In practice, SSA is a nonparametric spectral estimation method based on embedding a time series [math]\displaystyle{ \{X(t) : t=1,\ldots,N\} }[/math] in a vector space of dimension [math]\displaystyle{ M }[/math]. SSA proceeds by diagonalizing the [math]\displaystyle{ M\times M }[/math] lag-covariance matrix [math]\displaystyle{ {\textbf C}_X }[/math] of [math]\displaystyle{ X(t) }[/math] to obtain spectral information on the time series, assumed to be stationary in the weak sense. The matrix [math]\displaystyle{ {\textbf C}_X }[/math] can be estimated directly from the data as a Toeplitz matrix with constant diagonals (Vautard and Ghil, 1989), i.e., its entries [math]\displaystyle{ c_{ij} }[/math] depend only on the lag [math]\displaystyle{ |i-j| }[/math]:

- [math]\displaystyle{ c_{ij} = \frac{1}{N-|i-j|} \sum_{t=1}^{N-|i-j|} X(t) X(t+|i-j|). }[/math]

An alternative way to compute [math]\displaystyle{ {\textbf C}_X }[/math], is by using the [math]\displaystyle{ N' \times M }[/math] "trajectory matrix" [math]\displaystyle{ {\textbf D} }[/math] that is formed by [math]\displaystyle{ M }[/math] lag-shifted copies of [math]\displaystyle{ {\it X(t)} }[/math], which are [math]\displaystyle{ N' =N-M+1 }[/math] long; then

- [math]\displaystyle{ {\textbf C}_X = \frac{1}{N'} {\textbf D}^{\rm t} {\textbf D}. }[/math]

The [math]\displaystyle{ M }[/math] eigenvectors [math]\displaystyle{ {\textbf E}_k }[/math] of the lag-covariance matrix [math]\displaystyle{ {\textbf C}_ X }[/math] are called temporal empirical orthogonal functions (EOFs). The eigenvalues [math]\displaystyle{ \lambda_k }[/math] of [math]\displaystyle{ {\textbf C}_{X} }[/math] account for the partial variance in the direction [math]\displaystyle{ {\textbf E}_k }[/math] and the sum of the eigenvalues, i.e., the trace of [math]\displaystyle{ {\textbf C}_{X} }[/math], gives the total variance of the original time series [math]\displaystyle{ X(t) }[/math]. The name of the method derives from the singular values [math]\displaystyle{ \lambda^{1/2}_k }[/math] of [math]\displaystyle{ {\textbf C}_{X}. }[/math]

Decomposition and reconstruction

Projecting the time series onto each EOF yields the corresponding temporal principal components (PCs) [math]\displaystyle{ {\textbf A}_k }[/math]:

- [math]\displaystyle{ A_k(t) = \sum_{j=1}^{M} X(t+j-1) E_k(j). }[/math]

An oscillatory mode is characterized by a pair of nearly equal SSA eigenvalues and associated PCs that are in approximate phase quadrature (Ghil et al., 2002). Such a pair can represent efficiently a nonlinear, anharmonic oscillation. This is due to the fact that a single pair of data-adaptive SSA eigenmodes often will capture better the basic periodicity of an oscillatory mode than methods with fixed basis functions, such as the sines and cosines used in the Fourier transform.

The window width [math]\displaystyle{ M }[/math] determines the longest periodicity captured by SSA. Signal-to-noise separation can be obtained by merely inspecting the slope break in a "scree diagram" of eigenvalues [math]\displaystyle{ \lambda_k }[/math] or singular values [math]\displaystyle{ \lambda^{1/2}_k }[/math] vs. [math]\displaystyle{ k }[/math]. The point [math]\displaystyle{ k^* = S }[/math] at which this break occurs should not be confused with a "dimension" [math]\displaystyle{ D }[/math] of the underlying deterministic dynamics (Vautard and Ghil, 1989).

A Monte-Carlo test (Allen and Smith, 1996; Allen and Robertson, 1996; Groth and Ghil, 2015) can be applied to ascertain the statistical significance of the oscillatory pairs detected by SSA. The entire time series or parts of it that correspond to trends, oscillatory modes or noise can be reconstructed by using linear combinations of the PCs and EOFs, which provide the reconstructed components (RCs) [math]\displaystyle{ {\textbf R}_K }[/math]:

- [math]\displaystyle{ R_{ K}(t) = \frac{1}{M_t} \sum_{k\in {\textit K}} \sum_{j={L_t}}^{U_t} A_k(t-j+1)E_k(j); }[/math]

here [math]\displaystyle{ K }[/math] is the set of EOFs on which the reconstruction is based. The values of the normalization factor [math]\displaystyle{ M_t }[/math], as well as of the lower and upper bound of summation [math]\displaystyle{ L_t }[/math] and [math]\displaystyle{ U_t }[/math], differ between the central part of the time series and the vicinity of its endpoints (Ghil et al., 2002).

Multivariate extension

Multi-channel SSA (or M-SSA) is a natural extension of SSA to an [math]\displaystyle{ L }[/math]-channel time series of vectors or maps with [math]\displaystyle{ N }[/math] data points [math]\displaystyle{ \{X_{l}(t): l=1,\dots, L; t=1,\dots, N\} }[/math]. In the meteorological literature, extended EOF (EEOF) analysis is often assumed to be synonymous with M-SSA. The two methods are both extensions of classical principal component analysis (PCA) but they differ in emphasis: EEOF analysis typically utilizes a number [math]\displaystyle{ L }[/math] of spatial channels much greater than the number [math]\displaystyle{ M }[/math] of temporal lags, thus limiting the temporal and spectral information. In M-SSA, on the other hand, one usually chooses [math]\displaystyle{ L \leq M }[/math]. Often M-SSA is applied to a few leading PCs of the spatial data, with [math]\displaystyle{ M }[/math] chosen large enough to extract detailed temporal and spectral information from the multivariate time series (Ghil et al., 2002). However, Groth and Ghil (2015) have demonstrated possible negative effects of this variance compression on the detection rate of weak signals when the number [math]\displaystyle{ L }[/math] of retained PCs becomes too small. This practice can further affect negatively the judicious reconstruction of the spatio-temporal patterns of such weak signals, and Groth et al. (2016) recommend retaining a maximum number of PCs, i.e., [math]\displaystyle{ L=N }[/math].

Groth and Ghil (2011) have demonstrated that a classical M-SSA analysis suffers from a degeneracy problem, namely the EOFs do not separate well between distinct oscillations when the corresponding eigenvalues are similar in size. This problem is a shortcoming of principal component analysis in general, not just of M-SSA in particular. In order to reduce mixture effects and to improve the physical interpretation, Groth and Ghil (2011) have proposed a subsequent VARIMAX rotation of the spatio-temporal EOFs (ST-EOFs) of the M-SSA. To avoid a loss of spectral properties (Plaut and Vautard 1994), they have introduced a slight modification of the common VARIMAX rotation that does take the spatio-temporal structure of ST-EOFs into account. Alternatively, a closed matrix formulation of the algorithm for the simultaneous rotation of the EOFs by iterative SVD decompositions has been proposed (Portes and Aguirre, 2016).

M-SSA has two forecasting approaches known as recurrent and vector. The discrepancies between these two approaches are attributable to the organization of the single trajectory matrix [math]\displaystyle{ {\textbf X} }[/math] of each series into the block trajectory matrix in the multivariate case. Two trajectory matrices can be organized as either vertical (VMSSA) or horizontal (HMSSA) as was recently introduced in Hassani and Mahmoudvand (2013), and it was shown that these constructions lead to better forecasts. Accordingly, we have four different forecasting algorithms that can be exploited in this version of MSSA (Hassani and Mahmoudvand, 2013).

Prediction

In this subsection, we focus on phenomena that exhibit a significant oscillatory component: repetition increases understanding and hence confidence in a prediction method that is closely connected with such understanding.

Singular spectrum analysis (SSA) and the maximum entropy method (MEM) have been combined to predict a variety of phenomena in meteorology, oceanography and climate dynamics (Ghil et al., 2002, and references therein). First, the “noise” is filtered out by projecting the time series onto a subset of leading EOFs obtained by SSA; the selected subset should include statistically significant, oscillatory modes. Experience shows that this approach works best when the partial variance associated with the pairs of RCs that capture these modes is large (Ghil and Jiang, 1998).

The prefiltered RCs are then extrapolated by least-square fitting to an autoregressive model [math]\displaystyle{ AR[p] }[/math], whose coefficients give the MEM spectrum of the remaining “signal”. Finally, the extended RCs are used in the SSA reconstruction process to produce the forecast values. The reason why this approach – via SSA prefiltering, AR extrapolation of the RCs, and SSA reconstruction – works better than the customary AR-based prediction is explained by the fact that the individual RCs are narrow-band signals, unlike the original, noisy time series [math]\displaystyle{ X(t) }[/math] (Penland et al., 1991; Keppenne and Ghil, 1993). In fact, the optimal order p obtained for the individual RCs is considerably lower than the one given by the standard Akaike information criterion (AIC) or similar ones.

Spatio-temporal gap filling

The gap-filling version of SSA can be used to analyze data sets that are unevenly sampled or contain missing data (Kondrashov and Ghil, 2006; Kondrashov et al. 2010). For a univariate time series, the SSA gap filling procedure utilizes temporal correlations to fill in the missing points. For a multivariate data set, gap filling by M-SSA takes advantage of both spatial and temporal correlations. In either case: (i) estimates of missing data points are produced iteratively, and are then used to compute a self-consistent lag-covariance matrix [math]\displaystyle{ {\textbf C}_X }[/math] and its EOFs [math]\displaystyle{ {\textbf E}_k }[/math]; and (ii) cross-validation is used to optimize the window width [math]\displaystyle{ M }[/math] and the number of leading SSA modes to fill the gaps with the iteratively estimated "signal," while the noise is discarded.

As a model-free tool

The areas where SSA can be applied are very broad: climatology, marine science, geophysics, engineering, image processing, medicine, econometrics among them. Hence different modifications of SSA have been proposed and different methodologies of SSA are used in practical applications such as trend extraction, periodicity detection, seasonal adjustment, smoothing, noise reduction (Golyandina, et al, 2001).

Basic SSA

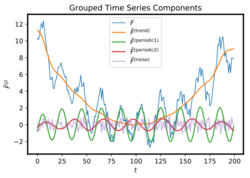

SSA can be used as a model-free technique so that it can be applied to arbitrary time series including non-stationary time series. The basic aim of SSA is to decompose the time series into the sum of interpretable components such as trend, periodic components and noise with no a-priori assumptions about the parametric form of these components.

Consider a real-valued time series [math]\displaystyle{ \mathbb{X}=(x_1,\ldots,x_{N}) }[/math] of length [math]\displaystyle{ N }[/math]. Let [math]\displaystyle{ L }[/math] [math]\displaystyle{ \ (1\lt L \lt N) }[/math] be some integer called the window length and [math]\displaystyle{ K=N-L+1 }[/math].

Main algorithm

1st step: Embedding.

Form the trajectory matrix of the series [math]\displaystyle{ \mathbb{X} }[/math], which is the [math]\displaystyle{ L\!\times\! K }[/math] matrix

- [math]\displaystyle{ \mathbf{X}=[X_1:\ldots:X_K]=(x_{ij})_{i,j=1}^{L,K}= \begin{bmatrix} x_1&x_2&x_3&\ldots&x_{K}\\ x_2&x_3&x_4&\ldots&x_{K+1}\\ x_3&x_4&x_5&\ldots&x_{K+2}\\ \vdots&\vdots&\vdots&\ddots&\vdots\\ x_{L}&x_{L+1}&x_{L+2}&\ldots&x_{N}\\ \end{bmatrix} }[/math]

where [math]\displaystyle{ X_i=(x_{i},\ldots,x_{i+L-1})^\mathrm{T} \; \quad (1\leq i\leq K) }[/math] are lagged vectors of size [math]\displaystyle{ L }[/math]. The matrix [math]\displaystyle{ \mathbf{X} }[/math] is a Hankel matrix which means that [math]\displaystyle{ \mathbf{X} }[/math] has equal elements [math]\displaystyle{ x_{ij} }[/math] on the anti-diagonals [math]\displaystyle{ i+j =\,{\rm const} }[/math].

2nd step: Singular Value Decomposition (SVD).

Perform the singular value decomposition (SVD) of the trajectory matrix [math]\displaystyle{ \mathbf{X} }[/math]. Set [math]\displaystyle{ \mathbf{S}=\mathbf{X} \mathbf{X}^\mathrm{T} }[/math] and denote by [math]\displaystyle{ \lambda_1, \ldots,\lambda_L }[/math] the eigenvalues of [math]\displaystyle{ \mathbf{S} }[/math] taken in the decreasing order of magnitude ([math]\displaystyle{ \lambda_1\geq \ldots \geq \lambda_L\geq 0 }[/math]) and by [math]\displaystyle{ U_1,\ldots,U_L }[/math] the orthonormal system of the eigenvectors of the matrix [math]\displaystyle{ \mathbf{S} }[/math] corresponding to these eigenvalues.

Set [math]\displaystyle{ d= \mathop{\mathrm{rank}} \mathbf{X} =\max\{i,\ \mbox{such that}\ \lambda_i \gt 0\} }[/math] (note that [math]\displaystyle{ d=L }[/math] for a typical real-life series) and [math]\displaystyle{ V_i=\mathbf{X}^\mathrm{T} U_i/\sqrt{\lambda_i} }[/math] [math]\displaystyle{ (i=1,\ldots,d) }[/math]. In this notation, the SVD of the trajectory matrix [math]\displaystyle{ \mathbf{X} }[/math] can be written as

- [math]\displaystyle{ \mathbf{X} = \mathbf{X}_1 + \ldots + \mathbf{X}_d, }[/math]

where

- [math]\displaystyle{ \mathbf{X}_i=\sqrt{\lambda_i}U_i V_i^\mathrm{T} }[/math]

are matrices having rank 1; these are called elementary matrices. The collection [math]\displaystyle{ (\sqrt{\lambda_i},U_i,V_i) }[/math] will be called the [math]\displaystyle{ i }[/math]th eigentriple (abbreviated as ET) of the SVD. Vectors [math]\displaystyle{ U_i }[/math] are the left singular vectors of the matrix [math]\displaystyle{ \mathbf{X} }[/math], numbers [math]\displaystyle{ \sqrt{\lambda_i} }[/math] are the singular values and provide the singular spectrum of [math]\displaystyle{ \mathbf{X} }[/math]; this gives the name to SSA. Vectors [math]\displaystyle{ \sqrt{\lambda_i}V_i=\mathbf{X}^\mathrm{T} U_i }[/math] are called vectors of principal components (PCs).

3rd step: Eigentriple grouping.

Partition the set of indices [math]\displaystyle{ \{1,\ldots,d\} }[/math] into [math]\displaystyle{ m }[/math] disjoint subsets [math]\displaystyle{ I_1,\ldots,I_m }[/math].

Let [math]\displaystyle{ I=\{i_1,\ldots,i_p\} }[/math]. Then the resultant matrix [math]\displaystyle{ \mathbf{X}_I }[/math] corresponding to the group [math]\displaystyle{ I }[/math] is defined as [math]\displaystyle{ \mathbf{X}_I=\mathbf{X}_{i_1}+\ldots+\mathbf{X}_{i_p} }[/math]. The resultant matrices are computed for the groups [math]\displaystyle{ I=I_1, \ldots, I_m }[/math] and the grouped SVD expansion of [math]\displaystyle{ \mathbf{X} }[/math] can now be written as

- [math]\displaystyle{ \mathbf{X}=\mathbf{X}_{I_1}+\ldots+\mathbf{X}_{I_m}. }[/math]

4th step: Diagonal averaging.

Each matrix [math]\displaystyle{ \mathbf{X}_{I_j} }[/math] of the grouped decomposition is hankelized and then the obtained Hankel matrix is transformed into a new series of length [math]\displaystyle{ N }[/math] using the one-to-one correspondence between Hankel matrices and time series. Diagonal averaging applied to a resultant matrix [math]\displaystyle{ \mathbf{X}_{I_k} }[/math] produces a reconstructed series [math]\displaystyle{ \widetilde{\mathbb{X}}^{(k)}=(\widetilde{x}^{(k)}_1,\ldots,\widetilde{x}^{(k)}_N) }[/math]. In this way, the initial series [math]\displaystyle{ x_1,\ldots,x_N }[/math] is decomposed into a sum of [math]\displaystyle{ m }[/math] reconstructed subseries:

- [math]\displaystyle{ x_n = \sum\limits_{k=1}^m \widetilde{x}^{(k)}_n \ \ (n=1,2, \ldots, N). }[/math]

This decomposition is the main result of the SSA algorithm. The decomposition is meaningful if each reconstructed subseries could be classified as a part of either trend or some periodic component or noise.

Theory of SSA separability

The two main questions which the theory of SSA attempts to answer are: (a) what time series components can be separated by SSA, and (b) how to choose the window length [math]\displaystyle{ L }[/math] and make proper grouping for extraction of a desirable component. Many theoretical results can be found in Golyandina et al. (2001, Ch. 1 and 6).

Trend (which is defined as a slowly varying component of the time series), periodic components and noise are asymptotically separable as [math]\displaystyle{ N\rightarrow \infty }[/math]. In practice [math]\displaystyle{ N }[/math] is fixed and one is interested in approximate separability between time series components. A number of indicators of approximate separability can be used, see Golyandina et al. (2001, Ch. 1). The window length [math]\displaystyle{ L }[/math] determines the resolution of the method: larger values of [math]\displaystyle{ L }[/math] provide more refined decomposition into elementary components and therefore better separability. The window length [math]\displaystyle{ L }[/math] determines the longest periodicity captured by SSA. Trends can be extracted by grouping of eigentriples with slowly varying eigenvectors. A sinusoid with frequency smaller than 0.5 produces two approximately equal eigenvalues and two sine-wave eigenvectors with the same frequencies and [math]\displaystyle{ \pi/2 }[/math]-shifted phases.

Separation of two time series components can be considered as extraction of one component in the presence of perturbation by the other component. SSA perturbation theory is developed in Nekrutkin (2010) and Hassani et al. (2011).

Forecasting by SSA

If for some series [math]\displaystyle{ \mathbb{X} }[/math] the SVD step in Basic SSA gives [math]\displaystyle{ d\lt L }[/math], then this series is called time series of rank [math]\displaystyle{ d }[/math] (Golyandina et al., 2001, Ch.5). The subspace spanned by the [math]\displaystyle{ d }[/math] leading eigenvectors is called signal subspace. This subspace is used for estimating the signal parameters in signal processing, e.g. ESPRIT for high-resolution frequency estimation. Also, this subspace determines the linear homogeneous recurrence relation (LRR) governing the series, which can be used for forecasting. Continuation of the series by the LRR is similar to forward linear prediction in signal processing.

Let the series be governed by the minimal LRR [math]\displaystyle{ x_{n}=\sum_{k=1}^d b_k x_{n-k} }[/math]. Let us choose [math]\displaystyle{ L\gt d }[/math], [math]\displaystyle{ U_1,\ldots,U_d }[/math] be the eigenvectors (left singular vectors of the [math]\displaystyle{ L }[/math]-trajectory matrix), which are provided by the SVD step of SSA. Then this series is governed by an LRR [math]\displaystyle{ x_{n}=\sum_{k=1}^{L-1} a_k x_{n-k} }[/math], where [math]\displaystyle{ (a_{L-1},\ldots,a_1)^\mathrm{T} }[/math] are expressed through [math]\displaystyle{ U_1,\ldots,U_d }[/math] (Golyandina et al., 2001, Ch.5), and can be continued by the same LRR.

This provides the basis for SSA recurrent and vector forecasting algorithms (Golyandina et al., 2001, Ch.2). In practice, the signal is corrupted by a perturbation, e.g., by noise, and its subspace is estimated by SSA approximately. Thus, SSA forecasting can be applied for forecasting of a time series component that is approximately governed by an LRR and is approximately separated from the residual.

Multivariate extension

Multi-channel, Multivariate SSA (or M-SSA) is a natural extension of SSA to for analyzing multivariate time series, where the size of different univariate series does not have to be the same. The trajectory matrix of multi-channel time series consists of linked trajectory matrices of separate times series. The rest of the algorithm is the same as in the univariate case. System of series can be forecasted analogously to SSA recurrent and vector algorithms (Golyandina and Stepanov, 2005). MSSA has many applications. It is especially popular in analyzing and forecasting economic and financial time series with short and long series length (Patterson et al., 2011, Hassani et al., 2012, Hassani and Mahmoudvand, 2013). Other multivariate extension is 2D-SSA that can be applied to two-dimensional data like digital images (Golyandina and Usevich, 2010). The analogue of trajectory matrix is constructed by moving 2D windows of size [math]\displaystyle{ L_x \times L_y }[/math].

MSSA and causality

A question that frequently arises in time series analysis is whether one economic variable can help in predicting another economic variable. One way to address this question was proposed by Granger (1969), in which he formalized the causality concept. A comprehensive causality test based on MSSA has recently introduced for causality measurement. The test is based on the forecasting accuracy and predictability of the direction of change of the MSSA algorithms (Hassani et al., 2011 and Hassani et al.,2012).

MSSA and EMH

The MSSA forecasting results can be used in examining the efficient-market hypothesis controversy (EMH). The EMH suggests that the information contained in the price series of an asset is reflected “instantly, fully, and perpetually” in the asset’s current price. Since the price series and the information contained in it are available to all market participants, no one can benefit by attempting to take advantage of the information contained in the price history of an asset by trading in the markets. This is evaluated using two series with different series length in a multivariate system in SSA analysis (Hassani et al. 2010).

MSSA, SSA and business cycles

Business cycles plays a key role in macroeconomics, and are interest for a variety of players in the economy, including central banks, policy-makers, and financial intermediaries. MSSA-based methods for tracking business cycles have been recently introduced, and have been shown to allow for a reliable assessment of the cyclical position of the economy in real-time (de Carvalho et al., 2012 and de Carvalho and Rua, 2017).

MSSA, SSA and unit root

SSA's applicability to any kind of stationary or deterministically trending series has been extended to the case of a series with a stochastic trend, also known as a series with a unit root. In Hassani and Thomakos (2010) and Thomakos (2010) the basic theory on the properties and application of SSA in the case of series of a unit root is given, along with several examples. It is shown that SSA in such series produces a special kind of filter, whose form and spectral properties are derived, and that forecasting the single reconstructed component reduces to a moving average. SSA in unit roots thus provides an `optimizing' non-parametric framework for smoothing series with a unit root. This line of work is also extended to the case of two series, both of which have a unit root but are cointegrated. The application of SSA in this bivariate framework produces a smoothed series of the common root component.

Gap-filling

The gap-filling versions of SSA can be used to analyze data sets that are unevenly sampled or contain missing data (Schoellhamer, 2001; Golyandina and Osipov, 2007).

Schoellhamer (2001) shows that the straightforward idea to formally calculate approximate inner products omitting unknown terms is workable for long stationary time series. Golyandina and Osipov (2007) uses the idea of filling in missing entries in vectors taken from the given subspace. The recurrent and vector SSA forecasting can be considered as particular cases of filling in algorithms described in the paper.

Detection of structural changes

SSA can be effectively used as a non-parametric method of time series monitoring and change detection. To do that, SSA performs the subspace tracking in the following way. SSA is applied sequentially to the initial parts of the series, constructs the corresponding signal subspaces and checks the distances between these subspaces and the lagged vectors formed from the few most recent observations. If these distances become too large, a structural change is suspected to have occurred in the series (Golyandina et al., 2001, Ch.3; Moskvina and Zhigljavsky, 2003).

In this way, SSA could be used for change detection not only in trends but also in the variability of the series, in the mechanism that determines dependence between different series and even in the noise structure. The method have proved to be useful in different engineering problems (e.g. Mohammad and Nishida (2011) in robotics), and has been extended to the multivariate case with corresponding analysis of detection delay and false positive rate.[1]

Relation between SSA and other methods

- Autoregression

- Typical model for SSA is [math]\displaystyle{ x_n=s_n+e_n }[/math], where [math]\displaystyle{ s_n=\sum_{k=1}^r a_k s_{n-k} }[/math] (signal satisfying an LRR) and [math]\displaystyle{ e_n }[/math] is noise. The model of AR is [math]\displaystyle{ x_n=\sum_{k=1}^r a_k x_{n-k}+ e_n }[/math]. Despite these two models look similar they are very different. SSA considers AR as a noise component only. AR(1), which is red noise, is typical model of noise for Monte-Carlo SSA (Allen and Smith, 1996).

- Spectral Fourier Analysis

- In contrast with Fourier analysis with fixed basis of sine and cosine functions, SSA uses an adaptive basis generated by the time series itself. As a result, the underlying model in SSA is more general and SSA can extract amplitude-modulated sine wave components with frequencies different from [math]\displaystyle{ k/N }[/math]. SSA-related methods like ESPRIT can estimate frequencies with higher resolution than spectral Fourier analysis.

- Linear Recurrence Relations

- Let the signal be modeled by a series, which satisfies a linear recurrence relation [math]\displaystyle{ s_{n}=\sum_{k=1}^r a_k s_{n-k} }[/math]; that is, a series that can be represented as sums of products of exponential, polynomial and sine wave functions. This includes the sum of dumped sinusoids model whose complex-valued form is [math]\displaystyle{ s_n=\sum_k C_k \rho_k^n e^{i 2\pi \omega_k n} }[/math]. SSA-related methods allow estimation of frequencies [math]\displaystyle{ \omega_k }[/math] and exponential factors [math]\displaystyle{ \rho_k }[/math] (Golyandina and Zhigljavsky, 2013, Sect 3.8). Coefficients [math]\displaystyle{ C_k }[/math] can be estimated by the least squares method. Extension of the model, where [math]\displaystyle{ C_k }[/math] are replaced by polynomials of [math]\displaystyle{ n }[/math], can be also considered within the SSA-related methods (Badeau et al., 2008).

- Signal Subspace methods

- SSA can be considered as a subspace-based method, since it allows estimation of the signal subspace of dimension [math]\displaystyle{ r }[/math] by [math]\displaystyle{ \mathop{\mathrm{span}}(U_1,\ldots,U_r) }[/math].

- State Space Models

- The main model behind SSA is [math]\displaystyle{ x_n=s_n+e_n }[/math], where [math]\displaystyle{ s_n=\sum_{k=1}^r a_k s_{n-k} }[/math] and [math]\displaystyle{ e_n }[/math] is noise. Formally, this model belongs to the general class of state space models. The specifics of SSA is in the facts that parameter estimation is a problem of secondary importance in SSA and the data analysis procedures in SSA are nonlinear as they are based on the SVD of either trajectory or lag-covariance matrix.

- Independent Component Analysis (ICA)

- SSA is used in blind source separation by ICA as a preprocessing step (Pietilä et al., 2006). On the other hand, ICA can be used as a replacement of the SVD step in the SSA algorithm for achieving better separability (Golyandina and Zhigljavsky, 2013, Sect. 2.5.4).

- Regression

- SSA is able to extract polynomial and exponential trends. However, unlike regression, SSA does not assume any parametric model which may give significant advantage when an exploratory data analysis is performed with no obvious model in hand (Golyandina et al., 2001, Ch.1).

- Linear Filters

- The reconstruction of the series by SSA can be considered as adaptive linear filtration. If the window length [math]\displaystyle{ L }[/math] is small, then each eigenvector [math]\displaystyle{ U_i=(u_1, \ldots, u_L)^\mathrm{T} }[/math] generates a linear filter of width [math]\displaystyle{ 2L-1 }[/math] for reconstruction of the middle of the series [math]\displaystyle{ \widetilde{x}_s }[/math], [math]\displaystyle{ L\le s\le K }[/math]. The filtration is non-causal. However, the so-called Last-point SSA can be used as a causal filter (Golyandina and Zhigljavsky 2013, Sect. 3.9).

- Density Estimation

- Since SSA can be used as a method of data smoothing it can be used as a method of non-parametric density estimation (Golyandina et al., 2012).

See also

References

- ↑ Alanqary, Arwa; Alomar, Abdullah; Shah, Devavrat (2021). "Change Point Detection via Multivariate Singular Spectrum Analysis". Advances in Neural Information Processing Systems 34.

This section may contain an excessive number of citations. (October 2020) (Learn how and when to remove this template message) |

- Akaike, H. (1969): "Fitting autoregressive models for prediction, " Ann. Inst. Stat. Math., 21, 243–247.

- Allen, M.R., and A.W. Robertson (1996): "Distinguishing modulated oscillations from coloured noise in multivariate datasets", Clim. Dyn., 12, 775–784.

- Allen, M.R. and L.A. Smith (1996) "Monte Carlo SSA: detecting irregular oscillations in the presence of colored noise". Journal of Climate, 9 (12), 3373–3404.

- Badeau, R., G. Richard, and B. David (2008): "Performance of ESPRIT for Estimating Mixtures of Complex Exponentials Modulated by Polynomials". IEEE Transactions on signal processing, 56(2), 492–504.

- Barnett, T. P., and K. Hasselmann (1979): "Techniques of linear prediction, with application to oceanic and atmospheric fields in the tropical Pacific, " Rev. Geophys., 17, 949–968.

- Bozzo, E., R. Carniel and D. Fasino (2010): "Relationship between singular spectrum analysis and Fourier analysis: Theory and application to the monitoring of volcanic activity", Comput. Math. Appl. 60(3), 812–820

- Broomhead, D.S., and G.P. King (1986a): "Extracting qualitative dynamics from experimental data", Physica D, 20, 217–236.

- Broomhead, D.S., and G. P. King (1986b): "On the qualitative analysis of experimental dynamical systems". Nonlinear Phenomena and Chaos, Sarkar S (Ed.), Adam Hilger, Bristol, 113-–144.

- Colebrook, J. M., (1978): "Continuous plankton records: Zooplankton and environment, Northeast Atlantic and North Sea," Oceanol. Acta, 1, 9–23.

- Danilov, D. and Zhigljavsky, A. (Eds.) (1997):Principal Components of Time Series: the Caterpillar method, University of St. Petersburg Press. (In Russian.)

- de Carvalho, M., Rodrigues, P. C. and Rua, A. (2012): "Tracking the US business cycle with a singular spectrum analysis". Econ. Lett., 114, 32‒35.

- de Carvalho, M., and Rua, A. (2017): "Real-time nowcasting the US output gap: Singular spectrum analysis at work". Int. J. Forecasting, 33, 185–198.

- Ghil, M., and R. Vautard (1991): "Interdecadal oscillations and the warming trend in global temperature time series", Nature, 350, 324–327.

- Elsner, J.B. and Tsonis, A.A. (1996): Singular Spectrum Analysis. A New Tool in Time Series Analysis, Plenum Press.

- Fraedrich, K. (1986) "Estimating dimensions of weather and climate attractors". J. Atmos. Sci. 43, 419–432.

- Ghil, M., and R. Vautard (1991): "Interdecadal oscillations and the warming trend in global temperature time series", Nature, 350, 324–327.

- Ghil, M. and Jiang, N. (1998): "Recent forecast skill for the El Niño/Southern Oscillation ", Geophys. Res. Lett., 25, 171–174, 1998.

- Ghil, M., R. M. Allen, M. D. Dettinger, K. Ide, D. Kondrashov, et al. (2002) "Advanced spectral methods for climatic time series", Rev. Geophys. 40(1), 3.1–3.41.

- Golyandina, N., A. Korobeynikov and A. Zhigljavsky (2018): Singular Spectrum Analysis with R. Springer Verlag. ISBN:3662573784.

- Golyandina, N., V. Nekrutkin and A. Zhigljavsky (2001): Analysis of Time Series Structure: SSA and related techniques. Chapman and Hall/CRC. ISBN:1-58488-194-1.

- Golyandina, N., and E. Osipov (2007) "The ‘Caterpillar’-SSA method for analysis of time series with missing values", J. Stat. Plan. Inference 137(8), 2642–2653.

- Golyandina, N., A. Pepelyshev and A. Steland (2012): "New approaches to nonparametric density estimation and selection of smoothing parameters", Comput. Stat. Data Anal. 56(7), 2206–2218.

- Golyandina, N. and D. Stepanov (2005): "SSA-based approaches to analysis and forecast of multidimensional time series". In: Proceedings of the 5th St.Petersburg Workshop on Simulation, June 26-July 2, 2005, St. Petersburg State University, St. Petersburg, pp. 293–298.

- Golyandina, N. and K. Usevich (2010): "2D-extension of Singular Spectrum Analysis: algorithm and elements of theory". In: Matrix Methods: Theory, Algorithms and Applications (Eds. V.Olshevsky and E.Tyrtyshnikov). World Scientific Publishing, 449–473.

- Golyandina, N., and A. Zhigljavsky (2013) Singular Spectrum Analysis for time series. Springer Briefs in Statistics, Springer, ISBN:978-3-642-34912-6.

- Groth, A., Feliks, Y., Kondrashov, D., and Ghil, M. (2016): "Interannual variability in the North Atlantic ocean's temperature field and its association with the wind stress forcing", Journal of Climate, doi:10.1175/jcli-d-16-0370.1.

- Groth, A. and M. Ghil (2011): "Multivariate singular spectrum analysis and the road to phase synchronization", Physical Review E 84, 036206, doi:10.1103/PhysRevE.84.036206.

- Groth, A. and M. Ghil (2015): "Monte Carlo Singular Spectrum Analysis (SSA) revisited: Detecting oscillator clusters in multivariate datasets", Journal of Climate, 28, 7873-7893, doi:10.1175/JCLI-D-15-0100.1.

- Harris, T. and H. Yan (2010): "Filtering and frequency interpretations of singular spectrum analysis". Physica D 239, 1958–1967.

- Hassani, H.and D. Thomakos, (2010): "A Review on Singular Spectrum Analysis for Economic and Financial Time Series". Statistics and Its Interface 3(3), 377-397.

- Hassani, H., A. Soofi and A. Zhigljavsky (2011): "Predicting Daily Exchange Rate with Singular Spectrum Analysis".Nonlinear Analysis: Real World Applications 11, 2023-2034.

- Hassani, H., Z. Xu and A. Zhigljavsky (2011): "Singular spectrum analysis based on the perturbation theory". Nonlinear Analysis: Real World Applications 12 (5), 2752-2766.

- Hassani, H., S. Heravi and A. Zhigljavsky (2012): " Forecasting UK industrial production with multivariate singular spectrum analysis". Journal of Forecasting 10.1002/for.2244

- Hassani, H., A. Zhigljavsky., K. Patterson and A. Soofi (2011): " A comprehensive causality test based on the singular spectrum analysis". In: Illari, P.M., Russo, F., Williamson, J. (eds.) Causality in Science, 1st edn., p. 379. Oxford University Press, London.

- Hassani, H., and Mahmoudvand, R. (2013). Multivariate Singular Spectrum Analysis: A General View and New Vector Forecasting Approach;. International Journal of Energy and Statistics 1(1), 55-83.

- Keppenne, C. L. and M. Ghil (1993): "Adaptive filtering and prediction of noisy multivariate signals: An application to subannual variability in atmospheric angular momentum," Intl. J. Bifurcation & Chaos, 3, 625–634.

- Kondrashov, D., and M. Ghil (2006): "Spatio-temporal filling of missing points in geophysical data sets", Nonlin. Processes Geophys., 13, 151–159.

- Kondrashov, D., Y. Shprits, M. Ghil, 2010: " Gap Filling of Solar Wind Data by Singular Spectrum Analysis," Geophys. Res. Lett, 37, L15101,

- Mohammad, Y., and T. Nishida (2011) "On comparing SSA-based change point discovery algorithms". IEEE SII, 938–945.

- Moskvina, V., and A. Zhigljavsky (2003) "An algorithm based on singular spectrum analysis for change-point detection". Commun Stat Simul Comput 32, 319–352.

- Nekrutkin, V. (2010) "Perturbation expansions of signal subspaces for long signals". J. Stat. Interface 3, 297–319.

- Patterson, K., H. Hassani, S. Heravi and A. Zhigljavsky (2011) "Multivariate singular spectrum analysis for forecasting revisions to real-time data". Journal of Applied Statistics 38 (10), 2183-2211.

- Penland, C., Ghil, M., and Weickmann, K. M. (1991): "Adaptive filtering and maximum entropy spectra, with application to changes in atmospheric angular momentum," J. Geophys. Res., 96, 22659–22671.

- Pietilä, A., M. El-Segaier, R. Vigário and E. Pesonen (2006) "Blind source separation of cardiac murmurs from heart recordings". In: Rosca J, et al. (eds) Independent Component Analysis and Blind Signal Separation, Lecture Notes in Computer Science, vol 3889, Springer, pp 470–477.

- Portes, L. L. and Aguirre, L. A. (2016): "Matrix formulation and singular-value decomposition algorithm for structured varimax rotation in multivariate singular spectrum analysis", Physical Review E, 93, 052216, doi:10.1103/PhysRevE.93.052216.

- de Prony, G. (1795) "Essai expérimental et analytique sur les lois de la dilatabilité des fluides élastiques et sur celles de la force expansive de la vapeur de l’eau et la vapeur de l’alkool à différentes températures". J. de l’Ecole Polytechnique, 1(2), 24–76.

- Sanei, S., and H. Hassani (2015) Singular Spectrum Analysis of Biomedical Signals. CRC Press, ISBN:9781466589278 - CAT# K20398.

- Schoellhamer, D. (2001) "Singular spectrum analysis for time series with missing data". Geophys. Res. Lett. 28(16), 3187–3190.

- Thomakos, D. (2010) "Median Unbiased Optimal Smoothing and Trend. Extraction". Journal of Modern Applied Statistical Methods 9,144-159.

- Vautard, R., and M. Ghil (1989): "Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series", Physica D, 35, 395–424.

- Vautard, R., Yiou, P., and M. Ghil (1992): "Singular-spectrum analysis: A toolkit for short, noisy chaotic signals", Physica D, 58, 95-126.

- Weare, B. C., and J. N. Nasstrom (1982): "Examples of extended empirical orthogonal function analyses," Mon. Weather Rev., 110, 784–812.

- Zhigljavsky, A. (Guest Editor) (2010) "Special issue on theory and practice in singular spectrum analysis of time series". Stat. Interface 3(3)

External links

- Singular Spectrum Analysis–Multi-taper Method (SSA-MTM) Toolkit freeware from UCLA.

- kSpectra Toolkit for Mac OS X from SpectraWorks.

- Caterpillar-SSA Papers and software from Gistat Group.

- Efficient implementation of SSA in R

- Examples in R with the Rssa package

- Applied SSA in R

- SSA and Phase Synchronisation in R

- Multivariate singular spectrum filter for tracking business cycles

- Singular Spectrum Analysis Excel Demo With VBA

- Singular Spectrum Analysis tutorial with Matlab

- Multichannel Singular Spectrum Analysis tutorial with Matlab

- Singular Spectrum Analysis in Julia

|