Hilbert transform

In mathematics and signal processing, the Hilbert transform is a specific singular integral that takes a function u(t) of a real variable and produces another function of a real variable, H(u)(t). The Hilbert transform is given by the Cauchy principal value of the convolution with the function [math]\displaystyle{ 1/(\pi t) }[/math] (see § Definition). The Hilbert transform has a particularly simple representation in the frequency domain: it imparts a phase shift of ±90° (±π/2 radians) to every frequency component of a function, the sign of the shift depending on the sign of the frequency (see § Relationship with the Fourier transform). The Hilbert transform is important in signal processing, where it is a component of the analytic representation of a real-valued signal u(t). The Hilbert transform was first introduced by David Hilbert in this setting, to solve a special case of the Riemann–Hilbert problem for analytic functions.

Definition

The Hilbert transform of u can be thought of as the convolution of u(t) with the function h(t) = 1/(πt), known as the Cauchy kernel. Because 1/t is not integrable across t = 0, the integral defining the convolution does not always converge. Instead, the Hilbert transform is defined using the Cauchy principal value (denoted here by p.v.). Explicitly, the Hilbert transform of a function (or signal) u(t) is given by

[math]\displaystyle{ \operatorname{H}(u)(t) = \frac{1}{\pi}\, \operatorname{p.v.} \int_{-\infty}^{+\infty} \frac{u(\tau)}{t - \tau}\,\mathrm{d}\tau, }[/math]

provided this integral exists as a principal value. This is precisely the convolution of u with the tempered distribution p.v. 1/πt.[1] Alternatively, by changing variables, the principal-value integral can be written explicitly[2] as

[math]\displaystyle{ \operatorname{H}(u)(t) = \frac{2}{\pi}\, \lim_{\varepsilon \to 0} \int_\varepsilon^\infty \frac{u(t - \tau) - u(t + \tau)}{2\tau} \,\mathrm{d}\tau. }[/math]
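The symmetric-difference form lends itself to direct numerical evaluation, because the integrand stays bounded as τ → 0 for smooth u. The following is a minimal sketch, not a production routine: the function names, the truncation of the upper limit at `tau_max`, and the midpoint rule are all assumptions made for illustration. It compares the quadrature with the closed-form pair 1/(t² + 1) ↦ t/(t² + 1) listed in the table of selected Hilbert transforms.

```python
import numpy as np

def hilbert_pv(u, t, eps=0.0, tau_max=200.0, d_tau=1e-3):
    """Approximate (2/pi) * int_eps^inf [u(t - tau) - u(t + tau)] / (2 tau) d tau.

    The upper limit is truncated at tau_max (an assumption that is only
    reasonable for functions that decay at infinity), and the midpoint rule
    is used so that tau = 0 is never sampled.
    """
    n = int(round((tau_max - eps) / d_tau))
    tau = eps + (np.arange(n) + 0.5) * d_tau       # midpoints of the sub-intervals
    integrand = (u(t - tau) - u(t + tau)) / (2.0 * tau)
    return (2.0 / np.pi) * np.sum(integrand) * d_tau

def lorentzian(t):
    return 1.0 / (t**2 + 1.0)

for t0 in (-2.0, 0.0, 0.5, 1.0, 3.0):
    approx = hilbert_pv(lorentzian, t0)
    exact = t0 / (t0**2 + 1.0)
    print(f"t = {t0:5.2f}   quadrature = {approx: .6f}   t/(t^2+1) = {exact: .6f}")
```

For slowly decaying or oscillatory inputs the truncation at `tau_max` would need more care than this sketch takes.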

When the Hilbert transform is applied twice in succession to a function u, the result is

[math]\displaystyle{ \operatorname{H}\bigl(\operatorname{H}(u)\bigr)(t) = -u(t), }[/math]

provided the integrals defining both iterations converge in a suitable sense. In particular, the inverse transform is [math]\displaystyle{ \operatorname{H}^3 = -\operatorname{H} }[/math]. This fact can most easily be seen by considering the effect of the Hilbert transform on the Fourier transform of u(t) (see § Relationship with the Fourier transform below).

For an analytic function in the upper half-plane, the Hilbert transform describes the relationship between the real part and the imaginary part of the boundary values. That is, if f(z) is analytic in the upper half complex plane {z : Im{z} > 0}, and u(t) = Re{f (t + 0·i)}, then Im{f(t + 0·i)} = H(u)(t) up to an additive constant, provided this Hilbert transform exists.

Notation

In signal processing the Hilbert transform of u(t) is commonly denoted by [math]\displaystyle{ \hat{u}(t) }[/math].[3] However, in mathematics, this notation is already extensively used to denote the Fourier transform of u(t).[4] Occasionally, the Hilbert transform may be denoted by [math]\displaystyle{ \tilde{u}(t) }[/math]. Furthermore, many sources define the Hilbert transform as the negative of the one defined here.[5]

History

The Hilbert transform arose in Hilbert's 1905 work on a problem Riemann posed concerning analytic functions,[6][7] which has come to be known as the Riemann–Hilbert problem. Hilbert's work was mainly concerned with the Hilbert transform for functions defined on the circle.[8][9] Some of his earlier work related to the discrete Hilbert transform dates back to lectures he gave in Göttingen. The results were later published by Hermann Weyl in his dissertation.[10] Schur improved Hilbert's results about the discrete Hilbert transform and extended them to the integral case.[11] These results were restricted to the spaces L2 and ℓ2. In 1928, Marcel Riesz proved that the Hilbert transform can be defined for u in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] (Lp space) for 1 < p < ∞, that the Hilbert transform is a bounded operator on [math]\displaystyle{ L^p(\mathbb{R}) }[/math] for 1 < p < ∞, and that similar results hold for the Hilbert transform on the circle as well as the discrete Hilbert transform.[12] The Hilbert transform was a motivating example for Antoni Zygmund and Alberto Calderón during their study of singular integrals.[13] Their investigations have played a fundamental role in modern harmonic analysis. Various generalizations of the Hilbert transform, such as the bilinear and trilinear Hilbert transforms, are still active areas of research today.

Relationship with the Fourier transform

The Hilbert transform is a multiplier operator.[14] The multiplier of H is σH(ω) = −i sgn(ω), where sgn is the signum function. Therefore:

[math]\displaystyle{ \mathcal{F}\bigl(\operatorname{H}(u)\bigr)(\omega) = -i \sgn(\omega) \cdot \mathcal{F}(u)(\omega) , }[/math]

where [math]\displaystyle{ \mathcal{F} }[/math] denotes the Fourier transform. Since sgn(x) = sgn(2πx), it follows that this result applies to the three common definitions of [math]\displaystyle{ \mathcal{F} }[/math].

By Euler's formula, [math]\displaystyle{ \sigma_\operatorname{H}(\omega) = \begin{cases} ~~i = e^{+i\pi/2}, & \text{for } \omega \lt 0,\\ ~~ 0, & \text{for } \omega = 0,\\ -i = e^{-i\pi/2}, & \text{for } \omega \gt 0. \end{cases} }[/math]

Therefore, H(u)(t) has the effect of shifting the phase of the negative frequency components of u(t) by +90° (π⁄2 radians) and the phase of the positive frequency components by −90°, and i·H(u)(t) has the effect of restoring the positive frequency components while shifting the negative frequency ones an additional +90°, resulting in their negation (i.e., a multiplication by −1).

When the Hilbert transform is applied twice, the phase of the negative and positive frequency components of u(t) are respectively shifted by +180° and −180°, which are equivalent amounts. The signal is negated; i.e., H(H(u)) = −u, because

[math]\displaystyle{ \left(\sigma_\operatorname{H}(\omega)\right)^2 = e^{\pm i\pi} = -1 \quad \text{for } \omega \neq 0 . }[/math]
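The multiplier description can be tried out on sampled data. The sketch below is an illustration under stated assumptions rather than a reference implementation: it treats one period of a zero-mean periodic signal, uses NumPy's FFT as a stand-in for the Fourier transform, and the helper name `hilbert_fft` is hypothetical. It checks the −90° shift of positive-frequency components and the identity H(H(u)) = −u.

```python
import numpy as np

def hilbert_fft(u):
    """Apply the multiplier -i*sgn(omega) to one period of a sampled periodic signal."""
    U = np.fft.fft(u)
    freqs = np.fft.fftfreq(len(u))             # signed frequencies, cycles per sample
    return np.fft.ifft(-1j * np.sign(freqs) * U).real

n = 1024
t = np.arange(n) / n                            # one period, t in [0, 1)
u = np.cos(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 12 * t)

Hu = hilbert_fft(u)
# cos -> sin and sin -> -cos for positive frequencies
expected = np.sin(2 * np.pi * 5 * t) - 0.5 * np.cos(2 * np.pi * 12 * t)

print(np.max(np.abs(Hu - expected)))            # close to machine precision
print(np.max(np.abs(hilbert_fft(Hu) + u)))      # H(H(u)) = -u for zero-mean u
```

Because the multiplier vanishes at ω = 0, a constant offset in u would be lost after the first application, so the second check relies on u having zero mean.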

Table of selected Hilbert transforms

In the following table, the frequency parameter [math]\displaystyle{ \omega }[/math] is real.

| Signal [math]\displaystyle{ u(t) }[/math] | Hilbert transform[fn 1] [math]\displaystyle{ \operatorname{H}(u)(t) }[/math] |
|---|---|
| [math]\displaystyle{ \sin(\omega t + \varphi) }[/math] [fn 2] | [math]\displaystyle{ \begin{array}{lll} \sin\left(\omega t + \varphi - \tfrac{\pi}{2}\right)=-\cos\left(\omega t + \varphi \right), \quad \omega \gt 0\\ \sin\left(\omega t + \varphi + \tfrac{\pi}{2}\right)=\cos\left(\omega t + \varphi \right), \quad \omega \lt 0 \end{array} }[/math] |
| [math]\displaystyle{ \cos(\omega t + \varphi) }[/math] [fn 2] | [math]\displaystyle{ \begin{array}{lll} \cos\left(\omega t + \varphi - \tfrac{\pi}{2}\right)=\sin\left(\omega t + \varphi\right), \quad \omega \gt 0\\ \cos\left(\omega t + \varphi + \tfrac{\pi}{2}\right)=-\sin\left(\omega t + \varphi\right), \quad \omega \lt 0 \end{array} }[/math] |
| [math]\displaystyle{ e^{i \omega t} }[/math] | [math]\displaystyle{ \begin{array}{lll} e^{i\left(\omega t - \tfrac{\pi}{2}\right)}, \quad \omega \gt 0\\ e^{i\left(\omega t + \tfrac{\pi}{2}\right)}, \quad \omega \lt 0 \end{array} }[/math] |
| [math]\displaystyle{ e^{-i \omega t} }[/math] | [math]\displaystyle{ \begin{array}{lll} e^{-i\left(\omega t - \tfrac{\pi}{2}\right)}, \quad \omega \gt 0\\ e^{-i\left(\omega t + \tfrac{\pi}{2}\right)}, \quad \omega \lt 0 \end{array} }[/math] |
| [math]\displaystyle{ 1 \over t^2 + 1 }[/math] | [math]\displaystyle{ t \over t^2 + 1 }[/math] |
| [math]\displaystyle{ e^{-t^2} }[/math] | [math]\displaystyle{ \frac{2}{\sqrt{\pi\,}} F(t) }[/math] (see Dawson function) |
| Sinc function [math]\displaystyle{ \sin(t) \over t }[/math] | [math]\displaystyle{ 1 - \cos(t)\over t }[/math] |
| Dirac delta function [math]\displaystyle{ \delta(t) }[/math] | [math]\displaystyle{ {1 \over \pi t} }[/math] |
| Characteristic function [math]\displaystyle{ \chi_{[a,b]}(t) }[/math] | [math]\displaystyle{ { \frac{1}{\,\pi\,}\ln \left\vert \frac{t - a}{t - b}\right\vert } }[/math] |

Notes

- [fn 1] Some authors (e.g., Bracewell) use our −H as their definition of the forward transform. A consequence is that the right column of this table would be negated.

- [fn 2] The Hilbert transform of the sin and cos functions can be defined by taking the principal value of the integral at infinity. This definition agrees with the result of defining the Hilbert transform distributionally.

An extensive table of Hilbert transforms is available.[15] Note that the Hilbert transform of a constant is zero.

Domain of definition

It is by no means obvious that the Hilbert transform is well-defined at all, as the improper integral defining it must converge in a suitable sense. However, the Hilbert transform is well-defined for a broad class of functions, namely those in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] for 1 < p < ∞.

More precisely, if u is in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] for 1 < p < ∞, then the limit defining the improper integral

[math]\displaystyle{ \operatorname{H}(u)(t) = \frac{2}{\pi} \lim_{\varepsilon \to 0} \int_\varepsilon^\infty \frac{u(t - \tau) - u(t + \tau)}{2\tau}\,d\tau }[/math]

exists for almost every t. The limit function is also in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] and is in fact the limit in the mean of the improper integral as well. That is,

[math]\displaystyle{ \frac{2}{\pi} \int_\varepsilon^\infty \frac{u(t - \tau) - u(t + \tau)}{2\tau}\,\mathrm{d}\tau \to \operatorname{H}(u)(t) }[/math]

as ε → 0 in the Lp norm, as well as pointwise almost everywhere, by the Titchmarsh theorem.[16]

In the case p = 1, the Hilbert transform still converges pointwise almost everywhere, but may itself fail to be integrable, even locally.[17] In particular, convergence in the mean does not in general happen in this case. The Hilbert transform of an L1 function does converge, however, in L1-weak, and the Hilbert transform is a bounded operator from L1 to L1,w.[18] (In particular, since the Hilbert transform is also a multiplier operator on L2, Marcinkiewicz interpolation and a duality argument furnish an alternative proof that H is bounded on Lp.)

Properties

Boundedness

If 1 < p < ∞, then the Hilbert transform on [math]\displaystyle{ L^p(\mathbb{R}) }[/math] is a bounded linear operator, meaning that there exists a constant Cp such that

[math]\displaystyle{ \left\|\operatorname{H}u\right\|_p \le C_p \left\|u\right\|_p }[/math]

for all [math]\displaystyle{ u \isin L^p(\mathbb{R}) }[/math].[19]

The best constant [math]\displaystyle{ C_p }[/math] is given by[20] [math]\displaystyle{ C_p = \begin{cases} \tan \frac{\pi}{2p} & \text{for} ~ 1 \lt p \leq 2, \\[4pt] \cot \frac{\pi}{2p} & \text{for} ~ 2 \lt p \lt \infty. \end{cases} }[/math]

An easy way to find the best [math]\displaystyle{ C_p }[/math] for [math]\displaystyle{ p }[/math] being a power of 2 is through the so-called Cotlar's identity that [math]\displaystyle{ (\operatorname{H}f)^2 =f^2 +2\operatorname{H}(f\operatorname{H}f) }[/math] for all real valued f. The same best constants hold for the periodic Hilbert transform.
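The piecewise formula for the best constant is easy to tabulate. The short sketch below (the function name `best_constant` is a hypothetical choice) evaluates it and shows numerically that the constant equals 1 at p = 2 and takes the same value at Hölder-conjugate exponents p and q = p/(p − 1), as one would expect from a duality argument.

```python
import math

def best_constant(p):
    """Best L^p bound C_p for the Hilbert transform, 1 < p < infinity."""
    if not 1.0 < p < math.inf:
        raise ValueError("p must satisfy 1 < p < infinity")
    angle = math.pi / (2.0 * p)
    # tan(pi/2p) for 1 < p <= 2, cot(pi/2p) for 2 < p < infinity
    return math.tan(angle) if p <= 2.0 else 1.0 / math.tan(angle)

for p in (1.25, 4.0 / 3.0, 2.0, 4.0, 5.0):
    q = p / (p - 1.0)                           # Hölder conjugate exponent
    print(f"p = {p:.4f}  C_p = {best_constant(p):.6f}  C_q = {best_constant(q):.6f}")
```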

The boundedness of the Hilbert transform implies the [math]\displaystyle{ L^p(\mathbb{R}) }[/math] convergence of the symmetric partial sum operator [math]\displaystyle{ S_R f = \int_{-R}^R \hat{f}(\xi) e^{2\pi i x\xi} \, \mathrm{d}\xi }[/math]

to f in [math]\displaystyle{ L^p(\mathbb{R}) }[/math].[21]

Anti-self adjointness

The Hilbert transform is an anti-self adjoint operator relative to the duality pairing between [math]\displaystyle{ L^p(\mathbb{R}) }[/math] and the dual space [math]\displaystyle{ L^q(\mathbb{R}) }[/math], where p and q are Hölder conjugates and 1 < p, q < ∞. Symbolically,

[math]\displaystyle{ \langle \operatorname{H} u, v \rangle = \langle u, -\operatorname{H} v \rangle }[/math]

for [math]\displaystyle{ u \isin L^p(\mathbb{R}) }[/math] and [math]\displaystyle{ v \isin L^q(\mathbb{R}) }[/math].[22]

Inverse transform

The Hilbert transform is an anti-involution,[23] meaning that

[math]\displaystyle{ \operatorname{H}\bigl(\operatorname{H}\left(u\right)\bigr) = -u }[/math]

provided each transform is well-defined. Since H preserves the space [math]\displaystyle{ L^p(\mathbb{R}) }[/math], this implies in particular that the Hilbert transform is invertible on [math]\displaystyle{ L^p(\mathbb{R}) }[/math], and that

[math]\displaystyle{ \operatorname{H}^{-1} = -\operatorname{H} }[/math]

Complex structure

Because H² = −I (where I is the identity operator) on the real Banach space of real-valued functions in [math]\displaystyle{ L^p(\mathbb{R}) }[/math], the Hilbert transform defines a linear complex structure on this Banach space. In particular, when p = 2, the Hilbert transform gives the Hilbert space of real-valued functions in [math]\displaystyle{ L^2(\mathbb{R}) }[/math] the structure of a complex Hilbert space.

The (complex) eigenstates of the Hilbert transform admit representations as holomorphic functions in the upper and lower half-planes in the Hardy space H2 by the Paley–Wiener theorem.

Differentiation

Formally, the derivative of the Hilbert transform is the Hilbert transform of the derivative, i.e. these two linear operators commute:

[math]\displaystyle{ \operatorname{H}\left(\frac{ \mathrm{d}u}{\mathrm{d}t}\right) = \frac{\mathrm d}{\mathrm{d}t}\operatorname{H}(u) }[/math]

Iterating this identity,

[math]\displaystyle{ \operatorname{H}\left(\frac{\mathrm{d}^ku}{\mathrm{d}t^k}\right) = \frac{\mathrm{d}^k}{\mathrm{d}t^k}\operatorname{H}(u) }[/math]

This is rigorously true as stated provided u and its first k derivatives belong to [math]\displaystyle{ L^p(\mathbb{R}) }[/math].[24] One can check this easily in the frequency domain, where differentiation becomes multiplication by iω (up to a constant fixed by the Fourier transform convention), which commutes with the multiplier −i sgn(ω).

Convolutions

The Hilbert transform can formally be realized as a convolution with the tempered distribution[25]

[math]\displaystyle{ h(t) = \operatorname{p.v.} \frac{1}{ \pi \, t } }[/math]

Thus formally,

[math]\displaystyle{ \operatorname{H}(u) = h*u }[/math]

However, a priori this may only be defined for u a distribution of compact support. It is possible to work somewhat rigorously with this since compactly supported functions (which are distributions a fortiori) are dense in Lp. Alternatively, one may use the fact that h(t) is the distributional derivative of the function log|t|/π; to wit

[math]\displaystyle{ \operatorname{H}(u)(t) = \frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{1}{\pi} \left(u*\log\bigl|\cdot\bigr|\right)(t)\right) }[/math]

For most operational purposes the Hilbert transform can be treated as a convolution. For example, in a formal sense, the Hilbert transform of a convolution is the convolution of the Hilbert transform of either one of the factors with the other factor:

[math]\displaystyle{ \operatorname{H}(u*v) = \operatorname{H}(u)*v = u*\operatorname{H}(v) }[/math]

This is rigorously true if u and v are compactly supported distributions since, in that case,

[math]\displaystyle{ h*(u*v) = (h*u)*v = u*(h*v) }[/math]

By passing to an appropriate limit, it is thus also true if u ∈ Lp and v ∈ Lq provided that

[math]\displaystyle{ 1 \lt \frac{1}{p} + \frac{1}{q} }[/math]

from a theorem due to Titchmarsh.[26]

Invariance

The Hilbert transform has the following invariance properties on [math]\displaystyle{ L^2(\mathbb{R}) }[/math].

- It commutes with translations. That is, it commutes with the operators Ta f(x) = f(x + a) for all a in [math]\displaystyle{ \mathbb{R}. }[/math]

- It commutes with positive dilations. That is, it commutes with the operators Mλ f (x) = f (λ x) for all λ > 0.

- It anticommutes with the reflection R f (x) = f (−x).

Up to a multiplicative constant, the Hilbert transform is the only bounded operator on L2 with these properties.[27]

In fact there is a wider set of operators that commute with the Hilbert transform. The group [math]\displaystyle{ \text{SL}(2,\mathbb{R}) }[/math] acts by unitary operators Ug on the space [math]\displaystyle{ L^2(\mathbb{R}) }[/math] by the formula

[math]\displaystyle{ \operatorname{U}_{g}^{-1} f(x) = \frac{1}{ c x + d } \, f \left( \frac{ ax + b }{ cx + d } \right) \,,\qquad g = \begin{bmatrix} a & b \\ c & d \end{bmatrix} ~,\qquad \text{ for }~ a d - b c = \pm 1 . }[/math]

This unitary representation is an example of a principal series representation of [math]\displaystyle{ ~\text{SL}(2,\mathbb{R})~. }[/math] In this case it is reducible, splitting as the orthogonal sum of two invariant subspaces, Hardy space [math]\displaystyle{ H^2(\mathbb{R}) }[/math] and its conjugate. These are the spaces of L2 boundary values of holomorphic functions on the upper and lower halfplanes. [math]\displaystyle{ H^2(\mathbb{R}) }[/math] and its conjugate consist of exactly those L2 functions with Fourier transforms vanishing on the negative and positive parts of the real axis respectively. Since the Hilbert transform is equal to H = −i (2P − I), with P being the orthogonal projection from [math]\displaystyle{ L^2(\mathbb{R}) }[/math] onto [math]\displaystyle{ \operatorname{H}^2(\mathbb{R}), }[/math] and I the identity operator, it follows that [math]\displaystyle{ \operatorname{H}^2(\mathbb{R}) }[/math] and its orthogonal complement are eigenspaces of H for the eigenvalues ±i. In other words, H commutes with the operators Ug. The restrictions of the operators Ug to [math]\displaystyle{ \operatorname{H}^2(\mathbb{R}) }[/math] and its conjugate give irreducible representations of [math]\displaystyle{ \text{SL}(2,\mathbb{R}) }[/math] – the so-called limit of discrete series representations.[28]

Extending the domain of definition

Hilbert transform of distributions

It is further possible to extend the Hilbert transform to certain spaces of distributions (Pandey 1996). Since the Hilbert transform commutes with differentiation, and is a bounded operator on Lp, H restricts to give a continuous transform on the inverse limit of Sobolev spaces:

[math]\displaystyle{ \mathcal{D}_{L^p} = \underset{n \to \infty}{\underset{\longleftarrow}{\lim}} W^{n,p}(\mathbb{R}) }[/math]

The Hilbert transform can then be defined on the dual space of [math]\displaystyle{ \mathcal{D}_{L^p} }[/math], denoted [math]\displaystyle{ \mathcal{D}_{L^p}' }[/math], consisting of Lp distributions. This is accomplished by the duality pairing:

For [math]\displaystyle{ u\in \mathcal{D}'_{L^p} }[/math], define:

[math]\displaystyle{ \operatorname{H}(u)\in \mathcal{D}'_{L^p} \ \text{by} \quad \langle \operatorname{H}u, v \rangle \ \triangleq \ \langle u, -\operatorname{H}v\rangle,\ \text{for all} \ v\in\mathcal{D}_{L^p} . }[/math]

It is possible to define the Hilbert transform on the space of tempered distributions as well by an approach due to Gel'fand and Shilov,[29] but considerably more care is needed because of the singularity in the integral.

Hilbert transform of bounded functions

The Hilbert transform can be defined for functions in [math]\displaystyle{ L^\infty (\mathbb{R}) }[/math] as well, but it requires some modifications and caveats. Properly understood, the Hilbert transform maps [math]\displaystyle{ L^\infty (\mathbb{R}) }[/math] to the Banach space of bounded mean oscillation (BMO) classes.

Interpreted naïvely, the Hilbert transform of a bounded function is clearly ill-defined. For instance, with u = sgn(x), the integral defining H(u) diverges almost everywhere to ±∞. To alleviate such difficulties, the Hilbert transform of an L∞ function is therefore defined by the following regularized form of the integral

[math]\displaystyle{ \operatorname{H}(u)(t) = \operatorname{p.v.} \int_{-\infty}^\infty u(\tau)\left\{h(t - \tau)- h_0(-\tau)\right\} \, \mathrm{d}\tau }[/math]

where as above h(x) = 1/πx and

[math]\displaystyle{ h_0(x) = \begin{cases} 0 & \text{for} ~ |x| \lt 1 \\ \frac{1}{\pi \, x} & \text{for} ~ |x| \ge 1 \end{cases} }[/math]

The modified transform H agrees with the original transform up to an additive constant on functions of compact support from a general result by Calderón and Zygmund.[30] Furthermore, the resulting integral converges pointwise almost everywhere, and with respect to the BMO norm, to a function of bounded mean oscillation.

A deep result of Fefferman's work[31] is that a function is of bounded mean oscillation if and only if it has the form f + H(g) for some [math]\displaystyle{ f,g \isin L^\infty (\mathbb{R}) }[/math].

Conjugate functions

The Hilbert transform can be understood in terms of a pair of functions f(x) and g(x) such that the function [math]\displaystyle{ F(x) = f(x) + i\,g(x) }[/math] is the boundary value of a holomorphic function F(z) in the upper half-plane.[32] Under these circumstances, if f and g are sufficiently integrable, then one is the Hilbert transform of the other.

Suppose that [math]\displaystyle{ f \isin L^p(\mathbb{R}). }[/math] Then, by the theory of the Poisson integral, f admits a unique harmonic extension into the upper half-plane, and this extension is given by

[math]\displaystyle{ u(x + iy) = u(x, y) = \frac{1}{\pi} \int_{-\infty}^\infty f(s)\;\frac{y}{(x - s)^2 + y^2} \; \mathrm{d}s }[/math]

which is the convolution of f with the Poisson kernel

[math]\displaystyle{ P(x, y) = \frac{ y }{ \pi\, \left( x^2 + y^2 \right) } }[/math]

Furthermore, there is a unique harmonic function v defined in the upper half-plane such that F(z) = u(z) + i v(z) is holomorphic and [math]\displaystyle{ \lim_{y \to \infty} v\,(x + i\,y) = 0 }[/math]

This harmonic function is obtained from f by taking a convolution with the conjugate Poisson kernel

[math]\displaystyle{ Q(x, y) = \frac{ x }{ \pi\, \left(x^2 + y^2\right) } . }[/math]

Thus [math]\displaystyle{ v(x, y) = \frac{1}{\pi}\int_{-\infty}^\infty f(s)\;\frac{x - s}{\,(x - s)^2 + y^2\,}\;\mathrm{d}s . }[/math]

Indeed, the real and imaginary parts of the Cauchy kernel are [math]\displaystyle{ \frac{i}{\pi\,z} = P(x, y) + i\,Q(x, y) }[/math]

so that F = u + i v is holomorphic by Cauchy's integral formula.

The function v obtained from u in this way is called the harmonic conjugate of u. The (non-tangential) boundary limit of v(x,y) as y → 0 is the Hilbert transform of f. Thus, succinctly, [math]\displaystyle{ \operatorname{H}(f) = \lim_{y \to 0} Q(-, y) \star f }[/math]
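The boundary-limit statement can be observed numerically. The sketch below is only an illustration: the function names, the truncated integration window `half_width`, and the midpoint grid are assumptions. It evaluates the conjugate Poisson integral for f(s) = 1/(s² + 1) at a fixed point for decreasing y and prints the values next to H(f)(x) = x/(x² + 1), the pair listed in the table of selected Hilbert transforms.

```python
import numpy as np

def conjugate_poisson_extension(f, x, y, half_width=500.0, ds=2e-3):
    """Approximate v(x, y) = (1/pi) * int f(s) (x - s) / ((x - s)^2 + y^2) ds."""
    n = int(round(2.0 * half_width / ds))
    s = -half_width + (np.arange(n) + 0.5) * ds    # midpoint grid on [-half_width, half_width]
    kernel = (x - s) / ((x - s) ** 2 + y ** 2)
    return np.sum(f(s) * kernel) * ds / np.pi

def f(s):
    return 1.0 / (s ** 2 + 1.0)

x0 = 1.5
for y in (1.0, 0.3, 0.1, 0.05):
    v = conjugate_poisson_extension(f, x0, y)
    print(f"y = {y:4.2f}   v(x0, y) = {v:.6f}")
print("H(f)(x0) = x0 / (x0^2 + 1) =", x0 / (x0 ** 2 + 1.0))
```

The grid spacing must stay well below y for the quadrature to resolve the kernel, which is why the sketch stops at y = 0.05.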

Titchmarsh's theorem

Titchmarsh's theorem (named for E. C. Titchmarsh who included it in his 1937 work) makes precise the relationship between the boundary values of holomorphic functions in the upper half-plane and the Hilbert transform.[33] It gives necessary and sufficient conditions for a complex-valued square-integrable function F(x) on the real line to be the boundary value of a function in the Hardy space H2(U) of holomorphic functions in the upper half-plane U.

The theorem states that the following conditions for a complex-valued square-integrable function [math]\displaystyle{ F : \mathbb{R} \to \mathbb{C} }[/math] are equivalent:

- F(x) is the limit as z → x of a holomorphic function F(z) in the upper half-plane such that [math]\displaystyle{ \int_{-\infty}^\infty |F(x + i\,y)|^2\;\mathrm{d}x \lt K }[/math]

- The real and imaginary parts of F(x) are Hilbert transforms of each other.

- The Fourier transform [math]\displaystyle{ \mathcal{F}(F)(x) }[/math] vanishes for x < 0.

A weaker result is true for functions of class Lp for p > 1.[34] Specifically, if F(z) is a holomorphic function such that

[math]\displaystyle{ \int_{-\infty}^\infty |F(x + i\,y)|^p\;\mathrm{d}x \lt K }[/math]

for all y, then there is a complex-valued function F(x) in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] such that F(x + i y) → F(x) in the Lp norm as y → 0 (as well as holding pointwise almost everywhere). Furthermore,

[math]\displaystyle{ F(x) = f(x) + i\,g(x) }[/math]

where f is a real-valued function in [math]\displaystyle{ L^p(\mathbb{R}) }[/math] and g is the Hilbert transform (of class Lp) of f.

This is not true in the case p = 1. In fact, the Hilbert transform of an L1 function f need not converge in the mean to another L1 function. Nevertheless,[35] the Hilbert transform of f does converge almost everywhere to a finite function g such that

[math]\displaystyle{ \int_{-\infty}^\infty \frac{ |g(x)|^p }{ 1 + x^2 } \; \mathrm{d}x \lt \infty }[/math]

This result is directly analogous to one by Andrey Kolmogorov for Hardy functions in the disc.[36] Although usually called Titchmarsh's theorem, the result aggregates much work of others, including Hardy, Paley and Wiener (see Paley–Wiener theorem), as well as work by Riesz, Hille, and Tamarkin.[37]

Riemann–Hilbert problem

One form of the Riemann–Hilbert problem seeks to identify pairs of functions F+ and F− such that F+ is holomorphic on the upper half-plane and F− is holomorphic on the lower half-plane, such that for x along the real axis, [math]\displaystyle{ F_{+}(x) - F_{-}(x) = f(x) }[/math]

where f(x) is some given real-valued function of [math]\displaystyle{ x \isin \mathbb{R} }[/math]. The left-hand side of this equation may be understood either as the difference of the limits of F± from the appropriate half-planes, or as a hyperfunction distribution. Two functions of this form are a solution of the Riemann–Hilbert problem.

Formally, if F± solve the Riemann–Hilbert problem [math]\displaystyle{ f(x) = F_{+}(x) - F_{-}(x) }[/math]

then the Hilbert transform of f(x) is given by[38] [math]\displaystyle{ H(f)(x) = -i \bigl( F_{+}(x) + F_{-}(x) \bigr) . }[/math]

Hilbert transform on the circle

For a periodic function f, the circular Hilbert transform is defined by

[math]\displaystyle{ \tilde f(x) \triangleq \frac{1}{ 2\pi } \operatorname{p.v.} \int_0^{2\pi} f(t)\,\cot\left(\frac{ x - t }{2}\right)\,\mathrm{d}t }[/math]

The circular Hilbert transform is used in giving a characterization of Hardy space and in the study of the conjugate function in Fourier series. The kernel, [math]\displaystyle{ \cot\left(\frac{ x - t }{2}\right) }[/math] is known as the Hilbert kernel since it was in this form the Hilbert transform was originally studied.[8]

The Hilbert kernel (for the circular Hilbert transform) can be obtained by making the Cauchy kernel 1⁄x periodic. More precisely, for x ≠ 0

[math]\displaystyle{ \frac{1}{\,2\,}\cot\left(\frac{x}{2}\right) = \frac{1}{x} + \sum_{n=1}^\infty \left(\frac{1}{x + 2n\pi} + \frac{1}{\,x - 2n\pi\,} \right) }[/math]

Many results about the circular Hilbert transform may be derived from the corresponding results for the Hilbert transform from this correspondence.
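The periodization identity for the kernel can be checked by summing the series symmetrically. The sketch below uses a hypothetical helper `periodized_cauchy_kernel` and an arbitrary test point x = 1. Pairing the terms for +n and −n, as the formula does, is what makes the series converge; the partial sums approach cot(x/2)/2 at a rate of roughly 1/N.

```python
import math

def periodized_cauchy_kernel(x, terms):
    """Symmetric partial sum 1/x + sum_{n=1}^{terms} [1/(x + 2 n pi) + 1/(x - 2 n pi)]."""
    total = 1.0 / x
    for n in range(1, terms + 1):
        shift = 2.0 * n * math.pi
        total += 1.0 / (x + shift) + 1.0 / (x - shift)
    return total

x = 1.0
target = 0.5 / math.tan(x / 2.0)                 # (1/2) cot(x/2)
for terms in (10, 1000, 100000):
    print(terms, periodized_cauchy_kernel(x, terms), target)
```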

Another more direct connection is provided by the Cayley transform C(x) = (x – i) / (x + i), which carries the real line onto the circle and the upper half plane onto the unit disk. It induces a unitary map

[math]\displaystyle{ U\,f(x) = \frac{1}{(x + i)\,\sqrt{\pi}} \, f\left(C\left(x\right)\right) }[/math]

of L2(T) onto [math]\displaystyle{ L^2 (\mathbb{R}). }[/math] The operator U carries the Hardy space H2(T) onto the Hardy space [math]\displaystyle{ H^2(\mathbb{R}) }[/math].[39]

Hilbert transform in signal processing

Bedrosian's theorem

Bedrosian's theorem states that the Hilbert transform of the product of a low-pass and a high-pass signal with non-overlapping spectra is given by the product of the low-pass signal and the Hilbert transform of the high-pass signal, or

[math]\displaystyle{ \operatorname{H}\left(f_\text{LP}(t)\cdot f_\text{HP}(t)\right) = f_\text{LP}(t)\cdot \operatorname{H}\left(f_\text{HP}(t)\right), }[/math]

where fLP and fHP are the low- and high-pass signals respectively.[40] A category of communication signals to which this applies is called the narrowband signal model. A member of that category is amplitude modulation of a high-frequency sinusoidal "carrier":

[math]\displaystyle{ u(t) = u_m(t) \cdot \cos(\omega t + \varphi), }[/math]

where um(t) is the narrow bandwidth "message" waveform, such as voice or music. Then by Bedrosian's theorem:[41]

[math]\displaystyle{ \operatorname{H}(u)(t) = \begin{cases} +u_m(t) \cdot \sin(\omega t + \varphi), & \omega \gt 0, \\ -u_m(t) \cdot \sin(\omega t + \varphi), & \omega \lt 0. \end{cases} }[/math]
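A quick numerical experiment illustrates both the theorem and its spectral condition. This is a sketch under assumptions: signals are periodic on the sampling window, the FFT stands in for the Fourier transform, and `hilbert_fft` is a hypothetical helper. With the carrier far above the message band, the two sides agree; with a carrier inside the message band, they do not.

```python
import numpy as np

def hilbert_fft(u):
    """Multiply the DFT of one period of u by -i*sgn(frequency)."""
    U = np.fft.fft(u)
    return np.fft.ifft(-1j * np.sign(np.fft.fftfreq(len(u))) * U).real

n = 4096
t = np.arange(n) / n
# Low-pass "message": components at 0, 3 and 7 cycles per record
message = 1.0 + 0.5 * np.cos(2 * np.pi * 3 * t) + 0.2 * np.sin(2 * np.pi * 7 * t)

# Carrier at 200 cycles: spectra do not overlap, so the theorem applies
omega, phi = 2 * np.pi * 200, 0.4
lhs = hilbert_fft(message * np.cos(omega * t + phi))
rhs = message * np.sin(omega * t + phi)
print("non-overlapping spectra:", np.max(np.abs(lhs - rhs)))

# Carrier at 5 cycles: it lies inside the message band, so the identity fails
omega_bad = 2 * np.pi * 5
lhs_bad = hilbert_fft(message * np.cos(omega_bad * t))
rhs_bad = message * np.sin(omega_bad * t)
print("overlapping spectra:    ", np.max(np.abs(lhs_bad - rhs_bad)))
```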

Analytic representation

A specific type of conjugate function is:

[math]\displaystyle{ u_a(t) \triangleq u(t) + i\cdot H(u)(t), }[/math]

known as the analytic representation of [math]\displaystyle{ u(t). }[/math] The name reflects its mathematical tractability, due largely to Euler's formula. Applying Bedrosian's theorem to the narrowband model, the analytic representation is:[42]

[math]\displaystyle{ \begin{align} u_a(t) & = u_m(t) \cdot \cos(\omega t + \varphi) + i\cdot u_m(t) \cdot \sin(\omega t + \varphi), \quad \omega \gt 0 \\ & = u_m(t) \cdot \left[\cos(\omega t + \varphi) + i\cdot \sin(\omega t + \varphi)\right], \quad \omega \gt 0 \\ & = u_m(t) \cdot e^{i(\omega t + \varphi)}, \quad \omega \gt 0.\, \end{align} }[/math] (Eq.1)

A Fourier transform property indicates that this complex heterodyne operation can shift all the negative frequency components of um(t) above 0 Hz. In that case, the imaginary part of the result is a Hilbert transform of the real part. This is an indirect way to produce Hilbert transforms.

Angle (phase/frequency) modulation

The form:[43]

[math]\displaystyle{ u(t) = A \cdot \cos(\omega t + \varphi_m(t)) }[/math]

is called angle modulation, which includes both phase modulation and frequency modulation. The instantaneous frequency is [math]\displaystyle{ \omega + \varphi_m^\prime(t). }[/math] For sufficiently large ω, compared to [math]\displaystyle{ \varphi_m^\prime }[/math]:

[math]\displaystyle{ \operatorname{H}(u)(t) \approx A \cdot \sin(\omega t + \varphi_m(t)) }[/math] and: [math]\displaystyle{ u_a(t) \approx A \cdot e^{i(\omega t + \varphi_m(t))}. }[/math]

Single sideband modulation (SSB)

When um(t) in Eq.1 is also an analytic representation (of a message waveform), that is:

[math]\displaystyle{ u_m(t) = m(t) + i \cdot \widehat{m}(t) }[/math]

the result is single-sideband modulation:

[math]\displaystyle{ u_a(t) = (m(t) + i \cdot \widehat{m}(t)) \cdot e^{i(\omega t + \varphi)} }[/math]

whose transmitted component is:[44][45]

[math]\displaystyle{ \begin{align} u(t) &= \operatorname{Re}\{u_a(t)\}\\ &= m(t)\cdot \cos(\omega t + \varphi) - \widehat{m}(t)\cdot \sin(\omega t + \varphi) \end{align} }[/math]
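The single-sideband property can be seen directly in a spectrum. In the sketch below, a single-tone message is an assumption chosen so that its Hilbert transform is known in closed form (cos ↦ sin for positive frequency), and the frequencies are arbitrary integers in cycles per record. The transmitted signal then has energy only at the carrier plus the message frequency.

```python
import numpy as np

n = 2048
t = np.arange(n) / n
f_m, f_c = 5, 100                                # message and carrier, cycles per record
m = np.cos(2 * np.pi * f_m * t)
m_hat = np.sin(2 * np.pi * f_m * t)              # Hilbert transform of m for f_m > 0

# Transmitted component: Re{(m + i*m_hat) * exp(i*2*pi*f_c*t)}
u = m * np.cos(2 * np.pi * f_c * t) - m_hat * np.sin(2 * np.pi * f_c * t)

spectrum = np.abs(np.fft.rfft(u)) / (n / 2)      # amplitude per non-negative frequency bin
print("lower sideband bin", f_c - f_m, ":", spectrum[f_c - f_m])
print("upper sideband bin", f_c + f_m, ":", spectrum[f_c + f_m])
```

Replacing the minus sign in `u` with a plus sign would select the lower sideband instead.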

Causality

The function [math]\displaystyle{ h(t) = 1/(\pi t) }[/math] presents two causality-based challenges to practical implementation in a convolution (in addition to its undefined value at 0):

- Its duration is infinite (technically infinite support). Finite-length windowing reduces the effective frequency range of the transform; shorter windows result in greater losses at low and high frequencies. See also quadrature filter.

- It is a non-causal filter. So a delayed version, [math]\displaystyle{ h(t-\tau), }[/math] is required. The corresponding output is subsequently delayed by [math]\displaystyle{ \tau. }[/math] When creating the imaginary part of an analytic signal, the source (real part) must also be delayed by [math]\displaystyle{ \tau }[/math].

Discrete Hilbert transform

Figure 1: Bandpass discrete Hilbert transform filter.

Figure 2: Highpass discrete Hilbert transform filter.

For a discrete function, [math]\displaystyle{ u[n] }[/math], with discrete-time Fourier transform (DTFT), [math]\displaystyle{ U(\omega) }[/math], and discrete Hilbert transform [math]\displaystyle{ \hat u[n] }[/math], the DTFT of [math]\displaystyle{ \hat u[n] }[/math] in the region −π < ω < π is given by:

- [math]\displaystyle{ \operatorname{DTFT} (\hat u) = U(\omega)\cdot (-i\cdot \sgn(\omega)). }[/math]

The inverse DTFT, using the convolution theorem, is:[46]

- [math]\displaystyle{ \begin{align} \hat u[n] &= {\scriptstyle \mathrm{DTFT}^{-1}} (U(\omega))\ *\ {\scriptstyle \mathrm{DTFT}^{-1}} (-i\cdot \sgn(\omega))\\ &= u[n]\ *\ \frac{1}{2 \pi}\int_{-\pi}^{\pi} (-i\cdot \sgn(\omega))\cdot e^{i \omega n} \,\mathrm{d}\omega\\ &= u[n]\ *\ \underbrace{\frac{1}{2 \pi}\left[\int_{-\pi}^0 i\cdot e^{i \omega n} \,\mathrm{d}\omega - \int_0^\pi i\cdot e^{i \omega n} \,\mathrm{d}\omega \right]}_{h[n]}, \end{align} }[/math]

where

- [math]\displaystyle{ h[n]\ \triangleq \ \begin{cases} 0, & \text{for }n\text{ even}\\ \frac 2 {\pi n} & \text{for }n\text{ odd}, \end{cases} }[/math]

which is an infinite impulse response (IIR). When the convolution is performed numerically, an FIR approximation is substituted for h[n], as shown in Figure 1. An FIR filter with an odd number of anti-symmetric coefficients is called Type III; it inherently has zero magnitude response at frequency 0 and at the Nyquist frequency, which in this case produces a bandpass filter shape. A Type IV design (an even number of anti-symmetric coefficients) is shown in Figure 2. Because its magnitude response does not drop out at the Nyquist frequency, it approximates an ideal Hilbert transformer a little better than the odd-tap filter. However:

- A typical (i.e. properly filtered and sampled) u[n] sequence has no useful components at the Nyquist frequency.

- The Type IV impulse response requires a 1⁄2 sample shift in the h[n] sequence. That causes the zero-valued coefficients to become non-zero, as seen in Figure 2. So a Type III design is potentially twice as efficient as Type IV.

- The group delay of a Type III design is an integer number of samples, which facilitates aligning [math]\displaystyle{ \hat u[n] }[/math] with [math]\displaystyle{ u[n], }[/math] to create an analytic signal. The group delay of Type IV is halfway between two samples.
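The Type III properties listed above can be checked numerically. The following Python sketch assumes the simplest possible FIR approximation, a plain truncation of h[n] to 2K + 1 taps with no window. Practical designs usually apply a window or an optimization method, so the numbers are illustrative only, and the function names are hypothetical.

```python
import cmath
import math

def type3_taps(K):
    """2K+1 anti-symmetric taps: h[n] for n = -K..K, stored causally (delay K)."""
    return [2.0 / (math.pi * n) if n % 2 else 0.0 for n in range(-K, K + 1)]

def freq_response(taps, w):
    """DTFT of a causal tap sequence at angular frequency w (rad/sample)."""
    return sum(t * cmath.exp(-1j * w * k) for k, t in enumerate(taps))

K = 15
taps = type3_taps(K)

gain_dc = abs(freq_response(taps, 0.0))            # zero: the taps are anti-symmetric
gain_nyquist = abs(freq_response(taps, math.pi))   # zero for an odd tap count
gain_mid = abs(freq_response(taps, math.pi / 2))   # close to 1 in mid-band

nonzero_taps = sum(1 for t in taps if t != 0.0)    # about half of the taps are zero
group_delay = (len(taps) - 1) / 2                  # an integer number of samples
```

With K = 15 there are 31 taps, of which only 16 are non-zero, and the group delay is exactly 15 samples. A Type IV design of similar length would have no zero-valued taps and a group delay that falls halfway between two samples.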

The MATLAB function hilbert(u,N)[47] convolves a u[n] sequence with the periodic summation:[A]

- [math]\displaystyle{ h_N[n]\ \triangleq \sum_{m=-\infty}^\infty h[n - mN] }[/math] [B][C]

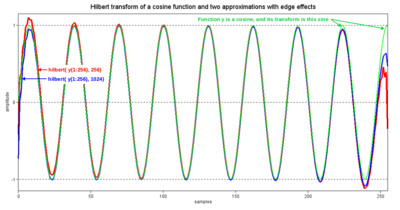

and returns one cycle (N samples) of the periodic result in the imaginary part of a complex-valued output sequence. The convolution is implemented in the frequency domain as the product of the array [math]\displaystyle{ {\scriptstyle \mathrm{DFT}} \left(u[n]\right) }[/math] with samples of the −i sgn(ω) distribution (whose real and imaginary components are all just 0 or ±1). Figure 3 compares a half-cycle of [math]\displaystyle{ h_N[n] }[/math] with an equivalent-length portion of h[n]. Given an FIR approximation for [math]\displaystyle{ h[n], }[/math] denoted by [math]\displaystyle{ \tilde{h}[n], }[/math] substituting [math]\displaystyle{ {\scriptstyle\mathrm{DFT}} \left(\tilde{h}[n]\right) }[/math] for the −i sgn(ω) samples results in an FIR version of the convolution.
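The frequency-domain procedure can be sketched in Python as follows. This is not MATLAB's implementation: it uses a direct O(N²) DFT for self-containment, the function names are hypothetical, and it assumes that sgn(ω) is sampled as 0 at DC and, for even N, at the Nyquist bin. The last lines compare the inverse DFT of the multiplier samples with the closed form for even N given in the Notes.

```python
import cmath
import math

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def sgn_samples(N):
    """Samples of -i*sgn(w) on the DFT grid (assumed 0 at DC and at Nyquist)."""
    out = []
    for k in range(N):
        if k == 0 or 2 * k == N:
            out.append(0j)
        elif 2 * k < N:
            out.append(-1j)   # positive-frequency bins
        else:
            out.append(1j)    # negative-frequency bins
    return out

def analytic_signal(u):
    """Return u[n] + i*u_hat[n]; u_hat is the circular convolution of u with h_N."""
    U = dft(u)
    u_hat = idft([Uk * Hk for Uk, Hk in zip(U, sgn_samples(len(u)))])
    return [complex(un, uh.real) for un, uh in zip(u, u_hat)]

N = 32
u = [math.cos(2 * math.pi * 3 * n / N) for n in range(N)]   # exactly 3 cycles
z = analytic_signal(u)          # imaginary part is the matching sine

# The periodic kernel h_N[n] is the inverse DFT of the multiplier samples.
h_N = [v.real for v in idft(sgn_samples(N))]
closed_form = [(2.0 / N) / math.tan(math.pi * n / N) if n % 2 else 0.0
               for n in range(N)]
```

The input here spans a whole number of cycles, so its periodic extension is a pure cosine and no edge effects appear. That is a special case and not typical of real data.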

The real part of the output sequence is the original input sequence, so that the complex output is an analytic representation of u[n]. When the input is a segment of a pure cosine, the resulting convolution for two different values of N is depicted in Figure 4 (red and blue plots). Edge effects prevent the result from being a pure sine function (green plot). Since [math]\displaystyle{ h_N[n] }[/math] is not an FIR sequence, the edge effects theoretically extend over the entire output sequence, but the differences from a sine function diminish with distance from the edges. The parameter N is the output sequence length. If it exceeds the length of the input sequence, the input is extended by appending zero-valued elements. In most cases, that reduces the magnitude of the differences, but their duration is dominated by the inherent rise and fall times of the h[n] impulse response.
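The edge effects can be reproduced with a short Python sketch. It performs the circular convolution directly in the time domain, using the closed form of the periodic kernel for even N from the Notes. The segment length, the choice of 3.5 cycles and the function names are illustrative assumptions.

```python
import math

def h_periodic(n, N):
    """Closed form of the periodic kernel for even N (see Notes)."""
    n %= N
    return (2.0 / N) / math.tan(math.pi * n / N) if n % 2 else 0.0

def periodic_hilbert(u, N=None):
    """Circularly convolve u, zero-padded to even length N, with the periodic kernel."""
    N = len(u) if N is None else N
    padded = list(u) + [0.0] * (N - len(u))
    return [sum(padded[m] * h_periodic(n - m, N) for m in range(N))
            for n in range(N)]

L = 64
w = 2 * math.pi * 3.5 / L                  # 3.5 cycles: the segment does not wrap cleanly
u = [math.cos(w * n) for n in range(L)]
ideal = [math.sin(w * n) for n in range(L)]

u_hat = periodic_hilbert(u)                # N equal to the input length
err = [abs(a - b) for a, b in zip(u_hat, ideal)]
edge_err = err[0]                          # large: right at the wrap-around jump
mid_err = err[L // 2]                      # small: far from both edges

# Zero-padding to N = 2L, keeping only the first L outputs for comparison.
u_hat_padded = periodic_hilbert(u, 2 * L)[:L]
err_padded = [abs(a - b) for a, b in zip(u_hat_padded, ideal)]
```

In this example the deviation from the ideal sine is of order 1 at the first sample and roughly two orders of magnitude smaller at the centre of the segment. The err_padded values can be inspected in the same way to see how zero-padding changes the picture for a particular input.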

An appreciation for the edge effects is important when a method called overlap-save is used to perform the convolution on a long u[n] sequence. Segments of length N are convolved with the periodic function:

- [math]\displaystyle{ \tilde{h}_N[n]\ \triangleq \sum_{m=-\infty}^\infty \tilde{h}[n - mN]. }[/math]

When the duration of non-zero values of [math]\displaystyle{ \tilde{h}[n] }[/math] is [math]\displaystyle{ M \lt N, }[/math] each output block includes N − M + 1 valid samples of [math]\displaystyle{ \hat u. }[/math] The other M − 1 outputs of each block of N are discarded, and the input blocks are overlapped by that amount to prevent gaps.
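A minimal Python sketch of the overlap-save bookkeeping follows. It assumes a causal FIR approximation obtained by truncating and delaying h[n], though any FIR approximation of length M < N would do. The circular convolution is computed directly rather than with a DFT, and the function names and parameter values are hypothetical.

```python
import math

def fir_taps(K):
    """Truncated h[n], n = -K..K, stored causally; M = 2K + 1 taps."""
    return [2.0 / (math.pi * n) if n % 2 else 0.0 for n in range(-K, K + 1)]

def circular_convolve(block, taps):
    """Circular convolution of an N-sample block with M <= N taps."""
    N = len(block)
    return [sum(t * block[(n - k) % N] for k, t in enumerate(taps))
            for n in range(N)]

def overlap_save(u, taps, N):
    M = len(taps)
    step = N - M + 1                          # valid outputs per block
    padded = [0.0] * (M - 1) + list(u)        # zero history before the first sample
    out = []
    start = 0
    while start < len(u):
        block = padded[start:start + N]
        block += [0.0] * (N - len(block))     # pad the final, partial block
        y = circular_convolve(block, taps)
        out.extend(y[M - 1:])                 # discard the M - 1 wrapped outputs
        start += step                         # consecutive blocks overlap by M - 1
    return out[:len(u)]

def direct_convolve(u, taps):
    """Reference: ordinary causal linear convolution, same length as u."""
    return [sum(t * u[n - k] for k, t in enumerate(taps) if n - k >= 0)
            for n in range(len(u))]

taps = fir_taps(8)                            # M = 17
N = 64                                        # block length, so 48 valid outputs per block
u = [math.cos(0.3 * n) for n in range(200)]
y_os = overlap_save(u, taps, N)
y_direct = direct_convolve(u, taps)
max_diff = max(abs(a - b) for a, b in zip(y_os, y_direct))
```

Because M < N, the pieced-together output agrees with the direct linear convolution to rounding error. The discarded samples are exactly those affected by circular wrap-around.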

Figure 5 is an example of using both the IIR hilbert(·) function and the FIR approximation. In the example, a sine function is created by computing the discrete Hilbert transform of a cosine function, which was processed in four overlapping segments and pieced back together. As the FIR result (blue) shows, the distortions apparent in the IIR result (red) are not caused by the difference between h[n] and [math]\displaystyle{ h_N[n] }[/math] (green and red in Figure 3). The fact that [math]\displaystyle{ h_N[n] }[/math] is tapered (windowed) is actually helpful in this context. The real problem is that it is not windowed enough: effectively M = N, whereas the overlap-save method needs M < N.

Number-theoretic Hilbert transform

The number-theoretic Hilbert transform is an extension[50] of the discrete Hilbert transform to integers modulo an appropriate prime number. In this it follows the generalization of the discrete Fourier transform to number-theoretic transforms. The number-theoretic Hilbert transform can be used to generate sets of orthogonal discrete sequences.[51]

See also

- Analytic signal

- Harmonic conjugate

- Hilbert spectroscopy

- Hilbert transform in the complex plane

- Hilbert–Huang transform

- Kramers–Kronig relation

- Riesz transform

- Single-sideband signal

- Singular integral operators of convolution type

Notes

- ↑ see § Periodic convolution, Eq.4b

- ↑ A closed form version of [math]\displaystyle{ h_N[n] }[/math] for even values of [math]\displaystyle{ N }[/math] is:[48] [math]\displaystyle{ h_N[n] = \begin{cases} \frac{2}{N} \cot(\pi n/N) & \text{for }n\text{ odd},\\ 0 & \text{for }n\text{ even}. \end{cases} }[/math]

- ↑ A closed form version of [math]\displaystyle{ h_N[n] }[/math] for odd values of [math]\displaystyle{ N }[/math] is:[49] [math]\displaystyle{ h_N[n] = \frac{1}{N} \left(\cot(\pi n/N) - \frac{\cos(\pi n)}{\sin(\pi n/N)}\right). }[/math]

Page citations

- ↑ Due to Schwartz 1950; see Pandey 1996, Chapter 3.

- ↑ Zygmund 1968, §XVI.1.

- ↑ E.g., Brandwood 2003, p. 87.

- ↑ E.g., Stein & Weiss 1971.

- ↑ E.g., Bracewell 2000, p. 359.

- ↑ Kress 1989.

- ↑ Bitsadze 2001.

- ↑ Khvedelidze 2001.

- ↑ Hilbert 1953.

- ↑ Hardy, Littlewood & Pólya 1952, §9.1.

- ↑ Hardy, Littlewood & Pólya 1952, §9.2.

- ↑ Riesz 1928.

- ↑ Calderón & Zygmund 1952.

- ↑ Duoandikoetxea 2000, Chapter 3.

- ↑ King 2009b.

- ↑ Titchmarsh 1948, Chapter 5.

- ↑ Titchmarsh 1948, §5.14.

- ↑ Stein & Weiss 1971, Lemma V.2.8.

- ↑ This theorem is due to Riesz 1928, VII; see also Titchmarsh 1948, Theorem 101.

- ↑ This result is due to Pichorides 1972; see also Grafakos 2004, Remark 4.1.8.

- ↑ See for example Duoandikoetxea 2000, p. 59.

- ↑ Titchmarsh 1948, Theorem 102.

- ↑ Titchmarsh 1948, p. 120.

- ↑ Pandey 1996, §3.3.

- ↑ Duistermaat & Kolk 2010, p. 211.

- ↑ Titchmarsh 1948, Theorem 104.

- ↑ Stein 1970, §III.1.

- ↑ See Bargmann 1947, Lang 1985, and Sugiura 1990.

- ↑ Gel'fand & Shilov 1968.

- ↑ Calderón & Zygmund 1952; see Fefferman 1971.

- ↑ Fefferman 1971; Fefferman & Stein 1972

- ↑ Titchmarsh 1948, Chapter V.

- ↑ Titchmarsh 1948, Theorem 95.

- ↑ Titchmarsh 1948, Theorem 103.

- ↑ Titchmarsh 1948, Theorem 105.

- ↑ Duren 1970, Theorem 4.2.

- ↑ see King 2009a, § 4.22.

- ↑ Pandey 1996, Chapter 2.

- ↑ Rosenblum & Rovnyak 1997, p. 92.

- ↑ Schreier & Scharf 2010, 14.

- ↑ Bedrosian 1962.

- ↑ Osgood, p. 320

- ↑ Osgood, p. 320

- ↑ Franks 1969, p. 88

- ↑ Tretter 1995, p. 80 (7.9)

- ↑ Rabiner 1975

- ↑ MathWorks. "hilbert – Discrete-time analytic signal using Hilbert transform". MATLAB Signal Processing Toolbox Documentation. http://www.mathworks.com/help/toolbox/signal/ref/hilbert.html.

- ↑ Johansson, p. 24

- ↑ Johansson, p. 25

- ↑ Kak 1970.

- ↑ Kak 2014.

References

- Bargmann, V. (1947). "Irreducible unitary representations of the Lorentz group". Ann. of Math. 48 (3): 568–640. doi:10.2307/1969129.

- Bedrosian, E. (December 1962). A product theorem for Hilbert transforms (Report). Rand Corporation. RM-3439-PR. http://www.rand.org/content/dam/rand/pubs/research_memoranda/2008/RM3439.pdf.

- Bitsadze, A. V. (2001), "Boundary value problems of analytic function theory", in Hazewinkel, Michiel, ed., Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=b/b017400

- Bracewell, R. (2000). The Fourier Transform and Its Applications (3rd ed.). McGraw–Hill. ISBN 0-07-116043-4.

- Brandwood, David (2003). Fourier Transforms in Radar and Signal Processing. Boston: Artech House. ISBN 9781580531740.

- Calderón, A. P.; Zygmund, A. (1952). "On the existence of certain singular integrals". Acta Mathematica 88 (1): 85–139. doi:10.1007/BF02392130.

- Duoandikoetxea, J. (2000). Fourier Analysis. American Mathematical Society. ISBN 0-8218-2172-5.

- Duistermaat, J. J.; Kolk, J. A. C. (2010). Distributions. Birkhäuser. doi:10.1007/978-0-8176-4675-2. ISBN 978-0-8176-4672-1.

- Duren, P. (1970). Theory of H^p Spaces. New York, NY: Academic Press.

- Fefferman, C. (1971). "Characterizations of bounded mean oscillation". Bulletin of the American Mathematical Society 77 (4): 587–588. doi:10.1090/S0002-9904-1971-12763-5. https://www.ams.org/bull/1971-77-04/S0002-9904-1971-12763-5/home.html.

- Fefferman, C.; Stein, E. M. (1972). "H^p spaces of several variables". Acta Mathematica 129: 137–193. doi:10.1007/BF02392215.

- Franks, L.E. (September 1969). Thomas Kailath. ed. Signal Theory. Information theory. Englewood Cliffs, NJ: Prentice Hall. ISBN 0138100772.

- Gel'fand, I. M.; Shilov, G. E. (1968). Generalized Functions. 2. Academic Press. pp. 153–154. ISBN 0-12-279502-4.

- Grafakos, Loukas (2004). Classical and Modern Fourier Analysis. Pearson Education. pp. 253–257. ISBN 0-13-035399-X.

- Hardy, G. H.; Littlewood, J. E.; Pólya, G. (1952). Inequalities. Cambridge, UK: Cambridge University Press. ISBN 0-521-35880-9.

- Hilbert, David (1953) [1912] (in German). Grundzüge einer allgemeinen Theorie der linearen Integralgleichungen. Leipzig & Berlin, DE (1912); New York, NY (1953): B.G. Teubner (1912); Chelsea Pub. Co. (1953). ISBN 978-3-322-00681-3. OCLC 988251080. https://archive.org/details/grundzugeallg00hilbrich/page/n7. Retrieved 2020-12-18.

- Johansson, Mathias. "The Hilbert transform, Masters Thesis". http://w3.msi.vxu.se/exarb/mj_ex.pdf.; also http://www.fuchs-braun.com/media/d9140c7b3d5004fbffff8007fffffff0.pdf

- Kak, Subhash (1970). "The discrete Hilbert transform". Proc. IEEE 58 (4): 585–586. doi:10.1109/PROC.1970.7696.

- Kak, Subhash (2014). "Number theoretic Hilbert transform". Circuits, Systems and Signal Processing 33 (8): 2539–2548. doi:10.1007/s00034-014-9759-8.

- Khvedelidze, B. V. (2001), "Hilbert transform", in Hazewinkel, Michiel, ed., Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=H/h047430

- King, Frederick W. (2009a). Hilbert Transforms. 1. Cambridge, UK: Cambridge University Press.

- King, Frederick W. (2009b). Hilbert Transforms. 2. Cambridge, UK: Cambridge University Press. p. 453. ISBN 978-0-521-51720-1.

- Kress, Rainer (1989). Linear Integral Equations. New York, NY: Springer-Verlag. p. 91. ISBN 3-540-50616-0.

- Lang, Serge (1985). SL(2,[math]\displaystyle{ \mathbb{R} }[/math]). Graduate Texts in Mathematics. 105. New York, NY: Springer-Verlag. ISBN 0-387-96198-4.

- Osgood, Brad, The Fourier Transform and its Applications, Stanford University, https://see.stanford.edu/materials/lsoftaee261/book-fall-07.pdf, retrieved 2021-04-30

- Pandey, J. N. (1996). The Hilbert transform of Schwartz distributions and applications. Wiley-Interscience. ISBN 0-471-03373-1.

- Pichorides, S. (1972). "On the best value of the constants in the theorems of Riesz, Zygmund, and Kolmogorov". Studia Mathematica 44 (2): 165–179. doi:10.4064/sm-44-2-165-179.

- Rabiner, Lawrence R.; Gold, Bernard (1975). "Chapter 2.27, Eq 2.195". Theory and application of digital signal processing. Englewood Cliffs, N.J.: Prentice-Hall. p. 71. ISBN 0-13-914101-4. https://archive.org/details/theoryapplicatio00rabi/page/71.

- Riesz, Marcel (1928). "Sur les fonctions conjuguées" (in French). Mathematische Zeitschrift 27 (1): 218–244. doi:10.1007/BF01171098.

- Rosenblum, Marvin; Rovnyak, James (1997). Hardy classes and operator theory. Dover. ISBN 0-486-69536-0.

- Schwartz, Laurent (1950). Théorie des distributions. Paris, FR: Hermann.

- Schreier, P.; Scharf, L. (2010). Statistical signal processing of complex-valued data: The theory of improper and noncircular signals. Cambridge, UK: Cambridge University Press.

- Smith, J. O. (2007). "Analytic Signals and Hilbert Transform Filters, in Mathematics of the Discrete Fourier Transform (DFT) with Audio Applications". https://ccrma.stanford.edu/~jos/r320/Analytic_Signals_Hilbert_Transform.html.; also https://www.dsprelated.com/freebooks/mdft/Analytic_Signals_Hilbert_Transform.html

- Stein, Elias (1970). Singular integrals and differentiability properties of functions. Princeton University Press. ISBN 0-691-08079-8. https://archive.org/details/singularintegral0000stei.

- Stein, Elias; Weiss, Guido (1971). Introduction to Fourier Analysis on Euclidean Spaces. Princeton University Press. ISBN 0-691-08078-X. https://archive.org/details/introductiontofo0000stei.

- Sugiura, Mitsuo (1990). Unitary Representations and Harmonic Analysis: An Introduction. North-Holland Mathematical Library. 44 (2nd ed.). Elsevier. ISBN 0444885935.

- Titchmarsh, E. (1986) [1948]. Introduction to the theory of Fourier integrals (2nd ed.). Oxford, UK: Clarendon Press. ISBN 978-0-8284-0324-5.

- Tretter, Steven A. (1995). R.W.Lucky. ed. Communication System Design Using DSP Algorithms. New York: Springer. ISBN 0306450321.

- Zygmund, Antoni (1988) [1968]. Trigonometric Series (2nd ed.). Cambridge, UK: Cambridge University Press. ISBN 978-0-521-35885-9.

Further reading

- Benedetto, John J. (1996). Harmonic Analysis and its Applications. Boca Raton, FL: CRC Press. ISBN 0849378796. https://books.google.com/books?id=_SCeYgvPgoYC&q=%22Hilbert+transform%22.

- Carlson; Crilly; Rutledge (2002). Communication Systems (4th ed.). McGraw-Hill. ISBN 0-07-011127-8.

- Gold, B.; Oppenheim, A. V.; Rader, C. M. (1969). "Theory and Implementation of the Discrete Hilbert Transform". New York. http://www.rle.mit.edu/dspg/documents/HilbertComplete.pdf. Retrieved 2021-04-13.

- Grafakos, Loukas (1994). "An elementary proof of the square summability of the discrete Hilbert transform". American Mathematical Monthly (Mathematical Association of America) 101 (5): 456–458. doi:10.2307/2974910.

- Titchmarsh, E. (1926). "Reciprocal formulae involving series and integrals". Mathematische Zeitschrift 25 (1): 321–347. doi:10.1007/BF01283842.

External links

- Derivation of the boundedness of the Hilbert transform

- Mathworld Hilbert transform — Contains a table of transforms

- Weisstein, Eric W.. "Titchmarsh theorem". http://mathworld.wolfram.com/TitchmarshTheorem.html.

- "GS256 Lecture 3: Hilbert Transformation". http://www.geol.ucsb.edu/faculty/toshiro/GS256_Lecture3.pdf. an entry level introduction to Hilbert transformation.
