Subderivative

In mathematics, a subderivative (or subgradient) generalizes the derivative to convex functions that are not necessarily differentiable. The set of subderivatives at a point is called the subdifferential at that point.[1] Subderivatives arise in convex analysis, the study of convex functions, often in connection with convex optimization.

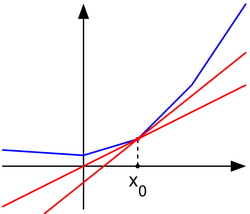

Let [math]\displaystyle{ f:I \to \mathbb{R} }[/math] be a real-valued convex function defined on an open interval of the real line. Such a function need not be differentiable at all points; for example, the absolute value function [math]\displaystyle{ f(x)=|x| }[/math] is not differentiable at [math]\displaystyle{ x=0 }[/math]. However, as seen in the graph on the right (where [math]\displaystyle{ f(x) }[/math] in blue has non-differentiable kinks similar to those of the absolute value function), for any [math]\displaystyle{ x_0 }[/math] in the domain of the function one can draw a line which goes through the point [math]\displaystyle{ (x_0,f(x_0)) }[/math] and which is everywhere either touching or below the graph of [math]\displaystyle{ f }[/math]. The slope of such a line is called a subderivative.

Definition

Rigorously, a subderivative of a convex function [math]\displaystyle{ f:I \to \mathbb{R} }[/math] at a point [math]\displaystyle{ x_0 }[/math] in the open interval [math]\displaystyle{ I }[/math] is a real number [math]\displaystyle{ c }[/math] such that [math]\displaystyle{ f(x)-f(x_0)\ge c(x-x_0) }[/math] for all [math]\displaystyle{ x\in I }[/math]. By the converse of the mean value theorem, the set of subderivatives at [math]\displaystyle{ x_0 }[/math] for a convex function is a nonempty closed interval [math]\displaystyle{ [a,b] }[/math], where [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] are the one-sided limits [math]\displaystyle{ a=\lim_{x\to x_0^-} \frac{f(x)-f(x_0)}{x-x_0}, }[/math] [math]\displaystyle{ b=\lim_{x\to x_0^+} \frac{f(x)-f(x_0)}{x-x_0}. }[/math] Both limits exist because [math]\displaystyle{ f }[/math] is convex, and they satisfy [math]\displaystyle{ a\le b }[/math]. The interval [math]\displaystyle{ [a,b] }[/math] of all subderivatives is called the subdifferential of the function [math]\displaystyle{ f }[/math] at [math]\displaystyle{ x_0 }[/math], denoted by [math]\displaystyle{ \partial f(x_0) }[/math]. In particular, the subdifferential of a convex function is nonempty at every point of [math]\displaystyle{ I }[/math]. Moreover, if the subdifferential at [math]\displaystyle{ x_0 }[/math] contains exactly one subderivative, then [math]\displaystyle{ f }[/math] is differentiable at [math]\displaystyle{ x_0 }[/math] and [math]\displaystyle{ \partial f(x_0)=\{f'(x_0)\} }[/math].[2]
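The definition can be explored numerically. The following is a minimal sketch, not a definitive implementation: the helper names `one_sided_slopes` and `is_subderivative`, the step size `h`, the tolerance `tol`, and the test function `max(x*x, 2 - x)` are all assumptions chosen for illustration and do not come from the text above. The sketch approximates the endpoints [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] by difference quotients and checks the defining inequality on a finite grid, which can only suggest, not prove, that a number is a subderivative.

```python
def one_sided_slopes(f, x0, h=1e-6):
    """Approximate the left and right derivatives of f at x0 by difference quotients."""
    a = (f(x0) - f(x0 - h)) / h  # slope approaching x0 from the left
    b = (f(x0 + h) - f(x0)) / h  # slope approaching x0 from the right
    return a, b


def is_subderivative(f, x0, c, grid, tol=1e-9):
    """Check f(x) - f(x0) >= c * (x - x0) at every point of a finite grid."""
    return all(f(x) - f(x0) >= c * (x - x0) - tol for x in grid)


# Hypothetical convex test function with a kink at x = 1 (an assumption for this sketch).
def f(x):
    return max(x * x, 2.0 - x)


grid = [-3.0 + 0.01 * k for k in range(601)]  # sample points in [-3, 3]
a, b = one_sided_slopes(f, 1.0)               # approximately -1 and 2

inside = is_subderivative(f, 1.0, 0.5, grid)      # 0.5 lies between a and b
too_steep = is_subderivative(f, 1.0, 2.5, grid)   # 2.5 is larger than b
too_shallow = is_subderivative(f, 1.0, -1.5, grid)  # -1.5 is smaller than a
```

For this test function the left and right slopes at the kink are approximately -1 and 2, so a candidate slope between them passes the grid check while slopes outside that range fail it.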

Example

Consider the function [math]\displaystyle{ f(x)=|x| }[/math], which is convex. The subdifferential at the origin is the interval [math]\displaystyle{ [-1,1] }[/math]. The subdifferential at any point [math]\displaystyle{ x_0\lt 0 }[/math] is the singleton set [math]\displaystyle{ \{-1\} }[/math], while the subdifferential at any point [math]\displaystyle{ x_0\gt 0 }[/math] is the singleton set [math]\displaystyle{ \{1\} }[/math]. The subdifferential therefore resembles the sign function, except that at [math]\displaystyle{ 0 }[/math] it is not single-valued and instead contains all possible subderivatives.
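As an illustrative sketch, the subdifferential of the absolute value function can be written as a function returning a closed interval. The name `subdifferential_abs` and the convention of representing an interval as a `(lo, hi)` tuple are assumptions made for this example.

```python
def subdifferential_abs(x0):
    """Return the subdifferential of f(x) = |x| at x0 as a closed interval (lo, hi)."""
    if x0 < 0:
        return (-1.0, -1.0)  # singleton {-1}
    if x0 > 0:
        return (1.0, 1.0)    # singleton {1}
    return (-1.0, 1.0)       # the whole interval [-1, 1] at the kink


left = subdifferential_abs(-2.0)
origin = subdifferential_abs(0.0)
right = subdifferential_abs(3.0)
```

A singleton is encoded here as an interval whose endpoints coincide, so all three cases share one return type.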

Properties

- A convex function [math]\displaystyle{ f:I\to\mathbb{R} }[/math] is differentiable at [math]\displaystyle{ x_0 }[/math] if and only if the subdifferential is a singleton set, which is [math]\displaystyle{ \{f'(x_0)\} }[/math].

- A point [math]\displaystyle{ x_0 }[/math] is a global minimum of a convex function [math]\displaystyle{ f }[/math] if and only if zero is contained in the subdifferential [math]\displaystyle{ \partial f(x_0) }[/math]. For instance, in the figure above, one may draw a horizontal "subtangent line" to the graph of [math]\displaystyle{ f }[/math] at [math]\displaystyle{ (x_0,f(x_0)) }[/math]. This property generalizes the fact that the derivative of a function differentiable at a local minimum is zero.

- If [math]\displaystyle{ f }[/math] and [math]\displaystyle{ g }[/math] are convex functions with subdifferentials [math]\displaystyle{ \partial f(x) }[/math] and [math]\displaystyle{ \partial g(x) }[/math], and [math]\displaystyle{ x }[/math] is an interior point of the domain of one of the functions, then the subdifferential of [math]\displaystyle{ f + g }[/math] is [math]\displaystyle{ \partial(f + g)(x) = \partial f(x) + \partial g(x) }[/math] (where the addition operator denotes the Minkowski sum). This reads as "the subdifferential of a sum is the sum of the subdifferentials."[3]
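The minimum criterion and the sum rule above can be combined in a small sketch. The example functions [math]\displaystyle{ |x| }[/math] and [math]\displaystyle{ |x-1| }[/math], the helper names, and the `(lo, hi)` tuple encoding of intervals are assumptions chosen for illustration. For closed intervals the Minkowski sum is obtained by adding the endpoints.

```python
def subdiff_abs_shifted(x0, center):
    """Subdifferential of x -> |x - center| at x0, as a closed interval (lo, hi)."""
    if x0 < center:
        return (-1.0, -1.0)
    if x0 > center:
        return (1.0, 1.0)
    return (-1.0, 1.0)


def minkowski_sum(i, j):
    """Minkowski sum of two closed intervals: add the endpoints."""
    return (i[0] + j[0], i[1] + j[1])


def contains_zero(interval):
    """True if 0 lies in the closed interval, i.e. the minimum criterion holds."""
    return interval[0] <= 0.0 <= interval[1]


def subdiff_sum(x0):
    """Subdifferential of h(x) = |x| + |x - 1| at x0 via the sum rule."""
    return minkowski_sum(subdiff_abs_shifted(x0, 0.0), subdiff_abs_shifted(x0, 1.0))


at_zero = subdiff_sum(0.0)  # [-1, 1] + {-1}
at_half = subdiff_sum(0.5)  # {1} + {-1}
at_two = subdiff_sum(2.0)   # {1} + {1}
```

Here zero belongs to the subdifferential at 0 and at 0.5 but not at 2, which agrees with the fact that [math]\displaystyle{ |x|+|x-1| }[/math] attains its minimum value 1 exactly on [math]\displaystyle{ [0,1] }[/math].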

The subgradient

The concepts of subderivative and subdifferential can be generalized to functions of several variables. If [math]\displaystyle{ f:U\to\mathbb{R} }[/math] is a real-valued convex function defined on a convex open set in the Euclidean space [math]\displaystyle{ \mathbb{R}^n }[/math], a vector [math]\displaystyle{ v }[/math] in that space is called a subgradient at [math]\displaystyle{ x_0\in U }[/math] if for any [math]\displaystyle{ x\in U }[/math] one has that

- [math]\displaystyle{ f(x)-f(x_0)\ge v\cdot (x-x_0), }[/math]

where the dot denotes the dot product. The set of all subgradients at [math]\displaystyle{ x_0 }[/math] is called the subdifferential at [math]\displaystyle{ x_0 }[/math] and is denoted [math]\displaystyle{ \partial f(x_0) }[/math]. The subdifferential is always a nonempty convex compact set.
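The subgradient inequality can be checked on sample points, as in the following sketch. The choice of the [math]\displaystyle{ \ell_1 }[/math] norm as the convex function, the helper names, the random sample, and the tolerance are assumptions made for illustration. A check on finitely many points can refute a candidate subgradient but cannot prove that one is valid.

```python
import random


def l1_norm(x):
    """f(x) = |x_1| + ... + |x_n|, a convex function on R^n."""
    return sum(abs(xi) for xi in x)


def l1_subgradient(x0):
    """One subgradient of the l1 norm at x0: the sign of each coordinate (0 at zero)."""
    return [(xi > 0) - (xi < 0) for xi in x0]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def satisfies_inequality(f, x0, v, samples, tol=1e-12):
    """Check f(x) - f(x0) >= v . (x - x0) at every sample point x."""
    fx0 = f(x0)
    return all(
        f(x) - fx0 >= dot(v, [xi - x0i for xi, x0i in zip(x, x0)]) - tol
        for x in samples
    )


random.seed(0)  # fixed seed so the sample is reproducible
x0 = [1.5, 0.0, -2.0]
samples = [[random.uniform(-5.0, 5.0) for _ in x0] for _ in range(1000)]

v = l1_subgradient(x0)
ok_sign = satisfies_inequality(l1_norm, x0, v, samples)
# At the zero coordinate any value in [-1, 1] is allowed, so 0.5 also works.
ok_other = satisfies_inequality(l1_norm, x0, [1.0, 0.5, -1.0], samples)
# A value outside [-1, 1] at the zero coordinate violates the inequality.
bad = satisfies_inequality(l1_norm, x0, [1.0, 2.0, -1.0], samples)
```

In this example the subdifferential at `x0` is not a single vector: the second coordinate of a subgradient may be any number in [math]\displaystyle{ [-1,1] }[/math], because the function has a kink along that coordinate.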

These concepts generalize further to convex functions [math]\displaystyle{ f:U\to\mathbb{R} }[/math] on a convex set in a locally convex space [math]\displaystyle{ V }[/math]. A functional [math]\displaystyle{ v^* }[/math] in the dual space [math]\displaystyle{ V^* }[/math] is called a subgradient at [math]\displaystyle{ x_0 }[/math] in [math]\displaystyle{ U }[/math] if for all [math]\displaystyle{ x\in U }[/math],

- [math]\displaystyle{ f(x)-f(x_0)\ge v^*(x-x_0). }[/math]

The set of all subgradients at [math]\displaystyle{ x_0 }[/math] is called the subdifferential at [math]\displaystyle{ x_0 }[/math] and is again denoted [math]\displaystyle{ \partial f(x_0) }[/math]. The subdifferential is always a convex closed set. It can be an empty set; consider for example an unbounded operator, which is convex, but has no subgradient. If [math]\displaystyle{ f }[/math] is continuous, the subdifferential is nonempty.

History

The subdifferential of convex functions was introduced by Jean-Jacques Moreau and R. Tyrrell Rockafellar in the early 1960s. The generalized subdifferential for nonconvex functions was introduced by F. H. Clarke and R. T. Rockafellar in the early 1980s.[4]

References

- [1] Bubeck, S. (2014). Theory of Convex Optimization for Machine Learning. arXiv:1405.4980.

- [2] Rockafellar, R. T. (1970). Convex Analysis. Princeton University Press. p. 242 [Theorem 25.1]. ISBN 0-691-08069-0.

- [3] Lemaréchal, Claude; Hiriart-Urruty, Jean-Baptiste (2001). Fundamentals of Convex Analysis. Springer-Verlag Berlin Heidelberg. p. 183. ISBN 978-3-642-56468-0. https://archive.org/details/fundamentalsconv00hiri.

- [4] Clarke, Frank H. (1983). Optimization and nonsmooth analysis. New York: John Wiley & Sons. pp. xiii+308. ISBN 0-471-87504-X. https://archive.org/details/optimizationnons0000clar.

- Borwein, Jonathan; Lewis, Adrian S. (2010). Convex Analysis and Nonlinear Optimization : Theory and Examples (2nd ed.). New York: Springer. ISBN 978-0-387-31256-9.

- Hiriart-Urruty, Jean-Baptiste; Lemaréchal, Claude (2001). Fundamentals of Convex Analysis. Springer. ISBN 3-540-42205-6.

- Zălinescu, C. (2002). Convex analysis in general vector spaces. World Scientific Publishing Co., Inc. pp. xx+367. ISBN 981-238-067-1.

External links

- "Uses of [math]\displaystyle{ \lim \limits_{h\to 0} \frac{f(x+h)-f(x-h)}{2h} }[/math]". Stack Exchange. September 18, 2011. https://math.stackexchange.com/q/65569.
