Bernstein polynomial

In the mathematical field of numerical analysis, a Bernstein polynomial is a polynomial expressed as a linear combination of Bernstein basis polynomials. The idea is named after mathematician Sergei Natanovich Bernstein.

Polynomials in Bernstein form were first used by Bernstein in a constructive proof of the Weierstrass approximation theorem. With the advent of computer graphics, Bernstein polynomials, restricted to the interval [0, 1], became important in the form of Bézier curves.

A numerically stable way to evaluate polynomials in Bernstein form is de Casteljau's algorithm.
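As an illustration, a minimal Python sketch of de Casteljau's algorithm is given below. It assumes the polynomial is supplied as a list of its Bernstein coefficients; the function name `de_casteljau` and the argument names are chosen here for illustration only.

```python
def de_casteljau(beta, x):
    """Evaluate sum_v beta[v] * b_{v,n}(x), where n = len(beta) - 1."""
    if not beta:
        raise ValueError("need at least one Bernstein coefficient")
    work = [float(c) for c in beta]
    n = len(work) - 1
    # Each round replaces neighbouring coefficients by their convex
    # combination with weights (1 - x) and x, shortening the list by one.
    for r in range(1, n + 1):
        for i in range(n - r + 1):
            work[i] = (1.0 - x) * work[i] + x * work[i + 1]
    return work[0]


# The coefficients (0, 0, 1) select the basis polynomial x^2 of degree 2.
print(de_casteljau([0, 0, 1], 0.5))  # 0.25
```

For x in [0, 1] every step is a convex combination of previously computed values, which is the usual explanation for the good numerical behaviour of the scheme.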

Definition

Bernstein basis polynomials

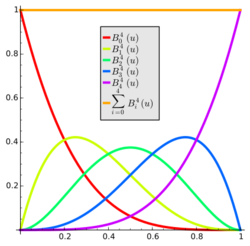

The n + 1 Bernstein basis polynomials of degree n are defined as

- [math]\displaystyle{ b_{\nu,n}(x) \mathrel{:}\mathrel{=} \binom{n}{\nu} x^{\nu} \left( 1 - x \right)^{n - \nu}, \quad \nu = 0, \ldots, n, }[/math]

where [math]\displaystyle{ \tbinom{n}{\nu} }[/math] is a binomial coefficient.

So, for example, [math]\displaystyle{ b_{2,5}(x) = \tbinom{5}{2}x^2(1-x)^3 = 10x^2(1-x)^3. }[/math]
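The definition can be transcribed directly into code. The following Python sketch uses the standard-library function `math.comb` for the binomial coefficient; the function name `bernstein_basis` is an illustrative choice, and returning 0 for an index outside 0, ..., n is a convention adopted here.

```python
from math import comb


def bernstein_basis(nu, n, x):
    """Value of the Bernstein basis polynomial b_{nu,n} at x."""
    if nu < 0 or nu > n:
        return 0.0  # convention for indices outside 0..n
    return comb(n, nu) * x**nu * (1 - x) ** (n - nu)


# b_{2,5}(x) = 10 x^2 (1 - x)^3, evaluated at x = 1/2: 10 * 0.25 * 0.125
print(bernstein_basis(2, 5, 0.5))  # 0.3125
```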

The first few Bernstein basis polynomials for blending 1, 2, 3 or 4 values together are:

- [math]\displaystyle{ \begin{align} b_{0,0}(x) & = 1, \\ b_{0,1}(x) & = 1 - x, & b_{1,1}(x) & = x \\ b_{0,2}(x) & = (1 - x)^2, & b_{1,2}(x) & = 2x(1 - x), & b_{2,2}(x) & = x^2 \\ b_{0,3}(x) & = (1 - x)^3, & b_{1,3}(x) & = 3x(1 - x)^2, & b_{2,3}(x) & = 3x^2(1 - x), & b_{3,3}(x) & = x^3 \end{align} }[/math]

The Bernstein basis polynomials of degree n form a basis for the vector space [math]\displaystyle{ \Pi_n }[/math] of polynomials of degree at most n with real coefficients.

Bernstein polynomials

A linear combination of Bernstein basis polynomials

- [math]\displaystyle{ B_n(x) \mathrel{:}\mathrel{=} \sum_{\nu=0}^{n} \beta_{\nu} b_{\nu,n}(x) }[/math]

is called a Bernstein polynomial or polynomial in Bernstein form of degree n.[1] The coefficients [math]\displaystyle{ \beta_\nu }[/math] are called Bernstein coefficients or Bézier coefficients.

The first few Bernstein basis polynomials from above in monomial form are:

- [math]\displaystyle{ \begin{align} b_{0,0}(x) & = 1, \\ b_{0,1}(x) & = 1 - 1x, & b_{1,1}(x) & = 0 + 1x \\ b_{0,2}(x) & = 1 - 2x + 1x^2, & b_{1,2}(x) & = 0 + 2x - 2x^2, & b_{2,2}(x) & = 0 + 0x + 1x^2 \\ b_{0,3}(x) & = 1 - 3x + 3x^2 - 1x^3, & b_{1,3}(x) & = 0 + 3x - 6x^2 + 3x^3, & b_{2,3}(x) & = 0 + 0x + 3x^2 - 3x^3, & b_{3,3}(x) & = 0 + 0x + 0x^2 + 1x^3 \end{align} }[/math]
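The monomial coefficients listed above can be generated by expanding the factor (1 − x)^(n − ν) with the binomial theorem. The Python sketch below does this; the function name `monomial_coefficients` and the coefficient ordering (constant term first) are assumptions made for the illustration.

```python
from math import comb


def monomial_coefficients(nu, n):
    """Return c[0..n] such that b_{nu,n}(x) = sum_l c[l] * x**l."""
    coeffs = [0] * (n + 1)
    for k in range(n - nu + 1):
        # (1 - x)^(n - nu) contributes comb(n - nu, k) * (-x)^k
        coeffs[nu + k] = comb(n, nu) * comb(n - nu, k) * (-1) ** k
    return coeffs


print(monomial_coefficients(1, 3))  # [0, 3, -6, 3]
```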

Properties

The Bernstein basis polynomials have the following properties:

- [math]\displaystyle{ b_{\nu, n}(x) = 0 }[/math], if [math]\displaystyle{ \nu \lt 0 }[/math] or [math]\displaystyle{ \nu \gt n. }[/math]

- [math]\displaystyle{ b_{\nu, n}(x) \ge 0 }[/math] for [math]\displaystyle{ x \in [0,\ 1]. }[/math]

- [math]\displaystyle{ b_{\nu, n}\left( 1 - x \right) = b_{n - \nu, n}(x). }[/math]

- [math]\displaystyle{ b_{\nu, n}(0) = \delta_{\nu, 0} }[/math] and [math]\displaystyle{ b_{\nu, n}(1) = \delta_{\nu, n} }[/math] where [math]\displaystyle{ \delta }[/math] is the Kronecker delta function: [math]\displaystyle{ \delta_{ij} = \begin{cases} 0 &\text{if } i \neq j, \\ 1 &\text{if } i=j. \end{cases} }[/math]

- [math]\displaystyle{ b_{\nu, n}(x) }[/math] has a root with multiplicity [math]\displaystyle{ \nu }[/math] at point [math]\displaystyle{ x = 0 }[/math] (note: if [math]\displaystyle{ \nu = 0 }[/math], there is no root at 0).

- [math]\displaystyle{ b_{\nu, n}(x) }[/math] has a root with multiplicity [math]\displaystyle{ \left( n - \nu \right) }[/math] at point [math]\displaystyle{ x = 1 }[/math] (note: if [math]\displaystyle{ \nu = n }[/math], there is no root at 1).

- The derivative can be written as a combination of two polynomials of lower degree: [math]\displaystyle{ b'_{\nu, n}(x) = n \left( b_{\nu - 1, n - 1}(x) - b_{\nu, n - 1}(x) \right). }[/math]

- The k-th derivative at 0: [math]\displaystyle{ b_{\nu, n}^{(k)}(0) = \frac{n!}{(n - k)!} \binom{k}{\nu} (-1)^{\nu + k}. }[/math]

- The k-th derivative at 1: [math]\displaystyle{ b_{\nu, n}^{(k)}(1) = (-1)^k b_{n - \nu, n}^{(k)}(0). }[/math]

- The expansion of a Bernstein basis polynomial in monomials is [math]\displaystyle{ b_{\nu,n}(x) = \binom{n}{\nu}\sum_{k=0}^{n-\nu} \binom{n-\nu}{k}(-1)^{k} x^{\nu+k} = \sum_{\ell=\nu}^n \binom{n}{\ell}\binom{\ell}{\nu}(-1)^{\ell-\nu}x^\ell, }[/math] and by the inverse binomial transformation, the reverse transformation is[2] [math]\displaystyle{ x^k = \sum_{i=0}^{n-k} \binom{n-k}{i} \frac{1}{\binom{n}{i}} b_{n-i,n}(x) = \frac{1}{\binom{n}{k}} \sum_{j=k}^n \binom{j}{k}b_{j,n}(x). }[/math]

- The indefinite integral is given by [math]\displaystyle{ \int b_{\nu, n}(x) \, dx = \frac{1}{n+1} \sum_{j=\nu+1}^{n+1} b_{j, n+1}(x). }[/math]

- The definite integral is constant for a given n: [math]\displaystyle{ \int_0^1 b_{\nu, n}(x) \, dx = \frac{1}{n+1} \quad\ \, \text{for all } \nu = 0,1, \dots, n. }[/math]

- If [math]\displaystyle{ n \ne 0 }[/math], then [math]\displaystyle{ b_{\nu, n}(x) }[/math] has a unique local maximum on the interval [math]\displaystyle{ [0,\, 1] }[/math] at [math]\displaystyle{ x = \frac{\nu}{n} }[/math]. This maximum takes the value [math]\displaystyle{ \nu^\nu n^{-n} \left( n - \nu \right)^{n - \nu} {n \choose \nu}. }[/math]

- The Bernstein basis polynomials of degree [math]\displaystyle{ n }[/math] form a partition of unity: [math]\displaystyle{ \sum_{\nu = 0}^n b_{\nu, n}(x) = \sum_{\nu = 0}^n {n \choose \nu} x^\nu \left(1 - x\right)^{n - \nu} = \left(x + \left( 1 - x \right) \right)^n = 1. }[/math]

- By taking the first [math]\displaystyle{ x }[/math]-derivative of [math]\displaystyle{ (x+y)^n }[/math], treating [math]\displaystyle{ y }[/math] as constant, then substituting the value [math]\displaystyle{ y = 1-x }[/math], it can be shown that [math]\displaystyle{ \sum_{\nu=0}^{n} \nu b_{\nu, n}(x) = nx. }[/math]

- Similarly, taking the second [math]\displaystyle{ x }[/math]-derivative of [math]\displaystyle{ (x+y)^n }[/math] and again substituting [math]\displaystyle{ y = 1-x }[/math] shows that [math]\displaystyle{ \sum_{\nu=1}^{n}\nu(\nu-1) b_{\nu, n}(x) = n(n-1)x^2. }[/math]

- A Bernstein basis polynomial of degree n − 1 can always be written as a linear combination of basis polynomials of degree n: [math]\displaystyle{ b_{\nu, n - 1}(x) = \frac{n - \nu}{n} b_{\nu, n}(x) + \frac{\nu + 1}{n} b_{\nu + 1, n}(x). }[/math]

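- The expansion of the Chebyshev polynomials of the first kind, shifted to the interval [0, 1], into the Bernstein basis is[3] [math]\displaystyle{ T_n(u) = (2n-1)!! \sum_{k=0}^n \frac{(-1)^{n-k}}{(2k-1)!!(2n-2k-1)!!} b_{k,n}(u), }[/math] with the convention [math]\displaystyle{ (-1)!! = 1 }[/math]; for n = 1 the right-hand side is 2u − 1.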
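Several of the properties above are easy to check numerically. The following Python sketch tests the partition of unity, the first-moment identity, the symmetry relation, the derivative formula (against a central difference), and the degree-elevation formula at one sample point. The helper names `b` and `check_properties`, the step size `h`, and the chosen sample points are illustrative assumptions; such a check is a sanity test, not a proof.

```python
from math import comb


def b(nu, n, x):
    """Bernstein basis polynomial, taken to be 0 for nu outside 0..n."""
    if nu < 0 or nu > n:
        return 0.0
    return comb(n, nu) * x**nu * (1 - x) ** (n - nu)


def check_properties(n, x, h=1e-6):
    """Largest absolute residual among the identities tested at x."""
    residuals = []
    # Partition of unity and first moment.
    residuals.append(abs(sum(b(v, n, x) for v in range(n + 1)) - 1.0))
    residuals.append(abs(sum(v * b(v, n, x) for v in range(n + 1)) - n * x))
    for v in range(n + 1):
        # Symmetry: b_{v,n}(1 - x) = b_{n-v,n}(x).
        residuals.append(abs(b(v, n, 1 - x) - b(n - v, n, x)))
        # Derivative formula compared with a central difference quotient.
        numeric = (b(v, n, x + h) - b(v, n, x - h)) / (2 * h)
        exact = n * (b(v - 1, n - 1, x) - b(v, n - 1, x))
        residuals.append(abs(numeric - exact))
    for v in range(n):
        # Degree elevation from degree n - 1 to degree n.
        lifted = (n - v) / n * b(v, n, x) + (v + 1) / n * b(v + 1, n, x)
        residuals.append(abs(b(v, n - 1, x) - lifted))
    return max(residuals)


print(check_properties(5, 0.3))
```

The printed residual should be small, limited mainly by the finite-difference approximation of the derivative.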

Approximating continuous functions

Let ƒ be a continuous function on the interval [0, 1]. Consider the Bernstein polynomial

- [math]\displaystyle{ B_n(f)(x) = \sum_{\nu = 0}^n f\left( \frac{\nu}{n} \right) b_{\nu,n}(x). }[/math]

It can be shown that

- [math]\displaystyle{ \lim_{n \to \infty}{ B_n(f) } = f }[/math]

uniformly on the interval [0, 1].[4][1][5][6]

Bernstein polynomials thus provide one way to prove the Weierstrass approximation theorem that every real-valued continuous function on a real interval [a, b] can be uniformly approximated by polynomial functions over [math]\displaystyle{ \mathbb R }[/math].[7]
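The convergence can be observed numerically. The Python sketch below evaluates B_n(f) for the test function f(x) = |x − 1/2| and reports the largest error on a uniform grid for increasing n. The function names, the grid size, and the choice of test function are assumptions made for the illustration.

```python
from math import comb


def bernstein_approx(f, n, x):
    """Evaluate B_n(f)(x) = sum_v f(v/n) * b_{v,n}(x)."""
    return sum(
        f(v / n) * comb(n, v) * x**v * (1 - x) ** (n - v) for v in range(n + 1)
    )


def max_error(f, n, samples=201):
    """Largest |B_n(f)(x) - f(x)| over a uniform grid on [0, 1]."""
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(bernstein_approx(f, n, x) - f(x)) for x in grid)


def f(x):
    return abs(x - 0.5)  # continuous, but not differentiable at 1/2


for n in (4, 16, 64, 256):
    print(n, max_error(f, n))
```

The reported errors decrease as n grows, although slowly: for this kind of non-smooth function the convergence of Bernstein polynomials is known to be slow compared with other approximation schemes.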

A more general statement for a function with continuous kth derivative is

- [math]\displaystyle{ {\left\| B_n(f)^{(k)} \right\|}_\infty \le \frac{ (n)_k }{ n^k } \left\| f^{(k)} \right\|_\infty \quad\ \text{and} \quad\ \left\| f^{(k)}- B_n(f)^{(k)} \right\|_\infty \to 0, }[/math]

where additionally

- [math]\displaystyle{ \frac{ (n)_k }{ n^k } = \left( 1 - \frac{0}{n} \right) \left( 1 - \frac{1}{n} \right) \cdots \left( 1 - \frac{k - 1}{n} \right) }[/math]

is an eigenvalue of [math]\displaystyle{ B_n }[/math]; the corresponding eigenfunction is a polynomial of degree k.

Probabilistic proof

This proof follows Bernstein's original proof of 1912.[8] See also Feller (1966) or Koralov & Sinai (2007).[9][5]

Suppose K is a random variable distributed as the number of successes in n independent Bernoulli trials with probability x of success on each trial; in other words, K has a binomial distribution with parameters n and x. Then we have the expected value [math]\displaystyle{ \operatorname{\mathcal E}\left[\frac{K}{n}\right] = x\ }[/math] and

- [math]\displaystyle{ p(K) = {n \choose K} x^{K} \left( 1 - x \right)^{n - K} = b_{K,n}(x) }[/math]

By the weak law of large numbers of probability theory,

- [math]\displaystyle{ \lim_{n \to \infty}{ P\left( \left| \frac{K}{n} - x \right|\gt \delta \right) } = 0 }[/math]

for every δ > 0. Moreover, this relation holds uniformly in x, which can be seen from its proof via Chebyshev's inequality, taking into account that the variance of [math]\displaystyle{ \tfrac{K}{n} }[/math], equal to [math]\displaystyle{ \tfrac{x(1-x)}{n} }[/math], is bounded from above by [math]\displaystyle{ \tfrac{1}{4n} }[/math] irrespective of x.
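Written out, the Chebyshev bound behind this uniformity statement reads as follows (a sketch using only the mean and variance stated above):

- [math]\displaystyle{ P\left( \left| \frac{K}{n} - x \right| \gt \delta \right) \le \frac{x(1-x)}{n\delta^2} \le \frac{1}{4n\delta^2}. }[/math]

The right-hand side does not depend on x and tends to 0 as n → ∞.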

Because ƒ, being continuous on a closed bounded interval, must be uniformly continuous on that interval, one infers a statement of the form

- [math]\displaystyle{ \lim_{n \to \infty}{ P\left( \left| f\left( \frac{K}{n} \right) - f\left( x \right) \right| \gt \varepsilon \right) } = 0 }[/math]

uniformly in x. Taking into account that ƒ is bounded (on the given interval) one gets for the expectation

- [math]\displaystyle{ \lim_{n \to \infty}{ \operatorname{\mathcal E}\left( \left| f\left( \frac{K}{n} \right) - f\left( x \right) \right| \right) } = 0 }[/math]

uniformly in x. To this end one splits the sum for the expectation in two parts. On one part the difference does not exceed ε; this part cannot contribute more than ε. On the other part the difference exceeds ε, but does not exceed 2M, where M is an upper bound for |ƒ(x)|; this part cannot contribute more than 2M times the small probability that the difference exceeds ε.

Finally, one observes that the absolute value of the difference between expectations never exceeds the expectation of the absolute value of the difference, and

- [math]\displaystyle{ \operatorname{\mathcal E}\left[f\left(\frac{K}{n}\right)\right] = \sum_{K=0}^n f\left(\frac{K}{n}\right) p(K) = \sum_{K=0}^n f\left(\frac{K}{n}\right) b_{K,n}(x) = B_n(f)(x) }[/math]

Elementary proof

The probabilistic proof can also be rephrased in an elementary way, using the underlying probabilistic ideas but proceeding by direct verification:[10][6][11][12][13]

The following identities can be verified:

- (1) [math]\displaystyle{ \sum_k {n \choose k} x^k (1-x)^{n-k} = 1 }[/math] ("probability")

- (2) [math]\displaystyle{ \sum_k {k\over n} {n \choose k} x^k (1-x)^{n-k} = x }[/math] ("mean")

- (3) [math]\displaystyle{ \sum_k \left( x -{k\over n}\right)^2 {n \choose k} x^k (1-x)^{n-k} = {x(1-x)\over n} }[/math] ("variance")

In fact, by the binomial theorem

- [math]\displaystyle{ (1+t)^n = \sum_k {n \choose k} t^k, }[/math]

and the operator [math]\displaystyle{ t\frac{d}{dt} }[/math] can be applied to this equation once and then a second time. The identities (1), (2), and (3), numbered in the order listed above, then follow using the substitution [math]\displaystyle{ t = x/ (1 - x) }[/math].
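The computation can be sketched explicitly. Applying [math]\displaystyle{ t\frac{d}{dt} }[/math] to the binomial theorem once and then a second time gives

- [math]\displaystyle{ \sum_k k {n \choose k} t^k = nt(1+t)^{n-1}, \qquad \sum_k k^2 {n \choose k} t^k = nt(1+t)^{n-1} + n(n-1)t^2(1+t)^{n-2}. }[/math]

Substituting [math]\displaystyle{ t = x/(1-x) }[/math], so that [math]\displaystyle{ 1 + t = 1/(1-x) }[/math], and multiplying through by [math]\displaystyle{ (1-x)^n }[/math] turns the binomial theorem into the "probability" identity and the two equations above into

- [math]\displaystyle{ \sum_k k {n \choose k} x^k (1-x)^{n-k} = nx, \qquad \sum_k k^2 {n \choose k} x^k (1-x)^{n-k} = nx + n(n-1)x^2. }[/math]

Dividing the first of these by n gives the "mean" identity. For the "variance" identity, expanding [math]\displaystyle{ \left(x - {k \over n}\right)^2 = x^2 - 2x{k \over n} + {k^2 \over n^2} }[/math] and using all three sums gives

- [math]\displaystyle{ x^2 - 2x \cdot x + \frac{nx + n(n-1)x^2}{n^2} = \frac{x(1-x)}{n}. }[/math]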

Within these three identities, use the above basis polynomial notation

- [math]\displaystyle{ b_{k,n}(x) = {n\choose k} x^k (1-x)^{n-k}, }[/math]

and let

- [math]\displaystyle{ f_n(x) = \sum_k f(k/n)\, b_{k,n}(x). }[/math]

Thus, by identity (1)

- [math]\displaystyle{ f_n(x) - f(x) = \sum_k [f(k/n) - f(x)] \,b_{k,n}(x), }[/math]

so that

- [math]\displaystyle{ |f_n(x) - f(x)| \le \sum_k |f(k/n) - f(x)| \, b_{k,n}(x). }[/math]

Since f is uniformly continuous, given [math]\displaystyle{ \varepsilon \gt 0 }[/math], there is a [math]\displaystyle{ \delta \gt 0 }[/math] such that [math]\displaystyle{ |f(a) - f(b)| \lt \varepsilon }[/math] whenever [math]\displaystyle{ |a-b| \lt \delta }[/math]. Moreover, by continuity, [math]\displaystyle{ M= \sup |f| \lt \infty }[/math]. But then

- [math]\displaystyle{ |f_n(x) - f(x)| \le \sum_{|x -{k\over n}|\lt \delta} |f(k/n) - f(x)|\, b_{k,n}(x) + \sum_{|x -{k\over n}|\ge \delta} |f(k/n) - f(x)|\, b_{k,n}(x) . }[/math]

The first sum is less than ε. On the other hand, by identity (3) above, and since [math]\displaystyle{ |x - k/n| \ge \delta }[/math], the second sum is bounded by [math]\displaystyle{ 2M }[/math] times

- [math]\displaystyle{ \sum_{|x - k/n|\ge \delta} b_{k,n}(x) \le \sum_k \delta^{-2} \left(x -{k\over n}\right)^2 b_{k,n}(x) = \delta^{-2} {x(1-x)\over n} \le {1\over4} \delta^{-2} n^{-1}. }[/math]

It follows that the polynomials [math]\displaystyle{ f_n }[/math] tend to f uniformly.

Generalizations to higher dimension

Bernstein polynomials can be generalized to k dimensions; the resulting polynomials have the form [math]\displaystyle{ B_{i_1}(x_1) B_{i_2}(x_2) \cdots B_{i_k}(x_k) }[/math].[1] In the simplest case only products of the unit interval [0,1] are considered; but, using affine transformations of the line, Bernstein polynomials can also be defined for products [math]\displaystyle{ [a_1, b_1] \times [a_2, b_2] \times \cdots \times [a_k, b_k] }[/math]. For a continuous function f on the k-fold product of the unit interval, the proof that [math]\displaystyle{ f(x_1, x_2, \dots, x_k) }[/math] can be uniformly approximated by

- [math]\displaystyle{ \sum_{i_1} \sum_{i_2} \cdots \sum_{i_k} {n_1\choose i_1} {n_2\choose i_2} \cdots {n_k\choose i_k} f\left({i_1\over n_1}, {i_2\over n_2}, \dots, {i_k\over n_k}\right) x_1^{i_1} (1-x_1)^{n_1-i_1} x_2^{i_2} (1-x_2)^{n_2-i_2} \cdots x_k^{i_k} (1-x_k)^{n_k - i_k} }[/math]

is a straightforward extension of Bernstein's proof in one dimension.[14]
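For k = 2 the approximating sum can be sketched in Python as follows. The function names `basis` and `bernstein_approx_2d` and the test function are illustrative assumptions; the degrees n1 and n2 may be chosen independently for the two variables.

```python
from math import comb


def basis(i, n, x):
    """One-dimensional Bernstein basis polynomial b_{i,n}(x)."""
    return comb(n, i) * x**i * (1 - x) ** (n - i)


def bernstein_approx_2d(f, n1, n2, x1, x2):
    """Tensor-product Bernstein approximation of f on the unit square."""
    total = 0.0
    for i1 in range(n1 + 1):
        w1 = basis(i1, n1, x1)
        for i2 in range(n2 + 1):
            total += f(i1 / n1, i2 / n2) * w1 * basis(i2, n2, x2)
    return total


def g(u, v):
    return u * v  # bilinear, so it is reproduced up to rounding


print(bernstein_approx_2d(g, 8, 8, 0.25, 0.5))
```

Because the one-dimensional operator reproduces linear functions, the bilinear test function is recovered up to rounding error (here the value 0.25 · 0.5 = 0.125); for a general continuous f the result is only an approximation that improves as n1 and n2 grow.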

See also

- Polynomial interpolation

- Newton form

- Lagrange form

- Binomial QMF (also known as Daubechies wavelet)

Notes

- [1] Lorentz 1953

- [2] Mathar, R. J. (2018). "Orthogonal basis function over the unit circle with the minimax property". Appendix B. arXiv:1802.09518 [math.NA].

- [3] Rababah, Abedallah (2003). "Transformation of Chebyshev-Bernstein Polynomial Basis". Comp. Meth. Appl. Math. 3 (4): 608–622. doi:10.2478/cmam-2003-0038.

- [4] Natanson (1964) p. 6

- [5] Feller 1966

- [6] Beals 2004

- [7] Natanson (1964) p. 3

- [8] Bernstein 1912

- [9] Koralov, L.; Sinai, Y. (2007). "Probabilistic proof of the Weierstrass theorem". Theory of probability and random processes (2nd ed.). Springer. p. 29.

- [10] Lorentz 1953, pp. 5–6

- [11] Goldberg 1964

- [12] Akhiezer 1956

- [13] Burkill 1959

- [14] Hildebrandt, T. H.; Schoenberg, I. J. (1933), "On linear functional operations and the moment problem for a finite interval in one or several dimensions", Annals of Mathematics 34 (2): 327, doi:10.2307/1968205, https://www.jstor.org/stable/1968205

References

- Bernstein, S. (1912), "Démonstration du théorème de Weierstrass fondée sur le calcul des probabilités (Proof of the theorem of Weierstrass based on the calculus of probabilities)", Comm. Kharkov Math. Soc. 13: 1–2, https://www.mn.uio.no/math/english/people/aca/michaelf/translations/bernstein_english.pdf, English translation

- Lorentz, G. G. (1953), Bernstein Polynomials, University of Toronto Press

- Akhiezer, N. I. (1956) (in ru), Theory of approximation, Frederick Ungar, pp. 30–31, https://archive.org/details/theoryofapproxim00akhi/page/30/mode/2up?q=bernstein, Russian edition first published in 1940

- Burkill, J. C. (1959), Lectures On Approximation By Polynomials, Bombay: Tata Institute of Fundamental Research, pp. 7–8, http://www.math.tifr.res.in/~publ/ln/tifr16.pdf

- Goldberg, Richard R. (1964), Methods of real analysis, John Wiley & Sons, pp. 263–265, https://archive.org/details/in.ernet.dli.2015.134296/page/n243/mode/2up?q=bernstein

- Caglar, Hakan; Akansu, Ali N. (July 1993). "A generalized parametric PR-QMF design technique based on Bernstein polynomial approximation". IEEE Transactions on Signal Processing 41 (7): 2314–2321. doi:10.1109/78.224242. Bibcode: 1993ITSP...41.2314C.

- Hazewinkel, Michiel, ed. (2001), "Bernstein polynomials", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=B/b015730

- Natanson, I.P. (1964). Constructive function theory. Volume I: Uniform approximation. New York: Frederick Ungar.

- Feller, William (1966), An introduction to probability theory and its applications, Vol. II, John Wiley & Sons, pp. 149–150, 218–222

- Beals, Richard (2004), Analysis. An introduction, Cambridge University Press, pp. 95–98, ISBN 0521600472

External links

- Kac, Mark (1938). "Une remarque sur les polynomes de M. S. Bernstein". Studia Mathematica 7: 49–51. doi:10.4064/sm-7-1-49-51.

- Kelisky, Richard Paul; Rivlin, Theodore Joseph (1967). "Iteratives of Bernstein Polynomials". Pacific Journal of Mathematics 21 (3): 511. doi:10.2140/pjm.1967.21.511.

- Stark, E. L. (1981). "Bernstein Polynome, 1912-1955". In Butzer, P. L. ISNM60. pp. 443–461. doi:10.1007/978-3-0348-9369-5_40. ISBN 978-3-0348-9369-5.

- Petrone, Sonia (1999). "Random Bernstein polynomials". Scand. J. Stat. 26 (3): 373–393. doi:10.1111/1467-9469.00155.

- Oruc, Halil; Phillips, George M. (1999). "A generalization of the Bernstein Polynomials". Proceedings of the Edinburgh Mathematical Society 42 (2): 403–413. doi:10.1017/S0013091500020332.

- Joy, Kenneth I. (2000). "Bernstein Polynomials". http://www.idav.ucdavis.edu/education/CAGDNotes/Bernstein-Polynomials.pdf. from University of California, Davis. Note the error in the summation limits in the first formula on page 9.

- Idrees Bhatti, M.; Bracken, P. (2007). "Solutions of differential equations in a Bernstein Polynomial basis". J. Comput. Appl. Math. 205 (1): 272–280. doi:10.1016/j.cam.2006.05.002. Bibcode: 2007JCoAM.205..272I.

- Casselman, Bill (2008). "From Bézier to Bernstein". https://www.ams.org/featurecolumn/archive/bezier.html. Feature Column from American Mathematical Society

- Acikgoz, Mehmet; Araci, Serkan (2010). "On the generating function for Bernstein Polynomials". AIP Conference Proceedings 1281 (1): 1141. doi:10.1063/1.3497855. Bibcode: 2010AIPC.1281.1141A.

- Doha, E. H.; Bhrawy, A. H.; Saker, M. A. (2011). "Integrals of Bernstein polynomials: An application for the solution of high even-order differential equations". Appl. Math. Lett. 24 (4): 559–565. doi:10.1016/j.aml.2010.11.013.

- Farouki, Rida T. (2012). "The Bernstein polynomial basis: a centennial retrospective". Comp. Aid. Geom. Des. 29 (6): 379–419. doi:10.1016/j.cagd.2012.03.001.

- Chen, Xiaoyan; Tan, Jieqing; Liu, Zhi; Xie, Jin (2017). "Approximations of functions by a new family of generalized Bernstein operators". J. Math. Ann. Applic. 450: 244–261. doi:10.1016/j.jmaa.2016.12.075.

- Weisstein, Eric W. "Bernstein Polynomial". http://mathworld.wolfram.com/BernsteinPolynomial.html.
