Trie

| Trie | |||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Type | Tree | ||||||||||||||||||||

| Invented | 1960 | ||||||||||||||||||||

| Invented by | Edward Fredkin, Axel Thue, and René de la Briandais | ||||||||||||||||||||

| Time complexity in big O notation | |||||||||||||||||||||

| |||||||||||||||||||||

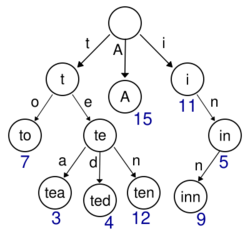

In computer science, a trie (/ˈtraɪ/, /ˈtriː/), also called digital tree or prefix tree,[1] is a type of k-ary search tree, a tree data structure used for locating specific keys from within a set. These keys are most often strings, with links between nodes defined not by the entire key, but by individual characters. In order to access a key (to recover its value, change it, or remove it), the trie is traversed depth-first, following the links between nodes, which represent each character in the key.

Unlike a binary search tree, nodes in the trie do not store their associated key. Instead, a node's position in the trie defines the key with which it is associated. This distributes the value of each key across the data structure, and means that not every node necessarily has an associated value.

All the children of a node have a common prefix of the string associated with that parent node, and the root is associated with the empty string. This task of storing data accessible by its prefix can be accomplished in a memory-optimized way by employing a radix tree.

Though tries can be keyed by character strings, they need not be. The same algorithms can be adapted for ordered lists of any underlying type, e.g. permutations of digits or shapes. In particular, a bitwise trie is keyed on the individual bits making up a piece of fixed-length binary data, such as an integer or memory address. The key lookup complexity of a trie remains proportional to the key size. Specialized trie implementations such as compressed tries are used to deal with the enormous space requirement of a trie in naive implementations.

History, etymology, and pronunciation

The idea of a trie for representing a set of strings was first abstractly described by Axel Thue in 1912.[2][3] Tries were first described in a computer context by René de la Briandais in 1959.[4][3][5]:{{{1}}}

The idea was independently described in 1960 by Edward Fredkin,[6] who coined the term trie, pronouncing it /ˈtriː/ (as "tree"), after the middle syllable of retrieval.[7][8] However, other authors pronounce it /ˈtraɪ/ (as "try"), in an attempt to distinguish it verbally from "tree".[7][8][3]

Overview

Tries are a form of string-indexed look-up data structure, which is used to store a dictionary list of words that can be searched on in a manner that allows for efficient generation of completion lists.[9][10]:{{{1}}} A prefix trie is an ordered tree data structure used in the representation of a set of strings over a finite alphabet set, which allows efficient storage of words with common prefixes.[1]

Tries can be efficacious on string-searching algorithms such as predictive text, approximate string matching, and spell checking in comparison to a binary search trees.[11][8][12]:358 A trie can be seen as a tree-shaped deterministic finite automaton.[13]

Operations

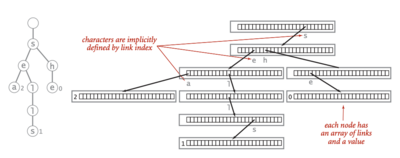

Tries support various operations: insertion, deletion, and lookup of a string key. Tries are composed of nodes that contain links, which either point to other suffix child nodes or null. As for every tree, each node but the root is pointed to by only one other node, called its parent. Each node contains as many links as the number of characters in the applicable alphabet (although tries tend to have a substantial number of null links). In some cases, the alphabet used is simply that of the character encoding—resulting in, for example, a size of 256 in the case of (unsigned) ASCII.[14]:{{{1}}}

The null links within the children of a node emphasize the following characteristics:[14]:734[5]:336

- Characters and string keys are implicitly stored in the trie, and include a character sentinel value indicating string termination.

- Each node contains one possible link to a prefix of strong keys of the set.

A basic structure type of nodes in the trie is as follows; [math]\displaystyle{ \text{Node} }[/math] may contain an optional [math]\displaystyle{ \text{Value} }[/math], which is associated with each key stored in the last character of string, or terminal node.

structure Node

Children Node[Alphabet-Size]

Is-Terminal Boolean

Value Data-Type

end structure

|

Searching

Searching for a value in a trie is guided by the characters in the search string key, as each node in the trie contains a corresponding link to each possible character in the given string. Thus, following the string within the trie yields the associated value for the given string key. A null link during the search indicates the inexistence of the key.[14]:732-733

The following pseudocode implements the search procedure for a given string key in a rooted trie x.[15]:135

Trie-Find(x, key)

for 0 ≤ i < key.length do

if x.Children[key[i]] = nil then

return false

end if

x := x.Children[key[i]]

repeat

return x.Value

|

In the above pseudocode, x and key correspond to the pointer of trie's root node and the string key respectively. The search operation, in a standard trie, takes [math]\displaystyle{ O(\text{dm}) }[/math] time, where [math]\displaystyle{ \text{m} }[/math] is the size of the string parameter [math]\displaystyle{ \text{key} }[/math], and [math]\displaystyle{ \text{d} }[/math] corresponds to the alphabet size.[16]:{{{1}}} Binary search trees, on the other hand, take [math]\displaystyle{ O(m \log n) }[/math] in the worst case, since the search depends on the height of the tree ([math]\displaystyle{ \log n }[/math]) of the BST (in case of balanced trees), where [math]\displaystyle{ \text{n} }[/math] and [math]\displaystyle{ \text{m} }[/math] being number of keys and the length of the keys.[12]:358

The trie occupies less space in comparison with a BST in the case of a large number of short strings, since nodes share common initial string subsequences and store the keys implicitly.[12]:358 The terminal node of the tree contains a non-null value, and it is a search hit if the associated value is found in the trie, and search miss if it is not.[14]:733

Insertion

Insertion into trie is guided by using the character sets as indexes to the children array until the last character of the string key is reached.[14]:733-734 Each node in the trie corresponds to one call of the radix sorting routine, as the trie structure reflects the execution of pattern of the top-down radix sort.[15]:135

1 2 3 4 5 6 7 8 9 |

Trie-Insert(x, key, value)

for 0 ≤ i < key.length do

if x.Children[key[i]] = nil then

x.Children[key[i]] := Node()

end if

x := x.Children[key[i]]

repeat

x.Value := value

x.Is-Terminal := True

|

If a null link is encountered prior to reaching the last character of the string key, a new node is created (line 3).[14]:745 The value of the terminal node is assigned to the input value; therefore, if the former was non-null at the time of insertion, it is substituted with the new value.

Deletion

Deletion of a key–value pair from a trie involves finding the terminal node with the corresponding string key, marking the terminal indicator and value to false and null correspondingly.[14]:740

The following is a recursive procedure for removing a string key from rooted trie (x).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

Trie-Delete(x, key)

if key = nil then

if x.Is-Terminal = True then

x.Is-Terminal := False

x.Value := nil

end if

for 0 ≤ i < x.Children.length

if x.Children[i] != nil

return x

end if

repeat

return nil

end if

x.Children[key[0]] := Trie-Delete(x.Children[key[0]], key[1:])

return x

|

The procedure begins by examining the key; null denotes the arrival of a terminal node or end of a string key. If the node is terminal it has no children, it is removed from the trie (line 14). However, an end of string key without the node being terminal indicates that the key does not exist, thus the procedure does not modify the trie. The recursion proceeds by incrementing key's index.

Replacing other data structures

Replacement for hash tables

A trie can be used to replace a hash table, over which it has the following advantages:[12]:358

- Searching for a node with an associated key of size [math]\displaystyle{ m }[/math] has the complexity of [math]\displaystyle{ O(m) }[/math], whereas an imperfect hash function may have numerous colliding keys, and the worst-case lookup speed of such a table would be [math]\displaystyle{ O(N) }[/math], where [math]\displaystyle{ N }[/math] denotes the total number of nodes within the table.

- Tries do not need a hash function for the operation, unlike a hash table; there are also no collisions of different keys in a trie.

- Buckets in a trie, which are analogous to hash table buckets that store key collisions, are necessary only if a single key is associated with more than one value.

- String keys within the trie can be sorted using a predetermined alphabetical ordering.

However, tries are less efficient than a hash table when the data is directly accessed on a secondary storage device such as a hard disk drive that has higher random access time than the main memory.[6] Tries are also disadvantageous when the key value cannot be easily represented as string, such as floating point numbers where multiple representations are possible (e.g. 1 is equivalent to 1.0, +1.0, 1.00, etc.),[12]:359 however it can be unambiguously represented as a binary number in IEEE 754, in comparison to two's complement format.[17]

Implementation strategies

Tries can be represented in several ways, corresponding to different trade-offs between memory use and speed of the operations.[5]:341 Using a vector of pointers for representing a trie consumes enormous space; however, memory space can be reduced at the expense of running time if a singly linked list is used for each node vector, as most entries of the vector contains [math]\displaystyle{ \text{nil} }[/math].[3]:495

Techniques such as alphabet reduction may alleviate the high space complexity by reinterpreting the original string as a long string over a smaller alphabet i.e. a string of n bytes can alternatively be regarded as a string of 2n four-bit units and stored in a trie with sixteen pointers per node. However, lookups need to visit twice as many nodes in the worst-case, although space requirements go down by a factor of eight.[5]:347–352 Other techniques include storing a vector of 256 ASCII pointers as a bitmap of 256 bits representing ASCII alphabet, which reduces the size of individual nodes dramatically.[18]

Bitwise tries

Bitwise tries are used to address the enormous space requirement for the trie nodes in a naive simple pointer vector implementations. Each character in the string key set is represented via individual bits, which are used to traverse the trie over a string key. The implementations for these types of trie use vectorized CPU instructions to find the first set bit in a fixed-length key input (e.g. GCC's __builtin_clz() intrinsic function). Accordingly, the set bit is used to index the first item, or child node, in the 32- or 64-entry based bitwise tree. Search then proceeds by testing each subsequent bit in the key.[19]

This procedure is also cache-local and highly parallelizable due to register independency, and thus performant on out-of-order execution CPUs.[19]

Compressed tries

Radix tree, also known as a compressed trie, is a space-optimized variant of a trie in which nodes with only one child get merged with its parents; elimination of branches of the nodes with a single child results in better in both space and time metrics.[20][21]:{{{1}}} This works best when the trie remains static and set of keys stored are very sparse within their representation space.[22]:{{{1}}}

One more approach is to "pack" the trie, in which a space-efficient implementation of a sparse packed trie applied to automatic hyphenation, in which the descendants of each node may be interleaved in memory.[8]

Patricia trees

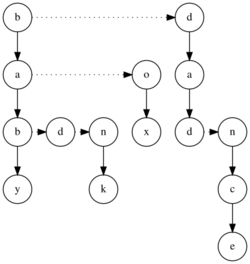

Patricia trees are a particular implementation of the compressed binary trie that uses the binary encoding of the string keys in its representation.[23][15]:{{{1}}} Every node in a Patricia tree contains an index, known as a "skip number", that stores the node's branching index to avoid empty subtrees during traversal.[15]:140-141 A naive implementation of a trie consumes immense storage due to larger number of leaf-nodes caused by sparse distribution of keys; Patricia trees can be efficient for such cases.[15]:142[24]:3

A representation of a Patricia tree is shown to the right. Each index value adjacent to the nodes represents the "skip number"—the index of the bit with which branching is to be decided.[24]:{{{1}}} The skip number 1 at node 0 corresponds to the position 1 in the binary encoded ASCII where the leftmost bit differed in the key set [math]\displaystyle{ X }[/math].[24]:3-4 The skip number is crucial for search, insertion, and deletion of nodes in the Patricia tree, and a bit masking operation is performed during every iteration.[15]:143

Applications

Trie data structures are commonly used in predictive text or autocomplete dictionaries, and approximate matching algorithms.[11] Tries enable faster searches, occupy less space, especially when the set contains large number of short strings, thus used in spell checking, hyphenation applications and longest prefix match algorithms.[8][12]:{{{1}}} However, if storing dictionary words is all that is required (i.e. there is no need to store metadata associated with each word), a minimal deterministic acyclic finite state automaton (DAFSA) or radix tree would use less storage space than a trie. This is because DAFSAs and radix trees can compress identical branches from the trie which correspond to the same suffixes (or parts) of different words being stored. String dictionaries are also utilized in natural language processing, such as finding lexicon of a text corpus.[25]:{{{1}}}

Sorting

Lexicographic sorting of a set of string keys can be implemented by building a trie for the given keys and traversing the tree in pre-order fashion;[26] this is also a form of radix sort.[27] Tries are also fundamental data structures for burstsort, which is notable for being the fastest string sorting algorithm as of 2007,[28] accompanied for its efficient use of CPU cache.[29]

Full-text search

A special kind of trie, called a suffix tree, can be used to index all suffixes in a text to carry out fast full-text searches.[30]

Web search engines

A specialized kind of trie called a compressed trie, is used in web search engines for storing the indexes - a collection of all searchable words.[31] Each terminal node is associated with a list of URLs—called occurrence list—to pages that match the keyword. The trie is stored in the main memory, whereas the occurrence is kept in an external storage, frequently in large clusters, or the in-memory index points to documents stored in an external location.[32]

Bioinformatics

Tries are used in Bioinformatics, notably in sequence alignment software applications such as BLAST, which indexes all the different substring of length k (called k-mers) of a text by storing the positions of their occurrences in a compressed trie sequence databases.[25]:75

Internet routing

Compressed variants of tries, such as databases for managing Forwarding Information Base (FIB), are used in storing IP address prefixes within routers and bridges for prefix-based lookup to resolve mask-based operations in IP routing.[25]:75

See also

References

- ↑ 1.0 1.1 Maabar, Maha (17 November 2014). "Trie Data Structure". CVR, University of Glasgow. https://bioinformatics.cvr.ac.uk/trie-data-structure/.

- ↑ Thue, Axel (1912). "Über die gegenseitige Lage gleicher Teile gewisser Zeichenreihen". Skrifter Udgivne Af Videnskabs-Selskabet I Christiania 1912 (1): 1–67. https://archive.org/details/skrifterutgitavv121chri/page/n11/mode/2up. Cited by Knuth.

- ↑ 3.0 3.1 3.2 3.3 Knuth, Donald (1997). "6.3: Digital Searching". The Art of Computer Programming Volume 3: Sorting and Searching (2nd ed.). Addison-Wesley. p. 492. ISBN 0-201-89685-0.

- ↑ de la Briandais, René (1959). "File searching using variable length keys". Proc. Western J. Computer Conf.. pp. 295–298. doi:10.1145/1457838.1457895. https://pdfs.semanticscholar.org/3ce3/f4cc1c91d03850ed84ef96a08498e018d18f.pdf. Cited by Brass and by Knuth.

- ↑ 5.0 5.1 5.2 5.3 Brass, Peter (8 September 2008). Advanced Data Structures. UK: Cambridge University Press. doi:10.1017/CBO9780511800191. ISBN 978-0521880374. https://www.cambridge.org/core/books/advanced-data-structures/D56E2269D7CEE969A3B8105AD5B9254C.

- ↑ 6.0 6.1 Edward Fredkin (1960). "Trie Memory". Communications of the ACM 3 (9): 490–499. doi:10.1145/367390.367400.

- ↑ 7.0 7.1 Black, Paul E. (2009-11-16). "trie". Dictionary of Algorithms and Data Structures. National Institute of Standards and Technology. https://xlinux.nist.gov/dads/HTML/trie.html.

- ↑ 8.0 8.1 8.2 8.3 8.4 Franklin Mark Liang (1983). Word Hy-phen-a-tion By Com-put-er (PDF) (Doctor of Philosophy thesis). Stanford University. Archived (PDF) from the original on 2005-11-11. Retrieved 2010-03-28.

- ↑ "Trie". School of Arts and Science, Rutgers University. 2022. https://ds.cs.rutgers.edu/assignment-trie/.

- ↑ Connelly, Richard H.; Morris, F. Lockwood (1993). "A generalization of the trie data structure". Mathematical Structures in Computer Science (Syracuse University) 5 (3): 381–418. doi:10.1017/S0960129500000803. https://surface.syr.edu/eecs_techreports/162/.

- ↑ 11.0 11.1 Aho, Alfred V.; Corasick, Margaret J. (Jun 1975). "Efficient String Matching: An Aid to Bibliographic Search". Communications of the ACM 18 (6): 333–340. doi:10.1145/360825.360855.

- ↑ 12.0 12.1 12.2 12.3 12.4 12.5 Thareja, Reema (13 October 2018). "Hashing and Collision". Data Structures Using C (2 ed.). Oxford University Press. ISBN 9780198099307. https://global.oup.com/academic/product/data-structures-using-c-9780198099307.

- ↑ Daciuk, Jan (24 June 2003). "Comparison of Construction Algorithms for Minimal, Acyclic, Deterministic, Finite-State Automata from Sets of Strings". International Conference on Implementation and Application of Automata. Springer Publishing. pp. 255–261. doi:10.1007/3-540-44977-9_26. ISBN 978-3-540-40391-3. https://link.springer.com/chapter/10.1007/3-540-44977-9_26.

- ↑ 14.0 14.1 14.2 14.3 14.4 14.5 14.6 Sedgewick, Robert; Wayne, Kevin (3 April 2011). Algorithms (4 ed.). Addison-Wesley, Princeton University. ISBN 978-0321573513. https://algs4.cs.princeton.edu/home/.

- ↑ 15.0 15.1 15.2 15.3 15.4 15.5 Gonnet, G. H.; Yates, R. Baeza (January 1991). Handbook of algorithms and data structures: in Pascal and C (2 ed.). Boston, United States: Addison-Wesley. ISBN 978-0-201-41607-7. https://dl.acm.org/doi/book/10.5555/103324.

- ↑ Patil, Varsha H. (10 May 2012). Data Structures using C++. Oxford University Press. ISBN 9780198066231. https://global.oup.com/academic/product/data-structures-using-c-9780198066231.

- ↑ "The IEEE 754 Format". Department of Mathematics and Computer Science, Emory University. http://mathcenter.oxford.emory.edu/site/cs170/ieee754/.

- ↑ Bellekens, Xavier (2014). "A Highly-Efficient Memory-Compression Scheme for GPU-Accelerated Intrusion Detection Systems". Proceedings of the 7th International Conference on Security of Information and Networks - SIN '14. Glasgow, Scotland, UK: ACM. pp. 302:302–302:309. doi:10.1145/2659651.2659723. ISBN 978-1-4503-3033-6.

- ↑ 19.0 19.1 Willar, Dan E. (27 January 1983). "Log-logarithmic worst-case range queries are possible in space O(n)". Information Processing Letters 17 (2): 81–84. doi:10.1016/0020-0190(83)90075-3. https://www.sciencedirect.com/science/article/abs/pii/0020019083900753.

- ↑ Sartaj Sahni (2004). "Data Structures, Algorithms, & Applications in C++: Tries". University of Florida. https://www.cise.ufl.edu/~sahni/dsaac/enrich/c16/tries.htm.

- ↑ Mehta, Dinesh P.; Sahni, Sartaj (7 March 2018). "Tries". Handbook of Data Structures and Applications (2 ed.). Chapman & Hall, University of Florida. ISBN 978-1498701853. https://www.routledge.com/Handbook-of-Data-Structures-and-Applications/Mehta-Sahni/p/book/9780367572006.

- ↑ Jan Daciuk; Stoyan Mihov; Bruce W. Watson; Richard E. Watson (1 March 2000). "Incremental Construction of Minimal Acyclic Finite-State Automata". Computational Linguistics (MIT Press) 26 (1): 3–16. doi:10.1162/089120100561601. Bibcode: 2000cs........7009D. https://direct.mit.edu/coli/article/26/1/3/1628/Incremental-Construction-of-Minimal-Acyclic-Finite.

- ↑ "Patricia tree". National Institute of Standards and Technology. https://xlinux.nist.gov/dads/HTML/patriciatree.html.

- ↑ 24.0 24.1 24.2 Crochemore, Maxime; Lecroq, Thierry (2009). "Trie". Encyclopedia of Database Systems. Boston, United States: Springer Publishing. doi:10.1007/978-0-387-39940-9. ISBN 978-0-387-49616-0. Bibcode: 2009eds..book.....L. https://link.springer.com/referencework/10.1007/978-0-387-39940-9.

- ↑ 25.0 25.1 25.2 Martinez-Prieto, Miguel A.; Brisaboa, Nieves; Canovas, Rodrigo; Claude, Francisco; Navarro, Gonzalo (March 2016). "Practical compressed string dictionaries". Information Systems (Elsevier) 56: 73–108. doi:10.1016/j.is.2015.08.008. ISSN 0306-4379. https://www.sciencedirect.com/science/article/abs/pii/S0306437915001672.

- ↑ Kärkkäinen, Juha. "Lecture 2". University of Helsinki. https://www.cs.helsinki.fi/u/tpkarkka/opetus/12s/spa/lecture02.pdf. ""The preorder of the nodes in a trie is the same as the lexicographical order of the strings they represent assuming the children of a node are ordered by the edge labels.""

- ↑ Kallis, Rafael (2018). "The Adaptive Radix Tree (Report #14-708-887)". https://www.ifi.uzh.ch/dam/jcr:27d15f69-2a44-40f9-8b41-6d11b5926c67/ReportKallisMScBasis.pdf.

- ↑ Ranjan Sinha and Justin Zobel and David Ring (Feb 2006). "Cache-Efficient String Sorting Using Copying". ACM Journal of Experimental Algorithmics 11: 1–32. doi:10.1145/1187436.1187439. https://people.eng.unimelb.edu.au/jzobel/fulltext/acmjea06.pdf.

- ↑ J. Kärkkäinen and T. Rantala (2008). "Engineering Radix Sort for Strings". in A. Amir and A. Turpin and A. Moffat. String Processing and Information Retrieval, Proc. SPIRE. Lecture Notes in Computer Science. 5280. Springer. pp. 3–14. doi:10.1007/978-3-540-89097-3_3. ISBN 978-3-540-89096-6.

- ↑ Giancarlo, Raffaele (28 May 1992). "A Generalization of the Suffix Tree to Square Matrices, with Applications". SIAM Journal on Computing (Society for Industrial and Applied Mathematics) 24 (3): 520–562. doi:10.1137/S0097539792231982. ISSN 0097-5397. https://epubs.siam.org/doi/abs/10.1137/S0097539792231982.

- ↑ Yang, Lai; Xu, Lida; Shi, Zhongzhi (23 March 2012). "An enhanced dynamic hash TRIE algorithm for lexicon search". Enterprise Information Systems 6 (4): 419–432. doi:10.1080/17517575.2012.665483. Bibcode: 2012EntIS...6..419Y.

- ↑ Transier, Frederik; Sanders, Peter (December 2010). "Engineering basic algorithms of an in-memory text search engine". ACM Transactions on Information Systems (Association for Computing Machinery) 29 (1): 1–37. doi:10.1145/1877766.1877768. https://dl.acm.org/doi/10.1145/1877766.1877768.

External links

|