Phase plane


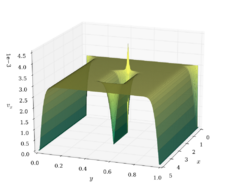

In applied mathematics, in particular in the context of nonlinear system analysis, a phase plane is a visual display of certain characteristics of certain kinds of differential equations: a coordinate plane whose axes are the values of the two state variables, say (x, y) or (q, p) (any pair of variables). It is the two-dimensional case of the general n-dimensional phase space.

The phase plane method refers to graphically determining the existence of limit cycles in the solutions of the differential equation.

The solutions to the differential equation are a family of functions. Graphically, this family can be plotted in the phase plane as a two-dimensional vector field: at representative points, vectors are drawn that represent the derivatives of the coordinates with respect to a parameter (say time t), that is, (dx/dt, dy/dt). With enough of these arrows in place, the system behaviour over the region of the plane under analysis can be visualized and limit cycles can be easily identified.
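The arrow-drawing step described above can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the helper name `direction_field`, the grid size, and the example system (dx/dt = y, dy/dt = −x) are all assumptions made here. The arrows are normalised so that only their direction is kept.

```python
import math

def direction_field(f, x_range, y_range, n=5):
    """Sample unit direction vectors of (dx/dt, dy/dt) = f(x, y) on an n-by-n grid."""
    (x0, x1), (y0, y1) = x_range, y_range
    arrows = []
    for i in range(n):
        for j in range(n):
            x = x0 + (x1 - x0) * i / (n - 1)
            y = y0 + (y1 - y0) * j / (n - 1)
            dx, dy = f(x, y)
            norm = math.hypot(dx, dy)
            if norm > 0:
                dx, dy = dx / norm, dy / norm  # keep the direction only
            arrows.append((x, y, dx, dy))      # arrow (dx, dy) based at (x, y)
    return arrows

def oscillator(x, y):
    # Illustrative system chosen for this sketch: dx/dt = y, dy/dt = -x
    return y, -x

field = direction_field(oscillator, (-1.0, 1.0), (-1.0, 1.0), n=5)
```

Each tuple in `field` can be handed to any plotting tool that draws arrows; at the equilibrium point (0, 0) the arrow has zero length.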

The entire field is the phase portrait; a particular path taken along a flow line (i.e. a path always tangent to the vectors) is a phase path. The flows in the vector field indicate the time-evolution of the system the differential equation describes.

In this way, phase planes are useful in visualizing the behaviour of physical systems, in particular oscillatory systems such as predator-prey models (see Lotka–Volterra equations). In these models the phase paths can "spiral in" towards zero, "spiral out" towards infinity, or reach neutrally stable situations called centres, where the path traced out can be circular, elliptical, ovoid, or some variant thereof. This is useful in determining whether the dynamics are stable.[1]
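As a hedged illustration of a centre, the sketch below traces one phase path of the classical Lotka–Volterra equations with a fourth-order Runge–Kutta step. The parameter values (all set to 1), the starting point, the step size, and the function names are assumptions made for this example. The function `invariant` is a quantity that stays constant along exact solutions of this model, so a small drift indicates that the computed path closes on itself rather than spiralling in or out.

```python
import math

def lotka_volterra(x, y, a=1.0, b=1.0, c=1.0, d=1.0):
    """Predator-prey right-hand side: x is prey, y is predator (parameters assumed)."""
    return a * x - b * x * y, d * x * y - c * y

def rk4_path(f, x, y, dt, steps):
    """Trace a phase path with the classical fourth-order Runge-Kutta scheme."""
    path = [(x, y)]
    for _ in range(steps):
        k1x, k1y = f(x, y)
        k2x, k2y = f(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y)
        k3x, k3y = f(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y)
        k4x, k4y = f(x + dt * k3x, y + dt * k3y)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        y += dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6
        path.append((x, y))
    return path

def invariant(x, y, a=1.0, b=1.0, c=1.0, d=1.0):
    """Constant along exact Lotka-Volterra phase paths (for x, y > 0)."""
    return d * x - c * math.log(x) + b * y - a * math.log(y)

path = rk4_path(lotka_volterra, 2.0, 1.0, dt=0.01, steps=2000)
drift = abs(invariant(*path[-1]) - invariant(*path[0]))
```

Plotting the (x, y) pairs in `path` gives the ovoid closed curve around the equilibrium at (1, 1) for these assumed parameters.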

Other examples of oscillatory systems are certain chemical reactions with multiple steps, some of which involve dynamic equilibria rather than reactions that go to completion. In such cases one can model the rise and fall of reactant and product concentration (or mass, or amount of substance) with the correct differential equations and a good understanding of chemical kinetics.[2]

Example of a linear system

A two-dimensional system of linear differential equations can be written in the form:[1]

- [math]\displaystyle{ \begin{align} \frac{dx}{dt} & = Ax + By \\ \frac{dy}{dt} & = Cx + Dy \end{align} }[/math]

which can be organized into a matrix equation:

- [math]\displaystyle{ \begin{align} & \frac{d}{dt} \begin{bmatrix} x \\ y \\ \end{bmatrix} = \begin{bmatrix} A & B \\ C & D \\ \end{bmatrix} \begin{bmatrix} x \\ y \\ \end{bmatrix} \\ & \frac{d\mathbf{v}}{dt} = \mathbf{A}\mathbf{v}. \end{align} }[/math]

where [math]\displaystyle{ \mathbf{A} }[/math] is the 2 × 2 coefficient matrix above, and [math]\displaystyle{ \mathbf{v} = (x, y) }[/math] is the vector of the two state variables, each a function of t.

Such systems may be solved analytically, for this case by integrating:[3]

[math]\displaystyle{ \frac{dy}{dx} = \frac{Cx+Dy}{Ax+By} }[/math]

although the solutions are implicit functions of x and y, and are difficult to interpret.[1]
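As a small worked illustration of this route (the coefficient values are chosen here for simplicity and are not from the source), take A = D = 0, B = 1 and C = −1. The equation for dy/dx then separates and integrates directly:

- [math]\displaystyle{ \frac{dy}{dx} = -\frac{x}{y} \quad\Longrightarrow\quad y\,dy = -x\,dx \quad\Longrightarrow\quad x^2 + y^2 = \text{constant} }[/math]

so the phase paths are circles centred on the origin, obtained as an implicit relation between x and y rather than as functions of t.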

Solving using eigenvalues

More commonly, such systems are solved by writing the coefficients of the right-hand side in matrix form and finding the eigenvalues λ, given by the determinant condition:

- [math]\displaystyle{ \det \left(\begin{bmatrix} A & B \\ C & D \\ \end{bmatrix}- \lambda \mathbf{I}\right) = 0 }[/math]

and eigenvectors:

- [math]\displaystyle{ \begin{bmatrix} A & B \\ C & D \\ \end{bmatrix}\mathbf{x}=\lambda\mathbf{x} }[/math]

The eigenvalues are the growth or decay rates in the exponents of the exponential components of the solution, and the eigenvectors are the vector coefficients of those components. Written out componentwise, the eigenvector entries are the factors multiplying each exponential term. Because an eigenvector is determined only up to a scalar multiple, every solution arrived at in this way has undetermined constants c1, c2, …, cn.

The general solution is:

- [math]\displaystyle{ \mathbf{v} = \begin{bmatrix} k_{1} \\ k_{2} \end{bmatrix} c_{1}e^{\lambda_1 t} + \begin{bmatrix} k_{3} \\ k_{4} \end{bmatrix} c_{2}e^{\lambda_2 t}. }[/math]

where λ1 and λ2 are the eigenvalues, and (k1, k2), (k3, k4) are the corresponding eigenvectors. The constants c1 and c2 account for the nonuniqueness of eigenvectors and cannot be determined unless an initial condition is given for the system.
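To show how an initial condition fixes c1 and c2, here is a minimal sketch that assumes two distinct real eigenvalues. The function name `solve_linear_system`, the way the eigenvectors are scaled, and the example coefficients are choices made for this illustration, not part of the source.

```python
import math

def solve_linear_system(A, B, C, D, x0, y0):
    """Return t -> (x(t), y(t)) for dx/dt = Ax + By, dy/dt = Cx + Dy.

    Sketch only: assumes two distinct real eigenvalues.
    """
    p, q = A + D, A * D - B * C              # trace and determinant
    disc = p * p - 4 * q
    if disc <= 0:
        raise ValueError("this sketch handles distinct real eigenvalues only")
    root = math.sqrt(disc)
    lams = [(p + root) / 2, (p - root) / 2]

    vecs = []
    for lam in lams:
        # Row 1 of (M - lam*I) k = 0 gives k = (B, lam - A);
        # if that vector vanishes, row 2 gives k = (lam - D, C).
        k = (B, lam - A)
        if abs(k[0]) + abs(k[1]) < 1e-12:
            k = (lam - D, C)
        vecs.append(k)
    (k1, k2), (k3, k4) = vecs

    # Solve c1*(k1, k2) + c2*(k3, k4) = (x0, y0) by Cramer's rule.
    det = k1 * k4 - k3 * k2
    c1 = (x0 * k4 - k3 * y0) / det
    c2 = (k1 * y0 - x0 * k2) / det

    def v(t):
        e1, e2 = math.exp(lams[0] * t), math.exp(lams[1] * t)
        return (c1 * k1 * e1 + c2 * k3 * e2,
                c1 * k2 * e1 + c2 * k4 * e2)
    return v

# Illustrative coefficients: eigenvalues are -1 and -2.
v = solve_linear_system(0, 1, -2, -3, x0=1.0, y0=0.0)
```

For these assumed coefficients the closed form is x(t) = 2e^(−t) − e^(−2t) and y(t) = −2e^(−t) + 2e^(−2t), which `v(t)` reproduces.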

The above determinant leads to the characteristic polynomial:

- [math]\displaystyle{ \lambda^2 - (A+D)\lambda + (AD-BC) = 0 }[/math]

which is just a quadratic equation of the form:

- [math]\displaystyle{ \lambda^2 - p\lambda + q=0 }[/math]

where [math]\displaystyle{ p = A+D = \mathrm{tr}(\mathbf{A}) \,, }[/math] ("tr" denotes trace) and [math]\displaystyle{ q=AD-BC=\det(\mathbf{A})\,. }[/math]

The eigenvalues are then given explicitly by the quadratic formula:

- [math]\displaystyle{ \lambda = \frac{1}{2}(p\pm \sqrt{\Delta})\, }[/math]

where [math]\displaystyle{ \Delta=p^2-4q \,. }[/math]
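The trace and determinant formulas translate directly into code. The sketch below (the function name `eigenvalues` is an assumption) uses `cmath.sqrt` so that a negative Δ yields a complex-conjugate pair instead of raising an error.

```python
import cmath

def eigenvalues(A, B, C, D):
    """Eigenvalues of [[A, B], [C, D]] from lambda = (p ± sqrt(Delta)) / 2."""
    p = A + D                 # trace
    q = A * D - B * C         # determinant
    delta = p * p - 4 * q     # discriminant
    root = cmath.sqrt(delta)  # complex square root also covers delta < 0
    return (p + root) / 2, (p - root) / 2

real_pair = eigenvalues(0, 1, -2, -3)     # delta = 1
complex_pair = eigenvalues(0, 1, -1, 0)   # delta = -4
```

The results are returned as Python complex numbers; for Δ ≥ 0 their imaginary parts are zero.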

Eigenvectors and nodes

The eigenvectors and nodes determine the profile of the phase paths, providing a pictorial interpretation of the solution to the dynamical system, as shown next.

The phase plane is first set up by drawing straight lines representing the two eigenvectors. These are invariant directions: a solution that starts on one of these lines stays on it, and the system either converges towards those lines or diverges away from them. The phase paths are then plotted as full lines rather than direction-field dashes. The signs of the eigenvalues indicate the phase plane's behaviour:

- If the signs are opposite, the intersection of the eigenvectors is a saddle point.

- If both signs are positive, solutions diverge away from the intersection of the eigenvectors, which is an unstable node.

- If both signs are negative, solutions converge towards the intersection of the eigenvectors, which is a stable node.

The above can be visualized by recalling the behaviour of exponential terms in differential equation solutions.
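The three sign rules can be written as a short classifier. This is a sketch under the same restriction as the text (real, distinct eigenvalues); the function name and the returned labels are assumptions made here.

```python
import math

def classify_real_case(A, B, C, D):
    """Classify the origin of dv/dt = [[A, B], [C, D]] v by eigenvalue signs."""
    p, q = A + D, A * D - B * C
    delta = p * p - 4 * q
    if delta <= 0:
        return "not covered: repeated or complex eigenvalues"
    lam1 = (p + math.sqrt(delta)) / 2
    lam2 = (p - math.sqrt(delta)) / 2
    if lam1 * lam2 < 0:
        return "saddle point"       # opposite signs
    if lam1 > 0 and lam2 > 0:
        return "unstable node"      # both positive
    if lam1 < 0 and lam2 < 0:
        return "stable node"        # both negative
    return "not covered: zero eigenvalue"

labels = [
    classify_real_case(1, 0, 0, -1),
    classify_real_case(1, 0, 0, 2),
    classify_real_case(0, 1, -2, -3),
]
```

Since the product of the eigenvalues equals the determinant q, the saddle case could equally be detected from q < 0 alone.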

Repeated eigenvalues

The example above covers only the case of real, distinct eigenvalues. With a real, repeated eigenvalue there may be only one independent eigenvector; the second solution of a two-by-two system is then generated by solving a linear system that involves the coefficient matrix, an unknown vector, and the first eigenvector. However, if the matrix is symmetric, an orthogonal eigenvector exists and can be used to generate the second solution.
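A common textbook form of this construction is sketched below. The symbols k (the single eigenvector) and w (the unknown vector) are introduced here only for illustration:

- [math]\displaystyle{ (\mathbf{A} - \lambda\mathbf{I})\,\mathbf{w} = \mathbf{k}, \qquad \mathbf{v} = c_1 \mathbf{k}\, e^{\lambda t} + c_2 \left(t\,\mathbf{k} + \mathbf{w}\right) e^{\lambda t} }[/math]

Differentiating the second term and using both relations for k and w confirms that it satisfies the matrix equation.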

Complex eigenvalues

Complex eigenvalues and eigenvectors generate solutions in the form of sines and cosines as well as exponentials. A convenient simplification in this situation is that only one of the eigenvalues and its eigenvector are needed to generate the full solution set for the system, because for a real coefficient matrix the other eigenvalue and eigenvector are their complex conjugates.
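As a sketch of how this works, write one eigenvalue as λ = α + iβ and its eigenvector as k = a + ib, where the real vectors a and b and the real numbers α and β are notation introduced here. The real and imaginary parts of the complex solution [math]\displaystyle{ \mathbf{k}e^{\lambda t} }[/math] then give two real solutions, and the general real solution is:

- [math]\displaystyle{ \mathbf{v} = e^{\alpha t}\left[ c_1 \left(\mathbf{a}\cos\beta t - \mathbf{b}\sin\beta t\right) + c_2 \left(\mathbf{a}\sin\beta t + \mathbf{b}\cos\beta t\right) \right] }[/math]

The factor involving α controls whether the paths spiral in (α < 0), spiral out (α > 0), or close up into a centre (α = 0).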

See also

- Phase line, 1-dimensional case

- Phase space, n-dimensional case

- Phase portrait

References

- [1] D.W. Jordan; P. Smith (2007). Non-Linear Ordinary Differential Equations: Introduction for Scientists and Engineers (4th ed.). Oxford University Press. ISBN 978-0-19-920825-8.

- [2] K.T. Alligood; T.D. Sauer; J.A. Yorke (1996). Chaos: An Introduction to Dynamical Systems. Springer. ISBN 978-0-387-94677-1.

- [3] W.E. Boyce; R.C. DiPrima (1986). Elementary Differential Equations and Boundary Value Problems (4th ed.). John Wiley & Sons. ISBN 0-471-83824-1. https://archive.org/details/elementarydiffer4th00boyc.

External links

- Lamar University, Online Math Notes - Phase Plane, P. Dawkins

- Lamar University, Online Math Notes - Systems of Differential Equations, P. Dawkins

- Overview of the phase plane method
