Local time (mathematics)

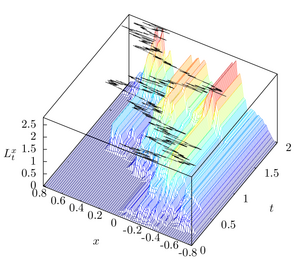

In the mathematical theory of stochastic processes, local time is a stochastic process associated with a semimartingale, such as Brownian motion, that measures the amount of time a particle has spent at a given level. Local time appears in various stochastic integration formulas, such as Tanaka's formula, when the function applied to the process is not smooth enough for Itô's lemma. It is also studied in statistical mechanics in the context of random fields.

Formal definition

For a continuous real-valued semimartingale [math]\displaystyle{ (B_s)_{s\ge 0} }[/math], the local time of [math]\displaystyle{ B }[/math] at the point [math]\displaystyle{ x }[/math] is the stochastic process which is informally defined by

- [math]\displaystyle{ L^x(t) =\int_0^t \delta(x-B_s)\,d[B]_s, }[/math]

where [math]\displaystyle{ \delta }[/math] is the Dirac delta function and [math]\displaystyle{ [B] }[/math] is the quadratic variation. It is a notion invented by Paul Lévy. The basic idea is that [math]\displaystyle{ L^x(t) }[/math] is an (appropriately rescaled and time-parametrized) measure of how much time [math]\displaystyle{ B_s }[/math] has spent at [math]\displaystyle{ x }[/math] up to time [math]\displaystyle{ t }[/math]. More rigorously, it may be written as the almost sure limit

- [math]\displaystyle{ L^x(t) =\lim_{\varepsilon\downarrow 0} \frac{1}{2\varepsilon} \int_0^t 1_{\{ x- \varepsilon \lt B_s \lt x+\varepsilon \}} \, d[B]_s, }[/math]

which may be shown to always exist. Note that in the special case of Brownian motion (or more generally a real-valued diffusion of the form [math]\displaystyle{ dB = b(t,B)\,dt+ dW }[/math] where [math]\displaystyle{ W }[/math] is a Brownian motion), the term [math]\displaystyle{ d[B]_s }[/math] simply reduces to [math]\displaystyle{ ds }[/math], which explains why it is called the local time of [math]\displaystyle{ B }[/math] at [math]\displaystyle{ x }[/math]. For a discrete state-space process [math]\displaystyle{ (X_s)_{s\ge 0} }[/math], the local time can be expressed more simply as[1]

- [math]\displaystyle{ L^x(t) =\int_0^t 1_{\{x\}}(X_s) \, ds. }[/math]
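The limit formula for a continuous semimartingale can be explored numerically. The following is a minimal sketch, assuming that the process is a standard Brownian motion (so that [math]\displaystyle{ d[B]_s = ds }[/math]) and that the path is approximated by a Gaussian random walk on a fine grid. The function names `brownian_path` and `local_time_estimate`, the step count and the values of [math]\displaystyle{ \varepsilon }[/math] are illustrative choices, not part of the theory.

```python
import math
import random


def brownian_path(t_end, n_steps, rng):
    """Approximate a Brownian path on [0, t_end] by summing Gaussian increments."""
    dt = t_end / n_steps
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path, dt


def local_time_estimate(path, dt, x, eps):
    """Approximate L^x(t) by (1 / (2 eps)) * time spent in (x - eps, x + eps)."""
    occupation = sum(dt for b in path[:-1] if abs(b - x) < eps)
    return occupation / (2.0 * eps)


rng = random.Random(0)  # fixed seed so the run is reproducible
path, dt = brownian_path(t_end=1.0, n_steps=100_000, rng=rng)

# Shrinking eps moves the estimate toward the limit, at the price of more noise
# from the time discretization.
for eps in (0.2, 0.1, 0.05):
    print(eps, local_time_estimate(path, dt, x=0.0, eps=eps))
```

For a fixed grid, [math]\displaystyle{ \varepsilon }[/math] should stay well above the typical increment size [math]\displaystyle{ \sqrt{dt} }[/math]; otherwise too few grid points fall in the window and the estimate becomes unreliable.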

Tanaka's formula

Tanaka's formula also provides a definition of local time for an arbitrary continuous semimartingale [math]\displaystyle{ (X_s)_{s\ge 0} }[/math] on [math]\displaystyle{ \mathbb R: }[/math][2]

- [math]\displaystyle{ L^x(t) = |X_t - x| - |X_0 - x| - \int_0^t \left( 1_{(0,\infty)}(X_s - x) - 1_{(-\infty, 0]}(X_s-x) \right) \, dX_s, \qquad t \geq 0. }[/math]
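Tanaka's formula can be checked against the occupation-time description on a simulated path. The sketch below assumes that [math]\displaystyle{ X }[/math] is a standard Brownian motion approximated by a Gaussian random walk, and replaces the stochastic integral by a left-endpoint (Itô-type) sum. The helper names `tanaka_local_time` and `occupation_local_time` are hypothetical, and the two printed values should be expected to agree only approximately.

```python
import math
import random


def tanaka_local_time(path, x):
    """Discretized right-hand side of Tanaka's formula at level x."""
    stochastic_integral = 0.0
    for b_prev, b_next in zip(path, path[1:]):
        # Integrand 1_{(0,inf)}(B_s - x) - 1_{(-inf,0]}(B_s - x), evaluated at the
        # left endpoint of each step so the sum approximates an Ito integral.
        sign = 1.0 if b_prev - x > 0 else -1.0
        stochastic_integral += sign * (b_next - b_prev)
    return abs(path[-1] - x) - abs(path[0] - x) - stochastic_integral


def occupation_local_time(path, dt, x, eps):
    """Occupation-time approximation (1 / (2 eps)) * time spent within eps of x."""
    occupation = sum(dt for b in path[:-1] if abs(b - x) < eps)
    return occupation / (2.0 * eps)


rng = random.Random(1)  # fixed seed so the run is reproducible
n_steps, t_end = 100_000, 1.0
dt = t_end / n_steps
path = [0.0]
for _ in range(n_steps):
    path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))

print("Tanaka:    ", tanaka_local_time(path, x=0.0))
print("occupation:", occupation_local_time(path, dt, x=0.0, eps=0.05))
```

Each step of the discretized Tanaka sum contributes a non-negative amount, and a non-zero amount only when the path crosses the level during that step, which mirrors the fact that local time increases only while the process is at [math]\displaystyle{ x }[/math].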

A more general form was proven independently by Meyer[3] and Wang;[4] the formula extends Itô's lemma for twice differentiable functions to a more general class of functions. If [math]\displaystyle{ F:\mathbb R \rightarrow \mathbb R }[/math] is absolutely continuous with derivative [math]\displaystyle{ F', }[/math] which is of bounded variation, then

- [math]\displaystyle{ F(X_t) = F(X_0) + \int_0^t F'_{-}(X_s) \, dX_s + \frac12 \int_{-\infty}^\infty L^x(t) \, dF'_{-}(x), }[/math]

where [math]\displaystyle{ F'_{-} }[/math] is the left derivative.
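As a consistency check, Tanaka's formula can be recovered from this more general formula. The worked step below takes [math]\displaystyle{ F(y) = |y - a| }[/math] for a fixed level [math]\displaystyle{ a }[/math]; the letter [math]\displaystyle{ a }[/math] is introduced here only to avoid a clash with the integration variable [math]\displaystyle{ x }[/math]. The left derivative is

- [math]\displaystyle{ F'_{-}(y) = 1_{(a,\infty)}(y) - 1_{(-\infty,a]}(y), }[/math]

which jumps by 2 at [math]\displaystyle{ a }[/math] and is constant elsewhere, so that [math]\displaystyle{ dF'_{-}(x) = 2\,\delta_a(dx) }[/math]. Substituting into the formula gives

- [math]\displaystyle{ |X_t - a| = |X_0 - a| + \int_0^t \left( 1_{(0,\infty)}(X_s - a) - 1_{(-\infty,0]}(X_s - a) \right) \, dX_s + L^a(t), }[/math]

which is Tanaka's formula at the level [math]\displaystyle{ a }[/math].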

If [math]\displaystyle{ X }[/math] is a Brownian motion, then for any [math]\displaystyle{ \alpha\in(0,1/2) }[/math] the field of local times [math]\displaystyle{ L = (L^x(t))_{x \in \mathbb R, t \geq 0} }[/math] has a modification which is a.s. Hölder continuous in [math]\displaystyle{ x }[/math] with exponent [math]\displaystyle{ \alpha }[/math], uniformly for bounded [math]\displaystyle{ x }[/math] and [math]\displaystyle{ t }[/math].[5] In general, [math]\displaystyle{ L }[/math] has a modification that is a.s. continuous in [math]\displaystyle{ t }[/math] and càdlàg in [math]\displaystyle{ x }[/math].

Tanaka's formula provides the explicit Doob–Meyer decomposition for the one-dimensional reflecting Brownian motion, [math]\displaystyle{ (|B_s|)_{s \geq 0} }[/math].
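To spell this out under the assumption that [math]\displaystyle{ B }[/math] is a standard Brownian motion started at 0, taking [math]\displaystyle{ x = 0 }[/math] in Tanaka's formula gives

- [math]\displaystyle{ |B_t| = \beta_t + L^0(t), \qquad \beta_t = \int_0^t \left( 1_{(0,\infty)}(B_s) - 1_{(-\infty,0]}(B_s) \right) \, dB_s. }[/math]

The symbol [math]\displaystyle{ \beta }[/math] is notation introduced only for this remark. The process [math]\displaystyle{ \beta }[/math] is a continuous local martingale with quadratic variation [math]\displaystyle{ [\beta]_t = t }[/math], hence a Brownian motion by Lévy's characterization, while [math]\displaystyle{ L^0 }[/math] is continuous and non-decreasing. Taking expectations yields

- [math]\displaystyle{ \mathbb E\left[L^0(t)\right] = \mathbb E\left[|B_t|\right] = \sqrt{\frac{2t}{\pi}}. }[/math]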

Ray–Knight theorems

The field of local times [math]\displaystyle{ L_t = (L^x_t)_{x \in E} }[/math] associated to a stochastic process on a space [math]\displaystyle{ E }[/math] is a well-studied topic in the area of random fields. Ray–Knight type theorems relate the field [math]\displaystyle{ L_t }[/math] to an associated Gaussian process.

In general, Ray–Knight type theorems of the first kind consider the field [math]\displaystyle{ L_t }[/math] at a hitting time of the underlying process, whilst theorems of the second kind are stated in terms of a stopping time at which the field of local times first exceeds a given value.

First Ray–Knight theorem

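Let [math]\displaystyle{ (B_t)_{t \geq 0} }[/math] be a one-dimensional Brownian motion started from [math]\displaystyle{ B_0 = a \gt 0 }[/math], and let [math]\displaystyle{ (W_t)_{t \geq 0} }[/math] be a standard two-dimensional Brownian motion started from [math]\displaystyle{ W_0 = 0 \in \mathbb R^2 }[/math]. Define the stopping time at which [math]\displaystyle{ B }[/math] first hits the origin, [math]\displaystyle{ T = \inf\{t \geq 0 \colon B_t = 0\} }[/math]. Ray[6] and Knight[7] (independently) showed that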

- [math]\displaystyle{ \left\{ L^x(T) \colon x \in [0,a] \right\} \stackrel{\mathcal{D}}{=} \left\{ |W_x|^2 \colon x \in [0,a] \right\} \qquad (1) }[/math]

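where [math]\displaystyle{ (L_t)_{t \geq 0} }[/math] is the field of local times of [math]\displaystyle{ (B_t)_{t \geq 0} }[/math], and equality is in distribution on [math]\displaystyle{ C[0,a] }[/math]. The process [math]\displaystyle{ |W_x|^2 }[/math] is known as the squared Bessel process of dimension 2.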
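One consequence of the first Ray–Knight theorem is that, for each fixed [math]\displaystyle{ x \in (0,a] }[/math], the local time [math]\displaystyle{ L^x(T) }[/math] has the law of [math]\displaystyle{ |W_x|^2 }[/math], which is exponential with mean [math]\displaystyle{ 2x }[/math]. The sketch below samples only the planar Brownian side of the identity and compares it with that exponential law; it does not simulate the local time itself. The function name `squared_norm_2d_bm`, the level `x = 0.5` and the threshold `u = 1.0` are illustrative assumptions.

```python
import math
import random


def squared_norm_2d_bm(x, rng):
    """Sample |W_x|^2 for a planar Brownian motion W started at the origin."""
    std = math.sqrt(x)  # each coordinate of W_x is N(0, x)
    w1 = rng.gauss(0.0, std)
    w2 = rng.gauss(0.0, std)
    return w1 * w1 + w2 * w2


rng = random.Random(2)  # fixed seed so the run is reproducible
x, n_samples = 0.5, 200_000
samples = [squared_norm_2d_bm(x, rng) for _ in range(n_samples)]

empirical_mean = sum(samples) / n_samples
u = 1.0
empirical_tail = sum(s > u for s in samples) / n_samples

# An exponential law with mean 2x has tail P(. > u) = exp(-u / (2x)).
print("mean:", empirical_mean, "vs 2x =", 2 * x)
print("tail:", empirical_tail, "vs exp(-u / (2x)) =", math.exp(-u / (2 * x)))
```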

Second Ray–Knight theorem

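Let [math]\displaystyle{ (B_t)_{t \geq 0} }[/math] be a standard one-dimensional Brownian motion with [math]\displaystyle{ B_0 = 0 \in \mathbb R }[/math], and let [math]\displaystyle{ (L_t)_{t \geq 0} }[/math] be the associated field of local times. Let [math]\displaystyle{ T_a }[/math] be the first time at which the local time at zero exceeds [math]\displaystyle{ a \gt 0 }[/math]: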

- [math]\displaystyle{ T_a = \inf \{ t \geq 0 \colon L^0_t \gt a \}. }[/math]

Let [math]\displaystyle{ (W_t)_{t \geq 0} }[/math] be an independent one-dimensional Brownian motion started from [math]\displaystyle{ W_0 = 0 }[/math]. Then[8]

- [math]\displaystyle{ \left \{ L^x_{T_a} + W_x^2 \colon x \geq 0 \right \} \stackrel{\mathcal{D}}{=} \left\{ (W_x + \sqrt a )^2 \colon x \geq 0 \right \}. \qquad (2) }[/math]

Equivalently, the process [math]\displaystyle{ (L^x_{T_a})_{x \geq 0} }[/math] (which is a process in the spatial variable [math]\displaystyle{ x }[/math]) is equal in distribution to a squared Bessel process of dimension 0 started at [math]\displaystyle{ a }[/math], and as such is Markovian.
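The one-dimensional marginals can be read off directly from the identity in law of the second Ray–Knight theorem; the following worked step is a sketch for a fixed [math]\displaystyle{ x \geq 0 }[/math]. Taking expectations on both sides, with [math]\displaystyle{ \mathbb E[W_x^2] = x }[/math] and [math]\displaystyle{ \mathbb E[(W_x + \sqrt a)^2] = x + a }[/math], gives

- [math]\displaystyle{ \mathbb E\left[L^x_{T_a}\right] = a \qquad \text{for all } x \geq 0. }[/math]

Since [math]\displaystyle{ W }[/math] is independent of [math]\displaystyle{ B }[/math], Laplace transforms factorize, and the Gaussian integrals evaluate to

- [math]\displaystyle{ \mathbb E\left[e^{-\lambda L^x_{T_a}}\right] = \frac{\mathbb E\left[e^{-\lambda (W_x+\sqrt a)^2}\right]}{\mathbb E\left[e^{-\lambda W_x^2}\right]} = \exp\left(-\frac{\lambda a}{1+2\lambda x}\right), \qquad \lambda \geq 0. }[/math]

Letting [math]\displaystyle{ \lambda \to \infty }[/math] shows that, for [math]\displaystyle{ x \gt 0 }[/math], [math]\displaystyle{ L^x_{T_a} = 0 }[/math] with probability [math]\displaystyle{ e^{-a/(2x)} }[/math].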

Generalized Ray–Knight theorems

Results of Ray–Knight type for more general stochastic processes have been studied intensively, and analogues of both (1) and (2) are known for strongly symmetric Markov processes.

Notes

- Karatzas, Ioannis; Shreve, Steven (1991). Brownian Motion and Stochastic Calculus. Springer.

- Kallenberg (1997). Foundations of Modern Probability. New York: Springer. pp. 428–449. ISBN 0387949577. https://archive.org/details/foundationsmoder00kall_063.

- Meyer, Paul-André (2002). "Un cours sur les intégrales stochastiques". Séminaire de probabilités 1967–1980. Lect. Notes in Math. 1771. pp. 174–329. doi:10.1007/978-3-540-45530-1_11. ISBN 978-3-540-42813-8.

- Wang (1977). "Generalized Itô's formula and additive functionals of Brownian motion". Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete 41 (2): 153–159. doi:10.1007/bf00538419.

- Kallenberg (1997). Foundations of Modern Probability. New York: Springer. p. 370. ISBN 0387949577. https://archive.org/details/foundationsmoder00kall_963.

- Ray, D. (1963). "Sojourn times of a diffusion process". Illinois Journal of Mathematics 7 (4): 615–630. doi:10.1215/ijm/1255645099.

- Knight, F. B. (1963). "Random walks and a sojourn density process of Brownian motion". Transactions of the American Mathematical Society 109 (1): 56–86. doi:10.2307/1993647.

- Marcus; Rosen (2006). Markov Processes, Gaussian Processes and Local Times. New York: Cambridge University Press. pp. 53–56. ISBN 0521863007. https://archive.org/details/markovprocessesg00marc.

References

- K. L. Chung and R. J. Williams, Introduction to Stochastic Integration, 2nd edition, 1990, Birkhäuser, ISBN 978-0-8176-3386-8.

- M. Marcus and J. Rosen, Markov Processes, Gaussian Processes, and Local Times, 1st edition, 2006, Cambridge University Press ISBN 978-0-521-86300-1

- P. Mörters and Y. Peres, Brownian Motion, 1st edition, 2010, Cambridge University Press, ISBN 978-0-521-76018-8.
