Gamma process

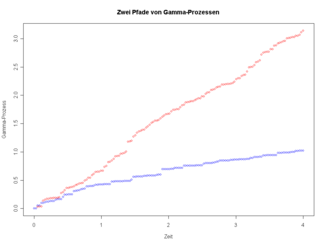

A gamma process, also called the Moran-Gamma subordinator,[1] is a two-parameter stochastic process which models the accumulation of effort or wear over time. The gamma process has independent and stationary increments which follow the gamma distribution, hence the name. The gamma process is studied in mathematics, statistics, probability theory, and stochastics, with particular applications in deterioration modeling[2] and mathematical finance.[3]

Notation

The gamma process is often abbreviated as $X(t) \equiv \Gamma(t; \gamma, \lambda)$, where $t$ represents the time from 0. The shape parameter $\gamma$ controls the rate of jump arrivals, and the rate parameter $\lambda$ inversely controls the jump size, analogously with the gamma distribution.[4] Both $\gamma$ and $\lambda$ must be greater than 0. This article also uses the gamma function and the gamma distribution, so the reader should distinguish between $\Gamma(\cdot)$ (the gamma function), $\Gamma(\gamma, \lambda)$ (the gamma distribution), and $\Gamma(t; \gamma, \lambda)$ (the gamma process).

Definition

The process is a pure-jump increasing Lévy process with intensity measure $\nu(x) = \gamma x^{-1} e^{-\lambda x}$ for all positive $x$. The process is assumed to start from the value 0 at $t = 0$, meaning $X(0) = 0$. Thus jumps whose size lies in the interval $[x, x + dx)$ occur as a Poisson process with intensity $\nu(x)\,dx$.

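The process can also be defined as a stochastic process $X(t)$, $t \geq 0$, with $X(0) = 0$ and independent increments, in which the increment $X(t) - X(s)$ over an interval of length $t - s > 0$ has the marginal density[4]

$$f(x; t - s, \gamma, \lambda) = \frac{\lambda^{\gamma (t - s)}}{\Gamma(\gamma (t - s))}\, x^{\gamma (t - s) - 1} e^{-\lambda x}, \quad x > 0,$$

that is, a gamma distribution with shape $\gamma (t - s)$ and rate $\lambda$.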
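The increment-based definition suggests a direct way to sample the process on a time grid: add up independent gamma-distributed increments. The following is a minimal sketch using only the Python standard library; the function name `simulate_gamma_process` and its arguments are illustrative assumptions, with `shape` and `rate` standing for the shape and rate parameters of the process.

```python
import random


def simulate_gamma_process(shape, rate, times, rng=None):
    """Sample X(t) at strictly increasing positive times, with X(0) = 0."""
    rng = rng or random.Random()
    path = []
    value = 0.0
    previous = 0.0
    for t in times:
        dt = t - previous
        if dt <= 0:
            raise ValueError("times must be positive and strictly increasing")
        # The increment over dt is gamma distributed with shape `shape * dt`
        # and rate `rate`. random.gammavariate expects a scale, i.e. 1 / rate.
        value += rng.gammavariate(shape * dt, 1.0 / rate)
        path.append(value)
        previous = t
    return path


if __name__ == "__main__":
    grid = [0.1 * k for k in range(1, 11)]
    print(simulate_gamma_process(2.0, 3.0, grid, random.Random(0)))
```

Because every increment is positive, each sampled path is increasing, which matches the description of the process as an accumulation of wear.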

Inhomogeneous process

It is also possible to allow the shape parameter $\gamma$ to vary as a function of time, $\gamma(t)$.[4]

Properties

Mean and variance

Because the value at each time $t$ has mean $\gamma t / \lambda$ and variance $\gamma t / \lambda^2$,[5] the gamma process is sometimes also parameterised in terms of the mean ($\mu$) and variance ($v$) of the increase per unit time. These satisfy $\gamma = \mu^2 / v$ and $\lambda = \mu / v$.
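Converting between the two parameterisations is a matter of arithmetic. The sketch below assumes the per-unit-time mean and variance are passed as `mean_rate` and `var_rate`; these names and both function names are illustrative only.

```python
def to_shape_rate(mean_rate, var_rate):
    """Convert per-unit-time mean and variance to (shape, rate)."""
    if mean_rate <= 0 or var_rate <= 0:
        raise ValueError("mean and variance per unit time must be positive")
    return mean_rate ** 2 / var_rate, mean_rate / var_rate


def to_mean_variance(shape, rate):
    """Convert (shape, rate) to the per-unit-time mean and variance."""
    if shape <= 0 or rate <= 0:
        raise ValueError("shape and rate must be positive")
    return shape / rate, shape / rate ** 2


if __name__ == "__main__":
    shape, rate = to_shape_rate(2.0, 4.0)
    print(shape, rate)
    print(to_mean_variance(shape, rate))
```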

Scaling

Multiplying a gamma process by a scalar constant $\alpha > 0$ again gives a gamma process, with a different mean increase rate:

$$\alpha\, \Gamma(t; \gamma, \lambda) \simeq \Gamma(t; \gamma, \lambda / \alpha).$$

Adding independent processes

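The sum of two independent gamma processes with the same rate parameter is again a gamma process:

$$\Gamma(t; \gamma_1, \lambda) + \Gamma(t; \gamma_2, \lambda) \simeq \Gamma(t; \gamma_1 + \gamma_2, \lambda).$$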
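The scaling and addition properties can be checked informally by simulation. The sketch below compares sample means and variances of the process value at a single time; it is a rough Monte Carlo sanity check under arbitrarily chosen parameter values, not a proof, and the helper names are hypothetical. It assumes the two summed processes share the same rate parameter.

```python
import random
from statistics import fmean, pvariance


def sample_value(shape, rate, t, rng):
    """Draw X(t) for a gamma process with the given shape and rate."""
    return rng.gammavariate(shape * t, 1.0 / rate)


def summary(samples):
    """Return the sample mean and the sample variance."""
    return fmean(samples), pvariance(samples)


rng = random.Random(1)
n, t = 20_000, 1.0

# Scaling: 3 * Gamma(t; 1.5, 2) should match Gamma(t; 1.5, 2 / 3).
scaled = [3.0 * sample_value(1.5, 2.0, t, rng) for _ in range(n)]
direct = [sample_value(1.5, 2.0 / 3.0, t, rng) for _ in range(n)]

# Addition with a common rate: shapes 1.5 and 2.5 should combine to shape 4.
summed = [sample_value(1.5, 2.0, t, rng) + sample_value(2.5, 2.0, t, rng)
          for _ in range(n)]
merged = [sample_value(4.0, 2.0, t, rng) for _ in range(n)]

if __name__ == "__main__":
    print("scaled:", summary(scaled), "direct:", summary(direct))
    print("summed:", summary(summed), "merged:", summary(merged))
```

Matching first and second moments is only a necessary condition for equality in distribution, so agreement here is supporting evidence rather than confirmation.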

Moments

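The moment function gives the raw moments of $X(t)$, from which expected values, variances, skewness, and kurtosis can be found:

$$\operatorname{E}\big(X(t)^n\big) = \lambda^{-n}\, \frac{\Gamma(\gamma t + n)}{\Gamma(\gamma t)}, \quad n \geq 0,$$

where $\Gamma(z)$ is the gamma function.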
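As an illustration, the raw moments can be evaluated numerically from the ratio of gamma functions, and the variance recovered from the first two moments. The sketch below works with log-gamma values to avoid overflow for large arguments; the function name `raw_moment` is an assumption made for this example, with `shape` and `rate` denoting the parameters of the process.

```python
import math


def raw_moment(n, t, shape, rate):
    """E[X(t)^n] = rate^(-n) * Gamma(shape * t + n) / Gamma(shape * t)."""
    a = shape * t
    # Take the ratio on the log scale so large gamma values do not overflow.
    log_moment = math.lgamma(a + n) - math.lgamma(a) - n * math.log(rate)
    return math.exp(log_moment)


if __name__ == "__main__":
    t, shape, rate = 2.0, 3.0, 4.0
    m1 = raw_moment(1, t, shape, rate)
    m2 = raw_moment(2, t, shape, rate)
    print("mean:", m1)
    print("variance:", m2 - m1 ** 2)
```

With $n = 1$ this reduces to the mean $\gamma t / \lambda$, and the second central moment reduces to the variance $\gamma t / \lambda^2$.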

Moment generating function

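The moment generating function is the expected value of $\exp(\theta X)$, where $X$ is the random variable. For the gamma process,

$$\operatorname{E}\big(\exp(\theta X(t))\big) = \left(1 - \frac{\theta}{\lambda}\right)^{-\gamma t}, \quad \theta < \lambda.$$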
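One use of the moment generating function is that its derivative at zero gives the mean. The following sketch evaluates the closed form $(1 - \theta/\lambda)^{-\gamma t}$, valid for $\theta < \lambda$, and approximates that derivative with a central difference. The function names and the step size `h` are illustrative assumptions.

```python
def mgf(theta, t, shape, rate):
    """Moment generating function of X(t); finite only for theta < rate."""
    if theta >= rate:
        raise ValueError("the MGF is finite only for theta < rate")
    return (1.0 - theta / rate) ** (-shape * t)


def mean_from_mgf(t, shape, rate, h=1e-6):
    """Approximate E[X(t)] as the derivative of the MGF at theta = 0."""
    return (mgf(h, t, shape, rate) - mgf(-h, t, shape, rate)) / (2.0 * h)


if __name__ == "__main__":
    t, shape, rate = 2.0, 3.0, 4.0
    print(mean_from_mgf(t, shape, rate), "vs exact", shape * t / rate)
```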

Correlation

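The correlation between the values of a gamma process at two different times depends only on the ratio of those times:

$$\operatorname{Corr}\big(X(s), X(t)\big) = \sqrt{\frac{s}{t}}, \quad 0 < s < t,$$

for any gamma process $X(t)$.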
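This result follows from the independent increments property together with the variance $\gamma t / \lambda^2$ of the process at time $t$. A short derivation, writing $\gamma$ and $\lambda$ for the shape and rate parameters and taking $0 < s < t$:

$$
\begin{aligned}
\operatorname{Cov}\big(X(s), X(t)\big)
  &= \operatorname{Cov}\big(X(s),\, X(s) + [X(t) - X(s)]\big) \\
  &= \operatorname{Var}\big(X(s)\big) + \operatorname{Cov}\big(X(s),\, X(t) - X(s)\big) \\
  &= \frac{\gamma s}{\lambda^2} + 0, \\[4pt]
\operatorname{Corr}\big(X(s), X(t)\big)
  &= \frac{\gamma s / \lambda^2}{\sqrt{(\gamma s / \lambda^2)(\gamma t / \lambda^2)}}
   = \frac{s}{\sqrt{s t}}
   = \sqrt{\frac{s}{t}}.
\end{aligned}
$$

The covariance term vanishes because the increment $X(t) - X(s)$ is independent of $X(s)$. Both parameters cancel, so the correlation is the same for every gamma process.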

Related processes

The gamma process is used as the distribution for random time change in the variance gamma process. Specifically, combining Brownian motion with a gamma process produces a variance gamma process,[6] and a variance gamma process can be written as the difference of two gamma processes.[3]
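The random time change can be sketched as follows: over each grid step, a gamma increment supplies the amount of "random time" that elapses, and a Brownian motion with drift is advanced by that amount. This is a minimal illustration of one common construction, not necessarily the exact formulation used in the cited sources. The drift `theta` and volatility `sigma` of the Brownian motion, and the function name, are assumptions introduced for the example.

```python
import math
import random


def simulate_variance_gamma(theta, sigma, shape, rate, times, rng=None):
    """Sample a time-changed Brownian motion at strictly increasing times."""
    rng = rng or random.Random()
    path = []
    value = 0.0
    previous = 0.0
    for t in times:
        dt = t - previous
        if dt <= 0:
            raise ValueError("times must be positive and strictly increasing")
        # Random time elapsed over dt: an increment of the gamma process.
        dg = rng.gammavariate(shape * dt, 1.0 / rate)
        # Brownian motion with drift, advanced by dg units of random time.
        value += theta * dg + sigma * math.sqrt(dg) * rng.gauss(0.0, 1.0)
        path.append(value)
        previous = t
    return path


if __name__ == "__main__":
    grid = [0.25 * k for k in range(1, 9)]
    print(simulate_variance_gamma(0.5, 1.0, 2.0, 2.0, grid, random.Random(0)))
```

Unlike the gamma process itself, the resulting paths can move both up and down.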

Notes

- [1] Klenke 2008, p. 536.

- [2] Sánchez-Silva & Klutke 2016, p. 93.

- [3] Fu & Madan 2007, p. 38.

- [4] Sánchez-Silva & Klutke 2016, p. 133.

- [5] Sánchez-Silva & Klutke 2016, p. 94.

- [6] Applebaum 2004, pp. 58–59.

References

- Applebaum, David (2004). Lévy processes and stochastic calculus. Cambridge, UK; New York: Cambridge University Press. ISBN 0-521-83263-2.

- Fu, Michael; Madan, Dilip B. (2007). Advances in mathematical finance. Boston: Birkhäuser. ISBN 978-0-8176-4545-8.

- Klenke, Achim (2008). Probability theory: a comprehensive course. London: Springer. doi:10.1007/978-1-84800-048-3_24. ISBN 978-1-84800-048-3.

- Sánchez-Silva, Mauricio; Klutke, Georgia-Ann (2016). Reliability and life-cycle analysis of deteriorating systems. Cham: Springer. ISBN 978-3-319-20946-3.
