Jensen's inequality

In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906,[1] building on an earlier proof of the same inequality for doubly-differentiable functions by Otto Hölder in 1889.[2] Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form the inequality states that the convex transformation of a mean is less than or equal to the mean applied after convex transformation; it is a simple corollary that the opposite is true of concave transformations.[3]

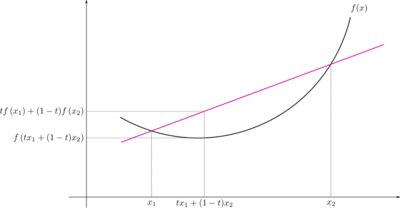

Jensen's inequality generalizes the statement that the secant line of a convex function lies above the graph of the function, which is Jensen's inequality for two points: the secant line consists of weighted means of the convex function (for t ∈ [0,1]),

$$t f(x_1) + (1-t) f(x_2),$$

while the graph of the function is the convex function of the weighted means,

$$f(t x_1 + (1-t) x_2).$$

Thus, Jensen's inequality is

$$f(t x_1 + (1-t) x_2) \le t f(x_1) + (1-t) f(x_2).$$

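In the context of probability theory, it is generally stated in the following form: if X is a random variable and φ is a convex function, then

$$\varphi(\operatorname{E}[X]) \le \operatorname{E}[\varphi(X)].$$

The difference between the two sides of the inequality, $\operatorname{E}[\varphi(X)] - \varphi(\operatorname{E}[X])$, is called the Jensen gap.[4]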
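The probabilistic statement and the Jensen gap can be checked numerically. The following is a minimal sketch, assuming a small discrete random variable and the convex function φ(x) = x²; the values, probabilities, and helper names are illustrative choices, not part of the statement itself.

```python
# Illustrative discrete random variable (values and probabilities are
# arbitrary assumptions made for this sketch).
values = [1.0, 2.0, 6.0]
probs = [0.5, 0.3, 0.2]


def phi(x):
    """A convex function chosen for illustration: phi(x) = x^2."""
    return x * x


def expectation(func, values, probs):
    """E[func(X)] for a discrete random variable X."""
    return sum(p * func(x) for x, p in zip(values, probs))


mean_x = expectation(lambda x: x, values, probs)  # E[X]
lhs = phi(mean_x)                                  # phi(E[X])
rhs = expectation(phi, values, probs)              # E[phi(X)]
jensen_gap = rhs - lhs                             # non-negative for convex phi

print(lhs, rhs, jensen_gap)
```

For φ(x) = x² the Jensen gap coincides with the variance of X, which is one way to see that it cannot be negative in this case.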

Statements

The classical form of Jensen's inequality involves several numbers and weights. The inequality can be stated quite generally using either the language of measure theory or (equivalently) probability. In the probabilistic setting, the inequality can be further generalized to its full strength.

Finite form

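For a real convex function $\varphi$, numbers $x_1, x_2, \ldots, x_n$ in its domain, and positive weights $a_i$, Jensen's inequality can be stated as:

$$\varphi\left(\frac{\sum a_i x_i}{\sum a_i}\right) \le \frac{\sum a_i \varphi(x_i)}{\sum a_i} \tag{1}$$

and the inequality is reversed if $\varphi$ is concave, which is

$$\varphi\left(\frac{\sum a_i x_i}{\sum a_i}\right) \ge \frac{\sum a_i \varphi(x_i)}{\sum a_i}. \tag{2}$$

Equality holds if and only if $x_1 = x_2 = \cdots = x_n$ or $\varphi$ is linear on a domain containing $x_1, x_2, \ldots, x_n$.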

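As a particular case, if the weights $a_i$ are all equal, then (1) and (2) become

$$\varphi\left(\frac{\sum x_i}{n}\right) \le \frac{\sum \varphi(x_i)}{n} \tag{3}$$

$$\varphi\left(\frac{\sum x_i}{n}\right) \ge \frac{\sum \varphi(x_i)}{n} \tag{4}$$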

For instance, the function log(x) is concave, so substituting $\varphi(x) = \log(x)$ in the previous formula (4) establishes the (logarithm of the) familiar arithmetic-mean/geometric-mean inequality:

$$\log\left(\frac{\sum_{i=1}^n x_i}{n}\right) \ge \frac{\sum_{i=1}^n \log(x_i)}{n}, \qquad\text{that is,}\qquad \frac{x_1 + x_2 + \cdots + x_n}{n} \ge \sqrt[n]{x_1 x_2 \cdots x_n}.$$

A common application has x as a function of another variable (or set of variables) t, that is, $x_i = g(t_i)$. All of this carries directly over to the general continuous case: the weights $a_i$ are replaced by a non-negative integrable function f(x), such as a probability distribution, and the summations are replaced by integrals.
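The finite form can be spot-checked numerically. The sketch below assumes illustrative numbers and weights, uses φ(x) = x² for the weighted convex case, and uses log for the equal-weight concave case that gives the arithmetic-mean/geometric-mean inequality; `weighted_mean` is a hypothetical helper introduced only for this example.

```python
import math


def weighted_mean(xs, weights):
    """Weighted mean sum(a_i * x_i) / sum(a_i) with positive weights a_i."""
    return sum(a * x for a, x in zip(weights, xs)) / sum(weights)


xs = [1.0, 4.0, 9.0]        # illustrative numbers in the domain
weights = [2.0, 1.0, 3.0]   # illustrative positive weights


def phi(x):
    """Convex function for the weighted check."""
    return x * x


# Weighted convex case: phi(weighted mean) <= weighted mean of phi.
convex_lhs = phi(weighted_mean(xs, weights))
convex_rhs = weighted_mean([phi(x) for x in xs], weights)

# Equal-weight concave case with log: log(AM) >= mean of logs = log(GM).
arithmetic_mean = sum(xs) / len(xs)
geometric_mean = math.exp(sum(math.log(x) for x in xs) / len(xs))

print(convex_lhs, convex_rhs)
print(arithmetic_mean, geometric_mean)
```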

Measure-theoretic form

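Let $(\Omega, A, \mu)$ be a probability space. Let $f : \Omega \to \mathbb{R}$ be a $\mu$-measurable function and $\varphi : \mathbb{R} \to \mathbb{R}$ be convex. Then:[5]

$$\varphi\left(\int_\Omega f \, \mathrm{d}\mu\right) \le \int_\Omega \varphi \circ f \, \mathrm{d}\mu.$$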

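In real analysis, we may require an estimate on

$$\varphi\left(\int_a^b f(x) \, \mathrm{d}x\right),$$

where $a, b \in \mathbb{R}$, and $f : [a, b] \to \mathbb{R}$ is a non-negative Lebesgue-integrable function. In this case, the Lebesgue measure of $[a, b]$ need not be unity. However, by integration by substitution, the interval can be rescaled so that it has measure unity. Then Jensen's inequality can be applied to get[6]

$$\varphi\left(\frac{1}{b-a}\int_a^b f(x) \, \mathrm{d}x\right) \le \frac{1}{b-a}\int_a^b \varphi(f(x)) \, \mathrm{d}x.$$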
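The rescaled interval version says that φ applied to the average of f over [a, b] is at most the average of φ(f) over [a, b]. A minimal numerical sketch, assuming f(x) = x on [0, 3], φ(y) = y², and a simple midpoint rule (`midpoint_integral` is a hypothetical helper written for this example):

```python
def midpoint_integral(func, a, b, n=1000):
    """Approximate the integral of func over [a, b] with the midpoint rule."""
    width = (b - a) / n
    return sum(func(a + (k + 0.5) * width) for k in range(n)) * width


def f(x):
    """Non-negative integrable function on [a, b] (illustrative choice)."""
    return x


def phi(y):
    """Convex function (illustrative choice)."""
    return y * y


a, b = 0.0, 3.0

# Dividing by (b - a) turns Lebesgue measure on [a, b] into a probability measure.
average_f = midpoint_integral(f, a, b) / (b - a)
lhs = phi(average_f)
rhs = midpoint_integral(lambda x: phi(f(x)), a, b) / (b - a)

print(lhs, rhs)
```

With these choices the exact values are 9/4 on the left and 3 on the right; the midpoint rule reproduces them up to a small discretization error.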

Probabilistic form

The same result can be equivalently stated in a probability theory setting, by a simple change of notation. Let $(\Omega, \mathfrak{F}, \operatorname{P})$ be a probability space, X an integrable real-valued random variable and φ a convex function. Then:

$$\varphi(\operatorname{E}[X]) \le \operatorname{E}[\varphi(X)].$$

In this probability setting, the measure μ is intended as a probability $\operatorname{P}$, the integral with respect to μ as an expected value $\operatorname{E}$, and the function $f$ as a random variable X.

Note that equality holds if and only if φ is a linear function on some convex set $A$ such that $\operatorname{P}(X \in A) = 1$ (which follows by inspecting the measure-theoretical proof below).

General inequality in a probabilistic setting

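More generally, let T be a real topological vector space, and X a T-valued integrable random variable. In this general setting, integrable means that there exists an element $\operatorname{E}[X]$ in T, such that for any element z in the dual space of T: $\operatorname{E}|\langle z, X \rangle| < \infty$, and $\langle z, \operatorname{E}[X] \rangle = \operatorname{E}[\langle z, X \rangle]$. Then, for any measurable convex function φ and any sub-σ-algebra $\mathfrak{G}$ of $\mathfrak{F}$:

$$\varphi\left(\operatorname{E}[X \mid \mathfrak{G}]\right) \le \operatorname{E}[\varphi(X) \mid \mathfrak{G}].$$

Here $\operatorname{E}[\cdot \mid \mathfrak{G}]$ stands for the expectation conditioned on the σ-algebra $\mathfrak{G}$. This general statement reduces to the previous ones when the topological vector space T is the real axis, and $\mathfrak{G}$ is the trivial σ-algebra {∅, Ω} (where ∅ is the empty set, and Ω is the sample space).[8]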
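In the simplest real-valued case the conditional inequality can be checked by hand. The sketch below assumes a six-point sample space with uniform probability and a sub-σ-algebra generated by a two-block partition, so that conditional expectation is just the block average; the data and the helper `conditional_expectation` are illustrative assumptions.

```python
# Finite sample space {0, ..., 5} with uniform probability (an assumption).
X = [1.0, 3.0, 5.0, -2.0, 0.0, 8.0]   # X(omega) for omega = 0..5
blocks = [[0, 1, 2], [3, 4, 5]]       # partition generating the sub-sigma-algebra


def conditional_expectation(values, blocks):
    """E[values | G] as a function of omega: the average over omega's block."""
    result = [0.0] * len(values)
    for block in blocks:
        average = sum(values[w] for w in block) / len(block)
        for w in block:
            result[w] = average
    return result


def phi(x):
    """Convex function (illustrative choice)."""
    return x * x


lhs = [phi(v) for v in conditional_expectation(X, blocks)]   # phi(E[X | G])
rhs = conditional_expectation([phi(x) for x in X], blocks)   # E[phi(X) | G]

# The inequality holds pointwise, for every omega.
print(all(l <= r for l, r in zip(lhs, rhs)))
```

Taking a single block containing all of Ω corresponds to the trivial σ-algebra and gives back the unconditional inequality.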

A sharpened and generalized form

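Let X be a one-dimensional random variable with mean $\mu$ and variance $\sigma^2 \ge 0$. Let $\varphi(x)$ be a twice differentiable function, and define the function

$$h(x) \triangleq \frac{\varphi(x) - \varphi(\mu)}{(x - \mu)^2} - \frac{\varphi'(\mu)}{x - \mu}.$$

Then[9]

$$\sigma^2 \inf \frac{\varphi''(x)}{2} \le \sigma^2 \inf h(x) \le \operatorname{E}[\varphi(X)] - \varphi(\operatorname{E}[X]) \le \sigma^2 \sup h(x) \le \sigma^2 \sup \frac{\varphi''(x)}{2}.$$

In particular, when $\varphi(x)$ is convex, then $\varphi''(x) \ge 0$, and the standard form of Jensen's inequality immediately follows for the case where $\varphi(x)$ is additionally assumed to be twice differentiable.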
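The sharpened bounds can be illustrated on a small example. This sketch assumes φ = exp and a three-point random variable whose mean is not one of its values; for simplicity the infimum and supremum of h are taken over the support points of X only (which is enough for a discrete X), and the outer bounds use the fact that φ'' = exp is increasing on the range of X. All numbers are illustrative assumptions.

```python
import math

values = [0.0, 1.0, 3.0]   # support of X (illustrative)
probs = [0.2, 0.5, 0.3]    # probabilities (illustrative)

phi = math.exp             # convex and twice differentiable
dphi = math.exp            # first derivative of exp
d2phi = math.exp           # second derivative of exp

mu = sum(p * x for x, p in zip(values, probs))
sigma2 = sum(p * (x - mu) ** 2 for x, p in zip(values, probs))


def h(x):
    """h(x) = (phi(x) - phi(mu)) / (x - mu)^2 - phi'(mu) / (x - mu), for x != mu."""
    return (phi(x) - phi(mu)) / (x - mu) ** 2 - dphi(mu) / (x - mu)


gap = sum(p * phi(x) for x, p in zip(values, probs)) - phi(mu)  # Jensen gap

h_values = [h(x) for x in values]
lower = sigma2 * min(h_values)
upper = sigma2 * max(h_values)

# exp'' is increasing, so its inf/sup over [min, max] sit at the endpoints.
crude_lower = sigma2 * d2phi(min(values)) / 2
crude_upper = sigma2 * d2phi(max(values)) / 2

print(crude_lower, lower, gap, upper, crude_upper)
```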

Proofs

Intuitive graphical proof

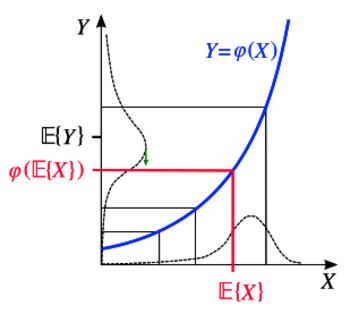

Jensen's inequality can be proved in several ways, and three different proofs corresponding to the different statements above will be offered. Before embarking on these mathematical derivations, however, it is worth analyzing an intuitive graphical argument based on the probabilistic case where X is a real-valued random variable (see figure). Assuming a hypothetical distribution of X values, one can immediately identify the position of $\operatorname{E}[X]$ and its image $\varphi(\operatorname{E}[X])$ in the graph. Noticing that for convex mappings Y = φ(x) of some x values the corresponding distribution of Y values is increasingly "stretched up" for increasing values of X, it is easy to see that the distribution of Y is broader in the interval corresponding to X > X0 and narrower in X < X0 for any X0; in particular, this is also true for $X_0 = \operatorname{E}[X]$. Consequently, in this picture the expectation of Y will always shift upwards with respect to the position of $\varphi(\operatorname{E}[X])$. A similar reasoning holds if the distribution of X covers a decreasing portion of the convex function, or both a decreasing and an increasing portion of it. This "proves" the inequality, i.e.

$$\varphi(\operatorname{E}[X]) \le \operatorname{E}[\varphi(X)] = \operatorname{E}[Y],$$

with equality when φ is not strictly convex, e.g. when it is a straight line, or when X follows a degenerate distribution (i.e. is a constant).

The proofs below formalize this intuitive notion.

Proof 1 (finite form)

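If $\lambda_1$ and $\lambda_2$ are two arbitrary nonnegative real numbers such that $\lambda_1 + \lambda_2 = 1$, then convexity of φ implies

$$\forall x_1, x_2: \qquad \varphi(\lambda_1 x_1 + \lambda_2 x_2) \le \lambda_1 \varphi(x_1) + \lambda_2 \varphi(x_2).$$

This can be generalized: if $\lambda_1, \ldots, \lambda_n$ are nonnegative real numbers such that $\lambda_1 + \cdots + \lambda_n = 1$, then

$$\varphi(\lambda_1 x_1 + \lambda_2 x_2 + \cdots + \lambda_n x_n) \le \lambda_1 \varphi(x_1) + \lambda_2 \varphi(x_2) + \cdots + \lambda_n \varphi(x_n),$$

for any $x_1, \ldots, x_n$.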

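The finite form of Jensen's inequality can be proved by induction: by the convexity hypothesis, the statement is true for n = 2. Suppose the statement is true for some n, so

$$\varphi\left(\sum_{i=1}^{n} \lambda_i x_i\right) \le \sum_{i=1}^{n} \lambda_i \varphi(x_i)$$

for any $\lambda_1, \ldots, \lambda_n$ such that $\lambda_1 + \cdots + \lambda_n = 1$.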

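One needs to prove it for n + 1. At least one of the $\lambda_i$ is strictly smaller than 1, say $\lambda_{n+1}$; therefore, by the convexity inequality:

$$\varphi\left(\sum_{i=1}^{n+1} \lambda_i x_i\right) = \varphi\left((1 - \lambda_{n+1}) \sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} x_i + \lambda_{n+1} x_{n+1}\right) \le (1 - \lambda_{n+1}) \, \varphi\left(\sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} x_i\right) + \lambda_{n+1} \varphi(x_{n+1}).$$

Since $\lambda_1 + \cdots + \lambda_n + \lambda_{n+1} = 1$,

$$\sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} = 1,$$

applying the inductive hypothesis gives

$$\varphi\left(\sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} x_i\right) \le \sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} \varphi(x_i),$$

therefore

$$\varphi\left(\sum_{i=1}^{n+1} \lambda_i x_i\right) \le (1 - \lambda_{n+1}) \sum_{i=1}^{n} \frac{\lambda_i}{1 - \lambda_{n+1}} \varphi(x_i) + \lambda_{n+1} \varphi(x_{n+1}) = \sum_{i=1}^{n+1} \lambda_i \varphi(x_i).$$

We deduce that the inequality holds for n + 1; by induction, the result holds for every integer n ≥ 2.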
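The induction step can be traced on concrete numbers. The sketch below assumes four illustrative weights and points and φ(x) = x²; it splits off the last point, renormalizes the remaining weights so that they sum to 1, and evaluates each quantity in the chain of inequalities. All variable names are hypothetical.

```python
def phi(x):
    """Convex function (illustrative choice)."""
    return x * x


lambdas = [0.1, 0.2, 0.3, 0.4]   # nonnegative weights summing to 1 (illustrative)
xs = [-2.0, 0.5, 1.0, 4.0]       # arbitrary points (illustrative)

last_weight, last_x = lambdas[-1], xs[-1]

# Renormalized weights lambda_i / (1 - lambda_{n+1}); they sum to 1.
renormalized = [lam / (1 - last_weight) for lam in lambdas[:-1]]
inner_point = sum(lam * x for lam, x in zip(renormalized, xs[:-1]))

# Left-hand side: phi of the full convex combination.
lhs = phi(sum(lam * x for lam, x in zip(lambdas, xs)))

# Step 1: two-point convexity applied to inner_point and the last point.
after_convexity = (1 - last_weight) * phi(inner_point) + last_weight * phi(last_x)

# Step 2: inductive hypothesis applied to the n renormalized weights.
after_induction = (1 - last_weight) * sum(
    lam * phi(x) for lam, x in zip(renormalized, xs[:-1])
) + last_weight * phi(last_x)

# Right-hand side: the full weighted sum of phi values.
rhs = sum(lam * phi(x) for lam, x in zip(lambdas, xs))

print(lhs, after_convexity, after_induction, rhs)
```

The last two quantities agree up to rounding, which mirrors the final equality in the displayed chain.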

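In order to obtain the general inequality from this finite form, one needs to use a density argument. The finite form can be rewritten as:

$$\varphi\left(\int x \, \mathrm{d}\mu_n(x)\right) \le \int \varphi(x) \, \mathrm{d}\mu_n(x),$$

where $\mu_n$ is a measure given by an arbitrary convex combination of Dirac deltas:

$$\mu_n = \sum_{i=1}^{n} \lambda_i \delta_{x_i}.$$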

Since convex functions are continuous, and since convex combinations of Dirac deltas are weakly dense in the set of probability measures (as could be easily verified), the general statement is obtained simply by a limiting procedure.

Proof 2 (measure-theoretic form)

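Let $g$ be a real-valued $\mu$-integrable function on a probability space $\Omega$, and let $\varphi$ be a convex function on the real numbers. Since $\varphi$ is convex, at each real number $x$ we have a nonempty set of subderivatives, which may be thought of as lines touching the graph of $\varphi$ at $x$, but which are below the graph of $\varphi$ at all points (support lines of the graph).

Now, if we define

$$x_0 := \int_\Omega g \, \mathrm{d}\mu,$$

because of the existence of subderivatives for convex functions, we may choose $a$ and $b$ such that

$$a x + b \le \varphi(x),$$

for all real $x$ and

$$a x_0 + b = \varphi(x_0).$$

But then we have that

$$\varphi \circ g(\omega) \ge a g(\omega) + b$$

for almost all $\omega \in \Omega$. Since we have a probability measure, the integral is monotone with $\mu(\Omega) = 1$ so that

$$\int_\Omega \varphi \circ g \, \mathrm{d}\mu \ge \int_\Omega (a g + b) \, \mathrm{d}\mu = a \int_\Omega g \, \mathrm{d}\mu + b \int_\Omega \mathrm{d}\mu = a x_0 + b = \varphi(x_0) = \varphi\left(\int_\Omega g \, \mathrm{d}\mu\right),$$

as desired.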
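The support-line argument can be followed numerically. This sketch assumes a four-point probability space, φ(x) = x², and the support line at x0 obtained from the derivative (slope a = φ'(x0), intercept b = φ(x0) − a·x0); for a differentiable φ this is one valid choice of subderivative. The data are illustrative assumptions.

```python
g = [-1.0, 0.0, 2.0, 5.0]    # g(omega) on a four-point space (illustrative)
mu = [0.1, 0.4, 0.3, 0.2]    # probability of each point (illustrative)


def phi(x):
    """Convex, differentiable function (illustrative choice)."""
    return x * x


def dphi(x):
    """Derivative of phi, used as the slope of the support line."""
    return 2 * x


x0 = sum(m * value for m, value in zip(mu, g))   # integral of g with respect to mu

a = dphi(x0)               # slope of the support line at x0
b = phi(x0) - a * x0       # intercept chosen so that a*x0 + b == phi(x0)

# The support line lies below phi at every value taken by g.
pointwise_ok = all(a * value + b <= phi(value) for value in g)

# Integrating both sides of the pointwise inequality against mu.
integral_phi_g = sum(m * phi(value) for m, value in zip(mu, g))
integral_line = sum(m * (a * value + b) for m, value in zip(mu, g))

print(pointwise_ok, integral_phi_g, integral_line, phi(x0))
```

The integral of the line equals φ(x0) because μ has total mass 1, which is exactly the step that closes the proof.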

Proof 3 (general inequality in a probabilistic setting)

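Let X be an integrable random variable that takes values in a real topological vector space T. Since $\varphi : T \to \mathbb{R}$ is convex, for any $x, y \in T$, the quantity

$$\frac{\varphi(x + \theta y) - \varphi(x)}{\theta}$$

is decreasing as θ approaches 0+. In particular, the subdifferential of $\varphi$ evaluated at x in the direction y is well-defined by

$$(D\varphi)(x) \cdot y := \lim_{\theta \downarrow 0} \frac{\varphi(x + \theta y) - \varphi(x)}{\theta} = \inf_{\theta > 0} \frac{\varphi(x + \theta y) - \varphi(x)}{\theta}.$$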

The argument below uses linearity of the subdifferential in y; this is not evident in general, and establishing it requires the Hahn–Banach theorem.[citation needed] Since the infimum taken in the right-hand side of the previous formula is no larger than the value of the same term for θ = 1, one gets

$$\varphi(x) \le \varphi(x + y) - (D\varphi)(x) \cdot y.$$

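In particular, for an arbitrary sub-σ-algebra $\mathfrak{G}$ we can evaluate the last inequality when $x = \operatorname{E}[X \mid \mathfrak{G}]$ and $y = X - \operatorname{E}[X \mid \mathfrak{G}]$ to obtain

$$\varphi(\operatorname{E}[X \mid \mathfrak{G}]) \le \varphi(X) - (D\varphi)(\operatorname{E}[X \mid \mathfrak{G}]) \cdot (X - \operatorname{E}[X \mid \mathfrak{G}]).$$

Now, if we take the expectation conditioned on $\mathfrak{G}$ on both sides of the previous expression, we get the result since:

$$\operatorname{E}\left[(D\varphi)(\operatorname{E}[X \mid \mathfrak{G}]) \cdot (X - \operatorname{E}[X \mid \mathfrak{G}]) \mid \mathfrak{G}\right] = (D\varphi)(\operatorname{E}[X \mid \mathfrak{G}]) \cdot \operatorname{E}\left[X - \operatorname{E}[X \mid \mathfrak{G}] \mid \mathfrak{G}\right] = 0,$$

by the linearity of the subdifferential in the y variable, and the following well-known property of the conditional expectation:

$$\operatorname{E}\left[\operatorname{E}[X \mid \mathfrak{G}] \mid \mathfrak{G}\right] = \operatorname{E}[X \mid \mathfrak{G}].$$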

Applications and special cases

Form involving a probability density function

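Suppose Ω is a measurable subset of the real line and f(x) is a non-negative function such that

$$\int_{-\infty}^{\infty} f(x) \, \mathrm{d}x = 1.$$

In probabilistic language, f is a probability density function.

Then Jensen's inequality becomes the following statement about convex integrals:

If g is any real-valued measurable function and $\varphi$ is convex over the range of g, then

$$\varphi\left(\int_{-\infty}^{\infty} g(x) f(x) \, \mathrm{d}x\right) \le \int_{-\infty}^{\infty} \varphi(g(x)) f(x) \, \mathrm{d}x.$$

If g(x) = x, then this form of the inequality reduces to a commonly used special case:

$$\varphi\left(\int_{-\infty}^{\infty} x \, f(x) \, \mathrm{d}x\right) \le \int_{-\infty}^{\infty} \varphi(x) \, f(x) \, \mathrm{d}x.$$

This is applied in variational Bayesian methods.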

Example: even moments of a random variable

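If $g(x) = x^{2n}$, and X is a random variable, then g is convex as

$$\frac{\mathrm{d}^2 g}{\mathrm{d}x^2}(x) = 2n(2n - 1) x^{2n-2} \ge 0 \quad \forall x \in \mathbb{R}$$

and so

$$g(\operatorname{E}[X]) = (\operatorname{E}[X])^{2n} \le \operatorname{E}[X^{2n}].$$

In particular, if some even moment 2n of X is finite, X has a finite mean. An extension of this argument shows X has finite moments of every order $l \in \mathbb{N}$ dividing n.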
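As a small worked instance of the even-moment bound, assume (purely for illustration) that X takes the values −1 and 2 with probability 1/2 each. Then $\operatorname{E}[X] = \tfrac{1}{2}$, and for n = 1 and n = 2:

$$\left(\operatorname{E}[X]\right)^{2} = \frac{1}{4} \le \operatorname{E}[X^{2}] = \frac{1 + 4}{2} = \frac{5}{2}, \qquad \left(\operatorname{E}[X]\right)^{4} = \frac{1}{16} \le \operatorname{E}[X^{4}] = \frac{1 + 16}{2} = \frac{17}{2}.$$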

Alternative finite form

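Let $\Omega = \{x_1, \ldots, x_n\}$, and take μ to be the counting measure on Ω, then the general form reduces to a statement about sums:

$$\varphi\left(\sum_{i=1}^{n} g(x_i) \lambda_i\right) \le \sum_{i=1}^{n} \varphi(g(x_i)) \lambda_i,$$

provided that $\lambda_i \ge 0$ and

$$\lambda_1 + \cdots + \lambda_n = 1.$$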

There is also an infinite discrete form.

Statistical physics

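Jensen's inequality is of particular importance in statistical physics when the convex function is an exponential, giving:

$$e^{\operatorname{E}[X]} \le \operatorname{E}\left[e^{X}\right],$$

where the expected values are with respect to some probability distribution in the random variable X.

Proof: Let $\varphi(x) = e^{x}$ in

$$\varphi(\operatorname{E}[X]) \le \operatorname{E}[\varphi(X)].$$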

Information theory

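If p(x) is the true probability density for X, and q(x) is another density, then applying Jensen's inequality for the random variable Y(X) = q(X)/p(X) and the convex function φ(y) = −log(y) gives

$$\operatorname{E}[\varphi(Y)] \ge \varphi(\operatorname{E}[Y]),$$

$$-D(p(x) \| q(x)) = \int p(x) \log\left(\frac{q(x)}{p(x)}\right) \mathrm{d}x \le \log\left(\int p(x) \frac{q(x)}{p(x)} \, \mathrm{d}x\right) = \log\left(\int q(x) \, \mathrm{d}x\right) = 0.$$

Therefore:

$$-\int p(x) \log p(x) \, \mathrm{d}x \le -\int p(x) \log q(x) \, \mathrm{d}x,$$

a result called Gibbs' inequality.

It shows that the average message length is minimised when codes are assigned on the basis of the true probabilities p rather than any other distribution q. The quantity that is non-negative is called the Kullback–Leibler divergence of q from p, where $D(p(x) \| q(x)) = \int p(x) \log\left(\frac{p(x)}{q(x)}\right) \mathrm{d}x$.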

Since −log(x) is a strictly convex function for x > 0, it follows that equality holds when p(x) equals q(x) almost everywhere.
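Gibbs' inequality is easy to check in a discrete analogue, where the integrals become sums over outcomes. The sketch below assumes two illustrative probability vectors p and q with strictly positive entries and natural logarithms; `entropy`, `cross_entropy`, and `kl_divergence` are hypothetical helpers written for this example.

```python
import math


def entropy(p):
    """-sum p_i log p_i (discrete analogue of -integral p log p)."""
    return -sum(pi * math.log(pi) for pi in p)


def cross_entropy(p, q):
    """-sum p_i log q_i (discrete analogue of -integral p log q)."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q))


def kl_divergence(p, q):
    """D(p || q) = sum p_i log(p_i / q_i), non-negative by Jensen's inequality."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


p = [0.5, 0.25, 0.25]   # "true" distribution (illustrative)
q = [0.4, 0.4, 0.2]     # another distribution (illustrative)

print(entropy(p), cross_entropy(p, q), kl_divergence(p, q))
```

The divergence equals the cross-entropy minus the entropy, and it vanishes when q is replaced by p itself.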

Rao–Blackwell theorem

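If L is a convex function and $\mathfrak{G}$ a sub-sigma-algebra, then, from the conditional version of Jensen's inequality, we get

$$L(\operatorname{E}[\delta(X) \mid \mathfrak{G}]) \le \operatorname{E}[L(\delta(X)) \mid \mathfrak{G}] \quad \Longrightarrow \quad \operatorname{E}[L(\operatorname{E}[\delta(X) \mid \mathfrak{G}])] \le \operatorname{E}[L(\delta(X))].$$

So if δ(X) is some estimator of an unobserved parameter θ given a vector of observables X; and if T(X) is a sufficient statistic for θ; then an improved estimator, in the sense of having a smaller expected loss L, can be obtained by calculating

$$\delta_1(X) = \operatorname{E}_\theta[\delta(X') \mid T(X') = T(X)],$$

the expected value of δ with respect to θ, taken over all possible vectors of observations X compatible with the same value of T(X) as that observed. Further, because T is a sufficient statistic, $\delta_1(X)$ does not depend on θ and hence is a statistic.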

This result is known as the Rao–Blackwell theorem.
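A small exact computation shows the improvement. The sketch below assumes n independent Bernoulli(θ) observations, the crude estimator δ(X) = X₁, the sufficient statistic T(X) = X₁ + … + Xₙ, and squared-error loss; it enumerates every outcome, so no simulation is involved. The function name and parameter choices are illustrative assumptions.

```python
from itertools import product


def rao_blackwell_risks(theta, n=3):
    """Exact squared-error risks of delta(X) = X_1 and of its conditioned version."""
    outcomes = list(product([0, 1], repeat=n))

    def prob(x):
        """Probability of outcome x under independent Bernoulli(theta) trials."""
        successes = sum(x)
        return theta ** successes * (1 - theta) ** (n - successes)

    def delta(x):
        """Crude estimator: the first observation only."""
        return x[0]

    def delta_1(x):
        """E[delta(X') | T(X') = T(x)] with T the number of successes."""
        compatible = [y for y in outcomes if sum(y) == sum(x)]
        total = sum(prob(y) for y in compatible)
        return sum(prob(y) * delta(y) for y in compatible) / total

    def loss(estimate):
        """Convex loss: squared error."""
        return (estimate - theta) ** 2

    risk_delta = sum(prob(x) * loss(delta(x)) for x in outcomes)
    risk_delta_1 = sum(prob(x) * loss(delta_1(x)) for x in outcomes)
    return risk_delta, risk_delta_1


risk_before, risk_after = rao_blackwell_risks(theta=0.3, n=3)
print(risk_before, risk_after)
```

In this setting the conditioned estimator works out to the sample mean T(X)/n, so its value does not involve θ, and its risk is smaller by a factor of n.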

See also

- Karamata's inequality for a more general inequality

- Popoviciu's inequality

- Law of averages

- A proof without words of Jensen's inequality

Notes

- ↑ Jensen, J. L. W. V. (1906). "Sur les fonctions convexes et les inégalités entre les valeurs moyennes". Acta Mathematica 30 (1): 175–193. doi:10.1007/BF02418571. https://zenodo.org/record/2371297.

- ↑ Guessab, A.; Schmeisser, G. (2013). "Necessary and sufficient conditions for the validity of Jensen's inequality". Archiv der Mathematik 100 (6): 561–570. doi:10.1007/s00013-013-0522-3.

- ↑ Dekking, F.M.; Kraaikamp, C.; Lopuhaa, H.P.; Meester, L.E. (2005). A Modern Introduction to Probability and Statistics: Understanding Why and How. Springer Texts in Statistics. London: Springer. doi:10.1007/1-84628-168-7. ISBN 978-1-85233-896-1. https://link.springer.com/book/10.1007%2F1-84628-168-7.

- ↑ Gao, Xiang; Sitharam, Meera; Roitberg, Adrian (2019). "Bounds on the Jensen Gap, and Implications for Mean-Concentrated Distributions". The Australian Journal of Mathematical Analysis and Applications 16 (2). https://ajmaa.org/searchroot/files/pdf/v16n2/v16i2p14.pdf.

- ↑ p. 25 of Rick Durrett (2019). Probability: Theory and Examples (5th ed.). Cambridge University Press. ISBN 978-1108473682. https://services.math.duke.edu/~rtd/PTE/pte.html.

- ↑ Niculescu, Constantin P. "Integral inequalities", P. 12.

- ↑ p. 29 of Rick Durrett (2019). Probability: Theory and Examples (5th ed.). Cambridge University Press. ISBN 978-1108473682. https://services.math.duke.edu/~rtd/PTE/pte.html.

- ↑ Attention: In this generality additional assumptions on the convex function and/ or the topological vector space are needed, see Example (1.3) on p. 53 in Perlman, Michael D. (1974). "Jensen's Inequality for a Convex Vector-Valued Function on an Infinite-Dimensional Space". Journal of Multivariate Analysis 4 (1): 52–65. doi:10.1016/0047-259X(74)90005-0.

- ↑ Liao, J.; Berg, A (2018). "Sharpening Jensen's Inequality". American Statistician 73 (3): 278–281. doi:10.1080/00031305.2017.1419145.

- ↑ Bradley, CJ (2006). Introduction to Inequalities. Leeds, United Kingdom: United Kingdom Mathematics Trust. p. 97. ISBN 978-1-906001-11-7. https://www.ukmt.org.uk/product/85.

References

- David Chandler (1987). Introduction to Modern Statistical Mechanics. Oxford. ISBN 0-19-504277-8. https://archive.org/details/introductiontomo0000chan.

- Tristan Needham (1993) "A Visual Explanation of Jensen's Inequality", American Mathematical Monthly 100(8):768–71.

- Nicola Fusco; Paolo Marcellini; Carlo Sbordone (1996). Analisi Matematica Due. Liguori. ISBN 978-88-207-2675-1.

- Walter Rudin (1987). Real and Complex Analysis. McGraw-Hill. ISBN 0-07-054234-1.

- Rick Durrett (2019). Probability: Theory and Examples (5th ed.). Cambridge University Press. pp. 430. ISBN 978-1108473682. https://services.math.duke.edu/~rtd/PTE/pte.html. Retrieved 21 Dec 2020.

- Sam Savage (2012) The Flaw of Averages: Why We Underestimate Risk in the Face of Uncertainty (1st ed.) Wiley. ISBN 978-0471381976

External links

- Jensen's Operator Inequality of Hansen and Pedersen.

- Hazewinkel, Michiel, ed. (2001), "Jensen inequality", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=p/j054220

- Weisstein, Eric W.. "Jensen's inequality". http://mathworld.wolfram.com/JensensInequality.html.

- Arthur Lohwater (1982). "Introduction to Inequalities". Online e-book in PDF format. http://www.mediafire.com/file/1mw1tkgozzu.
