Sample space


In probability theory, the sample space (also called sample description space,[1] possibility space,[2] or outcome space[3]) of an experiment or random trial is the set of all possible outcomes or results of that experiment.[4] A sample space is usually denoted using set notation, and the possible ordered outcomes, or sample points,[5] are listed as elements in the set. It is common to refer to a sample space by the labels S, Ω, or U (for "universal set"). The elements of a sample space may be numbers, words, letters, or symbols, and a sample space itself can be finite, countably infinite, or uncountably infinite.[6]

A subset of the sample space is an event, denoted by $E$. If the outcome of an experiment is included in $E$, then event $E$ has occurred.[7]

For example, if the experiment is tossing a single coin, the sample space is the set $\{H, T\}$, where the outcome $H$ means that the coin lands heads and the outcome $T$ means that it lands tails.[8] The possible events are $E = \emptyset$, $E = \{H\}$, $E = \{T\}$, and $E = \{H, T\}$. For tossing two coins, the sample space is $\{HH, HT, TH, TT\}$, where the outcome is $HH$ if both coins are heads, $HT$ if the first coin is heads and the second is tails, $TH$ if the first coin is tails and the second is heads, and $TT$ if both coins are tails.[9] The event that at least one of the coins is heads is given by $E = \{HH, HT, TH\}$.
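The coin examples can be reproduced with a minimal Python sketch, assuming outcomes are encoded as strings such as "H" and "HT". The helper `power_set` is hypothetical: it lists every subset of a finite sample space, which for a finite space is the full collection of events.

```python
from itertools import chain, combinations, product

def power_set(space):
    """Return every subset of a finite sample space, as frozensets (hypothetical helper)."""
    items = sorted(space)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))]

# Single coin toss: sample space {H, T}
one_coin = {"H", "T"}
events = power_set(one_coin)
print(len(events))  # 4: {}, {H}, {T}, {H, T}

# Two coin tosses: ordered pairs of single-coin outcomes, joined into strings
two_coins = {a + b for a, b in product("HT", repeat=2)}
print(sorted(two_coins))  # ['HH', 'HT', 'TH', 'TT']

# Event "at least one head"
at_least_one_head = {o for o in two_coins if "H" in o}
print(sorted(at_least_one_head))  # ['HH', 'HT', 'TH']

# An event E has occurred when the observed outcome lies in E
outcome = "TH"
print(outcome in at_least_one_head)  # True
```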

For tossing a single six-sided die one time, where the result of interest is the number of pips facing up, the sample space is $\{1, 2, 3, 4, 5, 6\}$.[10]

A well-defined, non-empty sample space is one of three components in a probabilistic model (a probability space). The other two basic elements are a well-defined set of possible events (an event space), which is typically the power set of $S$ if $S$ is discrete or a σ-algebra on $S$ if it is continuous, and a probability assigned to each event (a probability measure function).[11]
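For a finite sample space the three components can be written down explicitly. The following is a sketch for one roll of a die, assuming the die is fair (so each outcome gets the weight 1/6); the names `omega`, `event_space`, `weight`, and `prob` are illustrative only. It uses the power set as the event space and checks a few of the measure's defining properties.

```python
from fractions import Fraction
from itertools import chain, combinations

# Sample space for one roll of a six-sided die
omega = frozenset(range(1, 7))

# Event space: the power set of omega (appropriate because omega is finite)
event_space = [frozenset(c) for c in chain.from_iterable(
    combinations(sorted(omega), r) for r in range(len(omega) + 1))]

# Probability measure: assumed uniform weight 1/6 on each outcome (fair die)
weight = {s: Fraction(1, 6) for s in omega}

def prob(event):
    """P(E) is the sum of the weights of the outcomes in E."""
    return sum((weight[s] for s in event), Fraction(0))

# Sanity checks on this finite probability space
assert len(event_space) == 2 ** 6
assert prob(omega) == 1
assert all(prob(e) >= 0 for e in event_space)
# Additivity for two disjoint events
a, b = frozenset({1, 2}), frozenset({5})
assert prob(a | b) == prob(a) + prob(b)

print(prob(frozenset({2, 4, 6})))  # 1/2
```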

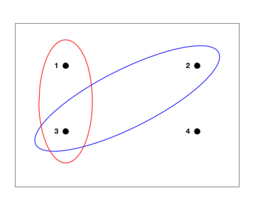

A sample space can be represented visually by a rectangle, with the outcomes of the sample space denoted by points within the rectangle. The events may be represented by ovals, where the points enclosed within the oval make up the event.[12]

Conditions of a sample space

A set $\Omega$ with outcomes $s_1, s_2, \ldots, s_n$ (i.e. $\Omega = \{s_1, s_2, \ldots, s_n\}$) must meet some conditions in order to be a sample space:[13]

- The outcomes must be mutually exclusive, i.e. if $s_j$ occurs, then no other $s_i$ will take place, for all $i, j = 1, 2, \ldots, n$ with $i \neq j$.[6]

- The outcomes must be collectively exhaustive, i.e. in every experiment (or random trial) some outcome $s_i \in \Omega$, for $i \in \{1, 2, \ldots, n\}$, always occurs.[6]

- The sample space ($\Omega$) must have the right granularity depending on what the experimenter is interested in. Irrelevant information must be removed from the sample space and the right abstraction must be chosen.

For instance, in the trial of tossing a coin, one possible sample space is $\Omega_1 = \{H, T\}$, where $H$ is the outcome where the coin lands heads and $T$ is for tails. Another possible sample space could be $\Omega_2 = \{(H, R), (H, NR), (T, R), (T, NR)\}$. Here, $R$ denotes a rainy day and $NR$ is a day where it is not raining. For most experiments, $\Omega_1$ would be a better choice than $\Omega_2$, as an experimenter likely does not care about how the weather affects the coin toss.
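The relationship between the two choices can be sketched in Python. Under the assumption that outcomes of $\Omega_2$ are represented as (face, weather) tuples, a hypothetical `coarsen` function discards the irrelevant weather component and maps each finer outcome onto exactly one outcome of $\Omega_1$.

```python
from itertools import product

# Coarse sample space: only the face of the coin
omega_1 = {"H", "T"}

# Finer sample space: coin face paired with weather (R = rain, NR = no rain)
omega_2 = set(product(["H", "T"], ["R", "NR"]))

def coarsen(outcome):
    """Hypothetical projection: drop the weather component of an outcome in omega_2."""
    face, _weather = outcome
    return face

# Every finer outcome maps onto exactly one coarse outcome, and all coarse outcomes are hit
assert {coarsen(o) for o in omega_2} == omega_1
print(len(omega_1), len(omega_2))  # 2 4
```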

Multiple sample spaces

For many experiments, there may be more than one plausible sample space available, depending on what result is of interest to the experimenter. For example, when drawing a card from a standard deck of fifty-two playing cards, one possibility for the sample space could be the various ranks (Ace through King), while another could be the suits (clubs, diamonds, hearts, or spades).[4][14] A more complete description of outcomes, however, could specify both the rank and the suit, and a sample space describing each individual card can be constructed as the Cartesian product of the two sample spaces noted above (this space would contain fifty-two equally likely outcomes). Still other sample spaces are possible, such as whether a card is right-side up or upside down, if some cards have been flipped when shuffling.
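A short Python sketch of the Cartesian-product construction, assuming ranks are written as the strings "A" through "K"; the names `ranks`, `suits`, and `deck` are illustrative. `itertools.product` forms the ordered (rank, suit) pairs, and keeping only one component of each pair recovers the coarser rank or suit space.

```python
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]

# Each card is an ordered (rank, suit) pair: the Cartesian product of the two spaces
deck = list(product(ranks, suits))
print(len(deck))  # 52

# Coarser sample spaces recovered by keeping one component of each card
rank_space = {r for r, _ in deck}
suit_space = {s for _, s in deck}
print(len(rank_space), len(suit_space))  # 13 4
```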

Equally likely outcomes

Some treatments of probability assume that the various outcomes of an experiment are always defined so as to be equally likely.[15] For any sample space with $N$ equally likely outcomes, each outcome is assigned the probability $\frac{1}{N}$.[16] However, some experiments are not easily described by a sample space of equally likely outcomes. For example, if one were to toss a thumbtack many times and observe whether it landed with its point upward or downward, there is no physical symmetry to suggest that the two outcomes should be equally likely.[17]

Though most random phenomena do not have equally likely outcomes, it can be helpful to define a sample space in such a way that outcomes are at least approximately equally likely, since this condition significantly simplifies the computation of probabilities for events within the sample space. If each individual outcome occurs with the same probability, then the probability of any event becomes simply:[18]: 346–347

$$P(\text{event}) = \frac{\text{number of outcomes in event}}{\text{number of outcomes in sample space}}$$

For example, if two fair six-sided dice are thrown to generate two uniformly distributed integers, $D_1$ and $D_2$, each in the range from 1 to 6, inclusive, the 36 possible ordered pairs of outcomes $(D_1, D_2)$ constitute a sample space of equally likely outcomes. In this case, the formula above applies, for example when calculating the probability of a particular sum of the two rolls. The probability of the event that the sum $D_1 + D_2$ is five is $\frac{4}{36} = \frac{1}{9}$, since four of the thirty-six equally likely pairs of outcomes, namely $(1, 4)$, $(2, 3)$, $(3, 2)$, and $(4, 1)$, sum to five.
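The counting argument can be checked by brute-force enumeration. This Python sketch lists the 36 ordered pairs and applies the equally-likely formula with exact fractions; `probability` is a hypothetical helper that simply divides the size of the event by the size of the sample space, which is only valid because every pair is assumed equally likely.

```python
from fractions import Fraction
from itertools import product

# Sample space: the 36 equally likely ordered pairs (D1, D2)
space = list(product(range(1, 7), repeat=2))

def probability(event, space):
    """Equally likely outcomes: |event| / |space| (hypothetical helper)."""
    return Fraction(len(event), len(space))

sum_is_five = [(d1, d2) for d1, d2 in space if d1 + d2 == 5]
print(sum_is_five)  # [(1, 4), (2, 3), (3, 2), (4, 1)]
print(probability(sum_is_five, space))  # 1/9, i.e. 4/36
```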

If the result of interest is instead the sum obtained from rolling two six-sided dice, the formula above can still be applied to the 36 equally likely ordered pairs, because the dice are fair, but the number of pairs that make up a given sum varies. A sum of two occurs only with the outcome $\{(1, 1)\}$, so its probability is $\frac{1}{36}$. For a sum of seven, the outcomes in the event are $\{(1, 6), (6, 1), (2, 5), (5, 2), (3, 4), (4, 3)\}$, so the probability is $\frac{6}{36} = \frac{1}{6}$.[19]
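A Python sketch of the full distribution of sums, assuming fair dice. It counts how many of the 36 ordered pairs produce each sum from 2 to 12. The result shows why the eleven possible sums cannot be treated as equally likely outcomes themselves.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count how many of the 36 ordered pairs give each sum from 2 to 12
counts = Counter(d1 + d2 for d1, d2 in product(range(1, 7), repeat=2))
dist = {s: Fraction(n, 36) for s, n in sorted(counts.items())}

print(dist[2], dist[7])  # 1/36 1/6
# The 11 sums are not equally likely, so assigning 1/11 to each sum would be wrong
assert sum(dist.values()) == 1
```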

Simple random sample

In statistics, inferences are made about characteristics of a population by studying a sample of that population's individuals. In order to arrive at a sample that presents an unbiased estimate of the true characteristics of the population, statisticians often seek to study a simple random sample—that is, a sample in which every individual in the population is equally likely to be included.[18]: 274–275 The result of this is that every possible combination of individuals who could be chosen for the sample has an equal chance to be the sample that is selected (that is, the space of simple random samples of a given size from a given population is composed of equally likely outcomes).[20]
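The space of simple random samples can be enumerated directly for a small case. This is a sketch assuming a hypothetical population of five individuals and a sample size of two. Every 2-element subset is one equally likely outcome, and as a consequence each individual is included with probability $n/N$.

```python
from fractions import Fraction
from itertools import combinations
from math import comb

# Hypothetical population of five individuals and sample size n = 2
population = ["a", "b", "c", "d", "e"]
n = 2

# Sample space of simple random samples: every n-element subset of the population
samples = list(combinations(population, n))
assert len(samples) == comb(len(population), n)  # C(5, 2) = 10

# Each possible sample is equally likely
p_sample = Fraction(1, len(samples))
print(p_sample)  # 1/10

# Consequently each individual is included with probability n / N
p_include_a = sum(p_sample for s in samples if "a" in s)
print(p_include_a)  # 2/5
```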

Infinitely large sample spaces

In an elementary approach to probability, any subset of the sample space is usually called an event.[9] However, this gives rise to problems when the sample space is continuous, so that a more precise definition of an event is necessary. Under this definition only measurable subsets of the sample space, constituting a σ-algebra over the sample space itself, are considered events.

An example of an infinitely large sample space is measuring the lifetime of a light bulb. The corresponding sample space would be [0, ∞).[9]

See also

- Parameter space

- Probability space

- Space (mathematics)

- Set (mathematics)

- Event (probability theory)

- σ-algebra

References

- [1] Stark, Henry; Woods, John W. (2002). Probability and Random Processes with Applications to Signal Processing (3rd ed.). Pearson. p. 7. ISBN 9788177583564.

- [2] Forbes, Catherine; Evans, Merran; Hastings, Nicholas; Peacock, Brian (2011). Statistical Distributions (4th ed.). Wiley. p. 3. ISBN 9780470390634. https://archive.org/details/statisticaldistr00cfor.

- [3] Hogg, Robert; Tanis, Elliot; Zimmerman, Dale (December 24, 2013). Probability and Statistical Inference. Pearson Education, Inc. p. 10. ISBN 978-0321923271. "The collection of all possible outcomes... is called the outcome space."

- [4] Albert, Jim (1998-01-21). "Listing All Possible Outcomes (The Sample Space)". Bowling Green State University. http://www-math.bgsu.edu/~albert/m115/probability/sample_space.html.

- [5] Soong, T. T. (2004). Fundamentals of Probability and Statistics for Engineers. Chichester: Wiley. ISBN 0-470-86815-5. OCLC 55135988.

- [6] "UOR_2.1". https://web.mit.edu/urban_or_book/www/book/chapter2/2.1.html.

- [7] Ross, Sheldon (2010). A First Course in Probability (8th ed.). Pearson Prentice Hall. p. 23. ISBN 978-0136033134. http://julio.staff.ipb.ac.id/files/2015/02/Ross_8th_ed_English.pdf. Retrieved 2021-12-02.

- [8] Dekking, F. M. (2005). A Modern Introduction to Probability and Statistics: Understanding Why and How. Springer. ISBN 1-85233-896-2. OCLC 783259968.

- [9] "Sample Space, Events and Probability". https://faculty.math.illinois.edu/~kkirkpat/SampleSpace.pdf.

- [10] Larsen, R. J.; Marx, M. L. (2001). An Introduction to Mathematical Statistics and Its Applications (3rd ed.). Upper Saddle River, NJ: Prentice Hall. p. 22. ISBN 9780139223037.

- [11] LaValle, Steven M. (2006). Planning Algorithms. Cambridge University Press. p. 442. http://lavalle.pl/planning/ch9.pdf.

- [12] "Sample Spaces, Events, and Their Probabilities". https://saylordotorg.github.io/text_introductory-statistics/s07-01-sample-spaces-events-and-their.html.

- [13] Tsitsiklis, John (Spring 2018). "Sample Spaces". Massachusetts Institute of Technology. https://ocw.mit.edu/resources/res-6-012-introduction-to-probability-spring-2018/part-i-the-fundamentals.

- [14] Jones, James (1996). "Stats: Introduction to Probability - Sample Spaces". Richland Community College. https://people.richland.edu/james/lecture/m170/ch05-int.html.

- [15] Foerster, Paul A. (2006). Algebra and Trigonometry: Functions and Applications, Teacher's Edition (Classics ed.). Prentice Hall. p. 633. ISBN 0-13-165711-9. https://archive.org/details/algebratrigonome00paul_0/page/633.

- [16] "Equally Likely outcomes". https://www3.nd.edu/~dgalvin1/10120/10120_S16/Topic09_7p2_Galvin.pdf.

- [17] "Chapter 3: Probability". https://www.coconino.edu/resources/files/pdfs/academics/arts-and-sciences/MAT142/Chapter_3_Probability.pdf.

- [18] Yates, Daniel S.; Moore, David S.; Starnes, Daren S. (2003). The Practice of Statistics (2nd ed.). New York: Freeman. ISBN 978-0-7167-4773-4. http://bcs.whfreeman.com/yates2e/.

- [19] "Probability: Rolling Two Dice". http://www.math.hawaii.edu/~ramsey/Probability/TwoDice.html.

- [20] "Simple Random Samples". https://web.ma.utexas.edu/users/mks/statmistakes/SRS.html.
