Gamma process

Also known as the (Moran-)Gamma Process,[1] the gamma process is a random process studied in mathematics, statistics, probability theory, and stochastics. It is a stochastic process with independent, gamma-distributed increments, where [math]\displaystyle{ N(t) }[/math] denotes the value accumulated by the process from time 0 to time [math]\displaystyle{ t }[/math]. The underlying gamma distribution has shape parameter [math]\displaystyle{ \gamma }[/math] and rate parameter [math]\displaystyle{ \lambda }[/math], often written as [math]\displaystyle{ \Gamma (\gamma ,\lambda ) }[/math].[1] Both [math]\displaystyle{ \gamma }[/math] and [math]\displaystyle{ \lambda }[/math] must be greater than 0. The increment of the process over an interval of length [math]\displaystyle{ h }[/math] follows a gamma distribution with shape [math]\displaystyle{ \gamma h }[/math] and rate [math]\displaystyle{ \lambda }[/math]. The gamma process is often written as [math]\displaystyle{ \Gamma (t;\gamma ,\lambda ) }[/math], where [math]\displaystyle{ t }[/math] represents the time elapsed since 0.

The process is a pure-jump increasing Lévy process with intensity measure [math]\displaystyle{ \nu(x)=\gamma x^{-1} \exp(-\lambda x), }[/math] for all positive [math]\displaystyle{ x }[/math]. Thus jumps whose size lies in the interval [math]\displaystyle{ [x,x+dx) }[/math] occur as a Poisson process with intensity [math]\displaystyle{ \nu(x)\,dx. }[/math] The parameter [math]\displaystyle{ \gamma }[/math] controls the rate of jump arrivals and the rate parameter [math]\displaystyle{ \lambda }[/math] inversely controls the jump size. The process is assumed to start from the value 0 at [math]\displaystyle{ t = 0 }[/math], meaning [math]\displaystyle{ N(0)=0 }[/math].
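As an illustration, a sample path can be approximated on a time grid by summing independent gamma-distributed increments. The following is a minimal sketch, assuming that the increment over a step of length dt has shape [math]\displaystyle{ \gamma\,dt }[/math] and rate [math]\displaystyle{ \lambda }[/math] (consistent with the marginal density given under Properties); the function name simulate_gamma_process and its arguments are hypothetical and chosen only for this example.

```python
import random


def simulate_gamma_process(gamma, lam, t_max, n_steps, seed=None):
    """Sample a gamma process on an evenly spaced grid over [0, t_max].

    gamma: parameter controlling the rate of jump arrivals (shape per unit time)
    lam:   rate parameter (inverse scale) of the gamma increments
    Returns (times, values); values[0] is 0 because the process starts at 0.
    """
    if gamma <= 0 or lam <= 0:
        raise ValueError("gamma and lam must both be greater than 0")
    rng = random.Random(seed)
    dt = t_max / n_steps
    times = [0.0]
    values = [0.0]
    for i in range(1, n_steps + 1):
        # random.gammavariate takes (shape, scale), so the scale is 1 / lam.
        increment = rng.gammavariate(gamma * dt, 1.0 / lam)
        times.append(i * dt)
        values.append(values[-1] + increment)
    return times, values


times, values = simulate_gamma_process(gamma=2.0, lam=3.0, t_max=4.0, n_steps=400, seed=1)
print(values[-1])  # one draw of the process value at t = 4
```

Because every increment is non-negative, the simulated path never decreases, which matches the description of the process as a pure-jump increasing process.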

The gamma process is sometimes also parameterised in terms of the mean ([math]\displaystyle{ \mu }[/math]) and variance ([math]\displaystyle{ v }[/math]) of the increase per unit time, which is equivalent to [math]\displaystyle{ \gamma = \mu^2/v }[/math] and [math]\displaystyle{ \lambda = \mu/v }[/math].
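The conversion between the two parameterisations is a short calculation. The sketch below uses a hypothetical helper, from_mean_variance, and checks that the resulting parameters reproduce the mean [math]\displaystyle{ \gamma/\lambda }[/math] and variance [math]\displaystyle{ \gamma/\lambda^2 }[/math] of the increase per unit time.

```python
def from_mean_variance(mu, v):
    """Convert the mean and variance of the increase per unit time to (gamma, lam)."""
    if mu <= 0 or v <= 0:
        raise ValueError("mu and v must both be greater than 0")
    gamma = mu * mu / v
    lam = mu / v
    return gamma, lam


gamma, lam = from_mean_variance(mu=2.0, v=0.5)
print(gamma, lam)        # 8.0 4.0
print(gamma / lam)       # mean per unit time: 2.0
print(gamma / lam ** 2)  # variance per unit time: 0.5
```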

Plain English definition

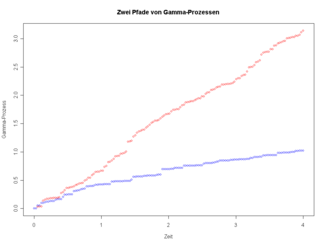

The gamma process tracks a quantity that grows over time through independent, gamma-distributed increments. The image above displays two different gamma processes from time 0 until time 4. The red process accumulates more over this timeframe than the blue process because its shape parameter is larger than the blue shape parameter.

Properties

We use the Gamma function in these properties, so the reader should distinguish between [math]\displaystyle{ \Gamma(\cdot) }[/math] (the Gamma function) and [math]\displaystyle{ \Gamma(t;\gamma, \lambda) }[/math] (the Gamma process). We will sometimes abbreviate the process as [math]\displaystyle{ X_t\equiv\Gamma(t;\gamma, \lambda) }[/math].

Some basic properties of the gamma process are:[citation needed]

Marginal distribution

The marginal distribution of a gamma process at time [math]\displaystyle{ t }[/math] is a gamma distribution with mean [math]\displaystyle{ \gamma t/\lambda }[/math] and variance [math]\displaystyle{ \gamma t/\lambda^2. }[/math]

That is, the random variable [math]\displaystyle{ X_t }[/math] has the probability density [math]\displaystyle{ f(x;t, \gamma, \lambda) = \frac {\lambda^{\gamma t}}{\Gamma (\gamma t)} x^{\gamma t \,-\,1}e^{-\lambda x} }[/math] for [math]\displaystyle{ x \gt 0 }[/math].
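The density can be evaluated directly from this formula. The following sketch works on the log scale to avoid overflow in the Gamma function for large [math]\displaystyle{ \gamma t }[/math]; the function name gamma_process_pdf is hypothetical, and the midpoint-rule integration is only a rough sanity check that the density integrates to approximately 1.

```python
import math


def gamma_process_pdf(x, t, gamma, lam):
    """Density of X_t at x, for a gamma process with parameters gamma and lam."""
    if x <= 0:
        return 0.0
    k = gamma * t  # shape of the marginal distribution at time t
    log_pdf = k * math.log(lam) - math.lgamma(k) + (k - 1) * math.log(x) - lam * x
    return math.exp(log_pdf)


# Rough check: midpoint rule over [0, 30] with gamma * t = 3 and lam = 2.
n_cells = 30000
width = 30.0 / n_cells
total = sum(gamma_process_pdf((i + 0.5) * width, 2.0, 1.5, 2.0) for i in range(n_cells)) * width
print(total)  # close to 1
```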

Scaling

Multiplication of a gamma process by a positive scalar constant [math]\displaystyle{ \alpha }[/math] is again a gamma process with a different mean increase rate.

- [math]\displaystyle{ \alpha\Gamma(t;\gamma,\lambda) \simeq \Gamma(t;\gamma,\lambda/\alpha) }[/math]
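One way to see this, sketched here for [math]\displaystyle{ \alpha \gt 0 }[/math], is to start from the moment generating function of the process, [math]\displaystyle{ \operatorname E\big(\exp(\theta X_t)\big) = (1-\theta/\lambda)^{-\gamma t} }[/math], and substitute [math]\displaystyle{ \alpha\theta }[/math] for [math]\displaystyle{ \theta }[/math]:

- [math]\displaystyle{ \operatorname E\Big(\exp(\theta \alpha X_t)\Big) = \left(1- \frac{\alpha\theta}{\lambda}\right)^{-\gamma t} = \left(1- \frac{\theta}{\lambda/\alpha}\right)^{-\gamma t},\ \quad \theta\lt \lambda/\alpha }[/math]

The right-hand side is the moment generating function of [math]\displaystyle{ \Gamma(t;\gamma,\lambda/\alpha) }[/math] at time [math]\displaystyle{ t }[/math]. Scaling by a constant also preserves the independence of increments, and the mean increase per unit time changes from [math]\displaystyle{ \gamma/\lambda }[/math] to [math]\displaystyle{ \alpha\gamma/\lambda }[/math].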

Adding independent processes

The sum of two independent gamma processes that share the same parameter [math]\displaystyle{ \lambda }[/math] is again a gamma process.

- [math]\displaystyle{ \Gamma(t;\gamma_1,\lambda) + \Gamma(t;\gamma_2,\lambda) \simeq \Gamma(t;\gamma_1+\gamma_2,\lambda) }[/math]
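The additivity can be checked empirically at a fixed time. The following is a rough Monte Carlo sketch, not a proof: it samples the two marginals independently, adds them, and compares the sample mean and variance with [math]\displaystyle{ (\gamma_1+\gamma_2)t/\lambda }[/math] and [math]\displaystyle{ (\gamma_1+\gamma_2)t/\lambda^2 }[/math]. The function names, sample size and seed are arbitrary choices made for this example.

```python
import random


def sample_marginal(rng, t, gamma, lam):
    """Draw X_t for a gamma process; gammavariate takes (shape, scale)."""
    return rng.gammavariate(gamma * t, 1.0 / lam)


def summed_process_stats(t, gamma1, gamma2, lam, n_samples=20000, seed=0):
    """Sample mean and variance of the sum of two independent marginals at time t."""
    rng = random.Random(seed)
    sums = [
        sample_marginal(rng, t, gamma1, lam) + sample_marginal(rng, t, gamma2, lam)
        for _ in range(n_samples)
    ]
    mean = sum(sums) / n_samples
    var = sum((x - mean) ** 2 for x in sums) / (n_samples - 1)
    return mean, var


mean, var = summed_process_stats(t=1.0, gamma1=1.0, gamma2=2.0, lam=2.0)
expected_mean = (1.0 + 2.0) * 1.0 / 2.0      # (gamma1 + gamma2) * t / lam
expected_var = (1.0 + 2.0) * 1.0 / 2.0 ** 2  # (gamma1 + gamma2) * t / lam^2
print(mean, expected_mean)
print(var, expected_var)
```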

Moments

- The moments of [math]\displaystyle{ X_t }[/math] are used to find its expected value, variance, skewness, and kurtosis.

- [math]\displaystyle{ \operatorname E(X_t^n) = \lambda^{-n} \cdot \frac{\Gamma(\gamma t+n)}{\Gamma(\gamma t)},\ \quad n\geq 0 , }[/math] where [math]\displaystyle{ \Gamma(z) }[/math] is the Gamma function.
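As a small numerical illustration, the moment formula can be evaluated with the log-Gamma function, which avoids overflow for large arguments. The helper name raw_moment is hypothetical; the example checks that the first two moments reproduce the mean [math]\displaystyle{ \gamma t/\lambda }[/math] and variance [math]\displaystyle{ \gamma t/\lambda^2 }[/math] of the marginal distribution.

```python
import math


def raw_moment(n, t, gamma, lam):
    """E(X_t^n) = lam^(-n) * Gamma(gamma*t + n) / Gamma(gamma*t), for n >= 0."""
    k = gamma * t
    return math.exp(math.lgamma(k + n) - math.lgamma(k) - n * math.log(lam))


t, gamma, lam = 2.0, 1.5, 2.0
mean = raw_moment(1, t, gamma, lam)
variance = raw_moment(2, t, gamma, lam) - mean ** 2
print(mean, gamma * t / lam)           # both approximately 1.5
print(variance, gamma * t / lam ** 2)  # both approximately 0.75
```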

Moment generating function

- The moment generating function is the expected value of [math]\displaystyle{ \exp(\theta X) }[/math], where [math]\displaystyle{ X }[/math] is the random variable and [math]\displaystyle{ \theta }[/math] is a real argument.

- [math]\displaystyle{ \operatorname E\Big(\exp(\theta X_t)\Big) = \left(1- \frac\theta\lambda\right)^{-\gamma t},\ \quad \theta\lt \lambda }[/math]
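The link between this function and the moments can be illustrated numerically: the derivative of the moment generating function at [math]\displaystyle{ \theta = 0 }[/math] equals the mean [math]\displaystyle{ \gamma t/\lambda }[/math]. The sketch below approximates that derivative with a central finite difference; the function name mgf and the step size h are assumptions made for this example.

```python
def mgf(theta, t, gamma, lam):
    """Moment generating function of X_t, defined for theta < lam."""
    if theta >= lam:
        raise ValueError("the moment generating function requires theta < lam")
    return (1.0 - theta / lam) ** (-gamma * t)


t, gamma, lam = 2.0, 1.5, 2.0
h = 1e-5
# Central difference approximation of the derivative at theta = 0.
derivative_at_zero = (mgf(h, t, gamma, lam) - mgf(-h, t, gamma, lam)) / (2 * h)
print(derivative_at_zero, gamma * t / lam)  # both approximately 1.5
```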

Correlation

Correlation describes the statistical relationship between the values of a gamma process at two different times.

- [math]\displaystyle{ \operatorname{Corr}(X_s, X_t) = \sqrt{\frac s t},\ s\lt t }[/math], for any gamma process [math]\displaystyle{ X(t) . }[/math]
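This follows from the independence of increments: for [math]\displaystyle{ s \lt t }[/math], [math]\displaystyle{ X_t = X_s + (X_t - X_s) }[/math] with the two terms independent, so the covariance of [math]\displaystyle{ X_s }[/math] and [math]\displaystyle{ X_t }[/math] equals the variance of [math]\displaystyle{ X_s }[/math]. The following Monte Carlo sketch compares an empirical correlation with [math]\displaystyle{ \sqrt{s/t} }[/math]; the function name, sample size and seed are arbitrary choices for illustration.

```python
import math
import random


def empirical_correlation(s, t, gamma, lam, n_samples=20000, seed=0):
    """Estimate Corr(X_s, X_t) for 0 < s < t by simulating independent increments."""
    if not 0 < s < t:
        raise ValueError("require 0 < s < t")
    rng = random.Random(seed)
    xs, xt = [], []
    for _ in range(n_samples):
        x_s = rng.gammavariate(gamma * s, 1.0 / lam)                # X_s
        x_t = x_s + rng.gammavariate(gamma * (t - s), 1.0 / lam)    # X_s plus an independent increment
        xs.append(x_s)
        xt.append(x_t)
    mean_s = sum(xs) / n_samples
    mean_t = sum(xt) / n_samples
    cov = sum((a - mean_s) * (b - mean_t) for a, b in zip(xs, xt))
    var_s = sum((a - mean_s) ** 2 for a in xs)
    var_t = sum((b - mean_t) ** 2 for b in xt)
    return cov / math.sqrt(var_s * var_t)


rho = empirical_correlation(s=1.0, t=4.0, gamma=2.0, lam=3.0)
print(rho, math.sqrt(1.0 / 4.0))  # the estimate should be near 0.5
```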

The gamma process is used as the distribution for random time change in the variance gamma process.

Literature

- Lévy Processes and Stochastic Calculus by David Applebaum, CUP 2004, ISBN:0-521-83263-2.

References

- [1] Klenke, Achim, ed. (2008), "The Poisson Point Process", Probability Theory: A Comprehensive Course (London: Springer): pp. 525–542, doi:10.1007/978-1-84800-048-3_24, ISBN 978-1-84800-048-3, https://doi.org/10.1007/978-1-84800-048-3_24, retrieved 2023-04-04
