Probabilistic classification


In machine learning, a probabilistic classifier is a classifier that is able to predict, given an observation of an input, a probability distribution over a set of classes, rather than only outputting the most likely class that the observation should belong to. The class probabilities produced by a probabilistic classifier can be useful in their own right[1] or when combining classifiers into ensembles.

Types of classification

Formally, an "ordinary" classifier is some rule, or function, that assigns to a sample x a class label ŷ:

- [math]\displaystyle{ \hat{y} = f(x) }[/math]

The samples come from some set X (e.g., the set of all documents, or the set of all images), while the class labels form a finite set Y defined prior to training.

Probabilistic classifiers generalize this notion of classifiers: instead of functions, they are conditional distributions [math]\displaystyle{ \Pr(Y \vert X) }[/math], meaning that for a given [math]\displaystyle{ x \in X }[/math], they assign probabilities to all [math]\displaystyle{ y \in Y }[/math] (and these probabilities sum to one). "Hard" classification can then be done using the optimal decision rule[2]:39–40

- [math]\displaystyle{ \hat{y} = \operatorname{\arg\max}_{y} \Pr(Y=y \vert X=x) }[/math]

that is, the predicted class is the one with the highest probability.
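A minimal sketch of this decision rule in Python, assuming the classifier's output for a single input is available as a mapping from class labels to probabilities. The function name `hard_decision` and the example labels and probabilities are hypothetical:

```python
from typing import Dict, Hashable


def hard_decision(class_probs: Dict[Hashable, float]) -> Hashable:
    """Return the class with the highest predicted probability (the argmax rule)."""
    total = sum(class_probs.values())
    # The output of a probabilistic classifier should be a distribution over Y.
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total}, not 1")
    return max(class_probs, key=class_probs.get)


# Hypothetical output of a probabilistic classifier for one document x.
probs = {"sports": 0.2, "politics": 0.7, "science": 0.1}
print(hard_decision(probs))  # politics
```

Keeping the full distribution and applying the argmax only at the end means the same output can serve both "hard" classification and any downstream use of the probabilities.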

Binary probabilistic classifiers are also called binary regression models in statistics. In econometrics, probabilistic classification in general is called discrete choice.

Some classification models, such as naive Bayes, logistic regression and multilayer perceptrons (when trained under an appropriate loss function), are naturally probabilistic. Other models, such as support vector machines, are not, but methods exist to turn them into probabilistic classifiers.

Generative and conditional training

Some models, such as logistic regression, are conditionally trained: they optimize the conditional probability [math]\displaystyle{ \Pr(Y \vert X) }[/math] directly on a training set (see empirical risk minimization). Other classifiers, such as naive Bayes, are trained generatively: at training time, the class-conditional distribution [math]\displaystyle{ \Pr(X \vert Y) }[/math] and the class prior [math]\displaystyle{ \Pr(Y) }[/math] are estimated, and the conditional distribution [math]\displaystyle{ \Pr(Y \vert X) }[/math] is then derived using Bayes' rule.[2]:43
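The generative route can be sketched for a single real-valued feature. This is an illustrative sketch, not a reference implementation: it assumes each class-conditional distribution Pr(X | Y) is Gaussian (with one feature this coincides with Gaussian naive Bayes), estimates Pr(Y) by class frequencies, and obtains Pr(Y | X) with Bayes' rule. The helper names and the toy data are hypothetical:

```python
import math
from collections import defaultdict


def fit_generative(xs, ys):
    """Estimate Pr(Y) and a Gaussian Pr(X | Y) for each class from 1-D data."""
    by_class = defaultdict(list)
    for x, y in zip(xs, ys):
        by_class[y].append(x)
    n = len(xs)
    model = {}
    for y, vals in by_class.items():
        mean = sum(vals) / len(vals)
        # Maximum-likelihood variance, floored to avoid division by zero.
        var = max(sum((v - mean) ** 2 for v in vals) / len(vals), 1e-9)
        model[y] = (len(vals) / n, mean, var)  # (prior, mean, variance)
    return model


def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def posterior(model, x):
    """Derive Pr(Y | X = x) from Pr(X | Y) and Pr(Y) using Bayes' rule."""
    joint = {y: prior * gaussian_pdf(x, mean, var)
             for y, (prior, mean, var) in model.items()}
    evidence = sum(joint.values())  # Pr(X = x), obtained by summing over classes
    return {y: p / evidence for y, p in joint.items()}


xs = [1.0, 1.2, 0.8, 3.0, 3.3, 2.7]
ys = ["a", "a", "a", "b", "b", "b"]
model = fit_generative(xs, ys)
print(posterior(model, 2.0))
```

A conditionally trained model such as logistic regression would instead fit the parameters of Pr(Y | X) directly, without modelling how X is distributed within each class.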

Probability calibration

Not all classification models are naturally probabilistic, and some that are, notably naive Bayes classifiers, decision trees and boosting methods, produce distorted class probability distributions.[3] In the case of decision trees, where Pr(y|x) is the proportion of training samples with label y in the leaf where x ends up, these distortions come about because learning algorithms such as C4.5 or CART explicitly aim to produce homogeneous leaves (giving probabilities close to zero or one, and thus high bias) while using few samples to estimate the relevant proportion (high variance).[4]
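The leaf estimate described above can be illustrated with made-up leaf contents (the helper `leaf_probabilities` is hypothetical): a small homogeneous leaf yields a probability of exactly one, however little evidence supports it.

```python
from collections import Counter


def leaf_probabilities(leaf_labels):
    """Pr(y | x) for every x reaching a leaf: label proportions among its training samples."""
    counts = Counter(leaf_labels)
    n = len(leaf_labels)
    return {label: c / n for label, c in counts.items()}


# Hypothetical leaves of a fitted tree: each list holds the training labels in that leaf.
small_pure_leaf = [1, 1]                 # homogeneous, estimated from only two samples
large_mixed_leaf = [1] * 60 + [0] * 40   # mixed, estimated from 100 samples

print(leaf_probabilities(small_pure_leaf))   # {1: 1.0}
print(leaf_probabilities(large_mixed_leaf))  # {1: 0.6, 0: 0.4}
```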

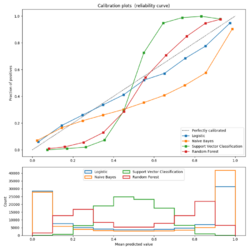

Calibration can be assessed using a calibration plot (also called a reliability diagram).[3][5] A calibration plot shows, for bands of predicted probability or score (such as a distorted probability distribution or the "signed distance to the hyperplane" in a support vector machine), the observed proportion of items in each class. Deviations from the identity function indicate a poorly calibrated classifier whose predicted probabilities or scores cannot be used directly as probabilities. In that case, a calibration method can be used to turn these scores into properly calibrated class membership probabilities.
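For a binary classifier, the points of a calibration plot can be computed as in the following sketch. It assumes equal-width probability bands and 0/1 labels; the function name, the number of bins, and the sample data are illustrative choices, not part of any cited method.

```python
def reliability_curve(probs, labels, n_bins=5):
    """Return (mean predicted probability, observed positive rate, count) per non-empty band.

    probs: predicted Pr(y = 1 | x) for each sample; labels: 0/1 outcomes.
    Bands are equal-width intervals of [0, 1].
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # p == 1.0 would index one past the end, so clamp it into the last band.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))
    curve = []
    for members in bins:
        if not members:
            continue
        mean_pred = sum(p for p, _ in members) / len(members)
        frac_pos = sum(y for _, y in members) / len(members)
        curve.append((mean_pred, frac_pos, len(members)))
    return curve


probs = [0.1, 0.15, 0.3, 0.35, 0.8, 0.85, 0.9, 0.95]  # hypothetical predictions
labels = [0, 0, 0, 1, 1, 1, 1, 0]
for mean_pred, frac_pos, count in reliability_curve(probs, labels):
    print(f"predicted {mean_pred:.3f}  observed {frac_pos:.3f}  (n={count})")
```

Plotting the observed rate against the mean predicted probability for each band, together with the diagonal, gives the calibration plot; for a well-calibrated classifier the points lie close to the diagonal.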

For the binary case, a common approach is to apply Platt scaling, which learns a logistic regression model on the scores.[6] An alternative method using isotonic regression[7] is generally superior to Platt's method when sufficient training data is available.[3]
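Both calibrators can be sketched in plain Python for the binary case. `platt_scale` and `isotonic_fit` are hypothetical helper names. `platt_scale` is a simplified version of Platt's method (plain gradient descent on log loss, without the target smoothing and more careful optimizer of the original), and `isotonic_fit` uses the pool-adjacent-violators algorithm, one standard way to compute an isotonic regression. In practice the calibrator is fit on data held out from the classifier's own training set; the scores below are made up.

```python
import bisect
import math


def platt_scale(scores, labels, lr=0.1, n_iter=5000):
    """Fit Pr(y = 1 | s) = 1 / (1 + exp(a*s + b)) by gradient descent on log loss."""
    a, b = 0.0, 0.0
    n = len(scores)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(a * s + b))
            # With z = a*s + b and p = 1 / (1 + exp(z)), d(log loss)/dz = y - p.
            grad_a += (y - p) * s
            grad_b += y - p
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return lambda s: 1.0 / (1.0 + math.exp(a * s + b))


def isotonic_fit(scores, labels):
    """Pool-adjacent-violators: a non-decreasing step map from score to Pr(y = 1)."""
    blocks = []  # each block: [sum of labels, count, largest score in block]
    for s, y in sorted(zip(scores, labels)):
        blocks.append([float(y), 1, s])
        # Pool the last two blocks while their means violate monotonicity.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            total, count, right = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = right
    edges = [blk[2] for blk in blocks]
    values = [blk[0] / blk[1] for blk in blocks]

    def predict(s):
        # First block whose right edge is >= s; scores past the last edge use the last block.
        i = min(bisect.bisect_left(edges, s), len(values) - 1)
        return values[i]

    return predict


scores = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]  # hypothetical held-out SVM scores
labels = [0, 0, 1, 0, 1, 1]
platt = platt_scale(scores, labels)
iso = isotonic_fit(scores, labels)
print(platt(1.5), iso(1.5))
```

Platt scaling imposes a fixed sigmoid shape with only two parameters, whereas the isotonic fit only assumes monotonicity; this extra flexibility is why it tends to need more calibration data.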

In the multiclass case, one can reduce the problem to binary tasks, calibrate each binary classifier with one of the univariate methods described above, and then combine the calibrated binary probabilities using the pairwise coupling algorithm of Hastie and Tibshirani.[8]
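The coupling step can be sketched as follows, following the iterative algorithm of Hastie and Tibshirani with all pairwise weights set equal (an assumption; the original weights each pair by its number of training observations). The input `r[i][j]` is assumed to be a calibrated estimate of the probability of class i given that the class is either i or j, for example from a one-vs-one binary classifier. The function name and example values are hypothetical.

```python
def pairwise_coupling(r, n_iter=1000, tol=1e-10):
    """Combine pairwise probabilities r[i][j] into a single class distribution p.

    r[i][j] estimates Pr(class i | x, class is i or j), with r[j][i] = 1 - r[i][j].
    The diagonal of r is ignored. All pairs are weighted equally in this sketch.
    """
    k = len(r)
    p = [1.0 / k] * k  # start from the uniform distribution
    for _ in range(n_iter):
        old = p[:]
        for i in range(k):
            # Scale p[i] by (sum of observed r_ij) / (sum of fitted p_i / (p_i + p_j)).
            num = sum(r[i][j] for j in range(k) if j != i)
            den = sum(p[i] / (p[i] + p[j]) for j in range(k) if j != i)
            p[i] *= num / den
            total = sum(p)
            p = [v / total for v in p]  # renormalize after each update
        if max(abs(a - b) for a, b in zip(p, old)) < tol:
            break
    return p


true_p = [0.5, 0.3, 0.2]  # hypothetical class probabilities for one input x
r = [[true_p[i] / (true_p[i] + true_p[j]) if i != j else 0.0 for j in range(3)]
     for i in range(3)]
print(pairwise_coupling(r))  # approximately [0.5, 0.3, 0.2]
```

When the pairwise estimates are mutually consistent, as in this constructed example, the procedure recovers the distribution they came from; with real classifiers they usually are not, and the result is a compromise among them.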

Evaluating probabilistic classification

Commonly used evaluation metrics that compare the predicted probability to observed outcomes include log loss, the Brier score, and a variety of calibration error measures. Log loss is also used as the loss function when training logistic models.
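Both scores are straightforward to compute for a binary problem, where each prediction is a probability p = Pr(y = 1 | x). In this sketch the function names are hypothetical, and the clipping constant `eps` is an assumption added to keep log loss finite when a prediction is exactly 0 or 1. For both metrics, lower values are better.

```python
import math


def log_loss(probs, labels, eps=1e-15):
    """Mean negative log-likelihood of the observed 0/1 outcomes."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)  # clip so that log(0) is never evaluated
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def brier_score(probs, labels):
    """Mean squared difference between predicted Pr(y = 1) and the 0/1 outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


probs = [0.9, 0.2, 0.6]  # hypothetical predictions
labels = [1, 0, 0]
print(round(brier_score(probs, labels), 4))  # 0.1367
print(round(log_loss(probs, labels), 4))     # 0.4149
```

Log loss penalizes a confident wrong prediction without bound (the third prediction above contributes most of it), while each term of the Brier score is at most 1.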

Calibration error metrics aim to quantify the extent to which a probabilistic classifier's outputs are well calibrated. As Philip Dawid put it, "a forecaster is well-calibrated if, for example, of those events to which he assigns a probability 30 percent, the long-run proportion that actually occurs turns out to be 30 percent".[9] A foundational metric for measuring calibration error is the Expected Calibration Error (ECE).[10] More recent work proposes variants of ECE that address limitations arising when classifier scores concentrate on a narrow subset of [0, 1], including the Adaptive Calibration Error (ACE)[11] and the Test-based Calibration Error (TCE).[12]
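A common binned estimate of ECE for a binary classifier partitions [0, 1] into equal-width bins and averages, weighted by bin size, the gap between the observed frequency of positives and the mean predicted probability in each bin. The sketch below assumes 10 bins and a hypothetical function name. The value depends on the binning scheme, which is one of the issues variants such as ACE address by, roughly speaking, using bins that hold equal numbers of predictions.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binary ECE: bin-size-weighted mean |observed positive rate - mean predicted probability|.

    Uses equal-width bins over [0, 1]; other binning schemes give different values.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # Clamp p == 1.0 into the last bin.
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))
    n = len(probs)
    ece = 0.0
    for members in bins:
        if members:
            conf = sum(p for p, _ in members) / len(members)
            freq = sum(y for _, y in members) / len(members)
            ece += len(members) / n * abs(freq - conf)
    return ece


# Hypothetical forecasts: 0.25 comes true 1 time in 4 and 0.75 comes true 3 times in 4,
# so in Dawid's sense these forecasts are perfectly calibrated.
calibrated = expected_calibration_error([0.25] * 4 + [0.75] * 4,
                                        [1, 0, 0, 0] + [1, 1, 1, 0])
# An overconfident forecaster says 0.95 but is right only half the time.
overconfident = expected_calibration_error([0.95] * 4, [1, 1, 0, 0])
print(calibrated, overconfident)
```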

A method used to assign scores to pairs of predicted probabilities and actual discrete outcomes, so that different predictive methods can be compared, is called a scoring rule.

Software implementations

- MoRPE[13] is a trainable probabilistic classifier that uses isotonic regression for probability calibration. It solves the multiclass case by reduction to binary tasks. It is a type of kernel machine that uses an inhomogeneous polynomial kernel.

References

- Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome (2009). The Elements of Statistical Learning. p. 348. http://statweb.stanford.edu/~tibs/ElemStatLearn/. "[I]n data mining applications the interest is often more in the class probabilities [math]\displaystyle{ p_\ell(x), \ell = 1, \dots, K }[/math] themselves, rather than in performing a class assignment."

- Bishop, Christopher M. (2006). Pattern Recognition and Machine Learning. Springer.

- Niculescu-Mizil, Alexandru; Caruana, Rich (2005). "Predicting good probabilities with supervised learning". ICML. doi:10.1145/1102351.1102430. http://machinelearning.wustl.edu/mlpapers/paper_files/icml2005_Niculescu-MizilC05.pdf.

- Zadrozny, Bianca; Elkan, Charles (2001). "Obtaining calibrated probability estimates from decision trees and naive Bayesian classifiers". ICML. pp. 609–616. http://cseweb.ucsd.edu/~elkan/calibrated.pdf.

- "Probability calibration". https://jmetzen.github.io/2015-04-14/calibration.html.

- Platt, John (1999). "Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods". Advances in Large Margin Classifiers 10 (3): 61–74. https://www.researchgate.net/publication/2594015.

- Zadrozny, Bianca; Elkan, Charles (2002). "Transforming classifier scores into accurate multiclass probability estimates". Proceedings of the eighth ACM SIGKDD international conference on Knowledge discovery and data mining - KDD '02. pp. 694–699. doi:10.1145/775047.775151. CiteSeerX: 10.1.1.13.7457. ISBN 978-1-58113-567-1. http://www.cs.cornell.edu/courses/cs678/2007sp/ZadroznyElkan.pdf.

- Hastie, Trevor; Tibshirani, Robert (1998). "Classification by pairwise coupling". The Annals of Statistics 26 (2): 451–471. doi:10.1214/aos/1028144844. CiteSeerX: 10.1.1.46.6032.

- Dawid, A. P. (1982). "The Well-Calibrated Bayesian". Journal of the American Statistical Association 77 (379): 605–610. doi:10.1080/01621459.1982.10477856.

- Naeini, M.P.; Cooper, G.; Hauskrecht, M. (2015). "Obtaining well calibrated probabilities using Bayesian binning". Proceedings of the AAAI Conference on Artificial Intelligence. https://www.dbmi.pitt.edu/wp-content/uploads/2022/10/Obtaining-well-calibrated-probabilities-using-Bayesian-binning.pdf.

- Nixon, J.; Dusenberry, M.W.; Zhang, L.; Jerfel, G.; Tran, D. (2019). "Measuring Calibration in Deep Learning". CVPR workshops. https://openaccess.thecvf.com/content_CVPRW_2019/papers/Uncertainty%20and%20Robustness%20in%20Deep%20Visual%20Learning/Nixon_Measuring_Calibration_in_Deep_Learning_CVPRW_2019_paper.pdf.

- Matsubara, T.; Tax, N.; Mudd, R.; Guy, I. (2023). "TCE: A Test-Based Approach to Measuring Calibration Error". Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence (UAI).

- "MoRPE". https://github.com/adaviding/morpe.
