Real analysis

In mathematics, the branch of real analysis studies the behavior of real numbers, sequences and series of real numbers, and real functions.[1] Some particular properties of real-valued sequences and functions that real analysis studies include convergence, limits, continuity, smoothness, differentiability and integrability.

Real analysis is distinguished from complex analysis, which deals with the study of complex numbers and their functions.

Scope

Construction of the real numbers

The theorems of real analysis rely on the properties of the real number system, which must be established. The real number system consists of an uncountable set ($\mathbb{R}$), together with two binary operations denoted + and ⋅, and a total order denoted ≤. The operations make the real numbers a field, and, along with the order, an ordered field. The real number system is the unique complete ordered field, in the sense that any other complete ordered field is isomorphic to it. Intuitively, completeness means that there are no 'gaps' (or 'holes') in the real numbers. This property distinguishes the real numbers from other ordered fields (e.g., the rational numbers $\mathbb{Q}$) and is critical to the proof of several key properties of functions of the real numbers. The completeness of the reals is often conveniently expressed as the least upper bound property (see below).

Order properties of the real numbers

The real numbers have various lattice-theoretic properties that are absent in the complex numbers. Also, the real numbers form an ordered field, in which sums and products of positive numbers are also positive. Moreover, the ordering of the real numbers is total, and the real numbers have the least upper bound property:

Every nonempty subset of $\mathbb{R}$ that has an upper bound has a least upper bound that is also a real number.

These order-theoretic properties lead to a number of fundamental results in real analysis, such as the monotone convergence theorem, the intermediate value theorem and the mean value theorem.
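The least upper bound property can be illustrated numerically. The following Python sketch brackets the supremum of the set of nonnegative rationals whose square is less than 2, a set that is bounded above but has no least upper bound among the rationals. The function name `approx_sup`, the starting bracket and the tolerance are illustrative choices, not part of any standard construction.

```python
from fractions import Fraction

def approx_sup(is_upper_bound, lo, hi, tol=Fraction(1, 10**6)):
    """Bisect between lo (not an upper bound) and hi (an upper bound)."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if is_upper_bound(mid):
            hi = mid   # mid bounds the set from above, so the supremum is <= mid
        else:
            lo = mid   # some element of the set exceeds mid
    return lo, hi

# S = {x >= 0 rational : x*x < 2}. A nonnegative rational q is an upper
# bound of S exactly when q*q >= 2.
lo, hi = approx_sup(lambda q: q * q >= 2, Fraction(1), Fraction(2))
print(float(lo), float(hi))
```

Every bracket endpoint is rational, yet the brackets shrink around a number whose square is 2, which is irrational. Completeness of the reals is what guarantees that this number exists.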

However, while the results in real analysis are stated for real numbers, many of these results can be generalized to other mathematical objects. In particular, many ideas in functional analysis and operator theory generalize properties of the real numbers – such generalizations include the theories of Riesz spaces and positive operators. Also, mathematicians consider the real and imaginary parts of complex sequences, or pointwise evaluations of operator sequences.

Topological properties of the real numbers

Many of the theorems of real analysis are consequences of the topological properties of the real number line. The order properties of the real numbers described above are closely related to these topological properties. As a topological space, the real numbers have a standard topology, which is the order topology induced by the order $<$. Alternatively, by defining the metric or distance function $d : \mathbb{R} \times \mathbb{R} \to \mathbb{R}_{\geq 0}$ using the absolute value function as $d(x, y) = |x - y|$, the real numbers become the prototypical example of a metric space. The topology induced by the metric $d$ turns out to be identical to the standard topology induced by the order $<$. Theorems like the intermediate value theorem that are essentially topological in nature can often be proved in the more general setting of metric or topological spaces rather than in $\mathbb{R}$ only. Often, such proofs tend to be shorter or simpler compared to classical proofs that apply direct methods.

Sequences

A sequence is a function whose domain is a countable, totally ordered set.[2] The domain is usually taken to be the natural numbers,[3] although it is occasionally convenient to also consider bidirectional sequences indexed by the set of all integers, including negative indices.

Of interest in real analysis, a real-valued sequence, here indexed by the natural numbers, is a map $a : \mathbb{N} \to \mathbb{R} : n \mapsto a_n$. Each $a(n) = a_n$ is referred to as a term (or, less commonly, an element) of the sequence. A sequence is rarely denoted explicitly as a function; instead, by convention, it is almost always notated as if it were an ordered ∞-tuple, with individual terms or a general term enclosed in parentheses:[4] $(a_n) = (a_n)_{n \in \mathbb{N}} = (a_1, a_2, a_3, \dots)$. A sequence that tends to a limit (i.e., $\lim_{n \to \infty} a_n$ exists) is said to be convergent; otherwise it is divergent. (See the section on limits and convergence for details.) A real-valued sequence $(a_n)$ is bounded if there exists $M \in \mathbb{R}$ such that $|a_n| < M$ for all $n \in \mathbb{N}$. A real-valued sequence $(a_n)$ is monotonically increasing or decreasing if $a_1 \leq a_2 \leq a_3 \leq \cdots$ or $a_1 \geq a_2 \geq a_3 \geq \cdots$ holds, respectively. If either holds, the sequence is said to be monotonic. The monotonicity is strict if the chained inequalities still hold with $\leq$ or $\geq$ replaced by < or >.

Given a sequence $(a_n)$, another sequence $(b_k)$ is a subsequence of $(a_n)$ if $b_k = a_{n_k}$ for all positive integers $k$ and $(n_k)$ is a strictly increasing sequence of natural numbers.

Limits and convergence

Roughly speaking, a limit is the value that a function or a sequence "approaches" as the input or index approaches some value.[5] (This value can include the symbols $\pm\infty$ when addressing the behavior of a function or sequence as the variable increases or decreases without bound.) The idea of a limit is fundamental to calculus (and mathematical analysis in general) and its formal definition is used in turn to define notions like continuity, derivatives, and integrals. (In fact, the study of limiting behavior has been used as a characteristic that distinguishes calculus and mathematical analysis from other branches of mathematics.)

The concept of limit was informally introduced for functions by Newton and Leibniz at the end of the 17th century, for building infinitesimal calculus. For sequences, the concept was introduced by Cauchy and made rigorous in the 19th century by Bolzano and Weierstrass, who gave the modern ε-δ definition, which follows.

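Definition. Let $f$ be a real-valued function defined on $E \subset \mathbb{R}$. We say that $f(x)$ tends to $L$ as $x$ approaches $x_0$, or that the limit of $f(x)$ as $x$ approaches $x_0$ is $L$ if, for any $\varepsilon > 0$, there exists $\delta > 0$ such that for all $x \in E$, $0 < |x - x_0| < \delta$ implies that $|f(x) - L| < \varepsilon$. We write this symbolically as $f(x) \to L$ as $x \to x_0$, or as $\lim_{x \to x_0} f(x) = L$. Intuitively, this definition can be thought of in the following way: We say that $f(x) \to L$ as $x \to x_0$, when, given any positive number $\varepsilon$, no matter how small, we can always find a $\delta$, such that we can guarantee that $f(x)$ and $L$ are less than $\varepsilon$ apart, as long as $x$ (in the domain of $f$) is a real number that is less than $\delta$ away from $x_0$ but distinct from $x_0$. The purpose of the last stipulation, which corresponds to the condition $0 < |x - x_0|$ in the definition, is to ensure that $\lim_{x \to x_0} f(x) = L$ does not imply anything about the value of $f(x_0)$ itself. Actually, $x_0$ does not even need to be in the domain of $f$ in order for $\lim_{x \to x_0} f(x)$ to exist.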
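As a concrete instance of the ε-δ definition, consider the function x ↦ x² near the point 2, where the limit is 4. If |x − 2| < 1 then |x + 2| < 5, so |x² − 4| = |x − 2||x + 2| < 5|x − 2|, and the choice δ = min(1, ε/5) suffices. The Python sketch below spot-checks this choice on sample points; the function names are illustrative, and a finite sample is only a sanity check, not a proof.

```python
def delta_for(eps):
    """A delta that works for f(x) = x*x at x0 = 2 (limit 4), by the bound above."""
    return min(1.0, eps / 5.0)

def holds_on_samples(eps, samples=1000):
    """Check |f(x) - 4| < eps at sample points with 0 < |x - 2| < delta."""
    x0, L = 2.0, 4.0
    d = delta_for(eps)
    for k in range(1, samples):
        t = k / samples                      # 0 < t < 1, so 0 < t*d < d
        for x in (x0 - t * d, x0 + t * d):   # points on both sides of x0
            if not abs(x * x - L) < eps:
                return False
    return True

print(all(holds_on_samples(eps) for eps in (10.0, 1.0, 0.01, 1e-6)))
```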

In a slightly different but related context, the concept of a limit applies to the behavior of a sequence $(a_n)$ when $n$ becomes large.

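Definition. Let $(a_n)$ be a real-valued sequence. We say that $(a_n)$ converges to $a$ if, for any $\varepsilon > 0$, there exists a natural number $N$ such that $n \geq N$ implies that $|a - a_n| < \varepsilon$. We write this symbolically as $a_n \to a$ as $n \to \infty$, or as $\lim_{n \to \infty} a_n = a$; if $(a_n)$ fails to converge, we say that $(a_n)$ diverges.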
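For the sequence with terms 1/n and limit 0, the index N required by the definition can be written down explicitly: any N larger than 1/ε works. The following Python sketch uses exact rational arithmetic to check a stretch of the tail; `witness_N` and `tail_is_within` are hypothetical helper names introduced only for this illustration.

```python
from fractions import Fraction
import math

def witness_N(eps):
    """For a_n = 1/n and limit 0: an N such that |a_n - 0| < eps whenever n >= N."""
    return math.floor(1 / eps) + 1

def tail_is_within(eps, how_many=1000):
    """Spot-check the first `how_many` terms from index N onward."""
    N = witness_N(eps)
    return all(abs(Fraction(1, n) - 0) < eps for n in range(N, N + how_many))

print(witness_N(Fraction(1, 10)), tail_is_within(Fraction(1, 10)))
```

Note that N depends on ε: a smaller tolerance generally forces a larger N.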

Generalizing to a real-valued function of a real variable, a slight modification of this definition (replacement of sequence $(a_n)$ and term $a_n$ by function $f$ and value $f(x)$ and natural numbers $N$ and $n$ by real numbers $M$ and $x$, respectively) yields the definition of the limit of $f(x)$ as $x$ increases without bound, notated $\lim_{x \to \infty} f(x)$. Reversing the inequality $x \geq M$ to $x \leq M$ gives the corresponding definition of the limit of $f(x)$ as $x$ decreases without bound, $\lim_{x \to -\infty} f(x)$.

Sometimes, it is useful to conclude that a sequence converges, even though the value to which it converges is unknown or irrelevant. In these cases, the concept of a Cauchy sequence is useful.

Definition. Let $(a_n)$ be a real-valued sequence. We say that $(a_n)$ is a Cauchy sequence if, for any $\varepsilon > 0$, there exists a natural number $N$ such that $m, n \geq N$ implies that $|a_m - a_n| < \varepsilon$.

It can be shown that a real-valued sequence is Cauchy if and only if it is convergent. This property of the real numbers is expressed by saying that the real numbers endowed with the standard metric, $(\mathbb{R}, |\cdot|)$, is a complete metric space. In a general metric space, however, a Cauchy sequence need not converge.

In addition, for real-valued sequences that are monotonic, it can be shown that the sequence is bounded if and only if it is convergent.
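A standard illustration of a bounded monotonic sequence is the sequence of nested radicals defined by a₁ = √2 and aₙ₊₁ = √(2 + aₙ). By induction it is increasing and bounded above by 2, so it converges, and its limit is 2. The Python sketch below generates the first terms in floating point; the function name is an illustrative choice.

```python
import math

def nested_radicals(count):
    """Return the first `count` terms of a_1 = sqrt(2), a_{n+1} = sqrt(2 + a_n)."""
    terms = [math.sqrt(2.0)]
    while len(terms) < count:
        terms.append(math.sqrt(2.0 + terms[-1]))
    return terms

terms = nested_radicals(20)
print(terms[0], terms[-1])
```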

Uniform and pointwise convergence for sequences of functions

In addition to sequences of numbers, one may also speak of sequences of functions on $E \subset \mathbb{R}$, that is, infinite, ordered families of functions $f_n : E \to \mathbb{R}$, denoted $(f_n)_{n=1}^{\infty}$, and their convergence properties. However, in the case of sequences of functions, there are two kinds of convergence, known as pointwise convergence and uniform convergence, that need to be distinguished.

Roughly speaking, pointwise convergence of functions $f_n$ to a limiting function $f : E \to \mathbb{R}$, denoted $f_n \to f$, simply means that given any $x \in E$, $f_n(x) \to f(x)$ as $n \to \infty$. In contrast, uniform convergence is a stronger type of convergence, in the sense that a uniformly convergent sequence of functions also converges pointwise, but not conversely. Uniform convergence requires members of the family of functions, $f_n$, to fall within some error $\varepsilon > 0$ of $f$ for every value of $x \in E$, whenever $n \geq N$, for some integer $N$. For a family of functions to uniformly converge, sometimes denoted $f_n \rightrightarrows f$, such a value of $N$ must exist for any $\varepsilon > 0$ given, no matter how small. Intuitively, we can visualize this situation by imagining that, for a large enough $N$, the functions $f_N, f_{N+1}, f_{N+2}, \ldots$ are all confined within a 'tube' of width $2\varepsilon$ about $f$ (that is, between $f - \varepsilon$ and $f + \varepsilon$) for every value in their domain $E$.

The distinction between pointwise and uniform convergence is important when exchanging the order of two limiting operations (e.g., taking a limit, a derivative, or integral) is desired: in order for the exchange to be well-behaved, many theorems of real analysis call for uniform convergence. For example, a sequence of continuous functions (see below) is guaranteed to converge to a continuous limiting function if the convergence is uniform, while the limiting function may not be continuous if convergence is only pointwise. Karl Weierstrass is generally credited for clearly defining the concept of uniform convergence and fully investigating its implications.
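A standard example separating the two notions is the sequence of functions x ↦ xⁿ on the interval [0, 1]. It converges pointwise to the function that is 0 on [0, 1) and 1 at x = 1, which is discontinuous even though every xⁿ is continuous, so the convergence cannot be uniform. The Python sketch below makes this concrete: for each n it exhibits a point in [0, 1) where the function still differs from its limit by 1/2. The names used are illustrative.

```python
def f(n, x):
    """The n-th function of the sequence, f_n(x) = x**n."""
    return x ** n

def pointwise_limit(x):
    """Pointwise limit of f_n on [0, 1]."""
    return 1.0 if x == 1.0 else 0.0

def gap_at_witness(n):
    """x_n = 0.5**(1/n) lies in [0, 1) and f_n(x_n) = 0.5, so sup|f_n - f| >= 0.5."""
    x_n = 0.5 ** (1.0 / n)
    return abs(f(n, x_n) - pointwise_limit(x_n))

print([round(gap_at_witness(n), 6) for n in (1, 10, 100, 1000)])
print(f(400, 0.9))  # at a fixed x < 1 the values do tend to 0
```

No matter how large n is, the gap of 1/2 persists somewhere in the domain, so the functions never fit inside a tube of width less than 1 around the limit.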

Compactness

Compactness is a concept from general topology that plays an important role in many of the theorems of real analysis. The property of compactness is a generalization of the notion of a set being closed and bounded. (In the context of real analysis, these notions are equivalent: a set in Euclidean space is compact if and only if it is closed and bounded.) Briefly, a closed set contains all of its boundary points, while a set is bounded if there exists a real number such that the distance between any two points of the set is less than that number. In $\mathbb{R}$, sets that are closed and bounded, and therefore compact, include the empty set, any finite number of points, closed intervals, and their finite unions. However, this list is not exhaustive; for instance, the set $\{1/n : n \in \mathbb{N}\} \cup \{0\}$ is a compact set; the Cantor ternary set $\mathcal{C} \subset [0, 1]$ is another example of a compact set. On the other hand, the set $\{1/n : n \in \mathbb{N}\}$ is not compact because it is bounded but not closed, as the boundary point 0 is not a member of the set. The set $[0, \infty)$ is also not compact because it is closed but not bounded.

For subsets of the real numbers, there are several equivalent definitions of compactness.

Definition. A set $E \subset \mathbb{R}$ is compact if it is closed and bounded.

This definition also holds for Euclidean space of any finite dimension, $\mathbb{R}^n$, but it is not valid for metric spaces in general. The equivalence of the definition with the definition of compactness based on subcovers, given later in this section, is known as the Heine–Borel theorem.

A more general definition that applies to all metric spaces uses the notion of a subsequence (see above).

Definition. A set $E$ in a metric space is compact if every sequence in $E$ has a subsequence that converges to a point of $E$.

This particular property is known as subsequential compactness. In $\mathbb{R}$, a set is subsequentially compact if and only if it is closed and bounded, making this definition equivalent to the one given above. Subsequential compactness is equivalent to the definition of compactness based on subcovers for metric spaces, but not for topological spaces in general.

The most general definition of compactness relies on the notion of open covers and subcovers, which is applicable to topological spaces (and thus to metric spaces and $\mathbb{R}$ as special cases). In brief, a collection of open sets $U_\alpha$ is said to be an open cover of a set $X$ if the union of these sets is a superset of $X$. This open cover is said to have a finite subcover if a finite subcollection of the $U_\alpha$ can be found that also covers $X$.

Definition. A set $X$ in a topological space is compact if every open cover of $X$ has a finite subcover.

Compact sets are well-behaved with respect to properties like convergence and continuity. For instance, any Cauchy sequence in a compact metric space is convergent. As another example, the image of a compact metric space under a continuous map is also compact.

Continuity

A function from the set of real numbers to the real numbers can be represented by a graph in the Cartesian plane; such a function is continuous if, roughly speaking, the graph is a single unbroken curve with no "holes" or "jumps".

There are several ways to make this intuition mathematically rigorous. Several definitions of varying levels of generality can be given. In cases where two or more definitions are applicable, they are readily shown to be equivalent to one another, so the most convenient definition can be used to determine whether a given function is continuous or not. In the first definition given below, $f : I \to \mathbb{R}$ is a function defined on a non-degenerate interval $I$ of the set of real numbers as its domain. Some possibilities include $I = \mathbb{R}$, the whole set of real numbers, an open interval $I = (a, b) = \{x \in \mathbb{R} \mid a < x < b\}$, or a closed interval $I = [a, b] = \{x \in \mathbb{R} \mid a \leq x \leq b\}$. Here, $a$ and $b$ are distinct real numbers, and we exclude the case of $I$ being empty or consisting of only one point, in particular.

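Definition. If $I \subset \mathbb{R}$ is a non-degenerate interval, we say that $f : I \to \mathbb{R}$ is continuous at $p \in I$ if $\lim_{x \to p} f(x) = f(p)$. We say that $f$ is a continuous map if $f$ is continuous at every $p \in I$.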

In contrast to the requirements for $f$ to have a limit at a point $p$, which do not constrain the behavior of $f$ at $p$ itself, the following two conditions, in addition to the existence of $\lim_{x \to p} f(x)$, must also hold in order for $f$ to be continuous at $p$: (i) $f$ must be defined at $p$, i.e., $p$ is in the domain of $f$; and (ii) $f(x) \to f(p)$ as $x \to p$. The definition above actually applies to any domain $E$ that does not contain an isolated point, or equivalently, $E$ where every $p \in E$ is a limit point of $E$. A more general definition applying to $f : X \to \mathbb{R}$ with a general domain $X \subset \mathbb{R}$ is the following:

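Definition. If $X$ is an arbitrary subset of $\mathbb{R}$, we say that $f : X \to \mathbb{R}$ is continuous at $p \in X$ if, for any $\varepsilon > 0$, there exists $\delta > 0$ such that for all $x \in X$, $|x - p| < \delta$ implies that $|f(x) - f(p)| < \varepsilon$. We say that $f$ is a continuous map if $f$ is continuous at every $p \in X$.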

A consequence of this definition is that $f$ is trivially continuous at any isolated point $p \in X$. This somewhat unintuitive treatment of isolated points is necessary to ensure that our definition of continuity for functions on the real line is consistent with the most general definition of continuity for maps between topological spaces (which includes metric spaces and $\mathbb{R}$ in particular as special cases). This definition, which extends beyond the scope of our discussion of real analysis, is given below for completeness.

Definition. If $X$ and $Y$ are topological spaces, we say that $f : X \to Y$ is continuous at $p \in X$ if $f^{-1}(V)$ is a neighborhood of $p$ in $X$ for every neighborhood $V$ of $f(p)$ in $Y$. We say that $f$ is a continuous map if $f^{-1}(U)$ is open in $X$ for every $U$ open in $Y$.

(Here, $f^{-1}(S)$ refers to the preimage of $S \subset Y$ under $f$.)

Uniform continuity

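Definition. If $X$ is a subset of the real numbers, we say a function $f : X \to \mathbb{R}$ is uniformly continuous on $X$ if, for any $\varepsilon > 0$, there exists a $\delta > 0$ such that for all $x, y \in X$, $|x - y| < \delta$ implies that $|f(x) - f(y)| < \varepsilon$.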

Explicitly, when a function is uniformly continuous on $X$, the choice of $\delta$ needed to fulfill the definition must work for all of $X$ for a given $\varepsilon$. In contrast, when a function is continuous at every point $p \in X$ (or said to be continuous on $X$), the choice of $\delta$ may depend on both $\varepsilon$ and $p$. In contrast to simple continuity, uniform continuity is a property of a function that only makes sense with a specified domain; to speak of uniform continuity at a single point is meaningless.

On a compact set, it is easily shown that all continuous functions are uniformly continuous. If $E$ is a bounded noncompact subset of $\mathbb{R}$, then there exists $f : E \to \mathbb{R}$ that is continuous but not uniformly continuous. As a simple example, consider $f : (0, 1) \to \mathbb{R}$ defined by $f(x) = 1/x$. By choosing points close to 0, we can always make $|f(x) - f(y)| > \varepsilon$ for any single choice of $\delta > 0$, for a given $\varepsilon > 0$.
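The failure of uniform continuity for the reciprocal function on (0, 1) can be made explicit. Taking ε = 1, for any proposed δ one can pick x = min(δ, 1/2) and y = x/2: the two points are closer than δ, yet the function values differ by 1/x ≥ 2. The Python sketch below constructs such a pair; the function name `counterexample` is an illustrative choice.

```python
def counterexample(delta):
    """Points x, y in (0, 1) with |x - y| < delta but |1/x - 1/y| > 1."""
    x = min(delta, 0.5)
    y = x / 2.0
    return x, y

for delta in (1.0, 0.1, 1e-6):
    x, y = counterexample(delta)
    print(delta, abs(x - y), abs(1 / x - 1 / y))
```

The gap between function values grows as δ shrinks, which is why no single δ can serve every pair of points in the domain.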

Absolute continuity

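Definition. Let $I \subset \mathbb{R}$ be an interval on the real line. A function $f : I \to \mathbb{R}$ is said to be absolutely continuous on $I$ if for every positive number $\varepsilon$, there is a positive number $\delta$ such that whenever a finite sequence of pairwise disjoint sub-intervals $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ of $I$ satisfies[6]

$$\sum_{k=1}^{n} (y_k - x_k) < \delta$$

then

$$\sum_{k=1}^{n} |f(y_k) - f(x_k)| < \varepsilon.$$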

Absolutely continuous functions are continuous: consider the case n = 1 in this definition. The collection of all absolutely continuous functions on I is denoted AC(I). Absolute continuity is a fundamental concept in the Lebesgue theory of integration, allowing the formulation of a generalized version of the fundamental theorem of calculus that applies to the Lebesgue integral.

Differentiation

The notion of the derivative of a function or differentiability originates from the concept of approximating a function near a given point using the "best" linear approximation. This approximation, if it exists, is unique and is given by the line that is tangent to the function at the given point $a$, and the slope of the line is the derivative of the function at $a$.

A function $f : \mathbb{R} \to \mathbb{R}$ is differentiable at $a$ if the limit

$$f'(a) = \lim_{h \to 0} \frac{f(a + h) - f(a)}{h}$$

exists. This limit is known as the derivative of $f$ at $a$, and the function $f'$, possibly defined on only a subset of $\mathbb{R}$, is the derivative (or derivative function) of $f$. If the derivative exists everywhere, the function is said to be differentiable.
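The limit in this definition can be explored numerically through difference quotients (f(a + h) − f(a))/h for small h. In the Python sketch below, the quotients of the squaring function at 3 approach 6, while those of the absolute value function at 0 equal +1 for positive h and −1 for negative h, so no limit exists there. The helper name and the step size are illustrative assumptions, and floating-point quotients only approximate the limit.

```python
def difference_quotient(f, a, h):
    """The quotient (f(a + h) - f(a)) / h for a nonzero step h."""
    return (f(a + h) - f(a)) / h

square = lambda x: x * x

print(difference_quotient(square, 3.0, 1e-6))   # close to 6
print(difference_quotient(abs, 0.0, 1e-6))      # right-hand quotient
print(difference_quotient(abs, 0.0, -1e-6))     # left-hand quotient
```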

As a simple consequence of the definition, $f$ is continuous at $a$ if it is differentiable there. Differentiability is therefore a stronger regularity condition (condition describing the "smoothness" of a function) than continuity, and it is possible for a function to be continuous on the entire real line but not differentiable anywhere (see Weierstrass's nowhere differentiable continuous function). It is possible to discuss the existence of higher-order derivatives as well, by finding the derivative of a derivative function, and so on.

One can classify functions by their differentiability class. The class $C^0$ (sometimes $C^0([a, b])$ to indicate the interval of applicability) consists of all continuous functions. The class $C^1$ consists of all differentiable functions whose derivative is continuous; such functions are called continuously differentiable. Thus, a $C^1$ function is exactly a function whose derivative exists and is of class $C^0$. In general, the classes $C^k$ can be defined recursively by declaring $C^0$ to be the set of all continuous functions and declaring $C^k$ for any positive integer $k$ to be the set of all differentiable functions whose derivative is in $C^{k-1}$. In particular, $C^k$ is contained in $C^{k-1}$ for every $k$, and there are examples to show that this containment is strict. Class $C^\infty$ is the intersection of the sets $C^k$ as $k$ varies over the non-negative integers, and the members of this class are known as the smooth functions. Class $C^\omega$ consists of all analytic functions, and is strictly contained in $C^\infty$ (see bump function for a smooth function that is not analytic).

Series

A series formalizes the imprecise notion of taking the sum of an endless sequence of numbers. The idea that taking the sum of an "infinite" number of terms can lead to a finite result was counterintuitive to the ancient Greeks and led to the formulation of a number of paradoxes by Zeno and other philosophers. The modern notion of assigning a value to a series avoids dealing with the ill-defined notion of adding an "infinite" number of terms. Instead, the finite sum of the first $n$ terms of the sequence, known as a partial sum, is considered, and the concept of a limit is applied to the sequence of partial sums as $n$ grows without bound. The series is assigned the value of this limit, if it exists.

Given an (infinite) sequence $(a_n)$, we can define an associated series as the formal mathematical object $a_1 + a_2 + a_3 + \cdots = \sum_{n=1}^{\infty} a_n$, sometimes simply written as $\sum a_n$. The partial sums of a series $\sum a_n$ are the numbers $s_n = \sum_{j=1}^{n} a_j$. A series $\sum a_n$ is said to be convergent if the sequence consisting of its partial sums, $(s_n)$, is convergent; otherwise it is divergent. The sum of a convergent series is defined as the number $s = \lim_{n \to \infty} s_n$.

The word "sum" is used here in a metaphorical sense as a shorthand for taking the limit of a sequence of partial sums and should not be interpreted as simply "adding" an infinite number of terms. For instance, in contrast to the behavior of finite sums, rearranging the terms of an infinite series may result in convergence to a different number (see the article on the Riemann rearrangement theorem for further discussion).

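An example of a convergent series is a geometric series which forms the basis of one of Zeno's famous paradoxes:

$$\sum_{n=1}^{\infty} \frac{1}{2^n} = \frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \cdots = 1.$$

In contrast, the harmonic series has been known since the Middle Ages to be a divergent series:

$$\sum_{n=1}^{\infty} \frac{1}{n} = 1 + \frac{1}{2} + \frac{1}{3} + \cdots = \infty.$$

(Here, "$= \infty$" is merely a notational convention to indicate that the partial sums of the series grow without bound.)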
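The contrast between the two series can be seen directly in their partial sums. The Python sketch below computes them exactly with rational arithmetic for the geometric series with terms 1/2ⁿ and the harmonic series with terms 1/n. The helper name `partial_sums` and the cut-off indices are illustrative choices.

```python
from fractions import Fraction

def partial_sums(term, count):
    """Return [s_1, ..., s_count] where s_n = term(1) + ... + term(n)."""
    sums, total = [], Fraction(0)
    for n in range(1, count + 1):
        total += term(n)
        sums.append(total)
    return sums

geometric = partial_sums(lambda n: Fraction(1, 2 ** n), 20)
harmonic = partial_sums(lambda n: Fraction(1, n), 2 ** 10)

print(float(geometric[-1]))   # just below 1
print(float(harmonic[-1]))    # already above 7 and still growing
```

The geometric partial sum after n terms is exactly 1 − 1/2ⁿ, which tends to 1. For the harmonic series, the classical grouping argument gives a partial sum of at least 1 + k/2 after 2ᵏ terms, so the partial sums exceed every bound.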

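A series $\sum a_n$ is said to converge absolutely if $\sum |a_n|$ is convergent. A convergent series $\sum a_n$ for which $\sum |a_n|$ diverges is said to converge non-absolutely.[7] It is easily shown that absolute convergence of a series implies its convergence. On the other hand, an example of a series that converges non-absolutely is

$$\sum_{n=1}^{\infty} \frac{(-1)^{n-1}}{n} = 1 - \frac{1}{2} + \frac{1}{3} - \frac{1}{4} + \cdots = \ln 2.$$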
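The alternating harmonic series 1 − 1/2 + 1/3 − 1/4 + ⋯ is the standard example of non-absolute convergence: it sums to ln 2, while the series of absolute values is the divergent harmonic series. It also shows how rearrangement can change the sum. Taking two positive terms followed by one negative term (1 + 1/3 − 1/2 + 1/5 + 1/7 − 1/4 + ⋯) gives a series that converges to (3/2) ln 2 instead. The Python sketch below compares both numerically; the function names and term counts are illustrative.

```python
import math

def alternating_harmonic(n_terms):
    """Partial sum of 1 - 1/2 + 1/3 - ... with n_terms terms."""
    return sum((-1) ** (n - 1) / n for n in range(1, n_terms + 1))

def rearranged(n_blocks):
    """Same terms, reordered in blocks: two positive terms, then one negative."""
    total = 0.0
    for k in range(1, n_blocks + 1):
        total += 1 / (4 * k - 3) + 1 / (4 * k - 1) - 1 / (2 * k)
    return total

print(alternating_harmonic(100000), math.log(2))
print(rearranged(100000), 1.5 * math.log(2))
```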

Taylor series

The Taylor series of a real or complex-valued function $f(x)$ that is infinitely differentiable at a real or complex number $a$ is the power series

$$f(a) + \frac{f'(a)}{1!}(x - a) + \frac{f''(a)}{2!}(x - a)^2 + \frac{f^{(3)}(a)}{3!}(x - a)^3 + \cdots$$

which can be written in the more compact sigma notation as

$$\sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}(x - a)^n$$

where $n!$ denotes the factorial of $n$ and $f^{(n)}(a)$ denotes the $n$th derivative of $f$ evaluated at the point $a$. The derivative of order zero of $f$ is defined to be $f$ itself, and $(x - a)^0$ and $0!$ are both defined to be 1. In the case that $a = 0$, the series is also called a Maclaurin series.

A Taylor series of $f$ about the point $a$ may diverge, converge at only the point $a$, converge for all $x$ such that $|x - a| < R$ (the largest such $R$ for which convergence is guaranteed is called the radius of convergence), or converge on the entire real line. Even a converging Taylor series may converge to a value different from the value of the function at that point. If the Taylor series at a point has a nonzero radius of convergence, and sums to the function in the disc of convergence, then the function is analytic. The analytic functions have many fundamental properties. In particular, an analytic function of a real variable extends naturally to a function of a complex variable. It is in this way that the exponential function, the logarithm, the trigonometric functions and their inverses are extended to functions of a complex variable.
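For the exponential function every derivative at 0 equals 1, so its Maclaurin series has terms xⁿ/n!, and the series converges to the function on the entire real line. The Python sketch below sums the first few terms and compares the result with the library value; the function name and the number of terms are illustrative choices.

```python
import math

def taylor_exp(x, n_terms):
    """Sum of the first n_terms terms of the Maclaurin series of exp: x**n / n!."""
    total, term = 0.0, 1.0          # term starts at x**0 / 0! = 1
    for n in range(n_terms):
        total += term
        term *= x / (n + 1)         # turn x**n / n! into x**(n+1) / (n+1)!
    return total

print(taylor_exp(1.0, 20), math.e)
print(taylor_exp(-2.0, 40), math.exp(-2.0))
```

Updating each term from the previous one avoids computing large factorials and powers separately.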

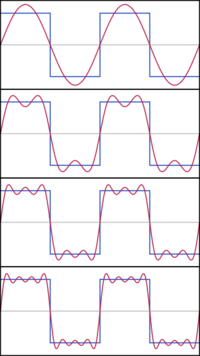

Fourier series

A Fourier series decomposes a periodic function or periodic signal into the sum of a (possibly infinite) set of simple oscillating functions, namely sines and cosines (or complex exponentials). The study of Fourier series is typically carried out within Fourier analysis, a branch of mathematical analysis.
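A classical example is the square wave that equals 1 on (0, π) and −1 on (−π, 0), extended periodically. Its Fourier series contains only odd sine harmonics: (4/π)(sin x + sin 3x / 3 + sin 5x / 5 + ⋯). The Python sketch below evaluates partial sums of this series; the function name and the number of terms are illustrative choices.

```python
import math

def square_wave_partial_sum(x, n_terms):
    """(4/pi) * sum over k = 1..n_terms of sin((2k-1)x) / (2k-1)."""
    return (4.0 / math.pi) * sum(
        math.sin((2 * k - 1) * x) / (2 * k - 1) for k in range(1, n_terms + 1)
    )

print(square_wave_partial_sum(math.pi / 2, 200))    # near +1
print(square_wave_partial_sum(-math.pi / 2, 200))   # near -1
print(square_wave_partial_sum(0.0, 200))            # 0 at the jump
```

At the jump x = 0 every partial sum is 0, the midpoint of the two one-sided values of the square wave.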

Integration

Integration is a formalization of the problem of finding the area bounded by a curve and the related problems of determining the length of a curve or the volume enclosed by a surface. The basic strategy for solving problems of this type was known to the ancient Greeks and Chinese, and was known as the method of exhaustion. Generally speaking, the desired area is bounded from above and below, respectively, by increasingly accurate circumscribing and inscribing polygonal approximations whose exact areas can be computed. By considering approximations consisting of a larger and larger ("infinite") number of smaller and smaller ("infinitesimal") pieces, the area bounded by the curve can be deduced, as the upper and lower bounds defined by the approximations converge around a common value.

The spirit of this basic strategy can easily be seen in the definition of the Riemann integral, in which the integral is said to exist if upper and lower Riemann (or Darboux) sums converge to a common value as thinner and thinner rectangular slices ("refinements") are considered. Though the machinery used to define it is much more elaborate compared to the Riemann integral, the Lebesgue integral was defined with similar basic ideas in mind. Compared to the Riemann integral, the more sophisticated Lebesgue integral allows area (or length, volume, etc.; termed a "measure" in general) to be defined and computed for much more complicated and irregular subsets of Euclidean space, although there still exist "non-measurable" subsets for which an area cannot be assigned.

Riemann integration

The Riemann integral is defined in terms of Riemann sums of functions with respect to tagged partitions of an interval. Let $[a, b]$ be a closed interval of the real line; then a tagged partition $\mathcal{P}$ of $[a, b]$ is a finite sequence

$$a = x_0 \leq t_1 \leq x_1 \leq t_2 \leq x_2 \leq \cdots \leq x_{n-1} \leq t_n \leq x_n = b.$$

This partitions the interval $[a, b]$ into $n$ sub-intervals $[x_{i-1}, x_i]$ indexed by $i = 1, \ldots, n$, each of which is "tagged" with a distinguished point $t_i \in [x_{i-1}, x_i]$. For a function $f$ bounded on $[a, b]$, we define the Riemann sum of $f$ with respect to the tagged partition $\mathcal{P}$ as

$$\sum_{i=1}^{n} f(t_i) \Delta_i,$$

where $\Delta_i = x_i - x_{i-1}$ is the width of sub-interval $i$. Thus, each term of the sum is the area of a rectangle with height equal to the function value at the distinguished point of the given sub-interval, and width the same as the sub-interval width. The mesh of such a tagged partition is the width of the largest sub-interval formed by the partition, $\|\Delta_i\| = \max_{i=1,\ldots,n} \Delta_i$. We say that the Riemann integral of $f$ on $[a, b]$ is $S$ if for any $\varepsilon > 0$ there exists $\delta > 0$ such that, for any tagged partition $\mathcal{P}$ with mesh $\|\Delta_i\| < \delta$, we have

$$\left| S - \sum_{i=1}^{n} f(t_i) \Delta_i \right| < \varepsilon.$$

This is sometimes denoted $\mathcal{R}\int_a^b f = S$. When the chosen tags give the maximum (respectively, minimum) value of each interval, the Riemann sum is known as the upper (respectively, lower) Darboux sum. A function is Darboux integrable if the upper and lower Darboux sums can be made to be arbitrarily close to each other for a sufficiently small mesh. Although this definition gives the Darboux integral the appearance of being a special case of the Riemann integral, they are, in fact, equivalent, in the sense that a function is Darboux integrable if and only if it is Riemann integrable, and the values of the integrals are equal. In fact, calculus and real analysis textbooks often conflate the two, introducing the definition of the Darboux integral as that of the Riemann integral, due to the slightly easier to apply definition of the former.
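Riemann sums are straightforward to compute for a partition into equal sub-intervals. The Python sketch below evaluates them for the squaring function on [0, 1], whose integral is 1/3, with tags at the left endpoint, midpoint or right endpoint of each sub-interval. Because this function is increasing on [0, 1], the left-tagged and right-tagged sums coincide with the lower and upper Darboux sums. The function name, the `tag` parameter and the equal-width partition are simplifying assumptions made for illustration.

```python
def riemann_sum(f, a, b, n, tag="mid"):
    """Riemann sum of f on [a, b] over n equal sub-intervals with a uniform tagging rule."""
    width = (b - a) / n
    offset = {"left": 0.0, "mid": 0.5, "right": 1.0}[tag]
    return sum(f(a + (i + offset) * width) * width for i in range(n))

square = lambda x: x * x

lower = riemann_sum(square, 0.0, 1.0, 1000, "left")    # lower Darboux sum here
upper = riemann_sum(square, 0.0, 1.0, 1000, "right")   # upper Darboux sum here
mid = riemann_sum(square, 0.0, 1.0, 1000, "mid")
print(lower, mid, upper)
```

The lower and upper sums squeeze the value 1/3 between them, and their difference shrinks in proportion to the mesh, which is the behavior the definition of integrability asks for.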

The fundamental theorem of calculus asserts that integration and differentiation are inverse operations in a certain sense.

Lebesgue integration and measure

Lebesgue integration is a mathematical construction that extends the integral to a larger class of functions; it also extends the domains on which these functions can be defined. The concept of a measure, an abstraction of length, area, or volume, is central to the Lebesgue integral and to probability theory.

Distributions

Distributions (or generalized functions) are objects that generalize functions. Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. In particular, any locally integrable function has a distributional derivative.

Relation to complex analysis

Real analysis is an area of analysis that studies concepts such as sequences and their limits, continuity, differentiation, integration and sequences of functions. By definition, real analysis focuses on the real numbers, often including positive and negative infinity to form the extended real line. Real analysis is closely related to complex analysis, which studies broadly the same properties of complex numbers. In complex analysis, it is natural to define differentiation via holomorphic functions, which have a number of useful properties, such as repeated differentiability, expressibility as power series, and satisfying the Cauchy integral formula.

In real analysis, it is usually more natural to consider differentiable, smooth, or harmonic functions, which are more widely applicable, but may lack some more powerful properties of holomorphic functions. However, results such as the fundamental theorem of algebra are simpler when expressed in terms of complex numbers.

Techniques from the theory of analytic functions of a complex variable are often used in real analysis – such as evaluation of real integrals by residue calculus.

Important results

Important results include the Bolzano–Weierstrass and Heine–Borel theorems, the intermediate value theorem and mean value theorem, Taylor's theorem, the fundamental theorem of calculus, the Arzelà-Ascoli theorem, the Stone-Weierstrass theorem, Fatou's lemma, and the monotone convergence and dominated convergence theorems.

Generalizations and related areas of mathematics

Various ideas from real analysis can be generalized from the real line to broader or more abstract contexts. These generalizations link real analysis to other disciplines and subdisciplines. For instance, generalization of ideas like continuous functions and compactness from real analysis to metric spaces and topological spaces connects real analysis to the field of general topology, while generalization of finite-dimensional Euclidean spaces to infinite-dimensional analogs led to the concepts of Banach spaces and Hilbert spaces and, more generally, to functional analysis. Georg Cantor's investigation of sets and sequences of real numbers, mappings between them, and the foundational issues of real analysis gave birth to naive set theory. The study of issues of convergence for sequences of functions eventually gave rise to Fourier analysis as a subdiscipline of mathematical analysis. Investigation of the consequences of generalizing differentiability from functions of a real variable to ones of a complex variable gave rise to the concept of holomorphic functions and the inception of complex analysis as another distinct subdiscipline of analysis. On the other hand, the generalization of integration from the Riemann sense to that of Lebesgue led to the formulation of the concept of abstract measure spaces, a fundamental concept in measure theory. Finally, the generalization of integration from the real line to curves and surfaces in higher-dimensional space brought about the study of vector calculus, whose further generalization and formalization played an important role in the evolution of the concepts of differential forms and smooth (differentiable) manifolds in differential geometry and other closely related areas of geometry and topology.

See also

- List of real analysis topics

- Time-scale calculus – a unification of real analysis with calculus of finite differences

- Real multivariable function

- Real coordinate space

- Complex analysis

References

- [1] Tao, Terence (2003). "Lecture notes for MATH 131AH". https://www.math.ucla.edu/~tao/resource/general/131ah.1.03w/week1.pdf.

- [2] "Sequences intro". https://www.khanacademy.org/math/algebra/x2f8bb11595b61c86:sequences/x2f8bb11595b61c86:introduction-to-arithmetic-sequences/v/explicit-and-recursive-definitions-of-sequences.

- [3] Gaughan, Edward (2009). "1.1 Sequences and Convergence". Introduction to Analysis. AMS. ISBN 978-0-8218-4787-9.

- [4] Some authors (e.g., Rudin 1976) use braces instead and write $\{a_n\}$. However, this notation conflicts with the usual notation for a set, which, in contrast to a sequence, disregards the order and the multiplicity of its elements.

- [5] Stewart, James (2008). Calculus: Early Transcendentals (6th ed.). Brooks/Cole. ISBN 978-0-495-01166-8. https://archive.org/details/calculusearlytra00stew_1.

- [6] Royden 1988, Sect. 5.4, page 108; Nielsen 1997, Definition 15.6 on page 251; Athreya & Lahiri 2006, Definitions 4.4.1, 4.4.2 on pages 128, 129. The interval I is assumed to be bounded and closed in the former two books but not the latter book.

- [7] The term unconditional convergence refers to series whose sum does not depend on the order of the terms (i.e., any rearrangement gives the same sum). Convergence is termed conditional otherwise. For series in $\mathbb{R}^n$, it can be shown that absolute convergence and unconditional convergence are equivalent. Hence, the term "conditional convergence" is often used to mean non-absolute convergence. However, in the general setting of Banach spaces, the terms do not coincide, and there are unconditionally convergent series that do not converge absolutely.

Sources

- Athreya, Krishna B.; Lahiri, Soumendra N. (2006), Measure theory and probability theory, Springer, ISBN 0-387-32903-X

- Nielsen, Ole A. (1997), An introduction to integration and measure theory, Wiley-Interscience, ISBN 0-471-59518-7

- Royden, H.L. (1988), Real Analysis (third ed.), Collier Macmillan, ISBN 0-02-404151-3

Bibliography

- Abbott, Stephen (2001). Understanding Analysis. Undergraduate Texts in Mathematics. New York: Springer-Verlag. ISBN 0-387-95060-5.

- Aliprantis, Charalambos D.; Burkinshaw, Owen (1998). Principles of real analysis (3rd ed.). Academic. ISBN 0-12-050257-7.

- Bartle, Robert G.; Sherbert, Donald R. (2011). Introduction to Real Analysis (4th ed.). New York: John Wiley and Sons. ISBN 978-0-471-43331-6.

- Bressoud, David (2007). A Radical Approach to Real Analysis. MAA. ISBN 978-0-88385-747-2.

- Browder, Andrew (1996). Mathematical Analysis: An Introduction. Undergraduate Texts in Mathematics. New York: Springer-Verlag. ISBN 0-387-94614-4.

- Carothers, Neal L. (2000). Real Analysis. Cambridge: Cambridge University Press. ISBN 978-0521497565. https://archive.org/details/CarothersN.L.RealAnalysisCambridge2000Isbn0521497566416S.

- Dangello, Frank; Seyfried, Michael (1999). Introductory Real Analysis. Brooks Cole. ISBN 978-0-395-95933-6.

- Kolmogorov, A. N.; Fomin, S. V. (1975). Introductory Real Analysis. Translated by Richard A. Silverman. Dover Publications. ISBN 0486612260. https://archive.org/details/introductoryreal00kolm_0. Retrieved 2 April 2013.

- Rudin, Walter (1976). Principles of Mathematical Analysis. Walter Rudin Student Series in Advanced Mathematics (3rd ed.). New York: McGraw–Hill. ISBN 978-0-07-054235-8. https://archive.org/details/PrinciplesOfMathematicalAnalysis.

- Rudin, Walter (1987). Real and Complex Analysis (3rd ed.). New York: McGraw-Hill. ISBN 978-0-07-054234-1. https://archive.org/details/RudinW.RealAndComplexAnalysis3e1987.

- Spivak, Michael (1994). Calculus (3rd ed.). Houston, Texas: Publish or Perish, Inc.. ISBN 091409890X.

External links

- How We Got From There to Here: A Story of Real Analysis by Robert Rogers and Eugene Boman

- A First Course in Analysis by Donald Yau

- Analysis WebNotes by John Lindsay Orr

- Interactive Real Analysis by Bert G. Wachsmuth

- A First Analysis Course by John O'Connor

- Mathematical Analysis I by Elias Zakon

- Mathematical Analysis II by Elias Zakon

- Trench, William F. (2003). Introduction to Real Analysis. Prentice Hall. ISBN 978-0-13-045786-8. http://ramanujan.math.trinity.edu/wtrench/texts/TRENCH_REAL_ANALYSIS.PDF.

- Earliest Known Uses of Some of the Words of Mathematics: Calculus & Analysis

- Basic Analysis: Introduction to Real Analysis by Jiri Lebl

- Topics in Real and Functional Analysis by Gerald Teschl, University of Vienna.
