Real coordinate space


In mathematics, the real coordinate space or real coordinate n-space, of dimension n, denoted Rn or $\mathbb{R}^n$, is the set of all ordered n-tuples of real numbers, that is, the set of all sequences of n real numbers, also known as coordinate vectors. Special cases are called the real line R1, the real coordinate plane R2, and the real coordinate three-dimensional space R3. With component-wise addition and scalar multiplication, it is a real vector space.

The coordinates over any basis of the elements of a real vector space form a real coordinate space of the same dimension as that of the vector space. Similarly, the Cartesian coordinates of the points of a Euclidean space of dimension n, En (Euclidean line, E; Euclidean plane, E2; Euclidean three-dimensional space, E3) form a real coordinate space of dimension n.

These one-to-one correspondences between vectors, points and coordinate vectors explain the names coordinate space and coordinate vector. They allow using geometric terms and methods for studying real coordinate spaces and, conversely, using methods of calculus in geometry. This approach to geometry was introduced by René Descartes in the 17th century. It is widely used, as it allows locating points in Euclidean spaces and computing with them.

Definition and structures

For any natural number n, the set Rn consists of all n-tuples of real numbers (R). It is called the "n-dimensional real space" or the "real n-space".

An element of Rn is thus an n-tuple, and is written $(x_1, x_2, \ldots, x_n)$, where each xi is a real number. So, in multivariable calculus, the domain of a function of several real variables and the codomain of a real vector-valued function are subsets of Rn for some n.

The real n-space has several further properties, notably:

- With componentwise addition and scalar multiplication, it is a real vector space. Every n-dimensional real vector space is isomorphic to it.

- With the dot product (sum of the term by term product of the components), it is an inner product space. Every n-dimensional real inner product space is isomorphic to it.

- Like every inner product space, it is a topological space, and a topological vector space.

- It is a Euclidean space and a real affine space, and every n-dimensional Euclidean or real affine space is isomorphic to it.

- It is an analytic manifold, and can be considered as the prototype of all manifolds, as, by definition, a manifold is, near each point, isomorphic to an open subset of Rn.

- It is an algebraic variety, and every real algebraic variety is a subset of Rn.

These properties and structures of Rn make it fundamental in almost all areas of mathematics and their application domains, such as statistics, probability theory, and many parts of physics.

The domain of a function of several variables

Any function f(x1, x2, ..., xn) of n real variables can be considered as a function on Rn (that is, with Rn as its domain). The use of the real n-space, instead of several variables considered separately, can simplify notation and suggest reasonable definitions. Consider, for n = 2, a function composition of the following form:

$$F(t) = f(g_1(t), g_2(t)),$$

where the functions g1 and g2 are continuous. If

- ∀x1 ∈ R : f(x1, ·) is continuous (in x2)

- ∀x2 ∈ R : f(·, x2) is continuous (in x1)

then F is not necessarily continuous. Continuity of F requires a stronger condition: continuity of f in the natural R2 topology (discussed below), also called multivariable continuity, which is sufficient for continuity of the composition F.
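A standard counterexample makes the gap between separate and joint continuity concrete. The sketch below is illustrative only: the particular `f`, and the choice `g1(t) = g2(t) = t`, are assumptions picked for the demonstration and do not come from the text above. Each partial function of `f` is continuous, yet the composition `F` jumps at `t = 0`.

```python
def f(x1: float, x2: float) -> float:
    """x1*x2 / (x1^2 + x2^2), extended by 0 at the origin.

    For fixed x1, the map x2 -> f(x1, x2) is continuous, and vice versa,
    but f is not continuous at (0, 0) as a function on R^2.
    """
    if x1 == 0.0 and x2 == 0.0:
        return 0.0
    return x1 * x2 / (x1 * x1 + x2 * x2)


def g1(t: float) -> float:
    return t  # continuous


def g2(t: float) -> float:
    return t  # continuous


def F(t: float) -> float:
    """Composition F(t) = f(g1(t), g2(t)); along the diagonal f equals 1/2."""
    return f(g1(t), g2(t))


# F stays at 1/2 arbitrarily close to t = 0 but equals 0 at t = 0.
for t in (1.0, 1e-3, 1e-6, 0.0):
    print(t, F(t))
```

Approaching the origin along either coordinate axis gives the value 0, while approaching along the diagonal gives 1/2, which is why no single limit exists there.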

Vector space

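The coordinate space Rn forms an n-dimensional vector space over the field of real numbers with the addition of the structure of linearity, and is often still denoted Rn. The operations on Rn as a vector space are typically defined by

$$\mathbf{x} + \mathbf{y} = (x_1 + y_1, x_2 + y_2, \ldots, x_n + y_n),$$

$$\alpha \mathbf{x} = (\alpha x_1, \alpha x_2, \ldots, \alpha x_n).$$

The zero vector is given by

$$\mathbf{0} = (0, 0, \ldots, 0)$$

and the additive inverse of the vector x is given by

$$-\mathbf{x} = (-x_1, -x_2, \ldots, -x_n).$$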

This structure is important because any n-dimensional real vector space is isomorphic to the vector space Rn.
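The componentwise operations can be sketched in a few lines. This is a minimal illustration that represents elements of Rn as Python tuples; the helper names `add`, `scale`, `zero` and `neg` are hypothetical and chosen only for this example.

```python
from typing import Tuple

Vector = Tuple[float, ...]


def add(x: Vector, y: Vector) -> Vector:
    """Componentwise sum of two vectors of the same dimension."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return tuple(a + b for a, b in zip(x, y))


def scale(alpha: float, x: Vector) -> Vector:
    """Multiply every component by the scalar alpha."""
    return tuple(alpha * a for a in x)


def zero(n: int) -> Vector:
    """The zero vector of R^n."""
    return (0.0,) * n


def neg(x: Vector) -> Vector:
    """Additive inverse of x."""
    return scale(-1.0, x)


x = (1.0, 2.0)
y = (3.0, 4.0)
print(add(x, y))       # componentwise sum
print(add(x, neg(x)))  # equals the zero vector
```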

Matrix notation

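In standard matrix notation, each element of Rn is typically written as a column vector

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$$

and sometimes as a row vector:

$$\mathbf{x} = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix}.$$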

The coordinate space Rn may then be interpreted as the space of all n × 1 column vectors, or all 1 × n row vectors with the ordinary matrix operations of addition and scalar multiplication.

Linear transformations from Rn to Rm may then be written as m × n matrices which act on the elements of Rn via left multiplication (when the elements of Rn are column vectors) and on elements of Rm via right multiplication (when they are row vectors). The formula for left multiplication, a special case of matrix multiplication, is:

$$(A\mathbf{x})_k = \sum_{l=1}^{n} A_{kl} x_l.$$

Any linear transformation is a continuous function (see below). Also, a matrix defines an open map from Rn to Rm if and only if the rank of the matrix equals m.
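The action of an m × n matrix on a column vector by left multiplication can be sketched as follows. This is a plain-Python illustration using nested lists; the function name `matvec` and the sample matrix are assumptions made for the example.

```python
from typing import List, Sequence


def matvec(A: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Return A x, where A is m-by-n (a list of m rows) and x has n entries.

    The k-th output component is the sum over l of A[k][l] * x[l].
    """
    n = len(x)
    if any(len(row) != n for row in A):
        raise ValueError("each row of A must have len(x) entries")
    return [sum(a_kl * x_l for a_kl, x_l in zip(row, x)) for row in A]


# A 2-by-3 matrix represents a linear map from R^3 to R^2.
A = [[1.0, 2.0, 3.0],
     [0.0, 1.0, 0.0]]
x = [1.0, 1.0, 1.0]
print(matvec(A, x))
```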

Standard basis

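The coordinate space Rn comes with a standard basis:

$$\mathbf{e}_1 = (1, 0, \ldots, 0), \quad \mathbf{e}_2 = (0, 1, \ldots, 0), \quad \ldots, \quad \mathbf{e}_n = (0, 0, \ldots, 1).$$

To see that this is a basis, note that an arbitrary vector in Rn can be written uniquely in the form

$$\mathbf{x} = \sum_{i=1}^{n} x_i \mathbf{e}_i.$$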
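As a small check of this decomposition, the sketch below builds the standard basis of Rn and recombines a vector from its own coordinates. The helper names `standard_basis` and `combine` are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def standard_basis(n: int) -> List[Vector]:
    """Return [e_1, ..., e_n], where e_k has a 1 in position k and 0 elsewhere."""
    return [tuple(1.0 if i == k else 0.0 for i in range(n)) for k in range(n)]


def combine(coeffs: Sequence[float], vectors: Sequence[Vector]) -> Vector:
    """Linear combination: sum over k of coeffs[k] * vectors[k]."""
    n = len(vectors[0])
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(n))


x = (2.0, -1.0, 0.5)
e = standard_basis(3)
# The coordinates of x are exactly its coefficients in the standard basis.
print(combine(x, e) == x)
```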

Geometric properties and uses

Orientation

The fact that real numbers, unlike many other fields, constitute an ordered field yields an orientation structure on Rn. Any full-rank linear map of Rn to itself either preserves or reverses orientation of the space depending on the sign of the determinant of its matrix. If one permutes coordinates (or, in other words, elements of the basis), the resulting orientation will depend on the parity of the permutation.

Diffeomorphisms of Rn or of domains in it, because their Jacobian determinant never vanishes, are likewise classified as orientation-preserving or orientation-reversing. This has important consequences for the theory of differential forms, whose applications include electrodynamics.

Another manifestation of this structure is that the point reflection in Rn has different properties depending on the parity of n. For even n it preserves orientation, while for odd n it reverses it (see also improper rotation).
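The role of the determinant's sign can be checked numerically for small n. The sketch below uses the Leibniz formula, which is practical only for small matrices; the function names are hypothetical. It confirms that the point reflection x ↦ −x has determinant (−1)^n and that a permutation of the basis has determinant equal to the parity of the permutation.

```python
from itertools import permutations
from typing import List, Sequence


def parity(perm: Sequence[int]) -> int:
    """Return +1 for an even permutation and -1 for an odd one (by counting inversions)."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det(A: Sequence[Sequence[float]]) -> float:
    """Determinant via the Leibniz formula (sum over all permutations)."""
    n = len(A)
    total = 0.0
    for perm in permutations(range(n)):
        term = float(parity(perm))
        for i in range(n):
            term *= A[i][perm[i]]
        total += term
    return total


def point_reflection(n: int) -> List[List[float]]:
    """Matrix of x -> -x on R^n."""
    return [[-1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def permutation_matrix(perm: Sequence[int]) -> List[List[float]]:
    """Matrix whose j-th column is the standard basis vector e_{perm[j]}."""
    n = len(perm)
    return [[1.0 if perm[j] == i else 0.0 for j in range(n)] for i in range(n)]


for n in (1, 2, 3, 4):
    sign = det(point_reflection(n))
    print(n, "preserves" if sign > 0 else "reverses", "orientation")
```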

Affine space

Rn understood as an affine space is the same space, where Rn as a vector space acts by translations. Conversely, a vector has to be understood as a "difference between two points", usually illustrated by a directed line segment connecting two points. The distinction reflects the fact that there is no canonical choice of where the origin should go in an affine n-space, because it can be translated anywhere.

Convexity

In a real vector space, such as Rn, one can define a convex cone, which contains all non-negative linear combinations of its vectors. The corresponding concept in an affine space is a convex set, which allows only convex combinations (non-negative linear combinations whose coefficients sum to 1).

In the language of universal algebra, a vector space is an algebra over the universal vector space R∞ of finite sequences of coefficients, corresponding to finite sums of vectors, while an affine space is an algebra over the universal affine hyperplane in this space (of finite sequences summing to 1), a cone is an algebra over the universal orthant (of finite sequences of nonnegative numbers), and a convex set is an algebra over the universal simplex (of finite sequences of nonnegative numbers summing to 1). This geometrizes the axioms in terms of "sums with (possible) restrictions on the coordinates".

Another concept from convex analysis is a convex function from Rn to the real numbers, which is defined through an inequality between its value at a convex combination of points and the sum of its values at those points with the same coefficients.

Euclidean space

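The dot product

$$\mathbf{x} \cdot \mathbf{y} = \sum_{i=1}^{n} x_i y_i = x_1 y_1 + x_2 y_2 + \cdots + x_n y_n$$

defines the norm $|\mathbf{x}| = \sqrt{\mathbf{x} \cdot \mathbf{x}}$ on the vector space Rn. If every vector has its Euclidean norm, then for any pair of points the distance

$$d(\mathbf{x}, \mathbf{y}) = |\mathbf{x} - \mathbf{y}| = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

is defined, providing a metric space structure on Rn in addition to its affine structure.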
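The chain from dot product to norm to distance can be sketched directly. This is a minimal illustration with hypothetical helper names `dot`, `norm` and `distance`.

```python
import math
from typing import Sequence


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Sum of the term-by-term products of the components."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return sum(a * b for a, b in zip(x, y))


def norm(x: Sequence[float]) -> float:
    """Euclidean norm: the square root of x . x."""
    return math.sqrt(dot(x, x))


def distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Euclidean distance: the norm of the difference x - y."""
    return norm([a - b for a, b in zip(x, y)])


print(norm((3.0, 4.0)))
print(distance((1.0, 1.0), (4.0, 5.0)))
```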

As for vector space structure, the dot product and Euclidean distance usually are assumed to exist in Rn without special explanations. However, the real n-space and a Euclidean n-space are distinct objects, strictly speaking. Any Euclidean n-space has a coordinate system where the dot product and Euclidean distance have the form shown above, called Cartesian. But there are many Cartesian coordinate systems on a Euclidean space.

Conversely, the above formula for the Euclidean metric defines the standard Euclidean structure on Rn, but it is not the only possible one. Actually, any positive-definite quadratic form q defines its own "distance" √q(x − y), but it is not very different from the Euclidean one in the sense that

$$\exists C_1 > 0,\ \exists C_2 > 0,\ \forall \mathbf{x}, \mathbf{y} \in \mathbb{R}^n: \quad C_1\, d(\mathbf{x}, \mathbf{y}) \le \sqrt{q(\mathbf{x} - \mathbf{y})} \le C_2\, d(\mathbf{x}, \mathbf{y}),$$

where d denotes the Euclidean distance. Such a change of the metric preserves some of its properties, for example the property of being a complete metric space. This also implies that any full-rank linear transformation of Rn, or its affine transformation, multiplies every distance by a factor lying between two fixed positive constants: it does not magnify distances by more than C2, and does not shrink them to less than C1 times their original length.
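For n = 2 the two constants can be computed explicitly. The sketch below assumes a quadratic form q(v) = a·v1² + 2b·v1·v2 + c·v2² with illustrative coefficients (not taken from the text) and uses the standard fact that q is bounded between the smallest and largest eigenvalues of its symmetric matrix times the squared Euclidean norm, so that C1 and C2 may be taken as the square roots of those eigenvalues.

```python
import math
from typing import Tuple


def q(v: Tuple[float, float], a: float, b: float, c: float) -> float:
    """Quadratic form with symmetric matrix [[a, b], [b, c]]."""
    v1, v2 = v
    return a * v1 * v1 + 2.0 * b * v1 * v2 + c * v2 * v2


def equivalence_constants(a: float, b: float, c: float) -> Tuple[float, float]:
    """Return (C1, C2) = (sqrt(lambda_min), sqrt(lambda_max)) for [[a, b], [b, c]]."""
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    lam_min, lam_max = mean - radius, mean + radius
    if lam_min <= 0.0:
        raise ValueError("quadratic form is not positive definite")
    return math.sqrt(lam_min), math.sqrt(lam_max)


a, b, c = 2.0, 1.0, 2.0  # illustrative coefficients; eigenvalues are 1 and 3
C1, C2 = equivalence_constants(a, b, c)

# Check C1 * |v| <= sqrt(q(v)) <= C2 * |v| on a few sample vectors.
for v in [(1.0, 0.0), (1.0, 1.0), (1.0, -1.0), (-3.0, 2.0)]:
    euclid = math.hypot(*v)
    dist_q = math.sqrt(q(v, a, b, c))
    print(v, C1 * euclid - 1e-12 <= dist_q <= C2 * euclid + 1e-12)
```

The bounds are attained along the eigenvector directions, here (1, −1) for C1 and (1, 1) for C2.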

The aforementioned equivalence of metric functions remains valid if √q(x − y) is replaced with M(x − y), where M is any convex positive homogeneous function of degree 1, i.e. a vector norm (see Minkowski distance for useful examples). Because any "natural" metric on Rn is not especially different from the Euclidean metric, Rn is not always distinguished from a Euclidean n-space even in professional mathematical works.

In algebraic and differential geometry

Although the definition of a manifold does not require that its model space should be Rn, this choice is the most common, and almost exclusive one in differential geometry.

On the other hand, the Whitney embedding theorems state that any real differentiable m-dimensional manifold can be embedded into $\mathbb{R}^{2m}$.

Other appearances

Other structures considered on Rn include that of a pseudo-Euclidean space, a symplectic structure (even n), and a contact structure (odd n). Although all these structures can be defined in a coordinate-free manner, they admit standard (and reasonably simple) forms in coordinates.

Rn is also a real vector subspace of Cn which is invariant under complex conjugation; see also complexification.

Polytopes in Rn

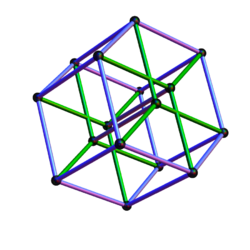

There are three families of polytopes which have simple representations in Rn spaces, for any n, and can be used to visualize any affine coordinate system in a real n-space. Vertices of a hypercube have coordinates (x1, x2, ..., xn) where each xk takes on one of only two values, typically 0 or 1. However, any two numbers can be chosen instead of 0 and 1, for example −1 and 1. An n-hypercube can be thought of as the Cartesian product of n identical intervals (such as the unit interval [0,1]) on the real line. As an n-dimensional subset it can be described with a system of 2n inequalities:

$$0 \le x_k \le 1 \quad (k = 1, \ldots, n)$$

for [0,1], and

$$|x_k| \le 1 \quad (k = 1, \ldots, n)$$

for [−1,1].

Each vertex of the cross-polytope has, for some k, the xk coordinate equal to ±1 and all other coordinates equal to 0 (such that it is the kth standard basis vector up to sign). This is the dual polytope of the hypercube. As an n-dimensional subset it can be described with a single inequality which uses the absolute value operation:

$$\sum_{k=1}^{n} |x_k| \le 1,$$

but this can be expressed with a system of $2^n$ linear inequalities as well.

The third polytope with simply enumerable coordinates is the standard simplex, whose vertices are the n standard basis vectors and the origin (0, 0, ..., 0). As an n-dimensional subset it is described with a system of n + 1 linear inequalities:

$$0 \le x_k \quad (k = 1, \ldots, n), \qquad \sum_{k=1}^{n} x_k \le 1.$$

Replacement of all "≤" with "<" gives interiors of these polytopes.
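The inequality descriptions of the three polytopes translate directly into membership tests. The sketch below is illustrative, with hypothetical function names; it also checks the cross-polytope against its equivalent description by linear inequalities, one for each choice of signs.

```python
from itertools import product
from typing import Sequence


def in_hypercube(x: Sequence[float]) -> bool:
    """Unit hypercube: 0 <= x_k <= 1 for every k (2n inequalities)."""
    return all(0.0 <= xk <= 1.0 for xk in x)


def in_cross_polytope(x: Sequence[float]) -> bool:
    """Cross-polytope: the sum of |x_k| is at most 1 (a single inequality)."""
    return sum(abs(xk) for xk in x) <= 1.0


def in_cross_polytope_linear(x: Sequence[float]) -> bool:
    """Same set, written as one linear inequality per sign pattern (2**n in total)."""
    return all(
        sum(s * xk for s, xk in zip(signs, x)) <= 1.0
        for signs in product((-1.0, 1.0), repeat=len(x))
    )


def in_simplex(x: Sequence[float]) -> bool:
    """Standard simplex: x_k >= 0 for every k and sum of x_k <= 1 (n + 1 inequalities)."""
    return all(xk >= 0.0 for xk in x) and sum(x) <= 1.0


for point in [(0.5, -0.5), (0.75, 0.75), (0.25, 0.25)]:
    print(point, in_hypercube(point), in_cross_polytope(point), in_simplex(point))
```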

Topological properties

The topological structure of Rn (called the standard topology, Euclidean topology, or usual topology) can be obtained not only from the Cartesian product. It is also identical to the natural topology induced by the Euclidean metric discussed above: a set is open in the Euclidean topology if and only if it contains an open ball around each of its points. Also, Rn is a linear topological space (see continuity of linear maps above), and there is only one possible (non-trivial) topology compatible with its linear structure. As there are many open linear maps from Rn to itself which are not isometries, there can be many Euclidean structures on Rn which correspond to the same topology. Actually, it does not depend much even on the linear structure: there are many non-linear diffeomorphisms (and other homeomorphisms) of Rn onto itself, or onto its parts such as a Euclidean open ball or the interior of a hypercube.

Rn has the topological dimension n.

An important result on the topology of Rn, that is far from superficial, is Brouwer's invariance of domain. Any subset of Rn (with its subspace topology) that is homeomorphic to another open subset of Rn is itself open. An immediate consequence of this is that Rm is not homeomorphic to Rn if m ≠ n – an intuitively "obvious" result which is nonetheless difficult to prove.

Despite the difference in topological dimension, and contrary to a naïve perception, it is possible to map a lesser-dimensional real space continuously and surjectively onto Rn. A continuous (although not smooth) space-filling curve (an image of R1) is possible.

Examples

Empty column vector, the only element of R0

n ≤ 1

Cases of 0 ≤ n ≤ 1 do not offer anything new: R1 is the real line, whereas R0 (the space containing the empty column vector) is a singleton, understood as a zero vector space. However, it is useful to include these as trivial cases of theories that describe different n.

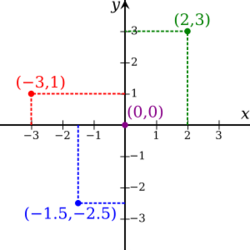

n = 2

The case of (x,y) where x and y are real numbers has been developed as the Cartesian plane P. Further structure has been attached with Euclidean vectors representing directed line segments in P. The plane has also been developed as the field extension $\mathbb{C}$ by appending roots of $X^2 + 1 = 0$ to the real field $\mathbb{R}$. The root i acts on P as a quarter turn with counterclockwise orientation. This root generates the group $\{i, -1, -i, +1\} \equiv \mathbb{Z}/4\mathbb{Z}$. When (x,y) is written x + y i it is a complex number.

Another group action by $\mathbb{Z}/2\mathbb{Z}$, where the actor has been expressed as j, uses the line y=x for the involution of flipping the plane (x,y) ↦ (y,x), an exchange of coordinates. In this case points of P are written x + y j and called split-complex numbers. These numbers, with the coordinate-wise addition and multiplication according to jj=+1, form a ring that is not a field.

Another ring structure on P uses a nilpotent e to write x + y e for (x,y). The action of e on P reduces the plane to a line: It can be decomposed into the projection onto the x-coordinate, then quarter-turning the result to the y-axis: e (x + y e) = x e since $e^2 = 0$. A number x + y e is a dual number. The dual numbers form a ring, but, since e has no multiplicative inverse, it does not generate a group so the action is not a group action.
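The three ring structures on the plane differ only in the square of the adjoined unit (−1 for i, +1 for j, 0 for e), so one multiplication routine can cover all of them. The sketch below is illustrative; the function `multiply` and the constant names are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]

COMPLEX = -1.0  # i * i = -1
SPLIT = 1.0     # j * j = +1
DUAL = 0.0      # e * e = 0


def multiply(u: Point, v: Point, unit_square: float) -> Point:
    """Product (a + b w)(c + d w), where w * w = unit_square."""
    a, b = u
    c, d = v
    return (a * c + unit_square * b * d, a * d + b * c)


w = (0.0, 1.0)  # the adjoined unit, i.e. the point (0, 1)
p = (2.0, 3.0)

print(multiply(w, p, COMPLEX))  # quarter turn: (x, y) -> (-y, x)
print(multiply(w, p, SPLIT))    # coordinate exchange: (x, y) -> (y, x)
print(multiply(w, p, DUAL))     # collapse onto the y-axis: (x, y) -> (0, x)
```

In the split-complex case the product of (1, 1) and (1, −1) is (0, 0), which exhibits the zero divisors that prevent the ring from being a field.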

Excluding (0,0) from P makes [x : y] projective coordinates which describe the real projective line, a one-dimensional space. Since the origin is excluded, at least one of the ratios x/y and y/x exists. Then [x : y] = [x/y : 1] or [x : y] = [1 : y/x]. The projective line P1(R) is a topological manifold covered by two coordinate charts, [z : 1] → z or [1 : z] → z, which form an atlas. For points covered by both charts the transition function is multiplicative inversion on an open neighborhood of the point, which provides a homeomorphism as required in a manifold. One application of the real projective line is found in Cayley–Klein metric geometry.

n = 3

n = 4

R4 can be imagined using the fact that 16 points (x1, x2, x3, x4), where each xk is either 0 or 1, are vertices of a tesseract (pictured), the 4-hypercube (see above).

The first major use of R4 is a spacetime model: three spatial coordinates plus one temporal. This is usually associated with theory of relativity, although four dimensions were used for such models since Galilei. The choice of theory leads to different structure, though: in Galilean relativity the t coordinate is privileged, but in Einsteinian relativity it is not. Special relativity is set in Minkowski space. General relativity uses curved spaces, which may be thought of as R4 with a curved metric for most practical purposes. None of these structures provide a (positive-definite) metric on R4.

Euclidean R4 also attracts the attention of mathematicians, for example due to its relation to quaternions, a 4-dimensional real algebra themselves. See rotations in 4-dimensional Euclidean space for some information.

In differential geometry, n = 4 is the only case where Rn admits a non-standard differential structure: see exotic R4.

Norms on Rn

One could define many norms on the vector space Rn. Some common examples are

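- the p-norm, defined by $\|\mathbf{x}\|_p := \left( \sum_{i=1}^{n} |x_i|^p \right)^{1/p}$ for all $\mathbf{x} \in \mathbb{R}^n$, where $p$ is a positive integer. The case $p = 2$ is very important, because it is exactly the Euclidean norm.

- the $\infty$-norm or maximum norm, defined by $\|\mathbf{x}\|_\infty := \max\{|x_1|, \ldots, |x_n|\}$ for all $\mathbf{x} \in \mathbb{R}^n$. This is the limit of all the p-norms: $\|\mathbf{x}\|_\infty = \lim_{p \to \infty} \|\mathbf{x}\|_p$.

A surprising and helpful result is that all norms defined on Rn are equivalent. This means that for two arbitrary norms $\|\cdot\|$ and $\|\cdot\|'$ on Rn you can always find positive real numbers $\alpha, \beta > 0$, such that $\alpha \cdot \|\mathbf{x}\| \le \|\mathbf{x}\|' \le \beta \cdot \|\mathbf{x}\|$ for all $\mathbf{x} \in \mathbb{R}^n$.

This defines an equivalence relation on the set of all norms on Rn. With this result you can check that a sequence of vectors in Rn converges with $\|\cdot\|$ if and only if it converges with $\|\cdot\|'$.

Here is a sketch of what a proof of this result may look like:

Because of the equivalence relation it is enough to show that every norm on Rn is equivalent to the Euclidean norm $\|\cdot\|_2$. Let $\|\cdot\|$ be an arbitrary norm on Rn. The proof is divided in two steps:

- We show that there exists a $\beta > 0$, such that $\|\mathbf{x}\| \le \beta \cdot \|\mathbf{x}\|_2$ for all $\mathbf{x} \in \mathbb{R}^n$. In this step you use the fact that every $\mathbf{x} = (x_1, \ldots, x_n) \in \mathbb{R}^n$ can be represented as a linear combination of the standard basis: $\mathbf{x} = \sum_{i=1}^{n} \mathbf{e}_i \cdot x_i$. Then with the Cauchy–Schwarz inequality $\|\mathbf{x}\| = \left\| \sum_{i=1}^{n} \mathbf{e}_i \cdot x_i \right\| \le \sum_{i=1}^{n} \|\mathbf{e}_i\| \cdot |x_i| \le \sqrt{\sum_{i=1}^{n} \|\mathbf{e}_i\|^2} \cdot \sqrt{\sum_{i=1}^{n} |x_i|^2} = \beta \cdot \|\mathbf{x}\|_2$, where $\beta := \sqrt{\sum_{i=1}^{n} \|\mathbf{e}_i\|^2}$.

- Now we have to find an $\alpha > 0$, such that $\alpha \cdot \|\mathbf{x}\|_2 \le \|\mathbf{x}\|$ for all $\mathbf{x} \in \mathbb{R}^n$. Assume there is no such $\alpha$. Then there exists for every $k \in \mathbb{N}$ an $\mathbf{x}_k \in \mathbb{R}^n$, such that $\|\mathbf{x}_k\|_2 > k \cdot \|\mathbf{x}_k\|$. Define a second sequence $(\tilde{\mathbf{x}}_k)_{k \in \mathbb{N}}$ by $\tilde{\mathbf{x}}_k := \frac{\mathbf{x}_k}{\|\mathbf{x}_k\|_2}$. This sequence is bounded because $\|\tilde{\mathbf{x}}_k\|_2 = 1$. So because of the Bolzano–Weierstrass theorem there exists a convergent subsequence $(\tilde{\mathbf{x}}_{k_j})_{j \in \mathbb{N}}$ with limit $\mathbf{a} \in$ Rn. Now we show that $\|\mathbf{a}\|_2 = 1$ but $\mathbf{a} = \mathbf{0}$, which is a contradiction. It is $\|\mathbf{a}\| \le \|\mathbf{a} - \tilde{\mathbf{x}}_{k_j}\| + \|\tilde{\mathbf{x}}_{k_j}\| \le \beta \cdot \|\mathbf{a} - \tilde{\mathbf{x}}_{k_j}\|_2 + \frac{\|\mathbf{x}_{k_j}\|}{\|\mathbf{x}_{k_j}\|_2} \to 0$ as $j \to \infty$, because $\|\mathbf{a} - \tilde{\mathbf{x}}_{k_j}\|_2 \to 0$ and $0 \le \frac{\|\mathbf{x}_{k_j}\|}{\|\mathbf{x}_{k_j}\|_2} < \frac{1}{k_j}$, so $\frac{\|\mathbf{x}_{k_j}\|}{\|\mathbf{x}_{k_j}\|_2} \to 0$. This implies $\|\mathbf{a}\| = 0$, so $\mathbf{a} = \mathbf{0}$. On the other hand $\|\mathbf{a}\|_2 = 1$, because $\|\mathbf{a}\|_2 = \left\| \lim_{j \to \infty} \tilde{\mathbf{x}}_{k_j} \right\|_2 = \lim_{j \to \infty} \|\tilde{\mathbf{x}}_{k_j}\|_2 = 1$. This can not ever be true, so the assumption was false and there exists such an $\alpha > 0$.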
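The equivalence of norms can be illustrated numerically for the common cases. The sketch below is not part of the proof; it uses hypothetical helpers `p_norm` and `max_norm` and checks the standard chain max-norm ≤ 2-norm ≤ 1-norm ≤ n · max-norm on a sample vector, which gives explicit equivalence constants for these three norms.

```python
from typing import Sequence


def p_norm(x: Sequence[float], p: int) -> float:
    """The p-norm: (sum of |x_i|**p) ** (1/p), for a positive integer p."""
    if p < 1:
        raise ValueError("p must be a positive integer")
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)


def max_norm(x: Sequence[float]) -> float:
    """The maximum norm: the largest absolute value of a component."""
    return max(abs(xi) for xi in x)


x = (3.0, -4.0, 1.0)
n = len(x)

# Explicit equivalence constants between the max-norm, 2-norm and 1-norm.
print(max_norm(x) <= p_norm(x, 2) <= p_norm(x, 1) <= n * max_norm(x))

# The p-norms approach the maximum norm as p grows.
for p in (1, 2, 10, 50):
    print(p, p_norm(x, p))
```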

See also

- Exponential object, for theoretical explanation of the superscript notation

- Geometric space

- Real projective space

Sources

- Kelley, John L. (1975). General Topology. Springer-Verlag. ISBN 0-387-90125-6.

- Munkres, James (1999). Topology. Prentice-Hall. ISBN 0-13-181629-2.
