Linear time-invariant system


In system analysis, among other fields of study, a linear time-invariant (LTI) system is a system that produces an output signal from any input signal subject to the constraints of linearity and time-invariance; these terms are briefly defined in the overview below. These properties apply (exactly or approximately) to many important physical systems, in which case the response y(t) of the system to an arbitrary input x(t) can be found directly using convolution: y(t) = (x ∗ h)(t) where h(t) is called the system's impulse response and ∗ represents convolution (not to be confused with multiplication). What's more, there are systematic methods for solving any such system (determining h(t)), whereas systems not meeting both properties are generally more difficult (or impossible) to solve analytically. A good example of an LTI system is any electrical circuit consisting of resistors, capacitors, inductors and linear amplifiers.[2]

Linear time-invariant system theory is also used in image processing, where the systems have spatial dimensions instead of, or in addition to, a temporal dimension. These systems may be referred to as linear translation-invariant to give the terminology the most general reach. In the case of generic discrete-time (i.e., sampled) systems, linear shift-invariant is the corresponding term. LTI system theory is an area of applied mathematics which has direct applications in electrical circuit analysis and design, signal processing and filter design, control theory, mechanical engineering, image processing, the design of measuring instruments of many sorts, NMR spectroscopy, and many other technical areas where systems of ordinary differential equations present themselves.

Overview

The defining properties of any LTI system are linearity and time invariance.

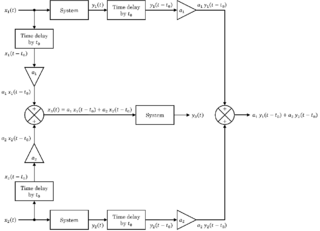

- Linearity means that the relationship between the input $x(t)$ and the output $y(t)$, both being regarded as functions, is a linear mapping: if $a$ is a constant then the system output to $a\,x(t)$ is $a\,y(t)$; if $x'(t)$ is a further input with system output $y'(t)$ then the output of the system to $x(t) + x'(t)$ is $y(t) + y'(t)$, this applying for all choices of $a$, $x(t)$, $x'(t)$. The latter condition is often referred to as the superposition principle.

- Time invariance means that whether we apply an input to the system now or T seconds from now, the output will be identical except for a time delay of T seconds. That is, if the output due to input $x(t)$ is $y(t)$, then the output due to input $x(t-T)$ is $y(t-T)$. Hence, the system is time invariant because the output does not depend on the particular time the input is applied.[3]
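The two defining properties can be probed numerically. The sketch below is illustrative only: the three example systems (`first_difference`, `square`, `ramp_gain`) and the helper names are assumptions introduced here, not part of the source, and sequences are modeled as finite lists that are zero outside their range. A passing check on a few inputs is evidence for a property, not a proof of it.

```python
# Sequences are plain lists; samples outside the list are treated as zero.

def first_difference(x):
    """y[n] = x[n] - x[n-1]: linear and time-invariant."""
    return [x[n] - (x[n - 1] if n > 0 else 0.0) for n in range(len(x))]

def square(x):
    """y[n] = x[n]**2: time-invariant but not linear."""
    return [v * v for v in x]

def ramp_gain(x):
    """y[n] = n * x[n]: linear but not time-invariant."""
    return [n * v for n, v in enumerate(x)]

def delay(x, k):
    """Shift a sequence right by k samples, keeping its length."""
    return [0.0] * k + x[:len(x) - k]

def close(a, b, tol=1e-12):
    """True if two equal-length sequences agree sample by sample."""
    return all(abs(p - q) <= tol for p, q in zip(a, b))

def is_linear(system, x1, x2, a=2.0, b=-3.0):
    """Compare the response to a*x1 + b*x2 with a*y1 + b*y2."""
    combined = [a * p + b * q for p, q in zip(x1, x2)]
    expected = [a * p + b * q for p, q in zip(system(x1), system(x2))]
    return close(system(combined), expected)

def is_time_invariant(system, x, k=2):
    """Compare the response to a delayed input with the delayed response."""
    return close(system(delay(x, k)), delay(system(x), k))

# Test inputs end in zeros so that delaying them discards nothing.
x1 = [1.0, 2.0, -1.0, 0.5, 0.0, 0.0, 0.0]
x2 = [0.0, 1.0, 4.0, -2.0, 0.0, 0.0, 0.0]

for name, system in [("first_difference", first_difference),
                     ("square", square),
                     ("ramp_gain", ramp_gain)]:
    print(name, is_linear(system, x1, x2), is_time_invariant(system, x1))
```

Only `first_difference` passes both checks, so of the three it is the only candidate LTI system.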

Through these properties, it is reasoned that LTI systems can be characterized entirely by a single function called the system's impulse response, because any arbitrary signal can be expressed as a superposition of time-shifted impulses. The output of the system $y(t)$ is simply the convolution of the input to the system $x(t)$ with the system's impulse response $h(t)$. This is called a continuous time system. Similarly, a discrete-time linear time-invariant (or, more generally, "shift-invariant") system is defined as one operating in discrete time: $y_i = x_i * h_i$, where y, x, and h are sequences and the convolution, in discrete time, uses a discrete summation rather than an integral.[4]

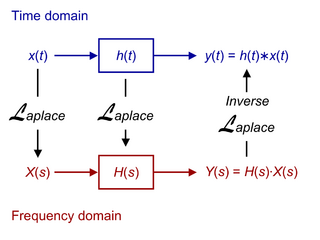

LTI systems can also be characterized in the frequency domain by the system's transfer function, which for a continuous-time or discrete-time system is the Laplace transform or Z-transform of the system's impulse response, respectively. As a result of the properties of these transforms, the output of the system in the frequency domain is the product of the transfer function and the corresponding frequency-domain representation of the input. In other words, convolution in the time domain is equivalent to multiplication in the frequency domain.
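The equivalence between time-domain convolution and frequency-domain multiplication can be illustrated with finite sequences. The following sketch is not the Laplace or Z-transform itself: it uses the discrete Fourier transform of zero-padded sequences as a stand-in, and the sequences `x` and `h` are arbitrary values chosen for illustration.

```python
import cmath

def dft(x):
    """Discrete Fourier transform of a finite sequence (direct O(N^2) sum)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    """Inverse discrete Fourier transform."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def linear_convolution(x, h):
    """Direct time-domain convolution of two finite sequences."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for j, hv in enumerate(h):
            y[i + j] += xv * hv
    return y

x = [1.0, 2.0, 3.0]            # assumed input sequence
h = [1.0, -1.0, 0.5, 0.25]     # assumed impulse response

# Zero-pad both sequences to the full output length so that the circular
# convolution implied by the DFT equals the linear convolution.
N = len(x) + len(h) - 1
x_padded = x + [0.0] * (N - len(x))
h_padded = h + [0.0] * (N - len(h))

Y = [a * b for a, b in zip(dft(x_padded), dft(h_padded))]  # multiply spectra
y_freq = [v.real for v in idft(Y)]
y_time = linear_convolution(x, h)

print(max(abs(a - b) for a, b in zip(y_time, y_freq)))  # tiny rounding error
```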

For all LTI systems, the eigenfunctions, and the basis functions of the transforms, are complex exponentials. As a result, if the input to a system is the complex waveform $A_s e^{st}$ for some complex amplitude $A_s$ and complex frequency $s$, the output will be some complex constant times the input, say $B_s e^{st}$ for some new complex amplitude $B_s$. The ratio $B_s / A_s$ is the transfer function at frequency $s$. The output signal will be shifted in phase and amplitude, but always with the same frequency upon reaching steady-state. LTI systems cannot produce frequency components that are not in the input.

LTI system theory is good at describing many important systems. Most LTI systems are considered "easy" to analyze, at least compared to the time-varying and/or nonlinear case. Any system that can be modeled as a linear differential equation with constant coefficients is an LTI system. Examples of such systems are electrical circuits made up of resistors, inductors, and capacitors (RLC circuits). Ideal spring–mass–damper systems are also LTI systems, and are mathematically equivalent to RLC circuits.

Most LTI system concepts are similar between the continuous-time and discrete-time cases. In image processing, the time variable is replaced with two space variables, and the notion of time invariance is replaced by two-dimensional shift invariance. When analyzing filter banks and MIMO systems, it is often useful to consider vectors of signals. A linear system that is not time-invariant can be solved using other approaches such as the Green function method.

Continuous-time systems

Impulse response and convolution

The behavior of a linear, continuous-time, time-invariant system with input signal x(t) and output signal y(t) is described by the convolution integral:[5]

$$y(t) = (x * h)(t) \;\stackrel{\mathrm{def}}{=}\; \int_{-\infty}^{\infty} x(t-\tau)\, h(\tau)\, d\tau = \int_{-\infty}^{\infty} x(\tau)\, h(t-\tau)\, d\tau \quad \text{(using commutativity)}$$

where $h(t)$ is the system's response to an impulse: $x(\tau) = \delta(\tau)$. $y(t)$ is therefore proportional to a weighted average of the input function $x(\tau)$. The weighting function is $h(-\tau)$, simply shifted by amount $t$. As $t$ changes, the weighting function emphasizes different parts of the input function. When $h(\tau)$ is zero for all negative $\tau$, $y(t)$ depends only on values of $x$ prior to time $t$, and the system is said to be causal.

To understand why the convolution produces the output of an LTI system, let the notation $\{x(u-\tau);\ u\}$ represent the function $x(u-\tau)$ with variable $u$ and constant $\tau$. And let the shorter notation $\{x\}$ represent $\{x(u);\ u\}$. Then a continuous-time system transforms an input function, $\{x\}$, into an output function, $\{y\}$. And in general, every value of the output can depend on every value of the input. This concept is represented by $y(t) \stackrel{\mathrm{def}}{=} O_t\{x\}$, where $O_t$ is the transformation operator for time $t$. In a typical system, $y(t)$ depends most heavily on the values of $x$ that occurred near time $t$. Unless the transform itself changes with $t$, the output function is just constant, and the system is uninteresting.

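For a linear system, $O$ must satisfy Eq.2:

$$O_t\left\{\int_{-\infty}^{\infty} c_\tau\, x_\tau(u)\, d\tau;\ u\right\} = \int_{-\infty}^{\infty} c_\tau\, \underbrace{y_\tau(t)}_{O_t\{x_\tau\}}\, d\tau \qquad \text{(Eq.2)}$$

And the time-invariance requirement is:

$$O_t\{x(u-\tau);\ u\} = y(t-\tau) \;\stackrel{\mathrm{def}}{=}\; O_{t-\tau}\{x\} \qquad \text{(Eq.3)}$$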

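In this notation, we can write the impulse response as $h(t) \stackrel{\mathrm{def}}{=} O_t\{\delta(u);\ u\}$.

Similarly:

$$h(t-\tau) \;\stackrel{\mathrm{def}}{=}\; O_{t-\tau}\{\delta(u);\ u\} = O_t\{\delta(u-\tau);\ u\} \quad \text{(using Eq.3)}$$

Substituting this result into the convolution integral:

$$(x * h)(t) = \int_{-\infty}^{\infty} x(\tau)\, h(t-\tau)\, d\tau = \int_{-\infty}^{\infty} x(\tau)\, O_t\{\delta(u-\tau);\ u\}\, d\tau,$$

which has the form of the right side of Eq.2 for the case $c_\tau = x(\tau)$ and $x_\tau(u) = \delta(u-\tau)$.

Eq.2 then allows this continuation:

$$(x * h)(t) = O_t\left\{\int_{-\infty}^{\infty} x(\tau)\, \delta(u-\tau)\, d\tau;\ u\right\} = O_t\{x(u);\ u\} \;\stackrel{\mathrm{def}}{=}\; y(t).$$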

In summary, the input function, $\{x\}$, can be represented by a continuum of time-shifted impulse functions, combined "linearly": $x(u) = \int_{-\infty}^{\infty} x(\tau)\, \delta(u-\tau)\, d\tau$. The system's linearity property allows the system's response to be represented by the corresponding continuum of impulse responses, combined in the same way. And the time-invariance property allows that combination to be represented by the convolution integral.
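The convolution integral can be approximated numerically by a Riemann sum. The sketch below assumes, purely for illustration, the causal impulse response $h(t) = e^{-t}$ for $t \ge 0$ and a unit-step input; for that pair the exact output is $y(t) = 1 - e^{-t}$ for $t \ge 0$, which gives something to compare against. The step size and integration limits are arbitrary choices.

```python
import math

def h(t):
    """Assumed impulse response: exp(-t) for t >= 0, zero otherwise (causal)."""
    return math.exp(-t) if t >= 0 else 0.0

def x(t):
    """Assumed input: unit step."""
    return 1.0 if t >= 0 else 0.0

def convolve_at(t, dtau=1e-3, tau_min=-1.0, tau_max=20.0):
    """Midpoint Riemann sum for y(t) = integral of x(tau) * h(t - tau) d tau."""
    steps = int(round((tau_max - tau_min) / dtau))
    total = 0.0
    for i in range(steps):
        tau = tau_min + (i + 0.5) * dtau   # midpoint of the i-th slice
        total += x(tau) * h(t - tau)
    return total * dtau

for t in (0.5, 1.0, 3.0):
    # Numerical estimate next to the closed-form value 1 - exp(-t).
    print(t, convolve_at(t), 1.0 - math.exp(-t))
```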

The mathematical operations above have a simple graphical simulation.[6]

Exponentials as eigenfunctions

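An eigenfunction is a function for which the output of the operator is a scaled version of the same function. That is, $\mathcal{H}f = \lambda f$, where f is the eigenfunction and $\lambda$ is the eigenvalue, a constant.

The exponential functions $A e^{st}$, where $A, s \in \mathbb{C}$, are eigenfunctions of a linear, time-invariant operator. A simple proof illustrates this concept. Suppose the input is $x(t) = A e^{st}$. The output of the system with impulse response $h(t)$ is then

$$\int_{-\infty}^{\infty} h(t-\tau)\, A e^{s\tau}\, d\tau,$$

which, by the commutative property of convolution, is equivalent to

$$\int_{-\infty}^{\infty} h(\tau)\, A e^{s(t-\tau)}\, d\tau = A e^{st} \int_{-\infty}^{\infty} h(\tau)\, e^{-s\tau}\, d\tau = A e^{st}\, H(s),$$

where the scalar $H(s) \stackrel{\mathrm{def}}{=} \int_{-\infty}^{\infty} h(t)\, e^{-st}\, dt$ is dependent only on the parameter s.

So the system's response is a scaled version of the input. In particular, for any $A, s \in \mathbb{C}$, the system output is the product of the input $A e^{st}$ and the constant $H(s)$. Hence, $A e^{st}$ is an eigenfunction of an LTI system, and the corresponding eigenvalue is $H(s)$.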
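As a worked instance, assume the causal impulse response $h(t) = e^{-at}$ for $t \ge 0$ (and zero for $t < 0$) with a real constant $a > 0$; this particular system is an illustrative assumption, not one taken from the source. The eigenvalue integral then evaluates in closed form:

$$H(s) = \int_{-\infty}^{\infty} h(\tau)\, e^{-s\tau}\, d\tau = \int_{0}^{\infty} e^{-(s+a)\tau}\, d\tau = \frac{1}{s+a}, \qquad \operatorname{Re}(s) > -a,$$

so the input $A e^{st}$ produces the output $\dfrac{A}{s+a}\, e^{st}$: the same exponential, scaled by a constant that depends only on $s$.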

Direct proof

It is also possible to directly derive complex exponentials as eigenfunctions of LTI systems.

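Let's set $v(t) = e^{i\omega t}$ some complex exponential and $v_a(t) = e^{i\omega(t+a)}$ a time-shifted version of it.

$H[v_a](t) = e^{i\omega a}\, H[v](t)$ by linearity with respect to the constant $e^{i\omega a}$.

$H[v_a](t) = H[v](t+a)$ by time invariance of $H$.

So $H[v](t+a) = e^{i\omega a}\, H[v](t)$. Setting $t = 0$ and renaming we get: $H[v](\tau) = e^{i\omega\tau}\, H[v](0)$, i.e. that a complex exponential $e^{i\omega\tau}$ as input will give a complex exponential of same frequency as output.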

Fourier and Laplace transforms

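The eigenfunction property of exponentials is very useful for both analysis and insight into LTI systems. The one-sided Laplace transform $H(s) \stackrel{\mathrm{def}}{=} \mathcal{L}\{h(t)\} \stackrel{\mathrm{def}}{=} \int_{0}^{\infty} h(t)\, e^{-st}\, dt$ is exactly the way to get the eigenvalues from the impulse response. Of particular interest are pure sinusoids (i.e., exponential functions of the form $e^{j\omega t}$ where $\omega \in \mathbb{R}$ and $j \stackrel{\mathrm{def}}{=} \sqrt{-1}$). The Fourier transform $H(j\omega) = \mathcal{F}\{h(t)\}$ gives the eigenvalues for pure complex sinusoids. Both of $H(s)$ and $H(j\omega)$ are called the system function, system response, or transfer function.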

The Laplace transform is usually used in the context of one-sided signals, i.e. signals that are zero for all values of t less than some value. Usually, this "start time" is set to zero, for convenience and without loss of generality, with the transform integral being taken from zero to infinity (the transform shown above with lower limit of integration of negative infinity is formally known as the bilateral Laplace transform).

The Fourier transform is used for analyzing systems that process signals that are infinite in extent, such as modulated sinusoids, even though it cannot be directly applied to input and output signals that are not square integrable. The Laplace transform actually works directly for these signals if they are zero before a start time, even if they are not square integrable, for stable systems. The Fourier transform is often applied to spectra of infinite signals via the Wiener–Khinchin theorem even when Fourier transforms of the signals do not exist.

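Due to the convolution property of both of these transforms, the convolution that gives the output of the system can be transformed to a multiplication in the transform domain, given signals for which the transforms exist:

$$y(t) = (h * x)(t) \;\stackrel{\mathrm{def}}{=}\; \int_{-\infty}^{\infty} h(t-\tau)\, x(\tau)\, d\tau \;\stackrel{\mathrm{def}}{=}\; \mathcal{L}^{-1}\{H(s)\, X(s)\}.$$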

One can use the system response directly to determine how any particular frequency component is handled by a system with that Laplace transform. If we evaluate the system response (Laplace transform of the impulse response) at complex frequency s = jω, where ω = 2πf, we obtain |H(s)| which is the system gain for frequency f. The relative phase shift between the output and input for that frequency component is likewise given by arg(H(s)).
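Evaluating gain and phase at $s = j\omega$ is straightforward to sketch in code. The example assumes a first-order low-pass transfer function $H(s) = 1/(1 + s\tau)$ with time constant `tau`; both the system and its parameter value are illustrative assumptions, not taken from the source.

```python
import cmath
import math

def transfer_function(s, tau=1e-3):
    """Assumed first-order low-pass system: H(s) = 1 / (1 + s * tau)."""
    return 1.0 / (1.0 + s * tau)

def gain_and_phase(f, tau=1e-3):
    """Return (|H(s)|, arg H(s)) at s = j * 2 * pi * f."""
    s = 1j * 2.0 * math.pi * f
    H = transfer_function(s, tau)
    return abs(H), cmath.phase(H)

f_corner = 1.0 / (2.0 * math.pi * 1e-3)   # frequency where omega * tau = 1

for f in (0.1 * f_corner, f_corner, 10.0 * f_corner):
    gain, phase = gain_and_phase(f)
    print(f"f = {f:9.2f} Hz  gain = {gain:.4f}  phase = {math.degrees(phase):7.2f} deg")
```

At the corner frequency the gain is $1/\sqrt{2}$ and the phase shift is $-45°$, as expected for this kind of system.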

Examples

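- A simple example of an LTI operator is the derivative.

  - $\frac{d}{dt}\left(c_1 x_1(t) + c_2 x_2(t)\right) = c_1 x'_1(t) + c_2 x'_2(t)$ (i.e., it is linear)

  - $\frac{d}{dt} x(t-\tau) = x'(t-\tau)$ (i.e., it is time invariant)

  When the Laplace transform of the derivative is taken, it transforms to a simple multiplication by the Laplace variable s:

  $$\mathcal{L}\left\{\frac{d}{dt} x(t)\right\} = s X(s)$$

  That the derivative has such a simple Laplace transform partly explains the utility of the transform.

- Another simple LTI operator is an averaging operator

  $$\mathcal{A}\{x(t)\} \;\stackrel{\mathrm{def}}{=}\; \int_{t-a}^{t+a} x(\lambda)\, d\lambda.$$

  By the linearity of integration,

  $$\mathcal{A}\{c_1 x_1(t) + c_2 x_2(t)\} = \int_{t-a}^{t+a} \left(c_1 x_1(\lambda) + c_2 x_2(\lambda)\right) d\lambda = c_1 \mathcal{A}\{x_1(t)\} + c_2 \mathcal{A}\{x_2(t)\},$$

  it is linear. Additionally, because

  $$\mathcal{A}\{x(t-\tau)\} = \int_{t-a}^{t+a} x(\lambda-\tau)\, d\lambda = \int_{(t-\tau)-a}^{(t-\tau)+a} x(\xi)\, d\xi = \mathcal{A}\{x\}(t-\tau),$$

  it is time invariant. In fact, $\mathcal{A}$ can be written as a convolution with the boxcar function $\Pi(t)$. That is,

  $$\mathcal{A}\{x(t)\} = \int_{-\infty}^{\infty} \Pi\!\left(\frac{\lambda - t}{2a}\right) x(\lambda)\, d\lambda,$$

  where the boxcar function

  $$\Pi(t) \;\stackrel{\mathrm{def}}{=}\; \begin{cases} 1 & \text{if } |t| < \tfrac{1}{2}, \\ 0 & \text{if } |t| > \tfrac{1}{2}. \end{cases}$$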

Important system properties

Some of the most important properties of a system are causality and stability. Causality is a necessity for a physical system whose independent variable is time; this restriction is not present in other cases, such as image processing.

Causality

A system is causal if the output depends only on present and past, but not future inputs. A necessary and sufficient condition for causality is

$$h(t) = 0 \quad \forall t < 0,$$

where $h(t)$ is the impulse response. It is not possible in general to determine causality from the two-sided Laplace transform. However, when working in the time domain, one normally uses the one-sided Laplace transform which requires causality.

Stability

A system is bounded-input, bounded-output stable (BIBO stable) if, for every bounded input, the output is bounded. Mathematically, if every input satisfying

$$\|x(t)\|_{\infty} < \infty$$

leads to an output satisfying

$$\|y(t)\|_{\infty} < \infty$$

(that is, a finite maximum absolute value of $x(t)$ implies a finite maximum absolute value of $y(t)$), then the system is stable. A necessary and sufficient condition is that $h(t)$, the impulse response, is in $L^1$ (has a finite $L^1$ norm):

$$\|h(t)\|_{1} = \int_{-\infty}^{\infty} |h(t)|\, dt < \infty.$$

In the frequency domain, the region of convergence must contain the imaginary axis $s = j\omega$.

Discrete-time systems

Almost everything in continuous-time systems has a counterpart in discrete-time systems.

Discrete-time systems from continuous-time systems

In many contexts, a discrete time (DT) system is really part of a larger continuous time (CT) system. For example, a digital recording system takes an analog sound, digitizes it, possibly processes the digital signals, and plays back an analog sound for people to listen to.

In practical systems, DT signals obtained are usually uniformly sampled versions of CT signals. If $x(t)$ is a CT signal, then the sampling circuit used before an analog-to-digital converter will transform it to a DT signal: $x_n \stackrel{\mathrm{def}}{=} x(nT) \quad \forall\, n \in \mathbb{Z}$, where T is the sampling period. Before sampling, the input signal is normally run through a so-called Nyquist filter which removes frequencies above the "folding frequency" 1/(2T); this guarantees that no information in the filtered signal will be lost. Without filtering, any frequency component above the folding frequency (or Nyquist frequency) is aliased to a different frequency (thus distorting the original signal), since a DT signal can only support frequency components lower than the folding frequency.
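Aliasing is easy to see numerically. In the sketch below, the sampling period `T` and the two test frequencies are assumptions chosen for illustration: with T = 1/8 s the folding frequency is 4 Hz, and a 7 Hz cosine yields exactly the same samples as a 1 Hz cosine.

```python
import math

T = 1.0 / 8.0                 # assumed sampling period (8 samples per second)
folding_frequency = 1.0 / (2.0 * T)

def sample_cosine(f, count=16):
    """Return x_n = cos(2 * pi * f * n * T) for n = 0 .. count - 1."""
    return [math.cos(2.0 * math.pi * f * n * T) for n in range(count)]

below = sample_cosine(1.0)    # 1 Hz: below the folding frequency
above = sample_cosine(7.0)    # 7 Hz: above it, aliases to 8 - 7 = 1 Hz

max_difference = max(abs(a - b) for a, b in zip(below, above))
print(folding_frequency, max_difference)  # difference is at rounding-error level
```

Once sampled, the two signals cannot be told apart, which is why the higher-frequency component has to be filtered out before sampling.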

Impulse response and convolution

Let $\{x[m-k];\ m\}$ represent the sequence $\{x[m-k];\ \text{for all integer values of } m\}$.

And let the shorter notation $\{x\}$ represent $\{x[m];\ m\}$.

A discrete system transforms an input sequence, $\{x\}$, into an output sequence, $\{y\}$. In general, every element of the output can depend on every element of the input. Representing the transformation operator by $O$, we can write:

$$y[n] \;\stackrel{\mathrm{def}}{=}\; O_n\{x\}.$$

Note that unless the transform itself changes with n, the output sequence is just constant, and the system is uninteresting. (Thus the subscript, n.) In a typical system, y[n] depends most heavily on the elements of x whose indices are near n.

For the special case of the Kronecker delta function, $x[m] = \delta[m]$, the output sequence is the impulse response:

$$h[n] \;\stackrel{\mathrm{def}}{=}\; O_n\{\delta[m];\ m\}.$$

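For a linear system, $O$ must satisfy:

$$O_n\left\{\sum_{k=-\infty}^{\infty} c_k\, x_k[m];\ m\right\} = \sum_{k=-\infty}^{\infty} c_k\, O_n\{x_k\} \qquad \text{(Eq.4)}$$

And the time-invariance requirement is:

$$O_n\{x[m-k];\ m\} = y[n-k] \;\stackrel{\mathrm{def}}{=}\; O_{n-k}\{x\} \qquad \text{(Eq.5)}$$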

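In such a system, the impulse response, $\{h\}$, characterizes the system completely. That is, for any input sequence, the output sequence can be calculated in terms of the input and the impulse response. To see how that is done, consider the identity:

$$x[m] \equiv \sum_{k=-\infty}^{\infty} x[k]\, \delta[m-k],$$

which expresses $\{x\}$ in terms of a sum of weighted delta functions.

Therefore:

$$y[n] = O_n\{x\} = O_n\left\{\sum_{k=-\infty}^{\infty} x[k]\, \delta[m-k];\ m\right\} = \sum_{k=-\infty}^{\infty} x[k]\, O_n\{\delta[m-k];\ m\},$$

where we have invoked Eq.4 for the case $c_k = x[k]$ and $x_k[m] = \delta[m-k]$.

And because of Eq.5, we may write:

$$O_n\{\delta[m-k];\ m\} = O_{n-k}\{\delta[m];\ m\} \;\stackrel{\mathrm{def}}{=}\; h[n-k].$$

Therefore:

$$y[n] = \sum_{k=-\infty}^{\infty} x[k]\, h[n-k] = \sum_{k=-\infty}^{\infty} x[n-k]\, h[k] \quad \text{(commutativity)},$$

which is the familiar discrete convolution formula. The operator $O_n$ can therefore be interpreted as proportional to a weighted average of the function x[k]. The weighting function is h[−k], simply shifted by amount n. As n changes, the weighting function emphasizes different parts of the input function. Equivalently, the system's response to an impulse at n=0 is a "time" reversed copy of the unshifted weighting function. When h[k] is zero for all negative k, the system is said to be causal.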
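The discrete convolution sum translates almost directly into code. This is a minimal sketch for finite sequences that both start at index 0 (an assumption that keeps the index bookkeeping simple); the example filter and input are arbitrary illustrative values.

```python
def convolve(x, h):
    """y[n] = sum over k of x[k] * h[n - k], for finite sequences starting at n = 0."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n in range(len(y)):
        for k in range(len(x)):
            if 0 <= n - k < len(h):        # h is zero outside its stored range
                y[n] += x[k] * h[n - k]
    return y

h = [0.5, 0.5]               # assumed impulse response: two-point average
x = [1.0, 3.0, 5.0, 7.0]     # assumed input sequence

y = convolve(x, h)
print(y)                     # [0.5, 2.0, 4.0, 6.0, 3.5]
print(convolve([1.0], h))    # a unit impulse returns the impulse response itself
```

Because this `h` is zero for negative indices, each output sample depends only on current and past input samples, matching the causality remark above.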

Exponentials as eigenfunctions

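An eigenfunction is a function for which the output of the operator is the same function, scaled by some constant. In symbols,

$$\mathcal{H}f = \lambda f,$$

where f is the eigenfunction and $\lambda$ is the eigenvalue, a constant.

The exponential functions $z^n = e^{sTn}$, where $n \in \mathbb{Z}$, are eigenfunctions of a linear, time-invariant operator. $T \in \mathbb{R}$ is the sampling interval, and $z = e^{sT}$, with $z, s \in \mathbb{C}$. A simple proof illustrates this concept.

Suppose the input is $x[n] = z^n$. The output of the system with impulse response $h[n]$ is then

$$\sum_{m=-\infty}^{\infty} h[n-m]\, z^m,$$

which is equivalent to the following by the commutative property of convolution

$$\sum_{m=-\infty}^{\infty} h[m]\, z^{n-m} = z^n \sum_{m=-\infty}^{\infty} h[m]\, z^{-m} = z^n H(z),$$

where $H(z) \stackrel{\mathrm{def}}{=} \sum_{m=-\infty}^{\infty} h[m]\, z^{-m}$ is dependent only on the parameter z.

So $z^n$ is an eigenfunction of an LTI system because the system response is the same as the input times the constant $H(z)$.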
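The eigenfunction property can be checked numerically for a short filter. The sketch assumes a three-tap impulse response and one particular complex value of z, both chosen only for illustration; it feeds the input $x[n] = z^n$ through the convolution sum and compares each output sample with the input sample.

```python
import cmath

h = [0.5, 0.3, 0.2]   # assumed FIR impulse response: h[m] for m = 0, 1, 2

def H(z):
    """Eigenvalue H(z) = sum over m of h[m] * z**(-m)."""
    return sum(hm * z ** (-m) for m, hm in enumerate(h))

def output_sample(z, n):
    """y[n] = sum over m of h[m] * x[n - m], with x[n] = z**n for all integers n."""
    return sum(hm * z ** (n - m) for m, hm in enumerate(h))

z = 0.9 * cmath.exp(0.4j)   # assumed complex parameter

# The ratio y[n] / x[n] should be the same constant, H(z), at every index n.
ratios = [output_sample(z, n) / z ** n for n in range(-3, 4)]
print(H(z))
print(max(abs(r - H(z)) for r in ratios))   # rounding-error level
```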

Z and discrete-time Fourier transforms

The eigenfunction property of exponentials is very useful for both analysis and insight into LTI systems. The Z transform

$$H(z) = \mathcal{Z}\{h[n]\} = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n}$$

is exactly the way to get the eigenvalues from the impulse response. Of particular interest are pure sinusoids; i.e. exponentials of the form $e^{j\omega n}$, where $\omega \in \mathbb{R}$. These can also be written as $z^n$ with $z = e^{j\omega}$. The discrete-time Fourier transform (DTFT) $H(e^{j\omega}) = \mathcal{F}\{h[n]\}$ gives the eigenvalues of pure sinusoids. Both of $H(z)$ and $H(e^{j\omega})$ are called the system function, system response, or transfer function.

Like the one-sided Laplace transform, the Z transform is usually used in the context of one-sided signals, i.e. signals that are zero for n<0. The discrete-time Fourier series may be used for analyzing periodic signals.

Due to the convolution property of both of these transforms, the convolution that gives the output of the system can be transformed to a multiplication in the transform domain. That is,

$$y[n] = (h * x)[n] = \sum_{m=-\infty}^{\infty} h[n-m]\, x[m] = \mathcal{Z}^{-1}\{H(z)\, X(z)\}.$$

Just as with the Laplace transform transfer function in continuous-time system analysis, the Z transform makes it easier to analyze systems and gain insight into their behavior.

Examples

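- A simple example of an LTI operator is the delay operator $D\{x[n]\} \stackrel{\mathrm{def}}{=} x[n-1]$.

  - $D\left(c_1 x_1[n] + c_2 x_2[n]\right) = c_1 x_1[n-1] + c_2 x_2[n-1] = c_1 D x_1[n] + c_2 D x_2[n]$ (i.e., it is linear)

  - $D\{x[n-m]\} = x[n-m-1] = x[(n-1)-m] = D\{x\}[n-m]$ (i.e., it is time invariant)

  The Z transform of the delay operator is a simple multiplication by $z^{-1}$. That is,

  $$\mathcal{Z}\{D x[n]\} = \sum_{n=-\infty}^{\infty} x[n-1]\, z^{-n} = z^{-1} X(z).$$

- Another simple LTI operator is the averaging operator

  $$\mathcal{A}\{x[n]\} \;\stackrel{\mathrm{def}}{=}\; \sum_{k=n-a}^{n+a} x[k].$$

  Because of the linearity of sums,

  $$\mathcal{A}\{c_1 x_1[n] + c_2 x_2[n]\} = \sum_{k=n-a}^{n+a} \left(c_1 x_1[k] + c_2 x_2[k]\right) = c_1 \mathcal{A}\{x_1[n]\} + c_2 \mathcal{A}\{x_2[n]\},$$

  and so it is linear. Because

  $$\mathcal{A}\{x[n-m]\} = \sum_{k=n-a}^{n+a} x[k-m] = \sum_{k'=(n-m)-a}^{(n-m)+a} x[k'] = \mathcal{A}\{x\}[n-m],$$

  it is also time invariant.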
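The two example operators can be written as small higher-order functions and tested on concrete signals. In this sketch, signals are modeled as Python functions of an integer index; the test signals `x1` and `x2`, the weights, and the half-width `a = 1` are all assumptions made for illustration.

```python
def delay(x):
    """Delay operator: D{x}[n] = x[n - 1]."""
    return lambda n: x(n - 1)

def moving_sum(x, a=1):
    """Averaging operator as defined above: sum of x[k] for k = n - a .. n + a."""
    return lambda n: sum(x(k) for k in range(n - a, n + a + 1))

def shift(x, m):
    """Time shift by m samples: returns the signal n -> x[n - m]."""
    return lambda n: x(n - m)

def x1(n):
    """Assumed test signal, nonzero only for 0 <= n <= 3."""
    return float(n * n + 1) if 0 <= n <= 3 else 0.0

def x2(n):
    """Second assumed test signal, nonzero only for -1 <= n <= 2."""
    return float(3 - n) if -1 <= n <= 2 else 0.0

def combo(n):
    """Weighted sum 2 * x1 - 3 * x2 used for the linearity check."""
    return 2.0 * x1(n) - 3.0 * x2(n)

indices = range(-6, 10)
linear = {}
time_invariant = {}
for op in (delay, moving_sum):
    y1, y2, yc = op(x1), op(x2), op(combo)
    linear[op.__name__] = all(yc(n) == 2.0 * y1(n) - 3.0 * y2(n) for n in indices)
    shifted_in, shifted_out = op(shift(x1, 2)), shift(op(x1), 2)
    time_invariant[op.__name__] = all(shifted_in(n) == shifted_out(n) for n in indices)

print(linear)
print(time_invariant)
```

Both operators pass both checks on these inputs, consistent with the algebraic arguments in the list above.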

Important system properties

The input-output characteristics of a discrete-time LTI system are completely described by its impulse response $h[n]$. Two of the most important properties of a system are causality and stability. Non-causal (in time) systems can be defined and analyzed as above, but cannot be realized in real-time. Unstable systems can also be analyzed and built, but are only useful as part of a larger system whose overall transfer function is stable.

Causality

A discrete-time LTI system is causal if the current value of the output depends on only the current value and past values of the input.[7] A necessary and sufficient condition for causality is $h[n] = 0 \ \ \forall n < 0$, where $h[n]$ is the impulse response. It is not possible in general to determine causality from the Z transform, because the inverse transform is not unique. When a region of convergence is specified, then causality can be determined.

Stability

A system is bounded input, bounded output stable (BIBO stable) if, for every bounded input, the output is bounded. Mathematically, if

$$\|x[n]\|_{\infty} < \infty$$

implies that

$$\|y[n]\|_{\infty} < \infty$$

(that is, if bounded input implies bounded output, in the sense that the maximum absolute values of $x[n]$ and $y[n]$ are finite), then the system is stable. A necessary and sufficient condition is that $h[n]$, the impulse response, satisfies

$$\|h[n]\|_{1} \;\stackrel{\mathrm{def}}{=}\; \sum_{n=-\infty}^{\infty} |h[n]| < \infty.$$

In the frequency domain, the region of convergence must contain the unit circle (i.e., the locus satisfying $|z| = 1$ for complex z).
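The absolute-summability condition can be explored with partial sums. The sketch assumes the one-parameter family of causal impulse responses $h[n] = a^n$ for $n \ge 0$, chosen only for illustration; for $|a| < 1$ the sum converges to $1/(1-|a|)$, while for $|a| \ge 1$ the partial sums grow without bound. A finite partial sum can suggest divergence but cannot prove it.

```python
def abs_partial_sum(a, terms=2000):
    """Partial sum of |h[n]| for the assumed impulse response h[n] = a**n, n >= 0."""
    return sum(abs(a) ** n for n in range(terms))

stable = abs_partial_sum(0.5)      # approaches 1 / (1 - 0.5) = 2
marginal = abs_partial_sum(1.0)    # equals the number of terms, so it keeps growing

print(stable, marginal)
print(abs_partial_sum(1.0, terms=4000))   # doubling the terms doubles the sum
```

The first case corresponds to a pole at z = 0.5, inside the unit circle, so the region of convergence $|z| > 0.5$ contains the unit circle; in the second case the pole sits on the unit circle and the condition fails.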

Notes

- Bessai, Horst J. (2005). MIMO Signals and Systems. Springer. pp. 27–28. ISBN 0-387-23488-8.

- Hespanha 2009, p. 78.

- Phillips, Charles L.; Parr, John M.; Riskin, Eve A. (2003). Signals, systems, and transforms (3rd ed.). Upper Saddle River, N.J: Prentice Hall. p. 89. ISBN 978-0-13-041207-2.

- Phillips, Charles L.; Parr, John M.; Riskin, Eve A. (2003). Signals, systems, and transforms (3rd ed.). Upper Saddle River, N.J: Pearson Education. p. 92. ISBN 978-0-13-041207-2.

- Crutchfield, p. 1. Welcome!

- Crutchfield, p. 1. Exercises

- Phillips 2007, p. 508.

See also

- Circulant matrix

- Frequency response

- Impulse response

- System analysis

- Green function

- Signal-flow graph

References

- Phillips, C.L., Parr, J.M., & Riskin, E.A. (2007). Signals, systems and Transforms. Prentice Hall. ISBN 978-0-13-041207-2.

- Hespanha, J.P. (2009). Linear System Theory. Princeton university press. ISBN 978-0-691-14021-6.

- Crutchfield, Steve (October 12, 2010), "The Joy of Convolution", Johns Hopkins University, http://www.jhu.edu/signals/convolve/index.html, retrieved November 21, 2010

- Vaidyanathan, P. P.; Chen, T. (May 1995). "Role of anticausal inverses in multirate filter banks — Part I: system theoretic fundamentals". IEEE Trans. Signal Process. 43 (6): 1090. doi:10.1109/78.382395. Bibcode: 1995ITSP...43.1090V. https://authors.library.caltech.edu/6832/1/VAIieeetsp95b.pdf.

Further reading

- Porat, Boaz (1997). A Course in Digital Signal Processing. New York: John Wiley. ISBN 978-0-471-14961-3.

- Vaidyanathan, P. P.; Chen, T. (May 1995). "Role of anticausal inverses in multirate filter banks — Part I: system theoretic fundamentals". IEEE Trans. Signal Process. 43 (5): 1090. doi:10.1109/78.382395. Bibcode: 1995ITSP...43.1090V. https://authors.library.caltech.edu/6832/1/VAIieeetsp95b.pdf.

External links

- ECE 209: Review of Circuits as LTI Systems – Short primer on the mathematical analysis of (electrical) LTI systems.

- ECE 209: Sources of Phase Shift – Gives an intuitive explanation of the source of phase shift in two common electrical LTI systems.

- JHU 520.214 Signals and Systems course notes. An encapsulated course on LTI system theory. Adequate for self teaching.

- LTI system example: RC low-pass filter. Amplitude and phase response.
