Loop-erased random walk

In mathematics, loop-erased random walk is a model for a random simple path with important applications in combinatorics, physics and quantum field theory. It is intimately connected to the uniform spanning tree, a model for a random tree. See also random walk for a more general treatment of this topic.

Definition

Assume G is some graph and [math]\displaystyle{ \gamma }[/math] is some path of length n on G. In other words, [math]\displaystyle{ \gamma(1),\dots,\gamma(n) }[/math] are vertices of G such that [math]\displaystyle{ \gamma(i) }[/math] and [math]\displaystyle{ \gamma(i+1) }[/math] are connected by an edge. Then the loop erasure of [math]\displaystyle{ \gamma }[/math] is a new simple path created by erasing all the loops of [math]\displaystyle{ \gamma }[/math] in chronological order. Formally, we define indices [math]\displaystyle{ i_j }[/math] inductively using

- [math]\displaystyle{ i_1 = 1\, }[/math]

- [math]\displaystyle{ i_{j+1}=\max\{k:\gamma(k)=\gamma(i_j)\}+1\, }[/math]

where "max" here means up to the length of the path [math]\displaystyle{ \gamma }[/math]. The induction stops when for some [math]\displaystyle{ i_j }[/math] we have [math]\displaystyle{ \gamma(i_j)=\gamma(n) }[/math].

In words, to find [math]\displaystyle{ i_{j+1} }[/math], we hold [math]\displaystyle{ \gamma(i_j) }[/math] in one hand, and with the other hand we trace back from the end: [math]\displaystyle{ \gamma(n), \gamma(n-1), \dots }[/math], until we first hit some [math]\displaystyle{ \gamma(k) = \gamma(i_j) }[/math], in which case we set [math]\displaystyle{ i_{j+1} = k+1 }[/math]. If the vertex is never revisited, we end up back at [math]\displaystyle{ \gamma(i_j) }[/math] itself, so that [math]\displaystyle{ k = i_j }[/math] and [math]\displaystyle{ i_{j+1} = i_j+1 }[/math].

Suppose the induction stops at J, i.e. [math]\displaystyle{ i_J }[/math] is the last index [math]\displaystyle{ i_j }[/math]. Then the loop erasure of [math]\displaystyle{ \gamma }[/math], denoted by [math]\displaystyle{ \mathrm{LE}(\gamma) }[/math], is a simple path of length J defined by

- [math]\displaystyle{ \mathrm{LE}(\gamma)(j)=\gamma(i_j).\, }[/math]
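The index recursion above translates almost line by line into code. The following Python sketch is only an illustration: paths are Python lists with 0-based indices (so `i = 0` plays the role of i_1 = 1), and the names `loop_erasure` and `chronological_loop_erasure` are hypothetical. The second function erases each loop as soon as it closes, which is the "chronological order" reading of the definition; the two agree on every path.

```python
def loop_erasure(path):
    """LE(path) via the recursion i_1 = 1, i_{j+1} = max{k : path(k) = path(i_j)} + 1
    (0-based indices here)."""
    if not path:
        return []
    # Index of the last visit to each vertex (later visits overwrite earlier ones).
    last_visit = {v: k for k, v in enumerate(path)}
    erased = []
    i = 0  # corresponds to i_1 = 1 in the 1-based text
    while True:
        erased.append(path[i])
        if path[i] == path[-1]:
            break  # gamma(i_J) = gamma(n): the induction stops
        i = last_visit[path[i]] + 1  # step just after the last visit
    return erased


def chronological_loop_erasure(path):
    """Scan the path once, erasing each loop the moment it closes."""
    erased, position = [], {}  # position[v] = index of v inside `erased`
    for v in path:
        if v in position:
            # v closes a loop: cut `erased` back to the earlier copy of v.
            for u in erased[position[v] + 1:]:
                del position[u]
            del erased[position[v] + 1:]
        else:
            position[v] = len(erased)
            erased.append(v)
    return erased
```

For instance, the path 1, 2, 3, 1, 2, 4, 2, 5 has loop erasure 1, 2, 5 under either function.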

Now let G be some graph, let v be a vertex of G, and let R be a random walk on G starting from v. Let T be some stopping time for R. Then the loop-erased random walk until time T is LE(R([1,T])). In other words, take R from its beginning until T, which gives a (random) path, and erase all the loops in chronological order as above; the result is a random simple path.

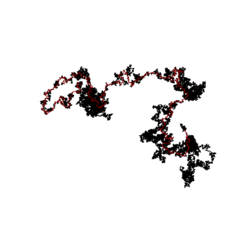

The stopping time T may be fixed, i.e. one may perform n steps and then loop-erase. However, it is usually more natural to take T to be the hitting time of some set. For example, let G be the graph [math]\displaystyle{ \mathbb{Z}^2 }[/math] and let R be a random walk starting from the point (0,0). Let T be the time when R first hits the circle of radius 100 (here, of course, a discretized circle). Then LE(R([1,T])) is called the loop-erased random walk starting at (0,0) and stopped at the circle.
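The circle example can be simulated directly. The following Python sketch assumes one particular discretization of the circle, namely the first lattice point with x^2 + y^2 >= r^2; the function name `lerw_to_circle` is hypothetical. Rather than storing R([1,T]) and loop-erasing afterwards, it erases each loop as soon as it closes, which yields the same path LE(R([1,T])).

```python
import random


def lerw_to_circle(radius, rng=random):
    """Loop-erased random walk on Z^2 from (0, 0), stopped at the first lattice
    point with x^2 + y^2 >= radius^2 (an assumed discretized circle)."""
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    x, y = 0, 0
    erased, position = [(0, 0)], {(0, 0): 0}  # current loop-erased path
    while x * x + y * y < radius * radius:
        dx, dy = rng.choice(steps)  # one simple random walk step
        x, y = x + dx, y + dy
        v = (x, y)
        if v in position:
            # A loop closed at v: erase everything after the earlier visit.
            for u in erased[position[v] + 1:]:
                del position[u]
            del erased[position[v] + 1:]
        else:
            position[v] = len(erased)
            erased.append(v)
    return erased
```

Calling `lerw_to_circle(100)` corresponds to the radius used in the example; passing a seeded `random.Random` instance as `rng` makes runs reproducible.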

Uniform spanning tree

For any graph G, a spanning tree of G is a subgraph of G containing all vertices and some of the edges, which is a tree, i.e. connected and with no cycles. A spanning tree chosen randomly from among all possible spanning trees with equal probability is called a uniform spanning tree. There are typically exponentially many spanning trees (too many to generate them all and then choose one randomly); instead, uniform spanning trees can be generated more efficiently by an algorithm called Wilson's algorithm which uses loop-erased random walks.

The algorithm proceeds according to the following steps. First, construct a single-vertex tree T by choosing (arbitrarily) one vertex. Then, while the tree T constructed so far does not yet include all of the vertices of the graph, let v be an arbitrary vertex that is not in T, perform a loop-erased random walk from v until reaching a vertex in T, and add the resulting path to T. Repeating this process until all vertices are included produces a uniformly distributed tree, regardless of the arbitrary choices of vertices at each step.
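The steps above fit in a few lines of Python. This is a sketch under stated assumptions, not a reference implementation: the graph is a hypothetical adjacency dictionary `adj` of a finite connected graph, vertices are processed in dictionary order (the text allows any order), and instead of storing the whole walk it records, for each vertex, only the step taken after its last visit. By the definition of the indices in the loop erasure, following those last exits from the starting vertex traces exactly the loop-erased path.

```python
import random


def wilson_ust(adj, root=None, rng=random):
    """Sample a uniform spanning tree of a finite connected graph.

    adj maps each vertex to a list of its neighbours. The result maps each
    vertex to its parent, i.e. the next vertex on its tree path to the root
    (None for the root itself).
    """
    vertices = list(adj)
    if root is None:
        root = vertices[0]  # the single-vertex starting tree (arbitrary choice)
    in_tree = {root}
    parent = {root: None}
    for start in vertices:  # arbitrary order of starting vertices
        # Walk from `start` until the current tree is hit, overwriting the
        # exit step of each vertex so that only its last exit is kept.
        v = start
        next_step = {}
        while v not in in_tree:
            next_step[v] = rng.choice(adj[v])
            v = next_step[v]
        # Following the last exits from `start` traces the loop erasure of
        # the walk; graft that path onto the tree.
        v = start
        while v not in in_tree:
            parent[v] = next_step[v]
            in_tree.add(v)
            v = next_step[v]
    return parent
```

On a 4-cycle, for example, each of the four spanning trees should appear with frequency close to 1/4 over many samples.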

A connection in the other direction is also true. If v and w are two vertices in G then, in any spanning tree, they are connected by a unique path. Taking this path in the uniform spanning tree gives a random simple path. It turns out that the distribution of this path is identical to the distribution of the loop-erased random walk starting at v and stopped at w. This fact can be used to justify the correctness of Wilson's algorithm. Another corollary is that loop-erased random walk is symmetric in its start and end points. More precisely, the distribution of the loop-erased random walk starting at v and stopped at w is identical to the distribution of the reversal of loop-erased random walk starting at w and stopped at v. Loop-erasing a random walk and the reverse walk do not, in general, give the same result, but according to this result the distributions of the two loop-erased walks are identical.
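This identity can be checked numerically on a small graph. The Python sketch below is illustrative only, and all function names in it are hypothetical: it enumerates every spanning tree of a tiny graph by brute force to obtain the exact law of the tree path from v to w, and compares it with the empirical law of loop-erased random walk from v stopped at w. Brute-force enumeration is only feasible for a handful of edges.

```python
import itertools
import random
from collections import Counter


def _find(root, v):
    # Union-find lookup, used to detect cycles.
    while root[v] != v:
        v = root[v]
    return v


def spanning_trees(vertices, edges):
    """Yield every spanning tree as a tuple of edges (tiny graphs only)."""
    for subset in itertools.combinations(edges, len(vertices) - 1):
        root = {v: v for v in vertices}
        acyclic = True
        for a, b in subset:
            ra, rb = _find(root, a), _find(root, b)
            if ra == rb:
                acyclic = False
                break
            root[ra] = rb
        if acyclic:  # n - 1 edges and no cycle: a spanning tree
            yield subset


def tree_path(tree, v, w):
    """The unique path from v to w inside a tree given as a list of edges."""
    nbrs = {}
    for a, b in tree:
        nbrs.setdefault(a, []).append(b)
        nbrs.setdefault(b, []).append(a)
    prev, stack = {v: None}, [v]
    while stack:
        u = stack.pop()
        for x in nbrs.get(u, []):
            if x not in prev:
                prev[x] = u
                stack.append(x)
    path = [w]
    while path[-1] != v:
        path.append(prev[path[-1]])
    return tuple(reversed(path))


def lerw(adj, v, w, rng):
    """Loop-erased random walk from v stopped at w (loops erased as they close)."""
    erased, position = [v], {v: 0}
    u = v
    while u != w:
        u = rng.choice(adj[u])
        if u in position:
            for x in erased[position[u] + 1:]:
                del position[x]
            del erased[position[u] + 1:]
        else:
            position[u] = len(erased)
            erased.append(u)
    return tuple(erased)


def path_distributions(vertices, edges, v, w, samples=20000, seed=1):
    """Exact UST path law versus the empirical LERW law from v to w."""
    adj = {u: [] for u in vertices}
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    trees = list(spanning_trees(vertices, edges))
    ust = Counter(tree_path(t, v, w) for t in trees)
    rng = random.Random(seed)
    walks = Counter(lerw(adj, v, w, rng) for _ in range(samples))
    return ({p: c / len(trees) for p, c in ust.items()},
            {p: c / samples for p, c in walks.items()})
```

For a 4-cycle 0-1-2-3 with the chord 0-2 there are 8 spanning trees, and the tree path from 1 to 3 is 1-2-3 or 1-0-3 with probability 3/8 each and 1-0-2-3 or 1-2-0-3 with probability 1/8 each; the loop-erased walk frequencies should come out close to these values.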

The Laplacian random walk

Another representation of loop-erased random walk stems from solutions of the discrete Laplace equation. Let G again be a graph and let v and w be two vertices in G. Construct a random path from v to w inductively using the following procedure. Assume we have already defined [math]\displaystyle{ \gamma(1),...,\gamma(n) }[/math]. Let f be a function from the vertices of G to [math]\displaystyle{ \mathbb{R} }[/math] satisfying

- [math]\displaystyle{ f(\gamma(i))=0 }[/math] for all [math]\displaystyle{ i\leq n }[/math] and [math]\displaystyle{ f(w)=1 }[/math]

- f is discretely harmonic everywhere else

Here a function f on a graph is called discretely harmonic at a point x if f(x) equals the average of f over the neighbors of x.

With f defined, choose [math]\displaystyle{ \gamma(n+1) }[/math] using the values of f at the neighbors of [math]\displaystyle{ \gamma(n) }[/math] as weights. In other words, if [math]\displaystyle{ x_1,...,x_d }[/math] are these neighbors, choose [math]\displaystyle{ x_i }[/math] with probability

- [math]\displaystyle{ \frac{f(x_i)}{\sum_{j=1}^d f(x_j)}. }[/math]

Continuing this process, recalculating f at each step, results in a random simple path from v to w; the distribution of this path is identical to that of a loop-erased random walk from v to w.

An alternative view is that, conditioned on a loop-erased random walk starting with some path β, the rest of the walk has the same distribution as the loop-erasure of a random walk started at the endpoint of β and conditioned not to hit β. This property is often referred to as the Markov property of loop-erased random walk (though the relation to the usual Markov property is somewhat vague).
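A direct, deliberately unoptimized Python sketch of this construction follows. It assumes NumPy is available for the linear solve and a finite, connected, simple graph stored as a hypothetical adjacency dictionary `adj`; the name `laplacian_walk` is also hypothetical. It re-solves the full Dirichlet problem at every step exactly as described, which costs one linear solve per step.

```python
import random

import numpy as np


def laplacian_walk(adj, v, w, rng=random):
    """Laplacian random walk from v to w on a finite connected simple graph."""
    path = [v]
    while path[-1] != w:
        on_path = set(path)
        free = [x for x in adj if x not in on_path and x != w]
        index = {x: i for i, x in enumerate(free)}
        # Dirichlet problem: f = 0 on the path, f(w) = 1, and at every free
        # vertex x: deg(x) * f(x) - (sum of f over the neighbours of x) = 0.
        a = np.zeros((len(free), len(free)))
        b = np.zeros(len(free))
        for x, i in index.items():
            a[i, i] = len(adj[x])
            for y in adj[x]:
                if y in index:
                    a[i, index[y]] -= 1.0
                elif y == w:
                    b[i] += 1.0  # known boundary value f(w) = 1 moved to the right
        values = np.linalg.solve(a, b) if free else np.zeros(0)
        f = {x: float(values[i]) for x, i in index.items()}
        f[w] = 1.0
        # Next vertex: a neighbour of the current endpoint, weighted by f
        # (vertices already on the path have f = 0 and are never chosen).
        nbrs = adj[path[-1]]
        weights = [max(f.get(y, 0.0), 0.0) for y in nbrs]
        path.append(rng.choices(nbrs, weights=weights)[0])
    return path
```

As a small worked case, take a 4-cycle 0-1-2-3 with the chord 0-2, v = 1 and w = 3. At the first step f(0) = (0 + 1 + f(2))/3 and f(2) = (0 + 1 + f(0))/3, so f(0) = f(2) = 1/2 and the walk moves to 0 or 2 with probability 1/2 each.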

It is important to notice that while the proof of the equivalence is quite easy, models which involve dynamically changing harmonic functions or measures are typically extremely difficult to analyze. Practically nothing is known about the p-Laplacian walk or diffusion-limited aggregation. Another somewhat related model is the harmonic explorer.

Finally there is another link that should be mentioned: Kirchhoff's theorem relates the number of spanning trees of a graph G to the eigenvalues of the discrete Laplacian. See spanning tree for details.
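Kirchhoff's matrix-tree theorem states that the number of spanning trees equals any cofactor of the graph Laplacian L = D - A (delete one row and the matching column, then take the determinant), or equivalently 1/n times the product of the n - 1 nonzero eigenvalues of L for a connected graph on n vertices. The Python sketch below computes both; it assumes NumPy and a simple undirected graph given as a hypothetical adjacency dictionary, and the function name is hypothetical.

```python
import numpy as np


def count_spanning_trees(adj):
    """Count spanning trees of a finite graph with Kirchhoff's theorem.

    adj maps each vertex to a list of neighbours (simple undirected graph).
    Returns the count computed two ways: from a cofactor of the Laplacian
    and from its eigenvalues.
    """
    vertices = list(adj)
    index = {v: i for i, v in enumerate(vertices)}
    n = len(vertices)
    laplacian = np.zeros((n, n))
    for v, nbrs in adj.items():
        for u in nbrs:
            laplacian[index[v], index[v]] += 1.0  # degree on the diagonal
            laplacian[index[v], index[u]] -= 1.0  # -1 for each edge
    # Deleting one row and the matching column leaves a matrix whose
    # determinant is the number of spanning trees.
    by_cofactor = np.linalg.det(laplacian[1:, 1:])
    # Equivalently: product of the n - 1 largest eigenvalues divided by n
    # (the smallest eigenvalue is 0 for a connected graph).
    eigenvalues = np.linalg.eigvalsh(laplacian)  # ascending order
    by_spectrum = np.prod(eigenvalues[1:]) / n
    return int(round(float(by_cofactor))), int(round(float(by_spectrum)))
```

For the complete graph on four vertices both numbers come out as 16, in line with Cayley's formula n^(n-2), and for a 4-cycle both are 4.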

Grids

Let d be the dimension, which we will assume to be at least 2. Examine [math]\displaystyle{ \mathbb{Z}^d }[/math], i.e. all the points [math]\displaystyle{ (a_1,...,a_d) }[/math] with integer [math]\displaystyle{ a_i }[/math]. This is an infinite graph with degree 2d when each point is connected to its nearest neighbors. From now on we will consider loop-erased random walk on this graph or its subgraphs.

High dimensions

The easiest case to analyze is dimension 5 and above. In this case it turns out that the self-intersections of the walk are only local. A calculation shows that if one takes a random walk of length n, its loop-erasure has length of the same order of magnitude, i.e. n. Scaling accordingly, it turns out that loop-erased random walk converges (in an appropriate sense) to Brownian motion as n goes to infinity. Dimension 4 is more complicated, but the general picture is still true. It turns out that the loop-erasure of a random walk of length n has approximately [math]\displaystyle{ n/\log^{1/3}n }[/math] vertices, but again, after scaling (that takes into account the logarithmic factor) the loop-erased walk converges to Brownian motion.
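The contrast between dimensions can be seen in a quick Monte Carlo experiment. The Python sketch below (hypothetical function names, fixed seeds, illustrative only) loop-erases an n-step simple random walk on Z^d and reports the average of |LE|/n; such an experiment illustrates, but of course does not prove, the claims above. In dimension 5 the ratio should stay of order one as n grows, while in dimension 2 it should visibly shrink.

```python
import random


def loop_erased_length(n, d, rng=random):
    """Number of vertices in the loop erasure of an n-step simple random
    walk on Z^d started at the origin (loops erased as they close)."""
    point = (0,) * d
    erased, position = [point], {point: 0}
    for _ in range(n):
        axis = rng.randrange(d)     # pick a coordinate direction
        step = rng.choice((-1, 1))  # and a sign: one of the 2d neighbours
        point = point[:axis] + (point[axis] + step,) + point[axis + 1:]
        if point in position:
            # A loop closed at `point`: erase it.
            for u in erased[position[point] + 1:]:
                del position[u]
            del erased[position[point] + 1:]
        else:
            position[point] = len(erased)
            erased.append(point)
    return len(erased)


def mean_ratio(n, d, trials=10, seed=0):
    """Average of |LE| / n over independent trials."""
    rng = random.Random(seed)
    return sum(loop_erased_length(n, d, rng) for _ in range(trials)) / (trials * n)
```

Comparing `mean_ratio(3000, 5)` with `mean_ratio(3000, 2)` should show a clearly larger ratio in dimension 5.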

Two dimensions

In two dimensions, arguments from conformal field theory and simulation results led to a number of exciting conjectures. Assume D is some simply connected domain in the plane and x is a point in D. Take the graph G to be

- [math]\displaystyle{ G:=D\cap \varepsilon \mathbb{Z}^2, }[/math]

that is, a grid of side length ε restricted to D. Let v be the vertex of G closest to x. Examine now a loop-erased random walk starting from v and stopped when hitting the "boundary" of G, i.e. the vertices of G which correspond to the boundary of D. Then the conjectures are

- As ε goes to zero the distribution of the path converges to some distribution on simple paths from x to the boundary of D (different from Brownian motion, of course — in 2 dimensions paths of Brownian motion are not simple). This distribution (denote it by [math]\displaystyle{ S_{D,x} }[/math]) is called the scaling limit of loop-erased random walk.

- These distributions are conformally invariant. Namely, if φ is a Riemann map between D and a second domain E then

- [math]\displaystyle{ \phi(S_{D,x})=S_{E,\phi(x)}.\, }[/math]

- The Hausdorff dimension of these paths is 5/4 almost surely.

The first attack on these conjectures came from the direction of domino tilings. Taking a spanning tree of G and adding to it its planar dual, one gets a domino tiling of a special derived graph (call it H). Each vertex of H corresponds to a vertex, edge or face of G, and the edges of H show which vertex lies on which edge and which edge on which face. It turns out that taking a uniform spanning tree of G leads to a uniformly distributed random domino tiling of H. The number of domino tilings of a graph can be calculated using the determinant of special matrices, which allows one to connect it to the discrete Green function, which is approximately conformally invariant. These arguments made it possible to show that certain quantities of loop-erased random walk are (in the limit) conformally invariant, and that the expected number of vertices in a loop-erased random walk stopped at a circle of radius r is of the order of [math]\displaystyle{ r^{5/4} }[/math].[1]
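The r^(5/4) growth can be probed by simulation as well. The following Python sketch is only a rough illustration: it estimates L(r), the mean number of vertices of the loop-erased walk from the origin until it first reaches distance r, for a few small radii, and fits the slope of log L(r) against log r by least squares. The stopping rule (first lattice point at Euclidean distance at least r), the sample sizes and the function names are assumptions. For such small radii finite-size corrections are significant, so the fitted slope should only be expected to land in the rough vicinity of 5/4; setting d=3 gives a similarly crude estimate for the three-dimensional case discussed below.

```python
import math
import random


def lerw_exit_length(radius, d, rng):
    """Vertices of the LERW on Z^d from the origin, stopped at the first
    lattice point at Euclidean distance >= radius."""
    point = (0,) * d
    erased, position = [point], {point: 0}
    while sum(c * c for c in point) < radius * radius:
        axis = rng.randrange(d)
        point = point[:axis] + (point[axis] + rng.choice((-1, 1)),) + point[axis + 1:]
        if point in position:
            # A loop closed at `point`: erase it.
            for u in erased[position[point] + 1:]:
                del position[u]
            del erased[position[point] + 1:]
        else:
            position[point] = len(erased)
            erased.append(point)
    return len(erased)


def growth_exponent(radii, d=2, samples=200, seed=0):
    """Monte Carlo estimates of L(r) and the least-squares slope of
    log L(r) against log r."""
    rng = random.Random(seed)
    means = [sum(lerw_exit_length(r, d, rng) for _ in range(samples)) / samples
             for r in radii]
    xs = [math.log(r) for r in radii]
    ys = [math.log(m) for m in means]
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return means, numerator / denominator
```

Larger radii and more samples tighten the estimate, at a cost that grows roughly like r^2 per sample.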

In 2002 these conjectures were resolved (positively) using Stochastic Löwner Evolution. Very roughly, it is a stochastic, conformally invariant ordinary differential equation which makes it possible to capture the Markov property of loop-erased random walk (and of many other probabilistic processes).

Three dimensions

The scaling limit exists and is invariant under rotations and dilations.[2] If [math]\displaystyle{ L(r) }[/math] denotes the expected number of vertices in the loop-erased random walk until it gets to a distance of r, then

- [math]\displaystyle{ cr^{1+\varepsilon}\leq L(r)\leq Cr^{5/3}\, }[/math]

where ε, c and C are some positive numbers[3] (the numbers can, in principle, be calculated from the proofs, but the cited work does not do so). This suggests that the scaling limit should have Hausdorff dimension between [math]\displaystyle{ 1+\varepsilon }[/math] and 5/3 almost surely. Numerical experiments show that it should be [math]\displaystyle{ 1.62400\pm 0.00005 }[/math].[4]

Notes

References

- "The asymptotic determinant of the discrete Laplacian", Acta Mathematica 185 (2): 239–286, 2000, doi:10.1007/BF02392811

- "Conformal invariance of domino tiling", Annals of Probability 28 (2): 759–795, April 2000, doi:10.1214/aop/1019160260

- "Long-range properties of spanning trees", Journal of Mathematical Physics 41 (3): 1338–1363, March 2000, doi:10.1063/1.533190, Bibcode: 2000JMP....41.1338K, http://www.math.ubc.ca/~kenyon/papers/long.ps.Z

- Kozma, Gady (2007), "The scaling limit of loop-erased random walk in three dimensions", Acta Mathematica 199 (1): 29–152, doi:10.1007/s11511-007-0018-8

- "A self-avoiding random walk", Duke Mathematical Journal 47 (3): 655–693, September 1980, doi:10.1215/S0012-7094-80-04741-9

- "The logarithmic correction for loop-erased random walk in four dimensions", Proceedings of the Conference in Honor of Jean-Pierre Kahane (Orsay, 1993). Special issue of the Journal of Fourier Analysis and Applications, pp. 347–362, ISBN 9780429332838

- Bramson, Maury; Durrett, Richard T., eds. (1999), "Loop-erased random walk", Perplexing problems in probability: Festschrift in honor of Harry Kesten, Progress in Probability, 44, Birkhäuser, Boston, MA, pp. 197–217, doi:10.1007/978-1-4612-2168-5, ISBN 978-1-4612-7442-1

- "Conformal invariance of planar loop-erased random walks and uniform spanning trees", Annals of Probability 32 (1B): 939–995, 2004, doi:10.1214/aop/1079021469

- Pemantle, Robin (1991), "Choosing a spanning tree for the integer lattice uniformly", Annals of Probability 19 (4): 1559–1574, doi:10.1214/aop/1176990223

- "Scaling limits of loop-erased random walks and uniform spanning trees", Israel Journal of Mathematics 118: 221–288, 2000, doi:10.1007/BF02803524

- Wilson, David Bruce (1996), "Generating random spanning trees more quickly than the cover time", STOC '96: Proceedings of the Twenty-Eighth Annual ACM Symposium on the Theory of Computing (Philadelphia, PA, 1996), Association for Computing Machinery, New York, pp. 296–303, doi:10.1145/237814.237880

- Wilson, David Bruce (2010), "The dimension of loop-erased random walk in three dimensions", Physical Review E 82 (6): 062102, doi:10.1103/PhysRevE.82.062102, PMID 21230692, Bibcode: 2010PhRvE..82f2102W
