Cartesian tensor

In geometry and linear algebra, a Cartesian tensor uses an orthonormal basis to represent a tensor in a Euclidean space in the form of components. Converting a tensor's components from one such basis to another is done through an orthogonal transformation.

The most familiar coordinate systems are the two-dimensional and three-dimensional Cartesian coordinate systems. Cartesian tensors may be used with any Euclidean space, or more technically, any finite-dimensional vector space over the field of real numbers that has an inner product.

Use of Cartesian tensors occurs in physics and engineering, such as with the Cauchy stress tensor and the moment of inertia tensor in rigid body dynamics. Sometimes general curvilinear coordinates are convenient, as in high-deformation continuum mechanics, or even necessary, as in general relativity. While orthonormal bases may be found for some such coordinate systems (e.g. tangent to spherical coordinates), Cartesian tensors may provide considerable simplification for applications in which rotations of rectilinear coordinate axes suffice. The transformation is a passive transformation, since the coordinates are changed and not the physical system.

Vectors in three dimensions

In 3D Euclidean space, [math]\displaystyle{ \R^3 }[/math], the standard basis is ex, ey, ez. The basis vectors point along the x-, y-, and z-axes respectively, so they are mutually perpendicular, and they are all unit vectors (normalized), so the basis is orthonormal.

Throughout, when referring to Cartesian coordinates in three dimensions, a right-handed system is assumed; this is much more common in practice than a left-handed system. See orientation (vector space) for details.

For Cartesian tensors of order 1, a Cartesian vector a can be written algebraically as a linear combination of the basis vectors ex, ey, ez:

[math]\displaystyle{ \mathbf{a} = a_\text{x}\mathbf{e}_\text{x} + a_\text{y}\mathbf{e}_\text{y} + a_\text{z}\mathbf{e}_\text{z} }[/math]

where the coordinates of the vector with respect to the Cartesian basis are denoted ax, ay, az. It is common and helpful to display the basis vectors as column vectors

[math]\displaystyle{ \mathbf{e}_\text{x} = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} \,,\quad \mathbf{e}_\text{y} = \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix} \,,\quad \mathbf{e}_\text{z} = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix} }[/math]

when we have a coordinate vector in a column vector representation:

[math]\displaystyle{ \mathbf{a} = \begin{pmatrix} a_\text{x} \\ a_\text{y} \\ a_\text{z} \end{pmatrix} }[/math]

A row vector representation is also legitimate, although in the context of general curvilinear coordinate systems the row and column vector representations are used separately for specific reasons – see Einstein notation and covariance and contravariance of vectors for why.

The term "component" of a vector is ambiguous: it could refer to:

- a specific coordinate of the vector such as az (a scalar), and similarly for x and y, or

- the coordinate scalar-multiplying the corresponding basis vector, in which case the "y-component" of a is ayey (a vector), and similarly for x and z.

A more general notation is tensor index notation, which has the flexibility of numerical values rather than fixed coordinate labels. The Cartesian labels are replaced by tensor indices in the basis vectors ex ↦ e1, ey ↦ e2, ez ↦ e3 and coordinates ax ↦ a1, ay ↦ a2, az ↦ a3. In general, the notation e1, e2, e3 refers to any basis, and a1, a2, a3 refers to the corresponding coordinates, although here they are restricted to the Cartesian system. Then:

[math]\displaystyle{ \mathbf{a} = a_1\mathbf{e}_1 + a_2\mathbf{e}_2 + a_3\mathbf{e}_3 = \sum_{i=1}^3 a_i\mathbf{e}_i }[/math]

It is standard to use the Einstein notation, in which the summation sign over an index that appears exactly twice within a term is suppressed for conciseness:

[math]\displaystyle{ \mathbf{a} = \sum_{i=1}^3 a_i\mathbf{e}_i \equiv a_i\mathbf{e}_i }[/math]

An advantage of index notation over coordinate-specific notations is its independence from the dimension of the underlying vector space: the expression on the right-hand side takes the same form in higher dimensions (see below). Previously, the Cartesian labels x, y, z were just labels, not indices. (It is informal to say "i = x, y, z".)
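The dimension independence of a = a_i e_i can be illustrated with a minimal sketch, assuming vectors are stored as plain Python lists of floats. The helper names `standard_basis` and `combine` are hypothetical, chosen for illustration; the same summation code runs unchanged for any n.

```python
def standard_basis(n):
    """Return the n standard basis vectors e_1, ..., e_n as lists."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def combine(coords, basis):
    """Compute a = a_i e_i, summing over the repeated index i."""
    n = len(basis[0])
    result = [0.0] * n
    for a_i, e_i in zip(coords, basis):
        for k in range(n):
            result[k] += a_i * e_i[k]
    return result


# The same expression works in 3 dimensions...
a3 = combine([2.0, -1.0, 5.0], standard_basis(3))
# ...and in 5 dimensions, with no change to the code.
a5 = combine([1.0, 2.0, 3.0, 4.0, 5.0], standard_basis(5))
print(a3, a5)
```

In the standard basis the reassembled vector simply reproduces its coordinates, which is what the orthonormal Cartesian basis is designed to do.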

Second-order tensors in three dimensions

A dyadic tensor T is an order-2 tensor formed by the tensor product ⊗ of two Cartesian vectors a and b, written T = a ⊗ b. Analogous to vectors, it can be written as a linear combination of the tensor basis ex ⊗ ex ≡ exx, ex ⊗ ey ≡ exy, ..., ez ⊗ ez ≡ ezz (the right-hand side of each identity is only an abbreviation, nothing more):

[math]\displaystyle{ \begin{align} \mathbf{T} =\quad &\left(a_\text{x}\mathbf{e}_\text{x} + a_\text{y}\mathbf{e}_\text{y} + a_\text{z}\mathbf{e}_\text{z}\right)\otimes\left(b_\text{x}\mathbf{e}_\text{x} + b_\text{y}\mathbf{e}_\text{y} + b_\text{z}\mathbf{e}_\text{z}\right) \\[5pt] {}=\quad &a_\text{x} b_\text{x} \mathbf{e}_\text{x} \otimes \mathbf{e}_\text{x} + a_\text{x} b_\text{y}\mathbf{e}_\text{x} \otimes \mathbf{e}_\text{y} + a_\text{x} b_\text{z}\mathbf{e}_\text{x} \otimes \mathbf{e}_\text{z} \\[4pt] {}+{} &a_\text{y} b_\text{x}\mathbf{e}_\text{y} \otimes \mathbf{e}_\text{x} + a_\text{y} b_\text{y}\mathbf{e}_\text{y} \otimes \mathbf{e}_\text{y} + a_\text{y} b_\text{z}\mathbf{e}_\text{y} \otimes \mathbf{e}_\text{z} \\[4pt] {}+{} &a_\text{z} b_\text{x} \mathbf{e}_\text{z} \otimes \mathbf{e}_\text{x} + a_\text{z} b_\text{y}\mathbf{e}_\text{z} \otimes \mathbf{e}_\text{y} + a_\text{z} b_\text{z}\mathbf{e}_\text{z} \otimes \mathbf{e}_\text{z} \end{align} }[/math]

Representing each basis tensor as a matrix:

[math]\displaystyle{ \begin{align} \mathbf{e}_\text{x} \otimes \mathbf{e}_\text{x} &\equiv \mathbf{e}_\text{xx} = \begin{pmatrix} 1 & 0 & 0\\ 0 & 0 & 0\\ 0 & 0 & 0 \end{pmatrix}\,,& \mathbf{e}_\text{x} \otimes \mathbf{e}_\text{y} &\equiv \mathbf{e}_\text{xy} = \begin{pmatrix} 0 & 1 & 0\\ 0 & 0 & 0\\ 0 & 0 & 0 \end{pmatrix}\,,& \mathbf{e}_\text{z} \otimes \mathbf{e}_\text{z} &\equiv \mathbf{e}_\text{zz} = \begin{pmatrix} 0 & 0 & 0\\ 0 & 0 & 0\\ 0 & 0 & 1 \end{pmatrix} \end{align} }[/math]

then T can be represented more systematically as a matrix:

[math]\displaystyle{ \mathbf{T} = \begin{pmatrix} a_\text{x} b_\text{x} & a_\text{x} b_\text{y} & a_\text{x} b_\text{z} \\ a_\text{y} b_\text{x} & a_\text{y} b_\text{y} & a_\text{y} b_\text{z} \\ a_\text{z} b_\text{x} & a_\text{z} b_\text{y} & a_\text{z} b_\text{z} \end{pmatrix} }[/math]

See matrix multiplication for the notational correspondence between matrices and the dot and tensor products.
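The matrix of a dyadic T = a ⊗ b has entries T_ij = a_i b_j. A short sketch, with the function name `outer` as an illustrative choice, builds it from two component lists; swapping the factors gives the transpose, so a ⊗ b and b ⊗ a differ in general.

```python
def outer(a, b):
    """Components T_ij = a_i b_j of the dyadic tensor a (x) b."""
    return [[a_i * b_j for b_j in b] for a_i in a]


a = [1.0, 2.0, 3.0]
b = [4.0, 5.0, 6.0]
T = outer(a, b)
for row in T:
    print(row)
# [4.0, 5.0, 6.0]
# [8.0, 10.0, 12.0]
# [12.0, 15.0, 18.0]
```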

More generally, whether or not T is a tensor product of two vectors, it is always a linear combination of the basis tensors with coordinates Txx, Txy, ..., Tzz:

[math]\displaystyle{ \begin{align} \mathbf{T} =\quad &T_\text{xx}\mathbf{e}_\text{xx} + T_\text{xy}\mathbf{e}_\text{xy} + T_\text{xz}\mathbf{e}_\text{xz} \\[4pt] {}+{} &T_\text{yx}\mathbf{e}_\text{yx} + T_\text{yy}\mathbf{e}_\text{yy} + T_\text{yz}\mathbf{e}_\text{yz} \\[4pt] {}+{} &T_\text{zx}\mathbf{e}_\text{zx} + T_\text{zy}\mathbf{e}_\text{zy} + T_\text{zz}\mathbf{e}_\text{zz} \end{align} }[/math]

while in terms of tensor indices:

[math]\displaystyle{ \mathbf{T} = T_{ij} \mathbf{e}_{ij} \equiv \sum_{ij} T_{ij} \mathbf{e}_i \otimes \mathbf{e}_j \,, }[/math]

and in matrix form:

[math]\displaystyle{ \mathbf{T} = \begin{pmatrix} T_\text{xx} & T_\text{xy} & T_\text{xz} \\ T_\text{yx} & T_\text{yy} & T_\text{yz} \\ T_\text{zx} & T_\text{zy} & T_\text{zz} \end{pmatrix} }[/math]

Second-order tensors occur naturally in physics and engineering when physical quantities have directional dependence in the system, often in a "stimulus-response" way. This can be seen mathematically through one aspect of tensors: they are multilinear functions. A second-order tensor T that takes in a vector u of some magnitude and direction returns a vector v, which in general has a different magnitude and a different direction from u. The notation used for functions in mathematical analysis leads us to write v = T(u),[1] while the same idea can be expressed in matrix and index notations[2] (including the summation convention), respectively:

[math]\displaystyle{ \begin{align} \begin{pmatrix} v_\text{x} \\ v_\text{y} \\ v_\text{z} \end{pmatrix} &= \begin{pmatrix} T_\text{xx} & T_\text{xy} & T_\text{xz} \\ T_\text{yx} & T_\text{yy} & T_\text{yz} \\ T_\text{zx} & T_\text{zy} & T_\text{zz} \end{pmatrix}\begin{pmatrix} u_\text{x} \\ u_\text{y} \\ u_\text{z} \end{pmatrix}\,, & v_i &= T_{ij}u_j \end{align} }[/math]

By "linear", if u = ρr + σs for two scalars ρ and σ and vectors r and s, then in function and index notations:

[math]\displaystyle{ \begin{align} \mathbf{v} &=&& \mathbf{T}(\rho\mathbf{r} + \sigma\mathbf{s}) &=&& \rho\mathbf{T}(\mathbf{r}) + \sigma\mathbf{T}(\mathbf{s}) \\[1ex] v_i &=&& T_{ij}(\rho r_j + \sigma s_j) &=&& \rho T_{ij} r_j + \sigma T_{ij} s_j \end{align} }[/math]

and similarly for the matrix notation. The function, matrix, and index notations all mean the same thing. The matrix forms provide a clear display of the components, while the index form allows easier tensor-algebraic manipulation of the formulae in a compact manner. Both provide the physical interpretation of directions; vectors have one direction, while second-order tensors connect two directions together. One can associate a tensor index or coordinate label with a basis vector direction.
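The action v_i = T_ij u_j and its linearity can be checked with a small sketch. The tensor components, vectors, and scalars below are arbitrary illustrative values, and `apply_tensor` is a hypothetical helper name.

```python
def apply_tensor(T, u):
    """v_i = T_ij u_j: contract the second index of T with u."""
    return [sum(T_ij * u_j for T_ij, u_j in zip(row, u)) for row in T]


T = [[2.0, 1.0, 0.0],
     [0.0, 3.0, -1.0],
     [1.0, 0.0, 4.0]]
r = [1.0, 0.0, 2.0]
s = [0.0, 1.0, -1.0]
rho, sigma = 3.0, -2.0

# Left side: T(rho r + sigma s)
u = [rho * r_j + sigma * s_j for r_j, s_j in zip(r, s)]
lhs = apply_tensor(T, u)
# Right side: rho T(r) + sigma T(s)
rhs = [rho * x + sigma * y for x, y in zip(apply_tensor(T, r), apply_tensor(T, s))]
print(lhs, rhs)  # [4.0, -14.0, 35.0] [4.0, -14.0, 35.0]
```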

Second-order tensors are the minimum needed to describe changes in the magnitudes and directions of vectors. The dot product of two vectors is always a scalar, while the cross product of two vectors is always a pseudovector perpendicular to the plane defined by the vectors, so these products of vectors alone cannot produce a new vector of any magnitude in any direction (see also below for more on the dot and cross products). The tensor product of two vectors is a second-order tensor, although this has no obvious directional interpretation by itself.

The previous idea can be continued: if T takes in two vectors p and q, it will return a scalar r. In function notation we write r = T(p, q), while in matrix and index notations (including the summation convention) respectively:

[math]\displaystyle{ r = \begin{pmatrix} p_\text{x} & p_\text{y} & p_\text{z} \end{pmatrix}\begin{pmatrix} T_\text{xx} & T_\text{xy} & T_\text{xz} \\ T_\text{yx} & T_\text{yy} & T_\text{yz} \\ T_\text{zx} & T_\text{zy} & T_\text{zz} \end{pmatrix}\begin{pmatrix} q_\text{x} \\ q_\text{y} \\ q_\text{z} \end{pmatrix} = p_i T_{ij} q_j }[/math]

The tensor T is linear in both input vectors. When vectors and tensors are written without reference to components, and indices are not used, sometimes a dot ⋅ is placed where summations over indices (known as tensor contractions) are taken. For the above cases:[1][2]

[math]\displaystyle{ \begin{align} \mathbf{v} &= \mathbf{T}\cdot\mathbf{u}\\ r &= \mathbf{p}\cdot\mathbf{T}\cdot\mathbf{q} \end{align} }[/math]

motivated by the dot product notation:

[math]\displaystyle{ \mathbf{a}\cdot\mathbf{b} \equiv a_i b_i }[/math]

More generally, a tensor of order m which takes in n vectors (where n is between 0 and m inclusive) will return a tensor of order m − n; see Tensor § As multilinear maps for further generalizations and details. The concepts above also apply to pseudovectors in the same way as for vectors. The vectors and tensors themselves can vary throughout space, in which case we have vector fields and tensor fields, and can also depend on time.

Following are some examples:

| An applied or given... | ...to a material or object of... | ...results in... | ...in the material or object, given by: |
|---|---|---|---|
| unit vector n | Cauchy stress tensor σ | a traction force t | [math]\displaystyle{ \mathbf{t} = \boldsymbol{\sigma}\cdot\mathbf{n} }[/math] |
| angular velocity ω | moment of inertia I | an angular momentum J | [math]\displaystyle{ \mathbf{J} = \mathbf{I}\cdot\boldsymbol{\omega} }[/math] |
| angular velocity ω | moment of inertia I | a rotational kinetic energy T | [math]\displaystyle{ T = \tfrac{1}{2}\boldsymbol{\omega}\cdot\mathbf{I}\cdot\boldsymbol{\omega} }[/math] |
| electric field E | electrical conductivity σ | a current density flow J | [math]\displaystyle{ \mathbf{J}=\boldsymbol{\sigma}\cdot\mathbf{E} }[/math] |
| electric field E | polarizability α (related to the permittivity ε and electric susceptibility χE) | an induced polarization field P | [math]\displaystyle{ \mathbf{P}=\boldsymbol{\alpha}\cdot\mathbf{E} }[/math] |
| magnetic H field | magnetic permeability μ | a magnetic B field | [math]\displaystyle{ \mathbf{B}=\boldsymbol{\mu}\cdot\mathbf{H} }[/math] |

For the electrical conduction example, the index and matrix notations would be:

[math]\displaystyle{ \begin{align} J_i &= \sigma_{ij}E_j \equiv \sum_{j} \sigma_{ij}E_j \\ \begin{pmatrix} J_\text{x} \\ J_\text{y} \\ J_\text{z} \end{pmatrix} &= \begin{pmatrix} \sigma_\text{xx} & \sigma_\text{xy} & \sigma_\text{xz} \\ \sigma_\text{yx} & \sigma_\text{yy} & \sigma_\text{yz} \\ \sigma_\text{zx} & \sigma_\text{zy} & \sigma_\text{zz} \end{pmatrix} \begin{pmatrix} E_\text{x} \\ E_\text{y} \\ E_\text{z} \end{pmatrix} \end{align} }[/math]

while for the rotational kinetic energy T:

[math]\displaystyle{ \begin{align} T &= \frac{1}{2} \omega_i I_{ij} \omega_j \equiv \frac{1}{2} \sum_{ij} \omega_i I_{ij} \omega_j \,, \\ &= \frac{1}{2} \begin{pmatrix} \omega_\text{x} & \omega_\text{y} & \omega_\text{z} \end{pmatrix} \begin{pmatrix} I_\text{xx} & I_\text{xy} & I_\text{xz} \\ I_\text{yx} & I_\text{yy} & I_\text{yz} \\ I_\text{zx} & I_\text{zy} & I_\text{zz} \end{pmatrix} \begin{pmatrix} \omega_\text{x} \\ \omega_\text{y} \\ \omega_\text{z} \end{pmatrix} \,. \end{align} }[/math]

See also constitutive equation for more specialized examples.
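To make the index forms of the conduction and kinetic-energy examples concrete, here is a sketch that evaluates J_i = σ_ij E_j and T = ½ ω_i I_ij ω_j by explicit loops over the summed indices. The conductivity, inertia, field, and angular-velocity values are made-up illustrative numbers, not physical data.

```python
# Illustrative (made-up) anisotropic conductivity and applied field.
sigma = [[2.0, 0.5, 0.0],
         [0.5, 1.0, 0.0],
         [0.0, 0.0, 3.0]]
E = [1.0, 2.0, 0.5]

# J_i = sigma_ij E_j (sum over j); J is in general not parallel to E.
J = [sum(sigma[i][j] * E[j] for j in range(3)) for i in range(3)]

# Illustrative (made-up) inertia tensor and angular velocity.
inertia = [[4.0, 0.0, 0.0],
           [0.0, 2.0, 0.0],
           [0.0, 0.0, 1.0]]
omega = [1.0, 2.0, 3.0]

# T = (1/2) omega_i I_ij omega_j (sum over i and j)
T_rot = 0.5 * sum(omega[i] * inertia[i][j] * omega[j]
                  for i in range(3) for j in range(3))
print(J, T_rot)  # [3.0, 2.5, 1.5] 10.5
```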

Vectors and tensors in n dimensions

In n-dimensional Euclidean space over the real numbers, [math]\displaystyle{ \mathbb{R}^n }[/math], the standard basis is denoted e1, e2, e3, ... en. Each basis vector ei points along the positive xi axis, with the basis being orthonormal. Component j of ei is given by the Kronecker delta:

[math]\displaystyle{ (\mathbf{e}_i)_j = \delta_{ij} }[/math]

A vector in [math]\displaystyle{ \mathbb{R}^n }[/math] takes the form:

[math]\displaystyle{ \mathbf{a} = a_i\mathbf{e}_i \equiv \sum_i a_i\mathbf{e}_i \,. }[/math]

Similarly for the order-2 tensor above, for each vector a and b in [math]\displaystyle{ \mathbb{R}^n }[/math]:

[math]\displaystyle{ \mathbf{T} = a_i b_j \mathbf{e}_{ij} \equiv \sum_{ij} a_i b_j \mathbf{e}_i \otimes \mathbf{e}_j \,, }[/math]

or more generally:

[math]\displaystyle{ \mathbf{T} = T_{ij} \mathbf{e}_{ij} \equiv \sum_{ij} T_{ij} \mathbf{e}_i \otimes \mathbf{e}_j \,. }[/math]
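A sketch for arbitrary n, assuming the Kronecker delta is written as a small helper `delta` (a hypothetical name): it builds the standard basis from (e_i)_j = δ_ij and then reassembles an n × n tensor from T = T_ij e_i ⊗ e_j, recovering the original component array.

```python
def delta(i, j):
    """Kronecker delta: 1 if i == j, else 0."""
    return 1.0 if i == j else 0.0


def standard_basis(n):
    """Standard basis of R^n, with component j of e_i equal to delta_ij."""
    return [[delta(i, j) for j in range(n)] for i in range(n)]


def assemble(components):
    """Build T = sum_ij T_ij e_i (x) e_j as an n x n array of components."""
    n = len(components)
    e = standard_basis(n)
    T = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # Component (k, m) of e_i (x) e_j is (e_i)_k (e_j)_m.
            for k in range(n):
                for m in range(n):
                    T[k][m] += components[i][j] * e[i][k] * e[j][m]
    return T


comps = [[float(4 * i + j) for j in range(4)] for i in range(4)]  # n = 4
print(assemble(comps) == comps)  # True
```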

Transformations of Cartesian vectors (any number of dimensions)

Meaning of "invariance" under coordinate transformations

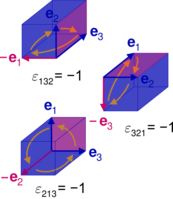

The position vector x in [math]\displaystyle{ \mathbb{R}^n }[/math] is a simple and common example of a vector, and can be represented in any coordinate system. Consider the case of rectangular coordinate systems with orthonormal bases only. It is possible to have a coordinate system with rectangular geometry in which the basis vectors are all mutually perpendicular but not normalized, in which case the basis is orthogonal but not orthonormal. However, orthonormal bases are easier to manipulate and are often used in practice. The following results are true for orthonormal bases, but not for bases that are merely orthogonal.

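In one rectangular coordinate system, x as a contravector has coordinates [math]\displaystyle{ x^i }[/math] and basis vectors [math]\displaystyle{ \mathbf{e}_i }[/math], while as a covector it has coordinates [math]\displaystyle{ x_i }[/math] and basis covectors [math]\displaystyle{ \mathbf{e}^i }[/math], and we have: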

[math]\displaystyle{ \begin{align} \mathbf{x} &= x^i\mathbf{e}_i\,, & \mathbf{x} &= x_i\mathbf{e}^i \end{align} }[/math]

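In another rectangular coordinate system, x as a contravector has coordinates [math]\displaystyle{ \bar{x}^i }[/math] and basis [math]\displaystyle{ \bar{\mathbf{e}}_i }[/math], while as a covector it has coordinates [math]\displaystyle{ \bar{x}_i }[/math] and basis [math]\displaystyle{ \bar{\mathbf{e}}^i }[/math], and we have: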

[math]\displaystyle{ \begin{align} \mathbf{x} &= \bar{x}^i\bar{\mathbf{e}}_i\,, & \mathbf{x} &= \bar{x}_i\bar{\mathbf{e}}^i \end{align} }[/math]

Each new coordinate is a function of all the old ones, and vice versa for the inverse function:

[math]\displaystyle{ \begin{align} \bar{x}{}^i = \bar{x}{}^i\left(x^1, x^2, \ldots\right) \quad &\rightleftharpoons \quad x{}^i = x{}^i\left(\bar{x}^1, \bar{x}^2, \ldots\right) \\ \bar{x}{}_i = \bar{x}{}_i\left(x_1, x_2, \ldots\right) \quad &\rightleftharpoons \quad x{}_i = x{}_i\left(\bar{x}_1, \bar{x}_2, \ldots\right) \end{align} }[/math]

and similarly each new basis vector is a function of all the old ones, and vice versa for the inverse function:

[math]\displaystyle{ \begin{align} \bar{\mathbf{e}}{}_j = \bar{\mathbf{e}}{}_j\left(\mathbf{e}_1, \mathbf{e}_2, \ldots\right) \quad &\rightleftharpoons \quad \mathbf{e}{}_j = \mathbf{e}{}_j \left(\bar{\mathbf{e}}_1, \bar{\mathbf{e}}_2, \ldots\right) \\ \bar{\mathbf{e}}{}^j = \bar{\mathbf{e}}{}^j\left(\mathbf{e}^1,\mathbf{e}^2, \ldots\right) \quad &\rightleftharpoons \quad \mathbf{e}{}^j = \mathbf{e}{}^j \left(\bar{\mathbf{e}}^1, \bar{\mathbf{e}}^2, \ldots\right) \end{align} }[/math]

for all i, j.

A vector is invariant under any change of basis, so if coordinates transform according to a transformation matrix L, the bases transform according to the matrix inverse L−1, and conversely if the coordinates transform according to inverse L−1, the bases transform according to the matrix L. The difference between each of these transformations is shown conventionally through the indices as superscripts for contravariance and subscripts for covariance, and the coordinates and bases are linearly transformed according to the following rules:

| Vector elements | Contravariant transformation law | Covariant transformation law |
|---|---|---|
| Coordinates | [math]\displaystyle{ \bar{x}^j = x^i (\boldsymbol{\mathsf{L}})_i{}^j = x^i \mathsf{L}_i{}^j }[/math] | [math]\displaystyle{ \bar{x}_j = x_k \left(\boldsymbol{\mathsf{L}}^{-1}\right)_j{}^k }[/math] |
| Basis | [math]\displaystyle{ \bar{\mathbf{e}}_j = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_j{}^k\mathbf{e}_k }[/math] | [math]\displaystyle{ \bar{\mathbf{e}}^j = (\boldsymbol{\mathsf{L}})_i{}^j \mathbf{e}^i = \mathsf{L}_i{}^j \mathbf{e}^i }[/math] |
| Any vector | [math]\displaystyle{ \bar{x}^j \bar{\mathbf{e}}_j = x^i \mathsf{L}_i{}^j \left(\boldsymbol{\mathsf{L}}^{-1}\right)_j{}^k \mathbf{e}_k = x^i \delta_i{}^k \mathbf{e}_k = x^i \mathbf{e}_i }[/math] | [math]\displaystyle{ \bar{x}_j \bar{\mathbf{e}}^j = x_i \left(\boldsymbol{\mathsf{L}}^{-1}\right)_j{}^i \mathsf{L}_k{}^j \mathbf{e}^k = x_i \delta^i{}_k \mathbf{e}^k = x_i \mathbf{e}^i }[/math] |

where [math]\displaystyle{ \mathsf{L}_i{}^j }[/math] represents the entries of the transformation matrix (row number i, column number j) and [math]\displaystyle{ \left(\boldsymbol{\mathsf{L}}^{-1}\right)_i{}^k }[/math] denotes the entries of its inverse matrix.
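The bookkeeping in the table can be checked numerically. The sketch below assumes a 2 × 2 invertible and deliberately non-orthogonal matrix, with `L[i][j]` standing for the entry in row i and column j, as above. It transforms the coordinates contravariantly, transforms the basis with the inverse matrix, and confirms that the reassembled vector is unchanged. The 2D setting, the particular matrix, and the helper `inverse_2x2` are illustrative choices.

```python
def inverse_2x2(L):
    """Inverse of a 2 x 2 matrix (assumes a nonzero determinant)."""
    (a, b), (c, d) = L
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


L = [[2.0, 1.0],
     [1.0, 1.0]]          # L[i][j] plays the role of L_i^j; det = 1
L_inv = inverse_2x2(L)

x = [3.0, -2.0]                      # contravariant coordinates x^i
e = [[1.0, 0.0], [0.0, 1.0]]         # old basis vectors e_i

# xbar^j = x^i L_i^j
x_bar = [sum(x[i] * L[i][j] for i in range(2)) for j in range(2)]
# ebar_j = (L^-1)_j^k e_k
e_bar = [[sum(L_inv[j][k] * e[k][m] for k in range(2)) for m in range(2)]
         for j in range(2)]

# Reassemble the vector in both bases: x^i e_i and xbar^j ebar_j.
old = [sum(x[i] * e[i][m] for i in range(2)) for m in range(2)]
new = [sum(x_bar[j] * e_bar[j][m] for j in range(2)) for m in range(2)]
print(old, new)  # [3.0, -2.0] [3.0, -2.0]
```

The coordinates change to [4.0, 1.0] and the basis vectors change as well, but the combination is invariant, as the last row of the table states.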

If L is an orthogonal transformation (orthogonal matrix), the objects transforming by it are defined as Cartesian tensors. This geometrically has the interpretation that a rectangular coordinate system is mapped to another rectangular coordinate system, in which the norm of the vector x is preserved (and distances are preserved).

The determinant of L is det(L) = ±1, which corresponds to two types of orthogonal transformation: (+1) for rotations and (−1) for improper rotations (including reflections).

This gives considerable algebraic simplifications, since by the definition of an orthogonal transformation the matrix transpose is the inverse:

[math]\displaystyle{ \boldsymbol{\mathsf{L}}^\textsf{T} = \boldsymbol{\mathsf{L}}^{-1} \Rightarrow \left(\boldsymbol{\mathsf{L}}^{-1}\right)_i{}^j = \left(\boldsymbol{\mathsf{L}}^\textsf{T}\right)_i{}^j = (\boldsymbol{\mathsf{L}})^j{}_i = \mathsf{L}^j{}_i }[/math]
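The defining property and the determinant condition can be checked numerically. This sketch uses a rotation about the z axis by an arbitrary angle of 0.3 rad and a reflection in the xy-plane, both illustrative choices. It verifies [math]\displaystyle{ \boldsymbol{\mathsf{L}}^\textsf{T}\boldsymbol{\mathsf{L}} = \boldsymbol{\mathsf{I}} }[/math] to rounding error and shows det(L) = +1 and −1, respectively. The helper functions are written out by hand for self-containment.

```python
import math


def matmul(A, B):
    """Matrix product of two nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def det3(A):
    """Determinant of a 3 x 3 matrix by cofactor expansion along row 0."""
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def is_identity(A, tol=1e-12):
    return all(abs(A[i][j] - (1.0 if i == j else 0.0)) < tol
               for i in range(len(A)) for j in range(len(A)))


theta = 0.3
c, s = math.cos(theta), math.sin(theta)
rotation = [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]
reflection = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]

for L in (rotation, reflection):
    # Prints whether L^T L = I, then det(L) rounded to 12 places.
    print(is_identity(matmul(transpose(L), L)), round(det3(L), 12))
```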

From the previous table, orthogonal transformations of covectors and contravectors are identical. There is no need to differentiate between raising and lowering indices, and in this context and in applications to physics and engineering the indices are usually all written as subscripts to avoid confusion with exponents. All indices will be lowered in the remainder of this article. One can determine the actual raised and lowered indices by considering which quantities are covectors or contravectors, and the relevant transformation rules.

Exactly the same transformation rules apply to any vector a, not only the position vector. If its components ai do not transform according to the rules, a is not a vector.

Despite the similarity between the expressions above for a change of coordinates, such as [math]\displaystyle{ \bar{x}_j = \mathsf{L}_{ij} x_i }[/math], and for the action of a tensor on a vector, such as [math]\displaystyle{ b_i = T_{ij} a_j }[/math], L is not a tensor, but T is. In the change of coordinates, L is a matrix used to relate two rectangular coordinate systems with orthonormal bases. For the tensor relating a vector to a vector, the vectors and tensors throughout the equation all belong to the same coordinate system and basis.

Derivatives and Jacobian matrix elements

The entries of L are partial derivatives of the new or old coordinates with respect to the old or new coordinates, respectively.

Differentiating [math]\displaystyle{ \bar{x}_i }[/math] with respect to [math]\displaystyle{ x_k }[/math]:

[math]\displaystyle{ \frac{\partial\bar{x}_i}{\partial x_k} = \frac{\partial}{\partial x_k}(x_j \mathsf{L}_{ji}) = \mathsf{L}_{ji}\frac{\partial x_j}{\partial x_k} = \delta_{kj}\mathsf{L}_{ji} = \mathsf{L}_{ki} }[/math]

so

[math]\displaystyle{ {\mathsf{L}_i}^j \equiv \mathsf{L}_{ij} = \frac{\partial\bar{x}_j}{\partial x_i} }[/math]

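is an element of the Jacobian matrix. There is a (partially mnemonic) correspondence between the index positions attached to L and those in the partial derivative: in both [math]\displaystyle{ \mathsf{L}_i{}^j }[/math] and [math]\displaystyle{ \partial\bar{x}^j/\partial x^i }[/math], the index i is in the lower position and j in the upper position, although for Cartesian tensors the indices can be lowered.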

Conversely, differentiating [math]\displaystyle{ x_j }[/math] with respect to [math]\displaystyle{ \bar{x}_k }[/math]:

[math]\displaystyle{ \frac{\partial x_j}{\partial\bar{x}_k} = \frac{\partial}{\partial\bar{x}_k} \left(\bar{x}_i\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij}\right) = \frac{\partial\bar{x}_i}{\partial\bar{x}_k}\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij} = \delta_{ki} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij} = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{kj} }[/math]

so

[math]\displaystyle{ \left(\boldsymbol{\mathsf{L}}^{-1}\right)_i{}^j \equiv \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij} = \frac{\partial x_j}{\partial\bar{x}_i} }[/math]

is an element of the inverse Jacobian matrix, with a similar index correspondence.
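Because the map from x to [math]\displaystyle{ \bar{\mathbf{x}} }[/math] is linear, its partial derivatives are constant, and a finite-difference estimate recovers the entries of L up to rounding error. The sketch assumes the index convention [math]\displaystyle{ \bar{x}_i = x_j \mathsf{L}_{ji} }[/math] used in the derivation above. The matrix entries, evaluation point, and step size are arbitrary illustrative choices.

```python
def transform(x, L):
    """xbar_i = x_j L_ji (sum over j), as in the derivation above."""
    n = len(x)
    return [sum(x[j] * L[j][i] for j in range(n)) for i in range(n)]


def jacobian(f, x, h=1e-6):
    """J[k][i] approximates d f_i / d x_k by central differences."""
    n = len(x)
    J = [[0.0] * n for _ in range(n)]
    for k in range(n):
        xp = list(x)
        xp[k] += h
        xm = list(x)
        xm[k] -= h
        fp, fm = f(xp), f(xm)
        for i in range(n):
            J[k][i] = (fp[i] - fm[i]) / (2 * h)
    return J


L = [[0.6, 0.8, 0.0],
     [-0.8, 0.6, 0.0],
     [0.0, 0.0, 1.0]]
J = jacobian(lambda x: transform(x, L), [1.0, 2.0, 3.0])
# Each J[k][i] should match L[k][i] = d xbar_i / d x_k.
print(all(abs(J[k][i] - L[k][i]) < 1e-8 for k in range(3) for i in range(3)))
```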

Many sources state transformations in terms of the partial derivatives:

[math]\displaystyle{ \begin{array}{c} \displaystyle \bar{x}_j = x_i\frac{\partial\bar{x}_j}{\partial x_i} \\[3pt] \upharpoonleft\downharpoonright \\[3pt] \displaystyle x_j = \bar{x}_i\frac{\partial x_j}{\partial\bar{x}_i} \end{array} }[/math]

and the explicit matrix equations in 3d are:

[math]\displaystyle{ \begin{align} \bar{\mathbf{x}} &= \boldsymbol{\mathsf{L}}\mathbf{x} \\ \begin{pmatrix} \bar{x}_1 \\ \bar{x}_2 \\ \bar{x}_3 \end{pmatrix} &= \begin{pmatrix} \frac{\partial\bar{x}_1}{\partial x_1} & \frac{\partial\bar{x}_1}{\partial x_2} & \frac{\partial\bar{x}_1}{\partial x_3}\\ \frac{\partial\bar{x}_2}{\partial x_1} & \frac{\partial\bar{x}_2}{\partial x_2} & \frac{\partial\bar{x}_2}{\partial x_3}\\ \frac{\partial\bar{x}_3}{\partial x_1} & \frac{\partial\bar{x}_3}{\partial x_2} & \frac{\partial\bar{x}_3}{\partial x_3} \end{pmatrix}\begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} \end{align} }[/math]

similarly for

[math]\displaystyle{ \mathbf{x} = \boldsymbol{\mathsf{L}}^{-1}\bar{\mathbf{x}} = \boldsymbol{\mathsf{L}}^\textsf{T}\bar{\mathbf{x}} }[/math]

Projections along coordinate axes

As with all linear transformations, L depends on the basis chosen. For two orthonormal bases

[math]\displaystyle{ \begin{align} \bar{\mathbf{e}}_i\cdot\bar{\mathbf{e}}_j &= \mathbf{e}_i\cdot\mathbf{e}_j = \delta_{ij}\,, & \left|\mathbf{e}_i\right| &= \left|\bar{\mathbf{e}}_i\right| = 1\,, \end{align} }[/math]

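- projecting x onto the [math]\displaystyle{ \bar{x} }[/math] axes: [math]\displaystyle{ \bar{x}_i=\bar{\mathbf{e}}_i\cdot\mathbf{x}=\bar{\mathbf{e}}_i\cdot x_j\mathbf{e}_j=x_j \mathsf{L}_{ij} \,, }[/math]

- projecting x onto the x axes: [math]\displaystyle{ x_i=\mathbf{e}_i\cdot\mathbf{x}=\mathbf{e}_i\cdot\bar{x}_j\bar{\mathbf{e}}_j=\bar{x}_j\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij} \,. }[/math]

Hence the components reduce to direction cosines between the [math]\displaystyle{ \bar{x}_i }[/math] and [math]\displaystyle{ x_j }[/math] axes: [math]\displaystyle{ \begin{align} \mathsf{L}_{ij} &= \bar{\mathbf{e}}_i\cdot\mathbf{e}_j = \cos\theta_{ij} \\ \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{ij} &= \mathbf{e}_i\cdot\bar{\mathbf{e}}_j = \cos\theta_{ji} \end{align} }[/math]

where [math]\displaystyle{ \theta_{ij} }[/math] is the angle between the [math]\displaystyle{ \bar{x}_i }[/math] and [math]\displaystyle{ x_j }[/math] axes, and [math]\displaystyle{ \theta_{ji} }[/math] is the angle between the [math]\displaystyle{ \bar{x}_j }[/math] and [math]\displaystyle{ x_i }[/math] axes. In general, [math]\displaystyle{ \theta_{ij} }[/math] is not equal to [math]\displaystyle{ \theta_{ji} }[/math]: for example, [math]\displaystyle{ \theta_{12} }[/math] is the angle between the [math]\displaystyle{ \bar{x}_1 }[/math] and [math]\displaystyle{ x_2 }[/math] axes, whereas [math]\displaystyle{ \theta_{21} }[/math] is the angle between the [math]\displaystyle{ \bar{x}_2 }[/math] and [math]\displaystyle{ x_1 }[/math] axes.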

The transformation of coordinates can be written:

[math]\displaystyle{ \begin{array}{c} \bar{x}_j = x_i \left(\bar{\mathbf{e}}_j\cdot\mathbf{e}_i \right) = x_i\cos\theta_{ji}\\[3pt] \upharpoonleft\downharpoonright\\[3pt] x_j = \bar{x}_i \left( \mathbf{e}_j\cdot\bar{\mathbf{e}}_i \right) = \bar{x}_i\cos\theta_{ij} \end{array} }[/math]

and the explicit matrix equations in 3d are:

[math]\displaystyle{ \begin{align} \bar{\mathbf{x}} &= \boldsymbol{\mathsf{L}}\mathbf{x} \\ \begin{pmatrix} \bar{x}_1\\ \bar{x}_2\\ \bar{x}_3 \end{pmatrix} &= \begin{pmatrix}\bar{\mathbf{e}}_1\cdot\mathbf{e}_1 & \bar{\mathbf{e}}_1\cdot\mathbf{e}_2 & \bar{\mathbf{e}}_1\cdot\mathbf{e}_3\\ \bar{\mathbf{e}}_2\cdot\mathbf{e}_1 & \bar{\mathbf{e}}_2\cdot\mathbf{e}_2 & \bar{\mathbf{e}}_2\cdot\mathbf{e}_3\\ \bar{\mathbf{e}}_3\cdot\mathbf{e}_1 & \bar{\mathbf{e}}_3\cdot\mathbf{e}_2 & \bar{\mathbf{e}}_3\cdot\mathbf{e}_3 \end{pmatrix}\begin{pmatrix}x_1\\ x_2\\ x_3 \end{pmatrix}=\begin{pmatrix}\cos\theta_{11} & \cos\theta_{12} & \cos\theta_{13}\\ \cos\theta_{21} & \cos\theta_{22} & \cos\theta_{23}\\ \cos\theta_{31} & \cos\theta_{32} & \cos\theta_{33} \end{pmatrix}\begin{pmatrix}x_1\\ x_2\\ x_3 \end{pmatrix} \end{align} }[/math]

similarly for

[math]\displaystyle{ \mathbf{x} = \boldsymbol{\mathsf{L}}^{-1}\bar{\mathbf{x}} = \boldsymbol{\mathsf{L}}^\textsf{T}\bar{\mathbf{x}} }[/math]

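The geometric interpretation is that each component [math]\displaystyle{ \bar{x}_i }[/math] equals the sum of the projections of the components [math]\displaystyle{ x_j }[/math] onto the [math]\displaystyle{ \bar{x}_i }[/math] axis.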

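The numbers [math]\displaystyle{ \mathbf{e}_i\cdot\mathbf{e}_j }[/math] arranged into a matrix form a symmetric matrix (a matrix equal to its own transpose) because the dot product is symmetric; in fact, this matrix is the metric tensor g. By contrast, [math]\displaystyle{ \bar{\mathbf{e}}_i\cdot\mathbf{e}_j }[/math] or [math]\displaystyle{ \mathbf{e}_i\cdot\bar{\mathbf{e}}_j }[/math] do not form symmetric matrices in general, as displayed above. Therefore, while the L matrices are orthogonal, they are not symmetric in general.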
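The direction-cosine construction can be sketched as follows, assuming the new basis is the old one rotated by an arbitrary illustrative angle about the [math]\displaystyle{ x_3 }[/math] axis. The sketch forms [math]\displaystyle{ \mathsf{L}_{ij} = \bar{\mathbf{e}}_i\cdot\mathbf{e}_j }[/math] and projects a vector onto the new axes with [math]\displaystyle{ \bar{\mathbf{x}} = \boldsymbol{\mathsf{L}}\mathbf{x} }[/math]. It then recovers x with the transpose and shows that L is orthogonal but not symmetric.

```python
import math


def dot(u, v):
    return sum(u_k * v_k for u_k, v_k in zip(u, v))


phi = 0.5  # illustrative rotation angle about the x3 axis, in radians
e = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
e_bar = [[math.cos(phi), math.sin(phi), 0.0],
         [-math.sin(phi), math.cos(phi), 0.0],
         [0.0, 0.0, 1.0]]

# Direction cosines L_ij = ebar_i . e_j = cos(theta_ij)
L = [[dot(e_bar[i], e[j]) for j in range(3)] for i in range(3)]

x = [1.0, 2.0, 3.0]
# xbar_i = L_ij x_j: projection onto the new axes
x_bar = [sum(L[i][j] * x[j] for j in range(3)) for i in range(3)]
# x_i = (L^T)_ij xbar_j = L_ji xbar_j undoes the transformation
x_back = [sum(L[j][i] * x_bar[j] for j in range(3)) for i in range(3)]

print(all(abs(a - b) < 1e-12 for a, b in zip(x, x_back)))  # True
print(L[0][1] == L[1][0])  # False: L is not symmetric
```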

Apart from a rotation about a single axis, in which the [math]\displaystyle{ \bar{x}_i }[/math] and [math]\displaystyle{ x_i }[/math] axes coincide for some i, the angles are not the same as Euler angles, and so the L matrices are not the same as the rotation matrices.

Transformation of the dot and cross products (three dimensions only)

The dot product and cross product occur very frequently in applications of vector analysis to physics and engineering; examples include:

- power transferred P by an object exerting a force F with velocity v along a straight-line path: [math]\displaystyle{ P = \mathbf{v} \cdot \mathbf{F} }[/math]

- tangential velocity v at a point x of a rotating rigid body with angular velocity ω: [math]\displaystyle{ \mathbf{v} = \boldsymbol{\omega} \times \mathbf{x} }[/math]

- potential energy U of a magnetic dipole of magnetic moment m in a uniform external magnetic field B: [math]\displaystyle{ U = -\mathbf{m}\cdot\mathbf{B} }[/math]

- angular momentum J for a particle with position vector r and momentum p: [math]\displaystyle{ \mathbf{J} = \mathbf{r}\times \mathbf{p} }[/math]

- torque τ acting on an electric dipole of electric dipole moment p in a uniform external electric field E: [math]\displaystyle{ \boldsymbol{\tau} = \mathbf{p}\times\mathbf{E} }[/math]

- induced surface current density jS in a magnetic material of magnetization M on a surface with unit normal n: [math]\displaystyle{ \mathbf{j}_\mathrm{S} = \mathbf{M} \times \mathbf{n} }[/math]

How these products transform under orthogonal transformations is illustrated below.

Dot product, Kronecker delta, and metric tensor

The dot product ⋅ of each possible pairing of the basis vectors follows from the basis being orthonormal. For perpendicular pairs we have

[math]\displaystyle{ \begin{array}{llll} \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{y} &= \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{z} &= \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{x} &=\\ \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{x} &= \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{y} &= \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{z} &= 0 \end{array} }[/math]

while for parallel pairs we have

[math]\displaystyle{ \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{x} = \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{y} = \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{z} = 1. }[/math]

Replacing Cartesian labels by index notation as shown above, these results can be summarized by

[math]\displaystyle{ \mathbf{e}_i\cdot\mathbf{e}_j = \delta_{ij} }[/math]

where δij are the components of the Kronecker delta. The Cartesian basis can be used to represent δ in this way.

In addition, each metric tensor component gij with respect to any basis is the dot product of a pairing of basis vectors:

[math]\displaystyle{ g_{ij} = \mathbf{e}_i\cdot\mathbf{e}_j . }[/math]

For the Cartesian basis the components arranged into a matrix are:

[math]\displaystyle{ \mathbf{g} = \begin{pmatrix} g_\text{xx} & g_\text{xy} & g_\text{xz} \\ g_\text{yx} & g_\text{yy} & g_\text{yz} \\ g_\text{zx} & g_\text{zy} & g_\text{zz} \\ \end{pmatrix} = \begin{pmatrix} \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{x} & \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{y} & \mathbf{e}_\text{x}\cdot\mathbf{e}_\text{z} \\ \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{x} & \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{y} & \mathbf{e}_\text{y}\cdot\mathbf{e}_\text{z} \\ \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{x} & \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{y} & \mathbf{e}_\text{z}\cdot\mathbf{e}_\text{z} \\ \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \\ \end{pmatrix} }[/math]

so the components are the simplest possible for a metric tensor, namely those of δ:

[math]\displaystyle{ g_{ij} = \delta_{ij} }[/math]

This is not true for general bases: orthogonal coordinates have diagonal metrics containing various scale factors (i.e. not necessarily 1), while general curvilinear coordinates could also have nonzero entries for off-diagonal components.

The dot product of two vectors a and b transforms according to

[math]\displaystyle{ \mathbf{a}\cdot\mathbf{b} = \bar{a}_j \bar{b}_j = a_i \mathsf{L}_{ij} b_k \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{jk} = a_i \delta_{ik} b_k = a_i b_i }[/math]

which is intuitive, since the dot product of two vectors is a single scalar independent of any coordinates. This also applies more generally to any coordinate system, not just rectangular ones: the dot product in one coordinate system is the same in any other.
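A numerical check of this invariance, assuming an orthogonal L given by a rotation about the [math]\displaystyle{ x_1 }[/math] axis through an arbitrary illustrative angle (any orthogonal matrix would do): the components of a and b change, but [math]\displaystyle{ a_i b_i }[/math] does not, up to rounding error.

```python
import math

t = 1.1  # illustrative angle in radians
L = [[1.0, 0.0, 0.0],
     [0.0, math.cos(t), math.sin(t)],
     [0.0, -math.sin(t), math.cos(t)]]

a = [1.0, -2.0, 0.5]
b = [3.0, 4.0, -1.0]

# abar_j = a_i L_ij and bbar_j = b_i L_ij; for orthogonal L there is
# no distinction between the contravariant and covariant laws.
a_bar = [sum(a[i] * L[i][j] for i in range(3)) for j in range(3)]
b_bar = [sum(b[i] * L[i][j] for i in range(3)) for j in range(3)]

dot_old = sum(x * y for x, y in zip(a, b))
dot_new = sum(x * y for x, y in zip(a_bar, b_bar))
print(dot_old, dot_new)
```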

Cross product, Levi-Civita symbol, and pseudovectors

For the cross product (×) of two vectors, the results are (almost) the other way round. Again, assuming a right-handed 3d Cartesian coordinate system, cyclic permutations in perpendicular directions yield the next vector in the cyclic collection of vectors:

[math]\displaystyle{ \begin{align} \mathbf{e}_\text{x}\times\mathbf{e}_\text{y} &= \mathbf{e}_\text{z} & \mathbf{e}_\text{y}\times\mathbf{e}_\text{z} &= \mathbf{e}_\text{x} & \mathbf{e}_\text{z}\times\mathbf{e}_\text{x} &= \mathbf{e}_\text{y} \\[1ex] \mathbf{e}_\text{y}\times\mathbf{e}_\text{x} &= -\mathbf{e}_\text{z} & \mathbf{e}_\text{z}\times\mathbf{e}_\text{y} &= -\mathbf{e}_\text{x} & \mathbf{e}_\text{x}\times\mathbf{e}_\text{z} &= -\mathbf{e}_\text{y} \end{align} }[/math]

while the cross products of parallel vectors vanish:

[math]\displaystyle{ \mathbf{e}_\text{x}\times\mathbf{e}_\text{x} = \mathbf{e}_\text{y}\times\mathbf{e}_\text{y} = \mathbf{e}_\text{z}\times\mathbf{e}_\text{z} = \boldsymbol{0} }[/math]

and replacing Cartesian labels by index notation as above, these can be summarized by:

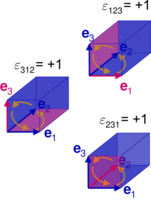

[math]\displaystyle{ \mathbf{e}_i\times\mathbf{e}_j = \begin{cases} +\mathbf{e}_k & \text{cyclic permutations: } (i,j,k) = (1,2,3), (2,3,1), (3,1,2) \\[2pt] -\mathbf{e}_k & \text{anticyclic permutations: } (i,j,k) = (2,1,3), (3,2,1), (1,3,2) \\[2pt] \boldsymbol{0} & i = j \end{cases} }[/math]

where i, j, k are indices which take values 1, 2, 3. It follows that:

[math]\displaystyle{ {\mathbf{e}_k\cdot\mathbf{e}_i\times\mathbf{e}_j} = \begin{cases} +1 & \text{cyclic permutations: } (i,j,k) = (1,2,3), (2,3,1), (3,1,2) \\[2pt] -1 & \text{anticyclic permutations: } (i,j,k) = (2,1,3), (3,2,1), (1,3,2) \\[2pt] 0 & i = j\text{ or }j = k\text{ or }k=i \end{cases} }[/math]

These permutation relations and their values are important, and a single object encodes them: the Levi-Civita symbol, denoted by ε. Its entries can be expressed in terms of the Cartesian basis:

[math]\displaystyle{ \varepsilon_{ijk} = \mathbf{e}_i\cdot \mathbf{e}_j\times\mathbf{e}_k }[/math]

which geometrically corresponds to the volume of a cube spanned by the orthonormal basis vectors, with sign indicating orientation (and not a "positive or negative volume"). Here, the orientation is fixed by ε123 = +1, for a right-handed system. A left-handed system would fix ε123 = −1 or equivalently ε321 = +1.
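The definition ε_ijk = e_i · e_j × e_k can be checked directly against the permutation rule. A minimal plain-Python sketch, assuming the standard right-handed component formula for the cross product and 0-based indices (Python's 0, 1, 2 stand for the document's 1, 2, 3); `cross`, `dot`, and `permutation_sign` are illustrative helpers:

```python
from itertools import product


def cross(a, b):
    """Cross product in a right-handed Cartesian basis."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Standard orthonormal basis e_1, e_2, e_3 (indices 0, 1, 2 here).
e = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# epsilon[i][j][k] = e_i . (e_j x e_k)
epsilon = [[[dot(e[i], cross(e[j], e[k])) for k in range(3)]
            for j in range(3)]
           for i in range(3)]


def permutation_sign(i, j, k):
    """+1 for cyclic, -1 for anticyclic, 0 if any index repeats."""
    if len({i, j, k}) < 3:
        return 0
    return 1 if (i, j, k) in {(0, 1, 2), (1, 2, 0), (2, 0, 1)} else -1


for i, j, k in product(range(3), repeat=3):
    assert epsilon[i][j][k] == permutation_sign(i, j, k)

print(epsilon[0][1][2], epsilon[2][1][0])  # 1 -1
```

With a left-handed cross-product formula the same construction would give ε123 = −1 instead.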

The scalar triple product can now be written:

[math]\displaystyle{ \mathbf{c} \cdot \mathbf{a} \times \mathbf{b} = c_i\mathbf{e}_i \cdot a_j\mathbf{e}_j \times b_k\mathbf{e}_k = \varepsilon_{ijk} c_i a_j b_k }[/math]

which has the geometric interpretation of the (signed) volume of the parallelepiped spanned by a, b, c, and algebraically is a determinant:[3]

[math]\displaystyle{ \mathbf{c} \cdot \mathbf{a} \times \mathbf{b} = \begin{vmatrix} c_\text{x} & a_\text{x} & b_\text{x} \\ c_\text{y} & a_\text{y} & b_\text{y} \\ c_\text{z} & a_\text{z} & b_\text{z} \end{vmatrix} }[/math]
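The index form and the determinant form of the scalar triple product can be compared numerically. This sketch assumes the closed-form expression ε_ijk = (i − j)(j − k)(k − i)/2, which reproduces the Levi-Civita symbol for indices in {0, 1, 2} (equivalently {1, 2, 3}); the vectors are arbitrary examples and the helper names are illustrative:

```python
from itertools import product


def levi_civita(i, j, k):
    """Levi-Civita symbol via (i - j)(j - k)(k - i) / 2."""
    return (i - j) * (j - k) * (k - i) // 2


def triple_product(c, a, b):
    """c . (a x b) = epsilon_ijk c_i a_j b_k, summed over i, j, k."""
    return sum(levi_civita(i, j, k) * c[i] * a[j] * b[k]
               for i, j, k in product(range(3), repeat=3))


def det3(m):
    """Determinant of a 3x3 matrix (list of rows) by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


a, b, c = [1, 2, 0], [0, 1, 3], [2, 0, 1]
# Matrix whose columns are c, a, b, in the order used in the determinant above.
columns = [[c[r], a[r], b[r]] for r in range(3)]

print(triple_product(c, a, b), det3(columns))  # 13 13
```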

This in turn can be used to rewrite the cross product of two vectors as follows:

[math]\displaystyle{ \begin{align} (\mathbf{a} \times \mathbf{b})_i = {\mathbf{e}_i \cdot \mathbf{a} \times \mathbf{b}} &= \varepsilon_{\ell jk} {(\mathbf{e}_i)}_\ell a_j b_k = \varepsilon_{\ell jk} \delta_{i \ell} a_j b_k = \varepsilon_{ijk} a_j b_k \\ \Rightarrow\quad {\mathbf{a} \times \mathbf{b}} = (\mathbf{a} \times \mathbf{b})_i \mathbf{e}_i &= \varepsilon_{ijk} a_j b_k \mathbf{e}_i \end{align} }[/math]
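The last line of this derivation translates directly into a double sum over j and k. A minimal sketch, again with 0-based indices and the closed-form ε (i − j)(j − k)(k − i)/2 as an assumption, compared against the familiar component formula for the cross product:

```python
from itertools import product


def levi_civita(i, j, k):
    return (i - j) * (j - k) * (k - i) // 2


def cross_index(a, b):
    """(a x b)_i = epsilon_ijk a_j b_k, summing over j and k."""
    return [sum(levi_civita(i, j, k) * a[j] * b[k]
                for j, k in product(range(3), repeat=2))
            for i in range(3)]


def cross_explicit(a, b):
    """Component formula for comparison."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


a, b = [1, 2, 0], [0, 1, 3]
print(cross_index(a, b))     # [6, -3, 1]
print(cross_explicit(a, b))  # [6, -3, 1]
```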

Contrary to its appearance, the Levi-Civita symbol is not a tensor but a pseudotensor; its components transform according to:

[math]\displaystyle{ \bar{\varepsilon}_{pqr} = \det(\boldsymbol{\mathsf{L}}) \varepsilon_{ijk} \mathsf{L}_{ip}\mathsf{L}_{jq}\mathsf{L}_{kr} \,. }[/math]

Therefore, the transformation of the cross product of a and b is: [math]\displaystyle{ \begin{align} &\left(\bar{\mathbf{a}} \times \bar{\mathbf{b}}\right)_i \\[1ex] {}={} &\bar{\varepsilon}_{ijk} \bar{a}_j \bar{b}_k \\[1ex] {}={} &\det(\boldsymbol{\mathsf{L}}) \;\; \varepsilon_{pqr} \;\; \mathsf{L}_{pi}\mathsf{L}_{qj} \mathsf{L}_{rk} \;\; a_m \mathsf{L}_{mj} \;\; b_n \mathsf{L}_{nk} \\[1ex] {}={} &\det(\boldsymbol{\mathsf{L}}) \;\; \varepsilon_{pqr} \;\; \mathsf{L}_{pi} \;\; \mathsf{L}_{qj} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{jm} \;\; \mathsf{L}_{rk} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{kn} \;\; a_m \;\; b_n \\[1ex] {}={} &\det(\boldsymbol{\mathsf{L}}) \;\; \varepsilon_{pqr} \;\; \mathsf{L}_{pi} \;\; \delta_{qm} \;\; \delta_{rn} \;\; a_m \;\; b_n \\[1ex] {}={} &\det(\boldsymbol{\mathsf{L}}) \;\; \mathsf{L}_{pi} \;\; \varepsilon_{pqr} a_q b_r \\[1ex] {}={} &\det(\boldsymbol{\mathsf{L}}) \;\; (\mathbf{a}\times\mathbf{b})_p \mathsf{L}_{pi} \end{align} }[/math]

and so a × b transforms as a pseudovector, because of the determinant factor.
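The effect of the determinant factor can be seen numerically by comparing the cross product computed from transformed components with what an ordinary vector would give. This sketch assumes ā = Lᵀa (the document's ā_j = a_i L_ij), takes a 90° rotation about z (det L = +1) and the reflection z → −z (det L = −1), and uses integer entries so the comparisons are exact; all names are illustrative:

```python
def transform_vector(a, L):
    """a_bar[j] = sum_i a[i] * L[i][j], i.e. a_bar = L^T a."""
    return [sum(a[i] * L[i][j] for i in range(3)) for j in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


rotation = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]    # det = +1
reflection = [[1, 0, 0], [0, 1, 0], [0, 0, -1]]  # det = -1
a, b = [1, 2, 0], [0, 1, 3]

results = {}
for name, L in (("rotation", rotation), ("reflection", reflection)):
    # Cross product evaluated from the components in the new basis.
    in_new_basis = cross(transform_vector(a, L), transform_vector(b, L))
    # What we would get if a x b transformed like an ordinary vector.
    as_vector = transform_vector(cross(a, b), L)
    # Pseudovector rule: include the factor det(L).
    as_pseudovector = [det3(L) * x for x in as_vector]
    results[name] = (in_new_basis, as_vector, as_pseudovector)
    print(name, in_new_basis == as_vector, in_new_basis == as_pseudovector)
# rotation True True
# reflection False True
```

Under a proper rotation the two rules coincide; only an improper transformation reveals the pseudovector character.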

The tensor index notation applies to any object whose entries form a multidimensional array, but not everything with indices is a tensor. Instead, tensors are defined by how their components and basis elements change under a transformation from one coordinate system to another.

Note the cross product of two vectors is a pseudovector, while the cross product of a pseudovector with a vector is another vector.

Applications of the δ tensor and ε pseudotensor

Other identities can be formed from the δ tensor and ε pseudotensor. A notable and very useful one converts two Levi-Civita symbols contracted over one index (here the last) into an antisymmetrized combination of Kronecker deltas:

[math]\displaystyle{ \varepsilon_{ijk}\varepsilon_{pqk} = \delta_{ip}\delta_{jq} - \delta_{iq}\delta_{jp} }[/math]
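Because every index runs over only three values, this identity can be verified exhaustively. A minimal sketch, assuming the closed-form ε (i − j)(j − k)(k − i)/2 for 0-based indices, that checks all 3⁴ = 81 choices of the free indices i, j, p, q:

```python
from itertools import product


def levi_civita(i, j, k):
    """Levi-Civita symbol for 0-based indices: (i - j)(j - k)(k - i) / 2."""
    return (i - j) * (j - k) * (k - i) // 2


def delta(i, j):
    """Kronecker delta."""
    return 1 if i == j else 0


checked = 0
for i, j, p, q in product(range(3), repeat=4):
    # Left side: contraction over the shared last index k.
    lhs = sum(levi_civita(i, j, k) * levi_civita(p, q, k) for k in range(3))
    rhs = delta(i, p) * delta(j, q) - delta(i, q) * delta(j, p)
    assert lhs == rhs, (i, j, p, q)
    checked += 1

print(checked, "index combinations agree")  # 81 index combinations agree
```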

The index forms of the dot and cross products, together with this identity, greatly facilitate the manipulation and derivation of other identities in vector calculus and algebra, which in turn are used extensively in physics and engineering. For instance, it is clear the dot and cross products are distributive over vector addition:

[math]\displaystyle{ \begin{align} \mathbf{a}\cdot(\mathbf{b} + \mathbf{c}) &= a_i ( b_i + c_i ) = a_i b_i + a_i c_i = \mathbf{a}\cdot\mathbf{b} + \mathbf{a}\cdot\mathbf{c} \\[1ex] \mathbf{a}\times(\mathbf{b} + \mathbf{c}) &= \mathbf{e}_i\varepsilon_{ijk} a_j ( b_k + c_k ) = \mathbf{e}_i \varepsilon_{ijk} a_j b_k + \mathbf{e}_i \varepsilon_{ijk} a_j c_k = \mathbf{a}\times\mathbf{b} + \mathbf{a}\times\mathbf{c} \end{align} }[/math]

without resort to any geometric constructions – the derivation in each case is a quick line of algebra. Although the procedure is less obvious, the vector triple product can also be derived. Rewriting in index notation:

[math]\displaystyle{ \left[ \mathbf{a}\times(\mathbf{b}\times\mathbf{c})\right]_i = \varepsilon_{ijk} a_j ( \varepsilon_{k \ell m} b_\ell c_m ) = (\varepsilon_{ijk} \varepsilon_{k \ell m} ) a_j b_\ell c_m }[/math]

and because cyclic permutations of indices in the ε symbol do not change its value, cyclically permuting the indices in εkℓm to obtain εℓmk allows us to use the above δ-ε identity to convert the ε symbols into δ tensors:

[math]\displaystyle{ \begin{align} \left[ \mathbf{a}\times(\mathbf{b}\times\mathbf{c})\right]_i {}={} &\left(\delta_{i\ell} \delta_{jm} - \delta_{im} \delta_{j\ell}\right) a_j b_\ell c_m \\ {}={} &\delta_{i\ell} \delta_{jm} a_j b_\ell c_m - \delta_{im} \delta_{j\ell} a_j b_\ell c_m \\ {}={} &a_j b_i c_j - a_j b_j c_i \\ {}={} &\left[(\mathbf{a}\cdot\mathbf{c})\mathbf{b} - (\mathbf{a}\cdot\mathbf{b})\mathbf{c}\right]_i \end{align} }[/math]

thus:

[math]\displaystyle{ \mathbf{a}\times(\mathbf{b}\times\mathbf{c}) = (\mathbf{a}\cdot\mathbf{c})\mathbf{b} - (\mathbf{a}\cdot\mathbf{b})\mathbf{c} }[/math]

Note that this is antisymmetric in b and c, as expected from the left-hand side. Similarly, via index notation, or simply by cyclically relabelling a, b, and c in the previous result and taking the negative:

[math]\displaystyle{ (\mathbf{a}\times \mathbf{b})\times\mathbf{c} = (\mathbf{c}\cdot\mathbf{a})\mathbf{b} - (\mathbf{c}\cdot\mathbf{b})\mathbf{a} }[/math]
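Both expansions, and the fact that they differ, can be confirmed with a few integer vectors. A minimal plain-Python sketch; the sample vectors and helper names (`scale`, `sub`) are illustrative:

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def scale(s, v):
    return [s * x for x in v]


def sub(u, v):
    return [x - y for x, y in zip(u, v)]


a, b, c = [1, 2, 0], [0, 1, 3], [2, 0, 1]

# a x (b x c) = (a.c) b - (a.b) c
left = cross(a, cross(b, c))
bac_cab = sub(scale(dot(a, c), b), scale(dot(a, b), c))

# (a x b) x c = (c.a) b - (c.b) a
right = cross(cross(a, b), c)
other = sub(scale(dot(c, a), b), scale(dot(c, b), a))

print(left, bac_cab)   # [-4, 2, 4] [-4, 2, 4]
print(right, other)    # [-3, -4, 6] [-3, -4, 6]
```

The two groupings give different vectors for the same a, b, c, which is the non-associativity noted below.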

and the difference in the results shows that the cross product is not associative. More complex identities, like quadruple products,

[math]\displaystyle{ (\mathbf{a}\times \mathbf{b})\cdot(\mathbf{c}\times\mathbf{d}),\quad (\mathbf{a}\times \mathbf{b})\times(\mathbf{c}\times\mathbf{d}),\quad \ldots }[/math]

and so on, can be derived in a similar manner.

Transformations of Cartesian tensors (any number of dimensions)

Tensors are defined as quantities whose components transform in a specific way under linear transformations of coordinates; the rules for each order are given below.

Second order

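Let [math]\displaystyle{ \mathbf{a} = a_i\mathbf{e}_i }[/math] and [math]\displaystyle{ \mathbf{b} = b_i\mathbf{e}_i }[/math] be two vectors, so that they transform according to [math]\displaystyle{ \bar{a}_j = a_i\mathsf{L}_{ij} }[/math] and [math]\displaystyle{ \bar{b}_j = b_i\mathsf{L}_{ij} }[/math].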

Taking the tensor product gives:

[math]\displaystyle{ \mathbf{a}\otimes\mathbf{b}=a_i\mathbf{e}_i\otimes b_j\mathbf{e}_j=a_i b_j\mathbf{e}_i\otimes\mathbf{e}_j }[/math]

then applying the transformation to the components

[math]\displaystyle{ \bar{a}_p\bar{b}_q = a_i \mathsf{L}_{ip} b_j \mathsf{L}_{jq} = \mathsf{L}_{ip}\mathsf{L}_{jq} a_i b_j }[/math]

and to the bases

[math]\displaystyle{ \bar{\mathbf{e}}_p\otimes\bar{\mathbf{e}}_q = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{pi}\mathbf{e}_i\otimes\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{qj}\mathbf{e}_j = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{pi}\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{qj}\mathbf{e}_i\otimes\mathbf{e}_j = \mathsf{L}_{ip} \mathsf{L}_{jq} \mathbf{e}_i\otimes\mathbf{e}_j }[/math]

gives the transformation law of an order-2 tensor. The tensor a⊗b is invariant under this transformation:

[math]\displaystyle{ \begin{align} \bar{a}_p\bar{b}_q\bar{\mathbf{e}}_p\otimes\bar{\mathbf{e}}_q {}={} &\mathsf{L}_{kp} \mathsf{L}_{\ell q} a_k b_{\ell} \, \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{pi} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{qj} \mathbf{e}_i\otimes\mathbf{e}_j \\[1ex] {}={} &\mathsf{L}_{kp} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{pi} \mathsf{L}_{\ell q} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{q j} \, a_k b_{\ell} \mathbf{e}_i\otimes\mathbf{e}_j \\[1ex] {}={} &\delta_{ki} \delta_{\ell j} \, a_k b_{\ell} \mathbf{e}_i\otimes\mathbf{e}_j \\[1ex] {}={} &a_ib_j\mathbf{e}_i\otimes\mathbf{e}_j \end{align} }[/math]

More generally, for any order-2 tensor

[math]\displaystyle{ \mathbf{R} = R_{ij}\mathbf{e}_i\otimes\mathbf{e}_j\,, }[/math]

the components transform according to:

[math]\displaystyle{ \bar{R}_{pq}=\mathsf{L}_{ip}\mathsf{L}_{jq} R_{ij}, }[/math]

and the basis transforms by:

[math]\displaystyle{ \bar{\mathbf{e}}_p\otimes\bar{\mathbf{e}}_q = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{pi}\mathbf{e}_i\otimes \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{qj}\mathbf{e}_j }[/math]

If R does not transform according to this rule – whatever quantity R may be – it is not an order-2 tensor.
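A numerical check of the order-2 rule, under the same assumptions as before (ā_j = a_i L_ij, a rotation about z as L, illustrative helper names): transforming the components of a ⊗ b with R̄_pq = L_ip L_jq R_ij gives the same array as the tensor product of the individually transformed vectors.

```python
import math


def rotation_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform_vector(a, L):
    """a_bar[j] = sum_i a[i] * L[i][j]."""
    return [sum(a[i] * L[i][j] for i in range(3)) for j in range(3)]


def transform_order2(R, L):
    """R_bar[p][q] = sum over i, j of L[i][p] * L[j][q] * R[i][j]."""
    return [[sum(L[i][p] * L[j][q] * R[i][j] for i in range(3) for j in range(3))
             for q in range(3)]
            for p in range(3)]


def outer(a, b):
    """Components a_i b_j of the tensor product a (x) b."""
    return [[x * y for y in b] for x in a]


L = rotation_z(0.3)
a, b = [1.0, 2.0, 3.0], [0.5, -1.0, 4.0]

R_bar = transform_order2(outer(a, b), L)
expected = outer(transform_vector(a, L), transform_vector(b, L))
max_diff = max(abs(R_bar[p][q] - expected[p][q]) for p in range(3) for q in range(3))
print(max_diff < 1e-12)  # True
```

In matrix language the same rule reads R̄ = Lᵀ R L.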

Any order

For a tensor of any order p,

[math]\displaystyle{ \mathbf{T} = T_{j_1 j_2 \cdots j_p} \mathbf{e}_{j_1}\otimes\mathbf{e}_{j_2}\otimes\cdots\otimes\mathbf{e}_{j_p} }[/math]

the components transform according to:

[math]\displaystyle{ \bar{T}_{j_1j_2\cdots j_p} = \mathsf{L}_{i_1 j_1} \mathsf{L}_{i_2 j_2}\cdots \mathsf{L}_{i_p j_p} T_{i_1 i_2\cdots i_p} }[/math]

and the basis transforms by:

[math]\displaystyle{ \bar{\mathbf{e}}_{j_1}\otimes\bar{\mathbf{e}}_{j_2}\otimes\cdots\otimes\bar{\mathbf{e}}_{j_p}=\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{j_1 i_1}\mathbf{e}_{i_1}\otimes\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{j_2 i_2}\mathbf{e}_{i_2}\otimes\cdots\otimes\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{j_p i_p}\mathbf{e}_{i_p} }[/math]

For a pseudotensor S of order p, the components transform according to:

[math]\displaystyle{ \bar{S}_{j_1j_2\cdots j_p} = \det(\boldsymbol{\mathsf{L}}) \mathsf{L}_{i_1 j_1} \mathsf{L}_{i_2 j_2}\cdots \mathsf{L}_{i_p j_p} S_{i_1 i_2\cdots i_p}\,. }[/math]
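The general rule can be written once for any order by looping over index tuples. The sketch below (plain Python; `transform` and its `pseudo` flag are illustrative names, not an established API, and L is assumed to be 3×3) applies it to the Levi-Civita symbol stored as a dictionary keyed by index tuples. With the det(L) factor the components of ε come out unchanged under both a rotation and a reflection, whereas the plain tensor rule flips their sign under the reflection.

```python
import math
from itertools import product


def rotation_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def transform(T, L, order, pseudo=False):
    """T_bar[J] = (det L if pseudo else 1) * sum_I L[i1][j1] ... L[ip][jp] * T[I]."""
    factor = det3(L) if pseudo else 1
    T_bar = {}
    for J in product(range(3), repeat=order):
        total = 0.0
        for I in product(range(3), repeat=order):
            term = T[I]
            for i_m, j_m in zip(I, J):
                term *= L[i_m][j_m]
            total += term
        T_bar[J] = factor * total
    return T_bar


def max_deviation(S, T):
    return max(abs(S[key] - T[key]) for key in T)


# Levi-Civita symbol for 0-based indices.
eps = {idx: (idx[0] - idx[1]) * (idx[1] - idx[2]) * (idx[2] - idx[0]) // 2
       for idx in product(range(3), repeat=3)}
reflection = [[1, 0, 0], [0, 1, 0], [0, 0, -1]]

results = {}
for name, L in (("rotation", rotation_z(1.1)), ("reflection", reflection)):
    as_pseudo = transform(eps, L, 3, pseudo=True)
    as_tensor = transform(eps, L, 3, pseudo=False)
    results[name] = (max_deviation(as_pseudo, eps), max_deviation(as_tensor, eps))
    print(name, results[name][0] < 1e-12, results[name][1] < 1e-12)
# rotation True True
# reflection True False
```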

Pseudovectors as antisymmetric second order tensors

The antisymmetric nature of the cross product can be recast into a tensorial form as follows.[2] Let c be a vector, a be a pseudovector, b be another vector, and T be a second order tensor such that:

[math]\displaystyle{ \mathbf{c} = \mathbf{a}\times\mathbf{b} = \mathbf{T}\cdot\mathbf{b} }[/math]

As the cross product is linear in a and b, the components of T can be found by inspection, and they are:

[math]\displaystyle{ \mathbf{T} = \begin{pmatrix} 0 & - a_\text{z} & a_\text{y} \\ a_\text{z} & 0 & - a_\text{x} \\ - a_\text{y} & a_\text{x} & 0 \\ \end{pmatrix} }[/math]

so the pseudovector a can be written as an antisymmetric tensor, which transforms as a tensor, not a pseudotensor. For the mechanical example above of the tangential velocity of a rigid body, v = ω × x, this can be rewritten as v = Ω ⋅ x, where Ω is the tensor corresponding to the pseudovector ω:

[math]\displaystyle{ \boldsymbol{\Omega} = \begin{pmatrix} 0 & - \omega_\text{z} & \omega_\text{y} \\ \omega_\text{z} & 0 & - \omega_\text{x} \\ - \omega_\text{y} & \omega_\text{x} & 0 \\ \end{pmatrix} }[/math]
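The correspondence between a pseudovector and its antisymmetric matrix can be checked in a few lines. A minimal sketch; the helper `skew` (which builds the matrix T above from a) and the sample angular velocity and position are illustrative:

```python
def skew(a):
    """Antisymmetric matrix T such that T b = a x b (list of rows)."""
    ax, ay, az = a
    return [[0, -az, ay],
            [az, 0, -ax],
            [-ay, ax, 0]]


def matvec(M, v):
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


omega = [0, 0, 2]   # angular velocity along z (illustrative values)
x = [1, 0, 0]       # position of a point in the body
v_tensor = matvec(skew(omega), x)   # v = Omega . x
v_cross = cross(omega, x)           # v = omega x x
print(v_tensor, v_cross)  # [0, 2, 0] [0, 2, 0]

Omega = skew(omega)
antisymmetric = all(Omega[i][j] == -Omega[j][i] for i in range(3) for j in range(3))
```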

For an example in electromagnetism, while the electric field E is a vector field, the magnetic field B is a pseudovector field. These fields are defined from the Lorentz force for a particle of electric charge q traveling at velocity v:

[math]\displaystyle{ \mathbf{F} = q(\mathbf{E} + \mathbf{v} \times \mathbf{B}) = q(\mathbf{E} - \mathbf{B} \times \mathbf{v}) }[/math]

and considering the second term containing the cross product of a pseudovector B and velocity vector v, it can be written in matrix form, with F, E, and v as column vectors and B as an antisymmetric matrix:

[math]\displaystyle{ \begin{pmatrix} F_\text{x} \\ F_\text{y} \\ F_\text{z} \\ \end{pmatrix} = q\begin{pmatrix} E_\text{x} \\ E_\text{y} \\ E_\text{z} \\ \end{pmatrix} - q \begin{pmatrix} 0 & - B_\text{z} & B_\text{y} \\ B_\text{z} & 0 & - B_\text{x} \\ - B_\text{y} & B_\text{x} & 0 \\ \end{pmatrix} \begin{pmatrix} v_\text{x} \\ v_\text{y} \\ v_\text{z} \\ \end{pmatrix} }[/math]

If a pseudovector is explicitly given by a cross product of two vectors (as opposed to entering the cross product with another vector), then such pseudovectors can also be written as antisymmetric tensors of second order, with each entry a component of the cross product. The angular momentum of a classical pointlike particle orbiting about an axis, defined by J = x × p, is another example of a pseudovector, with corresponding antisymmetric tensor:

[math]\displaystyle{ \mathbf{J} = \begin{pmatrix} 0 & - J_\text{z} & J_\text{y} \\ J_\text{z} & 0 & - J_\text{x} \\ - J_\text{y} & J_\text{x} & 0 \\ \end{pmatrix} = \begin{pmatrix} 0 & - (x p_\text{y} - y p_\text{x}) & (z p_\text{x} - x p_\text{z}) \\ (x p_\text{y} - y p_\text{x}) & 0 & - (y p_\text{z} - z p_\text{y}) \\ - (z p_\text{x} - x p_\text{z}) & (y p_\text{z} - z p_\text{y}) & 0 \\ \end{pmatrix} }[/math]
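For the matrix as written above, entry (i, j) equals x_j p_i − x_i p_j, so it can be built either from J = x × p or directly from products of position and momentum components. A small check with arbitrary illustrative values; `skew` is the same hypothetical helper that maps a pseudovector to its antisymmetric matrix:

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def skew(a):
    """Antisymmetric matrix [[0, -az, ay], [az, 0, -ax], [-ay, ax, 0]]."""
    ax, ay, az = a
    return [[0, -az, ay], [az, 0, -ax], [-ay, ax, 0]]


x = [1, 2, 3]   # position (illustrative)
p = [4, 5, 6]   # momentum (illustrative)

J = cross(x, p)
J_matrix = skew(J)
# Entry (i, j) = x_j p_i - x_i p_j, matching the matrix above.
from_products = [[x[j] * p[i] - x[i] * p[j] for j in range(3)] for i in range(3)]

print(J, J_matrix == from_products)  # [-3, 6, -3] True
```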

Although Cartesian tensors do not occur in the theory of relativity, the tensor form of orbital angular momentum J enters the spacelike part of the relativistic angular momentum tensor, and the above tensor form of the magnetic field B enters the spacelike part of the electromagnetic tensor.

Vector and tensor calculus

The following formulae take this simple form only in Cartesian coordinates; in general curvilinear coordinates, factors involving the metric and its determinant appear (see tensors in curvilinear coordinates for a more general analysis).

Vector calculus

The following are the differential operators of vector calculus. Throughout, let Φ(r, t) be a scalar field, and

[math]\displaystyle{ \begin{align} \mathbf{A}(\mathbf{r},t) &= A_\text{x}(\mathbf{r},t)\mathbf{e}_\text{x} + A_\text{y}(\mathbf{r},t)\mathbf{e}_\text{y} + A_\text{z}(\mathbf{r},t)\mathbf{e}_\text{z} \\[1ex] \mathbf{B}(\mathbf{r},t) &= B_\text{x}(\mathbf{r},t)\mathbf{e}_\text{x} + B_\text{y}(\mathbf{r},t)\mathbf{e}_\text{y} + B_\text{z}(\mathbf{r},t)\mathbf{e}_\text{z} \end{align} }[/math]

be vector fields, in which all scalar and vector fields are functions of the position vector r and time t.

The gradient operator in Cartesian coordinates is given by:

[math]\displaystyle{ \nabla = \mathbf{e}_\text{x}\frac{\partial}{\partial x} + \mathbf{e}_\text{y}\frac{\partial}{\partial y} + \mathbf{e}_\text{z}\frac{\partial}{\partial z} }[/math]

and in index notation, this is usually abbreviated in various ways:

[math]\displaystyle{ \nabla_i \equiv \partial_i \equiv \frac{\partial}{\partial x_i} }[/math]

This operator acts on a scalar field Φ to give a vector field pointing in the direction of the maximum rate of increase of Φ:

[math]\displaystyle{ \left(\nabla\Phi\right)_i = \nabla_i \Phi }[/math]

The index notation for the dot and cross products carries over to the differential operators of vector calculus.[3]

The directional derivative of a scalar field Φ is the rate of change of Φ along some direction vector a (not necessarily a unit vector), formed out of the components of a and the gradient:

[math]\displaystyle{ \mathbf{a}\cdot(\nabla\Phi) = a_j (\nabla\Phi)_j }[/math]

The divergence of a vector field A is:

[math]\displaystyle{ \nabla\cdot\mathbf{A} = \nabla_i A_i }[/math]
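These index expressions can be evaluated numerically at a point. The sketch below replaces each ∇_i by a central finite difference, an approximation chosen here for illustration and not part of the source; the fields Φ = x²y + z and A = (xy, yz, zx), the point, the direction a, and the helper names are arbitrary examples.

```python
def partial(f, r, i, h=1e-5):
    """Central-difference approximation to the partial derivative of f along x_i at r."""
    r_plus, r_minus = list(r), list(r)
    r_plus[i] += h
    r_minus[i] -= h
    return (f(r_plus) - f(r_minus)) / (2 * h)


def component(A, i):
    """Scalar field r -> A(r)[i]."""
    return lambda r: A(r)[i]


def gradient(phi, r):
    """(grad phi)_i = nabla_i phi."""
    return [partial(phi, r, i) for i in range(3)]


def divergence(A, r):
    """div A = nabla_i A_i, summed over i."""
    return sum(partial(component(A, i), r, i) for i in range(3))


def phi(r):
    x, y, z = r
    return x ** 2 * y + z


def A(r):
    x, y, z = r
    return [x * y, y * z, z * x]


r0 = [1.0, 2.0, 3.0]
a = [0.0, 1.0, 1.0]

grad = gradient(phi, r0)                               # approx [4, 1, 1]
directional = sum(aj * gj for aj, gj in zip(a, grad))  # a_j (grad phi)_j, approx 2
div = divergence(A, r0)                                # approx x + y + z = 6
print(grad, directional, div)
```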

Note the interchange of the components of the gradient and vector field yields a different differential operator

[math]\displaystyle{ \mathbf{A}\cdot\nabla = A_i \nabla_i }[/math]

which could act on scalar or vector fields. In fact, if A is replaced by the velocity field u(r, t) of a fluid, this is a term in the material derivative (with many other names) of continuum mechanics, with another term being the partial time derivative:

[math]\displaystyle{ \frac{D}{D t} = \frac{\partial}{\partial t} + \mathbf{u}\cdot\nabla }[/math]

which, when acting on the velocity field itself, gives rise to the non-linearity in the Navier-Stokes equations.

As for the curl of a vector field A, this can be defined as a pseudovector field by means of the ε symbol:

[math]\displaystyle{ \left(\nabla\times\mathbf{A}\right)_i = \varepsilon_{ijk} \nabla_j A_k }[/math]

which is only valid in three dimensions, or an antisymmetric tensor field of second order via antisymmetrization of indices, indicated by delimiting the antisymmetrized indices by square brackets (see Ricci calculus):

[math]\displaystyle{ \left(\nabla\times\mathbf{A}\right)_{ij} = \nabla_i A_j - \nabla_j A_i = 2\nabla_{[i} A_{j]} }[/math]

which is valid in any number of dimensions. In each case, the order of the gradient and vector field components should not be interchanged as this would result in a different differential operator:

[math]\displaystyle{ \varepsilon_{ijk} A_j \nabla_k \quad\text{and}\quad A_i \nabla_j - A_j \nabla_i = 2 A_{[i} \nabla_{j]} }[/math]

which could act on scalar or vector fields.
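The pseudovector and antisymmetric-tensor forms of the curl carry the same three numbers. The sketch below computes both from a finite-difference Jacobian D[j][i] ≈ ∇_j A_i (central differences are an assumption made for illustration); the field A = (yz, xz², xy) and the point are arbitrary examples, and at (1, 2, 3) the exact curl is (−5, 0, 6).

```python
from itertools import product


def levi_civita(i, j, k):
    """Levi-Civita symbol for 0-based indices."""
    return (i - j) * (j - k) * (k - i) // 2


def jacobian(F, r, h=1e-5):
    """D[j][i] approximates nabla_j F_i at r (central differences)."""
    D = []
    for j in range(3):
        r_plus, r_minus = list(r), list(r)
        r_plus[j] += h
        r_minus[j] -= h
        F_plus, F_minus = F(r_plus), F(r_minus)
        D.append([(F_plus[i] - F_minus[i]) / (2 * h) for i in range(3)])
    return D


def A(r):
    x, y, z = r
    return [y * z, x * z ** 2, x * y]


r0 = [1.0, 2.0, 3.0]
D = jacobian(A, r0)

# Pseudovector form: (curl A)_i = eps_ijk nabla_j A_k (three dimensions only).
curl_vector = [sum(levi_civita(i, j, k) * D[j][k]
                   for j, k in product(range(3), repeat=2))
               for i in range(3)]

# Antisymmetric tensor form: (curl A)_ij = nabla_i A_j - nabla_j A_i.
curl_tensor = [[D[i][j] - D[j][i] for j in range(3)] for i in range(3)]

print(curl_vector)  # approx [-5, 0, 6]
print(curl_tensor[1][2], curl_tensor[2][0], curl_tensor[0][1])  # the same three values
```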

Finally, the Laplacian operator can be defined in two ways: as the divergence of the gradient of a scalar field Φ,

[math]\displaystyle{ \nabla\cdot(\nabla \Phi) = \nabla_i (\nabla_i \Phi) }[/math]

or as the square of the gradient operator, which acts on either a scalar field Φ or a vector field A:

[math]\displaystyle{ \begin{align} (\nabla\cdot\nabla) \Phi &= (\nabla_i \nabla_i) \Phi \\ (\nabla\cdot\nabla) \mathbf{A} &= (\nabla_i \nabla_i) \mathbf{A} \end{align} }[/math]

In physics and engineering, the gradient, divergence, curl, and Laplacian operators arise throughout fluid mechanics, Newtonian gravitation, electromagnetism, heat conduction, and even quantum mechanics.

Vector calculus identities can be derived in the same way as the dot and cross product identities above. For example, in three dimensions, the curl of a cross product of two vector fields A and B is:

[math]\displaystyle{ \begin{align} &\left[\nabla\times(\mathbf{A}\times\mathbf{B})\right]_i \\ {}={} &\varepsilon_{ijk} \nabla_j (\varepsilon_{k\ell m} A_\ell B_m) \\ {}={} &(\varepsilon_{ijk} \varepsilon_{\ell m k}) \nabla_j (A_\ell B_m) \\ {}={} &(\delta_{i\ell}\delta_{jm} - \delta_{im}\delta_{j\ell}) (B_m \nabla_j A_\ell + A_\ell \nabla_j B_m) \\ {}={} &(B_j \nabla_j A_i + A_i \nabla_j B_j) - (B_i \nabla_j A_j + A_j \nabla_j B_i) \\ {}={} &(B_j \nabla_j)A_i + A_i(\nabla_j B_j) - B_i (\nabla_j A_j ) - (A_j \nabla_j) B_i \\ {}={} &\left[(\mathbf{B} \cdot \nabla)\mathbf{A} + \mathbf{A}(\nabla\cdot \mathbf{B}) - \mathbf{B}(\nabla\cdot \mathbf{A}) - (\mathbf{A}\cdot \nabla) \mathbf{B} \right]_i \\ \end{align} }[/math]

where the product rule was used, and throughout the differential operator was not interchanged with A or B. Thus:

[math]\displaystyle{ \nabla\times(\mathbf{A}\times\mathbf{B}) = (\mathbf{B} \cdot \nabla)\mathbf{A} + \mathbf{A}(\nabla \cdot \mathbf{B}) - \mathbf{B}(\nabla \cdot \mathbf{A}) - (\mathbf{A} \cdot \nabla) \mathbf{B} }[/math]
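This identity can be spot-checked at a single point by evaluating both sides with central finite differences. That is an approximation chosen for illustration, so the sides agree only to rounding and truncation error; the polynomial fields A = (yz, x², x + z) and B = (xy, z, y²), the point, and all helper names are arbitrary, hypothetical choices.

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def jacobian(F, r, h=1e-5):
    """D[j][i] approximates nabla_j F_i at r (central differences)."""
    D = []
    for j in range(3):
        r_plus, r_minus = list(r), list(r)
        r_plus[j] += h
        r_minus[j] -= h
        F_plus, F_minus = F(r_plus), F(r_minus)
        D.append([(F_plus[i] - F_minus[i]) / (2 * h) for i in range(3)])
    return D


def curl(F, r):
    D = jacobian(F, r)
    return [D[1][2] - D[2][1], D[2][0] - D[0][2], D[0][1] - D[1][0]]


def div(F, r):
    D = jacobian(F, r)
    return D[0][0] + D[1][1] + D[2][2]


def advective(U, F, r):
    """(U . nabla) F, i.e. U_j nabla_j F_i."""
    D = jacobian(F, r)
    u = U(r)
    return [sum(u[j] * D[j][i] for j in range(3)) for i in range(3)]


def A(r):
    x, y, z = r
    return [y * z, x ** 2, x + z]


def B(r):
    x, y, z = r
    return [x * y, z, y ** 2]


def A_cross_B(r):
    return cross(A(r), B(r))


r0 = [0.5, -1.0, 2.0]
lhs = curl(A_cross_B, r0)

a_val, b_val = A(r0), B(r0)
div_A, div_B = div(A, r0), div(B, r0)
# (B . nabla) A + A (div B) - B (div A) - (A . nabla) B
rhs = [bA + a_i * div_B - b_i * div_A - aB
       for bA, a_i, b_i, aB in zip(advective(B, A, r0), a_val, b_val, advective(A, B, r0))]

max_error = max(abs(u - w) for u, w in zip(lhs, rhs))
print(max_error < 1e-6)  # True
```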

Tensor calculus

One can continue the operations on tensors of higher order. Let T = T(r, t) denote a second order tensor field, again dependent on the position vector r and time t.

For instance, the gradient of a vector field in two equivalent notations ("dyadic" and "tensor", respectively) is:

[math]\displaystyle{ (\nabla \mathbf{A})_{ij} \equiv (\nabla \otimes \mathbf{A})_{ij} = \nabla_i A_j }[/math]

which is a tensor field of second order.

The divergence of a tensor is:

[math]\displaystyle{ (\nabla \cdot \mathbf{T})_j = \nabla_i T_{ij} }[/math]

which is a vector field. This arises in continuum mechanics in Cauchy's laws of motion: the divergence of the Cauchy stress tensor σ is a vector field giving the net contact force per unit volume, which together with the body forces acting on the material determines its acceleration.
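For a concrete instance of ∇·T, consider a hydrostatic stress field σ_ij = −p δ_ij, for which (∇·σ)_j = ∇_i(−p δ_ij) = −∇_j p. The sketch below checks this with central differences; the pressure field p = 1 + x² + 3z, the evaluation point, and the helper names are illustrative assumptions, and at (1, 2, 3) the exact result is −∇p = (−2, 0, −3).

```python
def divergence_of_tensor(T, r, h=1e-5):
    """(div T)_j = sum_i nabla_i T_ij, by central differences."""
    result = [0.0, 0.0, 0.0]
    for i in range(3):
        r_plus, r_minus = list(r), list(r)
        r_plus[i] += h
        r_minus[i] -= h
        T_plus, T_minus = T(r_plus), T(r_minus)
        for j in range(3):
            result[j] += (T_plus[i][j] - T_minus[i][j]) / (2 * h)
    return result


def pressure(r):
    x, y, z = r
    return 1.0 + x ** 2 + 3.0 * z


def hydrostatic_stress(r):
    """sigma_ij = -p(r) delta_ij (an illustrative stress field)."""
    p = pressure(r)
    return [[-p if i == j else 0.0 for j in range(3)] for i in range(3)]


div_sigma = divergence_of_tensor(hydrostatic_stress, [1.0, 2.0, 3.0])
print(div_sigma)  # approx [-2, 0, -3], i.e. -grad p
```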

Difference from the standard tensor calculus

Cartesian tensors are as in tensor algebra, but the Euclidean structure of the space and the restriction to orthonormal bases bring some simplifications compared to the general theory.

The general tensor algebra consists of general mixed tensors of type (p, q):

[math]\displaystyle{ \mathbf{T} = T_{j_1 j_2 \cdots j_q}^{i_1 i_2 \cdots i_p} \mathbf{e}_{i_1 i_2 \cdots i_p}^{j_1 j_2 \cdots j_q} }[/math]

with basis elements:

[math]\displaystyle{ \mathbf{e}_{i_1 i_2 \cdots i_p}^{j_1 j_2 \cdots j_q} = \mathbf{e}_{i_1}\otimes\mathbf{e}_{i_2}\otimes\cdots\otimes\mathbf{e}_{i_p}\otimes\mathbf{e}^{j_1}\otimes\mathbf{e}^{j_2}\otimes\cdots\otimes\mathbf{e}^{j_q} }[/math]

the components transform according to:

[math]\displaystyle{ \bar{T}_{\ell_1 \ell_2 \cdots \ell_q}^{k_1 k_2 \cdots k_p} = \mathsf{L}_{i_1}{}^{k_1} \mathsf{L}_{i_2}{}^{k_2} \cdots \mathsf{L}_{i_p}{}^{k_p} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{\ell_1}{}^{j_1}\left(\boldsymbol{\mathsf{L}}^{-1}\right)_{\ell_2}{}^{j_2} \cdots \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{\ell_q}{}^{j_q} T_{j_1 j_2 \cdots j_q}^{i_1 i_2 \cdots i_p} }[/math]

as for the bases:

[math]\displaystyle{ \bar{\mathbf{e}}_{k_1 k_2 \cdots k_p}^{\ell_1 \ell_2 \cdots \ell_q} = \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{k_1}{}^{i_1} \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{k_2}{}^{i_2} \cdots \left(\boldsymbol{\mathsf{L}}^{-1}\right)_{k_p}{}^{i_p} \mathsf{L}_{j_1}{}^{\ell_1} \mathsf{L}_{j_2}{}^{\ell_2} \cdots \mathsf{L}_{j_q}{}^{\ell_q} \mathbf{e}_{i_1 i_2 \cdots i_p}^{j_1 j_2 \cdots j_q} }[/math]

For Cartesian tensors, only the order p + q of the tensor matters in a Euclidean space with an orthonormal basis, and all p + q indices can be lowered. A Cartesian basis does not exist unless the vector space has a positive-definite metric, and thus cannot be used in relativistic contexts.
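The underlying reason is that an orthogonal L satisfies L⁻¹ = Lᵀ, so the inverse factors in the general transformation law can be replaced by entries of L itself, and upper and lower indices transform identically. A small plain-Python check of LᵀL = I, contrasting a rotation with a shear, a non-orthogonal change of basis for which the two rules would differ; the matrices and helper names are illustrative:

```python
import math


def transpose(M):
    return [list(row) for row in zip(*M)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def is_orthogonal(L, tol=1e-12):
    """True if L^T L equals the identity, i.e. L^{-1} = L^T."""
    P = matmul(transpose(L), L)
    n = len(L)
    return all(abs(P[i][j] - (1.0 if i == j else 0.0)) < tol
               for i in range(n) for j in range(n))


c, s = math.cos(0.4), math.sin(0.4)
rotation = [[c, -s], [s, c]]
shear = [[1.0, 1.0], [0.0, 1.0]]

print(is_orthogonal(rotation))  # True
print(is_orthogonal(shear))     # False
```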

History

Dyadic tensors were historically the first approach to formulating second-order tensors, similarly triadic tensors for third-order tensors, and so on. Cartesian tensors use tensor index notation, in which the variance may be glossed over and is often ignored, since the components remain unchanged by raising and lowering indices.

See also

- Tensor algebra

- Tensor calculus

- Tensors in curvilinear coordinates

- Rotation group

References

- [1] C. W. Misner; K. S. Thorne; J. A. Wheeler (15 September 1973). Gravitation. Macmillan. ISBN 0-7167-0344-0. Used throughout.

- [2] T. W. B. Kibble (1973). Classical Mechanics. European physics series (2nd ed.). McGraw Hill. ISBN 978-0-07-084018-8. See Appendix C.

- [3] M. R. Spiegel; S. Lipschutz; D. Spellman (2009). Vector analysis. Schaum's Outlines (2nd ed.). McGraw Hill. ISBN 978-0-07-161545-7.

General references

- D. C. Kay (1988). Tensor Calculus. Schaum's Outlines. McGraw Hill. pp. 18–19, 31–32. ISBN 0-07-033484-6.

- M. R. Spiegel; S. Lipschutz; D. Spellman (2009). Vector analysis. Schaum's Outlines (2nd ed.). McGraw Hill. p. 227. ISBN 978-0-07-161545-7.

- J.R. Tyldesley (1975). An introduction to tensor analysis for engineers and applied scientists. Longman. pp. 5–13. ISBN 0-582-44355-5. https://books.google.com/books?id=PODXAAAAMAAJ.

Further reading and applications

- S. Lipschutz; M. Lipson (2009). Linear Algebra. Schaum's Outlines (4th ed.). McGraw Hill. ISBN 978-0-07-154352-1.

- Pei Chi Chou (1992). Elasticity: Tensor, Dyadic, and Engineering Approaches. Courier Dover Publications. ISBN 048-666-958-0. https://books.google.com/books?id=9-pJ7Kg5XmAC&q=cartesian+tensor.

- T. W. Körner (2012). Vectors, Pure and Applied: A General Introduction to Linear Algebra. Cambridge University Press. p. 216. ISBN 978-11070-3356-6. https://books.google.com/books?id=RO_TMc7ETPEC&q=cartesian+tensor.

- R. Torretti (1996). Relativity and Geometry. Courier Dover Publications. p. 103. ISBN 0-4866-90466. https://books.google.com/books?id=vpW_sBxwr88C&q=cartesian+tensor&pg=PA103.

- J. J. L. Synge; A. Schild (1978). Tensor Calculus. Courier Dover Publications. p. 128. ISBN 0-4861-4139-X. https://books.google.com/books?id=8vlGhlxqZjsC&q=cartesian+tensor&pg=PA127.

- C. A. Balafoutis; R. V. Patel (1991). Dynamic Analysis of Robot Manipulators: A Cartesian Tensor Approach. The Kluwer International Series in Engineering and Computer Science: Robotics: vision, manipulation and sensors. 131. Springer. ISBN 0792-391-454. https://books.google.com/books?id=7BcpyUjmLpUC&q=cartesian+tensor.

- S. G. Tzafestas (1992). Robotic systems: advanced techniques and applications. Springer. ISBN 0-792-317-491. https://books.google.com/books?id=iG5W9IQhjd4C&q=cartesian+tensor&pg=PA45.

- T. Dass; S. K. Sharma (1998). Mathematical Methods In Classical And Quantum Physics. Universities Press. p. 144. ISBN 817-371-0899. https://books.google.com/books?id=AQCsAxpZ7ToC&q=cartesian+tensor&pg=PA144.

- G. F. J. Temple (2004). Cartesian Tensors: An Introduction. Dover Books on Mathematics Series. Dover. ISBN 0-4864-3908-9. https://books.google.com/books?id=56WqzKbTMtMC&q=Cartesian+Tensors:+An+Introduction+temple.

- H. Jeffreys (1961). Cartesian Tensors. Cambridge University Press. ISBN 9780521054232. https://books.google.com/books?id=oOYIAQAAIAAJ&q=cartesian+tensors.

External links

- Cartesian Tensors

- V. N. Kaliakin, Brief Review of Tensors, University of Delaware

- R. E. Hunt, Cartesian Tensors, University of Cambridge
