Bose–Einstein statistics

In quantum statistics, Bose–Einstein statistics (B–E statistics) describes one of two possible ways in which a collection of non-interacting identical particles may occupy a set of available discrete energy states at thermodynamic equilibrium. The aggregation of particles in the same state, which is a characteristic of particles obeying Bose–Einstein statistics, accounts for the cohesive streaming of laser light and the frictionless creeping of superfluid helium. The theory of this behaviour was developed (1924–25) by Satyendra Nath Bose, who recognized that a collection of identical and indistinguishable particles can be distributed in this way. The idea was later adopted and extended by Albert Einstein in collaboration with Bose.

Bose–Einstein statistics apply only to particles that are not restricted by the Pauli exclusion principle. Particles that follow Bose–Einstein statistics are called bosons and have integer spin. In contrast, particles that follow Fermi–Dirac statistics are called fermions and have half-integer spin.

Bose–Einstein distribution

At low temperatures, bosons behave differently from fermions (which obey Fermi–Dirac statistics): an unlimited number of them can "condense" into the same energy state. This property gives rise to a special state of matter, the Bose–Einstein condensate. Fermi–Dirac and Bose–Einstein statistics apply when quantum effects are important and the particles are "indistinguishable". Quantum effects appear if the concentration of particles satisfies

[math]\displaystyle{ \frac{N}{V} \ge n_q, }[/math]

where N is the number of particles, V is the volume, and [math]\displaystyle{ n_q }[/math] is the quantum concentration, for which the interparticle distance is equal to the thermal de Broglie wavelength, so that the wavefunctions of the particles are barely overlapping.
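As a rough numerical illustration of this criterion, the following Python sketch evaluates the quantum concentration and compares it with a given number density. It assumes the common convention λ = h / √(2π m k_B T) for the thermal de Broglie wavelength, which the text above does not spell out, and takes n_q = 1/λ³. The function names and the helium-4 example mass are illustrative choices, not part of the source.

```python
import math

H = 6.62607015e-34        # Planck constant in J s (exact SI value)
K_B = 1.380649e-23        # Boltzmann constant in J/K (exact SI value)
AMU = 1.66053906660e-27   # atomic mass unit in kg


def thermal_wavelength(mass_kg, temperature_k):
    """Thermal de Broglie wavelength, assuming lambda = h / sqrt(2 pi m k_B T)."""
    return H / math.sqrt(2.0 * math.pi * mass_kg * K_B * temperature_k)


def quantum_concentration(mass_kg, temperature_k):
    """Quantum concentration n_q = 1 / lambda^3 (one particle per cubic wavelength)."""
    return thermal_wavelength(mass_kg, temperature_k) ** -3


def is_quantum_regime(number_density, mass_kg, temperature_k):
    """True when N/V >= n_q, i.e. when quantum statistics are expected to matter."""
    return number_density >= quantum_concentration(mass_kg, temperature_k)


# Illustrative input: a helium-4 atom (about 4.0026 atomic mass units).
m_he4 = 4.0026 * AMU
for temperature in (1.0, 300.0):
    n_q = quantum_concentration(m_he4, temperature)
    print(f"T = {temperature:g} K  ->  n_q = {n_q:.3e} per cubic metre")
```

Because n_q grows as T^(3/2), a gas of fixed density moves from the quantum regime to the classical regime as it is heated.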

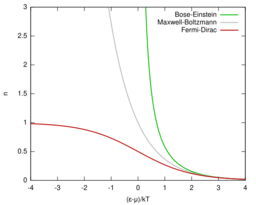

Fermi–Dirac statistics applies to fermions (particles that obey the Pauli exclusion principle), and Bose–Einstein statistics applies to bosons. As the quantum concentration depends on temperature, most systems at high temperatures obey the classical (Maxwell–Boltzmann) limit, unless they also have a very high density, as for a white dwarf. Both Fermi–Dirac and Bose–Einstein become Maxwell–Boltzmann statistics at high temperature or at low concentration.

Bose–Einstein statistics was introduced for photons in 1924 by Bose and generalized to atoms by Einstein in 1924–25.

The expected number of particles in an energy state i for Bose–Einstein statistics is:

[math]\displaystyle{ \bar{n}_i = \frac{g_i}{e^{(\varepsilon_i-\mu) / k_\text{B} T} - 1} }[/math]

with [math]\displaystyle{ \varepsilon_i > \mu }[/math] and where [math]\displaystyle{ n_i }[/math] is the occupation number (the number of particles) in state i, [math]\displaystyle{ g_i }[/math] is the degeneracy of energy level i, [math]\displaystyle{ \varepsilon_i }[/math] is the energy of the i-th state, μ is the chemical potential (zero for a photon gas), [math]\displaystyle{ k_\text{B} }[/math] is the Boltzmann constant, and T is the absolute temperature.
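The expression can be evaluated directly. The sketch below is a minimal Python version; the function name and argument names are hypothetical, and energies are assumed to be given in the same units as k_B T.

```python
import math


def bose_einstein_occupation(energy, mu, kT, degeneracy=1.0):
    """Expected occupation g / (exp((energy - mu) / kT) - 1).

    The distribution is only defined for energy > mu.
    """
    if energy <= mu:
        raise ValueError("Bose-Einstein occupation requires energy > mu")
    # expm1(x) computes exp(x) - 1 accurately even when x is small.
    return degeneracy / math.expm1((energy - mu) / kT)


# Photon-gas style example (mu = 0), with energies measured in units of kT.
for energy in (0.1, 1.0, 5.0):
    print(energy, bose_einstein_occupation(energy, mu=0.0, kT=1.0))
```

Using `math.expm1` rather than `math.exp(x) - 1` avoids loss of precision for states close to the chemical potential, where the occupation becomes large.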

The variance of this distribution [math]\displaystyle{ V(n) }[/math] is calculated directly from the expression above for the average number.[1]

[math]\displaystyle{ V(n) = k_\text{B}T\frac{\partial}{\partial \mu}\bar{n}_i = \langle n\rangle(1+\langle n\rangle) = \bar{n} + \bar{n}^2 }[/math]
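The relation between the derivative and the closed form can be checked numerically. The following sketch assumes a single non-degenerate state (g_i = 1), approximates the derivative with respect to μ by a central finite difference, and compares it with n̄ + n̄². The helper names and the step size are illustrative assumptions.

```python
import math


def mean_occupation(energy, mu, kT):
    """Mean Bose-Einstein occupation of a single state (degeneracy 1)."""
    return 1.0 / math.expm1((energy - mu) / kT)


def variance_from_derivative(energy, mu, kT, step=1e-6):
    """Estimate kT * d(mean)/d(mu) with a central finite difference."""
    upper = mean_occupation(energy, mu + step, kT)
    lower = mean_occupation(energy, mu - step, kT)
    return kT * (upper - lower) / (2.0 * step)


energy, mu, kT = 1.0, 0.0, 1.0
n_bar = mean_occupation(energy, mu, kT)
print("finite difference:", variance_from_derivative(energy, mu, kT))
print("n + n^2          :", n_bar + n_bar ** 2)
```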

For comparison, the average number of fermions with energy [math]\displaystyle{ \varepsilon_i }[/math] given by Fermi–Dirac particle-energy distribution has a similar form:

[math]\displaystyle{ \bar{n}_i(\varepsilon_i) = \frac{g_i}{e^{(\varepsilon_i - \mu)/k_\text{B}T} + 1}. }[/math]

As mentioned above, both the Bose–Einstein distribution and the Fermi–Dirac distribution approach the Maxwell–Boltzmann distribution in the limit of high temperature and low particle density, without the need for any ad hoc assumptions:

- In the limit of low particle density, [math]\displaystyle{ \bar{n}_i = \frac{g_i}{e^{(\varepsilon_i-\mu)/k_\text{B}T}\pm 1} \ll 1 }[/math], therefore [math]\displaystyle{ e^{(\varepsilon_i-\mu)/k_\text{B}T} \pm 1 \gg 1 }[/math] or equivalently [math]\displaystyle{ e^{(\varepsilon_i-\mu)/k_\text{B}T} \gg 1 }[/math]. In that case, [math]\displaystyle{ \bar{n}_i \approx \frac{g_i}{e^{(\varepsilon_i-\mu)/k_\text{B}T}} = g_i e^{-(\varepsilon_i - \mu)/k_\text{B} T} }[/math], which is the result from Maxwell–Boltzmann statistics.

- In the limit of high temperature, the particles are distributed over a large range of energy values, therefore the occupancy of each state (especially the high-energy ones with [math]\displaystyle{ \varepsilon_i - \mu \gg k_\text{B}T }[/math]) is again very small, [math]\displaystyle{ \bar{n}_i = \frac{g_i}{e^{(\varepsilon_i-\mu)/k_\text{B}T} \pm 1} \ll 1 }[/math]. This again reduces to Maxwell–Boltzmann statistics.
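The convergence of the three distributions can be seen numerically. The sketch below compares the per-state occupations (taking g_i = 1) as a function of the dimensionless variable x = (ε_i − μ)/k_B T; the function name and the sample values of x are illustrative.

```python
import math


def occupation(x, kind):
    """Per-state occupation as a function of x = (energy - mu) / kT."""
    if kind == "BE":   # Bose-Einstein
        return 1.0 / math.expm1(x)
    if kind == "FD":   # Fermi-Dirac
        return 1.0 / (math.exp(x) + 1.0)
    if kind == "MB":   # Maxwell-Boltzmann
        return math.exp(-x)
    raise ValueError(f"unknown distribution: {kind}")


for x in (0.5, 2.0, 5.0, 10.0):
    be, fd, mb = (occupation(x, kind) for kind in ("BE", "FD", "MB"))
    print(f"x={x:5.1f}  BE={be:.6e}  MB={mb:.6e}  FD={fd:.6e}")
```

For every x > 0 the Bose–Einstein value lies above the Maxwell–Boltzmann value and the Fermi–Dirac value lies below it, with the three agreeing ever more closely as x grows.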

In addition to reducing to the Maxwell–Boltzmann distribution in the limit of high [math]\displaystyle{ T }[/math] and low density, Bose–Einstein statistics also reduces to the Rayleigh–Jeans law for low-energy states with [math]\displaystyle{ \varepsilon_i - \mu \ll k_\text{B}T }[/math], namely

[math]\displaystyle{ \begin{align} \bar{n}_i & = \frac{g_i}{e^{(\varepsilon_i-\mu)/k_{\text{B}}T}-1} \\ &\approx \frac{g_i}{(\varepsilon_i-\mu)/k_\text{B}T} = \frac{g_i k_\text{B}T}{\varepsilon_i - \mu}. \end{align} }[/math]
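The approximation follows from expanding the exponential to first order, e^x − 1 ≈ x. A short numerical check, again per state (g_i = 1) and in terms of x = (ε_i − μ)/k_B T, shows how quickly it becomes accurate; the function names are illustrative.

```python
import math


def bose_einstein(x):
    """Exact per-state Bose-Einstein occupation, x = (energy - mu) / kT."""
    return 1.0 / math.expm1(x)


def rayleigh_jeans(x):
    """Low-energy approximation 1 / x, i.e. kT / (energy - mu)."""
    return 1.0 / x


for x in (1.0, 0.1, 0.01, 0.001):
    exact = bose_einstein(x)
    approx = rayleigh_jeans(x)
    rel_error = (approx - exact) / exact
    print(f"x={x:g}  exact={exact:.6g}  approx={approx:.6g}  rel. error={rel_error:.3g}")
```

The relative error shrinks roughly in proportion to x, consistent with the next term in the expansion 1/(e^x − 1) = 1/x − 1/2 + ….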

History

Władysław Natanson in 1911 concluded that Planck's law requires indistinguishability of "units of energy", although he did not frame this in terms of Einstein's light quanta.[2][3]

While presenting a lecture at the University of Dhaka (in what was then British India and is now Bangladesh) on the theory of radiation and the ultraviolet catastrophe, Satyendra Nath Bose intended to show his students that the contemporary theory was inadequate, because it predicted results not in accordance with experiment. During this lecture, Bose committed an error in applying the theory, which unexpectedly gave a prediction that agreed with experiment. The error was a simple mistake, similar to arguing that flipping two fair coins will produce two heads one-third of the time, that would appear obviously wrong to anyone with a basic understanding of statistics (remarkably, this error resembled the famous blunder by d'Alembert known from his Croix ou Pile article[4][5]). However, the results it predicted agreed with experiment, and Bose realized it might not be a mistake after all. For the first time, he took the position that the Maxwell–Boltzmann distribution would not be true for all microscopic particles at all scales. Thus, he studied the probability of finding particles in various states in phase space, where each state is a small patch with phase volume [math]\displaystyle{ h^3 }[/math], and the position and momentum of the particles are not kept separate but are considered as one variable.

Bose adapted this lecture into a short article called "Planck's law and the hypothesis of light quanta"[6][7] and submitted it to the Philosophical Magazine. However, the referee's report was negative, and the paper was rejected. Undaunted, he sent the manuscript to Albert Einstein requesting publication in the Zeitschrift für Physik. Einstein immediately agreed, personally translated the article from English into German (Bose had earlier translated Einstein's article on the general theory of relativity from German to English), and saw to it that it was published. Bose's theory achieved respect when Einstein sent his own paper in support of Bose's to Zeitschrift für Physik, asking that they be published together. The paper came out in 1924.[8]

The reason Bose produced accurate results was that since photons are indistinguishable from each other, one cannot treat any two photons having equal quantum numbers (e.g., polarization and momentum vector) as being two distinct identifiable photons. Bose originally had a factor of 2 for the possible spin states, but Einstein changed it to polarization.[9] By analogy, if in an alternate universe coins were to behave like photons and other bosons, the probability of producing two heads would indeed be one-third, and so would the probability of getting one head and one tail, which equals one-half for conventional (classical, distinguishable) coins. Bose's "error" leads to what is now called Bose–Einstein statistics.
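The coin analogy amounts to two different ways of counting outcomes. The sketch below enumerates both cases in Python, assuming that every distinct outcome is equally likely in each counting scheme; the variable and function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations_with_replacement, product

faces = ("H", "T")

# Distinguishable coins: ordered pairs HH, HT, TH, TT.
classical_outcomes = list(product(faces, repeat=2))

# Indistinguishable "bosonic" coins: unordered pairs HH, HT, TT.
bosonic_outcomes = list(combinations_with_replacement(faces, 2))


def probability(outcomes, predicate):
    """Fraction of equally weighted outcomes that satisfy the predicate."""
    hits = sum(1 for outcome in outcomes if predicate(outcome))
    return Fraction(hits, len(outcomes))


def two_heads(outcome):
    return outcome == ("H", "H")


def one_of_each(outcome):
    return set(outcome) == {"H", "T"}


print("classical:", probability(classical_outcomes, two_heads),
      probability(classical_outcomes, one_of_each))
print("bosonic:  ", probability(bosonic_outcomes, two_heads),
      probability(bosonic_outcomes, one_of_each))
```

In the distinguishable case the mixed outcome is counted twice (HT and TH), which is exactly the double counting that indistinguishability removes.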

Bose and Einstein extended the idea to atoms, which led to the prediction of the Bose–Einstein condensate, a dense collection of bosons (particles with integer spin, named after Bose). Its existence was demonstrated experimentally in 1995.

Derivation

Derivation from the microcanonical ensemble

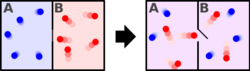

In the microcanonical ensemble, one considers a system with fixed energy, volume, and number of particles. We take a system composed of [math]\displaystyle{ N = \sum_i n_i }[/math] identical bosons, [math]\displaystyle{ n_i }[/math] of which have energy [math]\displaystyle{ \varepsilon_i }[/math] and are distributed over [math]\displaystyle{ g_i }[/math] levels or states with the same energy [math]\displaystyle{ \varepsilon_i }[/math]; that is, [math]\displaystyle{ g_i }[/math] is the degeneracy associated with energy [math]\displaystyle{ \varepsilon_i }[/math]. The total energy is [math]\displaystyle{ E = \sum_i n_i \varepsilon_i }[/math]. Calculating the number of arrangements of [math]\displaystyle{ n_i }[/math] particles distributed among [math]\displaystyle{ g_i }[/math] states is a problem of combinatorics. Since particles are indistinguishable in the quantum mechanical context here, the number of ways of arranging [math]\displaystyle{ n_i }[/math] particles in [math]\displaystyle{ g_i }[/math] boxes (for the [math]\displaystyle{ i }[/math]th energy level) is (see image):

[math]\displaystyle{ w_{i,\text{BE}} = \frac{(n_i+g_i-1)!}{n_i! (g_i-1)!} = C^{n_i+g_i-1}_{n_i}, }[/math]

where [math]\displaystyle{ C^m_k }[/math] is the k-combination of a set with m elements. The total number of arrangements in an ensemble of bosons is simply the product of the binomial coefficients [math]\displaystyle{ C^{n_i+g_i-1}_{n_i} }[/math] above over all the energy levels, i.e.

[math]\displaystyle{ W_\text{BE} =\prod_i w_{i,\text{BE}}=\prod_i\frac{(n_i +g_i -1)!}{(g_i-1)! n_i!}. }[/math]
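The counting formula can be verified by brute force for small numbers. The following sketch compares the binomial coefficient with an explicit enumeration of the multisets of occupied states and then forms the product over levels; the function names and the sample occupations are illustrative.

```python
from itertools import combinations_with_replacement
from math import comb, prod


def ways_single_level(n, g):
    """Number of ways to place n indistinguishable bosons in g states."""
    return comb(n + g - 1, n)


def ways_brute_force(n, g):
    """Same count by listing every multiset of n state labels drawn from g states."""
    return sum(1 for _ in combinations_with_replacement(range(g), n))


def total_ways(occupations, degeneracies):
    """Product of the single-level counts over all energy levels."""
    return prod(ways_single_level(n, g) for n, g in zip(occupations, degeneracies))


for n, g in ((3, 2), (2, 3), (4, 4)):
    print(n, g, ways_single_level(n, g), ways_brute_force(n, g))

print(total_ways([3, 2], [2, 3]))
```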

The maximum number of arrangements determining the corresponding occupation number [math]\displaystyle{ n_i }[/math] is obtained by maximizing the entropy, or equivalently, setting [math]\displaystyle{ \mathrm{d}(\ln W_\text{BE}) = 0 }[/math] and taking the subsidiary conditions [math]\displaystyle{ N=\sum n_i, E=\sum_i n_i\varepsilon_i }[/math] into account (as Lagrange multipliers).[10] The result for [math]\displaystyle{ n_i\gg 1 }[/math], [math]\displaystyle{ g_i\gg 1 }[/math], [math]\displaystyle{ n_i/g_i=O(1) }[/math] is the Bose–Einstein distribution.
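The maximization can be sketched as follows. This is an outline under the stated assumptions (Stirling's approximation, and g_i − 1 ≈ g_i for large g_i); the multiplier symbols α and β are introduced here for illustration and are identified with the thermodynamic quantities only at the end.

```latex
\begin{align}
\ln W_\text{BE} &= \sum_i \left[ \ln (n_i+g_i-1)! - \ln n_i! - \ln (g_i-1)! \right] \\
 &\approx \sum_i \left[ (n_i+g_i)\ln(n_i+g_i) - n_i \ln n_i - g_i \ln g_i \right], \\
0 &= \frac{\partial}{\partial n_i}\left[ \ln W_\text{BE} - \alpha \sum_j n_j - \beta \sum_j n_j \varepsilon_j \right]
   = \ln\frac{n_i+g_i}{n_i} - \alpha - \beta\varepsilon_i, \\
n_i &= \frac{g_i}{e^{\alpha + \beta\varepsilon_i} - 1},
 \qquad \beta = \frac{1}{k_\text{B}T}, \quad \alpha = -\frac{\mu}{k_\text{B}T}.
\end{align}
```

With these identifications of the multipliers, the last line is the Bose–Einstein distribution given earlier.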

Derivation from the grand canonical ensemble

The Bose–Einstein distribution, which applies only to a quantum system of non-interacting bosons, is naturally derived from the grand canonical ensemble without any approximations.[11] In this ensemble, the system is able to exchange energy and particles with a reservoir (temperature T and chemical potential μ fixed by the reservoir).

Because the particles do not interact, each available single-particle level (with energy ε) forms a separate thermodynamic system in contact with the reservoir. That is, the particles within the overall system that occupy a given single-particle state form a sub-ensemble that is itself a grand canonical ensemble; hence, it may be analysed through the construction of a grand partition function.

Every single-particle state is of a fixed energy, [math]\displaystyle{ \varepsilon }[/math]. As the sub-ensemble associated with a single-particle state varies by the number of particles only, it is clear that the total energy of the sub-ensemble is also directly proportional to the number of particles in the single-particle state; where [math]\displaystyle{ N }[/math] is the number of particles, the total energy of the sub-ensemble will then be [math]\displaystyle{ N\varepsilon }[/math]. Beginning with the standard expression for a grand partition function and replacing [math]\displaystyle{ E }[/math] with [math]\displaystyle{ N \varepsilon }[/math], the grand partition function takes the form

[math]\displaystyle{ \mathcal Z = \sum_N \exp((N\mu - N\varepsilon)/k_\text{B} T) = \sum_N \exp(N(\mu - \varepsilon)/k_\text{B} T) }[/math]

This formula applies to fermionic systems as well as bosonic systems. Fermi–Dirac statistics arises when considering the effect of the Pauli exclusion principle: whilst the number of fermions occupying the same single-particle state can only be either 1 or 0, the number of bosons occupying a single particle state may be any integer. Thus, the grand partition function for bosons can be considered a geometric series and may be evaluated as such:

[math]\displaystyle{ \begin{align}\mathcal Z & = \sum_{N=0}^\infty \exp(N(\mu - \varepsilon)/k_\text{B} T) = \sum_{N=0}^\infty [\exp((\mu - \varepsilon)/k_\text{B}T)]^N \\ & = \frac{1}{1 - \exp((\mu - \varepsilon)/k_\text{B} T)}.\end{align} }[/math]

Note that the geometric series is convergent only if [math]\displaystyle{ e^{(\mu - \varepsilon)/k_\text{B}T}\lt 1 }[/math]. This must hold for every level, including the case where [math]\displaystyle{ \varepsilon = 0 }[/math]. This implies that the chemical potential for the Bose gas must be negative, i.e., [math]\displaystyle{ \mu\lt 0 }[/math], whereas the Fermi gas is allowed to take both positive and negative values for the chemical potential.[12]
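The convergence condition can be illustrated numerically by comparing truncated sums with the closed form. In the sketch below the function names and the sample parameters are illustrative, and energies are expressed in units of k_B T.

```python
import math


def grand_partition_closed_form(energy, mu, kT):
    """Closed form 1 / (1 - r) with r = exp((mu - energy) / kT); needs r < 1."""
    r = math.exp((mu - energy) / kT)
    if r >= 1.0:
        raise ValueError("geometric series diverges unless mu < energy")
    return 1.0 / (1.0 - r)


def grand_partition_partial_sum(energy, mu, kT, n_max):
    """Sum of r**N for N = 0 .. n_max."""
    r = math.exp((mu - energy) / kT)
    return sum(r ** n for n in range(n_max + 1))


energy, mu, kT = 0.0, -0.5, 1.0
for n_max in (5, 20, 200):
    print(n_max, grand_partition_partial_sum(energy, mu, kT, n_max))
print("closed form:", grand_partition_closed_form(energy, mu, kT))
```

For μ ≥ ε the partial sums grow without bound, which is why the closed form is only meaningful for μ below the lowest single-particle energy.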

The average particle number for that single-particle substate is given by

[math]\displaystyle{ \langle N\rangle = k_\text{B} T \frac{1}{\mathcal Z} \left(\frac{\partial \mathcal Z}{\partial \mu}\right)_{V,T} = \frac{1}{\exp((\varepsilon-\mu)/k_\text{B} T)-1} }[/math]

This result applies for each single-particle level and thus forms the Bose–Einstein distribution for the entire state of the system.[13][14]
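The differentiation behind the average occupation can be written out explicitly, starting from the closed form of the grand partition function for a single level:

```latex
\begin{align}
\ln \mathcal Z &= -\ln\left(1 - e^{(\mu-\varepsilon)/k_\text{B}T}\right), \\
\left(\frac{\partial \ln \mathcal Z}{\partial \mu}\right)_{V,T}
  &= \frac{1}{k_\text{B}T}\,\frac{e^{(\mu-\varepsilon)/k_\text{B}T}}{1 - e^{(\mu-\varepsilon)/k_\text{B}T}}, \\
\langle N\rangle = k_\text{B}T \left(\frac{\partial \ln \mathcal Z}{\partial \mu}\right)_{V,T}
  &= \frac{e^{(\mu-\varepsilon)/k_\text{B}T}}{1 - e^{(\mu-\varepsilon)/k_\text{B}T}}
   = \frac{1}{e^{(\varepsilon-\mu)/k_\text{B}T} - 1}.
\end{align}
```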

The variance in particle number, [math]\displaystyle{ \sigma_N^2 = \langle N^2 \rangle - \langle N \rangle^2 }[/math], is:

[math]\displaystyle{ \sigma_N^2 = k_\text{B} T \left(\frac{d\langle N\rangle}{d\mu}\right)_{V,T} = \frac{\exp((\varepsilon-\mu)/k_\text{B} T)}{(\exp((\varepsilon-\mu)/k_\text{B} T)-1)^2} = \langle N\rangle(1 + \langle N\rangle). }[/math]

As a result, for highly occupied states the standard deviation of the particle number of an energy level is very large, slightly larger than the particle number itself: [math]\displaystyle{ \sigma_N \approx \langle N\rangle }[/math]. This large uncertainty is due to the fact that the probability distribution for the number of bosons in a given energy level is a geometric distribution; somewhat counterintuitively, the most probable value for N is always 0. (In contrast, classical particles have instead a Poisson distribution in particle number for a given state, with a much smaller uncertainty of [math]\displaystyle{ \sigma_{N,{\rm classical}} = \sqrt{\langle N\rangle} }[/math], and with the most-probable N value being near [math]\displaystyle{ \langle N \rangle }[/math].)
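These statements can be checked from the probability distributions themselves. The sketch below builds the geometric distribution P(N) = (1 − r) r^N with r = ⟨N⟩/(1 + ⟨N⟩) and a Poisson distribution with the same mean, then compares their means, variances, and most probable values. The truncation at n_max and the function names are illustrative choices.

```python
import math


def boson_number_pmf(mean, n_max):
    """Geometric distribution P(N) = (1 - r) r^N with r = mean / (1 + mean)."""
    r = mean / (1.0 + mean)
    return [(1.0 - r) * r ** n for n in range(n_max + 1)]


def poisson_pmf(mean, n_max):
    """Poisson distribution built by recurrence to avoid huge factorials."""
    pmf = [math.exp(-mean)]
    for n in range(1, n_max + 1):
        pmf.append(pmf[-1] * mean / n)
    return pmf


def moments(pmf):
    """Return (mean, variance) of a distribution given as a list of P(N)."""
    mean = sum(n * p for n, p in enumerate(pmf))
    variance = sum((n - mean) ** 2 * p for n, p in enumerate(pmf))
    return mean, variance


def mode(pmf):
    """Most probable value of N."""
    return max(range(len(pmf)), key=pmf.__getitem__)


target_mean = 5.0
for label, pmf in (("bosons", boson_number_pmf(target_mean, 400)),
                   ("classical", poisson_pmf(target_mean, 400))):
    mean, variance = moments(pmf)
    print(f"{label:9s} mean={mean:.4f} std={math.sqrt(variance):.4f} mode={mode(pmf)}")
```

The truncation is harmless here because both distributions decay rapidly well before N reaches n_max for the chosen mean.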

Derivation in the canonical approach

It is also possible to derive approximate Bose–Einstein statistics in the canonical ensemble. These derivations are lengthy and only yield the above results in the asymptotic limit of a large number of particles. The reason is that the total number of bosons is fixed in the canonical ensemble. The Bose–Einstein distribution in this case can be derived as in most texts by maximization, but the mathematically best derivation is by the Darwin–Fowler method of mean values as emphasized by Dingle.[15] See also Müller-Kirsten.[10] The fluctuations of the ground state in the condensed region are however markedly different in the canonical and grand-canonical ensembles.[16]

Interdisciplinary applications

Viewed as a pure probability distribution, the Bose–Einstein distribution has found application in other fields:

- In recent years, Bose–Einstein statistics has also been used as a method for term weighting in information retrieval. The method is one of a collection of DFR ("Divergence From Randomness") models,[18] the basic notion being that Bose–Einstein statistics may be a useful indicator in cases where a particular term and a particular document have a significant relationship that would not have occurred purely by chance. Source code for implementing this model is available from the Terrier project at the University of Glasgow.

- The evolution of many complex systems, including the World Wide Web, business, and citation networks, is encoded in the dynamic web describing the interactions between the system's constituents. Despite their irreversible and nonequilibrium nature these networks follow Bose statistics and can undergo Bose–Einstein condensation. Addressing the dynamical properties of these nonequilibrium systems within the framework of equilibrium quantum gases predicts that the "first-mover-advantage", "fit-get-rich" (FGR) and "winner-takes-all" phenomena observed in competitive systems are thermodynamically distinct phases of the underlying evolving networks.[19]

See also

- Bose–Einstein correlations

- Bose–Einstein condensate

- Bose gas

- Einstein solid

- Higgs boson

- Parastatistics

- Planck's law of black body radiation

- Superconductivity

- Fermi–Dirac statistics

- Maxwell–Boltzmann statistics

Notes

- ↑ Pearsall, Thomas (2020). Quantum Photonics, 2nd edition. Graduate Texts in Physics. Springer. doi:10.1007/978-3-030-47325-9. ISBN 978-3-030-47324-2. https://www.springer.com/us/book/9783030473242.

- ↑ Jammer, Max (1966). The conceptual development of quantum mechanics. McGraw-Hill. p. 51. ISBN 0-88318-617-9.

- ↑ Passon, Oliver; Grebe-Ellis, Johannes (2017-05-01). "Planck's radiation law, the light quantum, and the prehistory of indistinguishability in the teaching of quantum mechanics". European Journal of Physics 38 (3): 035404. doi:10.1088/1361-6404/aa6134. ISSN 0143-0807. Bibcode: 2017EJPh...38c5404P. https://iopscience.iop.org/article/10.1088/1361-6404/aa6134.

- ↑ d'Alembert, Jean (1754). "Croix ou pile" (in fr). L'Encyclopédie 4.

- ↑ d'Alembert, Jean (1754). "Croix ou pile". http://www.cs.xu.edu/math/Sources/Dalembert/croix_ou_pile.pdf.

- ↑ See p. 14, note 3, of the thesis: Michelangeli, Alessandro (October 2007). Bose–Einstein condensation: Analysis of problems and rigorous results (PDF) (Ph.D.). International School for Advanced Studies. Archived (PDF) from the original on 3 November 2018. Retrieved 14 February 2019.

- ↑ Bose (2 July 1924). "Planck's law and the hypothesis of light quanta" (PostScript). University of Oldenburg. http://www.condmat.uni-oldenburg.de/TeachingSP/bose.ps.

- ↑ Bose (1924), "Plancks Gesetz und Lichtquantenhypothese" (in de), Zeitschrift für Physik 26 (1): 178–181, doi:10.1007/BF01327326, Bibcode: 1924ZPhy...26..178B

- ↑ [1]

- ↑ H. J. W. Müller-Kirsten, Basics of Statistical Physics, 2nd ed., World Scientific (2013), ISBN 978-981-4449-53-3.

- ↑ Srivastava, R. K.; Ashok, J. (2005). "Chapter 7". Statistical Mechanics. New Delhi: PHI Learning Pvt. Ltd.. ISBN 9788120327825.

- ↑ Landau, L. D., Lifšic, E. M., Lifshitz, E. M., & Pitaevskii, L. P. (1980). Statistical physics (Vol. 5). Pergamon Press.

- ↑ "Chapter 6". Statistical Mechanics. January 2005. ISBN 9788120327825.

- ↑ The BE distribution can be derived also from thermal field theory.

- ↑ R. B. Dingle, Asymptotic Expansions: Their Derivation and Interpretation, Academic Press (1973), pp. 267–271.

- ↑ Ziff R. M.; Kac, M.; Uhlenbeck, G. E. (1977). "The ideal Bose–Einstein gas, revisited". Physics Reports 32: 169–248.

- ↑ See McQuarrie in citations

- ↑ Amati, G.; C. J. Van Rijsbergen (2002). "Probabilistic models of information retrieval based on measuring the divergence from randomness". ACM TOIS 20 (4): 357–389.

- ↑ Bianconi, G.; Barabási, A.-L. (2001). "Bose–Einstein Condensation in Complex Networks". Physical Review Letters 86: 5632–5635.

References

- Annett, James F. (2004). Superconductivity, Superfluids and Condensates. New York: Oxford University Press. ISBN 0-19-850755-0.

- Carter, Ashley H. (2001). Classical and Statistical Thermodynamics. Upper Saddle River, New Jersey: Prentice Hall. ISBN 0-13-779208-5.

- Griffiths, David J. (2005). Introduction to Quantum Mechanics (2nd ed.). Upper Saddle River, New Jersey: Pearson, Prentice Hall. ISBN 0-13-191175-9.

- McQuarrie, Donald A. (2000). Statistical Mechanics (1st ed.). Sausalito, California 94965: University Science Books. p. 55. ISBN 1-891389-15-7. https://archive.org/details/statisticalmecha00mcqu_0/page/55.
