Helmholtz free energy

In thermodynamics, the Helmholtz free energy (or Helmholtz energy) is a thermodynamic potential that measures the useful work obtainable from a closed thermodynamic system at constant temperature (isothermal). The decrease in the Helmholtz energy during a process equals the maximum amount of work that the system can perform in a thermodynamic process in which temperature is held constant. At constant temperature and volume, the Helmholtz free energy is minimized at equilibrium.

In contrast, the Gibbs free energy or free enthalpy is the thermodynamic potential most commonly used (especially in chemistry) for applications that occur at constant pressure. The Helmholtz free energy is preferred when pressure is not constant: in explosives research, for example, it is often used because explosive reactions by their nature induce pressure changes. It is also frequently used to define fundamental equations of state of pure substances.

The concept of free energy was developed by Hermann von Helmholtz, a German physicist, and first presented in 1882 in a lecture called "On the thermodynamics of chemical processes".[1] From the German word Arbeit (work), the International Union of Pure and Applied Chemistry (IUPAC) recommends the symbol A and the name Helmholtz energy.[2] In physics, the symbol F is also used in reference to free energy or Helmholtz function.

Definition

The Helmholtz free energy is defined as[3]

$$A \equiv U - TS,$$

where

- A is the Helmholtz free energy[2] (sometimes also called F, particularly in the field of physics) (SI: joules, CGS: ergs),

- U is the internal energy of the system (SI: joules, CGS: ergs),

- T is the absolute temperature (kelvins) of the surroundings, modelled as a heat bath,

- S is the entropy of the system (SI: joules per kelvin, CGS: ergs per kelvin).

The Helmholtz energy is the Legendre transformation of the internal energy U, in which temperature replaces entropy as the independent variable.
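As a short worked illustration of this statement (a sketch, assuming a simple system whose internal energy $U(S, V)$ is a convex function of $S$), the Legendre transformation can be written out explicitly:

```latex
A(T, V) = \min_{S}\,\bigl[\, U(S, V) - T S \,\bigr],
\qquad \text{where the minimizing } S \text{ satisfies} \qquad
T = \left(\frac{\partial U}{\partial S}\right)_{V}.
```

Solving the condition on the right for $S(T, V)$ and substituting it back gives $A$ as a function of $T$ and $V$ alone, which is the sense in which temperature replaces entropy as the independent variable.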

Formal development

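The first law of thermodynamics in a closed system provides

$$\mathrm{d}U = \delta Q + \delta W,$$

where $U$ is the internal energy, $\delta Q$ is the energy added as heat, and $\delta W$ is the work done on the system. The second law of thermodynamics for a reversible process yields $\delta Q = T\,\mathrm{d}S$. In case of a reversible change, the work done can be expressed as $\delta W = -P\,\mathrm{d}V$ (ignoring electrical and other non-PV work) and so:

$$\mathrm{d}U = T\,\mathrm{d}S - P\,\mathrm{d}V.$$

Applying the product rule for differentiation to $\mathrm{d}(TS) = T\,\mathrm{d}S + S\,\mathrm{d}T$, it follows

$$\mathrm{d}U = \mathrm{d}(TS) - S\,\mathrm{d}T - P\,\mathrm{d}V$$

and

$$\mathrm{d}(U - TS) = -S\,\mathrm{d}T - P\,\mathrm{d}V.$$

The definition of $A = U - TS$ allows us to rewrite this as

$$\mathrm{d}A = -S\,\mathrm{d}T - P\,\mathrm{d}V.$$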

Because A is a thermodynamic function of state, this relation is also valid for a process (without electrical work or composition change) that is not reversible.
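The differential relation for $A$ can be checked numerically. The sketch below assumes a classical monatomic ideal gas, for which $A = -NkT\,[\ln(V/(N\lambda^3)) + 1]$ with thermal wavelength $\lambda = h/\sqrt{2\pi m k T}$; this model, the function names, and the argon-like particle mass are illustrative choices, not part of the article. It compares finite-difference derivatives of $A$ with the Sackur-Tetrode entropy and the ideal-gas pressure.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K
H_PLANCK = 6.62607015e-34   # Planck constant, J*s


def thermal_wavelength(T, m):
    """Thermal de Broglie wavelength of a particle of mass m at temperature T."""
    return H_PLANCK / math.sqrt(2.0 * math.pi * m * K_B * T)


def helmholtz_ideal_gas(T, V, N, m):
    """A(T, V, N) of a classical monatomic ideal gas (assumed model)."""
    lam = thermal_wavelength(T, m)
    return -N * K_B * T * (math.log(V / (N * lam**3)) + 1.0)


def entropy_sackur_tetrode(T, V, N, m):
    """Entropy of the same gas from the Sackur-Tetrode equation."""
    lam = thermal_wavelength(T, m)
    return N * K_B * (math.log(V / (N * lam**3)) + 2.5)


def pressure_ideal_gas(T, V, N):
    """Ideal-gas law P = N k T / V."""
    return N * K_B * T / V


def central_difference(f, x, h):
    """Symmetric finite-difference estimate of df/dx."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Illustrative state: about one mole of argon-like atoms near room conditions.
T0, V0, N0, M0 = 300.0, 0.0248, 6.02214076e23, 6.63e-26

# Partial derivatives of A at constant V and at constant T, respectively.
dA_dT = central_difference(lambda T: helmholtz_ideal_gas(T, V0, N0, M0), T0, 1e-3)
dA_dV = central_difference(lambda V: helmholtz_ideal_gas(T0, V, N0, M0), V0, 1e-7)

S0 = entropy_sackur_tetrode(T0, V0, N0, M0)
P0 = pressure_ideal_gas(T0, V0, N0)

print("dA/dT =", dA_dT, " -S =", -S0)
print("dA/dV =", dA_dV, " -P =", -P0)
```

The two printed pairs should agree to within the finite-difference error, which is what the coefficients of $\mathrm{d}T$ and $\mathrm{d}V$ in the differential of $A$ predict.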

Minimum free energy and maximum work principles

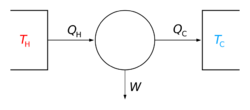

The laws of thermodynamics are only directly applicable to systems in thermal equilibrium. If we wish to describe phenomena like chemical reactions, then the best we can do is to consider suitably chosen initial and final states in which the system is in (metastable) thermal equilibrium. If the system is kept at fixed volume and is in contact with a heat bath at some constant temperature, then we can reason as follows.

Since the thermodynamical variables of the system are well defined in the initial state and the final state, the internal energy increase $\Delta U$, the entropy increase $\Delta S$, and the total amount of work that can be extracted, performed by the system, $W$, are well defined quantities. Conservation of energy implies

$$\Delta U_{\text{bath}} + \Delta U + W = 0.$$

The volume of the system is kept constant. This means that the volume of the heat bath does not change either, and we can conclude that the heat bath does not perform any work. This implies that the amount of heat that flows into the heat bath is given by

$$Q_{\text{bath}} = \Delta U_{\text{bath}} = -(\Delta U + W).$$

The heat bath remains in thermal equilibrium at temperature $T$ no matter what the system does. Therefore, the entropy change of the heat bath is

$$\Delta S_{\text{bath}} = \frac{Q_{\text{bath}}}{T} = -\frac{\Delta U + W}{T}.$$

The total entropy change is thus given by

$$\Delta S_{\text{bath}} + \Delta S = -\frac{\Delta U - T\,\Delta S + W}{T}.$$

Since the system is in thermal equilibrium with the heat bath in the initial and the final states, $T$ is also the temperature of the system in these states. The fact that the system's temperature does not change allows us to express the numerator as the free energy change of the system:

$$\Delta S_{\text{bath}} + \Delta S = -\frac{\Delta A + W}{T}.$$

Since the total change in entropy must always be greater than or equal to zero, we obtain the inequality

$$W \le -\Delta A.$$

We see that the total amount of work that can be extracted in an isothermal process is limited by the free-energy decrease, and that increasing the free energy in a reversible process requires work to be done on the system. If no work is extracted from the system, then

$$\Delta A \le 0,$$

and thus for a system kept at constant temperature and volume and not capable of performing electrical or other non-PV work, the total free energy during a spontaneous change can only decrease.
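The maximum-work principle can be illustrated with numbers. The sketch below assumes an ideal gas expanding isothermally from $V_1$ to $V_2$ in contact with a heat bath; this example and all function names are illustrative assumptions, not taken from the derivation above. For an ideal gas at fixed $T$ the internal energy is unchanged, so $\Delta A = -T\,\Delta S = -nRT\ln(V_2/V_1)$.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def delta_a_isothermal(n_mol, T, V1, V2):
    """Change in Helmholtz energy of an ideal gas at constant T.

    U does not change, so delta_A = -T * delta_S = -n R T ln(V2 / V1).
    """
    return -n_mol * R * T * math.log(V2 / V1)


def work_reversible(n_mol, T, V1, V2):
    """Work done BY the gas in a quasi-static isothermal expansion."""
    return n_mol * R * T * math.log(V2 / V1)


def work_constant_external_pressure(n_mol, T, V1, V2):
    """Work done BY the gas expanding against a fixed pressure equal to its final pressure."""
    p_ext = n_mol * R * T / V2
    return p_ext * (V2 - V1)


n_mol, T, V1, V2 = 1.0, 300.0, 1.0e-3, 2.0e-3

max_work = -delta_a_isothermal(n_mol, T, V1, V2)   # upper bound on extracted work
w_rev = work_reversible(n_mol, T, V1, V2)          # reversible path reaches the bound
w_irr = work_constant_external_pressure(n_mol, T, V1, V2)  # irreversible path falls short
w_free = 0.0                                       # free expansion extracts no work

print("bound -dA :", max_work)
print("reversible:", w_rev)
print("const p   :", w_irr)
print("free      :", w_free)
```

Only the reversible path attains $W = -\Delta A$; the two irreversible paths extract less work for the same change of state, consistent with the inequality.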

This result seems to contradict the equation $\mathrm{d}A = -S\,\mathrm{d}T - P\,\mathrm{d}V$, as keeping $T$ and $V$ constant seems to imply $\mathrm{d}A = 0$, and hence $A = \text{constant}$. In reality there is no contradiction: in a simple one-component system, to which the validity of the equation $\mathrm{d}A = -S\,\mathrm{d}T - P\,\mathrm{d}V$ is restricted, no process can occur at constant $T$ and $V$, since there is a unique $P(T, V)$ relation, and thus $T$, $V$, and $P$ are all fixed. To allow for spontaneous processes at constant $T$ and $V$, one needs to enlarge the thermodynamical state space of the system. In case of a chemical reaction, one must allow for changes in the numbers $N_j$ of particles of each type $j$. The differential of the free energy then generalizes to

$$\mathrm{d}A = -S\,\mathrm{d}T - P\,\mathrm{d}V + \sum_j \mu_j\,\mathrm{d}N_j,$$

where the $N_j$ are the numbers of particles of type $j$ and the $\mu_j$ are the corresponding chemical potentials. This equation is then again valid for both reversible and non-reversible changes. In case of a spontaneous change at constant $T$ and $V$, the last term will thus be negative.

In case there are other external parameters, the above relation further generalizes to

$$\mathrm{d}A = -S\,\mathrm{d}T - \sum_i X_i\,\mathrm{d}x_i + \sum_j \mu_j\,\mathrm{d}N_j.$$

Here the $x_i$ are the external variables, and the $X_i$ the corresponding generalized forces.

Relation to the canonical partition function

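A system kept at constant volume, temperature, and particle number is described by the canonical ensemble. The probability of finding the system in some energy eigenstate $r$ is given by

$$P_r = \frac{e^{-\beta E_r}}{Z},$$

where

- $\beta = \frac{1}{kT}$, with $k$ the Boltzmann constant,

- $E_r$ is the energy of accessible state $r$,

- $Z = \sum_i e^{-\beta E_i}$, where the sum runs over all microstates $i$.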

Z is called the partition function of the system. The fact that the system does not have a unique energy means that the various thermodynamical quantities must be defined as expectation values. In the thermodynamical limit of infinite system size, the relative fluctuations in these averages will go to zero.
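A minimal numerical sketch of the canonical distribution follows. The four energy levels and the value of $\beta$ are hypothetical, chosen only for illustration, and the function names are not from any library. Besides the probabilities, it checks the standard canonical-ensemble identity that the mean energy equals $-\partial \log Z / \partial \beta$, here estimated by a finite difference.

```python
import math


def partition_function(energies, beta):
    """Z = sum over states of exp(-beta * E)."""
    return sum(math.exp(-beta * e) for e in energies)


def probabilities(energies, beta):
    """Canonical probability of each state."""
    z = partition_function(energies, beta)
    return [math.exp(-beta * e) / z for e in energies]


def mean_energy(energies, beta):
    """Expectation value of the energy under the canonical distribution."""
    return sum(p * e for p, e in zip(probabilities(energies, beta), energies))


levels = [0.0, 1.0, 1.0, 2.5]   # hypothetical energy levels, arbitrary units
beta = 0.7                      # inverse temperature 1/(kT) in matching units

probs = probabilities(levels, beta)
u_direct = mean_energy(levels, beta)

# Same quantity from the partition function: U = -d(log Z)/d(beta).
h = 1e-6
u_from_z = -(math.log(partition_function(levels, beta + h))
             - math.log(partition_function(levels, beta - h))) / (2.0 * h)

print("probabilities:", probs)
print("U (direct)   :", u_direct)
print("U (from Z)   :", u_from_z)
```

Degenerate levels receive equal probabilities, lower levels are more probable than higher ones, and the two estimates of the mean energy agree to within the finite-difference error.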

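The average internal energy of the system is the expectation value of the energy and can be expressed in terms of $Z$ as follows:

$$U \equiv \langle E \rangle = \sum_r P_r E_r = -\frac{\partial \log Z}{\partial \beta}.$$

If the system is in state $r$, then the generalized force corresponding to an external variable $x$ is given by

$$X_r = -\frac{\partial E_r}{\partial x}.$$

The thermal average of this can be written as

$$X = \sum_r P_r X_r = \frac{1}{\beta}\frac{\partial \log Z}{\partial x}.$$

Suppose that the system has one external variable $x$. Then changing the system's temperature parameter by $\mathrm{d}\beta$ and the external variable by $\mathrm{d}x$ will lead to a change in $\log Z$:

$$\mathrm{d}(\log Z) = \frac{\partial \log Z}{\partial \beta}\,\mathrm{d}\beta + \frac{\partial \log Z}{\partial x}\,\mathrm{d}x = -U\,\mathrm{d}\beta + \beta X\,\mathrm{d}x.$$

If we write $U\,\mathrm{d}\beta$ as

$$U\,\mathrm{d}\beta = \mathrm{d}(\beta U) - \beta\,\mathrm{d}U,$$

we get

$$\mathrm{d}(\log Z) = -\mathrm{d}(\beta U) + \beta\,\mathrm{d}U + \beta X\,\mathrm{d}x.$$

This means that the change in the internal energy is given by

$$\mathrm{d}U = \frac{1}{\beta}\,\mathrm{d}(\log Z + \beta U) - X\,\mathrm{d}x.$$

In the thermodynamic limit, the fundamental thermodynamic relation should hold:

$$\mathrm{d}U = T\,\mathrm{d}S - X\,\mathrm{d}x.$$

This then implies that the entropy of the system is given by

$$S = k \log Z + \frac{U}{T} + c,$$

where $c$ is some constant. The value of $c$ can be determined by considering the limit $T \to 0$. In this limit the entropy becomes $S = k \log \Omega_0$, where $\Omega_0$ is the ground-state degeneracy. The partition function in this limit is $\Omega_0 e^{-\beta U_0}$, where $U_0$ is the ground-state energy. Thus, we see that $c = 0$ and that

$$A = -kT \log Z.$$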

Relating free energy to other variables

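Combining the definition of Helmholtz free energy

$$A = U - TS$$

along with the fundamental thermodynamic relation

$$\mathrm{d}U = T\,\mathrm{d}S - P\,\mathrm{d}V + \mu\,\mathrm{d}N,$$

one obtains $\mathrm{d}A = -S\,\mathrm{d}T - P\,\mathrm{d}V + \mu\,\mathrm{d}N$, from which one can find expressions for entropy, pressure and chemical potential:[4]

$$S = -\left(\frac{\partial A}{\partial T}\right)_{V,N}, \qquad P = -\left(\frac{\partial A}{\partial V}\right)_{T,N}, \qquad \mu = \left(\frac{\partial A}{\partial N}\right)_{T,V}.$$

These three equations, along with the free energy in terms of the partition function,

$$A = -kT \log Z,$$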

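allow an efficient way of calculating thermodynamic variables of interest given the partition function and are often used in density-of-states calculations. One can also do Legendre transformations for different systems. For example, for a system with a magnetic field or potential, it is true that

$$m = -\left(\frac{\partial A}{\partial B}\right)_{T,N}, \qquad \Phi = \left(\frac{\partial A}{\partial Q}\right)_{T,N},$$

where $m$ is the magnetic moment, $B$ the magnetic field, $\Phi$ the electric potential, and $Q$ the charge.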

Bogoliubov inequality

Computing the free energy is an intractable problem for all but the simplest models in statistical physics. A powerful approximation method is mean-field theory, which is a variational method based on the Bogoliubov inequality. This inequality can be formulated as follows.

Suppose we replace the real Hamiltonian $H$ of the model by a trial Hamiltonian $\tilde{H}$, which has different interactions and may depend on extra parameters that are not present in the original model. If we choose this trial Hamiltonian such that

$$\langle \tilde{H} \rangle = \langle H \rangle,$$

where both averages are taken with respect to the canonical distribution defined by the trial Hamiltonian $\tilde{H}$, then the Bogoliubov inequality states

$$A \le \tilde{A},$$

where $A$ is the free energy of the original Hamiltonian, and $\tilde{A}$ is the free energy of the trial Hamiltonian. We will prove this below.

By including a large number of parameters in the trial Hamiltonian and minimizing the free energy, we can expect to get a close approximation to the exact free energy.
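The variational use of the inequality can be sketched on a model small enough to solve exactly. The example below assumes two Ising spins with a hypothetical coupling `J` and field `FIELD`, and a one-parameter trial Hamiltonian of independent spins in an effective field `b`, shifted by a constant so that the trial and true Hamiltonians have the same average in the trial ensemble. The model, parameter values, and function names are illustrative assumptions. Energies are measured in units where $k = 1$.

```python
import math
from itertools import product

J, FIELD, BETA = 1.0, 0.3, 0.8          # hypothetical coupling, field, inverse temperature
STATES = list(product([-1, 1], repeat=2))  # all four configurations (s1, s2)


def energy(s):
    """True Hamiltonian: two coupled Ising spins in an external field."""
    s1, s2 = s
    return -J * s1 * s2 - FIELD * (s1 + s2)


def trial_energy(s, b):
    """Trial Hamiltonian: independent spins in an effective field b."""
    return -b * (s[0] + s[1])


def free_energy(energy_fn):
    """A = -(1/beta) * log Z for a Hamiltonian given as a function on STATES."""
    z = sum(math.exp(-BETA * energy_fn(s)) for s in STATES)
    return -math.log(z) / BETA


def trial_free_energy(b):
    """Free energy of the shifted trial Hamiltonian for effective field b."""
    weights = [math.exp(-BETA * trial_energy(s, b)) for s in STATES]
    z0 = sum(weights)
    # Constant shift making the trial and true Hamiltonians agree on average
    # in the trial ensemble, as the inequality requires.
    shift = sum(w / z0 * (energy(s) - trial_energy(s, b))
                for w, s in zip(weights, STATES))
    return free_energy(lambda s: trial_energy(s, b) + shift)


exact = free_energy(energy)

# Scan the variational parameter and keep the tightest upper bound.
grid = [i * 0.01 for i in range(-300, 301)]
bounds = [trial_free_energy(b) for b in grid]
best_bound = min(bounds)
best_b = grid[bounds.index(best_bound)]

print("exact free energy:", exact)
print("best upper bound :", best_bound, "at b =", best_b)
```

Every value of `b` gives an upper bound on the exact free energy, and minimizing over `b` gives the mean-field estimate. A richer trial family would tighten the bound further.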

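The Bogoliubov inequality is often applied in the following way. If we write the Hamiltonian as

$$H = H_0 + \Delta H,$$

where $H_0$ is some exactly solvable Hamiltonian, then we can apply the above inequality by defining

$$\tilde{H} = H_0 + \langle \Delta H \rangle_0.$$

Here we have defined $\langle X \rangle_0$ to be the average of $X$ over the canonical ensemble defined by $H_0$. Since $\tilde{H}$ defined this way differs from $H_0$ by a constant, we have in general

$$\langle X \rangle_0 = \langle X \rangle,$$

where $\langle X \rangle$ is still the average over $\tilde{H}$, as specified above. Therefore,

$$\langle \tilde{H} \rangle = \langle H_0 + \langle \Delta H \rangle \rangle = \langle H \rangle,$$

and thus the inequality

$$A \le \tilde{A}$$

holds. The free energy $\tilde{A}$ is the free energy of the model defined by $H_0$ plus $\langle \Delta H \rangle$. This means that

$$\tilde{A} = \langle H_0 \rangle_0 - T S_0 + \langle \Delta H \rangle_0 = \langle H \rangle_0 - T S_0,$$

and thus

$$A \le \langle H \rangle_0 - T S_0.$$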

Proof of the Bogoliubov inequality

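For a classical model we can prove the Bogoliubov inequality as follows. We denote the canonical probability distributions for the Hamiltonian and the trial Hamiltonian by $P_r$ and $\tilde{P}_r$, respectively. From Gibbs' inequality we know that:

$$\sum_r \tilde{P}_r \log \tilde{P}_r \ge \sum_r \tilde{P}_r \log P_r$$

holds. To see this, consider the difference between the left hand side and the right hand side. We can write this as:

$$\sum_r \tilde{P}_r \log\left(\frac{\tilde{P}_r}{P_r}\right).$$

Since

$$\log x \ge 1 - \frac{1}{x},$$

it follows that:

$$\sum_r \tilde{P}_r \log\left(\frac{\tilde{P}_r}{P_r}\right) \ge \sum_r \left(\tilde{P}_r - P_r\right) = 0,$$

where in the last step we have used that both probability distributions are normalized to 1.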
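Gibbs' inequality is easy to probe numerically. The sketch below draws pairs of random normalized distributions (the sampling scheme and function names are illustrative assumptions) and evaluates the difference between the two sides of the inequality, which should never be negative and should vanish when the two distributions coincide.

```python
import math
import random


def relative_entropy(p_trial, p):
    """sum_r p_trial[r] * log(p_trial[r] / p[r]); terms with zero weight are skipped."""
    return sum(a * math.log(a / b) for a, b in zip(p_trial, p) if a > 0.0)


def random_distribution(n, rng):
    """A normalized distribution over n states with strictly positive entries."""
    weights = [rng.random() + 1e-3 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)  # fixed seed so the run is reproducible

# Difference between the two sides of Gibbs' inequality for many random pairs.
gaps = [relative_entropy(random_distribution(6, rng), random_distribution(6, rng))
        for _ in range(1000)]

# The bound is saturated when both distributions are identical.
p_same = random_distribution(6, rng)
self_gap = relative_entropy(p_same, p_same)

print("smallest gap over random pairs:", min(gaps))
print("gap for identical distributions:", self_gap)
```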

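We can write the inequality as:

$$\langle \log \tilde{P}_r \rangle \ge \langle \log P_r \rangle,$$

where the averages are taken with respect to $\tilde{P}_r$. If we now substitute in here the expressions for the probability distributions:

$$P_r = \frac{\exp[-\beta H(r)]}{Z}$$

and

$$\tilde{P}_r = \frac{\exp[-\beta \tilde{H}(r)]}{\tilde{Z}},$$

we get:

$$\langle -\beta \tilde{H} - \log \tilde{Z} \rangle \ge \langle -\beta H - \log Z \rangle.$$

Since the averages of $H$ and $\tilde{H}$ are, by assumption, identical we have:

$$A \le \tilde{A}.$$

Here we have used that the partition functions are constants with respect to taking averages and that the free energy is proportional to minus the logarithm of the partition function.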

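We can easily generalize this proof to the case of quantum mechanical models. We denote the eigenstates of $\tilde{H}$ by $|r\rangle$. We denote the diagonal components of the density matrices for the canonical distributions for $H$ and $\tilde{H}$ in this basis as:

$$P_r = \left\langle r \left| \frac{\exp[-\beta H]}{Z} \right| r \right\rangle$$

and

$$\tilde{P}_r = \left\langle r \left| \frac{\exp[-\beta \tilde{H}]}{\tilde{Z}} \right| r \right\rangle = \frac{\exp(-\beta \tilde{E}_r)}{\tilde{Z}},$$

where the $\tilde{E}_r$ are the eigenvalues of $\tilde{H}$.

We assume again that the averages of $H$ and $\tilde{H}$ in the canonical ensemble defined by $\tilde{H}$ are the same:

$$\langle \tilde{H} \rangle = \langle H \rangle,$$

where

$$\langle H \rangle = \sum_r \tilde{P}_r \langle r | H | r \rangle.$$

The inequality

$$\sum_r \tilde{P}_r \log \tilde{P}_r \ge \sum_r \tilde{P}_r \log P_r$$

still holds as both the $P_r$ and the $\tilde{P}_r$ sum to 1. On the left-hand side we can replace:

$$\log \tilde{P}_r = -\beta \tilde{E}_r - \log \tilde{Z}.$$

On the right-hand side we can use the inequality

$$\langle \exp(X) \rangle_r \ge \exp\left(\langle X \rangle_r\right),$$

where we have introduced the notation

$$\langle Y \rangle_r \equiv \langle r | Y | r \rangle$$

for the expectation value of the operator $Y$ in the state $r$. For a Hermitian operator $X$, this is Jensen's inequality applied to the spectral decomposition of $X$. Taking the logarithm of this inequality gives:

$$\log\left[\langle \exp(X) \rangle_r\right] \ge \langle X \rangle_r.$$

This allows us to write:

$$\log P_r = \log\left[\langle \exp(-\beta H - \log Z) \rangle_r\right] \ge \langle -\beta H - \log Z \rangle_r.$$

The fact that the averages of $H$ and $\tilde{H}$ are the same then leads to the same conclusion as in the classical case:

$$A \le \tilde{A}.$$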

Generalized Helmholtz energy

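In the more general case, the mechanical term $P\,\mathrm{d}V$ must be replaced by the product of volume, stress, and an infinitesimal strain:[5]

$$\mathrm{d}A = V \sum_{ij} \sigma_{ij}\,\mathrm{d}\varepsilon_{ij} - S\,\mathrm{d}T + \sum_i \mu_i\,\mathrm{d}N_i,$$

where $\sigma_{ij}$ is the stress tensor, and $\varepsilon_{ij}$ is the strain tensor. In the case of linear elastic materials that obey Hooke's law, the stress is related to the strain by

$$\sigma_{ij} = C_{ijkl}\,\varepsilon_{kl},$$

where we are now using Einstein notation for the tensors, in which repeated indices in a product are summed. We may integrate the expression for $\mathrm{d}A$ to obtain the Helmholtz energy:

$$A = \frac{1}{2} V C_{ijkl}\,\varepsilon_{ij}\varepsilon_{kl} - ST + \sum_i \mu_i N_i = \frac{1}{2} V \sigma_{ij}\varepsilon_{ij} - ST + \sum_i \mu_i N_i.$$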

Application to fundamental equations of state

The Helmholtz free energy function for a pure substance (together with its partial derivatives) can be used to determine all other thermodynamic properties for the substance. See, for example, the equations of state for water, as given by the IAPWS in their IAPWS-95 release.

Application to training auto-encoders

Hinton and Zemel[6] "derive an objective function for training auto-encoder based on the minimum description length (MDL) principle". "The description length of an input vector using a particular code is the sum of the code cost and reconstruction cost." Given an input vector, they define this sum to be the energy of the code. The true expected combined cost is

$$F = \sum_i p_i E_i - H,$$

"which has exactly the form of Helmholtz free energy". Here $p_i$ is the probability of choosing code $i$, $E_i$ is the energy of that code, and $H$ is the entropy of the distribution $p_i$.
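A small numerical sketch of this objective follows. It assumes the expected cost takes the form $F = \sum_i p_i E_i - H$, with $H$ the Shannon entropy of the code distribution in natural-log units (temperature 1); the three code energies and the function names are hypothetical. Under that assumption, a Boltzmann distribution over the codes gives a lower cost than either a uniform choice or always picking the cheapest code, and the minimum equals $-\log \sum_i e^{-E_i}$.

```python
import math


def free_energy(probs, energies):
    """F = sum_i p_i E_i - H, with H the Shannon entropy in natural-log units."""
    expected_energy = sum(p * e for p, e in zip(probs, energies))
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return expected_energy - entropy


def boltzmann(energies):
    """Distribution p_i proportional to exp(-E_i), i.e. temperature 1."""
    weights = [math.exp(-e) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


code_energies = [1.2, 0.4, 2.0]   # hypothetical code costs for one input vector

f_boltzmann = free_energy(boltzmann(code_energies), code_energies)
f_uniform = free_energy([1.0 / 3.0] * 3, code_energies)
f_cheapest = free_energy([0.0, 1.0, 0.0], code_energies)  # always pick the cheapest code

# Closed form for the minimum: minus the log of the partition function.
f_closed_form = -math.log(sum(math.exp(-e) for e in code_energies))

print("Boltzmann  :", f_boltzmann)
print("uniform    :", f_uniform)
print("cheapest   :", f_cheapest)
print("-log Z     :", f_closed_form)
```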

See also

- Gibbs free energy and thermodynamic free energy for thermodynamics history overview and discussion of free energy

- Grand potential

- Enthalpy

- Statistical mechanics

- Bennett acceptance ratio for an efficient way to calculate free energy differences and comparison with other methods.

References

- [1] von Helmholtz, H. (1882). Physical memoirs, selected and translated from foreign sources. Taylor & Francis.

- [2] Gold, Victor, ed. (2019). Gold Book. IUPAC. doi:10.1351/goldbook. http://goldbook.iupac.org/H02772.html. Retrieved 2012-08-19.

- [3] Levine, Ira N. (1978). Physical Chemistry. McGraw-Hill: University of Brooklyn.

- [4] "4.3 Entropy, Helmholtz Free Energy and the Partition Function". http://theory.physics.manchester.ac.uk/~judith/stat_therm/node70.html.

- [5] Landau, L. D. (1986). Theory of Elasticity (Course of Theoretical Physics Volume 7). Translated from Russian by J. B. Sykes and W. H. Reid (Third ed.). Boston, MA: Butterworth Heinemann. ISBN 0-7506-2633-X.

- [6] Hinton, G. E.; Zemel, R. S. (1994). "Autoencoders, minimum description length and Helmholtz free energy". Advances in Neural Information Processing Systems: 3–10. https://proceedings.neurips.cc/paper/1993/file/9e3cfc48eccf81a0d57663e129aef3cb-Paper.pdf.

Further reading

- Atkins' Physical Chemistry, 7th edition, by Peter Atkins and Julio de Paula, Oxford University Press

- HyperPhysics: Helmholtz Free Energy; Helmholtz and Gibbs Free Energies
