Feynman diagram


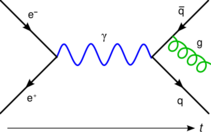

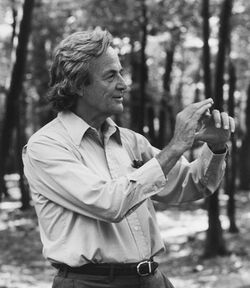

In theoretical physics, a Feynman diagram is a pictorial representation of the mathematical expressions describing the behavior and interaction of subatomic particles. The scheme is named after American physicist Richard Feynman, who introduced the diagrams in 1948.

The calculation of probability amplitudes in theoretical particle physics requires the use of large, complicated integrals over a large number of variables. Feynman diagrams instead represent these integrals graphically.

Feynman diagrams give a simple visualization of what would otherwise be an arcane and abstract formula. According to David Kaiser, "Since the middle of the 20th century, theoretical physicists have increasingly turned to this tool to help them undertake critical calculations. Feynman diagrams have revolutionized nearly every aspect of theoretical physics."[1]

While the diagrams apply primarily to quantum field theory, they can be used in other areas of physics, such as solid-state theory. Frank Wilczek wrote that the calculations that won him the 2004 Nobel Prize in Physics "would have been literally unthinkable without Feynman diagrams, as would [Wilczek's] calculations that established a route to production and observation of the Higgs particle."[2]

A Feynman diagram is a graphical representation of a perturbative contribution to the transition amplitude or correlation function of a quantum mechanical or statistical field theory. Within the canonical formulation of quantum field theory, a Feynman diagram represents a term in the Wick expansion of the perturbative S-matrix. Alternatively, the path integral formulation of quantum field theory represents the transition amplitude as a weighted sum of all possible histories of the system from the initial to the final state, in terms of either particles or fields. The transition amplitude is then given as the matrix element of the S-matrix between the initial and final states of the quantum system.

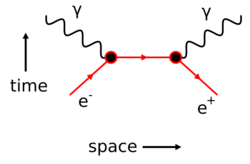

Feynman used Ernst Stueckelberg's interpretation of the positron as if it were an electron moving backward in time.[3] Thus, antiparticles are represented as moving backward along the time axis in Feynman diagrams.

Motivation and history

When calculating scattering cross-sections in particle physics, the interaction between particles can be described by starting from a free field that describes the incoming and outgoing particles, and including an interaction Hamiltonian to describe how the particles deflect one another. The amplitude for scattering is the sum of each possible interaction history over all possible intermediate particle states. The number of times the interaction Hamiltonian acts is the order of the perturbation expansion, and the time-dependent perturbation theory for fields is known as the Dyson series. When the intermediate states at intermediate times are energy eigenstates (collections of particles with a definite momentum), the series is called old-fashioned perturbation theory (or time-dependent/time-ordered perturbation theory).

The Dyson series can be alternatively rewritten as a sum over Feynman diagrams, where at each vertex both the energy and momentum are conserved, but where the length of the energy-momentum four-vector is not necessarily equal to the mass, i.e. the intermediate particles are so-called off-shell. The Feynman diagrams are much easier to keep track of than "old-fashioned" terms, because the old-fashioned way treats the particle and antiparticle contributions as separate. Each Feynman diagram is the sum of exponentially many old-fashioned terms, because each internal line can separately represent either a particle or an antiparticle. In a non-relativistic theory, there are no antiparticles and there is no doubling, so each Feynman diagram includes only one term.

Feynman gave a prescription for calculating the amplitude (the Feynman rules, below) for any given diagram from a field theory Lagrangian. Each internal line corresponds to a factor of the virtual particle's propagator; each vertex where lines meet gives a factor derived from an interaction term in the Lagrangian, and incoming and outgoing lines carry an energy, momentum, and spin.

In addition to their value as a mathematical tool, Feynman diagrams provide deep physical insight into the nature of particle interactions. Particles interact in every way available; in fact, intermediate virtual particles are allowed to propagate faster than light. The amplitude for each final state is then obtained by summing over all such possibilities, and its probability is the squared modulus of that amplitude. This is closely tied to the functional integral formulation of quantum mechanics, also invented by Feynman (see path integral formulation).

The naïve application of such calculations often produces diagrams whose amplitudes are infinite, because short-distance particle interactions, including particle self-interactions, require a careful limiting procedure. The technique of renormalization, suggested by Ernst Stueckelberg and Hans Bethe and implemented by Dyson, Feynman, Schwinger, and Tomonaga, compensates for this effect and eliminates the troublesome infinities. After renormalization, calculations using Feynman diagrams match experimental results with very high accuracy.

Feynman diagram and path integral methods are also used in statistical mechanics and can even be applied to classical mechanics.[4]

Alternate names

Murray Gell-Mann always referred to Feynman diagrams as Stueckelberg diagrams, after Swiss physicist Ernst Stueckelberg, who devised a similar notation many years earlier. Stueckelberg was motivated by the need for a manifestly covariant formalism for quantum field theory, but did not provide as automated a way to handle symmetry factors and loops, although he was first to find the correct physical interpretation in terms of forward- and backward-in-time particle paths, all without the path integral.[5]

Historically, as a book-keeping device of covariant perturbation theory, the graphs were called Feynman–Dyson diagrams or Dyson graphs,[6] because the path integral was unfamiliar when they were introduced, and Freeman Dyson's derivation from old-fashioned perturbation theory borrowed from the perturbative expansions in statistical mechanics was easier to follow for physicists trained in earlier methods.[lower-alpha 1] Feynman had to lobby hard for the diagrams, which confused physicists trained in equations and graphs.[7]

Representation of physical reality

In their presentations of fundamental interactions,[8][9] written from the particle physics perspective, Gerard 't Hooft and Martinus Veltman gave good arguments for taking the original, non-regularized Feynman diagrams as the most succinct representation of the physics of quantum scattering of fundamental particles. Their motivations are consistent with the convictions of James Daniel Bjorken and Sidney Drell:[10]

The Feynman graphs and rules of calculation summarize quantum field theory in a form in close contact with the experimental numbers one wants to understand. Although the statement of the theory in terms of graphs may imply perturbation theory, use of graphical methods in the many-body problem shows that this formalism is flexible enough to deal with phenomena of nonperturbative characters ... Some modification of the Feynman rules of calculation may well outlive the elaborate mathematical structure of local canonical quantum field theory ...

In quantum field theories, Feynman diagrams are obtained from a Lagrangian by Feynman rules.

Dimensional regularization is a method for regularizing integrals in the evaluation of Feynman diagrams; it assigns values to them that are meromorphic functions of an auxiliary complex parameter d, called the dimension. Dimensional regularization writes a Feynman integral as an integral depending on the spacetime dimension d and spacetime points.

Particle-path interpretation

A Feynman diagram is a representation of quantum field theory processes in terms of particle interactions. The particles are represented by the lines of the diagram, which can be squiggly or straight, with or without an arrow, depending on the type of particle. A point where lines connect to other lines is a vertex, and this is where the particles meet and interact. At a vertex, particles can be emitted or absorbed, deflected, or changed into a different type.

The three different types of lines are: internal lines, connecting vertices; incoming lines, extending from "the past" to a vertex, representing an initial state; and outgoing lines, extending from a vertex to "the future", representing the end state (the latter two are also known as external lines). Traditionally, the bottom of the diagram is the past and the top the future; alternatively, the past is to the left and the future to the right. When calculating correlation functions instead of scattering amplitudes, past and future are not relevant and all lines are internal. The particles then begin and end on small x's, which represent the positions of the operators whose correlation is calculated.

Feynman diagrams are a pictorial representation of a contribution to the total amplitude for a process that can happen in different ways. When a group of incoming particles scatter off each other, the process can be thought of as one where the particles travel over all possible paths, including paths that go backward in time.

Feynman diagrams are graphs that represent the interaction of particles rather than the physical position of the particle during a scattering process. They are not the same as spacetime diagrams and bubble chamber images even though they all describe particle scattering. Unlike a bubble chamber picture, only the sum of all relevant Feynman diagrams represents any given particle interaction; particles do not choose a particular diagram each time they interact. The law of summation is in accord with the principle of superposition: every diagram contributes to the total amplitude for the process.

Description

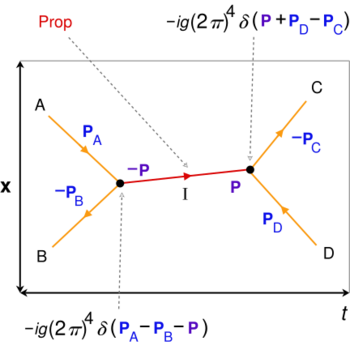

• internal lines (red) for intermediate particles and processes, each of which carries a propagator factor ("prop"); external lines (orange) for incoming/outgoing particles to/from vertices (black);

• at each vertex, 4-momentum is conserved by delta functions (4-momenta entering the vertex count as positive, those leaving as negative); the factors at each vertex and internal line are multiplied together in the amplitude integral;

• the space x and time t axes are not always shown; the directions of the external lines correspond to the passage of time.

A Feynman diagram represents a perturbative contribution to the amplitude of a quantum transition from some initial quantum state to some final quantum state.

For example, in the process of electron-positron annihilation the initial state is one electron and one positron, while the final state is two photons.

Conventionally, the initial state is at the left of the diagram and the final state at the right (although other layouts are also used).

The particles in the initial state are depicted by lines pointing in the direction of the initial state (e.g., to the left). The particles in the final state are represented by lines pointing in the direction of the final state (e.g., to the right).

QED involves two types of particles: matter particles such as electrons or positrons (called fermions) and exchange particles (called gauge bosons). They are represented in Feynman diagrams as follows:

- An electron in the initial state is represented by a solid line with an arrow (indicating the direction of fermion-number flow) pointing toward the vertex (→•).

- An electron in the final state is represented by a solid line with an arrow pointing away from the vertex (•→).

- A positron in the initial state is represented by a solid line with an arrow pointing away from the vertex (←•).

- A positron in the final state is represented by a solid line with an arrow pointing toward the vertex (•←).

- A photon in the initial or final state is represented by a wavy line (~• and •~).

In QED each vertex has three lines attached to it: one bosonic line, one fermionic line with arrow toward the vertex, and one fermionic line with arrow away from the vertex.

Vertices can be connected by a bosonic or fermionic propagator. A bosonic propagator is represented by a wavy line connecting two vertices (•~•). A fermionic propagator is represented by a solid line with an arrow connecting two vertices, (•←•).

The number of vertices gives the order of the term in the perturbation series expansion of the transition amplitude.

Electron–positron annihilation example

The electron–positron annihilation interaction:

- e+ + e− → 2γ

has a contribution from the second-order Feynman diagram:

In the initial state (at the bottom; early time) there is one electron (e−) and one positron (e+) and in the final state (at the top; late time) there are two photons (γ).

Canonical quantization formulation

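The probability amplitude for a transition of a quantum system (between asymptotically free states) from the initial state |i⟩ to the final state |f⟩ is given by the matrix element

$$S_{fi} = \langle f|S|i\rangle,$$

where S is the S-matrix. In terms of the time-evolution operator U, it is simply

$$S = \lim_{t_2\to+\infty}\ \lim_{t_1\to-\infty} U(t_2, t_1).$$

In the interaction picture, this expands to

$$S = \mathcal{T}\exp\left(-i\int_{-\infty}^{+\infty} d\tau\, H_V(\tau)\right),$$

where $H_V$ is the interaction Hamiltonian and $\mathcal{T}$ signifies the time-ordered product of operators. Dyson's formula expands the time-ordered matrix exponential into a perturbation series in powers of the interaction Hamiltonian density $\mathcal{H}_V$,

$$S = \sum_{n=0}^{\infty}\frac{(-i)^n}{n!}\left(\prod_{j=1}^{n}\int d^4x_j\right)\mathcal{T}\left\{\prod_{j=1}^{n}\mathcal{H}_V(x_j)\right\} \equiv \sum_{n=0}^{\infty} S^{(n)}.$$

Equivalently, with the interaction Lagrangian density $\mathcal{L}_V$, it is

$$S = \sum_{n=0}^{\infty}\frac{i^n}{n!}\left(\prod_{j=1}^{n}\int d^4x_j\right)\mathcal{T}\left\{\prod_{j=1}^{n}\mathcal{L}_V(x_j)\right\} \equiv \sum_{n=0}^{\infty} S^{(n)}.$$

A Feynman diagram is a graphical representation of a single summand in the Wick expansion of the time-ordered product in the nth-order term $S^{(n)}$ of the Dyson series of the S-matrix,

$$\mathcal{T}\prod_{j=1}^{n}\mathcal{L}_V(x_j) = \sum_{A}(\pm)\,\mathcal{N}\left\{\prod_{j=1}^{n}\mathcal{L}_V(x_j)\right\}_A,$$

where $\mathcal{N}$ signifies the normal-ordered product of the operators, the subscript A denotes one set of contractions (each contraction being a propagator), the sum runs over all possible sets of contractions A, and (±) takes care of the possible sign change when fermionic operators are commuted to bring them together for a contraction.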

Feynman rules

The diagrams are drawn according to the Feynman rules, which depend upon the interaction Lagrangian. For the QED interaction Lagrangian

$$\mathcal{L}_V = -g\,\bar\psi\gamma^\mu\psi A_\mu$$

describing the interaction of a fermionic field $\psi$ with a bosonic gauge field $A_\mu$, the Feynman rules can be formulated in coordinate space as follows:

- Each integration coordinate $x_j$ is represented by a point (sometimes called a vertex);

- A bosonic propagator is represented by a wiggly line connecting two points;

- A fermionic propagator is represented by a solid line connecting two points;

- A bosonic field $A_\mu(x_i)$ is represented by a wiggly line attached to the point $x_i$;

- A fermionic field $\psi(x_i)$ is represented by a solid line attached to the point $x_i$ with an arrow toward the point;

- An anti-fermionic field $\bar\psi(x_i)$ is represented by a solid line attached to the point $x_i$ with an arrow away from the point.

Example: second order processes in QED

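The second-order perturbation term in the S-matrix is

$$S^{(2)} = \frac{(-ig)^2}{2!}\int d^4x\,d^4x'\ \mathcal{T}\,\bar\psi(x)\gamma^\mu\psi(x)A_\mu(x)\,\bar\psi(x')\gamma^\nu\psi(x')A_\nu(x').$$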

Scattering of fermions


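The Wick expansion of the integrand gives (among others) the following term

$$\mathcal{N}\,\bar\psi(x)\gamma^\mu\psi(x)\,\bar\psi(x')\gamma^\nu\psi(x')\,\underline{A_\mu(x)A_\nu(x')},$$

where

$$\underline{A_\mu(x)A_\nu(x')} = \int\frac{d^4k}{(2\pi)^4}\,\frac{-i\,g_{\mu\nu}}{k^2+i0}\,e^{-ik(x-x')}$$

is the electromagnetic contraction (propagator) in the Feynman gauge. This term is represented by the Feynman diagram at the right. This diagram gives contributions to the following processes: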

- e− e− scattering (initial state at the right, final state at the left of the diagram);

- e+ e+ scattering (initial state at the left, final state at the right of the diagram);

- e− e+ scattering (initial state at the bottom/top, final state at the top/bottom of the diagram).

Compton scattering and annihilation/generation of e− e+ pairs

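Another interesting term in the expansion is

$$\mathcal{N}\,\bar\psi(x)\gamma^\mu\,\underline{\psi(x)\bar\psi(x')}\,\gamma^\nu\psi(x')\,A_\mu(x)A_\nu(x'),$$

where

$$\underline{\psi(x)\bar\psi(x')} = \int\frac{d^4p}{(2\pi)^4}\,\frac{i}{\gamma p - m + i0}\,e^{-ip(x-x')}$$

is the fermionic contraction (propagator).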

Path integral formulation

In a path integral, the field Lagrangian, integrated over all possible field histories, defines the probability amplitude to go from one field configuration to another. In order to make sense, the field theory must have a well-defined ground state, and the integral must be performed slightly rotated into imaginary time, i.e. a Wick rotation. The path integral formalism is completely equivalent to the canonical operator formalism above.

Scalar field Lagrangian

A simple example is the free relativistic scalar field in d dimensions, whose action integral is:

$$S = \int \tfrac12\,\partial_\mu\phi\,\partial^\mu\phi\; d^dx.$$

The probability amplitude for a process is:

$$\int_A^B e^{iS}\,D\phi,$$

where A and B are space-like hypersurfaces that define the boundary conditions. The collection of all the φ(A) on the starting hypersurface gives the field's initial value, analogous to the starting position for a point particle, and the field values φ(B) at each point of the final hypersurface define the final field value, which is allowed to vary, giving a different amplitude to end up at different values. This is the field-to-field transition amplitude.

The path integral gives the expectation value of operators between the initial and final state:

$$\int_A^B e^{iS}\,\phi(x_1)\cdots\phi(x_n)\,D\phi = \langle A|\phi(x_1)\cdots\phi(x_n)|B\rangle,$$

and in the limit that A and B recede to the infinite past and the infinite future, the only contribution that matters is from the ground state (this is only rigorously true if the path integral is defined slightly rotated into imaginary time). The path integral can be thought of as analogous to a probability distribution, and it is convenient to define it so that multiplying by a constant does not change anything:

$$\langle\phi(x_1)\cdots\phi(x_n)\rangle = \frac{\int e^{iS}\,\phi(x_1)\cdots\phi(x_n)\,D\phi}{\int e^{iS}\,D\phi}.$$

The field's partition function is the normalization factor on the bottom, which coincides with the statistical mechanical partition function at zero temperature when rotated into imaginary time.

The initial-to-final amplitudes are ill-defined if one thinks of the continuum limit right from the beginning, because the fluctuations in the field can become unbounded. So the path integral can be thought of as defined on a discrete square lattice with lattice spacing a, with the limit a → 0 taken carefully at the end. If the final results do not depend on the shape of the lattice or the value of a, then the continuum limit exists.

On a lattice

On a lattice, (i), the field can be expanded in Fourier modes:

$$\phi(x) = \int\frac{d^dk}{(2\pi)^d}\,\phi(k)\,e^{ik\cdot x} = \int_k \phi(k)\,e^{ik\cdot x}.$$

Here the integration domain is over k restricted to a cube of side length 2π/a, so that large values of k are not allowed. Note that the k-measure $\int_k$ contains the factors of 2π from the Fourier transform; this is the most common convention for k-integrals in QFT. The lattice means that fluctuations at large k are not allowed to contribute right away; they only start to contribute in the limit a → 0. Sometimes, instead of a lattice, the field modes are simply cut off at high values of k.

It is also convenient from time to time to consider the space-time volume to be finite, so that the k modes are also a lattice. This is not strictly as necessary as the space-lattice limit, because interactions in k are not localized, but it is convenient for keeping track of the factors in front of the k-integrals and the momentum-conserving delta functions that will arise.

On a lattice, (ii), the action needs to be discretized:

$$S = \sum_{\langle x,y\rangle}\tfrac12\big(\phi(x)-\phi(y)\big)^2,$$

where ⟨x,y⟩ is a pair of nearest lattice neighbors x and y. The discretization should be thought of as defining what the derivative $\partial_\mu\phi$ means.

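In terms of the lattice Fourier modes (with lattice spacing a = 1), the action can be written:

$$S = \int_k\Big(\big(1-\cos k_1\big)+\big(1-\cos k_2\big)+\cdots+\big(1-\cos k_d\big)\Big)\,\phi^*(k)\,\phi(k).$$

For k near zero this is:

$$S = \int_k \tfrac12 k^2\,|\phi(k)|^2.$$

Now we have the continuum Fourier transform of the original action. In finite volume, the quantity $d^dk$ is not infinitesimal, but becomes the volume of a box made by neighboring Fourier modes, $(2\pi/L)^d$ for a cubic box of side L; including the factor $(2\pi)^{-d}$ from the k-measure, this is $1/V$.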
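The equality between the nearest-neighbour action and its Fourier-mode form can be checked numerically. The sketch below is a minimal illustration, assuming NumPy, a finite periodic lattice with spacing a = 1, and NumPy's unnormalized FFT, for which the k-measure $\int_k$ becomes $(1/N)\sum_k$ over the N lattice sites. The function names `lattice_action_x` and `lattice_action_k` are hypothetical.

```python
import numpy as np


def lattice_action_x(phi):
    """S = sum over nearest-neighbour pairs <x,y> of (phi(x) - phi(y))**2 / 2.

    Periodic lattice with spacing a = 1; each pair is counted once per direction,
    which requires every side length to be at least 3.
    """
    return sum(
        0.5 * np.sum((np.roll(phi, -1, axis=i) - phi) ** 2) for i in range(phi.ndim)
    )


def lattice_action_k(phi):
    """Same action from the Fourier modes: (1/N) sum_k sum_i (1 - cos k_i) |phi(k)|**2."""
    n_sites = phi.size
    phi_k = np.fft.fftn(phi)  # phi(k) = sum_x phi(x) exp(-i k.x)
    ks = np.meshgrid(
        *[2 * np.pi * np.fft.fftfreq(n) for n in phi.shape], indexing="ij"
    )
    kernel = sum(1.0 - np.cos(k) for k in ks)  # sum over the d lattice directions
    return np.sum(kernel * np.abs(phi_k) ** 2) / n_sites


rng = np.random.default_rng(0)
phi = rng.standard_normal((8, 8))
print(lattice_action_x(phi), lattice_action_k(phi))  # equal up to round-off
```

Because 1 − cos k ≈ k²/2 for small k, the kernel reduces to ½k² for long-wavelength modes, which is the continuum form above.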

The field φ is real-valued, so the Fourier transform obeys:

$$\phi(k)^* = \phi(-k).$$

In terms of real and imaginary parts, the real part of φ(k) is an even function of k, while the imaginary part is odd. To avoid double-counting, the action can be written as

$$S = \int_k k^2\,\phi(k)\,\phi(-k)$$

over an integration domain that integrates over each pair (k, −k) exactly once.

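For a complex scalar field with action

$$S = \int \tfrac12\,\partial_\mu\phi^*\,\partial^\mu\phi\; d^dx,$$

the Fourier transform is unconstrained:

$$S = \int_k \tfrac12 k^2\,|\phi(k)|^2,$$

and the integral is over all k.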

Integrating over all different values of φ(x) is equivalent to integrating over all Fourier modes, because taking a Fourier transform is a unitary linear transformation of field coordinates. When you change coordinates in a multidimensional integral by a linear transformation, the new integral differs from the old one by the determinant of the transformation matrix. If

$$y_i = A_{ij}\,x_j,$$

then

$$\det(A)\int dx_1\,dx_2\cdots dx_n = \int dy_1\,dy_2\cdots dy_n.$$

If A is a rotation, then

$$A^{\mathsf T}A = I,$$

so that det A = ±1, and the sign depends on whether the rotation includes a reflection or not.
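As a small numerical illustration, the discrete Fourier transform on a one-dimensional lattice of n sites can be written as an explicit matrix. Divided by √n it is unitary, the complex analogue of a rotation, so its determinant has modulus 1; without that factor the determinant has modulus $n^{n/2}$. Either way the Jacobian is a constant that does not depend on the field values. The sketch assumes NumPy, and `dft_matrix` is a hypothetical helper.

```python
import numpy as np


def dft_matrix(n, normalized=True):
    """Matrix F with F @ phi == np.fft.fft(phi), divided by sqrt(n) if normalized."""
    f = np.fft.fft(np.eye(n), axis=0)  # column j is the transform of the unit vector e_j
    return f / np.sqrt(n) if normalized else f


n = 8
F = dft_matrix(n)
# Unitary: F^dagger F = 1, so |det F| = 1.
print(np.allclose(F.conj().T @ F, np.eye(n)), abs(np.linalg.det(F)))
# Without the 1/sqrt(n) factor the Jacobian is n**(n/2): still a constant,
# independent of the field values, so it cancels in normalized expectation values.
print(abs(np.linalg.det(dft_matrix(n, normalized=False))), n ** (n / 2))
```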

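The matrix that changes coordinates from φ(x) to φ(k) can be read off from the definition of a Fourier transform,

$$\phi(k) = \sum_x \phi(x)\,e^{-ik\cdot x},$$

and the Fourier inversion theorem tells you the inverse:

$$\phi(x) = \int_k \phi(k)\,e^{ik\cdot x},$$

which is the complex conjugate-transpose, up to factors of 2π. On a finite-volume lattice, the determinant is nonzero and independent of the field values,

$$\det U = \text{constant},$$

and the path integral is a separate factor at each value of k:

$$\int e^{iS}\,D\phi \;\propto\; \prod_k \int d\phi_k\; e^{\,i k^2|\phi_k|^2\,d^dk},$$

with one factor for each pair (k, −k) of a real field.

The factor $d^dk$ is the volume of a discrete cell in k-space; with the factors of 2π absorbed into the k-measure, in a square lattice box it is

$$d^dk = \left(\frac{1}{L}\right)^d,$$

where L is the side length of the box. Each separate factor is an oscillatory Gaussian, and the width of the Gaussian diverges as the volume goes to infinity.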

In imaginary time, the Euclidean action becomes positive definite, and can be interpreted as a probability distribution. The probability of a field having values $\phi_k$ is

$$P(\phi) \propto \prod_k e^{-k^2|\phi_k|^2\,d^dk},$$

again with one factor for each pair (k, −k).

The expectation value of the field is the statistical expectation value of the field when chosen according to the probability distribution:

$$\langle\phi(x_1)\cdots\phi(x_n)\rangle = \frac{\int e^{-S}\,\phi(x_1)\cdots\phi(x_n)\,D\phi}{\int e^{-S}\,D\phi}.$$

Since the probability of $\phi_k$ is a product, the value of $\phi_k$ at each separate value of k is independently Gaussian distributed. The variance of the Gaussian is $1/(k^2\,d^dk)$, which is formally infinite, but that just means that the fluctuations are unbounded in infinite volume. In any finite volume, the integral is replaced by a discrete sum, and the variance is $V/k^2$.

Monte Carlo

The path integral defines a probabilistic algorithm to generate a Euclidean scalar field configuration. Randomly pick the real and imaginary parts of each Fourier mode at wavenumber k to be Gaussian random variables with variance proportional to 1/k². This generates a configuration $\phi_C(k)$ at random, and the Fourier transform gives $\phi_C(x)$. For real scalar fields, the algorithm must generate only one of each pair φ(k), φ(−k), and make the second the complex conjugate of the first.

To find any correlation function, generate a field again and again by this procedure, and find the statistical average:

$$\langle\phi(x_1)\cdots\phi(x_n)\rangle = \frac{1}{|C|}\sum_C \phi_C(x_1)\cdots\phi_C(x_n),$$

where |C| is the number of configurations, and the sum is of the product of the field values on each configuration. The Euclidean correlation function is just the same as the correlation function in statistics or statistical mechanics. The quantum mechanical correlation functions are an analytic continuation of the Euclidean correlation functions.

For free fields with a quadratic action, the probability distribution is a high-dimensional Gaussian, and the statistical average is given by an explicit formula. But the Monte Carlo method also works well for bosonic interacting field theories where there is no closed form for the correlation functions.
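A minimal sketch of this Monte Carlo procedure for the free massless field follows. It assumes NumPy, a periodic $n^d$ lattice with spacing a = 1, and the hypothetical helper names `sample_free_field` and `correlator`.

Instead of drawing one member of each pair (k, −k) by hand, it Fourier transforms real Gaussian white noise, which automatically gives φ(−k) = φ(k)*. It then rescales each mode by 1/|k|, so that ⟨|φ(k)|²⟩ = V/k² with V the number of sites. The k = 0 mode, whose variance is infinite for a massless field, is simply set to zero; that choice is an assumption of the sketch.

```python
import numpy as np


def sample_free_field(n, d=2, rng=None):
    """Draw one Euclidean free massless scalar configuration phi_C(x) on an n**d lattice.

    Assumptions: periodic lattice, spacing a = 1, and the k = 0 mode set to zero.
    """
    rng = np.random.default_rng() if rng is None else rng
    k1 = 2 * np.pi * np.fft.fftfreq(n)  # lattice wavenumbers in [-pi, pi)
    grids = np.meshgrid(*([k1] * d), indexing="ij")
    k2 = sum(g ** 2 for g in grids)
    # The FFT of real white noise satisfies eta(-k) = conj(eta(k)), with independent
    # Gaussian real and imaginary parts for each pair (k, -k).
    eta_k = np.fft.fftn(rng.standard_normal((n,) * d))
    scale = np.zeros_like(k2)
    nonzero = k2 > 0
    scale[nonzero] = 1.0 / np.sqrt(k2[nonzero])  # mode variance proportional to 1/k^2
    phi_k = eta_k * scale
    return np.fft.ifftn(phi_k).real  # the imaginary part is round-off only


def correlator(configs, r, axis=0):
    """Monte Carlo estimate of <phi(x) phi(x + r e_axis)>, averaged over configs and sites."""
    return float(np.mean([np.mean(c * np.roll(c, -r, axis=axis)) for c in configs]))


rng = np.random.default_rng(0)
configs = [sample_free_field(16, d=2, rng=rng) for _ in range(200)]
print(correlator(configs, 0), correlator(configs, 4))
```

Averaging over lattice sites as well as configurations uses translation invariance to reduce the statistical noise; it is a design choice of this sketch, not part of the procedure described above.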

Scalar propagator

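Each mode is independently Gaussian distributed. The expectation of field modes is easy to calculate:

$$\langle\phi_k\,\phi^*_{k'}\rangle = 0$$

for k ≠ k′, since then the two Gaussian random variables are independent and both have zero mean.

$$\langle\phi_k\,\phi^*_k\rangle = \frac{V}{k^2}$$

in finite volume V, when the two k-values coincide, since this is the variance of the Gaussian. In the infinite-volume limit,

$$\langle\phi(k)\,\phi^*(k')\rangle = \delta(k-k')\,\frac{1}{k^2}.$$

Strictly speaking, this is an approximation: the lattice propagator is:

$$\langle\phi(k)\,\phi^*(k')\rangle = \delta(k-k')\,\frac{1}{2\big(d-\cos k_1-\cos k_2-\cdots-\cos k_d\big)}.$$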

But near k = 0, for field fluctuations long compared to the lattice spacing, the two forms coincide.
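The two forms of the propagator can be compared directly. The sketch below assumes lattice spacing a = 1 and uses hypothetical helper names. It shows the ratio of the lattice propagator to the continuum one approaching 1 for small k and reaching π²/4 at the edge of the Brillouin zone, k = (π, 0).

```python
import numpy as np


def lattice_propagator(k):
    """1 / (2 (d - cos k_1 - ... - cos k_d)) for a lattice momentum k (spacing a = 1)."""
    k = np.asarray(k, dtype=float)
    return 1.0 / (2.0 * (k.size - np.sum(np.cos(k))))


def continuum_propagator(k):
    """1 / k^2."""
    k = np.asarray(k, dtype=float)
    return 1.0 / np.dot(k, k)


for k in [(0.01, 0.02), (0.3, 0.1), (np.pi, 0.0)]:
    # close to 1 for long-wavelength modes, far from 1 near the Brillouin-zone edge
    print(k, lattice_propagator(k) / continuum_propagator(k))
```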

The delta functions contain factors of 2π, so that they cancel out the 2π factors in the measure for k-integrals:

$$\delta(k) = (2\pi)^d\,\delta_D(k_1)\,\delta_D(k_2)\cdots\delta_D(k_d),$$

where $\delta_D(k)$ is the ordinary one-dimensional Dirac delta function. This convention for delta functions is not universal; some authors keep the factors of 2π in the delta functions (and in the k-integration) explicit.

Equation of motion

The form of the propagator can be more easily found by using the equation of motion for the field. From the Lagrangian, the equation of motion is:

$$\partial_\mu\partial^\mu\phi = 0,$$

and in an expectation value, this says:

$$\partial_\mu\partial^\mu\langle\phi(x)\,\phi(y)\rangle = 0,$$

where the derivatives act on x, and the identity is true everywhere except when x and y coincide, and the operator order matters. The form of the singularity can be understood from the canonical commutation relations to be a delta function. Defining the Feynman propagator Δ as the Fourier transform of the time-ordered two-point function (the one that comes from the path integral):

$$\partial^2\Delta(x) = -i\,\delta(x),$$

so that:

$$\Delta(k) = \frac{i}{k^2}.$$

If the equations of motion are linear, the propagator will always be the reciprocal of the quadratic-form matrix that defines the free Lagrangian, since this gives the equations of motion. This is also easy to see directly from the path integral. The factor of i disappears in the Euclidean theory.
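The statement that the propagator is the reciprocal of the quadratic form can be checked on a small lattice. This sketch assumes NumPy and a periodic one-dimensional lattice with spacing a = 1. It adds a small hypothetical mass term m2 > 0, because for the massless field the matrix has a zero mode and cannot be inverted. The covariance of the Gaussian weight $e^{-S}$, with $S = \tfrac12\phi^{\mathsf T}M\phi$, is $M^{-1}$, and it matches the mode-by-mode reciprocal 1/(m2 + 2 − 2 cos k).

```python
import numpy as np


def quadratic_form(n, m2):
    """Matrix M with S = phi^T M phi / 2 on a periodic 1D lattice (a = 1).

    m2 > 0 is a hypothetical mass term added only so that M is invertible.
    """
    eye = np.eye(n)
    shift = np.roll(eye, 1, axis=1)  # (shift @ phi)[x] = phi[x + 1]
    return (2.0 + m2) * eye - shift - shift.T


def propagator_from_modes(n, m2):
    """<phi(0) phi(x)> built mode by mode as the reciprocal 1 / (m2 + 2 - 2 cos k)."""
    k = 2 * np.pi * np.fft.fftfreq(n)
    return np.fft.ifft(1.0 / (m2 + 2.0 - 2.0 * np.cos(k))).real


n, m2 = 16, 0.3
G = np.linalg.inv(quadratic_form(n, m2))  # covariance of the Gaussian weight exp(-S)
print(np.allclose(G[0], propagator_from_modes(n, m2)))
```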

Wick theorem

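Because each field mode is an independent Gaussian, the expectation values for the product of many field modes obey Wick's theorem:

$$\langle\phi(k_1)\,\phi(k_2)\cdots\phi(k_n)\rangle$$

is zero unless the field modes coincide in pairs. This means that it is zero for an odd number of φ, and for an even number of φ, it is equal to a product of contributions from each pair separately, each containing a delta function:

$$\langle\phi(k_1)\cdots\phi(k_{2n})\rangle = \sum\prod_{(i,j)}\langle\phi(k_i)\,\phi(k_j)\rangle,$$

where the sum is over each partition of the field modes into pairs, and the product is over the pairs. For example,

$$\langle\phi(k_1)\phi(k_2)\phi(k_3)\phi(k_4)\rangle = \langle\phi(k_1)\phi(k_2)\rangle\langle\phi(k_3)\phi(k_4)\rangle + \langle\phi(k_1)\phi(k_3)\rangle\langle\phi(k_2)\phi(k_4)\rangle + \langle\phi(k_1)\phi(k_4)\rangle\langle\phi(k_2)\phi(k_3)\rangle.$$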

An interpretation of Wick's theorem is that each field insertion can be thought of as a dangling line, and the expectation value is calculated by linking up the lines in pairs, putting in a delta function factor that ensures that the momenta of the two partners in each pair are equal and opposite, and multiplying by the propagator 1/k².
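Wick's theorem holds for any zero-mean jointly Gaussian variables, not only for Fourier modes, so it can be illustrated by sampling. In the sketch below, which assumes NumPy, the covariance matrix is an arbitrary illustrative choice that plays the role of the propagators. The sampled four-point moment is compared with the sum over the three pairings, and a three-point moment is checked to vanish up to sampling noise. The helper names are hypothetical.

```python
import numpy as np


def wick_four_point(cov):
    """Wick's theorem for zero-mean Gaussians: sum over the three pairings of four variables."""
    c = np.asarray(cov)
    return c[0, 1] * c[2, 3] + c[0, 2] * c[1, 3] + c[0, 3] * c[1, 2]


def sampled_moment(cov, indices, n_samples, rng):
    """Monte Carlo estimate of <x_i x_j ...> for the listed indices."""
    x = rng.multivariate_normal(np.zeros(len(cov)), cov, size=n_samples)
    return float(np.mean(np.prod(x[:, indices], axis=1)))


cov = np.array([
    [2.0, 0.5, 0.3, 0.1],
    [0.5, 1.5, 0.2, 0.4],
    [0.3, 0.2, 1.0, 0.25],
    [0.1, 0.4, 0.25, 1.2],
])  # arbitrary positive-definite covariance chosen for illustration
rng = np.random.default_rng(1)
print(wick_four_point(cov), sampled_moment(cov, [0, 1, 2, 3], 500_000, rng))
print(sampled_moment(cov, [0, 1, 2], 500_000, rng))  # odd number of variables
```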

Higher Gaussian moments — completing Wick's theorem

There is a subtle point left before Wick's theorem is proved: what if more than two of the φs have the same momentum? If it is an odd number, the integral is zero; negative values cancel with the positive values. But if the number is even, the integral is positive. The previous demonstration assumed that the φs would only match up in pairs.

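But the theorem is correct even when arbitrarily many of the φs are equal, and this is a notable property of Gaussian integration. For a single variable x, differentiating $I = \int e^{-ax^2/2}\,dx = \sqrt{2\pi/a}$ n times with respect to a gives:

$$(-1)^n\frac{d^nI}{da^n} = \int\frac{x^{2n}}{2^n}\,e^{-ax^2/2}\,dx = \frac{1\cdot3\cdot5\cdots(2n-1)}{2^n}\,\sqrt{2\pi}\;a^{-\frac{2n+1}{2}}.$$

Dividing by I,

$$\langle x^{2n}\rangle = \frac{\int x^{2n}e^{-ax^2/2}\,dx}{\int e^{-ax^2/2}\,dx} = 1\cdot3\cdot5\cdots(2n-1)\,\frac{1}{a^n}, \qquad \langle x^2\rangle = \frac{1}{a}.$$

If Wick's theorem were correct, the higher moments would be given by all possible pairings of a list of 2n different x:

$$\langle x_1 x_2 x_3\cdots x_{2n}\rangle,$$

where the x are all the same variable, and the index is just to keep track of the number of ways to pair them. The first x can be paired with 2n − 1 others, leaving 2n − 2. The next unpaired x can be paired with 2n − 3 different x, leaving 2n − 4, and so on. This means that Wick's theorem, uncorrected, says that the expectation value of $x^{2n}$ should be:

$$\langle x^{2n}\rangle = (2n-1)\cdot(2n-3)\cdots5\cdot3\cdot1\;\big(\langle x^2\rangle\big)^n,$$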

and this is in fact the correct answer. So Wick's theorem holds no matter how many of the momenta of the internal variables coincide.
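The counting argument can be checked directly. The sketch below uses only the Python standard library and hypothetical helper names. It counts the pairings of 2n labelled items recursively and compares the result with $\langle x^{2n}\rangle$ for the weight $e^{-ax^2/2}$. The moment is computed by a simple midpoint-rule quadrature, an approximation that is accurate to round-off here because the integrand decays so quickly.

```python
import math


def count_pairings(items):
    """Number of ways to split a list into unordered pairs (zero for odd length)."""
    if not items:
        return 1
    rest = items[1:]
    # pair the first item with each of the others, then pair up whatever is left
    return sum(count_pairings(rest[:i] + rest[i + 1:]) for i in range(len(rest)))


def gaussian_moment(m, a=1.0, half_width=12.0, steps=20_000):
    """<x**m> for the weight exp(-a x**2 / 2), by midpoint-rule quadrature."""
    dx = 2.0 * half_width / steps
    num = den = 0.0
    for j in range(steps):
        x = -half_width + (j + 0.5) * dx
        w = math.exp(-a * x * x / 2.0)
        num += x ** m * w
        den += w
    return num / den


for n in range(1, 5):
    print(2 * n, count_pairings(list(range(2 * n))), gaussian_moment(2 * n))
```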

Interaction

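Interactions are represented by higher-order contributions, since quadratic contributions are always Gaussian. The simplest interaction is the quartic self-interaction, with an action:

$$S = \int\left(\tfrac12\,\partial^\mu\phi\,\partial_\mu\phi + \frac{\lambda}{4!}\,\phi^4\right)d^dx.$$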

The reason for the combinatorial factor 4! will be clear soon. Writing the action in terms of the lattice (or continuum) Fourier modes:

$$S = \int_k\tfrac12 k^2|\phi(k)|^2 + \frac{\lambda}{4!}\int_{k_1k_2k_3k_4}\phi(k_1)\,\phi(k_2)\,\phi(k_3)\,\phi(k_4)\,\delta(k_1+k_2+k_3+k_4) = S_F + X,$$

where $S_F$ is the free action, whose correlation functions are given by Wick's theorem. Expanding the exponential of S in the path integral in powers of λ turns the interacting path integral into a power series of corrections to the free one. The term represented by X should be thought of as four half-lines, one for each factor of φ(k). The half-lines meet at a vertex, which contributes a delta function ensuring that the momenta of the four half-lines sum to zero.

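To compute a correlation function in the interacting theory, there is now a contribution from the X terms. For example, consider the path integral for the four-field correlator:

$$\langle\phi(k_1)\phi(k_2)\phi(k_3)\phi(k_4)\rangle = \frac{\int e^{-S}\,\phi(k_1)\phi(k_2)\phi(k_3)\phi(k_4)\,D\phi}{\int e^{-S}\,D\phi},$$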

which in the free field was only nonzero when the momenta k were equal in pairs, is now nonzero for all values of k. The momenta of the insertions φ(ki) can now match up with the momenta of the Xs in the expansion. The insertions should also be thought of as half-lines, four in this case, which carry a momentum k, but one that is not integrated.

The lowest-order contribution comes from the first nontrivial term, $-e^{-S_F}X$, in the Taylor expansion of $e^{-S_F-X}$. Wick's theorem requires that the momenta in the X half-lines, the φ(k) factors in X, match up with the momenta of the external half-lines in pairs. The new contribution is equal to:

$$-\lambda\,\delta(k_1+k_2+k_3+k_4)\,\frac{1}{k_1^2}\,\frac{1}{k_2^2}\,\frac{1}{k_3^2}\,\frac{1}{k_4^2}.$$

The 4! inside X is canceled because there are exactly 4! ways to match the half-lines in X to the external half-lines. Each of these different ways of matching the half-lines together in pairs contributes exactly once, regardless of the values of k1,2,3,4, by Wick's theorem.

Feynman diagrams

The expansion of the action in powers of X gives a series of terms with progressively higher number of Xs. The contribution from the term with exactly n Xs is called nth order.

The nth-order term has:

- 4n internal half-lines, which are the factors of φ(k) from the Xs. These all end on a vertex, and are integrated over all possible k.

- external half-lines, which come from the φ(k) insertions in the integral.

By Wick's theorem, the half-lines must be joined in pairs to make lines, and each line gives a factor of

$$\frac{\delta(k_1+k_2)}{k_1^2},$$

which multiplies the contribution. This means that the two half-lines that make a line are forced to have equal and opposite momentum. The line itself should be drawn with an arrow parallel to it and labeled with the momentum k in the line. The half-line at the tail end of the arrow carries momentum k, while the half-line at the head end carries momentum −k. If one of the two half-lines is external, this kills the integral over the internal k, since it forces the internal k to be equal to the external k. If both are internal, the integral over k remains.

The diagrams that are formed by linking the half-lines in the Xs with the external half-lines, representing insertions, are the Feynman diagrams of this theory. Each line carries a factor of 1/k2, the propagator, and either goes from vertex to vertex, or ends at an insertion. If it is internal, it is integrated over. At each vertex, the total incoming k is equal to the total outgoing k.

The number of ways of making a diagram by joining half-lines into lines almost completely cancels the factorial factors coming from the Taylor series of the exponential and the 4! at each vertex.

Loop order

A forest diagram is one where all the internal lines have momentum that is completely determined by the external lines and the condition that the incoming and outgoing momentum are equal at each vertex. The contribution of these diagrams is a product of propagators, without any integration. A tree diagram is a connected forest diagram.

An example of a tree diagram is the one where each of four external lines end on an X. Another is when three external lines end on an X, and the remaining half-line joins up with another X, and the remaining half-lines of this X run off to external lines. These are all also forest diagrams (as every tree is a forest); an example of a forest that is not a tree is when eight external lines end on two Xs.

It is easy to verify that in all these cases, the momenta on all the internal lines are determined by the external momenta and the condition of momentum conservation at each vertex.

A diagram that is not a forest diagram is called a loop diagram, and an example is one where two lines of an X are joined to external lines, while the remaining two lines are joined to each other. The two lines joined to each other can have any momentum at all, since they both enter and leave the same vertex. A more complicated example is one where two Xs are joined to each other by matching the legs one to the other. This diagram has no external lines at all.

Loop diagrams are so called because the number of k-integrals left undetermined by momentum conservation equals the number of independent closed loops in the diagram, where independent loops are counted as in homology theory. The homology is real-valued (more precisely ℝ^d-valued): the value associated with each line is its momentum. The boundary operator takes each line to the sum of its end-vertices, with a positive sign at the head and a negative sign at the tail. The condition that momentum is conserved is exactly the condition that the boundary of the k-weighted graph is zero.

A set of valid k-values can be arbitrarily redefined whenever there is a closed loop. A closed loop is a cyclical path of adjacent vertices that never revisits the same vertex. Such a cycle can be thought of as the boundary of a hypothetical 2-cell. The k-labellings of a graph that conserve momentum (i.e. which have zero boundary) up to redefinitions of k (i.e. up to boundaries of 2-cells) define the first homology of a graph. The number of independent momenta that are not determined is then equal to the number of independent homology loops. For many graphs, this is equal to the number of loops as counted in the most intuitive way.
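The number of independent loops is the first Betti number of the graph, L = E − V + C (internal lines minus vertices plus connected components), so it can be computed mechanically. The following is a minimal sketch, assuming a hypothetical encoding of a diagram as a vertex count plus a list of internal lines. External lines are dropped because each one adds one endpoint and one line and so leaves L unchanged:

```python
def loop_number(n_vertices, lines):
    """First Betti number L = E - V + C of a (multi)graph.

    Vertices are 0..n_vertices-1; `lines` is a list of (u, v) pairs for the
    internal lines. Self-loops and repeated lines are allowed.
    """
    parent = list(range(n_vertices))

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    components = n_vertices
    for u, v in lines:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            components -= 1
    return len(lines) - n_vertices + components


# Examples from the text: a single X with four external lines (tree),
# two Xs joined by one line (tree), two Xs with eight external lines (forest),
# an X with two legs joined to each other (one loop), and two Xs joined
# leg to leg (vacuum bubble).
print(loop_number(1, []), loop_number(2, [(0, 1)]), loop_number(2, []))
print(loop_number(1, [(0, 0)]), loop_number(2, [(0, 1)] * 4))
```

The union-find pass counts connected components in nearly linear time; for the small graphs of low-order perturbation theory any component count would do.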

Symmetry factors

The number of ways to form a given Feynman diagram by joining half-lines is large, and by Wick's theorem, each way of pairing up the half-lines contributes equally. Often, this completely cancels the factorials in the denominator of each term, but the cancellation is sometimes incomplete.

The uncancelled denominator is called the symmetry factor of the diagram. The contribution of each diagram to the correlation function must be divided by its symmetry factor.

For example, consider the Feynman diagram formed from two external lines joined to one X, and the remaining two half-lines in the X joined to each other. There are 4 × 3 ways to join the external half-lines to the X, and then there is only one way to join the two remaining lines to each other. The X comes divided by 4! = 4 × 3 × 2, but the number of ways to link up the X half-lines to make the diagram is only 4 × 3, so the contribution of this diagram is divided by two.

For another example, consider the diagram formed by joining all the half-lines of one X to all the half-lines of another X. This diagram is called a vacuum bubble, because it does not link up to any external lines. There are 4! ways to form this diagram, but the denominator includes a 2! (from the expansion of the exponential, there are two Xs) and two factors of 4!. The contribution is multiplied by 4!/(2! × 4! × 4!) = 1/48.

Another example is the Feynman diagram formed from two Xs where each X links up to two external lines, and the remaining two half-lines of each X are joined to each other. The number of ways to link an X to two external lines is 4 × 3, and either X could link up to either pair, giving an additional factor of 2. The remaining two half-lines in the two Xs can be linked to each other in two ways, so that the total number of ways to form the diagram is 4 × 3 × 4 × 3 × 2 × 2, while the denominator is 4! × 4! × 2!. The total symmetry factor is 2, and the contribution of this diagram is divided by 2.
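The counting in these three examples can be checked by brute force: enumerate every Wick pairing of the half-lines, sort the pairings by the diagram they produce, and divide n! (4!)^n by the number of pairings that give the diagram in question. This is a minimal sketch for the φ⁴ theory, assuming a hypothetical encoding in which nodes are ("e", i) for external point i and ("v", j) for X vertex j; it is exponential in the number of half-lines and only meant for low orders:

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def perfect_matchings(items):
    """Yield every way of pairing up `items` (all Wick contractions)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for i, partner in enumerate(rest):
        for tail in perfect_matchings(rest[:i] + rest[i + 1:]):
            yield [(first, partner)] + tail


def canonical_form(edges, n_vertices):
    """Label-independent form of a diagram: minimize over vertex relabelings.

    External points keep their labels; only the X vertices are permuted.
    """
    best = None
    for perm in permutations(range(n_vertices)):
        relabeled = []
        for u, v in edges:
            u = ("v", perm[u[1]]) if u[0] == "v" else u
            v = ("v", perm[v[1]]) if v[0] == "v" else v
            relabeled.append(tuple(sorted((u, v))))
        form = tuple(sorted(relabeled))
        if best is None or form < best:
            best = form
    return best


def symmetry_factor(edges, n_vertices, n_external):
    """n! (4!)^n divided by the number of contractions giving this diagram."""
    half_lines = [("e", i, 0) for i in range(n_external)]
    half_lines += [("v", j, k) for j in range(n_vertices) for k in range(4)]
    target = canonical_form(edges, n_vertices)
    count = 0
    for matching in perfect_matchings(half_lines):
        # Forget which half-line of a vertex was used: keep only the node.
        node_edges = [(a[:2], b[:2]) for a, b in matching]
        if canonical_form(node_edges, n_vertices) == target:
            count += 1
    return Fraction(factorial(n_vertices) * factorial(4) ** n_vertices, count)


tadpole = [(("e", 0), ("v", 0)), (("e", 1), ("v", 0)), (("v", 0), ("v", 0))]
bubble = [(("v", 0), ("v", 1))] * 4
channel = [(("e", 0), ("v", 0)), (("e", 1), ("v", 0)), (("e", 2), ("v", 1)),
           (("e", 3), ("v", 1)), (("v", 0), ("v", 1)), (("v", 0), ("v", 1))]
print(symmetry_factor(tadpole, 1, 2))   # 2
print(symmetry_factor(bubble, 2, 0))    # 48
print(symmetry_factor(channel, 2, 4))   # 2
```

The three printed values reproduce the factors 2, 48 and 2 derived by hand above.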

The symmetry factor theorem gives the symmetry factor for a general diagram: the contribution of each Feynman diagram must be divided by the order of its group of automorphisms, the number of symmetries that it has.

An automorphism of a Feynman graph is a permutation M of the lines and a permutation N of the vertices with the following properties:

- If a line l goes from vertex v to vertex v′, then M(l) goes from N(v) to N(v′). If the line is undirected, as it is for a real scalar field, then M(l) can go from N(v′) to N(v) too.

- If a line l ends on an external line, M(l) ends on the same external line.

- If there are different types of lines, M(l) should preserve the type.

This theorem has an interpretation in terms of particle-paths: when identical particles are present, the integral over all intermediate particles must not double-count states that differ only by interchanging identical particles.

Proof: To prove this theorem, label all the internal and external lines of a diagram with a unique name. Then form the diagram by linking a half-line to a name and then to the other half line.

Now count the number of ways to form the named diagram. Each permutation of the Xs gives a different pattern of linking names to half-lines, and this is a factor of n!. Each permutation of the half-lines in a single X gives a factor of 4!. So a named diagram can be formed in exactly as many ways as the denominator of the Feynman expansion.

But the number of unnamed diagrams is smaller than the number of named diagrams by the order of the automorphism group of the graph.

Connected diagrams: linked-cluster theorem

Roughly speaking, a Feynman diagram is called connected if all vertices and propagator lines are linked by a sequence of vertices and propagators of the diagram itself; viewed as an undirected graph, it is connected. The remarkable relevance of such diagrams in QFTs is due to the fact that they are sufficient to determine the quantum partition function Z[J]. More precisely, connected Feynman diagrams determine W[J] = ln Z[J].

To see this, one should recall that

with D_k constructed from some (arbitrary) Feynman diagram that can be thought to consist of several connected components C_i. If one encounters n_i (identical) copies of a component C_i within the Feynman diagram D_k, one has to include a symmetry factor n_i!. However, in the end each contribution of a Feynman diagram D_k to the partition function has the generic form

where i labels the (infinitely) many connected Feynman diagrams possible.

A scheme to successively create such contributions from the D_k to Z[J] is obtained by

and therefore yields

To establish the normalization Z_0 = exp W[0] = 1, one simply calculates all connected vacuum diagrams, i.e., the diagrams without any sources J (sometimes referred to as external legs of a Feynman diagram).
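The combinatorics behind this exponentiation can be summarized schematically. In this sketch C_i stands for the value of the i-th connected diagram (its own symmetry factor included), and, as stated above, a diagram containing n_i identical copies of C_i carries an extra factor 1/n_i!:

```latex
Z[J] \;=\; \sum_{\{n_i\}} \prod_i \frac{C_i^{\,n_i}}{n_i!}
\;=\; \prod_i \sum_{n_i=0}^{\infty} \frac{C_i^{\,n_i}}{n_i!}
\;=\; \prod_i e^{C_i}
\;=\; \exp\Big(\sum_i C_i\Big),
\qquad
W[J] = \ln Z[J] = \sum_i C_i .
```

Taking the logarithm turns the sum over all diagrams into a sum over connected diagrams only.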

The linked-cluster theorem was first proved to fourth order by Keith Brueckner in 1955, and to all orders by Jeffrey Goldstone in 1957.[11]

Vacuum bubbles

An immediate consequence of the linked-cluster theorem is that all vacuum bubbles, diagrams without external lines, cancel when calculating correlation functions. A correlation function is given by a ratio of path-integrals:

The numerator is the sum over all Feynman diagrams, including disconnected diagrams that do not link up to external lines at all. In terms of the connected diagrams, the numerator includes the same contributions of vacuum bubbles as the denominator:

where the sum over E diagrams includes only those diagrams each of whose connected components ends on at least one external line. The vacuum bubbles are the same whatever the external lines, and give an overall multiplicative factor. The denominator is the sum over all vacuum bubbles, and dividing removes this factor.
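The cancellation can be seen in a toy model. The sketch below assumes a zero-dimensional "field theory" (a single point), where the path integral becomes the ordinary integral of exp(−x²/2 − λx⁴/4!), the propagator is 1 and the vertex factor is −λ. At order λ the numerator for ⟨x x⟩ is 1 − 15λ/24: of the 15 contractions, 12 give the connected tadpole (12/24 = 1/2) and 3 give a free line times the figure-eight bubble (3/24 = 1/8). The denominator is 1 − λ/8, so the bubble drops out of the ratio, leaving 1 − λ/2 + O(λ²):

```python
import math


def integrate(f, lo=-12.0, hi=12.0, n=24_000):
    """Trapezoid rule; converges very fast for smooth, rapidly decaying f."""
    h = (hi - lo) / n
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, n):
        total += f(lo + i * h)
    return total * h


def weight(x, lam):
    """Zero-dimensional Euclidean weight exp(-x^2/2 - lam x^4 / 4!)."""
    return math.exp(-0.5 * x * x - lam * x ** 4 / 24)


def two_point(lam):
    """<x x> as a ratio of 'path integrals'; vacuum bubbles cancel here."""
    numerator = integrate(lambda x: x * x * weight(x, lam))
    denominator = integrate(lambda x: weight(x, lam))
    return numerator / denominator


for lam in (0.001, 0.01):
    # Compare with the connected-diagram prediction 1 - lam/2 (propagator 1,
    # tadpole with symmetry factor 2); the residual is O(lam^2).
    print(lam, two_point(lam), 1 - lam / 2)
```

Only the connected tadpole survives in the ratio; the λ/8 bubble shows up in the denominator alone.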

The vacuum bubbles then are only useful for determining Z itself, which from the definition of the path integral is equal to:

where ρ is the energy density in the vacuum. Each vacuum bubble contains a factor of δ(k) zeroing the total k at each vertex, and when there are no external lines, this contains a factor of δ(0), because momentum conservation is over-enforced. In finite volume, this factor can be identified with the total volume of spacetime (up to factors of 2π fixed by the Fourier convention). Dividing by the volume, the remaining integral for the vacuum bubble has an interpretation: it is a contribution to the energy density of the vacuum.

Sources

Correlation functions are the sum of the connected Feynman diagrams, but the formalism treats the connected and disconnected diagrams differently. Internal lines end on vertices, while external lines go off to insertions. Introducing sources unifies the formalism, by making new vertices where one line can end.

Sources are external fields, fields that contribute to the action, but are not dynamical variables. A scalar field source is another scalar field h that contributes a term to the (Lorentz) Lagrangian:

In the Feynman expansion, this contributes H terms with one half-line ending on a vertex. Lines in a Feynman diagram can now end either on an X vertex, or on an H vertex, and only one line enters an H vertex. The Feynman rule for an H vertex is that a line from an H with momentum k gets a factor of h(k).

The sum of the connected diagrams in the presence of sources includes a term for each connected diagram in the absence of sources, except now the diagrams can end on the source. Traditionally, a source is represented by a little "×" with one line extending out, exactly as an insertion.

where C(k_1, ..., k_n) is the connected diagram with n external lines carrying momentum as indicated. The sum is over all connected diagrams, as before.

The field h is not dynamical, which means that there is no path integral over h: h is just a parameter in the Lagrangian, which varies from point to point. The path integral for the field is:

and it is a function of the values of h at every point. One way to interpret this expression is that it is taking the Fourier transform in field space. If there is a probability density on ℝ^n, the Fourier transform of the probability density is:

The Fourier transform is the expectation of an oscillatory exponential. The path integral in the presence of a source h(x) is:

which, on a lattice, is the product of an oscillatory exponential for each field value:

The Fourier transform of a delta-function is a constant, which gives a formal expression for a delta function:

This tells you what a field delta function looks like in a path-integral. For two scalar fields φ and η,

which integrates over the Fourier transform coordinate, over h. This expression is useful for formally changing field coordinates in the path integral, much as a delta function is used to change coordinates in an ordinary multi-dimensional integral.

The partition function is now a function of the field h, and the physical partition function is the value when h is the zero function:

The correlation functions are derivatives of the path integral with respect to the source:
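In zero dimensions this statement can be checked directly. The sketch assumes a hypothetical non-Gaussian weight exp(−x⁴/4) and a Fourier-type source as above, Z(h) = ∫ dx weight(x) e^{ihx}. Each h-derivative brings down a factor ix, so ⟨x²⟩ = −Z″(0)/Z(0); the second derivative is approximated by a central finite difference and compared with the moment computed directly:

```python
import math


def integrate(f, lo=-10.0, hi=10.0, n=20_000):
    """Trapezoid rule on a uniform grid."""
    h = (hi - lo) / n
    return h * (0.5 * (f(lo) + f(hi)) + sum(f(lo + i * h) for i in range(1, n)))


def weight(x):
    # Hypothetical non-Gaussian example weight exp(-x^4 / 4).
    return math.exp(-x ** 4 / 4)


def Z(h):
    """Partition function with source: integral of weight(x) * exp(i h x).

    The weight is even, so the sine part vanishes and only the cosine
    part survives.
    """
    return integrate(lambda x: weight(x) * math.cos(h * x))


eps = 1e-3
# <x^2> = (1/Z) (1/i)^2 d^2Z/dh^2 at h = 0, via a central finite difference.
second_derivative = (Z(eps) - 2 * Z(0.0) + Z(-eps)) / eps ** 2
from_source = -second_derivative / Z(0.0)
direct = integrate(lambda x: x * x * weight(x)) / Z(0.0)
print(from_source, direct)
```

Higher correlation functions follow the same way from higher derivatives; in a field theory the ordinary derivative becomes a functional derivative with respect to h(x).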

In Euclidean space, source contributions to the action can still appear with a factor of i, so that they still do a Fourier transform.

Spin 1/2; "photons" and "ghosts"

Spin 1/2: Grassmann integrals

The field path integral can be extended to the Fermi case, but only if the notion of integration is expanded. A Grassmann integral of a free Fermi field is a high-dimensional determinant or Pfaffian, which defines the new type of Gaussian integration appropriate for Fermi fields.

The two fundamental formulas of Grassmann integration are:

where M is an arbitrary matrix and ψ̄, ψ are independent Grassmann variables for each index i, and

where A is an antisymmetric matrix, ψ is a collection of Grassmann variables, and the 1/2 is to prevent double-counting (since ψiψj = −ψjψi).
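The determinant formula can be verified with a small exact implementation of a Grassmann algebra. In this sketch, elements are stored as dictionaries from sorted tuples of generator indices to coefficients, and the product sign counts the transpositions needed to sort the generators. The Berezin measure convention is an assumption: the integral is taken to pick out the coefficient of the top monomial ψ_1ψ̄_1ψ_2ψ̄_2⋯ψ_nψ̄_n, for which the Gaussian integral of exp(−ψ̄Mψ) equals det M (other orderings of the measure can change the overall sign):

```python
from fractions import Fraction
from math import factorial


def mono_mul(a, b):
    """Product of two monomials (sorted tuples of generator indices).

    Returns (sign, monomial); sign is 0 if a generator repeats (theta^2 = 0).
    """
    if set(a) & set(b):
        return 0, ()
    inversions = sum(1 for x in a for y in b if x > y)
    return (-1) ** inversions, tuple(sorted(a + b))


def mul(p, q):
    """Multiply Grassmann polynomials stored as {monomial: coefficient}."""
    out = {}
    for ma, ca in p.items():
        for mb, cb in q.items():
            sign, m = mono_mul(ma, mb)
            if sign:
                out[m] = out.get(m, 0) + sign * ca * cb
    return {m: c for m, c in out.items() if c != 0}


def psi(i):     # generator index of psi_i
    return 2 * i


def psibar(i):  # generator index of psibar_i
    return 2 * i + 1


def gaussian_berezin(M):
    """Integrate exp(-psibar M psi) over all psi, psibar (convention above)."""
    n = len(M)
    action = {}
    for i in range(n):
        for j in range(n):
            sign, m = mono_mul((psibar(i),), (psi(j),))
            action[m] = action.get(m, 0) - sign * Fraction(M[i][j])
    # The action is even and nilpotent, so the exponential series stops at n.
    exp_action, power = {}, {(): Fraction(1)}
    for k in range(n + 1):
        for m, c in power.items():
            exp_action[m] = exp_action.get(m, 0) + c / factorial(k)
        power = mul(power, action)
    return exp_action.get(tuple(range(2 * n)), 0)


print(gaussian_berezin([[1, 2], [3, 4]]))  # det = -2
```

Exact rational arithmetic avoids any doubt about rounding; the cost grows exponentially with n, so this is only a check for small matrices.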

In matrix notation, where ψ̄ and η̄ are Grassmann-valued row vectors, η and ψ are Grassmann-valued column vectors, and M is a real-valued matrix:

where the last equality is a consequence of the translation invariance of the Grassmann integral. The Grassmann variables η are external sources for ψ, and differentiating with respect to η pulls down factors of ψ.

again, in a schematic matrix notation. The meaning of the formula above is that the derivative with respect to the appropriate components of η and η̄ gives the matrix element of M⁻¹. This is exactly analogous to the bosonic path integration formula for a Gaussian integral of a complex bosonic field:

So the propagator is the inverse of the matrix in the quadratic part of the action in both the Bose and Fermi cases.
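On the bosonic side, the statement can be checked numerically for a two-component real field, where the weight exp(−xᵀMx/2) gives ⟨x_i x_j⟩ = (M⁻¹)_ij. This minimal sketch assumes a symmetric, positive-definite 2 × 2 matrix M and uses a plain grid sum; the grid spacing cancels in the ratio, and for a smooth, rapidly decaying Gaussian the discretization error is negligible:

```python
import math


def gaussian_moments(M, half_width=9.0, steps=360):
    """Return <x_i x_j> for the weight exp(-x^T M x / 2) on a 2D grid."""
    h = 2 * half_width / steps
    grid = [-half_width + k * h for k in range(steps + 1)]
    z = 0.0
    m = [[0.0, 0.0], [0.0, 0.0]]
    for x in grid:
        for y in grid:
            w = math.exp(-0.5 * (M[0][0] * x * x + 2 * M[0][1] * x * y
                                 + M[1][1] * y * y))
            v = (x, y)
            z += w
            for i in range(2):
                for j in range(2):
                    m[i][j] += v[i] * v[j] * w
    return [[m[i][j] / z for j in range(2)] for i in range(2)]


def inverse_2x2(M):
    """Closed-form inverse of a 2 x 2 matrix."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det], [-M[1][0] / det, M[0][0] / det]]


M = [[2.0, 0.5], [0.5, 1.0]]
print(gaussian_moments(M))
print(inverse_2x2(M))
```

The two printed matrices agree: the "propagator" of the quadratic form is its inverse.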

For real Grassmann fields, for Majorana fermions, the path integral is a Pfaffian times a source quadratic form, and the formulas give the square root of the determinant, just as they do for real Bosonic fields. The propagator is still the inverse of the quadratic part.

The free Dirac Lagrangian:

formally gives the equations of motion and the anticommutation relations of the Dirac field, just as the Klein Gordon Lagrangian in an ordinary path integral gives the equations of motion and commutation relations of the scalar field. By using the spatial Fourier transform of the Dirac field as a new basis for the Grassmann algebra, the quadratic part of the Dirac action becomes simple to invert:

The propagator is the inverse of the matrix M linking ψ̄(k) and ψ(k), since different values of k do not mix together.

The analog of Wick's theorem matches ψ and ψ̄ in pairs:

where S is the sign of the permutation that reorders the sequence of ψ and ψ̄ to put the ones that are paired up to make the delta functions next to each other, with the ψ̄ coming right before the ψ. Since a ψ̄, ψ pair is a commuting element of the Grassmann algebra, it does not matter what order the pairs are in. If more than one ψ̄, ψ pair has the same k, the integral is zero, and it is easy to check that the sum over pairings gives zero in this case (there is always an even number of them). This is the Grassmann analog of the higher Gaussian moments that completed the Bosonic Wick's theorem earlier.

The rules for spin-1/2 Dirac particles are as follows: the propagator is the inverse of the Dirac operator, the lines have arrows just as for a complex scalar field, and the diagram acquires an overall factor of −1 for each closed Fermi loop. If there is an odd number of Fermi loops, the diagram changes sign. Historically, the −1 rule was very difficult for Feynman to discover. He discovered it after a long process of trial and error, since he lacked a proper theory of Grassmann integration.

The rule follows from the observation that the number of Fermi lines at a vertex is always even, since each term in the Lagrangian must be Bosonic. A Fermi loop is counted by following Fermionic lines until one comes back to the starting point, then removing those lines from the diagram. Repeating this process eventually erases all the Fermionic lines: this is Euler's algorithm for decomposing a graph into edge-disjoint cycles, which works whenever each vertex has even degree. The number of steps in the Euler algorithm equals the number of independent Fermionic homology cycles only in the common special case that all terms in the Lagrangian are exactly quadratic in the Fermi fields, so that each vertex has exactly two Fermionic lines. When there are four-Fermi interactions (as in the Fermi effective theory of the weak nuclear interaction), there are more k-integrals than Fermi loops. In this case, the counting rule should apply the Euler algorithm by pairing up the Fermi lines at each vertex into pairs that together form a bosonic factor of the term in the Lagrangian, and when entering a vertex by one line, the algorithm should always leave with the partner line.
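The loop-following procedure, including the "leave by the partner line" rule for four-Fermi vertices, can be sketched in a few lines. The encoding is hypothetical: half-lines are integers, `line_partner[h]` is the other end of the propagator containing h, and `vertex_partner[h]` is the half-line paired with h inside its vertex's bosonic bilinear:

```python
def count_fermi_loops(line_partner, vertex_partner):
    """Count closed Fermi loops by following lines through vertices.

    Arriving at a vertex on half-line h', the walk always leaves on
    vertex_partner[h'], the partner of the half-line it arrived on.
    """
    visited = set()
    loops = 0
    for start in line_partner:
        if start in visited:
            continue
        loops += 1
        h = start
        while True:
            visited.add(h)
            visited.add(line_partner[h])
            h = vertex_partner[line_partner[h]]
            if h == start:
                break
    return loops


def fermi_sign(line_partner, vertex_partner):
    """Overall sign (-1)^(number of closed Fermi loops)."""
    return (-1) ** count_fermi_loops(line_partner, vertex_partner)


# Two quadratic vertices joined by two Fermi lines (a single loop).
lines = {0: 1, 1: 0, 2: 3, 3: 2}
print(fermi_sign(lines, {0: 3, 3: 0, 1: 2, 2: 1}))
# One four-Fermi vertex with two self-contracted lines: the sign depends on
# how the four half-lines are grouped into bilinears.
print(fermi_sign(lines, {0: 1, 1: 0, 2: 3, 3: 2}),
      fermi_sign(lines, {0: 2, 2: 0, 1: 3, 3: 1}))
```

Because the walk marks both ends of every line it crosses, each loop is counted once regardless of the direction in which it is first encountered.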

To clarify and prove the rule, consider a Feynman diagram formed from vertices, terms in the Lagrangian, with Fermion fields. The full term is Bosonic, it is a commuting element of the Grassmann algebra, so the order in which the vertices appear is not important. The Fermi lines are linked into loops, and when traversing the loop, one can reorder the vertex terms one after the other as one goes around without any sign cost. The exception is when you return to the starting point, and the final half-line must be joined with the unlinked first half-line. This requires one permutation to move the last ψ to go in front of the first ψ, and this gives the sign.

This rule is the only visible effect of the exclusion principle in internal lines. When there are external lines, the amplitudes are antisymmetric when two Fermi insertions for identical particles are interchanged. This is automatic in the source formalism, because the sources for Fermi fields are themselves Grassmann valued.

Spin 1: photons

The naive propagator for photons is infinite, since the Lagrangian for the A-field is:

The quadratic form defining the propagator is non-invertible. The reason is the gauge invariance of the field; adding a gradient to A does not change the physics.

To fix this problem, one needs to fix a gauge. The most convenient way is to demand that the divergence of A is some function f, whose value is random from point to point. It does no harm to integrate over the values of f, since it only determines the choice of gauge. This procedure inserts the following factor into the path integral for A:

The first factor, the delta function, fixes the gauge. The second factor sums over different values of f that are inequivalent gauge fixings. This is simply

The additional contribution from gauge-fixing cancels the second half of the free Lagrangian, giving the Feynman Lagrangian:

which is just like four independent free scalar fields, one for each component of A. The Feynman propagator is:

The one difference is that the sign of one propagator is wrong in the Lorentz case: the timelike component has an opposite sign propagator. This means that these particle states have negative norm—they are not physical states. In the case of photons, it is easy to show by diagram methods that these states are not physical—their contribution cancels with longitudinal photons to only leave two physical photon polarization contributions for any value of k.

If the averaging over f is done with a coefficient different from 1/2, the two terms do not cancel completely. This gives a covariant Lagrangian with a coefficient ξ, which does not affect any physical result:

and the covariant propagator for QED is:
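For reference, the standard form of this gauge-fixed Lagrangian and propagator is shown below. It assumes the metric signature (+, −, −, −) and a gauge-fixing term −(∂_μA^μ)²/(2ξ); other sign and signature conventions change the overall signs. The choice ξ = 1 is Feynman gauge, and ξ = 0 is Landau gauge:

```latex
\mathcal{L} = -\tfrac14 F_{\mu\nu}F^{\mu\nu} - \frac{1}{2\xi}\,(\partial_\mu A^\mu)^2,
\qquad
\tilde D_{\mu\nu}(k) = \frac{-i}{k^2 + i\epsilon}\left[g_{\mu\nu} - (1-\xi)\,\frac{k_\mu k_\nu}{k^2}\right].
```

The ξ-dependent piece is proportional to k_μk_ν and drops out of gauge-invariant quantities.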

Spin 1: non-Abelian ghosts

To find the Feynman rules for non-Abelian gauge fields, the procedure that performs the gauge fixing must be carefully corrected to account for a change of variables in the path-integral.

The gauge fixing factor has an extra determinant from popping the delta function:

To find the form of the determinant, consider first a simple two-dimensional integral of a function f that depends only on r, not on the angle θ. Inserting an integral over θ:

The derivative-factor ensures that popping the delta function in θ removes the integral. Exchanging the order of integration,

but now the delta-function can be popped in y,

The integral over θ just gives an overall factor of 2π, while the rate of change of y with a change in θ is just x, so this exercise reproduces the standard formula for polar integration of a radial function:

In the path-integral for a nonabelian gauge field, the analogous manipulation is:

The factor in front is the volume of the gauge group, and it contributes a constant, which can be discarded. The remaining integral is over the gauge fixed action.

To get a covariant gauge, the gauge fixing condition is the same as in the Abelian case:

whose variation under an infinitesimal gauge transformation is given by:

where α is the adjoint-valued element of the Lie algebra at every point that performs the infinitesimal gauge transformation. This adds the Faddeev–Popov determinant to the action:

which can be rewritten as a Grassmann integral by introducing ghost fields:

The determinant is independent of f, so the path-integral over f can give the Feynman propagator (or a covariant propagator) by choosing the measure for f as in the Abelian case. The full gauge-fixed action is then the Yang–Mills action in Feynman gauge with an additional ghost action:

The diagrams are derived from this action. The propagator for the spin-1 fields has the usual Feynman form. There are vertices of degree 3 with momentum factors whose couplings are the structure constants, and vertices of degree 4 whose couplings are products of structure constants. There are additional ghost loops, which cancel out timelike and longitudinal states in A loops.

In the Abelian case, the determinant for covariant gauges does not depend on A, so the ghosts do not contribute to the connected diagrams.

Particle-path representation

Feynman diagrams were originally discovered by Feynman, by trial and error, as a way to represent the contribution to the S-matrix from different classes of particle trajectories.

Schwinger representation

The Euclidean scalar propagator has a suggestive representation:

The meaning of this identity (which is an elementary integration) is made clearer by Fourier transforming to real space.

The contribution at any one value of τ to the propagator is a Gaussian of width √τ. The total propagation function from 0 to x is a weighted sum over all proper times τ of a normalized Gaussian, the probability of ending up at x after a random walk of time τ.
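Explicitly, in d Euclidean dimensions and assuming the Fourier convention G(x) = ∫ d^dk/(2π)^d e^{ik·x} G(k), the identity and its real-space form read as follows. The exact Gaussian has variance 2τ in each direction, which matches the width √τ quoted above up to a constant factor:

```latex
\frac{1}{k^2+m^2} = \int_0^\infty d\tau\, e^{-\tau (k^2+m^2)},
\qquad
G(x) = \int_0^\infty d\tau\, e^{-\tau m^2}\,\frac{e^{-x^2/(4\tau)}}{(4\pi\tau)^{d/2}} .
```

Each factor (4πτ)^{-d/2} e^{-x²/(4τ)} integrates to 1 over x, which is why it can be read as a probability density for a random walk of duration τ.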

The path-integral representation for the propagator is then:

which is a path-integral rewrite of the Schwinger representation.

The Schwinger representation is useful both for making manifest the particle aspect of the propagator and for symmetrizing denominators of loop diagrams.

Combining denominators

The Schwinger representation has an immediate practical application to loop diagrams. For example, for the diagram in the φ⁴ theory formed by joining two Xs together along two half-lines, and making the remaining lines external, the integral over the internal propagators in the loop is:

Here one line carries momentum k and the other k + p. The asymmetry can be fixed by putting everything in the Schwinger representation.

Now the exponent mostly depends on t + t′,

except for the asymmetrical little bit. Defining the variable u = t + t′ and v = t′/u, the variable u goes from 0 to ∞, while v goes from 0 to 1. The variable u is the total proper time for the loop, while v parametrizes the fraction of the proper time on the top of the loop versus the bottom.

The Jacobian for this transformation of variables is easy to work out from the identities t = u(1 − v) and t′ = uv, which give

dt = (1 − v) du − u dv,   dt′ = v du + u dv,

and "wedging" gives

dt ∧ dt′ = u du ∧ dv.

This allows the u integral to be evaluated explicitly:

leaving only the v-integral. This method, invented by Schwinger but usually attributed to Feynman, is called combining denominators. Abstractly, it is the elementary identity:
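The identity 1/(AB) = ∫₀¹ dv / [(1 − v)A + vB]² is easy to check numerically. This is a minimal sketch in which A and B stand for positive Euclidean denominators such as k² + m² and (k + p)² + m², with the v-integral done by composite Simpson quadrature:

```python
def simpson(f, a, b, n=200):
    """Composite Simpson rule with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def combined_two(A, B):
    """Right-hand side of 1/(AB) = integral_0^1 dv / [(1 - v) A + v B]^2."""
    return simpson(lambda v: 1.0 / ((1 - v) * A + v * B) ** 2, 0.0, 1.0)


for A, B in [(1.0, 1.0), (2.0, 5.0), (1.0, 3.0)]:
    print(1 / (A * B), combined_two(A, B))
```

The integrand is a single squared denominator that is linear in v, which is what makes the subsequent shift of the loop momentum possible.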

But this form does not provide the physical motivation for introducing v; v is the proportion of proper time on one of the legs of the loop.

Once the denominators are combined, a shift in k to k′ = k + vp symmetrizes everything:

This form shows that as soon as p² is more negative than −4m², where m is the mass of the particle in the loop (which happens in a physical region of Lorentz space), the integral has a cut. This is exactly when the external momentum can create physical particles.
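Completing the square shows where the threshold comes from. The sketch below uses Euclidean notation, with v the Schwinger fraction on the line carrying k + p, so that the shift is k′ = k + vp as in the text:

```latex
(1-v)\,(k^2+m^2) + v\,\big((k+p)^2+m^2\big) = (k+vp)^2 + m^2 + v(1-v)\,p^2 ,
\qquad
\int \frac{d^dk}{(2\pi)^d}\,\frac{1}{(k^2+m^2)\,\big((k+p)^2+m^2\big)}
= \int_0^1 dv \int \frac{d^dk'}{(2\pi)^d}\,\frac{1}{\big(k'^2 + m^2 + v(1-v)\,p^2\big)^2}.
```

Because v(1 − v) ≤ 1/4, with the maximum at v = 1/2, the combination m² + v(1 − v)p² can vanish only once p² ≤ −4m².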

When the loop has more vertices, there are more denominators to combine:

The general rule follows from the Schwinger prescription for n + 1 denominators:

The integral over the Schwinger parameters u_i can be split up as before into an integral over the total proper time u = u_0 + u_1 + ... + u_n and an integral over the fraction of the proper time in all but the first segment of the loop, v_i = u_i/u for i ∈ {1, 2, ..., n}. The v_i are positive and add up to less than 1, so that the v integral is over an n-dimensional simplex.

The Jacobian for the coordinate transformation can be worked out as before:

Wedging all these equations together, one obtains

This gives the integral:

where the simplex is the region defined by the conditions

as well as

Performing the u integral gives the general prescription for combining denominators:

Since the numerator of the integrand is not involved, the same prescription works for any loop, no matter what spins are carried by the legs. The interpretation of the parameters v_i is that they are the fraction of the total proper time spent on each leg.
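For n + 1 = 3 denominators the general prescription becomes 1/(A_0A_1A_2) = 2! ∫ dv_1 dv_2 / [(1 − v_1 − v_2)A_0 + v_1A_1 + v_2A_2]³ over the triangle v_1, v_2 ≥ 0, v_1 + v_2 ≤ 1. A minimal numerical check, assuming positive denominators A_i and using nested Simpson quadrature over the simplex:

```python
def simpson(f, a, b, n=200):
    """Composite Simpson rule with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def combined_three(A0, A1, A2):
    """2! times the simplex integral of 1 / [(1-v1-v2) A0 + v1 A1 + v2 A2]^3."""
    def inner(v1):
        # Integrate v2 from 0 to 1 - v1, so that v1 + v2 <= 1.
        return simpson(
            lambda v2: 1.0 / ((1 - v1 - v2) * A0 + v1 * A1 + v2 * A2) ** 3,
            0.0, 1.0 - v1)
    return 2 * simpson(inner, 0.0, 1.0)


print(1 / (1.0 * 2.0 * 3.0), combined_three(1.0, 2.0, 3.0))
```

The prefactor n! and the power n + 1 of the combined denominator both come from the u-integral; the v_i only set the weights of the individual legs.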

Scattering

The correlation functions of a quantum field theory describe the scattering of particles. The definition of "particle" in relativistic field theory is not self-evident, because if you try to determine the position so that the uncertainty is less than the Compton wavelength, the uncertainty in energy is large enough to produce more particles and antiparticles of the same type from the vacuum. This means that the notion of a single-particle state is to some extent incompatible with the notion of an object localized in space.

In the 1930s, Wigner gave a mathematical definition for single-particle states: they are a collection of states that form an irreducible representation of the Poincaré group. Single particle states describe an object with a finite mass, a well defined momentum, and a spin. This definition is fine for protons and neutrons, electrons and photons, but it excludes quarks, which are permanently confined, so the modern point of view is more accommodating: a particle is anything whose interaction can be described in terms of Feynman diagrams, which have an interpretation as a sum over particle trajectories.

A field operator can act to produce a one-particle state from the vacuum, which means that the field operator φ(x) produces a superposition of Wigner particle states. In the free field theory, the field produces one-particle states only. But when there are interactions, the field operator can also produce 3-particle and 5-particle states (and, if there is no φ → −φ symmetry, also 2-, 4- and 6-particle states). Computing the scattering amplitude for single-particle states therefore requires a careful limit, sending the fields to infinity and integrating over space to get rid of the higher-order corrections.

The relation between scattering and correlation functions is the LSZ theorem: the scattering amplitude for n particles to go to m particles in a scattering event is given by the sum of the Feynman diagrams that go into the correlation function for n + m field insertions, leaving out the propagators for the external legs.

For example, for the λφ⁴ interaction of the previous section, the order-λ contribution to the (Lorentz) correlation function is:

Stripping off the external propagators, that is, removing the factors of i/k², gives the invariant scattering amplitude M:

which is a constant, independent of the incoming and outgoing momentum. The interpretation of the scattering amplitude is that the sum of |M|² over all possible final states is the probability for the scattering event. The normalization of the single-particle states must be chosen carefully, however, to ensure that M is a relativistic invariant.

Non-relativistic single particle states are labeled by the momentum k, and they are chosen to have the same norm at every value of k. This is because the nonrelativistic unit operator on single particle states is:

In relativity, the integral over the k-states for a particle of mass m integrates over a hyperbola in E,k space defined by the energy–momentum relation:

If the integral weighs each k point equally, the measure is not Lorentz-invariant. The invariant measure integrates over all values of k and E, restricting to the hyperbola with a Lorentz-invariant delta function:
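Carrying out the E-integral against the delta function shows what the invariant measure amounts to in terms of k alone. Factors of 2π are omitted here and are convention-dependent; θ(E) selects the positive-energy branch of the hyperbola:

```latex
\int dE\, d^3k\;\delta\!\left(E^2 - \mathbf{k}^2 - m^2\right)\theta(E)\, f(E,\mathbf{k})
= \int \frac{d^3k}{2E_{\mathbf{k}}}\, f(E_{\mathbf{k}},\mathbf{k}),
\qquad E_{\mathbf{k}} = \sqrt{\mathbf{k}^2+m^2}.
```

The step uses δ(E² − E_k²) = [δ(E − E_k) + δ(E + E_k)]/(2E_k), and the factor 1/(2E_k) is what distinguishes the invariant measure from the uniform one.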

So the normalized k-states differ from the relativistically normalized k-states by a factor of √(2E), where E = √(k² + m²) is the energy on the mass shell.

The invariant amplitude M is then the probability amplitude for relativistically normalized incoming states to become relativistically normalized outgoing states.

For nonrelativistic values of k, the relativistic normalization is the same as the nonrelativistic normalization (up to a constant factor √(2m)). In this limit, the φ⁴ invariant scattering amplitude is still constant. The particles created by the field φ scatter in all directions with equal amplitude.

The nonrelativistic potential, which scatters in all directions with an equal amplitude (in the Born approximation), is one whose Fourier transform is constant—a delta-function potential. The lowest order scattering of the theory reveals the non-relativistic interpretation of this theory—it describes a collection of particles with a delta-function repulsion. Two such particles have an aversion to occupying the same point at the same time.

Nonperturbative effects

When Feynman diagrams are viewed as a perturbation series, nonperturbative effects like tunneling do not show up, because any effect that goes to zero faster than any polynomial (such as exp(−1/g) as the coupling g → 0) does not affect the Taylor series. Even bound states are absent, since at any finite order particles are exchanged only a finite number of times, and to make a bound state, the binding force must last forever.

But this point of view is misleading, because the diagrams not only describe scattering, but they also are a representation of the short-distance field theory correlations. They encode not only asymptotic processes like particle scattering, they also describe the multiplication rules for fields, the operator product expansion. Nonperturbative tunneling processes involve field configurations that on average get big when the coupling constant gets small, but each configuration is a coherent superposition of particles whose local interactions are described by Feynman diagrams. When the coupling is small, these become collective processes that involve large numbers of particles, but where the interactions between individual particles are simple. (The perturbation series of any interacting quantum field theory has zero radius of convergence, complicating the limit of the infinite series of diagrams needed, in the limit of vanishing coupling, to describe such field configurations.)

This means that nonperturbative effects show up asymptotically in resummations of infinite classes of diagrams, and these diagrams can be locally simple. The graphs determine the local equations of motion, while the allowed large-scale configurations describe non-perturbative physics. But because Feynman propagators are nonlocal in time, translating a field process to a coherent particle language is not completely intuitive, and has only been explicitly worked out in certain special cases. For bound states such as atoms, the Bethe–Salpeter equation describes the class of diagrams to include in a relativistic description. For quantum chromodynamics, the Shifman–Vainshtein–Zakharov sum rules describe non-perturbatively excited long-wavelength field modes in particle language, but only in a phenomenological way.

The number of Feynman diagrams at high orders of perturbation theory is very large, because there are as many diagrams as there are graphs with a given number of nodes. Nonperturbative effects leave a signature on the way in which the number of diagrams and resummations diverge at high order. It is only because non-perturbative effects appear in hidden form in diagrams that it was possible to analyze nonperturbative effects in string theory, where in many cases a Feynman description is the only one available.
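The growth of the number of diagrams can be made concrete in the zero-dimensional toy version of the φ⁴ theory (weight exp(−x²/2 − λx⁴/4!), an assumption used only for illustration). There (4n − 1)!! counts the Wick pairings of the 4n half-lines of n X vertices, so the order-λⁿ coefficient of Z(λ)/Z(0) is (−1/24)ⁿ(4n − 1)!!/n!, the sum of 1/S over all vacuum diagrams at that order. The ratio of successive coefficients grows like 2n/3, so the series has zero radius of convergence:

```python
from fractions import Fraction
from math import factorial


def double_factorial(k):
    """k!! = k (k - 2) (k - 4) ... ; equals 1 for k <= 0, so (-1)!! = 1."""
    result = 1
    while k > 1:
        result *= k
        k -= 2
    return result


def vacuum_coefficient(n):
    """Order-lambda^n coefficient of Z(lambda) / Z(0) in zero dimensions.

    (4n - 1)!! counts the Wick pairings of 4n half-lines; dividing by
    n! (4!)^n gives the sum of 1/S over all vacuum diagrams at this order,
    and (-1)^n comes from the vertex factor -lambda.
    """
    return Fraction(-1, 24) ** n * double_factorial(4 * n - 1) / factorial(n)


for n in range(1, 6):
    ratio = abs(vacuum_coefficient(n) / vacuum_coefficient(n - 1))
    print(n, vacuum_coefficient(n), float(ratio))
```

Since the ratio of successive terms grows without bound, the series diverges for every nonzero λ, even though each individual coefficient is a finite sum over diagrams.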

In popular culture

- A Feynman diagram of a virtual particle producing a quark–antiquark pair was featured in the television sitcom The Big Bang Theory, in the episode "The Bat Jar Conjecture".

- PhD Comics of January 11, 2012, shows Feynman diagrams that visualize and describe quantum academic interactions, i.e. the paths followed by Ph.D. students when interacting with their advisors.[12]

- Vacuum Diagrams, a science fiction story by Stephen Baxter, features the titular vacuum diagram, a specific type of Feynman diagram.

- Feynman and his wife, Gweneth Howarth, bought a Dodge Tradesman Maxivan in 1975 and had it painted with Feynman diagrams.[13] The van is currently owned by video game designer and physicist Seamus Blackley.[14][15][16][17][18][19][20][21][22] Its license plate read "Qantum".[23]

See also

Notes

- "It was Dyson's contribution to indicate how Feynman's visual insights could be used [...] He realized that Feynman diagrams [...] can also be viewed as a representation of the logical content of field theories (as stated in their perturbative expansions)". Schweber, op.cit (1994)

References

- ↑ Kaiser, David (2005). "Physics and Feynman's Diagrams". American Scientist 93 (2): 156. doi:10.1511/2005.52.957. http://web.mit.edu/dikaiser/www/FdsAmSci.pdf.

- ↑ "Why Feynman Diagrams Are So Important". Quanta Magazine. 5 July 2016. https://www.quantamagazine.org/why-feynman-diagrams-are-so-important-20160705/.

- ↑ Feynman, Richard (1949). "The Theory of Positrons". Physical Review 76 (6): 749–759. doi:10.1103/PhysRev.76.749. Bibcode: 1949PhRv...76..749F. https://authors.library.caltech.edu/3520/. Retrieved 2021-11-12. "In this solution, the 'negative energy states' appear in a form which may be pictured (as by Stückelberg) in space-time as waves traveling away from the external potential backwards in time. Experimentally, such a wave corresponds to a positron approaching the potential and annihilating the electron.".

- ↑ Penco, R.; Mauro, D. (2006). "Perturbation theory via Feynman diagrams in classical mechanics". European Journal of Physics 27 (5): 1241–1250. doi:10.1088/0143-0807/27/5/023. Bibcode: 2006EJPh...27.1241P.

- ↑ Johnson, George (July 2000). "The Jaguar and the Fox". The Atlantic. https://www.theatlantic.com/issues/2000/07/johnson.htm.

- ↑ Gribbin, John; Gribbin, Mary (1997). "Chapter 5". Richard Feynman: A Life in Science. Penguin-Putnam.

- ↑ Mlodinow, Leonard (2011). Feynman's Rainbow. Vintage. p. 29.

- ↑ 't Hooft, Gerardus; Veltman, Martinus (1973). Diagrammar. CERN Yellow Report. Reprinted in G. 't Hooft, Under the Spell of the Gauge Principle (World Scientific, Singapore, 1994).

- ↑ Veltman, Martinus (1994). Diagrammatica: The Path to Feynman Diagrams. Cambridge Lecture Notes in Physics. ISBN 0-521-45692-4.

- ↑ Bjorken, J. D.; Drell, S. D. (1965). Relativistic Quantum Fields. New York: McGraw-Hill. p. viii. ISBN 978-0-07-005494-3.

- ↑ Fetter, Alexander L.; Walecka, John Dirk (2003-06-20). Quantum Theory of Many-particle Systems. Courier Corporation. ISBN 978-0-486-42827-7. https://books.google.com/books?id=0wekf1s83b0C.

- ↑ Cham, Jorge (January 11, 2012). "Academic Interaction – Feynman Diagrams". PhD Comics.

- ↑ Jepsen, Kathryn (2014-08-05). "Saving the Feynman van". Symmetry Magazine. https://www.symmetrymagazine.org/article/may-2014/saving-the-feynman-van.

- ↑ Dubner, Stephen J. (Feb 7, 2024). "The Brilliant Mr. Feynman". Freakonomics Radio. https://freakonomics.com/podcast/the-brilliant-mr-feynman/.

- ↑ "Fermilab Today". https://www.fnal.gov/pub/today/archive/archive_2014/today14-05-14.html.

- ↑

- ↑

- ↑ "The Feynman Van". 21 October 2021. https://www.youtube.com/watch?v=PDGFUYTOBcI.

- ↑ "Pathways for Theoretical Advances in Visualization" (January 2017). IEEE Computer Graphics and Applications 37 (4): 103–112. doi:10.1109/MCG.2017.3271463. https://www.researchgate.net/publication/319224539.

- ↑ "Fermilab | TUFTE Exhibit | April 12-June 26, 2014 | About the Exhibit". https://www.fnal.gov/pub/tufte/about.html.

- ↑

- ↑ "Dr. Feynman's Doodles". Science News. July 12, 2005. https://www.sciencenews.org/article/dr-feynmans-doodles.

- ↑ "Quantum". https://www.lizalzonaart.com/quantum.

Sources

- Veltman, Martinus J. G.; 't Hooft, Gerardus (1973). Diagrammar (Report). CERN Yellow Report. doi:10.5170/CERN-1973-009. http://cds.cern.ch/record/186259.

- Kaiser, David (2005). Drawing theories apart: the dispersion of Feynman diagrams in postwar physics. Chicago: University of Chicago Press. ISBN 978-0-226-42266-4.

- Veltman, Martinus (1994-06-16). Diagrammatica: The Path to Feynman Diagrams. Cambridge Lecture Notes in Physics. ISBN 0-521-45692-4. https://archive.org/details/diagrammaticapat00velt. (expanded, updated version of 't Hooft & Veltman, 1973, cited above)

- Srednicki, Mark Allen (2006). Quantum field theory. Script (Draft ed.). Santa Barbara, Calif: University of California, Santa Barbara. https://web.physics.ucsb.edu/~mark/qft.html. Retrieved 2011-01-28.

- Schweber, Silvan S. (1994). QED and the men who made it: Dyson, Feynman, Schwinger, and Tomonaga. Princeton series in physics. Princeton, NJ: Princeton University Press. ISBN 978-0-691-03327-3. https://archive.org/details/qedmenwhomadeitd0000schw.

External links

- AMS article: "What's New in Mathematics: Finite-dimensional Feynman Diagrams"

- Drawing Feynman diagrams, explained by Flip Tanedo at Quantumdiaries.com

- Drawing Feynman diagrams with FeynDiagram C++ library that produces PostScript output.

- Online Diagram Tool: a graphical application for creating publication-ready diagrams.

- JaxoDraw: a Java program for drawing Feynman diagrams.

- Bowley, Roger; Copeland, Ed (2010). "Feynman Diagrams". Sixty Symbols. Brady Haran for the University of Nottingham. http://www.sixtysymbols.com/videos/feynman.htm.
