Orthogonality

In mathematics, orthogonality is the generalization of the notion of perpendicularity to the linear algebra of bilinear forms. Two elements u and v of a vector space with bilinear form B are orthogonal when B(u, v) = 0. Depending on the bilinear form, the vector space may contain nonzero self-orthogonal vectors. In the case of function spaces, families of orthogonal functions are used to form a basis.

By extension, orthogonality is also used to refer to the separation of specific features of a system. The term also has specialized meanings in other fields including art and chemistry.

Etymology

The word comes from the Ancient Greek ὀρθός (orthós), meaning "upright",[1] and γωνία (gōnía), meaning "angle".[2]

The Ancient Greek ὀρθογώνιον (orthogṓnion) and Classical Latin orthogonium originally denoted a rectangle.[3] Later, they came to mean a right triangle. In the 12th century, the post-classical Latin word orthogonalis came to mean a right angle or something related to a right angle.[4]

Mathematics and physics

Definitions

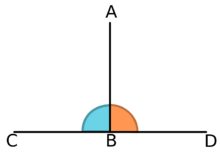

- In geometry, two Euclidean vectors are orthogonal if they are perpendicular, i.e., they form a right angle.

- Two vectors, x and y, in an inner product space, V, are orthogonal if their inner product is zero.[6] This relationship is denoted x ⊥ y.

- An orthogonal matrix is a matrix whose column vectors are orthonormal to each other.

- Two vector subspaces, A and B, of an inner product space V, are called orthogonal subspaces if each vector in A is orthogonal to each vector in B. The largest subspace of V that is orthogonal to a given subspace is its orthogonal complement.

- Given a module M and its dual M∗, an element m′ of M∗ and an element m of M are orthogonal if their natural pairing is zero, i.e. ⟨m′, m⟩ = 0. Two sets S′ ⊆ M∗ and S ⊆ M are orthogonal if each element of S′ is orthogonal to each element of S.[7]

- A term rewriting system is said to be orthogonal if it is left-linear and is non-ambiguous. Orthogonal term rewriting systems are confluent.

A set of vectors in an inner product space is called pairwise orthogonal if each pairing of them is orthogonal. Such a set is called an orthogonal set.
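
The definitions above translate directly into a few lines of code. The following is a minimal sketch in Python, assuming the standard dot product as the inner product; the helper names (`dot`, `is_orthogonal_set`, `is_orthonormal_set`, `columns`) and the sample matrix `Q` are illustrative choices, not part of any library.

```python
from fractions import Fraction
from itertools import combinations

def dot(u, v):
    """Standard dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal_set(vectors):
    """True if every pair of distinct vectors has inner product zero."""
    return all(dot(u, v) == 0 for u, v in combinations(vectors, 2))

def is_orthonormal_set(vectors):
    """True if the set is orthogonal and every vector has unit length."""
    return is_orthogonal_set(vectors) and all(dot(v, v) == 1 for v in vectors)

def columns(matrix):
    """Columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*matrix)]

# Rotation built from the 3-4-5 right triangle, so every entry is exact.
Q = [[Fraction(3, 5), Fraction(-4, 5)],
     [Fraction(4, 5), Fraction(3, 5)]]

print(is_orthonormal_set(columns(Q)))         # True: Q is an orthogonal matrix
print(is_orthogonal_set([(1, 1), (1, -1)]))   # True: orthogonal set
print(is_orthonormal_set([(1, 1), (1, -1)]))  # False: vectors are not unit length
```

Exact rational arithmetic is used here so that the comparisons with 0 and 1 are not affected by floating-point rounding.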

In certain cases, the word normal is used to mean orthogonal, particularly in the geometric sense as in the normal to a surface. For example, the y-axis is normal to the curve y = x² at the origin. However, normal may also refer to the magnitude of a vector. In particular, a set is called orthonormal (orthogonal plus normal) if it is an orthogonal set of unit vectors. As a result, use of the term normal to mean "orthogonal" is often avoided. The word "normal" also has a different meaning in probability and statistics.

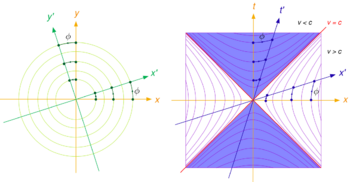

A vector space with a bilinear form generalizes the case of an inner product. When the bilinear form applied to two vectors results in zero, then they are orthogonal. The case of a pseudo-Euclidean plane uses the term hyperbolic orthogonality. In the diagram, axes x′ and t′ are hyperbolic-orthogonal for any given ϕ.
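
As a worked illustration, assume coordinates (x, t) on the pseudo-Euclidean plane and the bilinear form below; the sign convention and the parametrization of the axes by the hyperbolic angle ϕ are assumptions made for this sketch rather than details taken from the diagram.

$$B(u, v) = u_x v_x - u_t v_t$$

Taking the direction of the x′ axis as (cosh ϕ, sinh ϕ) and that of the t′ axis as (sinh ϕ, cosh ϕ) gives

$$B\big((\cosh\phi, \sinh\phi),\ (\sinh\phi, \cosh\phi)\big) = \cosh\phi\,\sinh\phi - \sinh\phi\,\cosh\phi = 0,$$

so the two axes are hyperbolic-orthogonal for every ϕ. The same form also has nonzero self-orthogonal vectors, for example u = (1, 1), since B(u, u) = 1 − 1 = 0.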

Euclidean vector spaces

In Euclidean space, two vectors are orthogonal if and only if their dot product is zero, i.e. they make an angle of 90° (π/2 radians), or one of the vectors is zero.[8] Hence orthogonality of vectors is an extension of the concept of perpendicular vectors to spaces of any dimension.

The orthogonal complement of a subspace is the space of all vectors that are orthogonal to every vector in the subspace. In a three-dimensional Euclidean vector space, the orthogonal complement of a line through the origin is the plane through the origin perpendicular to it, and vice versa.[9]

Note that the geometric concept of two planes being perpendicular does not correspond to the orthogonal complement, since in three dimensions a pair of vectors, one from each of a pair of perpendicular planes, might meet at any angle.

In four-dimensional Euclidean space, the orthogonal complement of a line is a hyperplane and vice versa, and that of a plane is a plane.[9]
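
The dimension counts above can be checked numerically. The sketch below computes a basis of an orthogonal complement by Gram–Schmidt orthogonalization without normalization, in exact rational arithmetic; this is one possible approach among several, and the function name `orthogonal_complement` is a hypothetical helper introduced here.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def orthogonal_complement(spanning, dim):
    """Return an orthogonal basis of the complement of span(spanning) in Q^dim."""
    def reduce(v, basis):
        # Subtract from v its projection onto each (mutually orthogonal) basis vector.
        for b in basis:
            coeff = dot(v, b) / dot(b, b)
            v = [x - coeff * y for x, y in zip(v, b)]
        return v

    # Orthogonalize the spanning set first, dropping dependent vectors.
    sub_basis = []
    for v in spanning:
        r = reduce([Fraction(x) for x in v], sub_basis)
        if any(r):
            sub_basis.append(r)

    # Push the standard basis through the same process; the nonzero
    # remainders are orthogonal to the subspace and to each other.
    comp_basis = []
    for i in range(dim):
        e = [Fraction(int(i == j)) for j in range(dim)]
        r = reduce(e, sub_basis + comp_basis)
        if any(r):
            comp_basis.append(r)
    return comp_basis

print(len(orthogonal_complement([[1, 2, 3]], 3)))                   # 2: a plane
print(len(orthogonal_complement([[1, 0, 0, 0]], 4)))                # 3: a hyperplane
print(len(orthogonal_complement([[1, 0, 0, 0], [0, 1, 0, 0]], 4)))  # 2: a plane
```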

Orthogonal functions

By using integral calculus, it is common to use the following to define the inner product of two functions f and g with respect to a nonnegative weight function w over an interval [a, b]:

$$\langle f, g\rangle_w = \int_a^b f(x)\,g(x)\,w(x)\,dx.$$

In simple cases, w(x) = 1.

We say that functions f and g are orthogonal if their inner product (equivalently, the value of this integral) is zero:

$$\langle f, g\rangle_w = 0.$$

Orthogonality of two functions with respect to one inner product does not imply orthogonality with respect to another inner product.

We write the norm with respect to this inner product as

$$\|f\|_w = \sqrt{\langle f, f\rangle_w}.$$

The members of a set of functions {f_i : i = 1, 2, 3, ...} are orthogonal with respect to w on the interval [a, b] if

$$\langle f_i, f_j\rangle_w = 0 \quad \text{for } i \neq j.$$

The members of such a set of functions are orthonormal with respect to w on the interval [a, b] if

$$\langle f_i, f_j\rangle_w = \delta_{ij},$$

where

$$\delta_{ij} = \begin{cases} 1, & i = j \\ 0, & i \neq j \end{cases}$$

is the Kronecker delta. In other words, every pair of them (excluding pairing of a function with itself) is orthogonal, and the norm of each is 1. See in particular the orthogonal polynomials.
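
The weighted inner product of two functions, that is, the integral of f(x) g(x) w(x) over [a, b], can be approximated numerically. The sketch below uses a simple midpoint rule; the function names `inner` and `norm`, the number of subintervals, and the sample weight 1 + x are assumptions chosen for illustration. It also shows that two functions orthogonal under one weight need not be orthogonal under another.

```python
import math

def inner(f, g, a, b, w=lambda x: 1.0, n=10_000):
    """Approximate the integral of f(x) * g(x) * w(x) over [a, b] (midpoint rule)."""
    h = (b - a) / n
    midpoints = (a + (i + 0.5) * h for i in range(n))
    return h * sum(f(x) * g(x) * w(x) for x in midpoints)

def norm(f, a, b, w=lambda x: 1.0):
    """Norm induced by the weighted inner product."""
    return math.sqrt(inner(f, f, a, b, w))

identity = lambda x: x
one = lambda x: 1.0

# x and 1 are orthogonal on [-1, 1] with unit weight ...
unit_weight = inner(identity, one, -1, 1)
# ... but not with the nonnegative weight w(x) = 1 + x (exact value 2/3).
other_weight = inner(identity, one, -1, 1, w=lambda x: 1 + x)

# sin and cos are orthogonal on [-pi, pi]; the norm of sin is sqrt(pi).
sin_cos = inner(math.sin, math.cos, -math.pi, math.pi)
sin_norm = norm(math.sin, -math.pi, math.pi)

print(unit_weight, other_weight, sin_cos, sin_norm)
```

The printed values are approximations; the first and third should be close to 0, the second close to 2/3, and the fourth close to √π.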

Examples

- The vectors (1, 3, 2)ᵀ, (3, −1, 0)ᵀ, (1, 3, −5)ᵀ are orthogonal to each other, since (1)(3) + (3)(−1) + (2)(0) = 0, (3)(1) + (−1)(3) + (0)(−5) = 0, and (1)(1) + (3)(3) + (2)(−5) = 0.

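- The vectors (1, 0, 1, 0, ...)ᵀ and (0, 1, 0, 1, ...)ᵀ are orthogonal to each other. The dot product of these vectors is 0. We can then generalize to vectors in Z₂ⁿ: for some positive integer a, the vectors whose only nonzero entries are ones in the positions congruent to k modulo a are orthogonal to each other for distinct residues k, because their supports do not overlap. For example, with a = 3 and n = 8, the vectors (1, 0, 0, 1, 0, 0, 1, 0)ᵀ, (0, 1, 0, 0, 1, 0, 0, 1)ᵀ, (0, 0, 1, 0, 0, 1, 0, 0)ᵀ are orthogonal.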

- The functions 2t + 3 and 45t² + 9t − 17 are orthogonal with respect to a unit weight function on the interval from −1 to 1:

$$\int_{-1}^{1} (2t + 3)\left(45t^2 + 9t - 17\right)dt = 0.$$

- The functions 1, sin(nx), cos(nx) : n = 1, 2, 3, ... are orthogonal with respect to Riemann integration on the intervals [0, 2π], [−π, π], or any other closed interval of length 2π. This fact is a central one in Fourier series.
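
The first and third examples in this list can be verified exactly. The following sketch represents polynomials as coefficient lists (lowest degree first) and integrates them term by term with rational arithmetic; the helper names `poly_mul` and `poly_integral` are introduced here for illustration.

```python
from fractions import Fraction
from itertools import combinations

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_integral(p, a, b):
    """Exact integral of the polynomial p over [a, b]."""
    a, b = Fraction(a), Fraction(b)
    return sum(Fraction(c, k + 1) * (b ** (k + 1) - a ** (k + 1))
               for k, c in enumerate(p))

vectors = [(1, 3, 2), (3, -1, 0), (1, 3, -5)]
pairwise = [dot(u, v) for u, v in combinations(vectors, 2)]
print(pairwise)  # [0, 0, 0]

f = [3, 2]        # 2t + 3
g = [-17, 9, 45]  # 45t^2 + 9t - 17
inner_fg = poly_integral(poly_mul(f, g), -1, 1)
print(inner_fg)   # 0
```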

Orthogonal polynomials

Various polynomial sequences named for mathematicians of the past are sequences of orthogonal polynomials. In particular:

- The Hermite polynomials are orthogonal with respect to the Gaussian distribution with zero mean value.

- The Legendre polynomials are orthogonal with respect to the uniform distribution on the interval [−1, 1].

- The Laguerre polynomials are orthogonal with respect to the exponential distribution. Somewhat more general Laguerre polynomial sequences are orthogonal with respect to gamma distributions.

- The Chebyshev polynomials of the first kind are orthogonal with respect to the measure dx / √(1 − x²) on the interval [−1, 1].

- The Chebyshev polynomials of the second kind are orthogonal with respect to the Wigner semicircle distribution.
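
As an illustration of one entry in this list, the sketch below generates Legendre polynomials with Bonnet's recursion, (n + 1) P_{n+1}(x) = (2n + 1) x P_n(x) − n P_{n−1}(x), and checks their orthogonality on [−1, 1] exactly. It uses the unnormalized weight w(x) = 1 rather than the uniform density 1/2, so the squared norm of P_n comes out as 2/(2n + 1); the function names are illustrative.

```python
from fractions import Fraction

def legendre(n):
    """Coefficient lists (lowest degree first) of P_0..P_n via Bonnet's recursion."""
    polys = [[Fraction(1)], [Fraction(0), Fraction(1)]]
    for k in range(1, n):
        x_pk = [Fraction(0)] + polys[k]          # coefficients of x * P_k
        prev = polys[k - 1] + [Fraction(0)] * 2  # P_{k-1}, padded to the same length
        polys.append([((2 * k + 1) * a - k * b) / (k + 1)
                      for a, b in zip(x_pk, prev)])
    return polys[: n + 1]

def inner(p, q):
    """Exact integral of p(x) * q(x) over [-1, 1] with unit weight."""
    total = Fraction(0)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            k = i + j
            if k % 2 == 0:  # odd powers integrate to zero on [-1, 1]
                total += a * b * Fraction(2, k + 1)
    return total

P = legendre(4)
print(inner(P[2], P[3]))  # 0
print(inner(P[1], P[3]))  # 0
print(inner(P[2], P[2]))  # 2/5
```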

Orthogonal states in quantum mechanics

- In quantum mechanics, a sufficient (but not necessary) condition that two eigenstates of a Hermitian operator, ψ_m and ψ_n, are orthogonal is that they correspond to different eigenvalues. This means, in Dirac notation, that ⟨ψ_m|ψ_n⟩ = 0 if ψ_m and ψ_n correspond to different eigenvalues. This follows from the fact that Schrödinger's equation is a Sturm–Liouville equation (in Schrödinger's formulation) or that observables are given by Hermitian operators (in Heisenberg's formulation).[citation needed]
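
The standard argument for the Hermitian-operator case can be sketched as follows. The symbols are introduced here for illustration: A is the Hermitian operator, and ψ_m, ψ_n are eigenstates with eigenvalues a_m and a_n, which are real because A is Hermitian.

$$a_n \langle\psi_m|\psi_n\rangle = \langle\psi_m|A\psi_n\rangle = \langle A\psi_m|\psi_n\rangle = a_m^{*} \langle\psi_m|\psi_n\rangle = a_m \langle\psi_m|\psi_n\rangle$$

Hence

$$(a_n - a_m)\,\langle\psi_m|\psi_n\rangle = 0,$$

so the inner product must vanish whenever a_m ≠ a_n. The condition is not necessary because eigenstates sharing a degenerate eigenvalue may also be chosen orthogonal.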

Art

In art, the perspective (imaginary) lines pointing to the vanishing point are referred to as "orthogonal lines". The term "orthogonal line" often has a quite different meaning in the literature of modern art criticism. Many works by painters such as Piet Mondrian and Burgoyne Diller are noted for their exclusive use of "orthogonal lines" — not, however, with reference to perspective, but rather referring to lines that are straight and exclusively horizontal or vertical, forming right angles where they intersect. For example, an essay at the web site of the Thyssen-Bornemisza Museum states that "Mondrian ... dedicated his entire oeuvre to the investigation of the balance between orthogonal lines and primary colours."

Computer science

Orthogonality in programming language design is the ability to use various language features in arbitrary combinations with consistent results.[10] This usage was introduced by Van Wijngaarden in the design of Algol 68:

The number of independent primitive concepts has been minimized in order that the language be easy to describe, to learn, and to implement. On the other hand, these concepts have been applied “orthogonally” in order to maximize the expressive power of the language while trying to avoid deleterious superfluities.[11]

Orthogonality is a system design property which guarantees that modifying the technical effect produced by a component of a system neither creates nor propagates side effects to other components of the system. Typically this is achieved through the separation of concerns and encapsulation, and it is essential for feasible and compact designs of complex systems. The emergent behavior of a system consisting of components should be controlled strictly by formal definitions of its logic and not by side effects resulting from poor integration, i.e., non-orthogonal design of modules and interfaces. Orthogonality reduces testing and development time because it is easier to verify designs that neither cause side effects nor depend on them.

An instruction set is said to be orthogonal if it lacks redundancy (i.e., there is only a single instruction that can be used to accomplish a given task)[12] and is designed such that instructions can use any register in any addressing mode. This terminology results from considering an instruction as a vector whose components are the instruction fields. One field identifies the registers to be operated upon and another specifies the addressing mode. An orthogonal instruction set uniquely encodes all combinations of registers and addressing modes.[13]
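
The idea of independent instruction fields can be illustrated with a toy encoding. Everything in the sketch below is hypothetical: the four opcodes, registers, and addressing modes, and the 6-bit word layout, are invented for the example and do not describe any real architecture. The point is only that each field is encoded independently, so every combination is valid and maps to a unique word.

```python
from itertools import product

OPCODES = ["LOAD", "STORE", "ADD", "SUB"]               # hypothetical operations
REGISTERS = ["R0", "R1", "R2", "R3"]                    # hypothetical register file
MODES = ["immediate", "direct", "indirect", "indexed"]  # hypothetical addressing modes

def encode(opcode, register, mode):
    """Pack three independent 2-bit fields into one 6-bit instruction word."""
    return ((OPCODES.index(opcode) << 4)
            | (REGISTERS.index(register) << 2)
            | MODES.index(mode))

def decode(word):
    """Recover the three fields from a 6-bit instruction word."""
    return (OPCODES[(word >> 4) & 0b11],
            REGISTERS[(word >> 2) & 0b11],
            MODES[word & 0b11])

all_instructions = list(product(OPCODES, REGISTERS, MODES))
words = [encode(*ins) for ins in all_instructions]

print(len(all_instructions), len(set(words)))  # 64 64
print(decode(encode("ADD", "R2", "indirect")))
```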

Communications

In communications, multiple-access schemes are orthogonal when an ideal receiver can completely reject arbitrarily strong unwanted signals from the desired signal using different basis functions. One such scheme is time-division multiple access (TDMA), where the orthogonal basis functions are nonoverlapping rectangular pulses ("time slots").

Another scheme is orthogonal frequency-division multiplexing (OFDM), which refers to the use, by a single transmitter, of a set of frequency-multiplexed signals with the exact minimum frequency spacing needed to make them orthogonal so that they do not interfere with each other. Well-known examples include the a, g, and n versions of 802.11 Wi-Fi; WiMAX; ITU-T G.hn; DVB-T, the terrestrial digital TV broadcast system used in most of the world outside North America; and DMT (Discrete Multi Tone), the standard form of ADSL.

In OFDM, the subcarrier frequencies are chosen so that the subcarriers are orthogonal to each other, meaning that crosstalk between the subchannels is eliminated and intercarrier guard bands are not required. This greatly simplifies the design of both the transmitter and the receiver. In conventional FDM, a separate filter for each subchannel is required.
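
A discrete-time sketch of subcarrier orthogonality: complex exponentials that complete a whole number of cycles over one symbol period have zero inner product with each other, while a subcarrier placed at a non-integer spacing does not. The symbol length N = 64 and the function names are arbitrary choices for this illustration, and the model ignores channel effects, cyclic prefixes, and pulse shaping.

```python
import cmath

N = 64  # samples per symbol (an arbitrary choice for this sketch)

def subcarrier(freq, n_samples=N):
    """Complex exponential completing `freq` cycles over one symbol period."""
    return [cmath.exp(2j * cmath.pi * freq * n / n_samples)
            for n in range(n_samples)]

def inner(x, y):
    """Inner product of two complex sequences."""
    return sum(a * b.conjugate() for a, b in zip(x, y))

same = abs(inner(subcarrier(3), subcarrier(3)))          # energy of one subcarrier
adjacent = abs(inner(subcarrier(3), subcarrier(4)))      # integer spacing
off_grid = abs(inner(subcarrier(3), subcarrier(3.5)))    # half-integer spacing

print(same, adjacent, off_grid)
```

With integer spacing the cross term is zero up to rounding error, which is why no guard band is needed between adjacent subcarriers in this idealized model.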

Statistics, econometrics, and economics

When performing statistical analysis, independent variables that affect a particular dependent variable are said to be orthogonal if they are uncorrelated,[14] since the covariance forms an inner product. In this case the same results are obtained for the effect of any of the independent variables upon the dependent variable, regardless of whether one models the effects of the variables individually with simple regression or simultaneously with multiple regression. If correlation is present, the factors are not orthogonal and different results are obtained by the two methods. This usage arises from the fact that if centered by subtracting the expected value (the mean), uncorrelated variables are orthogonal in the geometric sense discussed above, both as observed data (i.e., vectors) and as random variables (i.e., density functions). One econometric formalism that is alternative to the maximum likelihood framework, the Generalized Method of Moments, relies on orthogonality conditions. In particular, the Ordinary Least Squares estimator may be easily derived from an orthogonality condition between the explanatory variables and model residuals.
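
The orthogonality condition behind Ordinary Least Squares can be checked on a small example. The sketch below fits y = a + b·x using the usual closed-form estimates and verifies that the residuals are orthogonal to both the constant regressor and x; the data values are made up for illustration.

```python
from fractions import Fraction

def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_mean = Fraction(sum(xs), n)
    y_mean = Fraction(sum(ys), n)
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    b = s_xy / s_xx
    return y_mean - b * x_mean, b

xs = [1, 2, 3, 4]  # illustrative data
ys = [2, 3, 5, 4]
a, b = fit_line(xs, ys)
residuals = [y - (a + b * x) for x, y in zip(xs, ys)]

print(a, b)                                        # 3/2 4/5
print(sum(residuals))                              # 0: orthogonal to the constant
print(sum(x * e for x, e in zip(xs, residuals)))   # 0: orthogonal to x
```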

Taxonomy

In taxonomy, an orthogonal classification is one in which no item is a member of more than one group, that is, the classifications are mutually exclusive.

Combinatorics

In combinatorics, two n×n Latin squares are said to be orthogonal if their superimposition yields all possible n² combinations of entries.[15]
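
This definition is easy to test by superimposing the squares and counting distinct ordered pairs. The sketch below does so for two Latin squares of order 3; the function name and the sample squares are chosen for illustration.

```python
def are_orthogonal(square_a, square_b):
    """True if superimposing two n-by-n squares yields n*n distinct ordered pairs."""
    n = len(square_a)
    pairs = {(square_a[r][c], square_b[r][c]) for r in range(n) for c in range(n)}
    return len(pairs) == n * n

A = [[0, 1, 2],
     [1, 2, 0],
     [2, 0, 1]]
B = [[0, 1, 2],
     [2, 0, 1],
     [1, 2, 0]]

print(are_orthogonal(A, B))  # True
print(are_orthogonal(A, A))  # False: only the pairs (0,0), (1,1), (2,2) occur
```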

Chemistry and biochemistry

In synthetic organic chemistry, orthogonal protection is a strategy allowing the deprotection of functional groups independently of each other. In chemistry and biochemistry, an orthogonal interaction occurs when there are two pairs of substances and each substance can interact with its respective partner, but does not interact with either substance of the other pair. For example, DNA has two orthogonal pairs: cytosine and guanine form a base-pair, and adenine and thymine form another base-pair, but other base-pair combinations are strongly disfavored. As a chemical example, tetrazine reacts with trans-cyclooctene and azide reacts with cyclooctyne without any cross-reaction, so these are mutually orthogonal reactions and can be performed simultaneously and selectively.[16] Bioorthogonal chemistry refers to chemical reactions occurring inside living systems without reacting with naturally present cellular components. In supramolecular chemistry, the notion of orthogonality refers to the possibility of two or more supramolecular, often non-covalent, interactions being compatible, that is, forming reversibly without interference from one another.

In analytical chemistry, analyses are "orthogonal" if they make a measurement or identification in completely different ways, thus increasing the reliability of the measurement. Orthogonal testing thus can be viewed as "cross-checking" of results, and the "cross" notion corresponds to the etymologic origin of orthogonality. Orthogonal testing is often required as a part of a new drug application.

System reliability

In the field of system reliability, orthogonal redundancy is the form of redundancy in which the backup device or method is completely different from the error-prone device or method it protects. The failure mode of an orthogonally redundant backup device or method does not intersect with, and is completely different from, the failure mode of the device or method in need of redundancy, which safeguards the total system against catastrophic failure.

Neuroscience

In neuroscience, a sensory map in the brain which has overlapping stimulus coding (e.g. location and quality) is called an orthogonal map.

Gaming

In board games such as chess which feature a grid of squares, 'orthogonal' is used to mean "in the same row/'rank' or column/'file'". This is the counterpart to squares which are "diagonally adjacent".[17] In the ancient Chinese board game Go, a player can capture the stones of an opponent by occupying all orthogonally adjacent points.
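
In code, the distinction between orthogonal and diagonal adjacency on a grid comes down to which coordinate offsets are allowed. The sketch below assumes a board addressed by (row, column) pairs starting at 0, with an 8×8 default size; the names are illustrative.

```python
ORTHOGONAL_STEPS = [(-1, 0), (1, 0), (0, -1), (0, 1)]   # same file or same rank
DIAGONAL_STEPS = [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def neighbors(row, col, steps, rows=8, cols=8):
    """Squares reachable from (row, col) by one of `steps`, staying on the board."""
    return [(row + dr, col + dc) for dr, dc in steps
            if 0 <= row + dr < rows and 0 <= col + dc < cols]

print(neighbors(0, 0, ORTHOGONAL_STEPS))       # [(1, 0), (0, 1)]
print(neighbors(0, 0, DIAGONAL_STEPS))         # [(1, 1)]
print(len(neighbors(3, 3, ORTHOGONAL_STEPS)))  # 4
```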

Other examples

Stereo vinyl records encode both the left and right stereo channels in a single groove. The V-shaped groove in the vinyl has walls that are 90 degrees to each other, with variations in each wall separately encoding one of the two analogue channels that make up the stereo signal. The cartridge senses the motion of the stylus following the groove in two orthogonal directions: 45 degrees from vertical to either side.[18] A pure horizontal motion corresponds to a mono signal, equivalent to a stereo signal in which both channels carry identical (in-phase) signals.

See also

- Imaginary number

- Isogonal

- Isogonal trajectory

- Orthogonal complement

- Orthogonal group

- Orthogonal matrix

- Orthogonal polynomials

- Orthogonalization

- Orthonormal basis

- Orthonormality

- Orthogonal transform

- Pan-orthogonality occurs in coquaternions

- Surface normal

- Orthogonal ligand-protein pair

References

1. Liddell and Scott, A Greek–English Lexicon s.v. ὀρθός

2. Liddell and Scott, A Greek–English Lexicon s.v. γωνία

3. Liddell and Scott, A Greek–English Lexicon s.v. ὀρθογώνιον

4. (3rd ed.). Oxford University Press. September 2004.

5. J.A. Wheeler; C. Misner; K.S. Thorne (1973). Gravitation. W.H. Freeman & Co. p. 58. ISBN 0-7167-0344-0.

6. "Wolfram MathWorld". http://mathworld.wolfram.com/Orthogonal.html.

7. Bourbaki, Algebra I, p. 234

8. Trefethen, Lloyd N.; Bau, David (1997). Numerical linear algebra. SIAM. p. 13. ISBN 978-0-89871-361-9. https://books.google.com/books?id=bj-Lu6zjWbEC&pg=PA13.

9. R. Penrose (2007). The Road to Reality. Vintage books. pp. 417–419. ISBN 978-0-679-77631-4.

10. Michael L. Scott, Programming Language Pragmatics, p. 228.

11. 1968, Adriaan van Wijngaarden et al., Revised Report on the Algorithmic Language ALGOL 68, section 0.1.2, Orthogonal design

12. Null, Linda; Lobur, Julia (2006). The essentials of computer organization and architecture (2nd ed.). Jones & Bartlett Learning. p. 257. ISBN 978-0-7637-3769-6. https://books.google.com/books?id=QGPHAl9GE-IC&pg=PA257.

13. Linda Null (2010). The Essentials of Computer Organization and Architecture. Jones & Bartlett Publishers. pp. 287–288. ISBN 978-1449600068. https://samples.jbpub.com/9781449600068/00068_ch05_null3e.pdf.

14. Athanasios Papoulis; S. Unnikrishna Pillai (2002). Probability, Random Variables and Stochastic Processes. McGraw-Hill. p. 211. ISBN 0-07-366011-6.

15. Hedayat, A. (1999). Orthogonal arrays: theory and applications. Springer. p. 168. ISBN 978-0-387-98766-8. https://books.google.com/books?id=HrUYlIbI2mEC&pg=PA168.

16. Karver, Mark R.; Hilderbrand, Scott A. (2012). "Bioorthogonal Reaction Pairs Enable Simultaneous, Selective, Multi-Target Imaging". Angewandte Chemie International Edition 51 (4): 920–2. doi:10.1002/anie.201104389. PMID 22162316.

17. "chessvariants.org chess glossary". http://www.chessvariants.org/misc.dir/coreglossary.html#orthogonal_direction.

18. For an illustration, see YouTube.

Further reading