Regularized least squares

This article should be summarized in Least squares#Regularization and a link provided from there to here using the {{Main}} template. See guidance in Wikipedia:Summary style. (November 2020) |

| Part of a series on |

| Regression analysis |

|---|

|

| Models |

| Estimation |

| Background |

|

|

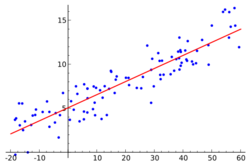

Regularized least squares (RLS) is a family of methods for solving the least-squares problem while using regularization to further constrain the resulting solution.

RLS is used for two main reasons. The first comes up when the number of variables in the linear system exceeds the number of observations. In such settings, the ordinary least-squares problem is ill-posed: the associated optimization problem has infinitely many solutions, so the data alone cannot determine a unique fit. RLS allows the introduction of further constraints that uniquely determine the solution.

The second reason for using RLS arises when the learned model suffers from poor generalization. RLS can be used in such cases to improve the generalizability of the model by constraining it at training time. This constraint can either force the solution to be "sparse" in some way or to reflect other prior knowledge about the problem such as information about correlations between features. A Bayesian understanding of this can be reached by showing that RLS methods are often equivalent to priors on the solution to the least-squares problem.

General formulation

Consider a learning setting given by a probabilistic space $(X \times Y, \rho(X, Y))$, $Y \subseteq \mathbb{R}$. Let $S = \{(x_i, y_i)\}_{i=1}^{n}$ denote a training set of $n$ pairs drawn i.i.d. with respect to the joint distribution $\rho$. Let $V : Y \times \mathbb{R} \to [0, \infty)$ be a loss function. Define $F$ as the space of the functions $f$ such that the expected risk

$$\varepsilon(f) = \int V(y, f(x)) \, d\rho(x, y)$$

is well defined. The main goal is to minimize the expected risk:

$$\inf_{f \in F} \varepsilon(f).$$

Since the problem cannot be solved exactly, there is a need to specify how to measure the quality of a solution. A good learning algorithm should provide an estimator with a small risk.

As the joint distribution $\rho$ is typically unknown, the empirical risk is taken instead. For regularized least squares the square loss function is introduced:

$$\varepsilon_S(f) = \frac{1}{n} \sum_{i=1}^{n} V(y_i, f(x_i)) = \frac{1}{n} \sum_{i=1}^{n} (y_i - f(x_i))^2.$$

However, if the functions are from a relatively unconstrained space, such as the set of square-integrable functions on $X$, this approach may overfit the training data and lead to poor generalization. Thus, the complexity of the function $f$ should be constrained or penalized. In RLS, this is accomplished by choosing functions from a reproducing kernel Hilbert space (RKHS) $\mathcal{H}$, and adding a regularization term to the objective function, proportional to the squared norm of the function in $\mathcal{H}$:

$$\inf_{f \in \mathcal{H}} \frac{1}{n} \sum_{i=1}^{n} (y_i - f(x_i))^2 + \lambda \|f\|_{\mathcal{H}}^2, \quad \lambda > 0.$$

Kernel formulation

Definition of RKHS

An RKHS can be defined by a symmetric positive-definite kernel function $K(x, z)$ with the reproducing property

$$\langle K_x, f \rangle_{\mathcal{H}} = f(x),$$

where $K_x(z) = K(x, z)$. The RKHS for a kernel $K$ consists of the completion of the space of functions spanned by $\{K_x \mid x \in X\}$:

$$f(x) = \sum_{i=1}^{n} \alpha_i K_{x_i}(x), \quad f \in \mathcal{H},$$

where all $\alpha_i$ are real numbers. Some commonly used kernels include the linear kernel, inducing the space of linear functions,

$$K(x, z) = x^T z,$$

the polynomial kernel, inducing the space of polynomial functions of order $p$,

$$K(x, z) = (x^T z + 1)^p,$$

and the Gaussian kernel,

$$K(x, z) = e^{-\|x - z\|^2 / \sigma^2}.$$

Note that for an arbitrary loss function $V$, this approach defines a general class of algorithms named Tikhonov regularization. For instance, using the hinge loss leads to the support vector machine algorithm, and using the epsilon-insensitive loss leads to support vector regression.

Arbitrary kernel

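The representer theorem guarantees that the solution can be written as

$$f(x) = \sum_{i=1}^{n} c_i K(x_i, x)$$

for some $c \in \mathbb{R}^n$.

The minimization problem can be expressed as

$$\min_{c \in \mathbb{R}^n} \frac{1}{n} \|Y - Kc\|_{\mathbb{R}^n}^2 + \lambda \|f\|_{\mathcal{H}}^2,$$

where $Y = (y_1, \dots, y_n)^T$ is the vector of outputs and, with some abuse of notation, the $(i, j)$ entry of the kernel matrix $K$ (as opposed to the kernel function $K(\cdot, \cdot)$) is $K(x_i, x_j)$.

For such a function,

$$\|f\|_{\mathcal{H}}^2 = \langle f, f \rangle_{\mathcal{H}} = \left\langle \sum_{i=1}^{n} c_i K(x_i, \cdot), \sum_{j=1}^{n} c_j K(x_j, \cdot) \right\rangle_{\mathcal{H}} = \sum_{i=1}^{n} \sum_{j=1}^{n} c_i c_j K(x_i, x_j) = c^T K c.$$

The following minimization problem can be obtained:

$$\min_{c \in \mathbb{R}^n} \frac{1}{n} \|Y - Kc\|_{\mathbb{R}^n}^2 + \lambda c^T K c.$$

As the sum of convex functions is convex, the objective is convex and a minimizer can be found by setting the gradient with respect to $c$ to $0$:

$$-\frac{2}{n} K (Y - Kc) + 2 \lambda K c = 0 \;\Rightarrow\; K (K + \lambda n I) c = K Y,$$

which is satisfied by

$$c = (K + \lambda n I)^{-1} Y,$$

where $c \in \mathbb{R}^n$. The matrix $K + \lambda n I$ is invertible for $\lambda > 0$ because $K$ is positive semidefinite.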

Complexity

The complexity of training is basically the cost of computing the kernel matrix plus the cost of solving the linear system, which is roughly $O(n^3)$. The computation of the kernel matrix for the linear or Gaussian kernel is $O(n^2 d)$, where $d$ is the dimension of the inputs. The complexity of testing is $O(n)$ kernel evaluations per test point.

Prediction

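The prediction at a new test point $x_*$ is:

$$f(x_*) = \sum_{i=1}^{n} c_i K(x_i, x_*) = K(X, x_*)^T c,$$

where $K(X, x_*)$ denotes the vector with entries $K(x_i, x_*)$.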
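
The training and prediction steps can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: it assumes the Gaussian kernel $e^{-\|x - z\|^2/\sigma^2}$, solves $(K + \lambda n I)c = Y$ for the coefficients, and evaluates $f(x_*) = \sum_i c_i K(x_i, x_*)$. The function names, the synthetic sine data and the parameter values are hypothetical choices made for the example.

```python
import numpy as np

def gaussian_kernel(A, B, sigma=1.0):
    """Kernel matrix with entries exp(-||a - b||^2 / sigma^2)."""
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-np.maximum(sq_dists, 0.0) / sigma**2)

def fit_kernel_rls(X, Y, lam, sigma=1.0):
    """Solve (K + lam * n * I) c = Y for the coefficient vector c."""
    n = X.shape[0]
    K = gaussian_kernel(X, X, sigma)
    return np.linalg.solve(K + lam * n * np.eye(n), Y)

def predict_kernel_rls(X_train, c, X_test, sigma=1.0):
    """Evaluate f(x*) = sum_i c_i K(x_i, x*) at each test point."""
    return gaussian_kernel(X_test, X_train, sigma) @ c

# Hypothetical toy data: noisy samples of a sine curve.
rng = np.random.default_rng(0)
X = rng.uniform(-3.0, 3.0, size=(40, 1))
Y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(40)

c = fit_kernel_rls(X, Y, lam=1e-3)
X_test = np.linspace(-2.0, 2.0, 5)[:, None]
pred = predict_kernel_rls(X, c, X_test)
print(pred)
```

Solving the linear system directly, rather than forming the inverse, follows the usual numerical practice; the cubic cost in $n$ is visible in the single call to `np.linalg.solve`.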

Linear kernel

For convenience a vector notation is introduced. Let $X$ be an $n \times d$ matrix, where the rows are input vectors, and $Y$ an $n \times 1$ vector where the entries are the corresponding outputs. In terms of vectors, the kernel matrix can be written as $K = X X^T$. The learning function can be written as:

$$f(x_*) = K_{x_*} c = x_*^T X^T c = x_*^T w.$$

Here we define $w = X^T c$, $w \in \mathbb{R}^d$. The objective function can be rewritten as:

$$\frac{1}{n} \|Y - Kc\|_{\mathbb{R}^n}^2 + \lambda c^T K c = \frac{1}{n} \|Y - X X^T c\|_{\mathbb{R}^n}^2 + \lambda c^T X X^T c = \frac{1}{n} \|Y - Xw\|_{\mathbb{R}^n}^2 + \lambda \|w\|_{\mathbb{R}^d}^2.$$

The first term is the objective function from ordinary least squares (OLS) regression, corresponding to the residual sum of squares. The second term is a regularization term, not present in OLS, which penalizes large $w$ values. As a smooth finite-dimensional problem is considered, it is possible to apply standard calculus tools. In order to minimize the objective function, the gradient is calculated with respect to $w$ and set to zero:

$$X^T X w - X^T Y + \lambda n w = 0 \;\Rightarrow\; w = (X^T X + \lambda n I)^{-1} X^T Y.$$

This solution closely resembles that of standard linear regression, with an extra term $\lambda n I$. If the assumptions of OLS regression hold, the solution $w = (X^T X)^{-1} X^T Y$, with $\lambda = 0$, is an unbiased estimator, and is the minimum-variance linear unbiased estimator, according to the Gauss–Markov theorem. The term $\lambda n I$ therefore leads to a biased solution; however, it also tends to reduce variance. This is easy to see: for noise with variance $\sigma^2$, the covariance matrix of the $w$-values is $\sigma^2 (X^T X + \lambda n I)^{-1} X^T X (X^T X + \lambda n I)^{-1}$, and therefore large values of $\lambda$ will lead to lower variance. Therefore, manipulating $\lambda$ corresponds to trading off bias and variance. For problems with high-variance $w$ estimates, such as cases with relatively small $n$ or with correlated regressors, the optimal prediction accuracy may be obtained by using a nonzero $\lambda$, and thus introducing some bias to reduce variance. Furthermore, it is not uncommon in machine learning to have cases where $n < d$, in which case $X^T X$ is rank-deficient, and a nonzero $\lambda$ is necessary to compute $(X^T X + \lambda n I)^{-1}$.

Complexity

The parameter $\lambda$ controls the invertibility of the matrix $X^T X + \lambda n I$. Several methods can be used to solve the above linear system, Cholesky decomposition being probably the method of choice, since the matrix $X^T X + \lambda n I$ is symmetric and, for $\lambda > 0$, positive definite. The complexity of this method is $O(n d^2)$ for training and $O(d)$ for testing. The cost $O(n d^2)$ is essentially that of computing $X^T X$, whereas the inverse computation (or rather the solution of the linear system) is roughly $O(d^3)$.
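
As a hedged sketch of the Cholesky route, the snippet below solves $(X^T X + \lambda n I) w = X^T Y$ with NumPy in a setting with more variables than observations, then checks that the same weights arise from the $n \times n$ kernel system through $w = X^T c$. The function name and the random data are assumptions for illustration. `np.linalg.solve` is a general solver that does not exploit the triangular structure of the factor; a dedicated triangular solver would normally be used for that step.

```python
import numpy as np

def ridge_cholesky(X, Y, lam):
    """Solve (X^T X + lam * n * I) w = X^T Y via a Cholesky factorization."""
    n, d = X.shape
    A = X.T @ X + lam * n * np.eye(d)   # symmetric positive definite for lam > 0
    L = np.linalg.cholesky(A)           # A = L L^T with L lower triangular
    z = np.linalg.solve(L, X.T @ Y)     # forward step: L z = X^T Y
    return np.linalg.solve(L.T, z)      # backward step: L^T w = z

# Hypothetical data with more variables than observations (n < d).
rng = np.random.default_rng(1)
n, d = 20, 50
X = rng.standard_normal((n, d))
Y = rng.standard_normal(n)
lam = 0.1

w = ridge_cholesky(X, Y, lam)

# Same predictor through the linear-kernel system: c = (X X^T + lam n I)^{-1} Y.
c = np.linalg.solve(X @ X.T + lam * n * np.eye(n), Y)
print(np.allclose(w, X.T @ c))
```

Which of the two systems is cheaper depends on whether $n$ or $d$ is smaller: the first is $d \times d$, the second $n \times n$.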

Feature maps and Mercer's theorem

In this section it will be shown how to extend RLS to any kind of reproducing kernel $K$. Instead of the linear kernel, a feature map $\varphi : X \to F$ is considered for some Hilbert space $F$, called the feature space. In this case the kernel is defined as:

$$K(x, x') = \langle \varphi(x), \varphi(x') \rangle_F.$$

The matrix $X$ is now replaced by the new data matrix $\Phi$, where $\Phi_{ij} = \varphi_j(x_i)$, the $j$-th component of $\varphi(x_i)$. It means that for a given training set $K = \Phi \Phi^T$. Thus, the objective function can be written as

$$\min_{c \in \mathbb{R}^n} \frac{1}{n} \|Y - \Phi \Phi^T c\|_{\mathbb{R}^n}^2 + \lambda c^T \Phi \Phi^T c.$$

This approach is known as the kernel trick. This technique can significantly simplify the computational operations. If the feature space $F$ is high dimensional, computing $\varphi(x_i)$ may be rather intensive. If the explicit form of the kernel function is known, we just need to compute and store the $n \times n$ kernel matrix $K$.

In fact, the Hilbert space $F$ need not be isomorphic to $\mathbb{R}^m$, and can be infinite dimensional. This follows from Mercer's theorem, which states that a continuous, symmetric, positive definite kernel function can be expressed as

$$K(x, z) = \sum_{i=1}^{\infty} \sigma_i e_i(x) e_i(z),$$

where the $e_i(x)$ form an orthonormal basis for $L^2(X)$, and $\sigma_i \geq 0$. If the feature map $\varphi(x)$ is defined with components $\varphi_i(x) = \sqrt{\sigma_i} e_i(x)$, it follows that $K(x, z) = \langle \varphi(x), \varphi(z) \rangle$. This demonstrates that any kernel can be associated with a feature map, and that RLS generally consists of linear RLS performed in some possibly higher-dimensional feature space. While Mercer's theorem shows one feature map that can be associated with a kernel, in fact multiple feature maps can be associated with a given reproducing kernel. For instance, the map $\varphi(x) = K_x$ satisfies the property $K(x, z) = \langle \varphi(x), \varphi(z) \rangle$ for an arbitrary reproducing kernel.
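
The correspondence between a kernel and an explicit feature map can be checked numerically in a small case. The sketch below assumes two-dimensional inputs and the degree-2 polynomial kernel $(x^T z + 1)^2$, for which one valid feature map has six components; the helper name and data are hypothetical. It also compares linear RLS in feature space with kernel RLS, using the $\frac{1}{n}$-normalized objective.

```python
import numpy as np

def poly2_features(X):
    """One explicit feature map for K(x, z) = (x^T z + 1)^2 on 2-D inputs."""
    x1, x2 = X[:, 0], X[:, 1]
    s = np.sqrt(2.0)
    return np.column_stack(
        [np.ones(len(X)), s * x1, s * x2, x1**2, x2**2, s * x1 * x2]
    )

rng = np.random.default_rng(3)
X = rng.standard_normal((30, 2))
Y = rng.standard_normal(30)
n, lam = X.shape[0], 0.1

Phi = poly2_features(X)          # n x 6 data matrix in feature space
K = (X @ X.T + 1.0) ** 2         # n x n kernel matrix, no features needed
print(np.allclose(K, Phi @ Phi.T))

# Linear RLS in feature space (6 unknowns) versus kernel RLS (n unknowns).
w = np.linalg.solve(Phi.T @ Phi + lam * n * np.eye(6), Phi.T @ Y)
c = np.linalg.solve(K + lam * n * np.eye(n), Y)
print(np.allclose(Phi @ w, K @ c))   # fitted values on the training set agree
```

For kernels such as the Gaussian kernel no finite feature map of this kind exists, which is where working with $K$ alone becomes essential.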

Bayesian interpretation

Least squares can be viewed as likelihood maximization under an assumption of normally distributed residuals. This is because the exponent of the Gaussian distribution is quadratic in the data, and so is the least-squares objective function. In this framework, the regularization terms of RLS can be understood to be encoding priors on $w$.[1] For instance, Tikhonov regularization corresponds to a normally distributed prior on $w$ that is centered at 0. To see this, first note that the OLS objective is, up to an additive constant, proportional to the negative log-likelihood function when each sampled $y_i$ is normally distributed around $w^T x_i$. Then observe that a normal prior on $w$ centered at 0 has a log-probability of the form

$$\log P(w) = q - \alpha \sum_{j=1}^{d} w_j^2,$$

where $q$ and $\alpha$ are constants that depend on the variance of the prior and are independent of $w$. Thus, maximizing the logarithm of the likelihood times the prior is equivalent to minimizing the sum of the OLS loss function and the ridge regression regularization term.

This gives a more intuitive interpretation for why Tikhonov regularization leads to a unique solution to the least-squares problem: there are infinitely many vectors $w$ satisfying the constraints obtained from the data, but since we come to the problem with a prior belief that $w$ is normally distributed around the origin, we will end up choosing a solution with this constraint in mind.
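
The argument can be written out as a short worked derivation. It assumes independent Gaussian noise with variance $\sigma^2$ and an isotropic prior $w \sim \mathcal{N}(0, \tau^2 I)$; the symbols $\sigma^2$ and $\tau^2$ are introduced here only for illustration.

$$
\begin{aligned}
\hat{w}_{\mathrm{MAP}}
&= \arg\max_{w} \left[ \log P(Y \mid X, w) + \log P(w) \right] \\
&= \arg\max_{w} \left[ -\frac{1}{2\sigma^2} \sum_{i=1}^{n} (y_i - w^T x_i)^2 - \frac{1}{2\tau^2} \sum_{j=1}^{d} w_j^2 \right] \\
&= \arg\min_{w} \left[ \frac{1}{n} \sum_{i=1}^{n} (y_i - w^T x_i)^2 + \frac{\sigma^2}{n \tau^2} \|w\|_2^2 \right]
\end{aligned}
$$

Under these assumptions the regularization parameter plays the role of $\lambda = \sigma^2 / (n \tau^2)$: a tighter prior (smaller $\tau^2$) or noisier data (larger $\sigma^2$) corresponds to stronger regularization.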

Other regularization methods correspond to different priors. See the list below for more details.

Specific examples

Ridge regression (or Tikhonov regularization)

One particularly common choice for the penalty function $R$ is the squared $\ell_2$ norm, i.e.,

$$R(w) = \sum_{j=1}^{d} w_j^2,$$

and the solution is found as

$$\hat{w} = \arg\min_{w} \frac{1}{n} \|Y - Xw\|_2^2 + \lambda \sum_{j=1}^{d} |w_j|^2.$$

The most common names for this method are Tikhonov regularization and ridge regression. It admits a closed-form solution for $w$:

$$\hat{w} = \left( \frac{1}{n} X^T X + \lambda I \right)^{-1} \frac{1}{n} X^T Y = (X^T X + n \lambda I)^{-1} X^T Y.$$

The name ridge regression alludes to the fact that the $\lambda I$ term adds positive entries along the diagonal "ridge" of the sample covariance matrix $\frac{1}{n} X^T X$.

When $\lambda = 0$, i.e., in the case of ordinary least squares, the condition that $d > n$ causes the sample covariance matrix $\frac{1}{n} X^T X$ to not have full rank, so it cannot be inverted to yield a unique solution. This is why there can be an infinitude of solutions to the ordinary least squares problem when $d > n$. However, when $\lambda > 0$, i.e., when ridge regression is used, the addition of $\lambda I$ to the sample covariance matrix ensures that all of its eigenvalues will be strictly greater than 0. In other words, it becomes invertible, and the solution is then unique.
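
The effect on the spectrum can be observed directly. The following sketch uses hypothetical random data with $d > n$ and compares the eigenvalues of the sample covariance matrix $\frac{1}{n} X^T X$ with those of $\frac{1}{n} X^T X + \lambda I$.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d = 5, 8                            # more variables than observations
X = rng.standard_normal((n, d))
C = X.T @ X / n                        # sample covariance matrix (d x d)
lam = 0.5

eig_ols = np.linalg.eigvalsh(C)
eig_ridge = np.linalg.eigvalsh(C + lam * np.eye(d))

print(np.round(eig_ols, 6))            # at least d - n eigenvalues are numerically zero
print(np.round(eig_ridge, 6))          # every eigenvalue is shifted up by lam
```

Because adding $\lambda I$ shifts each eigenvalue by exactly $\lambda$, the smallest eigenvalue of the regularized matrix is at least $\lambda$, which also bounds its condition number.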

Compared to ordinary least squares, ridge regression is not unbiased. It accepts bias to reduce variance and the mean square error.

Simplifications and automatic regularization

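If we want to find $\hat{w}$ for different values of the regularization coefficient $\lambda$ (which we denote $\hat{w}(\lambda)$), we may use the eigenvalue decomposition of the covariance matrix

$$\frac{1}{n} X^T X = Q \, \mathrm{diag}(\alpha_1, \dots, \alpha_d) \, Q^T,$$

where the columns of $Q \in \mathbb{R}^{d \times d}$ are the eigenvectors of $\frac{1}{n} X^T X$ and $\alpha_1, \dots, \alpha_d$ are its eigenvalues.

The solution is then given by

$$\hat{w}(\lambda) = Q \, \mathrm{diag}\!\left( \frac{1}{\alpha_1 + \lambda}, \dots, \frac{1}{\alpha_d + \lambda} \right) Z,$$

where $Z = \frac{1}{n} Q^T X^T Y = [Z_1, \dots, Z_d]^T$.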
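
A minimal sketch of this reuse, assuming the objective $\frac{1}{n}\|Y - Xw\|^2 + \lambda \|w\|^2$: the eigendecomposition of $\frac{1}{n} X^T X$ is computed once, after which each value of $\lambda$ costs only a diagonal rescaling and one matrix-vector product. The function name and the test data are hypothetical.

```python
import numpy as np

def ridge_path_eig(X, Y, lambdas):
    """Ridge solutions for several lambdas from one eigendecomposition."""
    n = X.shape[0]
    alpha, Q = np.linalg.eigh(X.T @ X / n)   # X^T X / n = Q diag(alpha) Q^T
    Z = Q.T @ (X.T @ Y) / n                  # right-hand side in the eigenbasis
    # For each lambda: w = Q diag(1 / (alpha + lambda)) Z.
    return np.array([Q @ (Z / (alpha + lam)) for lam in lambdas])

rng = np.random.default_rng(4)
X = rng.standard_normal((60, 5))
Y = rng.standard_normal(60)
lambdas = [0.01, 0.1, 1.0]

W = ridge_path_eig(X, Y, lambdas)            # one row of weights per lambda
print(W.shape)
```

Each row can be compared against a direct solve of $(X^T X + n \lambda I) w = X^T Y$ to confirm that the two routes agree.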

Using the above results, the algorithm for finding a maximum likelihood estimate of $\lambda$ may be defined as follows: [2]

This algorithm, for automatic (as opposed to heuristic) regularization, is obtained as a fixed point solution in the maximum likelihood estimation of the parameters.[2] Although the guarantees of convergence are not provided, the examples indicate that a satisfactory solution may be obtained after a couple of iterations.

The eigenvalue decomposition simplifies derivation of the algorithm and also simplifies the calculations:

An alternative fixed-point algorithm known as Gull-McKay algorithm[2] usually has a faster convergence, but may be used only if . Thus, while it can be used without problems for caution is recommended for .

Lasso regression

The least absolute shrinkage and selection operator (LASSO) method is another popular choice. In lasso regression, the lasso penalty function $R$ is the $\ell_1$ norm, i.e.

$$R(w) = \sum_{j=1}^{d} |w_j|,$$

$$\min_{w} \frac{1}{n} \|Y - Xw\|_2^2 + \lambda \sum_{j=1}^{d} |w_j|.$$

Note that the lasso penalty function is convex but not strictly convex. Unlike Tikhonov regularization, this scheme does not have a convenient closed-form solution: instead, the solution is typically found using quadratic programming or more general convex optimization methods, as well as by specific algorithms such as the least-angle regression algorithm.

An important difference between lasso regression and Tikhonov regularization is that lasso regression forces more entries of $w$ to actually equal 0 than would otherwise. In contrast, while Tikhonov regularization forces entries of $w$ to be small, it does not force more of them to be 0 than would be otherwise. Thus, LASSO regularization is more appropriate than Tikhonov regularization in cases in which we expect the number of non-zero entries of $w$ to be small, and Tikhonov regularization is more appropriate when we expect that entries of $w$ will generally be small but not necessarily zero. Which of these regimes is more relevant depends on the specific data set at hand.
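
The contrast in sparsity can be illustrated with proximal gradient descent (often called ISTA), one of several possible lasso solvers. The sketch below assumes the objective $\frac{1}{n}\|Y - Xw\|^2 + \lambda \|w\|_1$, a fixed step size equal to the inverse Lipschitz constant of the gradient, and synthetic data in which only three variables are relevant; all names and values are hypothetical.

```python
import numpy as np

def soft_threshold(v, thresh):
    """Proximal operator of thresh * ||.||_1: shrink each entry toward zero."""
    return np.sign(v) * np.maximum(np.abs(v) - thresh, 0.0)

def lasso_ista(X, Y, lam, n_iter=1000):
    """Minimize (1/n)||Y - Xw||^2 + lam * ||w||_1 by proximal gradient descent."""
    n, d = X.shape
    step = n / (2.0 * np.linalg.norm(X, 2) ** 2)   # 1 / Lipschitz constant of the gradient
    w = np.zeros(d)
    for _ in range(n_iter):
        grad = (2.0 / n) * X.T @ (X @ w - Y)       # gradient of the squared-loss term
        w = soft_threshold(w - step * grad, step * lam)
    return w

rng = np.random.default_rng(5)
n, d = 100, 20
X = rng.standard_normal((n, d))
w_true = np.zeros(d)
w_true[:3] = [3.0, -2.0, 1.5]                      # only three relevant variables
Y = X @ w_true + 0.1 * rng.standard_normal(n)

lam = 0.2
w_lasso = lasso_ista(X, Y, lam)
w_ridge = np.linalg.solve(X.T @ X + lam * n * np.eye(d), X.T @ Y)

print("exact zeros (lasso):", int(np.sum(w_lasso == 0.0)))
print("exact zeros (ridge):", int(np.sum(w_ridge == 0.0)))
```

The soft-thresholding step is what produces entries that are exactly zero; the ridge solution shrinks all entries but, for generic data, leaves none of them exactly at zero.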

Despite its ability to perform feature selection as described above, LASSO has some limitations. Ridge regression provides better accuracy in the case $n > d$ for highly correlated variables.[3] In another case, $n < d$, LASSO selects at most $n$ variables. Moreover, LASSO tends to select some arbitrary variables from a group of highly correlated variables, so there is no grouping effect.

ℓ0 Penalization

The most extreme way to enforce sparsity is to say that the actual magnitude of the coefficients of $w$ does not matter; rather, the only thing that determines the complexity of $w$ is the number of non-zero entries. This corresponds to setting $R(w)$ to be the $\ell_0$ norm of $w$. This regularization function, while attractive for the sparsity that it guarantees, leads to a problem that is very difficult to solve because doing so requires optimization of a function that is not convex. Lasso regression is the minimal possible relaxation of $\ell_0$ penalization that yields a convex optimization problem.
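
The combinatorial nature of the problem shows up in the most direct solver, an exhaustive search over supports. The sketch below assumes the objective $\frac{1}{n}\|Y - Xw\|^2 + \lambda \|w\|_0$ and fits ordinary least squares on every subset of columns, which is only feasible for very small $d$; the function name and data are hypothetical.

```python
import numpy as np
from itertools import combinations

def best_subset(X, Y, lam):
    """Minimize (1/n)||Y - Xw||^2 + lam * ||w||_0 by exhaustive search."""
    n, d = X.shape
    best_w, best_obj = np.zeros(d), np.mean(Y**2)     # start from the empty support
    for k in range(1, d + 1):
        for support in combinations(range(d), k):
            cols = list(support)
            # Unpenalized least squares restricted to the chosen columns.
            coef, *_ = np.linalg.lstsq(X[:, cols], Y, rcond=None)
            obj = np.mean((Y - X[:, cols] @ coef) ** 2) + lam * k
            if obj < best_obj:
                best_obj = obj
                best_w = np.zeros(d)
                best_w[cols] = coef
    return best_w

rng = np.random.default_rng(6)
n, d = 50, 8
X = rng.standard_normal((n, d))
w_true = np.zeros(d)
w_true[[0, 3]] = [2.0, -1.5]
Y = X @ w_true + 0.1 * rng.standard_normal(n)

w_hat = best_subset(X, Y, lam=0.05)                   # visits all 2^8 = 256 supports
print(np.flatnonzero(w_hat))
```

The number of candidate supports doubles with every added variable, which is why greedy procedures such as forward selection or convex relaxations such as lasso are used in practice.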

Elastic net

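For any non-negative $\lambda_1$ and $\lambda_2$ the objective has the following form:

$$\min_{w} \frac{1}{n} \|Y - Xw\|_2^2 + \lambda_1 \sum_{j=1}^{d} |w_j| + \lambda_2 \sum_{j=1}^{d} |w_j|^2.$$

Let $\alpha = \frac{\lambda_2}{\lambda_1 + \lambda_2}$; then the solution of the minimization problem is described as

$$\min_{w} \frac{1}{n} \|Y - Xw\|_2^2 \quad \text{s.t.} \quad (1 - \alpha) \|w\|_1 + \alpha \|w\|_2^2 \leq t$$

for some $t$.

Consider $(1 - \alpha) \|w\|_1 + \alpha \|w\|_2^2$ as the Elastic Net penalty function.

When $\alpha = 1$, elastic net becomes ridge regression, whereas for $\alpha = 0$ it becomes lasso. For $\alpha \in [0, 1)$ the Elastic Net penalty function does not have a first derivative at 0, and for $\alpha > 0$ it is strictly convex, so it combines properties of both lasso regression and ridge regression.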
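
One way to compute an elastic net solution is proximal gradient descent, treating the squared loss plus the $\ell_2$ term as the smooth part and handling the $\ell_1$ term with soft-thresholding. The sketch below assumes the penalized form $\frac{1}{n}\|Y - Xw\|^2 + \lambda_1 \|w\|_1 + \lambda_2 \|w\|_2^2$ and checks the ridge limit $\lambda_1 = 0$ against the closed form; the function name, data and parameter values are hypothetical.

```python
import numpy as np

def elastic_net_ista(X, Y, lam1, lam2, n_iter=1000):
    """Minimize (1/n)||Y - Xw||^2 + lam1 * ||w||_1 + lam2 * ||w||_2^2."""
    n, d = X.shape
    # Lipschitz constant of the gradient of the smooth part (squared loss + l2 term).
    lipschitz = 2.0 * np.linalg.norm(X, 2) ** 2 / n + 2.0 * lam2
    step = 1.0 / lipschitz
    w = np.zeros(d)
    for _ in range(n_iter):
        grad = (2.0 / n) * X.T @ (X @ w - Y) + 2.0 * lam2 * w
        v = w - step * grad
        w = np.sign(v) * np.maximum(np.abs(v) - step * lam1, 0.0)  # l1 proximal step
    return w

rng = np.random.default_rng(7)
n, d = 80, 10
X = rng.standard_normal((n, d))
Y = X[:, 0] - X[:, 1] + 0.1 * rng.standard_normal(n)

# With lam1 = 0 the l1 step is the identity and the result is the ridge solution.
w_en = elastic_net_ista(X, Y, lam1=0.0, lam2=0.3)
w_ridge = np.linalg.solve(X.T @ X + 0.3 * n * np.eye(d), X.T @ Y)
print(np.allclose(w_en, w_ridge))

# With lam1 > 0 the l1 step can set weights exactly to zero, as in lasso.
w_sparse = elastic_net_ista(X, Y, lam1=0.4, lam2=0.3)
print(np.flatnonzero(w_sparse))
```

Setting `lam2=0.0` instead recovers the lasso iteration, so the same routine covers both limiting cases.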

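One of the main properties of the Elastic Net is that it can select groups of correlated variables. For standardized predictors, and in the formulation of [4] (which omits the factor $\frac{1}{n}$ in front of the squared loss), the difference between the weights of two variables $x_i$ and $x_j$ (the $i$-th and $j$-th columns of $X$) whose weights have the same sign is bounded by:

$$|w_i^*(\lambda_1, \lambda_2) - w_j^*(\lambda_1, \lambda_2)| \leq \frac{\sum_{k=1}^{n} |y_k|}{\lambda_2} \sqrt{2 (1 - \rho_{ij})},$$

where $\rho_{ij} = x_i^T x_j$.[4]

If $x_i$ and $x_j$ are highly correlated ($\rho_{ij} \to 1$), the weights are very close. In the case of negatively correlated variables ($\rho_{ij} \to -1$), the variable $-x_j$ can be taken instead. To summarize, for highly correlated variables the weights tend to be equal, up to a sign in the case of negatively correlated variables.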

Partial list of RLS methods

The following is a list of possible choices of the regularization function $R(\cdot)$, along with the name for each one, the corresponding prior if there is a simple one, and ways for computing the solution to the resulting optimization problem.

| Name | Regularization function | Corresponding prior | Methods for solving |
|---|---|---|---|
| Tikhonov regularization | $\lVert w \rVert_2^2$ | Normal | Closed form |
| Lasso regression | $\lVert w \rVert_1$ | Laplace | Proximal gradient descent, least-angle regression |
| $\ell_0$ penalization | $\lVert w \rVert_0$ | – | Forward selection, backward elimination, use of priors such as spike and slab |
| Elastic nets | $(1 - \alpha) \lVert w \rVert_1 + \alpha \lVert w \rVert_2^2$, $\alpha \in [0, 1]$ | Normal and Laplace mixture | Proximal gradient descent |
| Total variation regularization | $\sum_{j=1}^{d-1} \lvert w_{j+1} - w_j \rvert$ | – | Split–Bregman method, among others |

See also

- Least squares

- Regularization in mathematics.

- Generalization error, one of the reasons regularization is used.

- Tikhonov regularization

- Lasso regression

- Elastic net regularization

- Least-angle regression

References

- [1] Huang, Yunfei (2022). "Sparse inference and active learning of stochastic differential equations from data". Scientific Reports 12 (1): 21691. doi:10.1038/s41598-022-25638-9. PMID 36522347. Bibcode: 2022NatSR..1221691H.

- [2] Gomes de Pinho Zanco, Daniel; Szczecinski, Leszek; Benesty, Jacob (2025). "Automatic regularization for linear MMSE filters". Signal Processing 230. doi:10.1016/j.sigpro.2024.109820. Bibcode: 2025SigPr.23009820G. https://www.sciencedirect.com/science/article/pii/S0165168424004407.

- [3] Tibshirani, Robert (1996). "Regression shrinkage and selection via the lasso". Journal of the Royal Statistical Society, Series B 58 (1): 267–288. doi:10.1111/j.2517-6161.1996.tb02080.x.

- [4] Zou, Hui; Hastie, Trevor (2005). "Regularization and Variable Selection via the Elastic Net". Journal of the Royal Statistical Society, Series B 67 (2): 301–320. https://web.stanford.edu/~hastie/Papers/elasticnet.pdf.

External links

- http://www.stanford.edu/~hastie/TALKS/enet_talk.pdf Regularization and Variable Selection via the Elastic Net (presentation)

- Regularized Least Squares and Support Vector Machines (presentation)

- Regularized Least Squares (presentation)
