Nonparametric regression

| Part of a series on |

| Regression analysis |

|---|

|

| Models |

| Estimation |

| Background |

|

|

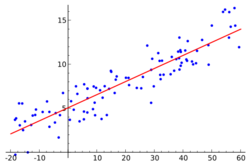

Nonparametric regression is a category of regression analysis in which the predictor does not take a predetermined form but is constructed according to information derived from the data. That is, no parametric form is assumed for the relationship between the predictors and the dependent variable. Nonparametric regression requires larger sample sizes than regression based on parametric models because the data must supply the model structure as well as the model estimates.

Definition

In nonparametric regression, we have random variables $X$ and $Y$ and assume the following relationship:

$$\mathbb{E}[Y \mid X = x] = m(x),$$

where $m(x)$ is some deterministic function. Linear regression is a restricted case of nonparametric regression in which $m(x)$ is assumed to be affine. Some authors use a slightly stronger assumption of additive noise:

$$Y = m(X) + U,$$

where the random variable $U$ is the "noise term", with mean 0. Without the assumption that $m$ belongs to a specific parametric family of functions, it is impossible to get an unbiased estimate for $m$; however, most estimators are consistent under suitable conditions.
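The two formulations agree on the regression function. Writing the additive model as $Y = m(X) + U$ and assuming the noise satisfies the conditional condition $\mathbb{E}[U \mid X] = 0$ (a slightly stronger reading of "mean 0", stated here as an assumption), a one-line derivation recovers the first relationship:

```latex
\begin{aligned}
\mathbb{E}[Y \mid X = x] &= \mathbb{E}[m(X) + U \mid X = x] \\
                         &= m(x) + \mathbb{E}[U \mid X = x] \\
                         &= m(x).
\end{aligned}
```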

List of general-purpose nonparametric regression algorithms

This is a non-exhaustive list of nonparametric models for regression.

- nearest neighbors (see nearest-neighbor interpolation and the k-nearest neighbors algorithm)

- regression trees

- kernel regression

- local regression

- multivariate adaptive regression splines

- smoothing splines

- neural networks[1]
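To make the first entry concrete, here is a minimal sketch of k-nearest-neighbor regression for one-dimensional inputs, in plain Python. The prediction at a query point is the unweighted mean of the responses of the `k` closest training points; the function name `knn_regress` and the tie-breaking rule (earlier training points win ties) are choices made for this illustration, not part of any particular library.

```python
def knn_regress(x_train, y_train, x_query, k=3):
    """Predict y at x_query as the mean response of the k nearest training points.

    Inputs are 1-D for simplicity. sorted() is stable, so points at equal
    distance are taken in training order.
    """
    if not 1 <= k <= len(x_train):
        raise ValueError("k must be between 1 and the number of training points")
    # Rank training indices by absolute distance to the query point.
    order = sorted(range(len(x_train)), key=lambda i: abs(x_train[i] - x_query))
    nearest = order[:k]
    # Unweighted average of the k nearest responses.
    return sum(y_train[i] for i in nearest) / k


x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [0.0, 1.0, 4.0, 9.0, 16.0]
print(knn_regress(x, y, 2.2, k=2))  # → 6.5 (mean of y at x=2 and x=3)
```

Note that no functional form for the relationship is assumed: the fitted curve is a step function whose shape is dictated entirely by the data, and `k` controls the bias-variance trade-off.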

Examples

Gaussian process regression or Kriging

In Gaussian process regression, also known as Kriging, a Gaussian prior is assumed for the regression curve. The errors are assumed to have a multivariate normal distribution, and the regression curve is estimated by its posterior mode. The Gaussian prior may depend on unknown hyperparameters, which are usually estimated via empirical Bayes. The hyperparameters typically specify a prior covariance kernel. If the kernel itself should also be inferred nonparametrically from the data, the critical filter can be used.
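A minimal sketch of the posterior computation, written with NumPy. It assumes a zero-mean prior, a squared-exponential (RBF) covariance kernel, and i.i.d. Gaussian noise with variance `noise_var`; the hyperparameters `length_scale`, `variance` and `noise_var` are fixed by hand here rather than estimated by empirical Bayes, and the function names are illustrative. Because the posterior is Gaussian, its mode coincides with its mean, $K_*(K + \sigma^2 I)^{-1} y$.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential covariance matrix between 1-D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior_mean(x_train, y_train, x_test,
                      length_scale=1.0, variance=1.0, noise_var=0.1):
    """Posterior mean (equal to the posterior mode) of zero-mean GP regression
    with an RBF kernel and i.i.d. Gaussian noise of variance noise_var."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    x_test = np.asarray(x_test, dtype=float)
    # Covariance of the noisy observations: K + sigma^2 I.
    k_train = rbf_kernel(x_train, x_train, length_scale, variance)
    k_train += noise_var * np.eye(len(x_train))
    # Cross-covariance between test inputs and training inputs: K_*.
    k_cross = rbf_kernel(x_test, x_train, length_scale, variance)
    # Solve (K + sigma^2 I) alpha = y instead of forming an explicit inverse.
    alpha = np.linalg.solve(k_train, y_train)
    return k_cross @ alpha


x_train = np.linspace(0.0, 2.0 * np.pi, 20)
y_train = np.sin(x_train)
pred = gp_posterior_mean(x_train, y_train, [1.0, 2.0], noise_var=1e-4)
print(pred)  # should be close to [sin(1.0), sin(2.0)]
```

In practice the kernel hyperparameters would be chosen by maximizing the marginal likelihood (empirical Bayes) rather than set by hand as in this sketch.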

Smoothing splines have an interpretation as the posterior mode of a Gaussian process regression.

Kernel regression


Kernel regression estimates a continuous dependent variable from a limited set of data points by convolving the data points' locations with a kernel function. Roughly speaking, the kernel function specifies how to "blur" the influence of the data points so that their values can be used to predict the value at nearby locations.
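One common way to make this precise is the Nadaraya–Watson estimator, which predicts $\hat m(x) = \sum_i K((x - x_i)/h)\,y_i \,/\, \sum_i K((x - x_i)/h)$, a kernel-weighted average of the observed responses. The sketch below assumes a Gaussian kernel and a user-chosen bandwidth `h`; both are illustrative choices, and the function names are hypothetical.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as the smoothing kernel."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def nadaraya_watson(x_train, y_train, x_query, h=1.0):
    """Kernel-weighted average of y_train with weights K((x_query - x_i) / h)."""
    if h <= 0:
        raise ValueError("bandwidth h must be positive")
    weights = [gaussian_kernel((x_query - xi) / h) for xi in x_train]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all kernel weights underflowed; try a larger bandwidth")
    # Normalizing by the total weight turns the convolution into a local average.
    return sum(w * yi for w, yi in zip(weights, y_train)) / total


x = [-1.0, 0.0, 1.0]
y = [-1.0, 0.0, 1.0]
print(nadaraya_watson(x, y, 0.0, h=0.5))  # → 0.0 (symmetric data around the query)
```

The bandwidth `h` plays the role of the amount of "blur": a small `h` tracks individual points closely, while a large `h` averages over many of them.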

Regression trees

Decision tree learning algorithms can be applied to learn to predict a dependent variable from data.[2] Although the original Classification And Regression Tree (CART) formulation applied only to predicting univariate data, the framework can be used to predict multivariate data, including time series.[3]
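A minimal sketch of the core idea, in plain Python: a one-dimensional regression tree grown by greedily choosing the split that minimizes the total squared error of the two children, with each leaf predicting the mean of its responses. This follows the CART splitting criterion for regression but omits pruning, multiple features, and the other refinements of the full algorithm; the function names and the `max_depth`/`min_leaf` stopping rules are illustrative assumptions.

```python
def _sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def build_tree(xs, ys, max_depth=2, min_leaf=1):
    """Grow a 1-D regression tree by greedy SSE-minimizing binary splits.

    Returns a float (leaf prediction) or a tuple (threshold, left, right).
    """
    mean = sum(ys) / len(ys)
    if max_depth == 0 or len(ys) < 2 * min_leaf:
        return mean
    pairs = sorted(zip(xs, ys))
    best = None  # (cost, split index, threshold)
    for i in range(min_leaf, len(pairs) - min_leaf + 1):
        # Only split between distinct x values.
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        cost = _sse([y for _, y in pairs[:i]]) + _sse([y for _, y in pairs[i:]])
        if best is None or cost < best[0]:
            best = (cost, i, (pairs[i - 1][0] + pairs[i][0]) / 2)
    # Stop if no valid split exists or no split reduces the error.
    if best is None or best[0] >= _sse(ys):
        return mean
    _, i, threshold = best
    left_x, left_y = zip(*pairs[:i])
    right_x, right_y = zip(*pairs[i:])
    return (threshold,
            build_tree(list(left_x), list(left_y), max_depth - 1, min_leaf),
            build_tree(list(right_x), list(right_y), max_depth - 1, min_leaf))


def predict(tree, x):
    """Descend the tree, going left when x <= threshold, until a leaf is reached."""
    while isinstance(tree, tuple):
        threshold, left, right = tree
        tree = left if x <= threshold else right
    return tree


tree = build_tree([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5], max_depth=1)
print(tree)  # → (3.5, 1.0, 5.0)
print(predict(tree, 2), predict(tree, 5))  # → 1.0 5.0
```

As with the other methods in this article, the shape of the fitted function (here, the number and location of the steps) comes from the data rather than from a fixed parametric form.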

See also

- Lasso (statistics)

- Local regression

- Non-parametric statistics

- Semiparametric regression

- Isotonic regression

- Multivariate adaptive regression splines

References

- [1] Cherkassky, Vladimir; Mulier, Filip (1994). "Statistical and neural network techniques for nonparametric regression". In Cheeseman, P.; Oldford, R. W. (eds.). Selecting Models from Data. Lecture Notes in Statistics. New York, NY: Springer. pp. 383–392. doi:10.1007/978-1-4612-2660-4_39. ISBN 978-1-4612-2660-4. https://link.springer.com/chapter/10.1007/978-1-4612-2660-4_39.

- [2] Breiman, Leo; Friedman, J. H.; Olshen, R. A.; Stone, C. J. (1984). Classification and Regression Trees. Monterey, CA: Wadsworth & Brooks/Cole Advanced Books & Software. ISBN 978-0-412-04841-8.

- [3] Segal, M. R. (1992). "Tree-structured methods for longitudinal data". Journal of the American Statistical Association (American Statistical Association, Taylor & Francis). 87 (418): 407–418. doi:10.2307/2290271.

Further reading

- Bowman, A. W.; Azzalini, A. (1997). Applied Smoothing Techniques for Data Analysis. Oxford: Clarendon Press. ISBN 0-19-852396-3. https://books.google.com/books?id=7WBMrZ9umRYC.

- Fan, J.; Gijbels, I. (1996). Local Polynomial Modelling and its Applications. Boca Raton: Chapman and Hall. ISBN 0-412-98321-4. https://books.google.com/books?id=BM1ckQKCXP8C.

- Henderson, D. J.; Parmeter, C. F. (2015). Applied Nonparametric Econometrics. New York: Cambridge University Press. ISBN 978-1-107-01025-3. https://books.google.com/books?id=hD3WBQAAQBAJ.

- Li, Q.; Racine, J. (2007). Nonparametric Econometrics: Theory and Practice. Princeton: Princeton University Press. ISBN 978-0-691-12161-1. https://books.google.com/books?id=BI_PiWazY0YC.

- Pagan, A.; Ullah, A. (1999). Nonparametric Econometrics. New York: Cambridge University Press. ISBN 0-521-35564-8. https://archive.org/details/nonparametriceco00paga.

External links

- HyperNiche, software for nonparametric multiplicative regression.

- Scale-adaptive nonparametric regression (with Matlab software).
