General linear model


The general linear model or general multivariate regression model is a compact way of simultaneously writing several multiple linear regression models. In that sense it is not a separate statistical linear model. The various multiple linear regression models may be compactly written as[1]

$$\mathbf{Y} = \mathbf{X}\mathbf{B} + \mathbf{U},$$

where Y is a matrix with series of multivariate measurements (each column being a set of measurements on one of the dependent variables), X is a matrix of observations on independent variables that might be a design matrix (each column being a set of observations on one of the independent variables), B is a matrix containing parameters that are usually to be estimated and U is a matrix containing errors (noise). The errors are usually assumed to be uncorrelated across measurements, and follow a multivariate normal distribution. If the errors do not follow a multivariate normal distribution, generalized linear models may be used to relax assumptions about Y and U.
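The sketch below shows how B can be estimated by least squares for all columns of Y at once. It is a minimal sketch that assumes NumPy and simulated data. The helper name `fit_general_linear_model` and all data values are hypothetical.

```python
import numpy as np

def fit_general_linear_model(X, Y):
    """Least-squares estimate of B in Y = X B + U (hypothetical helper).

    X: (n, p) design matrix; Y: (n, m), one column per dependent variable.
    Returns B_hat with shape (p, m) and residuals U_hat with shape (n, m).
    """
    # lstsq minimises the squared Frobenius norm ||X B - Y||^2 over B.
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
    U_hat = Y - X @ B_hat
    return B_hat, U_hat

rng = np.random.default_rng(0)
n = 200
# Illustrative design: intercept column plus two predictors.
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
B_true = np.array([[1.0, -2.0],
                   [0.5,  0.0],
                   [3.0,  1.5]])          # p = 3 parameters, m = 2 dependent variables
U = rng.normal(scale=0.1, size=(n, 2))   # independent normal errors
Y = X @ B_true + U

B_hat, U_hat = fit_general_linear_model(X, Y)
print(np.round(B_hat, 2))
```

At the solution the residuals are orthogonal to every column of X, so `X.T @ U_hat` is numerically zero. These are the normal equations of least squares.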

The general linear model incorporates a number of different statistical models: ANOVA, ANCOVA, MANOVA, MANCOVA, ordinary linear regression, t-test and F-test. The general linear model is a generalization of multiple linear regression to the case of more than one dependent variable. If Y, B, and U were column vectors, the matrix equation above would represent multiple linear regression.
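The t-test can be recovered as a special case of this model. In the NumPy sketch below, group membership is coded as a 0/1 column of X. The t statistic for that column's coefficient then equals the classical pooled-variance two-sample t statistic. The data are simulated, and the helper names are hypothetical.

```python
import numpy as np

def two_sample_t(a, b):
    """Classical equal-variance (pooled) two-sample t statistic."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (b.mean() - a.mean()) / np.sqrt(sp2 * (1 / na + 1 / nb))

def dummy_regression_t(a, b):
    """t statistic for beta_1 in y = beta_0 + beta_1 * group + error."""
    y = np.concatenate([a, b])
    group = np.concatenate([np.zeros(len(a)), np.ones(len(b))])
    X = np.column_stack([np.ones_like(y), group])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - X.shape[1]
    sigma2 = resid @ resid / dof                   # equals the pooled variance
    cov_beta = sigma2 * np.linalg.inv(X.T @ X)     # covariance of the estimates
    return beta[1] / np.sqrt(cov_beta[1, 1])

rng = np.random.default_rng(1)
a = rng.normal(0.0, 1.0, size=12)
b = rng.normal(0.8, 1.0, size=15)
print(two_sample_t(a, b), dummy_regression_t(a, b))  # the two values agree
```

The fitted coefficient on the dummy column is the difference in group means. The corresponding diagonal element of (XᵀX)⁻¹ is 1/n_a + 1/n_b, which is why the two statistics coincide.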

Hypothesis tests with the general linear model can be made in two ways: multivariate or as several independent univariate tests. In multivariate tests the columns of Y are tested together, whereas in univariate tests the columns of Y are tested independently, i.e., as multiple univariate tests with the same design matrix.
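The difference between the two kinds of test can be sketched by comparing a full design with a reduced one (here, intercept only). The univariate route produces one F statistic per column of Y. The multivariate route combines the full residual cross-product matrices. The sketch assumes NumPy and simulated data, and the helper names are hypothetical. It uses Wilks' lambda as one common multivariate statistic, a choice the text above does not prescribe. Conversion to p-values is omitted.

```python
import numpy as np

def residual_sscp(X, Y):
    """Residual sums of squares and cross-products matrix U_hat^T U_hat."""
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
    U_hat = Y - X @ B_hat
    return U_hat.T @ U_hat

def compare_models(X_full, X_reduced, Y):
    n, p = X_full.shape
    q = p - X_reduced.shape[1]           # number of coefficients being tested
    E = residual_sscp(X_full, Y)         # error SSCP of the full model
    E0 = residual_sscp(X_reduced, Y)     # error SSCP of the reduced model (E + H)
    # Univariate: one F statistic per column of Y, all with the same design.
    sse_full, sse_red = np.diag(E), np.diag(E0)
    f_stats = ((sse_red - sse_full) / q) / (sse_full / (n - p))
    # Multivariate: Wilks' lambda uses the whole m x m matrices, so it also
    # reflects the correlation between the columns of Y.
    wilks = np.linalg.det(E) / np.linalg.det(E0)
    return f_stats, wilks

rng = np.random.default_rng(2)
n = 50
x = rng.normal(size=n)
X_full = np.column_stack([np.ones(n), x])
X_reduced = np.ones((n, 1))
# Column 0 depends on x; column 1 is pure noise.
Y = np.column_stack([2.0 * x, np.zeros(n)]) + rng.normal(size=(n, 2))
f_stats, wilks = compare_models(X_full, X_reduced, Y)
print(f_stats, wilks)
```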

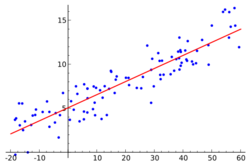

Comparison to multiple linear regression

Multiple linear regression is a generalization of simple linear regression to the case of more than one independent variable, and a special case of general linear models, restricted to one dependent variable. The basic model for multiple linear regression is

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \ldots + \beta_p X_{ip} + \epsilon_i$$

or more compactly

$$Y_i = \beta_0 + \sum_{k=1}^{p} \beta_k X_{ik} + \epsilon_i$$

for each observation i = 1, ..., n.

In the formula above we consider n observations of one dependent variable and p independent variables. Thus, $Y_i$ is the ith observation of the dependent variable, and $X_{ij}$ is the ith observation of the jth independent variable, j = 1, 2, ..., p. The values $\beta_j$, j = 0, 1, ..., p, are parameters to be estimated, and $\epsilon_i$ is the ith independent, identically distributed normal error.
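The sketch below links the scalar model above to its matrix form. It generates data observation by observation from the scalar equation. It then recovers β by solving the normal equations, with a column of ones standing in for β₀. The sketch assumes NumPy and simulated data, and the variable names are chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p = 100, 3
Xvars = rng.normal(size=(n, p))               # X_{ik}: observation i of predictor k
beta_true = np.array([0.5, 1.0, -2.0, 3.0])   # beta_0, beta_1, ..., beta_p
eps = rng.normal(scale=0.2, size=n)           # i.i.d. normal errors epsilon_i

# Scalar form: Y_i = beta_0 + sum_k beta_k X_{ik} + epsilon_i, for each i.
Y = np.array([beta_true[0] + sum(beta_true[k + 1] * Xvars[i, k] for k in range(p)) + eps[i]
              for i in range(n)])

# Matrix form: prepend a column of ones so beta_0 becomes an ordinary coefficient.
X = np.column_stack([np.ones(n), Xvars])
# Normal equations (X^T X) beta = X^T Y; adequate for a small, well-conditioned X.
beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)
print(np.round(beta_hat, 2))
```

Solving the normal equations directly is shown for transparency. For ill-conditioned designs, a QR- or SVD-based solver such as `np.linalg.lstsq` is numerically safer.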

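In the more general multivariate linear regression, there is one equation of the above form for each of m > 1 dependent variables that share the same set of explanatory variables and hence are estimated simultaneously with each other:

$$Y_{ij} = \beta_{0j} + \beta_{1j} X_{i1} + \beta_{2j} X_{i2} + \ldots + \beta_{pj} X_{ip} + \epsilon_{ij}$$

or more compactly

$$Y_{ij} = \beta_{0j} + \sum_{k=1}^{p} \beta_{kj} X_{ik} + \epsilon_{ij}$$

for all observations indexed as i = 1, ..., n and for all dependent variables indexed as j = 1, ..., m.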

Note that, since each dependent variable has its own set of regression parameters to be fitted, from a computational point of view the general multivariate regression is simply a sequence of standard multiple linear regressions using the same explanatory variables.
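The equivalence described above can be checked numerically. This NumPy sketch uses simulated data and fits all m columns jointly, then column by column with the same X.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p, m = 80, 3, 4
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])  # shared explanatory variables
Y = rng.normal(size=(n, m))                                  # m dependent variables

# Joint fit: one least-squares solve with an n x m right-hand side.
B_joint, *_ = np.linalg.lstsq(X, Y, rcond=None)

# Sequential fit: m separate multiple linear regressions on the same X.
B_separate = np.column_stack(
    [np.linalg.lstsq(X, Y[:, j], rcond=None)[0] for j in range(m)]
)
print(np.allclose(B_joint, B_separate))  # prints True
```

Because every column shares the same design, a single factorization of X (for example a QR decomposition) can be reused for all m right-hand sides. This keeps the joint fit cheap even when m is large.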

Comparison to generalized linear model

The general linear model and the generalized linear model (GLM)[2][3] are two commonly used families of statistical methods to relate some number of continuous and/or categorical predictors to a single outcome variable.

The main difference between the two approaches is that the general linear model strictly assumes that the residuals will follow a conditionally normal distribution,[4] while the GLM loosens this assumption and allows for a variety of other distributions from the exponential family for the residuals.[2] Of note, the general linear model is a special case of the GLM in which the residuals follow a conditionally normal distribution and the link function is the identity.

The distribution of the residuals largely depends on the type and distribution of the outcome variable; different types of outcome variables lead to the variety of models within the GLM family. Commonly used models in the GLM family include binary logistic regression[5] for binary or dichotomous outcomes, Poisson regression[6] for count outcomes, and linear regression for continuous, normally distributed outcomes. This means that GLM may be spoken of as a general family of statistical models or as specific models for specific outcome types.
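The comparison table lists maximum likelihood as the typical estimation method for the GLM. One standard way to compute it is iteratively reweighted least squares (IRLS). The text does not name an algorithm, so IRLS is an illustrative choice here. The minimal NumPy sketch below uses a hypothetical helper, `irls`, which handles canonical links only and has no safeguards. It fits a Poisson regression with a log link. It also shows that with a normal distribution and identity link the iteration reduces to ordinary least squares, consistent with the general linear model being a special case.

```python
import numpy as np

def irls(X, y, family="poisson", n_iter=25, tol=1e-10):
    """Hypothetical minimal IRLS for a GLM with canonical link.

    family: "gaussian" (identity link) or "poisson" (log link).
    No offsets, prior weights or step-halving; illustration only.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        eta = X @ beta                          # linear predictor
        if family == "gaussian":
            mu, dmu = eta, np.ones_like(eta)    # identity link: mu = eta
            var = np.ones_like(eta)             # constant variance
        elif family == "poisson":
            mu = np.exp(eta)                    # log link: mu = exp(eta)
            dmu, var = mu, mu                   # d mu / d eta = mu; Var(y) = mu
        else:
            raise ValueError(family)
        w = dmu**2 / var                        # IRLS working weights
        z = eta + (y - mu) / dmu                # working response
        sw = np.sqrt(w)
        # Weighted least-squares step on the working response.
        beta_new, *_ = np.linalg.lstsq(X * sw[:, None], z * sw, rcond=None)
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta

rng = np.random.default_rng(5)
n = 500
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y_pois = rng.poisson(np.exp(0.5 + 0.3 * x))          # count outcome
y_gauss = 0.5 + 0.3 * x + rng.normal(size=n)         # continuous, normal outcome

beta_pois = irls(X, y_pois, "poisson")
beta_gauss = irls(X, y_gauss, "gaussian")
beta_ols, *_ = np.linalg.lstsq(X, y_gauss, rcond=None)
print(beta_pois, np.allclose(beta_gauss, beta_ols))
```

In the Gaussian case the working response is just y and all weights equal 1. The first step is therefore already the least-squares solution.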

| | General linear model | Generalized linear model |
|---|---|---|
| Typical estimation method | Least squares, best linear unbiased prediction | Maximum likelihood or Bayesian |
| Examples | ANOVA, ANCOVA, linear regression | linear regression, logistic regression, Poisson regression |
| Extensions and related methods | MANOVA, MANCOVA, linear mixed model | generalized linear mixed model (GLMM), generalized estimating equations (GEE) |
| R package and function | lm() in stats package (base R) | glm() in stats package (base R) |
| Matlab function | mvregress() | glmfit() |
| SAS procedures | PROC GLM, PROC REG | PROC GENMOD, PROC LOGISTIC (for binary & ordered or unordered categorical outcomes) |
| Stata command | regress | glm |
| SPSS command | regression, glm | genlin, logistic |
| Wolfram Language & Mathematica function | LinearModelFit[][7] | GeneralizedLinearModelFit[][8] |
| EViews command | ls[9] | glm[10] |
| statsmodels Python Package | regression-and-linear-models | GLM |

Applications

An application of the general linear model appears in the analysis of multiple brain scans in scientific experiments, where Y contains data from brain scanners and X contains experimental design variables and confounds. The model is usually tested in a univariate way (referred to as mass-univariate in this setting), and the approach is often called statistical parametric mapping.[11]
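A mass-univariate analysis fits the same X to every column of Y and computes one statistic per column. The NumPy sketch below uses a hypothetical block design, a linear drift as a confound, and simulated "voxels". The helper `mass_univariate_t` is hypothetical, and the sketch is not the implementation of any particular package. It omits steps a real imaging analysis needs, such as modelling temporal autocorrelation and correcting for multiple comparisons.

```python
import numpy as np

def mass_univariate_t(X, Y, c):
    """t statistic for the contrast c^T b, computed separately for each column of Y.

    X: (n, p) design shared by all columns; Y: (n, v), e.g. one column per voxel.
    """
    n, p = X.shape
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)    # (p, v): one fit per column
    U_hat = Y - X @ B_hat
    sigma2 = np.sum(U_hat**2, axis=0) / (n - p)       # residual variance per column
    var_factor = c @ np.linalg.pinv(X.T @ X) @ c      # c^T (X^T X)^- c, same for all columns
    return (c @ B_hat) / np.sqrt(sigma2 * var_factor)

rng = np.random.default_rng(6)
n, v = 120, 1000
task = np.tile([0.0] * 10 + [1.0] * 10, n // 20)     # hypothetical on/off block design
drift = np.linspace(-1, 1, n)                        # hypothetical confound
X = np.column_stack([np.ones(n), task, drift])
Y = rng.normal(size=(n, v))
Y[:, :50] += 1.0 * task[:, None]                     # first 50 "voxels" respond to the task
t_map = mass_univariate_t(X, Y, c=np.array([0.0, 1.0, 0.0]))
print(t_map[:50].mean(), t_map[50:].mean())
```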


Notes

- [1] Mardia, K. V.; Kent, J. T.; Bibby, J. M. (1979). Multivariate Analysis. Academic Press. ISBN 0-12-471252-5.

- [2] McCullagh, P.; Nelder, J. A. (1989). "An outline of generalized linear models". Generalized Linear Models. Springer US. pp. 21–47. doi:10.1007/978-1-4899-3242-6_2. ISBN 9780412317606.

- [3] Fox, J. (2015). Applied Regression Analysis and Generalized Linear Models. Sage Publications.

- [4] Cohen, J.; Cohen, P.; West, S. G.; Aiken, L. S. (2003). Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences.

- [5] Hosmer Jr, D. W.; Lemeshow, S.; Sturdivant, R. X. (2013). Applied Logistic Regression (Vol. 398). John Wiley & Sons.

- [6] Gardner, W.; Mulvey, E. P.; Shaw, E. C. (1995). "Regression analyses of counts and rates: Poisson, overdispersed Poisson, and negative binomial models". Psychological Bulletin 118 (3): 392–404. doi:10.1037/0033-2909.118.3.392. PMID 7501743.

- [7] LinearModelFit. Wolfram Language Documentation Center.

- [8] GeneralizedLinearModelFit. Wolfram Language Documentation Center.

- [9] ls. EViews Help.

- [10] glm. EViews Help.

- [11] Friston, K. J.; Holmes, A. P.; Worsley, K. J.; Poline, J.-B.; Frith, C. D.; Frackowiak, R. S. J. (1995). "Statistical Parametric Maps in functional imaging: A general linear approach". Human Brain Mapping 2 (4): 189–210. doi:10.1002/hbm.460020402.

