Sequential quadratic programming


Sequential quadratic programming (SQP), also known as the Lagrange–Newton method, is an iterative method for constrained nonlinear optimization. SQP methods apply to problems in which the objective function and the constraints are twice continuously differentiable but not necessarily convex.

SQP methods solve a sequence of optimization subproblems, each of which optimizes a quadratic model of the objective subject to a linearization of the constraints. If the problem is unconstrained, then the method reduces to Newton's method for finding a point where the gradient of the objective vanishes. If the problem has only equality constraints, then the method is equivalent to applying Newton's method to the first-order optimality conditions, or Karush–Kuhn–Tucker conditions, of the problem.
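To illustrate the unconstrained special case, the following minimal Python sketch applies Newton's method to the equation $\nabla f(x) = 0$. It assumes NumPy and a made-up test function $f(x) = \cosh x_1 + (x_2 - 1)^2$, whose unique minimizer is $(0, 1)$. The names `grad_f`, `hess_f` and `newton_minimize` are hypothetical.

```python
import numpy as np

# Hypothetical unconstrained test problem: f(x) = cosh(x1) + (x2 - 1)^2,
# with unique minimizer x* = (0, 1).
def grad_f(x):
    return np.array([np.sinh(x[0]), 2.0 * (x[1] - 1.0)])


def hess_f(x):
    return np.array([[np.cosh(x[0]), 0.0], [0.0, 2.0]])


def newton_minimize(x, tol=1e-12, max_iter=50):
    """Newton's method for grad f(x) = 0 (SQP with no constraints)."""
    for _ in range(max_iter):
        step = np.linalg.solve(hess_f(x), -grad_f(x))  # linear solve, no inverse
        x = x + step
        if np.linalg.norm(step) < tol:
            break
    return x


x_star = newton_minimize(np.array([1.0, 5.0]))
print(x_star)  # approximately [0. 1.]
```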

Algorithm basics

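Consider a nonlinear programming problem of the form:

$$
\begin{array}{rl}
\min\limits_{x} & f(x) \\
\text{subject to} & h(x) \ge 0, \\
& g(x) = 0,
\end{array}
$$

where $x \in \mathbb{R}^n$, $f : \mathbb{R}^n \to \mathbb{R}$, $h : \mathbb{R}^n \to \mathbb{R}^{m_I}$, and $g : \mathbb{R}^n \to \mathbb{R}^{m_E}$.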

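The Lagrangian for this problem is[1]

$$
\mathcal{L}(x, \lambda, \sigma) = f(x) + \lambda^{\mathsf T} h(x) + \sigma^{\mathsf T} g(x),
$$

where $\lambda \in \mathbb{R}^{m_I}$ and $\sigma \in \mathbb{R}^{m_E}$ are Lagrange multipliers.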
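To make the sign convention concrete, here is a small sketch that evaluates the Lagrangian $f(x) + \lambda^{\mathsf T} h(x) + \sigma^{\mathsf T} g(x)$ and its gradient with respect to $x$. It assumes NumPy and a hypothetical problem: minimize $x_1^2 + x_2^2$ subject to $x_1 - 2 \ge 0$ and $x_1 + x_2 - 3 = 0$, with minimizer $(2, 1)$.

With a "+" sign in front of $\lambda^{\mathsf T} h(x)$ and inequalities written as $h(x) \ge 0$, the inequality multipliers at a local minimizer are nonpositive. Here $\lambda = -2$.

```python
import numpy as np

# Hypothetical example problem (not from the text):
#   minimize f(x) = x1^2 + x2^2
#   subject to h(x) = x1 - 2 >= 0       (m_I = 1)
#              g(x) = x1 + x2 - 3 = 0   (m_E = 1)
def f(x):
    return x[0] ** 2 + x[1] ** 2


def h(x):
    return np.array([x[0] - 2.0])


def g(x):
    return np.array([x[0] + x[1] - 3.0])


# Gradients are stored column-wise: shapes (n, m_I) and (n, m_E).
def grad_f(x):
    return np.array([2.0 * x[0], 2.0 * x[1]])


def grad_h(x):
    return np.array([[1.0], [0.0]])


def grad_g(x):
    return np.array([[1.0], [1.0]])


def lagrangian(x, lam, sig):
    return f(x) + lam @ h(x) + sig @ g(x)


def grad_lagrangian_x(x, lam, sig):
    return grad_f(x) + grad_h(x) @ lam + grad_g(x) @ sig


# At the minimizer x* = (2, 1), grad_x L = 0 gives lam = -2 and sig = -2.
x_star, lam_star, sig_star = np.array([2.0, 1.0]), np.array([-2.0]), np.array([-2.0])
print(lagrangian(x_star, lam_star, sig_star))         # 5.0 (both constraints vanish)
print(grad_lagrangian_x(x_star, lam_star, sig_star))  # [0. 0.]
```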

The equality constrained case

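If the problem does not have inequality constraints (that is, $m_I = 0$), the first-order optimality conditions (also known as the KKT conditions) $\nabla \mathcal{L}(x, \sigma) = 0$ form a set of nonlinear equations that may be solved iteratively with Newton's method. Newton's method linearizes the KKT conditions at the current iterate $(x_k, \sigma_k)$, which gives the following expression for the Newton step $(d_x, d_\sigma)$:

$$
\begin{bmatrix} d_x \\ d_\sigma \end{bmatrix}
= -\left[\nabla^2 \mathcal{L}(x_k, \sigma_k)\right]^{-1} \nabla \mathcal{L}(x_k, \sigma_k)
= -\begin{bmatrix} \nabla^2_{xx} \mathcal{L}(x_k, \sigma_k) & \nabla g(x_k) \\ \nabla g(x_k)^{\mathsf T} & 0 \end{bmatrix}^{-1}
\begin{bmatrix} \nabla f(x_k) + \nabla g(x_k)\, \sigma_k \\ g(x_k) \end{bmatrix},
$$

where:

- $\nabla^2_{xx} \mathcal{L}(x_k, \sigma_k)$ denotes the Hessian matrix of the Lagrangian with respect to $x$;
- $\nabla g(x_k) \in \mathbb{R}^{n \times m_E}$ has the constraint gradients as its columns;
- $d_x$ and $d_\sigma$ are the primal and dual displacements, respectively.

The KKT matrix on the right is not inverted explicitly in practice; the corresponding linear system is solved instead.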
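The Newton iteration on the KKT conditions can be sketched in a few lines of NumPy. The sketch assumes a hypothetical example: minimize $x_1 + x_2$ subject to $x_1^2 + x_2^2 - 2 = 0$, whose solution is $x^* = (-1, -1)$ with $\sigma^* = 1/2$. The function names are illustrative. The sketch assembles the KKT matrix at each iterate and calls `numpy.linalg.solve` instead of forming an inverse.

```python
import numpy as np

# Hypothetical example problem (not from the text):
#   minimize f(x) = x1 + x2  subject to  g(x) = x1^2 + x2^2 - 2 = 0.
# Its solution is x* = (-1, -1) with multiplier sigma* = 1/2 for L = f + sigma^T g.
def grad_f(x):
    return np.array([1.0, 1.0])


def g(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 2.0])


def grad_g(x):
    # Shape (n, m_E): one column per constraint gradient.
    return np.array([[2.0 * x[0]], [2.0 * x[1]]])


def hess_lagrangian(x, sig):
    # The Hessian of f is zero and the Hessian of g is 2*I, weighted by sigma.
    return 2.0 * sig[0] * np.eye(2)


def newton_kkt(x, sig, tol=1e-12, max_iter=20):
    """Newton's method on the KKT conditions grad L(x, sigma) = 0."""
    n, m = x.size, sig.size
    for _ in range(max_iter):
        A = grad_g(x)
        residual = np.concatenate([grad_f(x) + A @ sig, g(x)])
        if np.linalg.norm(residual) < tol:
            break
        K = np.zeros((n + m, n + m))          # KKT matrix
        K[:n, :n] = hess_lagrangian(x, sig)
        K[:n, n:] = A
        K[n:, :n] = A.T
        step = np.linalg.solve(K, -residual)  # solve the system; no explicit inverse
        x, sig = x + step[:n], sig + step[n:]
    return x, sig


x, sig = newton_kkt(np.array([-1.2, -0.8]), np.array([0.5]))
print(x, sig)  # approximately [-1. -1.] [0.5]
```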

When the Lagrangian Hessian $\nabla^2_{xx} \mathcal{L}(x_k, \sigma_k)$ is not positive definite, the Newton step may not exist. It may also characterize a stationary point that is not a local minimum but rather a local maximum or a saddle point. In this case the Lagrangian Hessian must be regularized, for example by adding a multiple of the identity so that the resulting matrix is positive definite.
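One simple way to carry out this regularization is to increase a shift $\tau$ geometrically until $H + \tau I$ passes a positive-definiteness test. The sketch assumes that the test is an attempted Cholesky factorization: `numpy.linalg.cholesky` raises `LinAlgError` when the matrix is not positive definite. The function name and the constants `tau0` and `factor` are illustrative choices, not prescribed values.

```python
import numpy as np


def regularize(H, tau0=1e-3, factor=10.0, max_tries=30):
    """Return (H + tau*I, tau), with tau = 0 if H is already positive definite.

    Positive definiteness is tested by attempting a Cholesky factorization;
    tau starts at tau0 and is multiplied by `factor` until the test passes.
    """
    n = H.shape[0]
    try:
        np.linalg.cholesky(H)
        return H, 0.0
    except np.linalg.LinAlgError:
        pass
    tau = tau0
    for _ in range(max_tries):
        shifted = H + tau * np.eye(n)
        try:
            np.linalg.cholesky(shifted)
            return shifted, tau
        except np.linalg.LinAlgError:
            tau *= factor
    raise RuntimeError("could not make H positive definite")


H = np.array([[1.0, 0.0], [0.0, -2.0]])  # indefinite: eigenvalues 1 and -2
H_reg, tau = regularize(H)
print(tau)  # about 10: the first tried shift larger than 2
```

Any shift larger than the magnitude of the most negative eigenvalue works. The geometric growth keeps the number of factorizations small, at the price of possibly overshooting.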

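An alternative way to obtain the primal-dual displacements is to construct and solve a local quadratic model of the original problem at the current iterate:

$$
\begin{array}{rl}
\min\limits_{d_x} & f(x_k) + \nabla f(x_k)^{\mathsf T} d_x + \tfrac{1}{2} d_x^{\mathsf T} \nabla^2_{xx} \mathcal{L}(x_k, \sigma_k)\, d_x \\
\text{subject to} & g(x_k) + \nabla g(x_k)^{\mathsf T} d_x = 0.
\end{array}
$$

The optimality conditions of this quadratic problem correspond to the linearized KKT conditions of the original problem. The multiplier of the quadratic problem's constraints equals $\sigma_k + d_\sigma$. The term $f(x_k)$ in the expression above may be left out, since it is constant under the $\min\limits_{d_x}$ operator.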
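The correspondence between the quadratic model and the Newton step can be checked numerically. This sketch assumes NumPy and hypothetical data: the problem $\min x_1 + x_2$ subject to $x_1^2 + x_2^2 - 2 = 0$, at the iterate $x_k = (-1.2, -0.8)$, $\sigma_k = 0.5$.

It computes the step in two ways:

- from the linearized KKT system;
- by solving the equality-constrained QP directly with a null-space method (one QP technique among several, chosen here for illustration).

It then compares the primal steps and the QP multiplier with $\sigma_k + d_\sigma$.

```python
import numpy as np

# Hypothetical QP data at x_k = (-1.2, -0.8), sigma_k = 0.5 for the problem
#   minimize x1 + x2  subject to  x1^2 + x2^2 - 2 = 0.
x_k, sig_k = np.array([-1.2, -0.8]), np.array([0.5])
grad_f = np.array([1.0, 1.0])
A = np.array([[2.0 * x_k[0]], [2.0 * x_k[1]]])   # grad g(x_k), shape (n, m)
c = np.array([x_k[0] ** 2 + x_k[1] ** 2 - 2.0])  # g(x_k)
H = 2.0 * sig_k[0] * np.eye(2)                   # Hessian of the Lagrangian
n, m = A.shape

# (1) Newton step on the KKT conditions.
K = np.zeros((n + m, n + m))
K[:n, :n], K[:n, n:], K[n:, :n] = H, A, A.T
step = np.linalg.solve(K, -np.concatenate([grad_f + A @ sig_k, c]))
d_newton, sig_newton = step[:n], sig_k + step[n:]

# (2) Direct solution of the QP by the null-space method:
#     d = d_p + Z y, where A.T d_p = -c and the columns of Z span the null space of A.T.
d_p = np.linalg.lstsq(A.T, -c, rcond=None)[0]
Z = np.linalg.svd(A.T)[2][m:].T
y = np.linalg.solve(Z.T @ H @ Z, -Z.T @ (grad_f + H @ d_p))
d_qp = d_p + Z @ y
# QP multiplier from stationarity of the QP: grad_f + H d + A mu = 0.
mu_qp = np.linalg.lstsq(A, -(grad_f + H @ d_qp), rcond=None)[0]

print(np.allclose(d_qp, d_newton), np.allclose(mu_qp, sig_newton))  # True True
```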

The inequality constrained case

In the presence of inequality constraints (), we can naturally extend the definition of the local quadratic model introduced in the previous section:

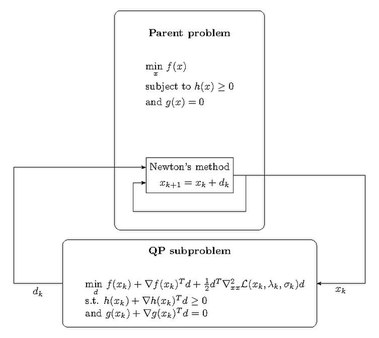
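For a handful of constraints, the inequality-constrained QP subproblem can be solved by brute force:

1. Try every subset of the inequalities as the active set.
2. Solve the resulting equality-constrained KKT system.
3. Keep the first solution that is primal feasible and whose multipliers have the correct sign.

The sketch below does exactly that. Its cost is exponential in $m_I$ and it only makes the subproblem concrete; practical SQP codes use active-set or interior-point QP solvers.

It assumes:

- a positive definite Hessian;
- the sign convention $\mathcal{L} = f + \lambda^{\mathsf T} h + \sigma^{\mathsf T} g$, so active inequality multipliers are $\le 0$;
- a hypothetical problem with a quadratic objective and linear constraints, for which a single QP step from $x_k = (0, 0)$ lands on the solution.

```python
import itertools

import numpy as np


def solve_qp_enum(H, q, Ah, bh, Ag, bg, tol=1e-9):
    """Solve  min q.d + 0.5 d.H.d  s.t.  Ah.T d + bh >= 0,  Ag.T d + bg = 0
    by trying every possible active set (exponential cost; illustration only).

    Columns of Ah / Ag are constraint gradients. Multipliers follow the
    L = f + lam.h + sig.g convention, so active inequality multipliers are <= 0.
    Assumes H is positive definite, so the first KKT point found is the minimizer.
    """
    n, m_i = Ah.shape
    for k in range(m_i + 1):
        for act in map(list, itertools.combinations(range(m_i), k)):
            A = np.hstack([Ah[:, act], Ag])  # active inequalities treated as equalities
            b = np.concatenate([bh[act], bg])
            m = A.shape[1]
            K = np.zeros((n + m, n + m))
            K[:n, :n], K[:n, n:], K[n:, :n] = H, A, A.T
            try:
                sol = np.linalg.solve(K, -np.concatenate([q, b]))
            except np.linalg.LinAlgError:
                continue  # singular system for this active set; skip it
            d, mult = sol[:n], sol[n:]
            lam = np.zeros(m_i)
            lam[act] = mult[:k]
            if np.all(Ah.T @ d + bh >= -tol) and np.all(lam <= tol):
                return d, lam, mult[k:]
    raise ValueError("QP subproblem infeasible or degenerate")


# Hypothetical problem: min x1^2 + x2^2  s.t.  x1 - 2 >= 0,  x1 + x2 - 3 = 0.
x_k = np.array([0.0, 0.0])
H = 2.0 * np.eye(2)  # Hessian of the Lagrangian (the constraints are linear)
q = 2.0 * x_k        # grad f(x_k)
Ah, bh = np.array([[1.0], [0.0]]), np.array([x_k[0] - 2.0])
Ag, bg = np.array([[1.0], [1.0]]), np.array([x_k[0] + x_k[1] - 3.0])
d, lam, sig = solve_qp_enum(H, q, Ah, bh, Ag, bg)
print(x_k + d, lam, sig)  # approximately [2. 1.] [-2.] [-2.]
```

Dropping the inequality gives the step $(1.5, 1.5)$, which violates $x_1 \ge 2$. The enumeration therefore settles on the active set containing that constraint.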

The SQP algorithm

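The SQP algorithm starts from the initial iterate $(x_0, \lambda_0, \sigma_0)$. At each iteration, the QP subproblem is built and solved. The resulting Newton step direction $(d_x, d_\lambda, d_\sigma)$ is then used to update the current iterate; its dual components are the differences between the QP multipliers and $(\lambda_k, \sigma_k)$:

$$
\begin{bmatrix} x_{k+1} \\ \lambda_{k+1} \\ \sigma_{k+1} \end{bmatrix}
= \begin{bmatrix} x_k \\ \lambda_k \\ \sigma_k \end{bmatrix}
+ \begin{bmatrix} d_x \\ d_\lambda \\ d_\sigma \end{bmatrix}.
$$

This process is repeated for $k = 0, 1, 2, \ldots$ until some convergence criterion is satisfied.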
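A complete basic SQP loop can be sketched as follows. The sketch assumes:

- NumPy;
- a hypothetical problem: $\min x_1 + x_2$ subject to $2 - x_1^2 - x_2^2 \ge 0$, with solution $(-1, -1)$ and $\lambda = -1/2$ under the "+" sign convention;
- full steps with no globalization;
- brute-force active-set enumeration as the QP solver.

The new multiplier is taken directly from the QP, which is the same as adding $d_\lambda$ to $\lambda_k$. The loop stops once $\|d_x\|$ is negligible.

```python
import itertools

import numpy as np


# Hypothetical example problem (not from the text):
#   minimize x1 + x2  subject to  h(x) = 2 - x1^2 - x2^2 >= 0.
# Solution: x* = (-1, -1), lam* = -1/2 under the convention L = f + lam^T h.
def grad_f(x):
    return np.array([1.0, 1.0])


def h(x):
    return np.array([2.0 - x[0] ** 2 - x[1] ** 2])


def grad_h(x):
    # Shape (n, m_I): one column per constraint gradient.
    return np.array([[-2.0 * x[0]], [-2.0 * x[1]]])


def hess_lagrangian(x, lam):
    # The Hessian of f is zero; the Hessian of h is -2*I, weighted by lam.
    return -2.0 * lam[0] * np.eye(2)


def solve_qp_enum(H, q, Ah, bh, tol=1e-9):
    """Brute-force QP solver: min q.d + 0.5 d.H.d  s.t.  Ah.T d + bh >= 0.

    Tries every active set; only sensible for a handful of constraints.
    Assumes H is positive definite. Returns (d, lam) with lam <= 0.
    """
    n, m_i = Ah.shape
    for k in range(m_i + 1):
        for act in map(list, itertools.combinations(range(m_i), k)):
            A = Ah[:, act]
            K = np.zeros((n + k, n + k))
            K[:n, :n], K[:n, n:], K[n:, :n] = H, A, A.T
            try:
                sol = np.linalg.solve(K, -np.concatenate([q, bh[act]]))
            except np.linalg.LinAlgError:
                continue  # singular system for this active set; skip it
            d = sol[:n]
            lam = np.zeros(m_i)
            lam[act] = sol[n:]
            if np.all(Ah.T @ d + bh >= -tol) and np.all(lam <= tol):
                return d, lam
    raise ValueError("QP subproblem infeasible or degenerate")


def sqp(x, lam, tol=1e-12, max_iter=50):
    """Basic SQP with full steps (no line search, trust region, or merit function)."""
    for _ in range(max_iter):
        d, lam_qp = solve_qp_enum(hess_lagrangian(x, lam), grad_f(x), grad_h(x), h(x))
        # x_{k+1} = x_k + d_x; lam_{k+1} = lam_k + d_lam, which equals the QP multiplier.
        x, lam = x + d, lam_qp
        if np.linalg.norm(d) < tol:
            break
    return x, lam


x, lam = sqp(np.array([-1.2, -0.9]), np.array([-1.0]))
print(x, lam)  # approximately [-1. -1.] [-0.5]
```

The starting point is slightly infeasible but close to the solution. Without a line search or trust region, the loop should only be expected to converge from such nearby starting points.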

Practical implementations

Practical implementations of the SQP algorithm are significantly more complex than its basic version above. To adapt SQP for real-world applications, the following challenges must be addressed:

- The possibility of an infeasible QP subproblem.

- A QP subproblem that yields a bad step: one that either fails to reduce the objective or increases the constraint violation.

- Breakdown of the iterations because the objective and constraints deviate significantly from their quadratic and linear models.

To overcome these challenges, various strategies are typically employed:

- Merit functions, which assess progress toward a constrained solution, as well as non-monotone strategies and filter methods.

- Trust-region or line-search methods to manage deviations between the quadratic model and the actual objective.

- Special feasibility restoration phases to handle infeasible subproblems, or the use of L1-penalized subproblems to gradually decrease infeasibility.

These strategies can be combined in numerous ways, resulting in a diverse range of SQP methods.
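As one concrete combination, the sketch below pairs an ℓ1 merit function $\phi(x; \mu) = f(x) + \mu \|g(x)\|_1$ with Armijo backtracking along the SQP step. It uses a hypothetical equality-constrained example.

It assumes:

- the step $d$ satisfies the linearized constraints, in which case the directional derivative of $\phi$ along $d$ equals $\nabla f(x)^{\mathsf T} d - \mu \|g(x)\|_1$;
- $\mu$ is larger than the magnitude of the multipliers, the usual requirement for the ℓ1 penalty to be exact.

The function names and the constants `eta` and `shrink` are illustrative.

```python
import numpy as np


# Hypothetical example: min x1 + x2  s.t.  g(x) = x1^2 + x2^2 - 2 = 0.
def f(x):
    return x[0] + x[1]


def grad_f(x):
    return np.array([1.0, 1.0])


def g(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 2.0])


def grad_g(x):
    return np.array([[2.0 * x[0]], [2.0 * x[1]]])


def merit_l1(x, mu):
    """l1 merit function: objective plus mu times the total constraint violation."""
    return f(x) + mu * np.sum(np.abs(g(x)))


def backtrack(x, d, mu, eta=1e-4, shrink=0.5, max_backtracks=30):
    """Armijo backtracking on the l1 merit function along the SQP step d.

    Assumes grad_g(x).T d + g(x) = 0, so the directional derivative of the
    merit function along d is grad_f(x).d - mu * ||g(x)||_1.
    """
    phi0 = merit_l1(x, mu)
    slope = grad_f(x) @ d - mu * np.sum(np.abs(g(x)))
    alpha = 1.0
    for _ in range(max_backtracks):
        if merit_l1(x + alpha * d, mu) <= phi0 + eta * alpha * slope:
            break
        alpha *= shrink
    return alpha


# SQP (Newton-KKT) step at x_k = (-1.2, -0.8), sigma_k = 0.5.
x, sig = np.array([-1.2, -0.8]), np.array([0.5])
A = grad_g(x)
K = np.zeros((3, 3))
K[:2, :2], K[:2, 2:], K[2:, :2] = 2.0 * sig[0] * np.eye(2), A, A.T
d = np.linalg.solve(K, -np.concatenate([grad_f(x) + A @ sig, g(x)]))[:2]

mu = 1.0  # larger than |sigma|, so the l1 penalty is exact for this problem
alpha = backtrack(x, d, mu)
print(alpha, merit_l1(x, mu), merit_l1(x + alpha * d, mu))
```

Here the full step ($\alpha = 1$) already gives sufficient decrease, lowering the merit value from $-1.92$ to about $-1.96$. The loop shortens the step only when the Armijo test fails.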

Alternative approaches

- Sequential linear programming

- Sequential linear-quadratic programming

- Augmented Lagrangian method

Implementations

SQP methods have been implemented in well-known numerical environments such as MATLAB and GNU Octave. There are also numerous software libraries, including open-source ones:

- SciPy (the de facto standard library for scientific Python) provides the SLSQP solver through scipy.optimize.minimize(method='SLSQP').

- NLopt (C/C++ implementation, with numerous interfaces including Julia, Python, R, and MATLAB/Octave). Its SLSQP algorithm was implemented by Dieter Kraft as part of a package for optimal control and modified by S. G. Johnson.[2][3]

- ALGLIB SQP solver (C++, C#, Java, Python API)

- acados (C with interfaces to Python, MATLAB, Simulink, Octave) implements an SQP method tailored to the problem structure arising in optimal control, but also handles general nonlinear programs.

and commercial ones:

- LabVIEW

- KNITRO[4] (C, C++, C#, Java, Python, Julia, Fortran)

- NPSOL (Fortran)

- SNOPT (Fortran)

- NLPQL (Fortran)

- MATLAB

- SuanShu (Java)
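As a usage example for the SciPy entry above, the following sketch solves a small hypothetical problem with `scipy.optimize.minimize(method='SLSQP')`. In SciPy, a constraint of type `'ineq'` requires its function to be nonnegative, which matches the convention $h(x) \ge 0$ for inequality constraints. The tighter `ftol` and the starting point are illustrative choices.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical problem: minimize x1 + x2  subject to  2 - x1^2 - x2^2 >= 0,
# whose solution is x* = (-1, -1).
res = minimize(
    fun=lambda x: x[0] + x[1],
    x0=np.array([0.5, 0.0]),
    jac=lambda x: np.array([1.0, 1.0]),
    method="SLSQP",
    constraints=[{
        "type": "ineq",  # SciPy convention: fun(x) >= 0
        "fun": lambda x: np.array([2.0 - x[0] ** 2 - x[1] ** 2]),
        "jac": lambda x: np.array([[-2.0 * x[0], -2.0 * x[1]]]),
    }],
    options={"ftol": 1e-10},
)
print(res.success, res.x)
```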


Notes

- Jorge Nocedal and Stephen J. Wright (2006). Numerical Optimization. Springer. ISBN 978-0-387-30303-1. http://www.ece.northwestern.edu/~nocedal/book/num-opt.html.

- Kraft, Dieter (Sep 1994). "Algorithm 733: TOMP–Fortran modules for optimal control calculations". ACM Transactions on Mathematical Software 20 (3): 262–281. doi:10.1145/192115.192124. https://github.com/scipy/scipy/blob/master/scipy/optimize/slsqp/slsqp_optmz.f. Retrieved 1 February 2019.

- "NLopt Algorithms: SLSQP". July 1988. https://nlopt.readthedocs.io/en/latest/NLopt_Algorithms/#slsqp.

- KNITRO User Guide: Algorithms

References

- Bonnans, J. Frédéric; Gilbert, J. Charles; Lemaréchal, Claude; Sagastizábal, Claudia A. (2006). Numerical optimization: Theoretical and practical aspects. Universitext (Second revised ed. of translation of 1997 French ed.). Berlin: Springer-Verlag. pp. xiv+490. doi:10.1007/978-3-540-35447-5. ISBN 978-3-540-35445-1. https://www.springer.com/mathematics/applications/book/978-3-540-35445-1.

- Jorge Nocedal and Stephen J. Wright (2006). Numerical Optimization. Springer. ISBN 978-0-387-30303-1. http://www.ece.northwestern.edu/~nocedal/book/num-opt.html.
