Newton's method in optimization

In calculus, Newton's method (also called Newton–Raphson) is an iterative method for finding the roots of a differentiable function F, which are solutions to the equation F (x) = 0. As such, Newton's method can be applied to the derivative f ′ of a twice-differentiable function f to find the roots of the derivative (solutions to f ′(x) = 0), also known as the critical points of f. These solutions may be minima, maxima, or saddle points; see section "Several variables" in Critical point (mathematics) and also section "Geometric interpretation" in this article. This is relevant in optimization, which aims to find (global) minima of the function f.

Newton's method

The central problem of optimization is minimization of functions. Let us first consider the case of univariate functions, i.e., functions of a single real variable. We will later consider the more general and more practically useful multivariate case.

Given a twice differentiable function , we seek to solve the optimization problem

Newton's method attempts to solve this problem by constructing a sequence from an initial guess (starting point) that converges towards a minimizer of by using a sequence of second-order Taylor approximations of around the iterates. The second-order Taylor expansion of f around is

The next iterate is defined so as to minimize this quadratic approximation in , and setting . If the second derivative is positive, the quadratic approximation is a convex function of , and its minimum can be found by setting the derivative to zero. Since

the minimum is achieved for

Putting everything together, Newton's method performs the iteration

Geometric interpretation

The geometric interpretation of Newton's method is that at each iteration, it amounts to the fitting of a parabola to the graph of at the trial value , having the same slope and curvature as the graph at that point, and then proceeding to the maximum or minimum of that parabola (in higher dimensions, this may also be a saddle point), see below. Note that if happens to be a quadratic function, then the exact extremum is found in one step.

Higher dimensions

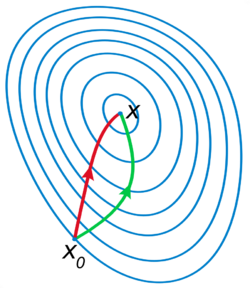

The above iterative scheme can be generalized to dimensions by replacing the derivative with the gradient (different authors use different notation for the gradient, including ), and the reciprocal of the second derivative with the inverse of the Hessian matrix (different authors use different notation for the Hessian, including ). One thus obtains the iterative scheme

Often Newton's method is modified to include a small step size instead of :

This is often done to ensure that the Wolfe conditions, or much simpler and efficient Armijo's condition, are satisfied at each step of the method. For step sizes other than 1, the method is often referred to as the relaxed or damped Newton's method.

Convergence

If f is a strongly convex function with Lipschitz Hessian, then provided that is close enough to , the sequence generated by Newton's method will converge to the (necessarily unique) minimizer of quadratically fast.[1] That is,

Computing the Newton direction

Finding the inverse of the Hessian in high dimensions to compute the Newton direction can be an expensive operation. In such cases, instead of directly inverting the Hessian, it is better to calculate the vector as the solution to the system of linear equations

which may be solved by various factorizations or approximately (but to great accuracy) using iterative methods. Many of these methods are only applicable to certain types of equations, for example the Cholesky factorization and conjugate gradient will only work if is a positive definite matrix. While this may seem like a limitation, it is often a useful indicator of something gone wrong; for example if a minimization problem is being approached and is not positive definite, then the iterations are converging to a saddle point and not a minimum.

On the other hand, if a constrained optimization is done (for example, with Lagrange multipliers), the problem may become one of saddle point finding, in which case the Hessian will be symmetric indefinite and the solution of will need to be done with a method that will work for such, such as the variant of Cholesky factorization or the conjugate residual method.

There also exist various quasi-Newton methods, where an approximation for the Hessian (or its inverse directly) is built up from changes in the gradient.

If the Hessian is close to a non-invertible matrix, the inverted Hessian can be numerically unstable and the solution may diverge. In this case, certain workarounds have been tried in the past, which have varied success with certain problems. One can, for example, modify the Hessian by adding a correction matrix so as to make positive definite. One approach is to diagonalize the Hessian and choose so that has the same eigenvectors as the Hessian, but with each negative eigenvalue replaced by .

An approach exploited in the Levenberg–Marquardt algorithm (which uses an approximate Hessian) is to add a scaled identity matrix to the Hessian, , with the scale adjusted at every iteration as needed. For large and small Hessian, the iterations will behave like gradient descent with step size . This results in slower but more reliable convergence where the Hessian doesn't provide useful information.

Some caveats

Newton's method, in its original version, has several caveats:

- It does not work if the Hessian is not invertible. This is clear from the very definition of Newton's method, which requires taking the inverse of the Hessian.

- It may not converge at all, but can enter a cycle having more than 1 point. See the section "Failure analysis" in Newton's method.

- It can converge to a saddle point instead of to a local minimum, see the section "Geometric interpretation" in this article.

The popular modifications of Newton's method, such as quasi-Newton methods or Levenberg-Marquardt algorithm mentioned above, also have caveats:

For example, it is usually required that the cost function is (strongly) convex and the Hessian is globally bounded or Lipschitz continuous, for example this is mentioned in the section "Convergence" in this article. If one looks at the papers by Levenberg and Marquardt in the reference for Levenberg–Marquardt algorithm, which are the original sources for the mentioned method, one can see that there is basically no theoretical analysis in the paper by Levenberg, while the paper by Marquardt only analyses a local situation and does not prove a global convergence result. One can compare with Backtracking line search method for Gradient descent, which has good theoretical guarantee under more general assumptions, and can be implemented and works well in practical large scale problems such as Deep Neural Networks.

See also

- Quasi-Newton method

- Gradient descent

- Gauss–Newton algorithm

- Levenberg–Marquardt algorithm

- Trust region

- Optimization

- Nelder–Mead method

- Self-concordant function - a function for which Newton's method has very good global convergence rate.[2]: Sec.6.2

Notes

- ↑ Nocedal, Jorge; Wright, Stephen J. (2006). Numerical optimization (2nd ed.). New York: Springer. p. 44. ISBN 0387303030.

- ↑ Nemirovsky and Ben-Tal (2023). "Optimization III: Convex Optimization". http://www2.isye.gatech.edu/~nemirovs/OPTIIILN2023Spring.pdf.

References

- Avriel, Mordecai (2003). Nonlinear Programming: Analysis and Methods. Dover Publishing. ISBN 0-486-43227-0.

- Bonnans, J. Frédéric; Gilbert, J. Charles; Lemaréchal, Claude; Sagastizábal, Claudia A. (2006). Numerical optimization: Theoretical and practical aspects. Universitext (Second revised ed. of translation of 1997 French ed.). Berlin: Springer-Verlag. doi:10.1007/978-3-540-35447-5. ISBN 3-540-35445-X.

- Fletcher, Roger (1987). Practical Methods of Optimization (2nd ed.). New York: John Wiley & Sons. ISBN 978-0-471-91547-8. https://archive.org/details/practicalmethods0000flet.

- Givens, Geof H.; Hoeting, Jennifer A. (2013). Computational Statistics. Hoboken, New Jersey: John Wiley & Sons. pp. 24–58. ISBN 978-0-470-53331-4.

- Nocedal, Jorge; Wright, Stephen J. (1999). Numerical Optimization. Springer-Verlag. ISBN 0-387-98793-2.

- Kovalev, Dmitry; Mishchenko, Konstantin; Richtárik, Peter (2019). "Stochastic Newton and cubic Newton methods with simple local linear-quadratic rates". arXiv:1912.01597 [cs.LG].

External links

- Korenblum, Daniel (Aug 29, 2015). "Newton-Raphson visualization (1D)". http://bl.ocks.org/dannyko/ffe9653768cb80dfc0da/.

fr:Méthode de Newton

|