Metaheuristic

In computer science and mathematical optimization, a metaheuristic is a higher-level procedure or heuristic designed to find, generate, tune, or select a heuristic (partial search algorithm) that may provide a sufficiently good solution to an optimization problem or a machine learning problem, especially with incomplete or imperfect information or limited computation capacity.[1][2] Metaheuristics sample a subset of a solution set that is too large to be completely enumerated or otherwise explored. Metaheuristics may make relatively few assumptions about the optimization problem being solved and so may be usable for a variety of problems.[3]

Compared to optimization algorithms and iterative methods, metaheuristics do not guarantee that a globally optimal solution can be found on some class of problems.[3] Many metaheuristics implement some form of stochastic optimization, so that the solution found depends on the set of random variables generated.[2] In combinatorial optimization, by searching over a large set of feasible solutions, metaheuristics can often find good solutions with less computational effort than optimization algorithms, iterative methods, or simple heuristics.[3] As such, they are useful approaches for optimization problems.[2] Several books and survey papers have been published on the subject.[2][3][4][5][6] A literature review on metaheuristic optimization[7] suggests that Fred Glover coined the word metaheuristics.[8]

Most literature on metaheuristics is experimental in nature, describing empirical results based on computer experiments with the algorithms. However, some formal theoretical results are also available, often on convergence and the possibility of finding the global optimum.[3] Many metaheuristic methods have been published with claims of novelty and practical efficacy. While the field also features high-quality research, many of the publications have been of poor quality; flaws include vagueness, lack of conceptual elaboration, poor experiments, and ignorance of previous literature.[9]

Properties

These are properties that characterize most metaheuristics:[3]

- Metaheuristics are strategies that guide the search process.

- The goal is to efficiently explore the search space in order to find near-optimal solutions.

- Techniques which constitute metaheuristic algorithms range from simple local search procedures to complex learning processes.

- Metaheuristic algorithms are approximate and usually non-deterministic.

- Metaheuristics are not problem-specific.

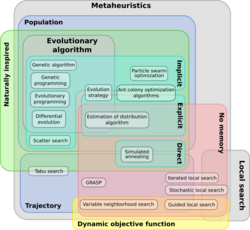

Classification

There is a wide variety of metaheuristics[2] and a number of properties by which to classify them.[3]

Local search vs. global search

One approach is to characterize the type of search strategy.[3] One type of search strategy is an improvement on simple local search algorithms. A well-known local search algorithm is the hill climbing method, which is used to find local optima. However, hill climbing does not guarantee finding globally optimal solutions.
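The limitation of hill climbing can be illustrated with a minimal Python sketch. The landscape `heights`, the objective `f`, and the `neighbors` function are hypothetical and chosen only so that the search space has one local and one global peak; this is an illustration, not a reference implementation.

```python
def hill_climb(f, x, neighbors):
    """Move to the best neighbor until no neighbor improves f (maximization)."""
    while True:
        best = max(neighbors(x), key=f)
        if f(best) <= f(x):
            return x  # no improving neighbor: x is a local optimum
        x = best


# Hypothetical 1-D landscape over the indices 0..10 with two peaks.
heights = [0, 1, 2, 3, 2, 1, 2, 4, 6, 8, 7]


def f(i):
    return heights[i]


def neighbors(i):
    # Adjacent indices that stay inside the landscape.
    return [j for j in (i - 1, i + 1) if 0 <= j < len(heights)]


print(hill_climb(f, 1, neighbors))  # 3: stuck on the lower peak (height 3)
print(hill_climb(f, 6, neighbors))  # 9: reaches the higher peak (height 8)
```

Which peak is found depends entirely on the starting point, which is the weakness that the metaheuristics built on local search try to address.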

Many metaheuristic ideas were proposed to improve local search heuristics in order to find better solutions. Such metaheuristics include simulated annealing, tabu search, iterated local search, variable neighborhood search, and GRASP.[3] These metaheuristics can be classified both as local search-based and as global search metaheuristics.
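As one example of how such methods extend local search, the following sketch shows a basic form of simulated annealing for minimization. The geometric cooling schedule, the parameter defaults, the `costs` landscape, and the `neighbor` function are assumptions made for illustration; practical implementations differ in many details.

```python
import math
import random


def simulated_annealing(cost, x0, neighbor, t0=10.0, cooling=0.95,
                        steps=500, seed=0):
    """Minimize cost, starting from x0; returns the best solution seen."""
    rng = random.Random(seed)
    x, best = x0, x0
    t = t0
    for _ in range(steps):
        y = neighbor(x, rng)
        delta = cost(y) - cost(x)
        # Improvements are always accepted; worsening moves are accepted
        # with probability exp(-delta / t), which shrinks as t decreases.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x = y
            if cost(x) < cost(best):
                best = x
        t *= cooling  # geometric cooling (an assumed schedule)
    return best


# Hypothetical 1-D cost landscape with a local minimum at index 1
# and the global minimum at index 7.
costs = [5, 3, 4, 6, 7, 5, 2, 0, 1, 4]


def cost(i):
    return costs[i]


def neighbor(i, rng):
    # Step one index left or right, clamped to the valid range.
    j = i + rng.choice((-1, 1))
    return min(max(j, 0), len(costs) - 1)


best = simulated_annealing(cost, 1, neighbor)
print(best, cost(best))
```

Unlike hill climbing, the search can leave the local minimum at index 1 while the temperature is high, because worsening moves are sometimes accepted. Whether it actually reaches the global minimum depends on the random seed and the schedule.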

Other global search metaheuristics that are not local search-based are usually population-based. Such metaheuristics include ant colony optimization, evolutionary computation (such as genetic algorithms or evolution strategies), particle swarm optimization, the rider optimization algorithm,[11] and the bacterial foraging algorithm.[12]

Single-solution vs. population-based

Another classification dimension is single-solution vs. population-based search.[3][6] Single-solution approaches focus on modifying and improving a single candidate solution; single-solution metaheuristics include simulated annealing, iterated local search, variable neighborhood search, and guided local search.[6] Population-based approaches maintain and improve multiple candidate solutions, often using population characteristics to guide the search; population-based metaheuristics include evolutionary computation and particle swarm optimization.[6] Another category of metaheuristics is swarm intelligence, the collective behavior of decentralized, self-organized agents in a population or swarm. Ant colony optimization,[13] particle swarm optimization,[6] social cognitive optimization, and the bacterial foraging algorithm[12] are examples of this category.
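The population-based idea can be sketched with a very small evolutionary algorithm on bit strings. The operators chosen here (truncation selection, one-point crossover, single-bit mutation), the parameter defaults, and the OneMax objective (count of ones) are assumptions made for illustration, not a canonical design.

```python
import random


def evolve(fitness, n_bits=20, pop_size=30, generations=50, seed=0):
    """Maximize fitness over bit strings with a simple evolutionary loop."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)]
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]        # truncation selection
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)    # one-point crossover
            child = a[:cut] + b[cut:]
            child[rng.randrange(n_bits)] ^= 1  # mutation: flip one bit
            children.append(child)
        pop = parents + children              # parents survive (elitism)
    return max(pop, key=fitness)


best = evolve(sum)  # OneMax: fitness is the number of ones
print(sum(best), "of", len(best))
```

Because the better half of the population is carried over unchanged, the best fitness in the population never decreases from one generation to the next.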

Hybridization and memetic algorithms

A hybrid metaheuristic is one that combines a metaheuristic with other optimization approaches, such as algorithms from mathematical programming, constraint programming, and machine learning. Both components of a hybrid metaheuristic may run concurrently and exchange information to guide the search.

Memetic algorithms,[14] on the other hand, combine an evolutionary or other population-based approach with separate individual learning or local improvement procedures for problem search. An example of a memetic algorithm is the use of a local search algorithm instead of, or in addition to, a basic mutation operator in evolutionary algorithms.

Parallel metaheuristics

A parallel metaheuristic is one that uses the techniques of parallel programming to run multiple metaheuristic searches in parallel; these may range from simple distributed schemes to concurrent search runs that interact to improve the overall solution.
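The simplest scheme of this kind, independent searches whose results are compared at the end, might look like the sketch below. The `objective`, the use of plain random search as the underlying method, and the worker count are assumptions. Threads are used only to keep the example portable; in CPython, a process pool such as `concurrent.futures.ProcessPoolExecutor` would typically be needed for an actual speed-up on CPU-bound searches.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def objective(x):
    # Hypothetical objective with its minimum at x = 2.
    return (x - 2.0) ** 2


def random_search(seed, evaluations=2000):
    """One independent search run; returns (best cost, best x)."""
    rng = random.Random(seed)
    best_x = min((rng.uniform(-5, 5) for _ in range(evaluations)),
                 key=objective)
    return objective(best_x), best_x


# Run four independently seeded searches concurrently and keep the best.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(random_search, range(4)))

best_cost, best_x = min(results)
print(best_cost, best_x)
```

The runs in this sketch never exchange information. Cooperative schemes, in which concurrent searches share their best solutions while running, require additional coordination that is not shown here.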

Nature-inspired and metaphor-based metaheuristics

A very active area of research is the design of nature-inspired metaheuristics. Many recent metaheuristics, especially evolutionary computation-based algorithms, are inspired by natural systems. Nature acts as a source of concepts, mechanisms, and principles for designing artificial computing systems to deal with complex computational problems. Such metaheuristics include simulated annealing, evolutionary algorithms, ant colony optimization, and particle swarm optimization. A large number of more recent metaphor-inspired metaheuristics have started to attract criticism in the research community for hiding their lack of novelty behind an elaborate metaphor.[9]

Applications

Metaheuristics are used for all types of optimization problems, ranging from continuous through mixed-integer problems to combinatorial optimization or combinations thereof. In combinatorial optimization, an optimal solution is sought over a discrete search space. An example is the travelling salesman problem, where the search space of candidate solutions grows faster than exponentially as the size of the problem increases, which makes an exhaustive search for the optimal solution infeasible. Additionally, multidimensional combinatorial problems, including most design problems in engineering[15][16][17] such as form-finding and behavior-finding, suffer from the curse of dimensionality, which also makes them infeasible for exhaustive search or analytical methods.
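The growth of the travelling salesman search space can be made concrete with a few lines of Python. The sketch assumes the symmetric variant of the problem, in which a tour and its reverse count as the same solution, so that n cities admit (n − 1)!/2 distinct tours; the function name `tour_count` is hypothetical.

```python
import math


def tour_count(n):
    """Number of distinct tours in a symmetric TSP with n >= 3 cities."""
    return math.factorial(n - 1) // 2


for n in (5, 10, 20):
    # Compare the factorial growth with the exponential 2**n.
    print(n, tour_count(n), 2 ** n)
# 5 12 32
# 10 181440 1024
# 20 60822550204416000 1048576
```

At 20 cities there are already about 6 × 10^16 tours, far more than the roughly 10^6 given by 2^20.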

Metaheuristics are also frequently applied to scheduling problems. A typical representative of this combinatorial task class is job shop scheduling, which involves assigning the work steps of jobs to processing stations in such a way that all jobs are completed on time and altogether in the shortest possible time.[18][19] In practice, restrictions often have to be observed, e.g. by limiting the permissible sequence of work steps of a job through predefined workflows[20] and/or with regard to resource utilisation, e.g. in the form of smoothing the energy demand.[21][22] Popular metaheuristics for combinatorial problems include genetic algorithms by Holland et al.,[23] scatter search[24] and tabu search[25] by Glover.

Another large field of application is optimization tasks in continuous or mixed-integer search spaces. This includes, for example, design optimization[26][27][28] and various engineering tasks.[29][30][31] An example of a mixture of combinatorial and continuous optimization is the planning of favourable motion paths for industrial robots.[32][33]

Metaheuristic Optimization Frameworks

A metaheuristic optimization framework (MOF) can be defined as "a set of software tools that provide a correct and reusable implementation of a set of metaheuristics, and the basic mechanisms to accelerate the implementation of its partner subordinate heuristics (possibly including solution encodings and technique-specific operators), which are necessary to solve a particular problem instance using techniques provided".[34]

There are many candidate optimization tools that can be considered MOFs with varying features: Comet, EvA2, evolvica, Evolutionary::Algorithm, GAPlayground, jaga, JCLEC, JGAP, jMetal, n-genes, Open Beagle, Opt4j, ParadisEO/EO, Pisa, Watchmaker, FOM, Hypercube, HotFrame, Templar, EasyLocal, iOpt, OptQuest, JDEAL, Optimization Algorithm Toolkit, HeuristicLab, MAFRA, Localizer, GALIB, DREAM, Discropt, MALLBA, MAGMA, Metaheuristics.jl, UOF[34] and OptaPlanner.

Contributions

Many different metaheuristics are in existence and new variants are continually being proposed. Some of the most significant contributions to the field are:

- 1951: Robbins and Monro work on stochastic optimization methods.[35]

- 1954: Barricelli carries out the first simulations of the evolution process and uses them on general optimization problems.[36]

- 1963: Rastrigin proposes random search.[37]

- 1965: Matyas proposes random optimization.[38]

- 1965: Nelder and Mead propose a simplex heuristic, which was shown by Powell to converge to non-stationary points on some problems.[39]

- 1965: Ingo Rechenberg discovers the first Evolution Strategies algorithm.[40]

- 1966: Fogel et al. propose evolutionary programming.[41]

- 1970: Hastings proposes the Metropolis–Hastings algorithm.[42]

- 1970: Cavicchio proposes adaptation of control parameters for an optimizer.[43]

- 1970: Kernighan and Lin propose a graph partitioning method, related to variable-depth search and prohibition-based (tabu) search.[44]

- 1975: Holland proposes the genetic algorithm.[23]

- 1977: Glover proposes scatter search.[24]

- 1978: Mercer and Sampson propose a metaplan for tuning an optimizer's parameters by using another optimizer.[45]

- 1980: Smith describes genetic programming.[46]

- 1983: Kirkpatrick et al. propose simulated annealing.[47]

- 1986: Glover proposes tabu search; the same work contains the first mention of the term metaheuristic.[25]

- 1989: Moscato proposes memetic algorithms.[14]

- 1990: Moscato and Fontanari,[48] and Dueck and Scheuer,[49] independently propose a deterministic update rule for simulated annealing that accelerates the search. This leads to the threshold accepting metaheuristic.

- 1992: Dorigo introduces ant colony optimization in his PhD thesis.[13]

- 1995: Wolpert and Macready prove the no free lunch theorems.[50][51][52][53]

See also

- Stochastic search

- Meta-optimization

- Matheuristics

- Hyper-heuristics

- Swarm intelligence

- Evolutionary algorithms, in particular genetic algorithms, genetic programming, and evolution strategies

- Simulated annealing

- Workforce modeling

References

- ↑ R. Balamurugan; A.M. Natarajan; K. Premalatha (2015). "Stellar-Mass Black Hole Optimization for Biclustering Microarray Gene Expression Data". Applied Artificial Intelligence 29 (4): 353–381. doi:10.1080/08839514.2015.1016391.

- ↑ 2.0 2.1 2.2 2.3 2.4 Bianchi, Leonora; Marco Dorigo; Luca Maria Gambardella; Walter J. Gutjahr (2009). "A survey on metaheuristics for stochastic combinatorial optimization". Natural Computing 8 (2): 239–287. doi:10.1007/s11047-008-9098-4. http://doc.rero.ch/record/319945/files/11047_2008_Article_9098.pdf.

- ↑ 3.00 3.01 3.02 3.03 3.04 3.05 3.06 3.07 3.08 3.09 Blum, C.; Roli, A. (2003). "Metaheuristics in combinatorial optimization: Overview and conceptual comparison". ACM Computing Surveys 35 (3): 268–308. https://www.researchgate.net/publication/221900771.

- ↑ Goldberg, D.E. (1989). Genetic Algorithms in Search, Optimization and Machine Learning. Kluwer Academic Publishers. ISBN 978-0-201-15767-3.

- ↑ Glover, F.; Kochenberger, G.A. (2003). Handbook of metaheuristics. 57. Springer, International Series in Operations Research & Management Science. ISBN 978-1-4020-7263-5.

- ↑ 6.0 6.1 6.2 6.3 6.4 Talbi, E-G. (2009). Metaheuristics: from design to implementation. Wiley. ISBN 978-0-470-27858-1.

- ↑ Yang, X. S. (2011). "Metaheuristic optimization". Scholarpedia 6 (8): 11472.

- ↑ Glover, F. (1986). "Future Paths for Integer Programming and Links to Artificial Intelligence". Computers and Operations Research 13 (5): 533–549.

- ↑ 9.0 9.1 Sörensen, Kenneth (2015). "Metaheuristics—the metaphor exposed". International Transactions in Operational Research 22: 3–18. doi:10.1111/itor.12001. http://antor.ua.ac.be/system/files/mme.pdf.

- ↑ Classification of metaheuristics

- ↑ D, Binu (2019). "RideNN: A New Rider Optimization Algorithm-Based Neural Network for Fault Diagnosis in Analog Circuits". IEEE Transactions on Instrumentation and Measurement 68 (1): 2–26. doi:10.1109/TIM.2018.2836058. Bibcode: 2019ITIM...68....2B. https://ieeexplore.ieee.org/document/8370147.

- ↑ 12.0 12.1 Pang, Shinsiong; Chen, Mu-Chen (2023-06-01). "Optimize railway crew scheduling by using modified bacterial foraging algorithm" (in en). Computers & Industrial Engineering 180: 109218. doi:10.1016/j.cie.2023.109218. ISSN 0360-8352. https://www.sciencedirect.com/science/article/pii/S0360835223002425.

- ↑ 13.0 13.1 Dorigo, M. (1992). Optimization, Learning and Natural Algorithms (PhD thesis). Politecnico di Milano, Italy.

- ↑ 14.0 14.1 Moscato, P. (1989). "On Evolution, Search, Optimization, Genetic Algorithms and Martial Arts: Towards Memetic Algorithms". Caltech Concurrent Computation Program (report 826). https://www.researchgate.net/publication/2354457.

- ↑ Tomoiagă, B.; Chindriş, M.; Sumper, A.; Sudria-Andreu, A.; Villafafila-Robles, R. (2013). "Pareto Optimal Reconfiguration of Power Distribution Systems Using a Genetic Algorithm Based on NSGA-II". Energies 6 (3): 1439–1455.

- ↑ Ganesan, T.; Elamvazuthi, I.; Ku Shaari, Ku Zilati; Vasant, P. (2013-03-01). "Swarm intelligence and gravitational search algorithm for multi-objective optimization of synthesis gas production". Applied Energy 103: 368–374. doi:10.1016/j.apenergy.2012.09.059. Bibcode: 2013ApEn..103..368G.

- ↑ Ganesan, T.; Elamvazuthi, I.; Vasant, P. (2011-11-01). "Evolutionary normal-boundary intersection (ENBI) method for multi-objective optimization of green sand mould system". 2011 IEEE International Conference on Control System, Computing and Engineering. pp. 86–91. doi:10.1109/ICCSCE.2011.6190501. ISBN 978-1-4577-1642-3.

- ↑ Jarboui, Bassem, ed (2013). Metaheuristics for production scheduling. Automation - control and industrial engineering series. London: ISTE. ISBN 978-1-84821-497-2. https://www.wiley.com/en-dk/Metaheuristics+for+Production+Scheduling-p-9781848214972.

- ↑ Xhafa, Fatos, ed (2008) (in en). Metaheuristics for Scheduling in Industrial and Manufacturing Applications. Studies in Computational Intelligence. 128. Berlin, Heidelberg: Springer Berlin Heidelberg. doi:10.1007/978-3-540-78985-7. ISBN 978-3-540-78984-0. http://link.springer.com/10.1007/978-3-540-78985-7.

- ↑ Jakob, Wilfried; Strack, Sylvia; Quinte, Alexander; Bengel, Günther; Stucky, Karl-Uwe; Süß, Wolfgang (2013-04-22). "Fast Rescheduling of Multiple Workflows to Constrained Heterogeneous Resources Using Multi-Criteria Memetic Computing" (in en). Algorithms 6 (2): 245–277. doi:10.3390/a6020245. ISSN 1999-4893.

- ↑ Kizilay, Damla; Tasgetiren, M. Fatih; Pan, Quan-Ke; Süer, Gürsel (2019). "An Ensemble of Meta-Heuristics for the Energy-Efficient Blocking Flowshop Scheduling Problem" (in en). Procedia Manufacturing 39: 1177–1184. doi:10.1016/j.promfg.2020.01.352.

- ↑ Grosch, Benedikt; Weitzel, Timm; Panten, Niklas; Abele, Eberhard (2019). "A metaheuristic for energy adaptive production scheduling with multiple energy carriers and its implementation in a real production system" (in en). Procedia CIRP 80: 203–208. doi:10.1016/j.procir.2019.01.043.

- ↑ 23.0 23.1 Holland, J.H. (1975). Adaptation in Natural and Artificial Systems. University of Michigan Press. ISBN 978-0-262-08213-6. https://archive.org/details/adaptationinnatu00holl.

- ↑ 24.0 24.1 Glover, Fred (1977). "Heuristics for Integer programming Using Surrogate Constraints". Decision Sciences 8 (1): 156–166. doi:10.1111/j.1540-5915.1977.tb01074.x.

- ↑ 25.0 25.1 Glover, F. (1986). "Future Paths for Integer Programming and Links to Artificial Intelligence". Computers and Operations Research 13 (5): 533–549. doi:10.1016/0305-0548(86)90048-1.

- ↑ Gupta, Shubham; Abderazek, Hammoudi; Yıldız, Betül Sultan; Yildiz, Ali Riza; Mirjalili, Seyedali; Sait, Sadiq M. (2021). "Comparison of metaheuristic optimization algorithms for solving constrained mechanical design optimization problems" (in en). Expert Systems with Applications 183: 115351. doi:10.1016/j.eswa.2021.115351. https://linkinghub.elsevier.com/retrieve/pii/S095741742100779X.

- ↑ Quinte, Alexander; Jakob, Wilfried; Scherer, Klaus-Peter; Eggert, Horst (2002), Laudon, Matthew, ed., "Optimization of a Micro Actuator Plate Using Evolutionary Algorithms and Simulation Based on Discrete Element Methods", International Conference on Modeling and Simulation of Microsystems: MSM 2002 (Cambridge, Mass: Computational Publications): pp. 192–197, ISBN 978-0-9708275-7-9, https://www.researchgate.net/publication/228790476

- ↑ Parmee, I. C. (1997), Dasgupta, Dipankar; Michalewicz, Zbigniew, eds., "Strategies for the Integration of Evolutionary/Adaptive Search with the Engineering Design Process" (in en), Evolutionary Algorithms in Engineering Applications (Berlin, Heidelberg: Springer): pp. 453–477, doi:10.1007/978-3-662-03423-1_25, ISBN 978-3-642-08282-5, http://link.springer.com/10.1007/978-3-662-03423-1_25, retrieved 2023-07-17

- ↑ Valadi, Jayaraman, ed (2014) (in en). Applications of Metaheuristics in Process Engineering. Cham: Springer International Publishing. doi:10.1007/978-3-319-06508-3. ISBN 978-3-319-06507-6. https://link.springer.com/10.1007/978-3-319-06508-3.

- ↑ Sanchez, Ernesto; Squillero, Giovanni; Tonda, Alberto (2012) (in en). Industrial Applications of Evolutionary Algorithms. Intelligent Systems Reference Library. 34. Berlin, Heidelberg: Springer. doi:10.1007/978-3-642-27467-1. ISBN 978-3-642-27466-4. http://link.springer.com/10.1007/978-3-642-27467-1.

- ↑ Akan, Taymaz, ed (2023) (in en). Engineering Applications of Modern Metaheuristics. Studies in Computational Intelligence. 1069. Cham: Springer International Publishing. doi:10.1007/978-3-031-16832-1. ISBN 978-3-031-16831-4. https://link.springer.com/10.1007/978-3-031-16832-1.

- ↑ Blume, Christian (2000), Cagnoni, Stefano, ed., "Optimized Collision Free Robot Move Statement Generation by the Evolutionary Software GLEAM" (in en), Real-World Applications of Evolutionary Computing, Lecture Notes in Computer Science (Berlin, Heidelberg: Springer) 1803: pp. 330–341, doi:10.1007/3-540-45561-2_32, ISBN 978-3-540-67353-8, http://link.springer.com/10.1007/3-540-45561-2_32, retrieved 2023-07-17

- ↑ Pholdee, Nantiwat; Bureerat, Sujin (2018-12-14), "Multiobjective Trajectory Planning of a 6D Robot based on Multiobjective Meta Heuristic Search" (in en), International Conference on Network, Communication and Computing (ICNCC 2018) (ACM): pp. 352–356, doi:10.1145/3301326.3301356, ISBN 978-1-4503-6553-6, https://dl.acm.org/doi/10.1145/3301326.3301356

- ↑ 34.0 34.1 Parejo, J.A.; Ruiz-Cortés, A.; Lozano, S.; Fernandez, P. (2012). "Metaheuristic optimization frameworks: a survey and benchmarking". Soft Computing 16 (3): 527–561. doi:10.1007/s00500-011-0754-8.

- ↑ Robbins, H.; Monro, S. (1951). "A Stochastic Approximation Method". Annals of Mathematical Statistics 22 (3): 400–407. doi:10.1214/aoms/1177729586. http://dml.cz/bitstream/handle/10338.dmlcz/100283/CzechMathJ_08-1958-1_10.pdf.

- ↑ Barricelli, N.A. (1954). "Esempi numerici di processi di evoluzione". Methodos: 45–68.

- ↑ Rastrigin, L.A. (1963). "The convergence of the random search method in the extremal control of a many parameter system". Automation and Remote Control 24 (10): 1337–1342.

- ↑ Matyas, J. (1965). "Random optimization". Automation and Remote Control 26 (2): 246–253. http://www.mathnet.ru/eng/at11288.

- ↑ Nelder, J.A.; Mead, R. (1965). "A simplex method for function minimization". Computer Journal 7 (4): 308–313. doi:10.1093/comjnl/7.4.308.

- ↑ Rechenberg, Ingo (1965). "Cybernetic Solution Path of an Experimental Problem". Royal Aircraft Establishment, Library Translation.

- ↑ Fogel, L.; Owens, A.J.; Walsh, M.J. (1966). Artificial Intelligence through Simulated Evolution. Wiley. ISBN 978-0-471-26516-0.

- ↑ Hastings, W.K. (1970). "Monte Carlo Sampling Methods Using Markov Chains and Their Applications". Biometrika 57 (1): 97–109. doi:10.1093/biomet/57.1.97. Bibcode: 1970Bimka..57...97H.

- ↑ Cavicchio, D.J. (1970). "Adaptive search using simulated evolution". Technical Report (University of Michigan, Computer and Communication Sciences Department).

- ↑ Kernighan, B.W.; Lin, S. (1970). "An efficient heuristic procedure for partitioning graphs". Bell System Technical Journal 49 (2): 291–307. doi:10.1002/j.1538-7305.1970.tb01770.x.

- ↑ Mercer, R.E.; Sampson, J.R. (1978). "Adaptive search using a reproductive metaplan". Kybernetes 7 (3): 215–228. doi:10.1108/eb005486.

- ↑ Smith, S.F. (1980). A Learning System Based on Genetic Adaptive Algorithms (PhD Thesis). University of Pittsburgh.

- ↑ Kirkpatrick, S.; Gelatt Jr., C.D.; Vecchi, M.P. (1983). "Optimization by Simulated Annealing". Science 220 (4598): 671–680. doi:10.1126/science.220.4598.671. PMID 17813860. Bibcode: 1983Sci...220..671K.

- ↑ Moscato, P.; Fontanari, J.F. (1990), "Stochastic versus deterministic update in simulated annealing", Physics Letters A 146 (4): 204–208, doi:10.1016/0375-9601(90)90166-L, Bibcode: 1990PhLA..146..204M

- ↑ Dueck, G.; Scheuer, T. (1990), "Threshold accepting: A general purpose optimization algorithm appearing superior to simulated annealing", Journal of Computational Physics 90 (1): 161–175, doi:10.1016/0021-9991(90)90201-B, ISSN 0021-9991, Bibcode: 1990JCoPh..90..161D

- ↑ Wolpert, D.H.; Macready, W.G. (1995). "No free lunch theorems for search". Technical Report SFI-TR-95-02-010 (Santa Fe Institute).

- ↑ Igel, Christian, Toussaint, Marc (Jun 2003). "On classes of functions for which No Free Lunch results hold". Information Processing Letters 86 (6): 317–321. doi:10.1016/S0020-0190(03)00222-9. ISSN 0020-0190.

- ↑ Auger, Anne, Teytaud, Olivier (2010). "Continuous Lunches Are Free Plus the Design of Optimal Optimization Algorithms". Algorithmica 57 (1): 121–146. doi:10.1007/s00453-008-9244-5. ISSN 0178-4617.

- ↑ Stefan Droste; Thomas Jansen; Ingo Wegener (2002). "Optimization with Randomized Search Heuristics – The (A)NFL Theorem, Realistic Scenarios, and Difficult Functions". Theoretical Computer Science 287 (1): 131–144. doi:10.1016/s0304-3975(02)00094-4.

Further reading

- Sörensen, Kenneth; Sevaux, Marc; Glover, Fred (2017-01-16). "A History of Metaheuristics". Handbook of Heuristics. Springer. ISBN 978-3-319-07123-7. http://leeds-faculty.colorado.edu/glover/468%20-%20A%20History%20of%20Metaheuristics%20w%20Sorensen%20%26%20Sevaux.pdf.

- Ashish Sharma (2022), Nature Inspired Algorithms with Randomized Hypercomputational Perspective. Information Sciences. https://doi.org/10.1016/j.ins.2022.05.020.

External links

- EU/ME forum for researchers in the field.
